CHAPTER 3

CPU Virtualization

This chapter explains the concept of a virtual machine as well as the technology that embodies it. The technology is rather complex, inasmuch as it encompasses the developments in computer architecture, operating systems, and even data communications. The issues at stake here are most critical to Cloud Computing, and so we will take our time.

To this end, the name of the chapter is something of a misnomer: it is not only the CPU that is being virtualized, but the whole of the computer, including its memory and devices. In view of that it might have been more accurate to omit the word “CPU” altogether, had it not been for the fact that in the very concept of virtualization the part that deals with the CPU is the most significant and most complex.

We start with the original motivation and a bit of history—dating back to the early 1970s—and proceed with the basics of the computer architecture, understanding what exactly program control means and how it is achieved. We spend a significant amount of time on this topic also because it is at the heart of security: it is through manipulation of program control that major security attacks are effected.

After addressing the architecture and program control, we will selectively summarize the most relevant concepts and developments in operating systems. Fortunately, excellent textbooks exist on the subject, and we delve into it mainly to highlight the key issues and problems in virtualization. (The very entity that enables virtualization, a hypervisor, is effectively an operating system that “runs” conventional operating systems.) We will explain the critical concept of a process and list the operating system services. We also address the concept of virtual memory and show how it is implemented—a development which is interesting on its own, while setting the stage for the introduction of broader virtualization tasks.

Once the stage is set, this chapter will culminate with an elucidation of the concept of the virtual machine. We will concentrate on hypervisors, their services, their inner workings, and their security, all illustrated by live examples.

3.1 Motivation and History

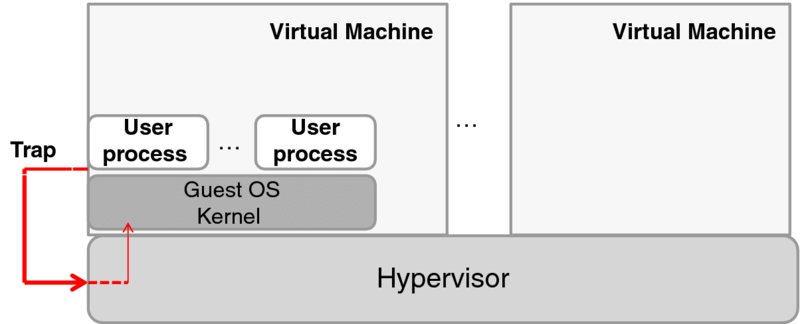

Back in the 1960s, as computers were evolving to become ever faster and larger, the institutions and businesses that used them weighed up the pros and cons when deciding whether to replace older systems. The major problem was the same as it is now: the cost of software changes, especially because back then these costs were much higher and less predictable than they are now. If a business already had, say, three or four computers, with all the programs installed on each of them and the maintenance procedures set in place, migrating software to a new computer (even one faster than all the legacy machines combined) was a non-trivial economic problem. This is illustrated in Figure 3.1.

Figure 3.1 A computing environment before and after virtualization.

But the businesses were growing, and so were their computing needs. The industry was working to address this problem, with the research led by IBM and MIT. To begin with, time sharing (i.e., running multiple application processes in parallel) and virtual memory (i.e., providing each process with an independent full-address-range contiguous memory array) had already been implemented in the IBM System/360 Model 67 in the 1960s, but these were insufficient for porting multiple “whole machines” into one machine. In other words, a solution in which an operating system of a stand-alone machine could be run as a separate user process on a new machine was not straightforward. The reasons are examined in detail later in this chapter; in a nutshell, the major obstacle was (and still is) that the code of an operating system uses a privileged subset of instructions that are unavailable to user programs.

The only way to overcome this obstacle was to develop what was in essence a hyper operating system that supervised other operating systems. Thus, the term hypervisor was coined. The joint IBM and MIT research at the Cambridge Scientific Center culminated in the Control Program/Cambridge Monitor System (CP/CMS). The system, which has gone through four major releases, became the foundation of the IBM VM/370 operating system, which implemented a hypervisor. Another seminal legacy of CP/CMS was the creation of a user community that pre-dated the open-source movement of today. CP/CMS code was available at no cost to IBM users.

IBM VM/370 was announced in 1972. Its description and history are well presented in Robert Creasy's famous paper [1]. CMS, later renamed the Conversational Monitor System, was part of it. This was a huge success, not only because it met the original objective of porting multiple systems into one machine, but also because it effectively started the virtualization industry—a decisive enabler of Cloud Computing.

Since then, all hardware that has been developed for minicomputers and later for microcomputers has addressed virtualization needs in part or in full. Similarly, the development of the software has addressed the same needs—hand in hand with hardware development.

In what follows, we will examine the technical aspects of virtualization; meanwhile, we can summarize its major achievements:

- Saving the costs (in terms of space, personnel, and energy; note the green aspect!) of running several physical machines by running one in their place;

- Putting to use (otherwise wasted) computing power;

- Cloning servers (for instance, for debugging purposes) almost instantly;

- Isolating a software package for a specific purpose (typically, for security reasons)—without buying new hardware; and

- Migrating a machine (for instance, when the load increases) at low cost and in no time—over a network or even on a memory stick.

The last capability, that of moving a virtual machine from one physical machine to another, is called live migration. In a way, its purpose is diametrically opposite to the one that brought virtualization to life, that is, consolidating multiple machines on one physical host. Live migration is needed to support elasticity, as moving a machine to a new host with more memory and reduced load can improve its performance.

3.2 A Computer Architecture Primer

This section is present only to make the book self-contained. It provides the facts that we find essential to understanding the foremost virtualization issues, especially as far as security is concerned. It can easily be skipped by a reader familiar with computer architecture and—more importantly—its support of major programming control constructs (procedure calls, interrupt and exception handling). To a reader who wishes to learn more, we recommend the textbook [2]—a workhorse of Computer Science education.

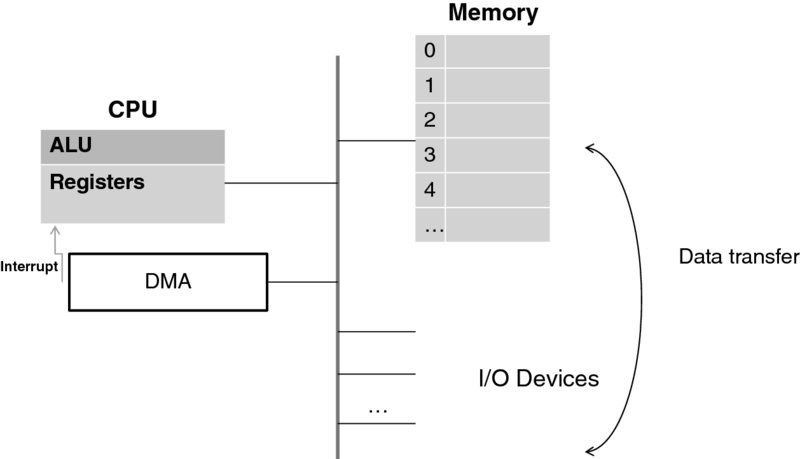

3.2.1 CPU, Memory, and I/O

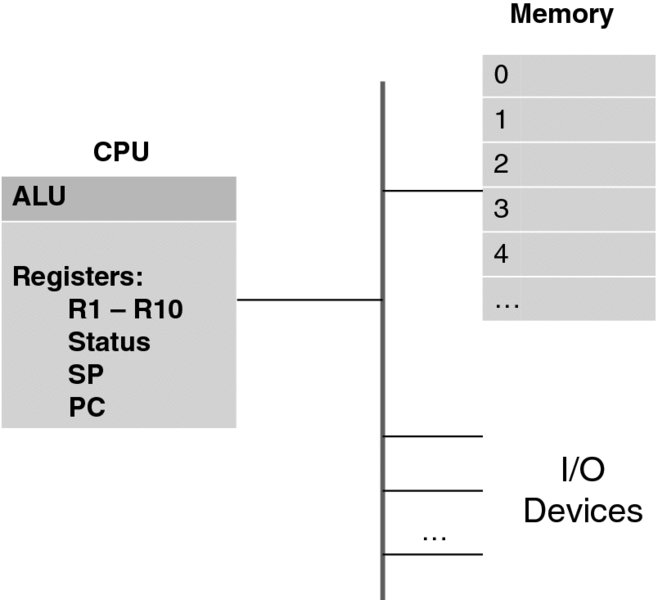

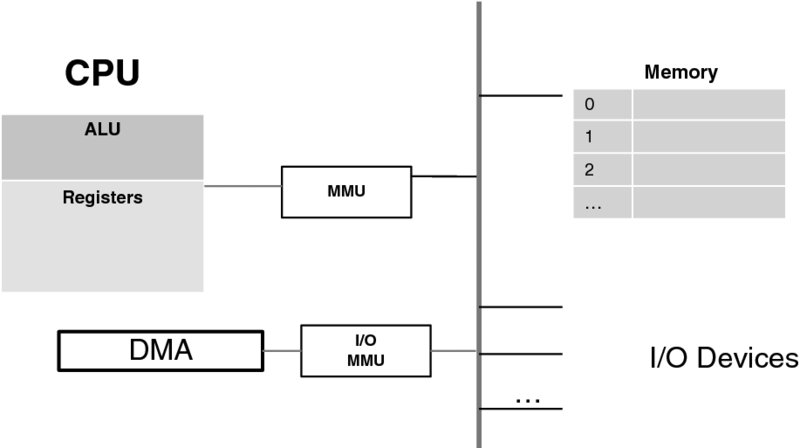

Figure 3.2 depicts pretty much all that is necessary to understand the blocks that computers are built of. We will develop more nuanced understanding incrementally.

Figure 3.2 Simplified computer architecture.

The three major parts of a computer are:

- The Central Processing Unit (CPU), which actually executes the programs;

- The computer memory (technically called Random Access Memory (RAM)), where both programs and data reside; and

- Input/Output (I/O) devices, such as the monitor, keyboard, network card, or disk.

All three are interconnected by a fast network, called a bus, which also makes a computer expandable to include more devices.

The word random in RAM (as opposed to sequential) means that the memory is accessed as an array—through an index to a memory location. This index is called a memory address.

Note that the disk is, in fact, also a type of memory, just a much slower one than RAM. On the other hand, unlike RAM, the memory on the disk and other permanent storage devices is persistent: the stored data are there even after the power is turned off.

At the other end of the memory spectrum, there is much faster (than RAM) memory inside the CPU. All pieces of this memory are distinct, and they are called registers. Operations (arithmetic operations such as addition or multiplication, or a range of bitwise logic operations) can be performed only on values held in registers. This is achieved through circuitry connecting the registers with the Arithmetic and Logic Unit (ALU). A typical mode of operation, say in order to perform an arithmetic operation on two numbers stored in memory, is to first transfer the numbers to registers, and then to perform the operation inside the CPU.

Some registers (we denote them R1, R2, etc.) are general purpose; others serve very specific needs. For the purposes of this discussion, we identify three registers of the latter type, which are present in any CPU:

- The Program Counter (PC) register always points to the location in memory where the next program instruction is stored.

- The Stack Pointer (SP) register always points to the location of the stack of a process—we will address this concept in a moment.

- The STATUS register keeps the execution control state. It stores, among many other things, the information about the result of a previous operation. (For instance, a flag called the zero bit of the STATUS register is set when an arithmetical operation has produced zero as a result. Similarly, there are positive-bit and negative-bit flags. All these are used for branching instructions: JZ (jump if zero), JP (jump if positive), and JN (jump if negative). In turn, these instructions are used in high-level languages to implement conditional if statements.) Another use of the STATUS register, quite essential to virtualization and discussed later, is to indicate to the CPU that it must work in trace mode, that is, execute instructions one at a time. We will introduce new flags as we need them.

Overall, the set of all register values (sometimes called the context) constitutes the state of a program being executed as far as the CPU is concerned. A program in execution is called a process.1 It is a very vague definition indeed, and here a metaphor is useful in clarifying it. A program can be seen as a cookbook, a CPU as a cook—using kitchen utensils, and then a process can be defined as the act of cooking a specific dish described in the cookbook.

A cook can work on several dishes concurrently, as long as the state of a dish (i.e., a specific step within the cookbook) is remembered when the cook switches to preparing another dish. For instance, a cook can put a roast into the oven, set a timer alarm, and then start working on a dessert. When the alarm rings, the cook will temporarily abandon the dessert and attend to the roast.

With that, the cook must know whether to baste the roast or take it out of the oven altogether. Once the roast has been attended to, the cook can resume working on the dessert. But then the cook needs to remember where the dessert was left off!

The practice of multi-programming—as maintained by modern operating systems—is to store the state of the CPU on the process stack, and this brings us to the subject of CPU inner workings.

We will delve into this subject in time, but to complete this section (and augment a rather simplistic view of Figure 3.2) we make a fundamental observation that modern CPUs may have more than one set of identical registers. As a minimum, one register set is reserved for the user mode, in which application programs execute, and the other for the system (or supervisory, or kernel) mode, in which only the operating system software executes. The reason for this will become clear later.

3.2.2 How the CPU Works

All things considered, the CPU is fairly simple in its concept. The most important point to stress here is that the CPU itself has no “understanding” of any program. It can deal only with single instructions written in its own, CPU-specific, machine code. With that, it keeps the processing state pretty much for this instruction alone. Once the instruction has been executed, the CPU “forgets” everything it had done and starts a new life executing the next instruction.

While it is not at all necessary to know all the machine code instructions of any given CPU in order to understand how it works, it is essential to grasp the basic concept.

As Donald Knuth opined in his seminal work [3], “A person who is more than casually interested in computers should be well schooled in machine language, since it is a fundamental part of a computer.” This is all the more true right now—without understanding the machine language constructs one cannot even approach the subject of virtualization.

Fortunately, the issues involved are surprisingly straightforward, and these can be explained using only a few instructions. To make things simple, at this point we will avoid referring to the instructions of any existing CPU. We will make up our own instructions as we go along. Finally, even though the CPU “sees” instructions as bit strings, which ultimately constitute the machine-level code, there is no need for us even to think at this level. We will look at the text that encodes the instructions—the assembly language.

Every instruction consists of its operation code (opcode), which specifies (no surprise here!) an operation to be performed, followed by the list of operands. To begin with, to perform any operation on a variable stored in memory, a CPU must first load this variable into a register.

As a simple example: to add two numbers stored at addresses 10002 and 10010, respectively, a program must first transfer these into two CPU registers—say R1 and R2. This is achieved with a LOAD instruction, which does just that: loads something into a register. The resulting program looks like this:

LOAD R1, @10002

LOAD R2, @10010

ADD R1, R2

(The character “@” here, in line with assembly-language conventions, signals indirect addressing. In other words, the numeric string that follows “@” indicates an address from which to load the value of a variable rather than the value itself. When we want to signal that the addressing is immediate, that is, that the actual value of a numeric string is to be loaded, we precede it with the character “#,” as in LOAD R1, #3.)

The last instruction in the above little program, ADD, results in adding the values of both registers and storing the sum, as defined by our machine language, in the second operand register, R2.

In most cases, a program needs to store the result somewhere. A STORE instruction—which is, in effect, the inverse of LOAD—does just that. Assuming that variables x, y, and z are located at addresses 10002, 10010, and 10020, respectively, we can augment our program with the instruction STORE R2, @10020 to execute a C-language assignment statement: z = x + y.
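As a rough illustration, the four-instruction program can be mimicked in a few lines of Python. This is only a sketch of our made-up machine: the function names, the dictionary-based memory, and the sample values of x and y are assumptions introduced here, not part of any real CPU.

```python
# Toy model of the chapter's made-up machine; all Python names are illustrative.
memory = {10002: 7, 10010: 35, 10020: 0}   # x, y, z (sample values are assumptions)
registers = {"R1": 0, "R2": 0}

def load(reg, address):
    """LOAD reg, @address: copy a memory cell into a register."""
    registers[reg] = memory[address]

def add(src, dst):
    """ADD src, dst: the sum is stored in the second operand register."""
    registers[dst] = registers[src] + registers[dst]

def store(reg, address):
    """STORE reg, @address: copy a register into a memory cell."""
    memory[address] = registers[reg]

load("R1", 10002)     # LOAD  R1, @10002
load("R2", 10010)     # LOAD  R2, @10010
add("R1", "R2")       # ADD   R1, R2
store("R2", 10020)    # STORE R2, @10020, completing z = x + y
print(memory[10020])  # prints 42
```

Note that R1 still holds x afterwards, while R2 has been overwritten with the sum.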

Similarly, arithmetic instructions other than ADD can be introduced, but they hardly need any additional explanation. It is worth briefly mentioning the logical instructions: AND and OR, which perform the respective operations bitwise. Thus, the instruction OR R1, X sets those bits of R1 that are set in X; and the instruction AND R1, X resets those bits of R1 that are reset in X. A combination of logical instructions, along with the SHIFT instruction (which shifts the register bits a specified number of bits to the right or to the left, depending on the parameter value, while resetting the bits that were shifted), can achieve any manipulation of bit patterns.
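The effect of the logical and shift instructions is perhaps easiest to see on concrete bit patterns. The sketch below assumes an 8-bit register and a sign convention for the shift parameter (positive for left, negative for right); both are choices made here for readability rather than properties of our machine.

```python
WIDTH = 8                      # assumed register width
MASK = (1 << WIDTH) - 1

def op_or(r, x):
    """OR R, X: set those bits of R that are set in X."""
    return (r | x) & MASK

def op_and(r, x):
    """AND R, X: reset those bits of R that are reset in X."""
    return (r & x) & MASK

def op_shift(r, n):
    """SHIFT: positive n shifts left, negative n shifts right; vacated bits are 0."""
    return (r << n) & MASK if n >= 0 else r >> -n

r1 = 0b01010000
r1 = op_or(r1, 0b00000011)     # sets bits 0 and 1        -> 0b01010011
r1 = op_and(r1, 0b11110001)    # resets bits 1, 2, and 3  -> 0b01010001
field = op_and(op_shift(r1, -4), 0b00001111)   # upper four bits -> 0b0101
print(bin(r1), bin(field))     # prints 0b1010001 0b101
```

The last line shows the usual idiom for extracting a bit field: shift it to the low end of the register, then mask off everything else.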

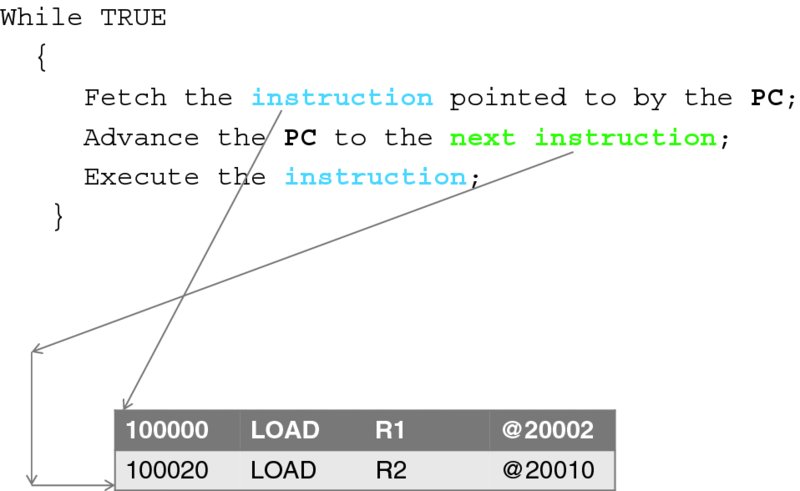

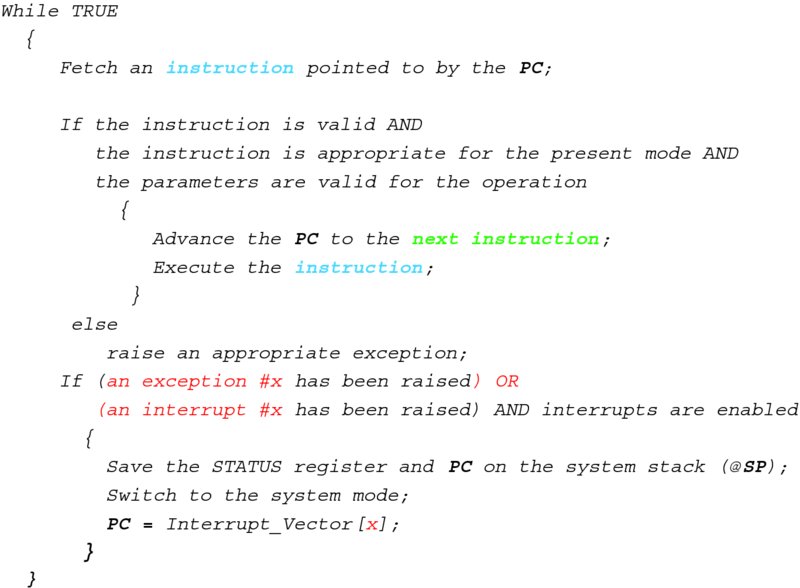

We will introduce other instructions as we progress. Now we are ready to look at the first—and also very much simplified—description of a CPU working mechanism, as illustrated in Figure 3.3. We will keep introducing nuances and important detail to this description.

Figure 3.3 A simplified CPU loop (first approximation).

The CPU works like a clock—which is, incidentally, a very deep analogy with the mechanical world. All the operations of a computer are carried out at the frequency of the impulses emitted by a device called a computer clock, just as the parts of a mechanical clock move in accordance with the swinging of a pendulum. To this end, the speed of a CPU is measured by the clock frequency it can support.

All a CPU does is execute a tight infinite loop, in which an instruction is fetched from the memory and executed. Once this is done, everything is repeated. The CPU carries no memory of the previous instruction, except what is remaining in its registers.

If we place our little program into memory location 200000,2 then we must load the PC register with this value so that the CPU starts to execute the first instruction of the program. The CPU then advances the PC to the next instruction, which happens to be at the address 200020. It is easy to see how the rest of our program gets executed.
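The loop of Figure 3.3 can be sketched as follows. The instruction spacing of 20 is taken from the addresses above; the HALT instruction, the tuple encoding of instructions, and the sample data values are assumptions added only so that the sketch terminates and can be run.

```python
INSTRUCTION_SIZE = 20   # matches the step from 200000 to 200020 in the text

memory = {
    200000: ("LOAD", "R1", 10002),
    200020: ("LOAD", "R2", 10010),
    200040: ("ADD", "R1", "R2"),
    200060: ("STORE", "R2", 10020),
    200080: ("HALT",),               # hypothetical; a real CPU loops forever
    10002: 7, 10010: 35, 10020: 0,   # sample data (assumed values)
}
cpu = {"PC": 200000, "R1": 0, "R2": 0}
trace = []                           # addresses of the instructions fetched

while True:
    instruction = memory[cpu["PC"]]          # fetch
    trace.append(cpu["PC"])
    cpu["PC"] += INSTRUCTION_SIZE            # PC now points to the next instruction
    opcode, *operands = instruction          # execute
    if opcode == "LOAD":
        cpu[operands[0]] = memory[operands[1]]
    elif opcode == "ADD":
        cpu[operands[1]] += cpu[operands[0]]
    elif opcode == "STORE":
        memory[operands[1]] = cpu[operands[0]]
    elif opcode == "HALT":
        break

print(memory[10020])   # prints 42
```

Nothing in the loop refers to the program as a whole: each pass depends only on the PC and on whatever the previous instructions left in the registers and memory.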

Here, however, for each instruction of our program, the next instruction turns out to be just the next instruction in the memory. This is definitely not the case for general programming, which requires more complex control-transfer capabilities, which we are ready to discuss now.

3.2.3 In-program Control Transfer: Jumps and Procedure Calls

At a minimum, in order to execute the “if–then–else” logic, we need an instruction that forces the CPU to “jump” to an instruction stored at a memory address different from that of the next instruction in contiguous memory. One such instruction is the JUMP instruction. Its only operand is a memory address, which becomes the value of the PC register as a result of its execution.

Another instruction in this family, JNZ (Jump if Non-Zero), effects conditional transfer to an address provided in the instruction's only operand. Non-zero here refers to the value of the zero bit of the STATUS register. This bit is set every time the result of an arithmetic or logical operation is zero, a bit of housekeeping done by the CPU with the help of the ALU circuitry. When executing this instruction, the CPU does nothing but change the value of the PC to that of the operand, and it does so only if the zero bit is not set; otherwise, execution continues with the next instruction in memory. The STATUS register typically holds other conditional bits to indicate whether the numeric result is positive or negative. To make the programmer's job easier (and its results faster), many CPUs provide additional variants of conditional transfer instructions.
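To see how a conditional jump yields a loop, consider the following sketch of a tiny interpreter. It is only an illustration: instruction addresses are simply list indices, and the immediate-load and immediate-add instructions (LOADI and ADDI) are hypothetical names introduced here.

```python
def run(program):
    cpu = {"PC": 0, "R1": 0, "R2": 0, "ZERO": False}
    while cpu["PC"] < len(program):
        opcode, *args = program[cpu["PC"]]
        cpu["PC"] += 1                    # default: the next instruction in memory
        if opcode == "LOADI":             # LOAD reg, #value (immediate)
            cpu[args[0]] = args[1]
        elif opcode == "ADD":             # ADD src, dst; updates the zero bit
            cpu[args[1]] += cpu[args[0]]
            cpu["ZERO"] = cpu[args[1]] == 0
        elif opcode == "ADDI":            # hypothetical: add an immediate value
            cpu[args[0]] += args[1]
            cpu["ZERO"] = cpu[args[0]] == 0
        elif opcode == "JUMP":            # unconditional: always overwrite the PC
            cpu["PC"] = args[0]
        elif opcode == "JNZ":             # overwrite the PC only if zero bit is clear
            if not cpu["ZERO"]:
                cpu["PC"] = args[0]
    return cpu

program = [
    ("LOADI", "R1", 3),     # 0: counter = 3
    ("LOADI", "R2", 0),     # 1: sum = 0
    ("ADD", "R1", "R2"),    # 2: sum += counter
    ("ADDI", "R1", -1),     # 3: counter -= 1; sets the zero bit when it reaches 0
    ("JNZ", 2),             # 4: repeat while counter != 0
]
result = run(program)
print(result["R2"])         # prints 6, that is, 3 + 2 + 1
```

The only thing that distinguishes JNZ from JUMP is the test of the zero bit; the transfer itself is the same assignment to the PC.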

More interesting—and fundamental to all modern CPUs—is an instruction that transfers control to a procedure. Let us call this instruction JPR (Jump to a Procedure). Here, the CPU helps the programmer in a major way by automatically storing the present value of the PC (which, according to Figure 3.3, initially points to the next instruction in memory) on the stack3—pointed to by the SP. With that, the value of the SP is changed appropriately. This allows the CPU to return control to exactly the place in the program where the procedure was called. To achieve that, there is an operand-less instruction, RTP (Return from a Procedure). This results in popping the stack and restoring the value of the PC. This must be the last instruction in the body of every procedure.
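The interplay of JPR and RTP can be sketched in a few lines. In this sketch a Python list stands in for the process stack, addresses are small integers chosen arbitrarily, and the LOG and HALT instructions are hypothetical additions used only for tracing and for stopping the run.

```python
def run(program, start=0):
    stack, log, pc = [], [], start
    while True:
        opcode, *args = program[pc]
        pc += 1                      # the PC already points to the next instruction
        if opcode == "JPR":
            stack.append(pc)         # the CPU pushes the return address ...
            pc = args[0]             # ... and transfers control to the procedure
        elif opcode == "RTP":
            pc = stack.pop()         # the CPU pops the stored PC value
        elif opcode == "LOG":        # hypothetical, for tracing only
            log.append(args[0])
        elif opcode == "HALT":       # hypothetical
            return log

program = {
    0: ("LOG", "main: before call"),
    1: ("JPR", 10),
    2: ("LOG", "main: after call"),
    3: ("HALT",),
    10: ("LOG", "outer procedure"),
    11: ("JPR", 20),                 # a procedure may call another procedure
    12: ("RTP",),
    20: ("LOG", "inner procedure"),
    21: ("RTP",),
}
log = run(program)
print(log)
```

Because each call pushes its own return address, nested calls unwind in the reverse order of invocation without any bookkeeping on the programmer's part.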

There are several important points to consider here.

First, we observe that a somewhat similar result could be achieved just with the JUMP instruction alone; after all, a programmer (or a compiler) could add a couple of instructions to store the PC on the stack. A JUMP—to the popped PC value—at the end of the procedure would complete the task. To this end, everything would have worked even if the CPU had had no notion of the stack at all—it could have been a user-defined structure. The two major reasons that modern CPUs have been developed in the way we describe here are (1) to make procedure calls execute faster (by avoiding the fetching of additional instructions) and (2) to enforce good coding practices and otherwise make adaptability of the ALGOL language and its derivatives straightforward (a language-directed design). As we have noted already, the recursion is built in with this technique.

Second, the notion of a process as the execution of a program should become clearer now. Indeed, the stack traces the control transfer outside the present main line of code. We will see more of this soon. It is interesting that in the 1980s, the programmers at Burroughs Corporation, whose CPU architecture (highly innovative at that time) was ALGOL-directed, used the words process and stack interchangeably! This is a very good way to think of a process: as something effectively represented by its stack, which always traces a single thread of execution.

Third, this structure starts to unveil the mechanism for supporting multi-processing. Assuming that the CPU can store all its states on a process stack and later restore them—the capability we address in the next section—we can imagine that a CPU can execute different processes concurrently by switching among respective stacks.

Fourth (and this is a major security concern), the fact that, when returning from a procedure, the CPU pops the stack and treats as the PC value whatever has been stored there means that if one manages to replace the original stored value of the PC with another memory address, the CPU will automatically start executing the code at that memory address. This fact has been exploited in distributing computer worms. A typical opportunity for overwriting the PC arises when a buffer is a parameter to a procedure (and thus ends up on the stack). For example, if the buffer is to be filled by reading a user-supplied string, and the procedure's code does not check the limits of the buffer, this string can be carefully constructed to pass both (1) the worm code and (2) the pointer to that code so that the pointer overwrites the stored value of the PC. This technique was used successfully by the original Morris worm of 1988 (see [4] for a thorough technical explanation in the context of the worm's history unfolding).4 We will address security in the last section of this chapter.
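The mechanics of such an overwrite can be demonstrated on a toy model, with no real machine involved. The sketch below assumes a deliberately simplified frame layout (a four-unit buffer immediately followed by the saved PC), which is not the layout of any particular CPU; the addresses are arbitrary, and the function name is hypothetical.

```python
BUFFER_SIZE = 4
SAVED_PC = 100070        # arbitrary return address for the illustration

def call_with_input(data, check_bounds):
    """Copy data into a stack buffer and report what RTP would load into the PC."""
    # Simplified frame, one list cell per unit: [buffer x 4][saved PC]
    frame = [0] * BUFFER_SIZE + [SAVED_PC]
    if check_bounds and len(data) > BUFFER_SIZE:
        raise ValueError("input longer than buffer")
    for i, value in enumerate(data):     # without the check, the copy runs past the buffer
        frame[i] = value
    return frame[BUFFER_SIZE]

print(call_with_input([1, 2, 3, 4], check_bounds=False))           # prints 100070
print(call_with_input([1, 2, 3, 4, 666000], check_bounds=False))   # prints 666000
```

With the fifth element, the stored PC is replaced by a value of the caller's choosing. The same call with check_bounds=True raises an error instead, which is precisely the limit check that the vulnerable procedure omits.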

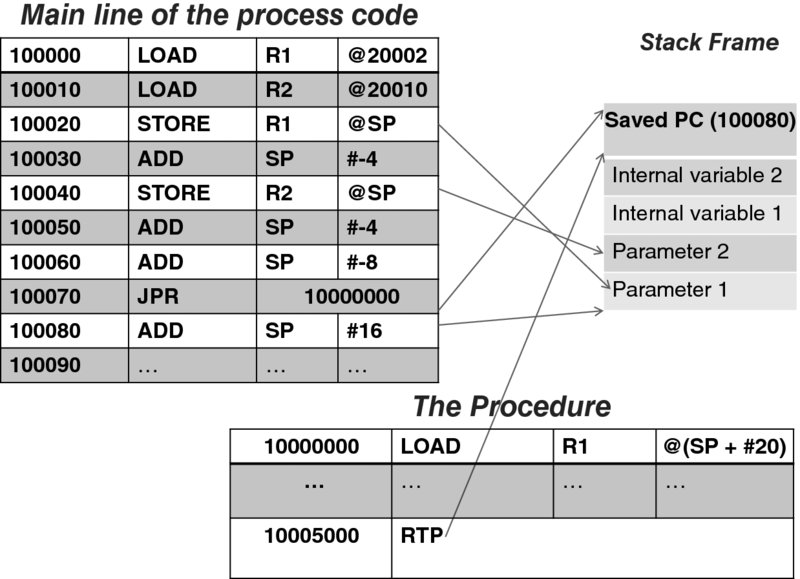

For what follows, it is important to elaborate more on how the stack is used in implementing procedure calls.

With a little help from the CPU, it is now a programmer's job (if the programmer writes in an assembly language) or a compiler's job (if the programmer writes in a high-level language) to handle the parameters for a procedure. The long-standing practice has been to put them on the stack before calling the procedure.

Another essential matter that a programmer (or a compiler writer) must address in connection with a procedure call is the management of the variables that are local to the procedure. Again, a long-standing practice here is to allocate all the local memory on the stack. One great advantage of doing so is to enable recursion: each time a procedure is invoked, its parameters and local memory are separate from those of the previous invocation.

Figure 3.4 illustrates this by following the execution of an example program,5 along with the state of the process stack at each instruction. Here, a procedure stored at location 1000000 is called from the main program. The procedure has two parameters stored at locations 20002 and 20010, respectively.

Figure 3.4 The process stack and the procedure call.

The first six instructions implement the act of pushing the procedure parameters on the stack. (Note that we consider each parameter to be four units long, hence ADD SP #-4; of course, since the stack by convention grows toward lower addresses, the value of the stack pointer is decreased.)

The seventh instruction (located at the address 100060) prepares the internal memory of the procedure on the stack, which in this particular case needs eight units of memory for the two four-unit-long variables.

In the eighth instruction, the procedure code is finally invoked. This time the CPU itself pushes the value of the PC (also four bytes long) on the stack; then, the CPU loads the PC with the address of the procedure. At this point, the procedure's stack frame has been established.

Execution of the first instruction of the procedure results in retrieving the value of the first parameter, which, as we know, is located on the stack, exactly 20 units above the stack pointer. Similarly, another parameter and the internal variables are accessed indirectly, relative to the value of the stack pointer. (We intentionally did not show the actual memory location of the stack: with this mode of addressing, that location is irrelevant as long as the stack is initialized properly! This is a powerful feature in that it eliminates the need for absolute addressing. Again, this feature immediately supports recursion, as the same code will happily execute with a new set of parameters and new internal memory.)

When the procedure completes, the RTP instruction is executed, which causes the CPU to pop the stack and restore the program counter to its stored value. Thus, the program control returns to the main program. The last instruction in the example restores the stack to its original state.
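The arithmetic of this stack frame can be checked with a short sketch. It assumes, consistently with the offset of 20 units quoted above, that the stack pointer designates the next free location (a value is stored at SP, and SP is then decreased). The starting stack address, the parameter values, and the return address 100080 are assumptions made for the illustration.

```python
STACK_BASE = 5000                 # arbitrary; the actual location is irrelevant
memory = {20002: 11, 20010: 22}   # the two parameters (sample values)
sp = STACK_BASE

def push(value):
    """Store a four-unit value at SP, then move SP down by four."""
    global sp
    memory[sp] = value
    sp -= 4

push(memory[20002])               # first parameter
push(memory[20010])               # second parameter
sp -= 8                           # room for two four-unit local variables
push(100080)                      # JPR: the CPU pushes the return address (assumed)

# Inside the procedure, everything is addressed relative to SP.
first_param = memory[sp + 20]
second_param = memory[sp + 16]
print(first_param, second_param)  # prints 11 22

sp += 4                           # RTP: the CPU pops the stored PC ...
pc = memory[sp]
sp += 16                          # ... and the caller releases locals and parameters
print(pc, sp == STACK_BASE)       # prints 100080 True
```

Changing STACK_BASE changes none of the offsets, which is the point made above about the irrelevance of the stack's actual location.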

A minor point to note here is that a procedure may be a function—that is, it may return a value. How can this value be passed to the caller? Storing it on the stack is one way of achieving that; the convention of the C-language compilers though has been to pass it in a register—it is faster this way.

This concludes the discussion of a procedure call. We are ready to move on to the next level of detail of CPU mechanics, motivated by the new forms of control transfer.

3.2.4 Interrupts and Exceptions—the CPU Loop Refined

So far, the simple CPU we have designed can only deal with one process. (We will continue with this limitation in the present section.) The behavior of the process is determined by the set of instructions in its main line code—and the procedures, to which control is transferred—but still in an absolutely predictable (sometimes called “deterministic” or “synchronous”) manner. This type of CPU existed in the first several decades of computing, and it does more or less what is needed to perform in-memory processing. It has been particularly suitable for performing complex mathematical algorithms, so long as not much access to I/O devices is needed.

But what happens when an I/O request needs to be processed? With the present design, the only solution is to have a subroutine that knows how to access a given I/O device—say a disk or a printer. We can assume that such a device is memory-mapped: there is a location in main memory to write the command (read or write), pass a pointer to a data buffer where the data reside (or are to be read into), and also a location where the status of the operation can be checked. After initiating the command, the process code can do nothing else except execute a tight loop checking for the status.
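The cost of this arrangement can be made tangible with a sketch. The class below is a stand-in for a memory-mapped device with command, buffer, and status locations; its names and the notion that the device finishes after a fixed number of status reads are assumptions made purely for the illustration.

```python
class ToyDevice:
    """Stand-in for a memory-mapped device: command, buffer, and status locations."""

    def __init__(self, polls_until_done):
        self.command = None
        self.buffer = None
        self.status = "IDLE"
        self._remaining = polls_until_done

    def read_status(self):
        # Each status read lets the pretend hardware make a little progress.
        if self.status == "BUSY":
            self._remaining -= 1
            if self._remaining <= 0:
                self.status = "DONE"
        return self.status

def write_blocking(device, data):
    """Initiate a write, then spin on the status location until it completes."""
    device.buffer = data
    device.command = "WRITE"
    device.status = "BUSY"
    wasted_polls = 0
    while device.read_status() != "DONE":   # tight loop: no useful work is done
        wasted_polls += 1
    return wasted_polls

wasted = write_blocking(ToyDevice(polls_until_done=1000), b"one block")
print(wasted)   # prints 999
```

Every one of those iterations is a CPU cycle that could have been spent on computation.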

Historically, it turned out that for CPU-intensive numeric computation such an arrangement was more or less satisfactory, because processing I/O was an infrequent action compared with computation. For business computing, however, where heavy use of disks, multiple tapes, and printers was required most of the time, CPU cycles wasted on polling were a major performance bottleneck. As the devices grew more complex, this problem was further aggravated by the need to maintain interactive device-specific protocols, which required even more frequent polling and waiting. (Consider the case when each byte to a printer needs to be written separately, followed by a specific action based on how the printer responds to processing the previous byte.) For this reason, the function of polling was transferred into the CPU loop at the expense of a change (and a dramatic change at that!) of the computational model.

The gist of the change is that whereas before a subroutine was called from a particular place in a program determined by the programmer (whether from the main line or another subroutine), now the CPU gets to call certain routines by itself, acting on a communication from a device. With that, the CPU effectively interrupts the chain of instructions in the program, which means that the CPU needs to return to this chain exactly at the same place where it was interrupted. More terminology here: the signal from a device, which arrives asynchronously with respect to the execution of a program, is appropriately called an interrupt; everything that happens from that moment on, up to the point when the execution of the original thread of instruction resumes, is called interrupt processing.

Of course, the actual code for processing the input from a device—the interrupt handling routine—still has to be written by a programmer. But since this routine is never explicitly called from a program, it has to be placed in a specified memory location where the CPU can find it. This location is called an interrupt vector. Typically, each device has its own vector—or even a set of vectors for different events associated with the device. (In reality this may be more complex, but such a level of detail is unnecessary here.) At initialization time, a program must place the address of the appropriate interrupt routine in the slot assigned to the interrupt vector. The CPU jumps to the routine when it detects a signal from the device. When the interrupt is serviced, the control is returned to the point where the execution was interrupted—the execution of the original program is resumed.
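In outline, the arrangement looks as follows. The sketch uses Python functions as stand-ins for routines residing in memory; the slot number, the interrupted address, and the function names are hypothetical, while the routine address 30000000 is simply an arbitrary example.

```python
interrupt_vectors = {}       # slot number -> address of the service routine
routines = {}                # address -> Python function standing in for the code
received = []

def disk_service_routine():
    """Written by a programmer, but never called explicitly from a program."""
    received.append("disk block ready")

# Initialization: place the routine's address in the slot assigned to the device.
DISK_VECTOR = 5              # hypothetical slot number
routines[30000000] = disk_service_routine
interrupt_vectors[DISK_VECTOR] = 30000000

def cpu_on_interrupt(number, interrupted_pc):
    """On a device signal: look up the vector, run the routine, then resume."""
    address = interrupt_vectors[number]
    routines[address]()
    return interrupted_pc    # control returns to the point of interruption

resume_at = cpu_on_interrupt(DISK_VECTOR, interrupted_pc=200040)
print(received, resume_at)   # prints ['disk block ready'] 200040
```

The program's only involvement is the one-time installation of the address; from then on the transfer of control is initiated by the CPU.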

This mechanism provides the means to deal with the external events that are asynchronous with the execution of a program. For reasons that will become clear later, the same mechanism is also used for handling certain events that are actually synchronous with program execution. They are synchronous in that they are caused by the very instruction to be executed (which, as a result, may end up being not executed). Important examples of such events are:

- A computational exception (an attempt to divide by zero).

- A memory-referencing exception (such as an attempt to read or write to a non-existent location in memory).

- An attempt to execute a non-existing instruction (or an instruction that is illegal in the current context).

- (A seemingly odd one!) An explicit in-line request (called a trap) for processing an exception. In our CPU this is caused by an instruction (of the form TRAP <trap number>) which allows a parameter—the trap number—to associate a specific interrupt vector with the trap, so that different trap numbers may be processed differently.

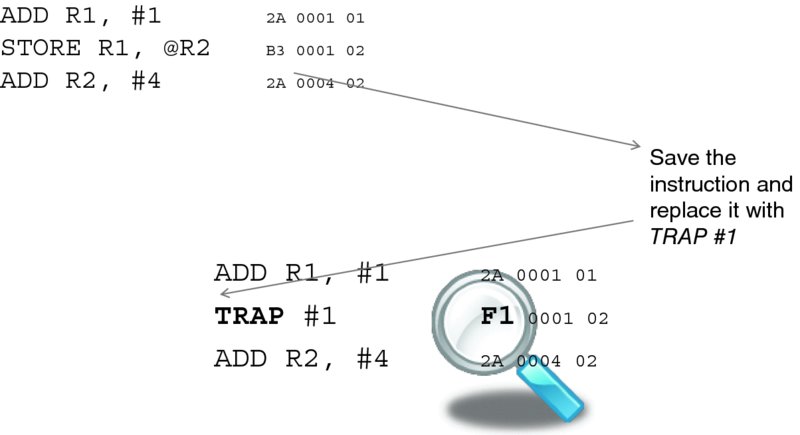

The fundamental need for the trap instruction will be explained later, but one useful application is in setting breakpoints for debugging. When a developer wants a program to stop at a particular place so that the memory can be examined, the instruction at that place is replaced with the trap instruction, as Figure 3.5 illustrates. The same technique is used by hypervisors to deal with non-virtualizable instructions, and we will elaborate on this later too.
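The breakpoint technique can be sketched as follows. The trap number, the single ADDI instruction (an immediate add to an accumulator), and the decision to restore the original instruction as soon as the trap is hit are all assumptions made for this illustration; real debuggers differ in how and when they restore the patched instruction.

```python
BREAKPOINT_TRAP = 3          # hypothetical trap number

def run(program, breakpoints):
    saved = {}
    for address in breakpoints:              # the debugger patches the code
        saved[address] = program[address]
        program[address] = ("TRAP", BREAKPOINT_TRAP)
    pc, acc, snapshots = 0, 0, []
    while pc < len(program):
        opcode, arg = program[pc]
        if opcode == "TRAP":
            snapshots.append((pc, acc))      # the state can be examined here
            program[pc] = saved[pc]          # put the original instruction back
            continue                         # and execute it on the next pass
        if opcode == "ADDI":
            acc += arg
        pc += 1
    return acc, snapshots

program = [("ADDI", 1), ("ADDI", 10), ("ADDI", 100)]
result, snapshots = run(program, breakpoints=[2])
print(result, snapshots)     # prints 111 [(2, 11)]
```

The program computes the same result as it would without the breakpoint; the trap merely gives the debugger control at the chosen place, before the original instruction executes.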

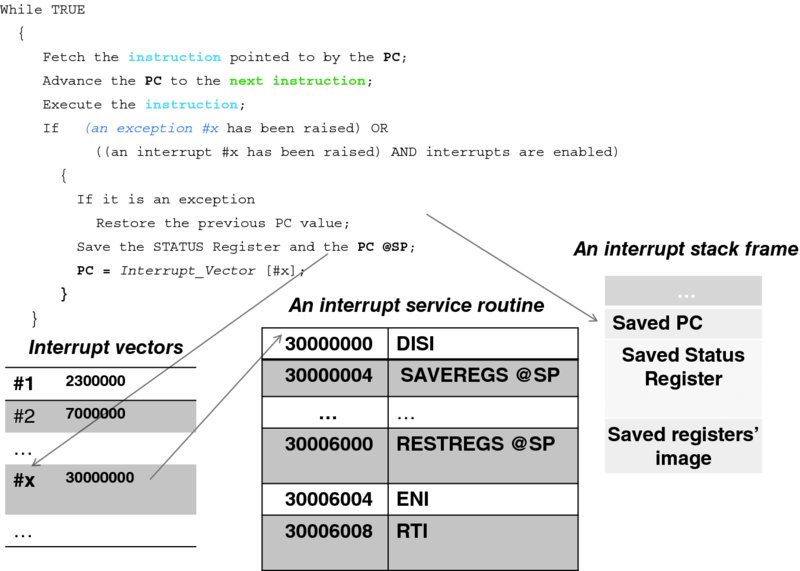

Figure 3.6 illustrates the following discussion.

Figure 3.5 Setting a breakpoint.

Figure 3.6 The second approximation of the CPU loop.

We are ready to modify the simplistic CPU loop of Figure 3.3. The new loop has a check for an interrupt or exception signal. Note that, as far as the CPU is concerned, the processing is quite deterministic: the checking occurs exactly at the end of the execution of the present instruction, no matter when the signal arrived. The CPU's internal circuitry allows it to determine the type of interrupt or exception, which is reflected in the interrupt number x. This number serves as the index to the interrupt vector table.6 There is an important difference between processing interrupts and processing exceptions: an instruction that has caused an exception has not been executed; therefore, the value of the PC to be stored must remain the same. Other than that, there is no difference in processing between interrupts and exceptions, and to avoid pedantic repetition, the rest of this section uses the word “interrupt” to mean “an interrupt or an exception.”

The table on the left in Figure 3.6 indicates that the interrupt service routine for processing interrupt x starts at location 30000000. The CPU deals with this code similarly to that of a procedure; however, this extraordinary situation requires an extraordinary set of actions! Different CPUs do different things here; our CPU does more or less what all modern CPUs do.

To this end, our CPU starts by saving the present value of the STATUS register on the process stack. The reason is that whatever conditions have been reflected in the STATUS register flags as a result of the previous instruction will disappear when the new instruction is executed. For example, if the program needs to branch when a certain number is greater than another, this may result in four instructions, as follows:

- Two instructions to load the respective values into R0 and R1.

- One instruction to subtract R1 from R0.

- One last instruction to branch, depending on whether the result of the subtraction is positive.

The execution of the last instruction depends on the flag set as a result of the execution of the third instruction, and so if the process is interrupted after that instruction, it will be necessary for it to have the flags preserved when it continues.

After saving the STATUS register, the CPU saves the value of the PC, just as it did with the procedure call stack frame. Then it starts executing the interrupt service routine.

The first instruction of the latter must be the DISI (Disable Interrupts) instruction. Indeed, from the moment the CPU discovers that an interrupt is pending—and up to this instruction—everything has been done by the CPU itself, and it would not interrupt itself! But the next interrupt from the same device may have arrived already. If the CPU were to process it, this very interrupt routine would be interrupted. A faulty device (or a malicious manipulation) would then result in a set of recursive calls, causing the stack to grow until it overflows, which will eventually bring the whole system down. Hence, it is necessary to disable interrupts, at least for a very short time—literally for the time it takes to execute a few critical instructions.

Next, our sample interrupt service routine saves the rest of the registers (those excluding the PC and the STATUS register) on the stack using the SAVEREGS instruction.7 With that, in effect, the whole state of the process is saved. Even though all the execution of the interrupt service routine uses the process stack, it occurs independently of—or concurrently with—the execution of the process itself, which is blissfully unaware of what has happened.

The rest of the interrupt service routine code deals with whatever else needs to be done, and when it is finished, it will restore the process's registers (via the RESTREGS instruction) and enable interrupts (via the penultimate instruction, ENI). The last instruction, RTI, tells the CPU to restore the values of the PC and the STATUS register. The next instruction the CPU will execute is exactly the instruction at which the process was interrupted.

(Typically, and also in the case of our CPU, there is a designated STATUS register flag (bit) that indicates whether the interrupts are disabled. DISI merely sets this flag, and ENI resets it.)
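The sequence just described can be sketched in a few lines of Python. This is only a toy model of our CPU, not an implementation of any real one: the class name ToyCPU, its method names, and the bit position chosen for the interrupt-disable flag are assumptions made for illustration.

```python
from dataclasses import dataclass, field

INTERRUPTS_DISABLED = 0x01  # assumed bit position; the text does not specify it


@dataclass
class ToyCPU:
    pc: int = 0
    status: int = 0
    stack: list = field(default_factory=list)         # the process stack
    vector_table: dict = field(default_factory=dict)  # interrupt number -> routine address

    def enter_interrupt(self, x: int) -> None:
        """What the CPU itself does once it finds interrupt x pending."""
        self.stack.append(self.status)   # save STATUS first, so the flags survive
        self.stack.append(self.pc)       # then save the PC, as for a procedure call
        self.pc = self.vector_table[x]   # continue at the interrupt service routine

    def disi(self) -> None:
        self.status |= INTERRUPTS_DISABLED   # DISI sets the flag

    def eni(self) -> None:
        self.status &= ~INTERRUPTS_DISABLED  # ENI resets it

    def rti(self) -> None:
        self.pc = self.stack.pop()       # restore in the reverse order of saving
        self.status = self.stack.pop()
```

Calling enter_interrupt(x), then disi(), eni(), and finally rti() leaves the PC and the STATUS register exactly as they were before the interrupt, which is why the interrupted process never notices.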

An illustrative example is a debugging tool, as mentioned earlier: a tool that allows us to set breakpoints so as to analyze the state of the computation when a breakpoint is reached.8 The objective is to enable the user to set—interactively, by typing a command—a breakpoint at a specific instruction of the code, so that the program execution is stopped when it reaches this instruction. At that point the debugger displays the registers and waits for the next command from the user.

Our debugging tool, as implemented by the command_line( ) subroutine, which is called by the TRAP #1 service routine, accepts the following six commands:

- Set <location>, which sets the breakpoint in the code to be debugged. Because this may need to be reset, the effect of the command is that

- both the instruction (stored at <location>) and the value of <location> are stored in the respective global variables, and

- the instruction is replaced with that of TRAP #1.

Figure 3.5 depicts the effect of the command; following convention, we use hexadecimal notation to display memory values. With that, the opcode for the TRAP #1 instruction happens to be F1.

- Reset, which returns the original instruction, replaced by the trap, to its place.

- Register <name>, <value>, which sets the named register to the given value. The images of all CPU registers (except the PC and the STATUS register, which require separate handling) are kept in a global structure registers_struct, so when, for instance, a user enters the command Register R1, 20, the assignment “registers_struct.R1 = 20;” will be executed.

- Go, to start executing the code, based on the respective values of the registers stored in registers_struct.

- Show <memory_location>, <number_of_units>, which simply provides a core dump of the piece of memory specified by the parameters.

- Change <memory_location>, <value>, which allows us to change the value of a memory unit.
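The Set and Reset commands above can be sketched as follows. The sketch assumes that an instruction opcode fits in one memory unit (one byte of a bytearray here) and that only one breakpoint is active at a time; the class name Breakpoint is hypothetical, while the opcode F1 is the one quoted in the text.

```python
TRAP_1_OPCODE = 0xF1  # the opcode the text gives for TRAP #1


class Breakpoint:
    """Remembers one replaced instruction so that it can be put back."""

    def __init__(self, memory: bytearray):
        self.memory = memory            # stands in for main memory
        self.location = None            # the saved <location>
        self.saved_instruction = None   # the instruction that was there

    def set(self, location: int) -> None:
        if self.location is not None:   # only one breakpoint in this sketch
            self.reset()
        self.saved_instruction = self.memory[location]
        self.location = location
        self.memory[location] = TRAP_1_OPCODE

    def reset(self) -> None:
        if self.location is None:
            return                      # nothing to restore
        self.memory[self.location] = self.saved_instruction
        self.location = None
        self.saved_instruction = None
```

Keeping both the location and the original instruction is what makes Reset possible; without them the trap would overwrite the program irreversibly.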

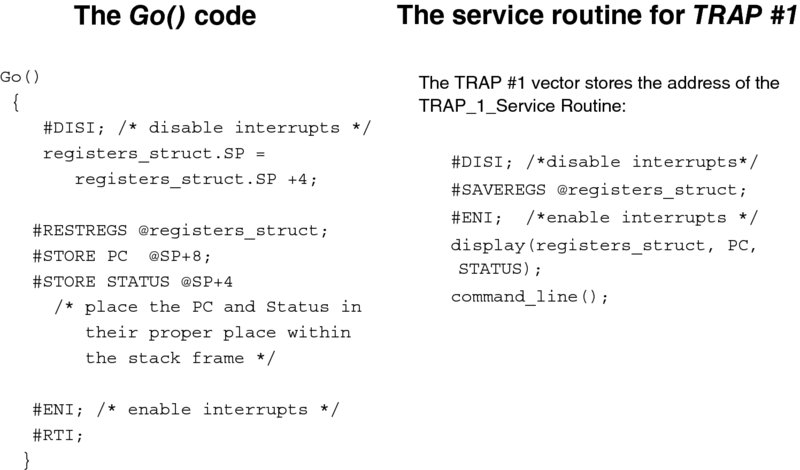

Figure 3.7 Go( ) and the interrupt service routines.

Both the Go( ) procedure and the interrupt vector routine TRAP_1_Service_Routine( ) are presented in Figure 3.7. We will walk through them in a moment, but first let us start with the initialization. In the beginning, we:

- Store the address of (that is, the pointer to) the TRAP_1_Service_Routine( ) at the interrupt vector for the TRAP #1 instruction. (This location, which depends on a particular CPU, is supplied by the CPU manual.)

- Execute the TRAP #1 instruction, which will result in the execution of the TRAP_1_Service_Routine( ). The latter calls the command_line( ) procedure, which prompts the user for a command and then interprets it.

Now we can start debugging. Say we want a program, whose first instruction is located at memory address 300000, to stop when it reaches the instruction located at address 350000.

We type the following three commands:

>Register PC, 300000

>Set 350000

>Go

When the interpreter invokes Go( ), it first pops the stack to make up for the debugger's command-line procedure frame. (With our design, we will never return from this call by executing RTP.) Then it transfers the register values stored in registers_struct to the CPU. The same task is repeated separately for the PC and the STATUS registers, whose values must be modified on the stack to build the proper stack frame. Finally, the RTI instruction is executed.

This will get the program moving. When it reaches the trapped instruction, our TRAP handler will be invoked. As a result, we will see the values of the registers and a prompt again. We can examine the memory, possibly change one thing or another, replace the trapped instruction, and maybe set another trap.

Note that the Go( ) procedure has, in effect, completed interrupt handling: the RTI instruction has not been part of the trap service routine. We can have more than one program in memory, and by modifying the registers appropriately, we may cause another program to run by “returning” to it.

This is a dramatic point: we have all we need to run several processes concurrently!

To do so, we allocate each process appropriate portions of memory for the stack and for the rest of the process's memory, called a heap, where its run-time data reside. We also establish the proper stack frame. In the latter, the value of the PC must point to the beginning of the process's code, and the value of the SP must point to the process's stack. (The rest of the registers do not matter at this point.) We only need to execute the last three instructions of Figure 3.7; the magic will happen when the RTI instruction is executed!9

And thus, with the CPU described so far, however simple it may be, it is possible to make the first step toward virtualization, that is, multi-processing. With this step, multiple processes (possibly belonging to different users) can share a CPU. This is the view of multi-processing “from outside.” The “inner” view, a process's view, is that the process is given its own CPU as a result.

The perception of owning the CPU is the first step toward virtualization. But the ideal of “being virtual” cannot quite be achieved without virtualizing memory, that is, making a process “think” that it has its own full memory space starting from address 0.

These two aspects—the CPU and memory virtualization—are addressed in the next two sections. It turns out that adding new capabilities requires changes to the architecture. As the needs of multi-processing become clear, our CPU will further evolve to support them.

3.2.5 Multi-processing and its Requirements—The Need for an Operating System

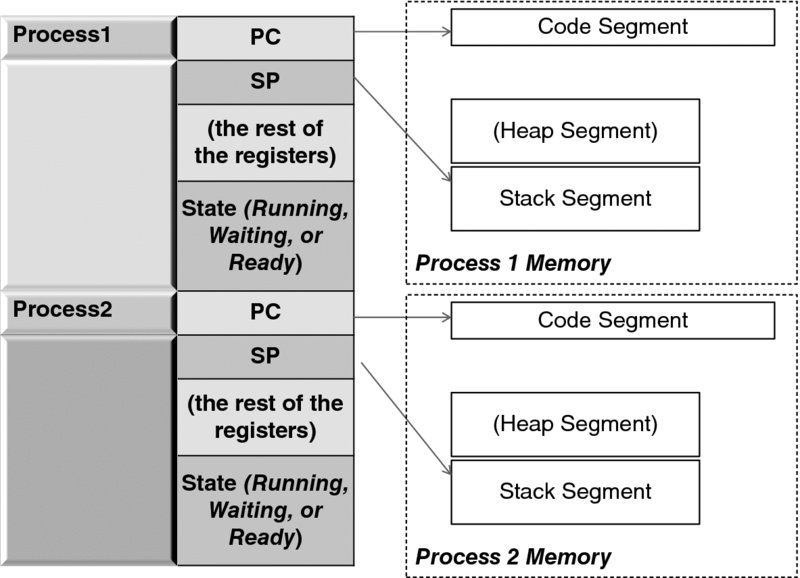

Let us consider the case of only two processes. To keep track of their progress, we create and populate a data structure—an array, depicted in Figure 3.8—each entry of which contains:

- The complete set of values of a process's registers (with the PC initially pointing to the respective program's code segment, and the SP pointing to the stack).

- The state of the process, which tells us whether the process is (a) waiting for some event (such as completion of I/O), (b) ready to run, or (c) in fact, running. Of course, only one of the two processes can be in the last state. (For that matter, with one CPU, only one process can be running, no matter how many other processes there are.)

- The segment and page table pointers (see Section 3.2.6), which indirectly specify the address and size of the process heap memory (that is, the memory allocated for its global variables).

Figure 3.8 The process table.

It is easy to imagine other entries needed for housekeeping, but just with that simple structure—called the process table—we can maintain the processes, starting them in the manner described at the end of the previous section and intervening in their lives during interrupts.
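A minimal sketch of such a process table follows. The field names, the State enumeration, and the helper functions make_entry and dispatch are all hypothetical; only the three kinds of information per entry come from the description above.

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    WAITING = "waiting"   # for some event, such as completion of I/O
    READY = "ready"       # could run if given the CPU
    RUNNING = "running"   # owns the CPU right now


@dataclass
class ProcessEntry:
    registers: dict              # complete register image, including PC and SP
    state: State = State.READY
    segment_table: object = None  # placeholders for the pointers of Section 3.2.6
    page_table: object = None


def make_entry(code_start: int, stack_top: int) -> ProcessEntry:
    """Initial image: the PC points to the code, the SP to the stack."""
    return ProcessEntry(registers={"PC": code_start, "SP": stack_top, "STATUS": 0})


def dispatch(table: list, index: int) -> None:
    """Mark one process as running; with one CPU, any other running process becomes ready."""
    for entry in table:
        if entry.state is State.RUNNING:
            entry.state = State.READY
    table[index].state = State.RUNNING
```

The dispatch helper enforces the invariant stated above: no matter how many entries the table has, at most one of them is in the running state.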

It is also easy to see that two, for the number of processes, is by no means a magic number. The table can have as many entries as memory and design allow.

The program that we need to manage the processes is called an operating system, and its objectives set a perfect example for general management: the operating system has to accomplish much but whatever it does must be done very fast, without noticeably interfering with the lives of the processes it manages.

The major events in those lives occur during interrupts. To this end, the operating system at this point is but a library—a set of procedures called from either the main line of code or the interrupt service routines. Incidentally, the system could even allocate CPU fairly equally among the processes by processing clock interrupts and checking whether a CPU-bound process has exceeded its share of time—the time it is allowed to own the CPU. (This share is called quantum. Its value, assigned at the configuration time, allows us to support the CPU virtualization claim: that a CPU with a speed of θ Hz,10 when shared among N processes, will result in each process being given a virtual CPU of θ/N Hz.)
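To make the role of the quantum concrete, here is a small simulation of sharing one CPU by clock ticks. It is a sketch under simplifying assumptions: every process is CPU-bound, the cost of switching is ignored, and the function name round_robin and its arguments are invented for this example.

```python
from collections import deque


def round_robin(work: dict, quantum: int) -> list:
    """Return the sequence of (process, ticks run) slices.

    work maps a process name to the number of clock ticks it still needs.
    """
    ready = deque(work.items())        # the queue of ready processes
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        ran = min(quantum, remaining)  # a process never runs past its quantum
        timeline.append((name, ran))
        if remaining > ran:            # not finished: back to the end of the queue
            ready.append((name, remaining - ran))
    return timeline
```

With two processes needing 5 and 3 ticks and a quantum of 2, the slices alternate between the two until the shorter one finishes, so while both are active each receives about half of the CPU.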

Overall, the operating system is responsible for the creation and scheduling of the processes and for allocating resources to them. In a nutshell, this is achieved by carefully maintaining a set of queues. In its life, a process keeps changing its state. Unless the process is executing, it is waiting for one thing or another (such as a file record to be read, a message from another process, or a CPU—when it is ready to execute). The operating system ensures that each process is in its proper queue at all times. We will keep returning to the subject of operating systems, but this subject requires an independent study. Fortunately, there are excellent and comprehensive textbooks available [6, 7], which we highly recommend.

Getting back to the architecture at our disposal, we note three fundamental problems.

The first problem is that a user program must somehow be aware of specific addresses in memory where its code and data reside, but these addresses are never known until run time. We defer the resolution of this problem until the next section.

The second problem is that, at the moment, we have no way to prevent a process from accessing another process's memory (whether for malicious reasons or simply because of a programmer's error). Even worse, each process can access the operating system data too, because at this point it is in no way different from the user data.

The third problem is that we cannot prevent processes from executing certain instructions (such as disabling interrupts). If a user can disable interrupts in its program, the computer may become deaf and mute. Incidentally, this is a fine—and extremely important—point that needs some elaboration. Since the system code we discuss is only a library of system routines, it is being executed by one or another process. Indeed, either the process's program calls the respective library procedure directly or it is called during the interrupt—which is processed on the interrupted process's stack. Yet, it is intuitively obvious that only the system code, not the user program code, should be allowed to disable the interrupts. Hence the problem here, again, is that the user code so far has been indistinguishable from the system code.

The last problem has been addressed by recognizing that the CPU must have (at least) two modes of execution: the user mode and the system mode. The former is reserved for user programs and some non-critical system code. The latter is reserved for the critical operating system code (or kernel). With that, certain instructions must be executed only in the system mode. Correspondingly, both CPUs and modern operating systems have evolved to support this principle.

How can we implement it? We observe that the system code has been invoked when either:

- It is called explicitly from a user's program, or

- An interrupt (or an exception) has occurred.

To ensure that system processing is entered only through a designated gate, we postulate that all system calls be made via an exception—by executing the TRAP instruction. (Now we have fulfilled the promise made earlier to explain the further use of this instruction!) Every procedure that needs access to system resources or external devices must do so. This reduces the first case above to the second: Now the system code can be invoked only via an interrupt or exception.

We further postulate that the CPU is (1) to start in the system mode and (2) automatically enter the system mode whenever an interrupt or exception is processed. With that, the CPU may switch from system into user mode only when explicitly instructed (by the kernel) to do so.

In what follows, the main idea is to ensure that what a process can do in the user mode is limited only by what can affect this very process—and no other process or the operating system. To achieve that, we first need to make the CPU aware of what code it is running—the user code or the operating system code. Second, we need to restrict the actions that can be performed in the user mode. Again, the guiding principle is that the CPU starts in the system mode and switches to the user mode only when explicitly instructed to do so. While it is in the user mode, the CPU's actions must be restricted.

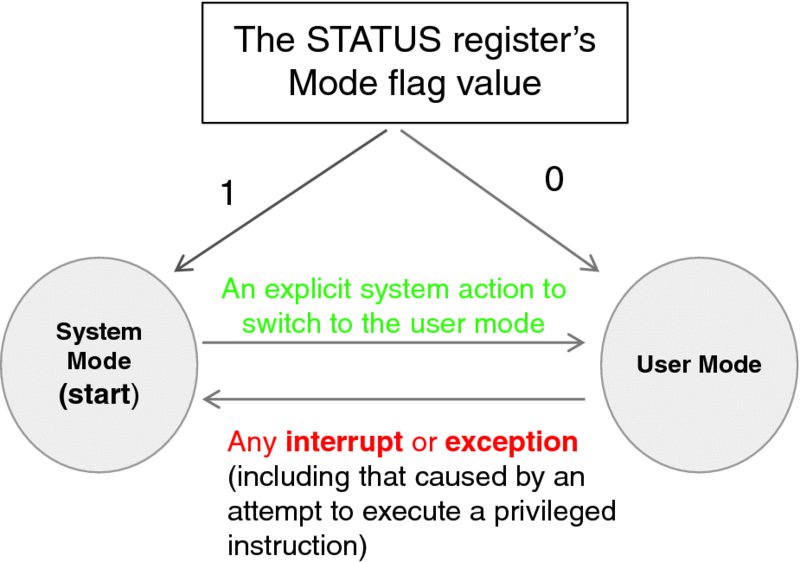

We make the CPU aware of the operating mode by introducing a new mode flag in the STATUS register, which is a bit indicating the system mode (when set) or the user mode (when reset). Figure 3.9 depicts the state machine that governs the transitions between the system and user states.

Figure 3.9 The CPU mode state machine.

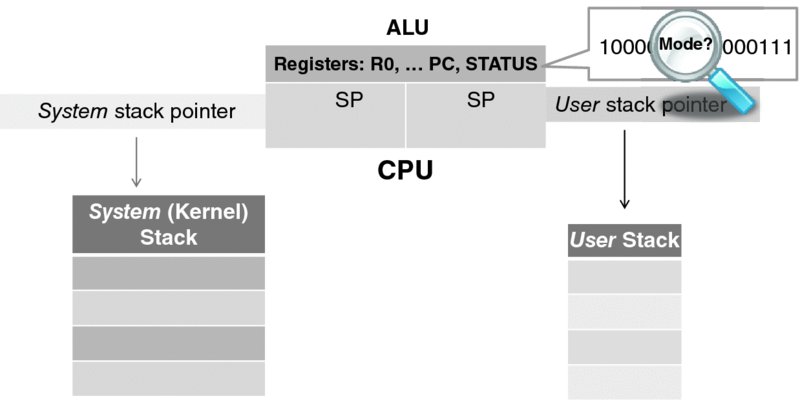

We further modify our CPU by adding a new stack pointer register, so we have two now—one (called the system SP) for the system mode and the other (called the user SP) for the user mode. Figure 3.10 illustrates this modification. The register name (or rather encoding) in instructions intentionally remains invariant over the choice of register, but the CPU knows which register to use because of the mode flag in the STATUS register.

Figure 3.10 The modified CPU and the two process stacks.

With that change, an operating system can now maintain two stacks—one for executing the system code and one for executing the user code. The notion of maintaining two stacks may appear stunningly complex at first, yet it actually simplifies the operating system design. It has been implemented with great success in the Unix operating system, with the design explained in Bach's prominent monograph [8]. (The attentive reader may ask right away which stack is used to save the CPU state at interrupt. The answer is: the system stack. The first thing that happens at interrupt or exception is that the CPU switches to the system mode, which automatically activates the system stack pointer.)
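The way a single register name can refer to two physical stack pointers may be sketched as follows. The bit position of the mode flag and the class name Registers are assumptions; the only point taken from the text is that the mode flag in the STATUS register selects which stack pointer an instruction actually uses.

```python
SYSTEM_MODE = 0x02  # assumed bit position of the mode flag in STATUS


class Registers:
    def __init__(self, system_sp: int, user_sp: int):
        self.status = SYSTEM_MODE   # the CPU starts in the system mode
        self._system_sp = system_sp
        self._user_sp = user_sp

    @property
    def sp(self) -> int:
        """The one register name 'SP', resolved by the current mode."""
        return self._system_sp if self.status & SYSTEM_MODE else self._user_sp

    @sp.setter
    def sp(self, value: int) -> None:
        if self.status & SYSTEM_MODE:
            self._system_sp = value
        else:
            self._user_sp = value   # user code can never reach the system SP
```

Because user-mode code can only ever name the register that resolves to the user SP, it has no way to refer to the system stack pointer at all.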

Next, we introduce privileged instructions: those instructions that can be executed successfully only when the CPU is in the system mode. RTI, DISI, and ENI are the first three instructions in this set. The reason for RTI being privileged is that it causes restoration of the STATUS register, and hence may cause a transition to the user mode. As we postulated earlier, this transition may happen only as a result of the system code execution.

Now, DISI and ENI are mnemonics for operations on a flag in the STATUS register. With our CPU, any instruction (such as logical AND, OR, or LOAD) that alters the value of the STATUS register is declared privileged. Similarly, any instruction that changes the value of the system stack pointer is privileged by design, since the user code has no way even to refer to the system SP.

The context in which an instruction is used is an important factor in determining whether it needs to be privileged or not.

To this end, we postulate that all instructions that deal with the I/O access (present in some CPUs) are privileged. We will discuss handling privileged instructions in Section 3.2.7; first we need to understand the issues in virtual memory management, which necessitates a whole new class of privileged instructions.

3.2.6 Virtual Memory—Segmentation and Paging

The modern notion of memory being virtual has two aspects. First, with virtual memory a process must be unaware of the actual (physical memory) addresses it has been allocated. (Instead, a process should “think” that it and its data occupy the same addresses in memory every time it runs.) Second, the process must “think” that it can actually access all addressable memory, the amount of which typically far exceeds that of the available physical memory. (It is still uncommon, to say the least, to expect a computer with 64-bit addressing to have 16 exbibytes, that is, 2^64 or about 1.8 × 10^19 bytes, of actual memory!)

Except for the need for some advanced hardware address-translation mechanism, common to enabling both aspects, there are significant differences in their respective requirements.

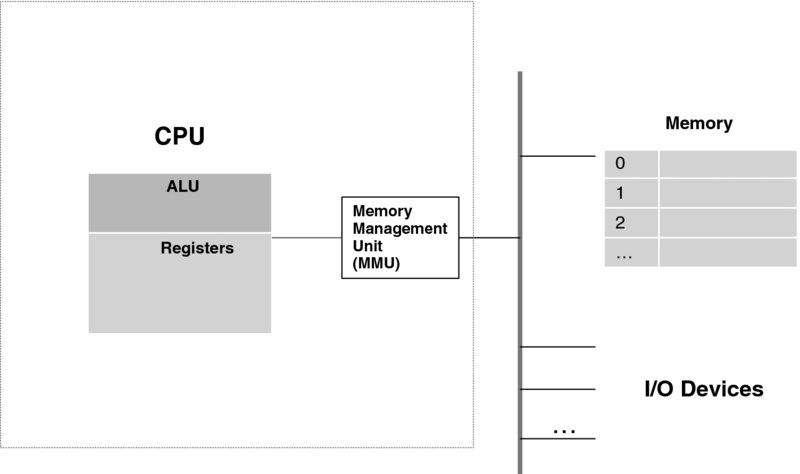

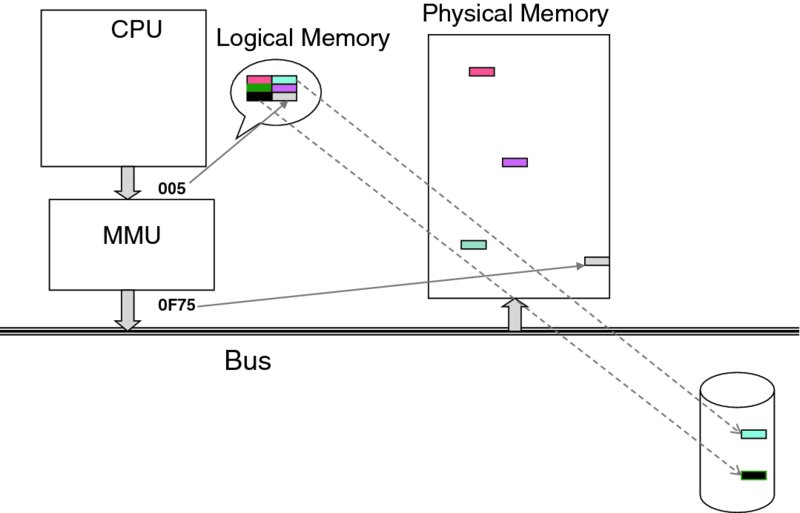

We start with the first aspect. Even in the case of a two-process system, as Figure 3.8 demonstrates, it is clear that the segments allocated to them will have different addresses. Yet, with our CPU, each instruction has its pre-defined address in memory, and the memory allows absolute addressing too. And so, if the code of Process 2 has an instruction LOAD R1, 30000 but the data segment for this process starts at location 5000, the instruction must somehow be changed. One potential approach here is to effectively rewrite the program at load time, but this is impracticable. A far better approach is to employ a tried-and-true electrical engineering midbox trick, depicted in Figure 3.11. This figure modifies Figure 3.2 with a translation device, called a Memory Management Unit (MMU), inserted between the CPU and the bus. This device has a table that translates a logical (i.e., virtual) address into an actual physical address. (In modern CPUs, this device has actually been integrated into the CPU box.)

Figure 3.11 Introducing the MMU.

Suppose a process has been programmed (or compiled) with the following assumptions:

- its code starts at location x;

- the user stack starts at location y;

- the system stack starts at location z; and

- the heap starts at location t.

Each of these is a contiguous piece of memory, called a segment. In fact, even smaller segments can be thought of and used in practice. For instance, it is very convenient to put all initialization code in one place in memory (which can later be reused after initialization is completed). Segments are enumerated and referred to by their numbers.

At the time of loading the process, the operating system brings its code into location x′, while allocating both stack segments and the heap memory segment starting at addresses y′, z′, and t′, respectively. A segment number is an index into the MMU table. One entry in this table is the segment base register (e.g., x′ or y′), which the operating system fills out. The MMU has the circuitry to translate a logical address into a physical address. Thus, the logical address a in the code segment gets translated into a − x + x′, which is the sum of the segment base register, x′, and the offset within the code segment, (a − x). This translation can be performed very fast by hardware, so its effect on timing is negligible.

The MMU also helps to ensure that no process can read or write beyond the segment allocated to it. For that there is another entry in the MMU table: the size of the segment memory which the operating system has allocated. Continuing with the example of the above paragraph, if X is the size of the code segment, then the inequality a − x < X must hold for each address a. If this does not happen, an exception is generated. Again, the comparison with a constant is performed so fast by the MMU circuitry that its effect is negligible.
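The translation and the bounds check performed by the MMU for one segment can be sketched as below. The names Segment, SegmentViolation, and translate are hypothetical; the arithmetic is the one given above, with x the logical start of the segment, x′ its base, and X its size.

```python
from dataclasses import dataclass


class SegmentViolation(Exception):
    """Stands in for the exception the MMU generates."""


@dataclass(frozen=True)
class Segment:
    logical_start: int  # x: where the program assumes the segment starts
    base: int           # x': where the operating system actually placed it
    size: int           # X: the amount of memory allocated to the segment


def translate(segment: Segment, a: int) -> int:
    offset = a - segment.logical_start     # a - x
    if not 0 <= offset < segment.size:     # the check a - x < X (and not below x)
        raise SegmentViolation(f"address {a} is outside the segment")
    return segment.base + offset           # x' + (a - x)
```

For the earlier example of a data segment assumed at 30000 but loaded at 5000, translate(Segment(30000, 5000, 1000), 30010) yields 5010, while an address of 31000 raises the exception.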

Figure 3.12 summarizes the MMU address translation process. We should add that an MMU can do (and typically does) much more in terms of protection. For example, the segment table may also contain flags that indicate whether a segment is executable (thus preventing accidental “walking over” the data, as well as preventing attacks where malicious code is placed into a data segment so as to be executed later). Additional flags can indicate whether a segment is read only, can be shared, and so on. This allows much versatility. For example, an operating system library may be placed into a read-only code segment available to all processes.

Figure 3.12 Segmentation: The MMU translation processing.

The MMU is typically accessed through its registers. It hardly needs an explanation why only privileged instructions may access the MMU entries!

The second aspect of memory virtualization, that is, creating the perception of “infinite” memory, is much more complex, and we will describe it only schematically here. Either of the operating system books [6, 7] does an excellent job of explaining the details.

To begin with, the technology employed here also solves another problem—memory fragmentation. With several processes running, it may be impossible for the operating system to find a contiguous memory segment to allocate to yet another process; however, cumulatively there may be enough “holes” (i.e., relatively small pieces of unused memory) to provide enough contiguous memory, should these “holes” be merged into a contiguous space.

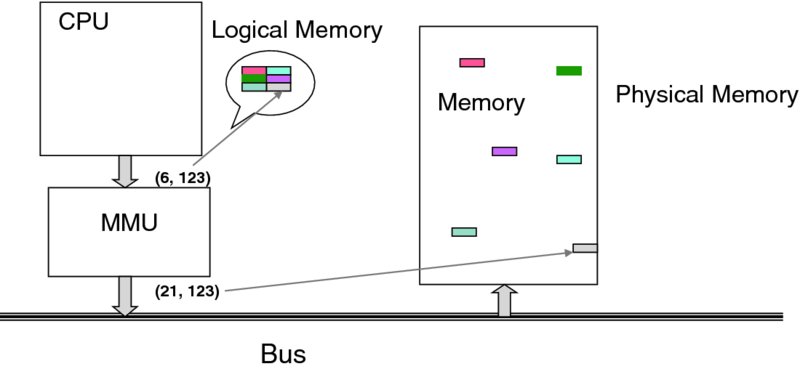

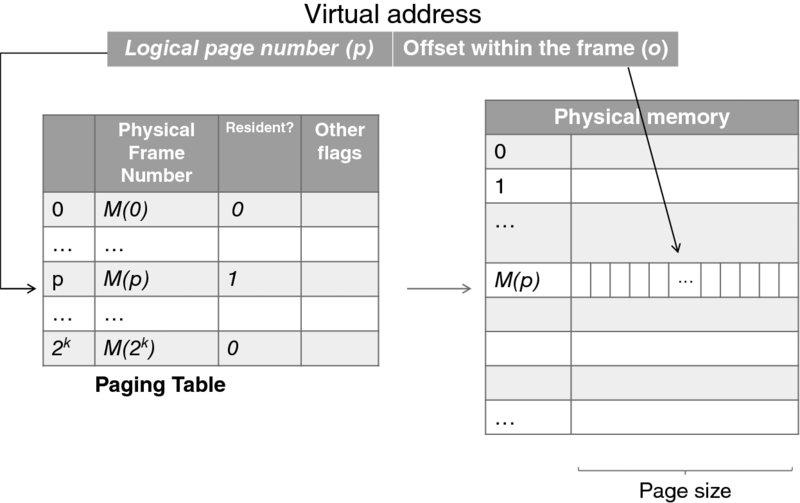

To achieve that, another type of MMU is used. It works as follows. The logical memory is treated as an array of blocks called pages. All pages have the same size (which is always chosen to be a power of 2, so that with the maximum addressable memory n and page size f there are exactly n/f logical pages). Similarly, the actual physical memory is treated as an array of physical frames, all of the same size, which is the same as the page size. Each process has a page table, which, for the moment, maps each logical page into a corresponding frame in physical memory. The MMU uses this table to perform the translation.

Figure 3.13 demonstrates a case where, with the help of the MMU, scattered pieces of memory make up what appears to a process to be a contiguous segment. (The page size is actually a fragmentation unit. In other words, no memory fragment larger than or equal to the page size is left unused.)

Figure 3.13 Paging—establishing contiguous memory.

Suppose that a process needs to access what it thinks is a particular address. As the figure shows, this address is interpreted as belonging to page number 6, with offset 123. The MMU table maps page 6 into the physical frame 21. Ultimately, the address is translated to the location with the same offset, 123, within frame 21, which is the actual physical location.
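The page-to-frame arithmetic can be worked through in a short sketch. The page size of 4096 bytes (12 offset bits) is an assumption chosen only to have concrete numbers, and the function names are invented; page 6, offset 123, and frame 21 are the values from the example.

```python
PAGE_BITS = 12                  # assumed: 2**12 = 4096-byte pages
PAGE_SIZE = 1 << PAGE_BITS
OFFSET_MASK = PAGE_SIZE - 1


def split(address: int) -> tuple:
    """Left-most bits give the page number; the remaining bits are the offset."""
    return address >> PAGE_BITS, address & OFFSET_MASK


def translate(page_table: dict, address: int) -> int:
    page, offset = split(address)
    frame = page_table[page]               # page number -> frame number
    return (frame << PAGE_BITS) | offset   # the offset is carried over unchanged
```

Under the assumed page size, the logical address 6 × 4096 + 123 = 24699 splits into page 6 and offset 123, and with page 6 mapped to frame 21 it translates to 21 × 4096 + 123 = 86139. Choosing the page size as a power of 2 is what lets the split be done with a shift and a mask rather than a division.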

Providing contiguous memory is a fairly simple feature. The most ingenious feature of paging though is that it supports the case when the number of frames in the memory is fewer than the total number of pages used by all active processes. Then, even the logical address space of a single process may be larger than the whole physical memory! To achieve that, the operating system maps the process memory to a storage device (typically, a disk). As Figure 3.14 shows, some pages (called resident pages) are kept in the physical memory, while other pages are stored on the disk. When a logical address that corresponds to a non-resident page is referenced by a process, that page must be brought into physical memory.

Figure 3.14 Storing pages on the disk to achieve the “infinite” memory illusion.

Of course, when all the frames in memory are occupied, it is a zero-sum game: if a page is to be brought into memory, some other page must be evicted first. The choice of the page to be evicted is made by a page-replacement algorithm employed by the operating system. This choice is crucial to performance, and hence substantial research into the problem has been carried out over a period of decades. Its results, now classic, can be found in any operating systems text.

Incidentally, the actual memory/storage picture is not just black-and-white—that is, fast but relatively small and volatile RAM and relatively slow but voluminous and long-lasting disk storage. In Chapter 6 we introduce and discuss different types of storage solution, which together fill in the spectrum between the two extremes and establish the hierarchy of memory.

The page table depicted in Figure 3.15 provides a few hints as to the translation implementation. The left-most bits of a virtual address provide the logical page number; the rest is the offset, which remains invariant. If a page is resident, as indicated by the respective flag in the table, the translation is straightforward (and rather fast). If it is non-resident, the MMU generates an exception—the page fault.

Figure 3.15 Page table and virtual address in-memory translation.

To process a page fault, the operating system needs to find a page to evict. (The page-replacement algorithms use other flags stored in the page table.) Then, the page to be evicted may be written to the disk and, finally, the page that is waiting to be referenced will be read from its place on the disk into the memory frame. If this looks like a lot of work, it is! But with what has been learned about paging, it is made rather efficient. While virtual memory is slower than real memory, complex heuristics-based algorithms make it not that much slower. It is also worthy of note that process page tables can grow large, much larger than what an MMU can hold, which brings into play additional machinery employed in this type of translation.
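The steps of page-fault processing can be sketched as follows. The text does not name a particular page-replacement algorithm, so this sketch simply evicts the page that has been resident longest (first in, first out), which is the simplest choice and not necessarily a good one; the class name Pager and its counters are invented for illustration, and the disk is represented only by counting writes.

```python
from collections import OrderedDict


class Pager:
    def __init__(self, num_frames: int):
        self.free_frames = list(range(num_frames))
        self.resident = OrderedDict()   # page -> frame, oldest resident page first
        self.dirty = set()              # resident pages modified since loading
        self.faults = 0
        self.writes_to_disk = 0

    def access(self, page: int, write: bool = False) -> int:
        if page not in self.resident:   # non-resident: the MMU raises a page fault
            self.faults += 1
            self._handle_fault(page)
        if write:
            self.dirty.add(page)
        return self.resident[page]      # the frame holding the page

    def _handle_fault(self, page: int) -> None:
        if self.free_frames:
            frame = self.free_frames.pop(0)
        else:
            victim, frame = self.resident.popitem(last=False)  # FIFO eviction
            if victim in self.dirty:    # only a modified page must be written back
                self.writes_to_disk += 1
                self.dirty.discard(victim)
        self.resident[page] = frame     # stands in for reading the page from disk
```

The dirty set illustrates why the evicted page "may" be written to the disk: a page that was never modified still has an identical copy there and can simply be dropped.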

Again, just as segment tables do, page tables also employ memory protection—a page may be executable only, read only, writable, or any combination of these. And, as in the case of segment tables, all instructions that are involved in changing page tables are privileged.

A final note to relate different pieces of this section: segmentation can be combined with paging. For instance, each segment can have its own page table.

3.2.7 Options in Handling Privileged Instructions and the Final Approximation of the CPU Loop

An important question to ask at this point is what the CPU does when it encounters a privileged instruction while in the user mode. (How could a privileged instruction end up in a user's program? Of course no legitimate compiler will produce it, but it may end up there because of an assembly programmer's mistake or because of malicious intent. And it will end up there because of full virtualization—the subject we explore in Section 3.3.)

All existing CPUs deal with this situation in one of two ways. When a CPU encounters a privileged instruction while in the user mode, it either (1) causes an exception or (2) ignores the instruction altogether (i.e., skips it without performing any operation—just wasting a cycle).

Either way, it is ensured that a user program will be incapable of causing any damage by executing a privileged instruction; however, case 2 causes problems with virtualization. We will dwell on this problem later, when studying hypervisors.

By design, our little CPU,11 which we have been developing for quite a while now, does cause an exception when it encounters a privileged instruction while in the user mode. In this way, the CPU provides a clear mechanism to indicate that something went wrong. It also helps improve security. As far as security considerations are concerned, detecting an attempt at gaining control of the system may be essential for preventing other, perhaps more sophisticated, attempts from the same source. Ignoring a “strange” instruction does not help; taking the control away from the program that contains it does.

With that, we arrive at the third—and final—approximation of the CPU loop, shown in Figure 3.16. The first major change, compared with earlier versions, is that each instruction is examined at the outset. If it cannot be recognized, or has wrong parameters, or if it is inappropriate for the present mode (i.e., if it is a privileged instruction and the CPU is running in the user mode), an exception is raised. Another change is that when the CPU starts processing an interrupt or an exception, it changes to the system mode, and also switches to the system stack. (As far as timing is concerned, the STATUS register is saved before the CPU mode is switched, of course. So the CPU could return to the mode in which it was interrupted.) Again, the registers are saved at the system stack—not the user stack—which is yet another security measure. With good design, the user code can neither touch nor even see the system data structures.
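The two changes in the final loop can be sketched as below. This is a toy model under stated assumptions: instructions are represented by their mnemonics, "executing" one merely advances the PC, and the instruction set, exception numbers, and mode-flag bit are invented for the example.

```python
SYSTEM_MODE = 0x02                       # assumed bit position of the mode flag
KNOWN = {"LOAD", "ADD", "RTI", "DISI", "ENI"}   # a tiny, assumed instruction set
PRIVILEGED = {"RTI", "DISI", "ENI"}
ILLEGAL_INSTRUCTION = 1                  # hypothetical exception numbers
PRIVILEGE_VIOLATION = 2


class CPU:
    def __init__(self, vectors: dict):
        self.pc = 0
        self.status = SYSTEM_MODE        # the CPU starts in the system mode
        self.system_stack = []
        self.user_stack = []
        self.vectors = vectors           # exception number -> routine address

    def raise_exception(self, number: int) -> None:
        saved_status = self.status       # captured before the mode is switched
        self.status |= SYSTEM_MODE       # switch to the system mode and stack
        self.system_stack.append(saved_status)
        self.system_stack.append(self.pc)  # PC unchanged: the instruction did not run
        self.pc = self.vectors[number]

    def step(self, opcode: str) -> None:
        # Every instruction is examined at the outset.
        if opcode not in KNOWN:
            self.raise_exception(ILLEGAL_INSTRUCTION)
        elif opcode in PRIVILEGED and not self.status & SYSTEM_MODE:
            self.raise_exception(PRIVILEGE_VIOLATION)
        else:
            self.pc += 1                 # stand-in for executing the instruction
```

Note that nothing is ever pushed on the user stack during exception entry, and that the saved STATUS value still carries the user-mode flag, so restoring it later returns the CPU to the mode in which it was interrupted.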

Figure 3.16 CPU loop—the final version.

3.2.8 More on Operating Systems

The CPU that we have constructed so far supports virtualization inasmuch as each process can “think” of owning the whole CPU (even though a slower one), all I/O devices, and infinite, uniformly addressed memory. The physical machine is governed by the operating system software, which creates and maintains user processes. An operating system is characterized by the services that it provides.

We have addressed such services as CPU access and memory management. The latter extends to long-term memory—the establishment and maintenance of a file system on a disk. A disk is just one of the I/O devices managed by an operating system. A monitor, keyboard, and printer are examples of other devices. Yet another example is a network drive. A process gets access to a device only through the operating system.

While a process has seeming ownership of the CPU, memory, and devices, it needs to be aware of other processes. To this end, all modern operating systems have mechanisms for interprocess communication, which allows processes to exchange messages. Combined with data communications, this capability enables communications among processes that are run on different machines, thus creating the foundation for distributed computing.

The services are built in layers, each layer serving the layer immediately above it. If the file system service is implemented on top of the data networking service, then it is possible to map a process's file system across disks attached to different computers, with the process never being aware of the actual location of the device and consequently the location of a specific file record.

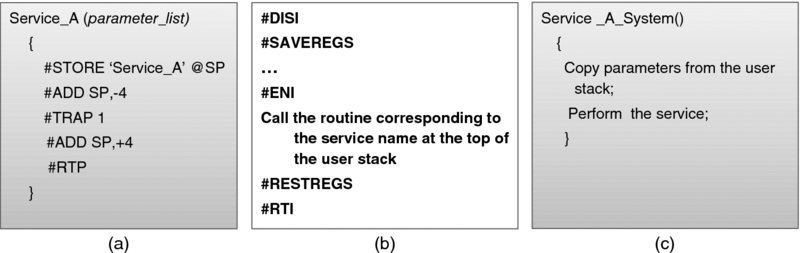

A process requests an operating system service via a system call. This call—to a programmer—is just a procedure call. Invariably, the corresponding procedure contains a trap instruction, which causes a break in process execution. Figure 3.17(a) contains pseudo-code for an example Service_A routine. All it does is push the Service_A mnemonics on the user stack and pass control to the system via TRAP 1.

Figure 3.17 System call processing: (a) the Service_A routine—user part; (b) the TRAP 1 service routine; (c) the Service_A routine—system part.

The process will resume when the TRAP 1 exception is processed, as depicted in Figure 3.17(b). The handler reads the top of the process's stack to determine that service A is requested and then calls the Service_A_System routine. As shown in Figure 3.17(c), the latter proceeds in the system mode, likely calling other system procedures. When finished, it returns to the exception handler of Figure 3.17(b), which at the end passes control back to the user program code via the RTI instruction. (You may remember that the value of the STATUS register, with the mode flag indicating the user mode, was saved at the time of the interrupt. The switch to the user mode is effected merely by restoring that value.)
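The three parts of Figure 3.17 can be imitated in a short Python sketch. The `Machine` class, the dispatch tables, and the log are hypothetical names introduced here; the sketch also assumes that the user part removes the service mnemonic from its stack after the trap returns.

```python
class Machine:
    def __init__(self):
        self.mode = "user"
        self.user_stack = []
        self.system_stack = []
        self.log = []

    # (a) User part: push the service mnemonic and trap.
    def service_a(self):
        self.user_stack.append("Service_A")
        self.trap(1)
        self.user_stack.pop()  # execution resumes here after RTI

    def trap(self, number):
        self.system_stack.append(self.mode)  # save STATUS first
        self.mode = "system"                 # then switch modes
        TRAP_HANDLERS[number](self)
        self.mode = self.system_stack.pop()  # RTI: restore saved STATUS


# (b) TRAP 1 service routine: find out which service is requested.
def trap_1_handler(machine):
    requested = machine.user_stack[-1]
    SERVICES[requested](machine)


# (c) System part of Service_A, running in the system mode.
def service_a_system(machine):
    machine.log.append(("Service_A", machine.mode))


TRAP_HANDLERS = {1: trap_1_handler}
SERVICES = {"Service_A": service_a_system}
```

From the caller's point of view `service_a()` is an ordinary procedure call, yet the work recorded in the log is done in the system mode, and the mode is back to "user" when the call returns.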

It is important to understand that a user process goes through a sequence of instructions that alternate between the user program code and the system code; however, the user program has no knowledge or control of the system data structures or other processes' data (unless, of course, other processes intentionally share such data).

We briefly note that when a service routine starts an I/O operation, it typically does not wait until the operation completes. Instead, it parks the process in a queue for a specific event from this device and passes control to a scheduler so that another process can run. Similarly, a time-slice interrupt may result in exactly the same treatment of the interrupted process, if the operating system determines that the process's quantum has expired. In this way, the operating system can ensure that the CPU is allocated fairly to all processes.
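A toy scheduler makes the two cases concrete. This is a sketch under strong simplifying assumptions, none of which come from the text: each process is a list of one-tick "cpu" operations and "io" requests, there is a single wait queue, and a device event is assumed to arrive only when no process is ready to run.

```python
from collections import deque


def simulate(programs, quantum):
    """Return a trace of (process, outcome) pairs, one per scheduling turn."""
    ready = deque(programs)          # process names, in arrival order
    waiting = deque()                # processes parked for a device event
    position = {name: 0 for name in programs}
    trace = []
    while ready or waiting:
        if not ready:
            # Assumed device interrupt: the parked process becomes ready.
            ready.append(waiting.popleft())
            continue
        name = ready.popleft()
        used = 0
        while position[name] < len(programs[name]):
            op = programs[name][position[name]]
            position[name] += 1
            if op == "io":
                waiting.append(name)         # park; let another process run
                trace.append((name, "blocked"))
                break
            used += 1
            if used == quantum:
                ready.append(name)           # quantum expired: back of queue
                trace.append((name, "preempted"))
                break
        else:
            trace.append((name, "done"))
    return trace
```

In both the "blocked" and the "preempted" case the process simply loses the CPU and the scheduler picks the next ready process, which is the sameness of treatment described above.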

At this point it is appropriate to explain the difference between the terms process and thread. A process is assigned resources: its memory space, devices, and so on. Initially, these resources were associated with a single thread of control. Later, the term process became associated only with the address space and resources—not the thread of execution. With that, multiple tasks (threads) can execute within a process, all sharing the same resources. Sometimes, threads are also called light-weight processes, because context switching of threads is a much less involved procedure (no need to reload page tables, etc.) than that of processes. Unfortunately, this terminology is not used consistently.

The evolution of the operating systems has been influenced by two factors: application needs and hardware capabilities (and, consequently, hardware price). When considering the implementation specifics, there is still a gray area within which it is impossible to postulate whether a given function is performed in hardware or in the operating systems.

Advances in data networking have resulted in distributing the operating system functions among different nodes in the network. This was the trend in the 1990s and 2000s, until Cloud Computing took over. With that, the trend seems to have reversed, with effort concentrating on one virtual machine, while advancing in spirit: the virtual machine can easily migrate across the network from one physical machine to another.

Throughout the history of operating systems the general problem of security (as well as the narrower problem of privacy) has been addressed constantly. Interestingly enough, it was addressed even when it was a non-issue—that is, in the absence of networking and at a time when very few people even had access to computers. The history of operating systems has demonstrated though what the overall history of mankind has also demonstrated: the best idea and the best design do not necessarily win. To this end, MIT's Multiplexed Information and Computing Service (Multics) [10] operating system's feature set has never ended up fully in a ubiquitous commercial product, although Multics has influenced the design of several operating systems—most notably the Unix operating system.

Multics took security seriously. Robert Graham, a Multics contributor and a visionary, wrote in his seminal 1968 paper [11] (which very much predicted the way things turned out to be almost half a century later): “The community of users will certainly have diverse interests; in fact, it will probably include users who are competitive commercially. The system will be used for many applications where sensitive data, such as company payroll records, will need to be stored in the system. On the other hand, there will be users in the community who wish to share with each other data and procedures. There will even be groups of users working cooperatively on the same project. … Finally, there will be public libraries of procedures supplied by the information processing utility management. Indeed, a primary goal of such a system is to provide a number of different users with flexible, but controlled, access to shared data and procedures.”

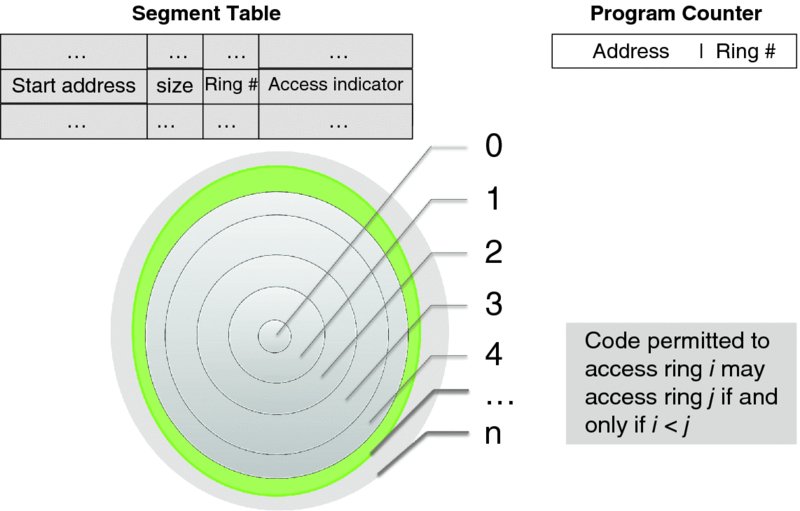

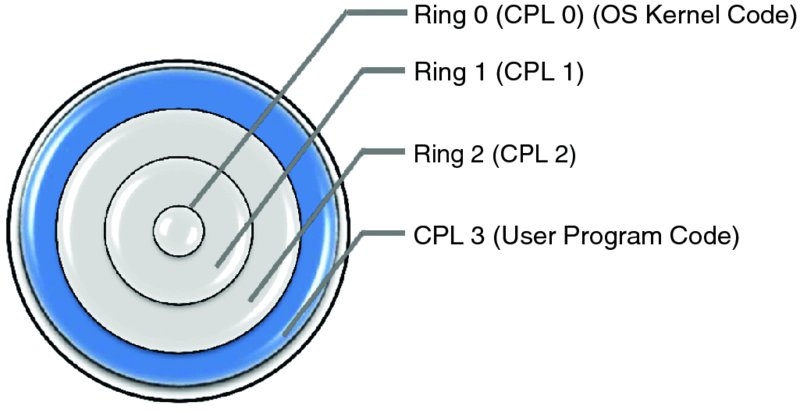

Graham's design, which has been implemented in Multics, specified protection rings. We start with a simplified scheme, as depicted in Figure 3.18. Revisiting the segment table discussion of Section 3.2.6, we can further refine the permission to access a segment.

Figure 3.18 Graham's security rings (hardware support).

The decision to focus on a per-segment rather than a per-page protection scheme was deliberate. In Graham's lucid prose: “a segment is a logical unit of information of which a user is cognizant,” while “a page is a unit of information which is useful to the system for storage management and is thus invisible to the user.” The idea of the protection scheme is based on the military principle of need to know, defined by the respective clearance level. At a given clearance level, those who have it may access the information at any lower clearance level but not the other way round; hence the representation of the privilege levels as a set of concentric rings. The smallest ring (ring 0) has the highest privilege level. (The graphical significance of this is that the higher the privilege level the smaller the set of those who are granted access at this level, so smaller circles delineate rings with higher privilege levels.)

Graham presents two models in [11]. The first is simple and straightforward; the second is more complex as it provides an optimization.

Both models require a change to the segment table. The access indicator field for both models contains four independent flags:

- User/System flag—indicates whether the segment can be accessed in the user mode.

- Read/Write flag—indicates whether the segment can be written to.

- Execute flag—indicates whether the segment can be executed.12

- Fault/No fault flag—indicates whether accessing the segment results in an exception no matter what (an extra level of protection, which overwrites all other flags).

The PC register is augmented with the ring number indicating the privilege level of the code. In addition, each segment table entry is augmented by a reference to the ring structure, but here the models differ.

In the first model, each segment is now assigned its ring number to indicate its privilege, as shown in the segment table entry. Note that this scheme results in different privileges assigned to disjoint sets of segments.

Let us consider a code segment. If the segment's access ring value is i, the code in the segment may access only a segment whose access ring value is j ⩾ i, that is, a segment of the same or lower privilege. (Of course, this is only the first line of defense. Access is further restricted by the values of the access indicator flags.) Jumps out of a segment require special intervention of the system, so all of them cause an exception.
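The first model, combined with the access indicator flags, amounts to a short sequence of checks. The sketch below is illustrative only: the field names are invented, and the ring comparison is assumed to be inclusive so that code can reach segments in its own ring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SegmentEntry:
    ring: int                     # privilege ring of the segment (0 = highest)
    user_accessible: bool = True  # User/System flag
    writable: bool = False        # Read/Write flag
    executable: bool = False      # Execute flag
    fault: bool = False           # Fault/No fault flag


def may_access(code_ring, target, *, write=False, user_mode=True):
    """Decide whether code running in ring `code_ring` may touch `target`."""
    if target.fault:
        return False              # overrides every other flag
    if target.ring < code_ring:
        return False              # target is more privileged than the code
    if user_mode and not target.user_accessible:
        return False
    if write and not target.writable:
        return False
    return True
```

The ring test is the first line of defense; the flags then narrow what is allowed within the set of segments that pass it.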

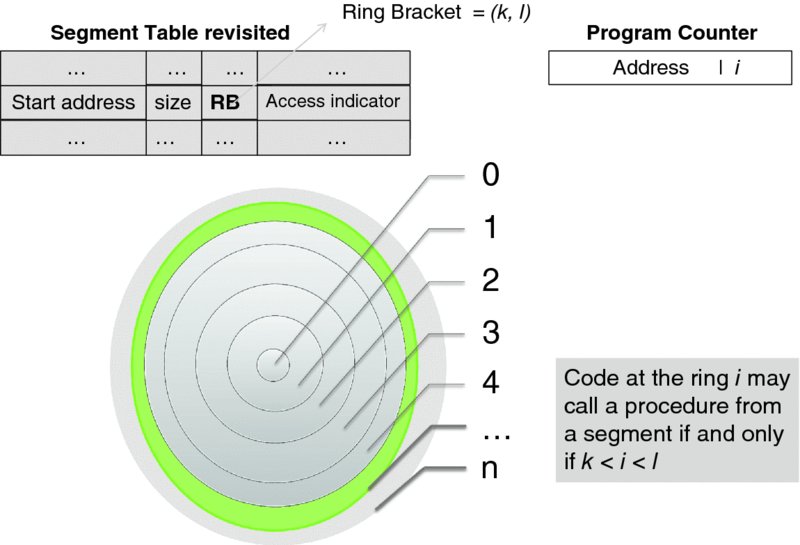

While this model works, Graham shows that it can be made more efficient if the requirement that the rings be disjoint is relaxed to allow execution of shared routines at the privilege level of the caller, but not higher. This observation results in the second model, depicted in Figure 3.19.

Figure 3.19 Optimization with non-disjoint security rings.

Here, the ring number in a segment table entry is replaced with two numbers, which respectively indicate the lower and upper bound of the segment's ring bracket. All code in a segment, which is intended to be a procedure library, is assigned to the consecutive rings in the bracket. Thus, when a procedure executing at the privilege level i calls a procedure whose access bracket is [k, l], no exception occurs as long as k ⩽ i ⩽ l. A call outside the bracket limits results in an exception.

So far we have considered only the transfer of control among code segments, but the use of ring brackets can be extended further to the data segments as follows. If a data segment has a ring bracket [k, l] then an instruction executing at the privilege level i may

- write to this segment as long as it is writable and i ⩽ k (i.e., the instruction has at least as high a privilege level as the segment) or

- read this segment if k < i ⩽ l.

The instruction may not access the segment at all if i > l.
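The bracket rules for calls and for data segments can be written down as two small functions. This is a sketch, not Graham's formulation: it treats the bracket bounds as inclusive for calls, and it assumes that permission to write implies permission to read.

```python
def call_allowed(i, bracket):
    """A call from ring i into a code segment with access bracket [k, l]."""
    k, l = bracket
    return k <= i <= l        # outside the bracket the call raises an exception


def data_access(i, bracket, writable):
    """Return the set of operations allowed on a data segment from ring i."""
    k, l = bracket
    if i <= k:
        # At least as privileged as the lower bound: write if the flag allows.
        return {"read", "write"} if writable else {"read"}
    if i <= l:
        return {"read"}       # within the bracket: read only
    return set()              # beyond the upper bound: no access at all


# Example: a data segment with ring bracket [2, 4].
assert data_access(1, (2, 4), writable=True) == {"read", "write"}
assert data_access(3, (2, 4), writable=True) == {"read"}
assert data_access(5, (2, 4), writable=True) == set()
```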

Multics specifies eight protection rings. The number of rings is restricted by hardware. Back in the 1980s, the Data General Eclipse MV/8000 CPU had a three-bit ring indicator in the PC. (See [12] for a well-written story about this.)

All that development was foreseeing the future—which is happening right now. The renewed interest in protection, in view of virtualization, has made many computer scientists nostalgic for the 1970s and 1980s.

On this note, we conclude our primer. To summarize:

- A process as a unit has its own virtual world of resources: a CPU, an “infinite” memory, and all I/O devices.

- A process relies on an operating system—the government of a physical machine, which ensures that all processes are treated fairly.

- The operating system kernel is the only entity that can execute privileged instructions. The kernel is entered as a result of processing an interrupt or an exception (including a trap).

- A physical machine may execute more than one process.

- A process may be aware of other processes, and it may communicate with them.

The next section explains how this can be taken to the next level of abstraction so as to develop a virtual machine of the type shown in Figure 3.1.

3.3 Virtualization and Hypervisors

Let us revisit our objectives.

By introducing virtualization we want, first of all, to save the cost—in terms of space, energy, and personnel—of running several machines in place of one. An increasingly important aspect here is the green issue: as a rule, one machine uses less energy than several machines.

Yet another objective of virtualization is to increase CPU utilization. Even with multi-processing, a CPU is almost never fully utilized. Long before the term “Cloud Computing” was coined, it was observed that the machines that ran web servers had low CPU utilization. Running several servers on their respective virtual machines, now grouped on one physical machine that used to run a single server, allowed them to get good use of this single machine while saving money on energy and hardware.

Other objectives include cloning the computing environment (such as a server) for debugging, at low cost; migrating a machine—for instance, in response to increasing load; and isolating an appliance (again a server is a good example) for a specific purpose without investing in new hardware.

As far as security is concerned, isolation is a boon for analyzing an unknown application. A program can be tested in a virtual machine, and consequently any security risk it poses will be isolated to this virtual machine. Granted, this wonderful feature depends on the security of the hypervisor itself, which we address in the last section of this chapter.

In our opinion, the very emergence of Cloud Computing as the business of providing computing services as a utility has also been an outcome (rather than an objective) of virtualization. Of course, it was not virtualization alone but rather a combination of virtualization and availability of fast networks, but we feel that virtualization technology has played a major role here.

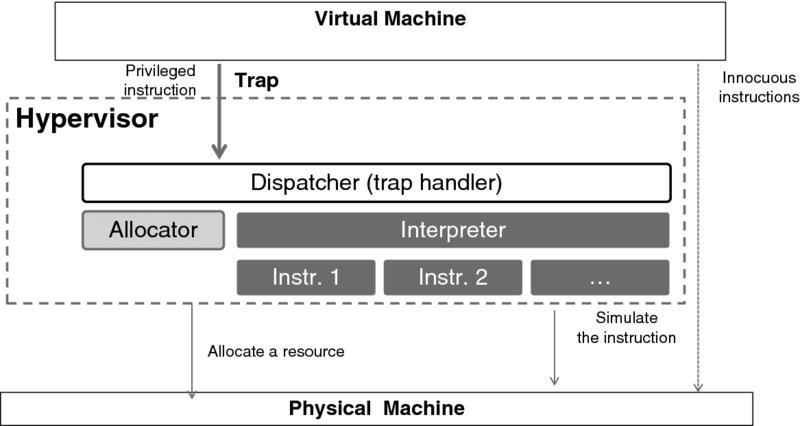

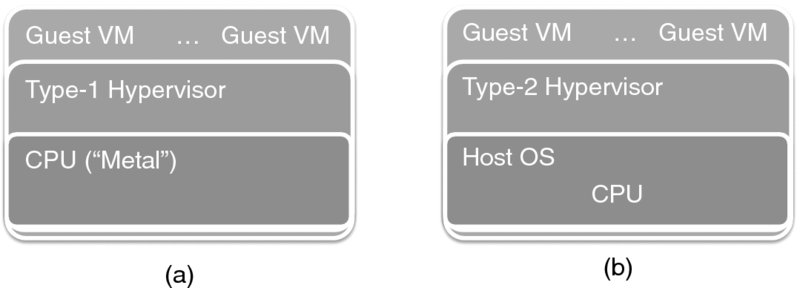

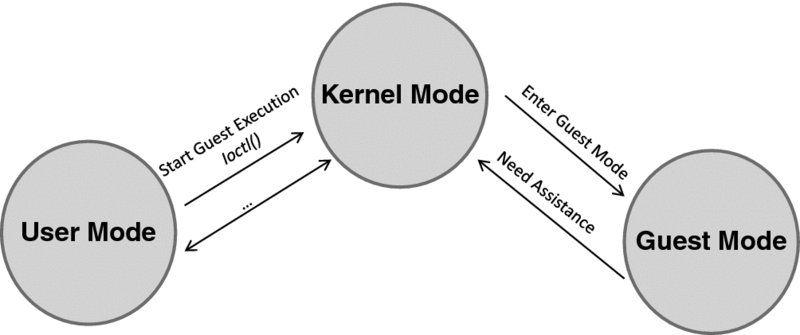

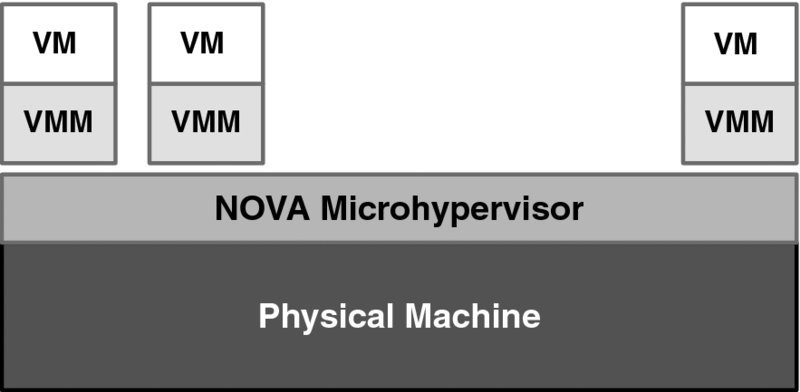

We start with a general assumption—based on the traditional CPU architectures—that an operating system kernel by itself cannot create an isolated virtual machine. With the fairly recent CPU virtualization extensions, however, this assumption no longer holds, and later we will review a special case of the Kernel-based Virtual Machine (KVM) hypervisor, which—as its name suggests—is indeed kernel based.

From the outset of virtualization, the software charged with creating and maintaining virtual machines has been called the Virtual Machine Monitor (VMM) or hypervisor. Both terms are used interchangeably in the literature; in the rest of this book, we will use only the latter term. The virtual machine environment is created by a hypervisor.

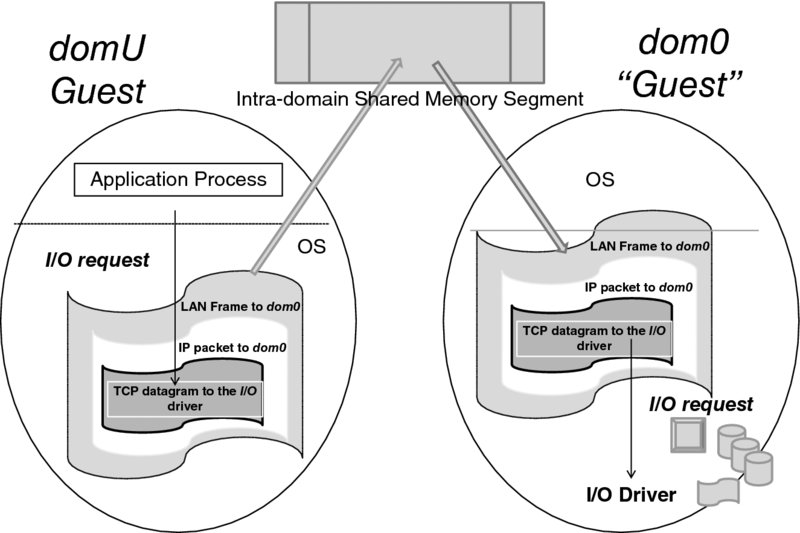

3.3.1 Model, Requirements, and Issues

The classical abstract model for addressing the resources needed by processes executing on virtual machines, as presented in [13], has been known for over 40 years. In this model, two functions are employed—one visible to the operating system and the other visible only to a hypervisor. The former function maps process IDs into (virtual) resource names; the latter one maps virtual resource names into physical resource names. Such mapping is recursive, allowing several virtual machine layers on top of physical hardware. Whenever a fault (i.e., a reference to an unmapped resource) occurs, an exception results in passing control to the next-layer hypervisor. This model has provided a foundation for the systematic study and implementation of virtualization.
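The composition of the two maps, and its recursive extension to several layers, can be sketched in a few lines of Python. The dictionaries, the resource names, and the `ResourceFault` exception are hypothetical; the model in [13] is stated abstractly and does not prescribe this representation.

```python
class ResourceFault(Exception):
    """Raised when a name is unmapped; args are (layer index, name)."""


def resolve(name, layers):
    """Translate a process-level name through successive mapping layers.

    `layers` lists one mapping per level, starting with the one visible to
    the operating system and ending with the one closest to the hardware.
    """
    for depth, mapping in enumerate(layers):
        if name not in mapping:
            # The fault is reported with the layer at which it occurred, so
            # that control can pass to the software responsible for that map.
            raise ResourceFault(depth, name)
        name = mapping[name]
    return name


process_map = {"P1:page0": "v7", "P1:page1": "v9"}   # seen by the guest OS
hypervisor_map = {"v7": "phys42"}                    # seen only by the hypervisor

assert resolve("P1:page0", [process_map, hypervisor_map]) == "phys42"
```

Adding a third dictionary to the list models a hypervisor that itself runs on a virtual machine; `resolve` needs no change, which is the sense in which the mapping is recursive.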