Chapter 3

Architecture and Design

COMPTIA SECURITY+ EXAM OBJECTIVES COVERED IN THIS CHAPTER INCLUDE THE FOLLOWING:

3.1 Explain use cases and purpose for frameworks, best practices and secure configuration guides.

- Industry-standard frameworks and reference architectures

- Regulatory

- Non-regulatory

- National vs. international

- Industry-specific frameworks

- Benchmarks/secure configuration guides

- Platform/vendor-specific guides

- Web server

- Operating system

- Application server

- Network infrastructure devices

- General purpose guides

- Defense-in-depth/layered security

- Vendor diversity

- Control diversity

- Administrative

- Technical

- User training

3.2 Given a scenario, implement secure network architecture concepts.

- Zones/topologies

- DMZ

- Extranet

- Intranet

- Wireless

- Guest

- Honeynets

- NAT

- Ad hoc

- Segregation/segmentation/isolation

- Physical

- Logical (VLAN)

- Virtualization

- Air gaps

- Tunneling/VPN

- Site-to-site

- Remote access

- Security device/technology placement

- Sensors

- Collectors

- Correlation engines

- Filters

- Proxies

- Firewalls

- VPN concentrators

- SSL accelerators

- Load balancers

- DDoS mitigator

- Aggregation switches

- Taps and port mirror

- SDN

3.3 Given a scenario, implement secure systems design.

- Hardware/firmware security

- FDE/SED

- TPM

- HSM

- UEFI/BIOS

- Secure boot and attestation

- Supply chain

- Hardware root of trust

- EMI/EMP

- Operating systems

- Types

- Network

- Server

- Workstation

- Appliance

- Kiosk

- Mobile OS

- Patch management

- Disabling unnecessary ports and services

- Least functionality

- Secure configurations

- Trusted operating system

- Application whitelisting/blacklisting

- Disable default accounts/passwords

- Peripherals

- Wireless keyboards

- Wireless mice

- Displays

- WiFi-enabled MicroSD cards

- Printers/MFDs

- External storage devices

- Digital cameras

3.4 Explain the importance of secure staging deployment concepts.

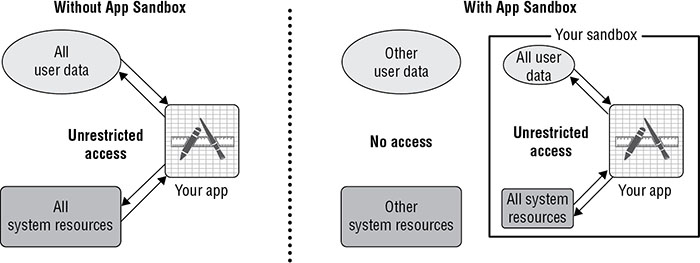

- Sandboxing

- Environment

- Development

- Test

- Staging

- Production

- Secure baseline

- Integrity measurement

3.5 Explain the security implications of embedded systems.

- SCADA/ICS

- Smart devices/IoT

- Wearable technology

- Home automation

- HVAC

- SoC

- RTOS

- Printers/MFDs

- Camera systems

- Special purpose

- Medical devices

- Vehicles

- Aircraft/UAV

3.6 Summarize secure application development and deployment concepts.

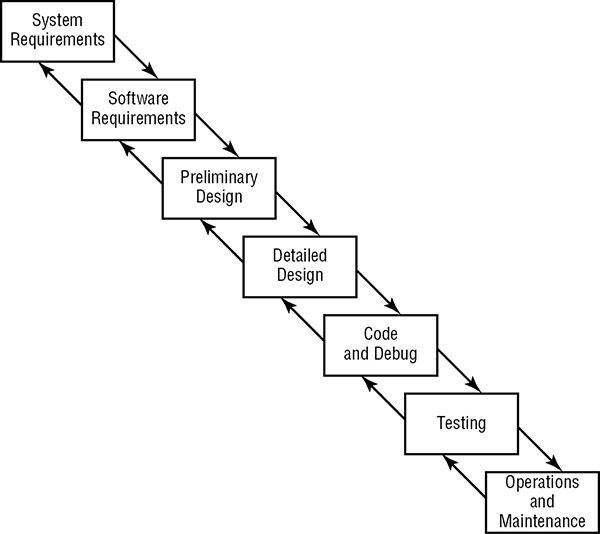

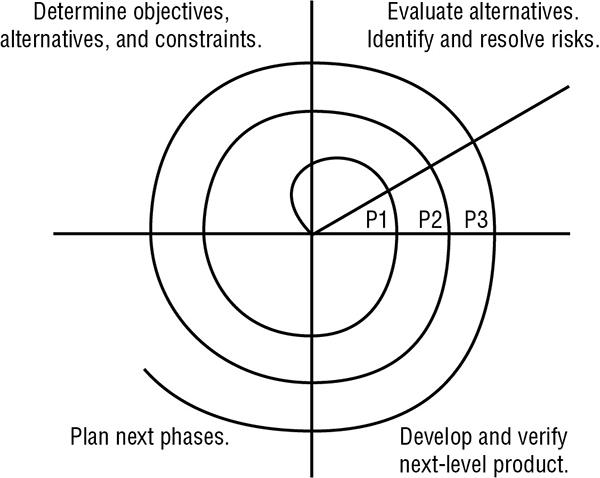

- Development life-cycle models

- Waterfall vs. Agile

- Secure DevOps

- Security automation

- Continuous integration

- Baselining

- Immutable systems

- Infrastructure as code

- Version control and change management

- Provisioning and deprovisioning

- Secure coding techniques

- Proper error handling

- Proper input validation

- Normalization

- Stored procedures

- Code signing

- Encryption

- Obfuscation/camouflage

- Code reuse/dead code

- Server-side vs. client-side execution and validation

- Memory management

- Use of third-party libraries and SDKs

- Data exposure

- Code quality and testing

- Static code analyzers

- Dynamic analysis (e.g., fuzzing)

- Stress testing

- Sandboxing

- Model verification

- Compiled vs. runtime code

3.7 Summarize cloud and virtualization concepts.

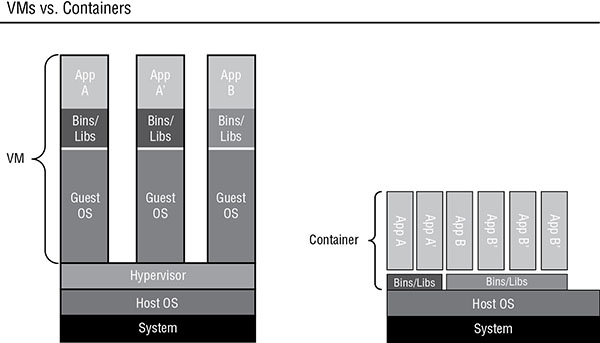

- Hypervisor

- Type I

- Type II

- Application cells/containers

- VM sprawl avoidance

- VM escape protection

- Cloud storage

- Cloud deployment models

- SaaS

- PaaS

- IaaS

- Private

- Public

- Hybrid

- Community

- On-premise vs. hosted vs. cloud

- VDI/VDE

- Cloud access security broker

- Security as a Service

3.8 Explain how resiliency and automation strategies reduce risk.

- Automation/scripting

- Automated courses of action

- Continuous monitoring

- Configuration validation

- Templates

- Master image

- Non-persistence

- Snapshots

- Revert to known state

- Rollback to known configuration

- Live boot media

- Elasticity

- Scalability

- Distributive allocation

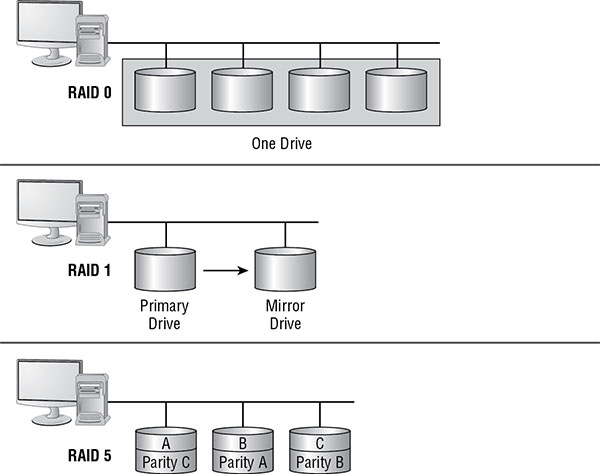

- Redundancy

- Fault tolerance

- High availability

- RAID

3.9 Explain the importance of physical security controls.

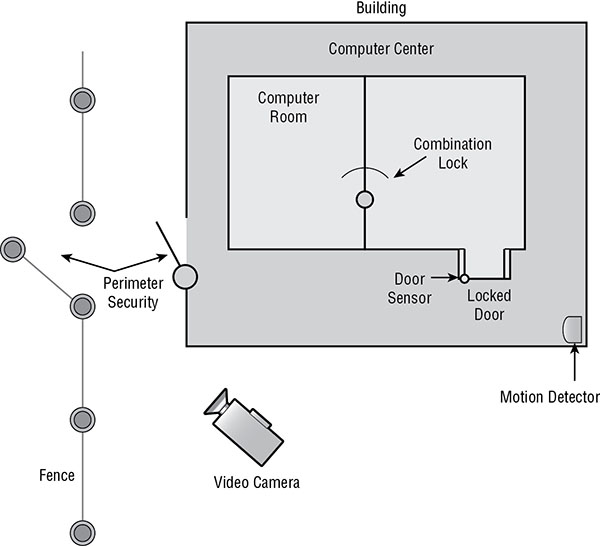

- Lighting

- Signs

- Fencing/gate/cage

- Security guards

- Alarms

- Safe

- Secure cabinets/enclosures

- Protected distribution/Protected cabling

- Airgap

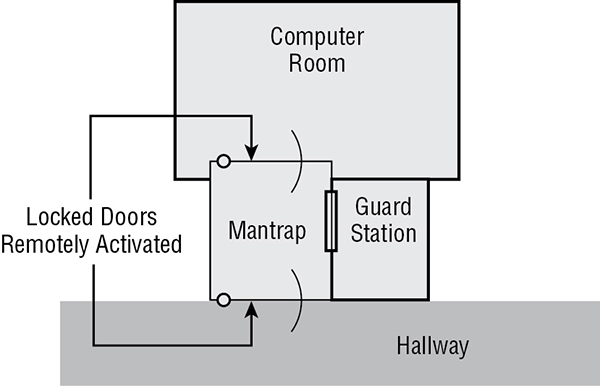

- Mantrap

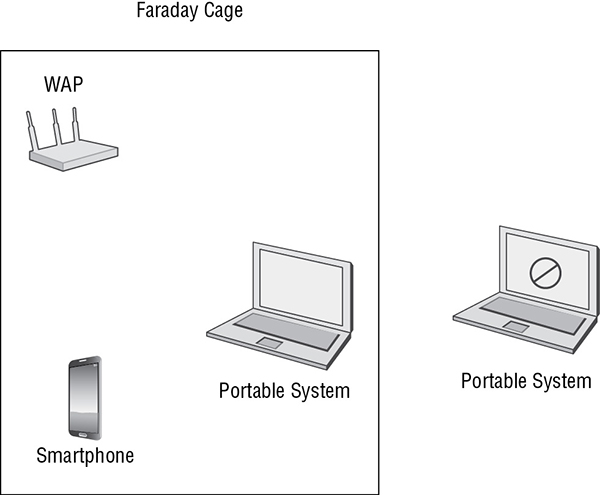

- Faraday cage

- Lock types

- Biometrics

- Barricades/bollards

- Tokens/cards

- Environmental controls

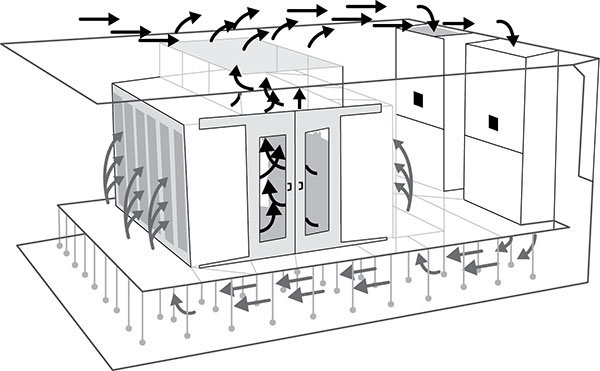

- HVAC

- Hot and cold aisles

- Fire suppression

- Cable locks

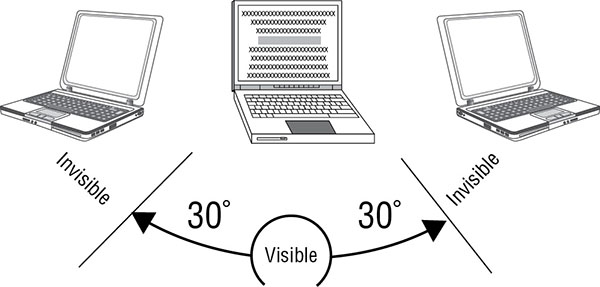

- Screen filters

- Cameras

- Motion detection

- Logs

- Infrared detection

- Key management

The Security+ exam will test your understanding of the architecture and design of an IT environment and its related security. To pass the test and be effective in implementing security, you need to understand the basic concepts and terminology related to network security design and architecture as detailed in this chapter.

3.1 Explain use cases and purpose for frameworks, best practices and secure configuration guides.

Security is complicated. The task of designing and implementing security can be so daunting that many organizations may put it off until it’s too late and they have experienced a serious intrusion or violation. A means to simplify the process, or at least to get started, is to adopt predefined guidance and recommendations from trusted entities. There are many government, open-source, and commercial security frameworks, best practices, and secure configuration guides that can be used as both a starting point and a goalpost for security programs for large and small organizations.

Industry-standard frameworks and reference architectures

A security framework is a guide or plan for keeping your organizational assets safe. It provides a structure to the implementation of security for both new organizations and those with a long history. A security framework should provide perspective that security is not just an IT concern, but an important business operational function. A well-designed security framework should address personnel issues, network security, portable and mobile equipment, operating systems, applications, servers and endpoint devices, network services, business processes, user tasks, communications, and data storage.

Industry-standard frameworks are those that are adopted and respected by a majority of organizations within a specific line of business. A reference architecture may accompany a security framework. Often a reference architecture is a detailed description of a fictitious organization and how security could be implemented. This concept serves as a guide for real-world organizations to use as a template to follow for adapting and implementing a framework.

Some security frameworks are designed to help new organizations implement their initial and foundational security elements, whereas others are designed to improve the existing in-place security infrastructure.

Regulatory

A regulatory security framework is security guidance established by a government regulation or law. Regulatory frameworks are thus crafted or sponsored by government agencies. However, this does not necessarily limit their use to government entities. Many regulatory frameworks are publicly available and thus can be adopted and applied to private organizations as well.

A security framework does not have to be designed specifically for an organization, nor does an entire framework need to be implemented. Each organization is unique and thus may need to draw on several frameworks to assemble a solution that addresses its specific security needs.

Non-regulatory

A nonregulatory security framework is any security guidance crafted by a nongovernment entity. This would include open-source communities as well as commercial entities. Nonregulatory frameworks may require a licensing fee or a subscription fee in order to view and access the details of the framework. Some commercial entities will even provide customized implementation guidance or compliance auditing.

National vs. international

A national security framework is any security guidance designed specifically for use within a particular country. The author of a national framework may attempt to restrict access to the details of their framework in order to control or limit implementation to just their local industries. National frameworks also may include country-specific limitations, requirements, utilities, or other concerns that are not applicable to any or most other countries. Such national nuances may also serve as a limiting factor for the use of such frameworks in other lands.

International security frameworks are deliberately designed to be nation-independent. These are crafted with the goal of avoiding any country-specific limitations or idiosyncrasies in order to support worldwide adoption of the framework. Compliance with international security frameworks simplifies the interactions between organizations located across national borders by ensuring they have compatible and equivalent security protections.

Industry-specific frameworks

Industry-specific frameworks are those crafted to be applicable to one specific industry, such as banking, health care, insurance, energy management, transportation, or retail. These types of frameworks are tuned to address the most common issues within an industry and may not be as easily applicable to organizations outside of that target.

Benchmarks/secure configuration guides

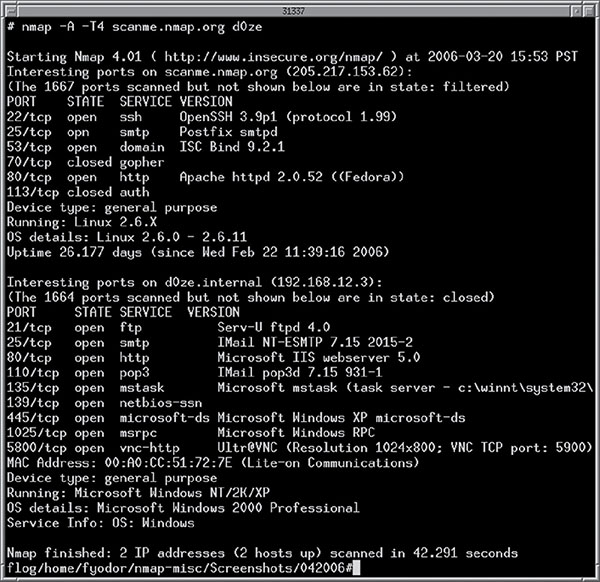

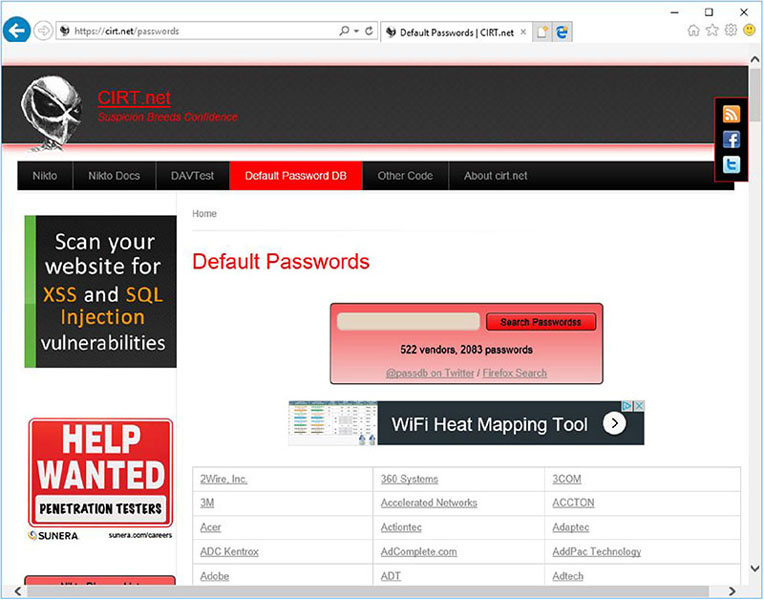

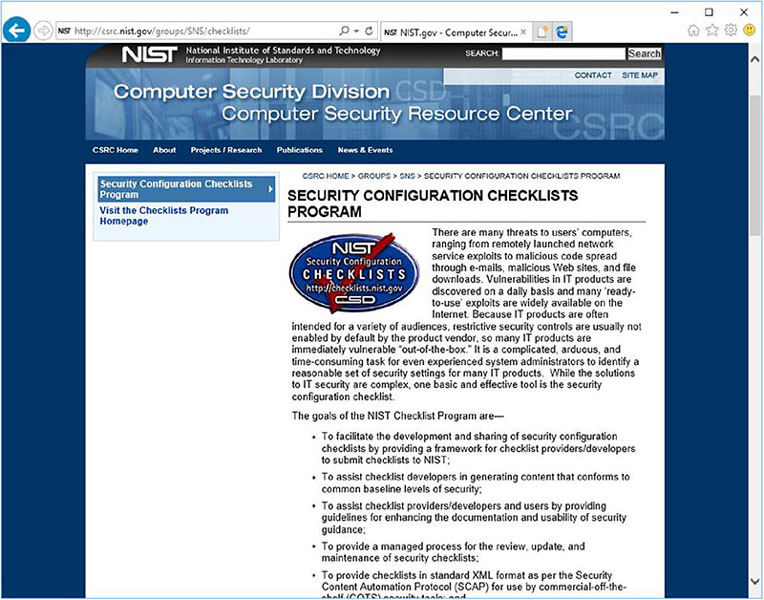

A benchmark is a documented list of requirements that is used to determine whether or not a system, device, or software solution is allowed to operate within a securely managed environment (Figure 3.1). A secure configuration guide is another term for a benchmark. It can also be known as a standard or a baseline.

FIGURE 3.1 The CIS Benchmarks website

A benchmark can include specific instructions on installation and configuration of a product. It may also suggest alterations, modifications, and supplemental tools, utilities, drivers, and controls to improve the security of the system. A benchmark may also recommend operational steps, standard operating procedures (SOPs), and end-user guides to maintain security while business tasks are taking place.

A benchmark can be adopted from external entities, such as government regulations, commercial guidance, or community recommendations. But ultimately, a benchmark should be customized for the organization’s assets, threats, and risks.

Platform/vendor-specific guides

Security configuration guides are often quite specific to an operating system/platform, application, or product vendor. These types of guides can be quite helpful in securing a product since they may provide step-by-step, click-by-click, command-by-command instructions on securing a specific application, OS, or hardware product.

Web server

Benchmarks and security configuration guides can focus on specific web server products, such as Microsoft’s Internet Information Services (IIS) or Apache HTTP Server.

Operating system

Benchmarks and security configuration guides can focus on specific operating systems, such as Microsoft Windows, Apple macOS, Linux, or Unix.

Application server

Benchmarks and security configuration guides can focus on specific application servers, such as Domain Name System (DNS), Dynamic Host Configuration Protocol (DHCP), databases, network-attached storage (NAS), storage area network (SAN), directory services, virtual private network (VPN), or Voice over Internet Protocol (VoIP).

Network infrastructure devices

Benchmarks and security configuration guides can focus on specific network infrastructure devices, such as firewalls, switches, routers, wireless access points, VPN concentrators, web security gateways, virtual machines/hypervisors, or proxies.

General purpose guides

General-purpose security configuration guides are more generic in their recommendations rather than being focused on a single software or hardware product. This makes them useful in a wide range of situations, but they provide less detail and instruction on exactly how to accomplish the recommendations. A product-focused guide might provide hundreds of steps for configuring a native firewall, whereas a general-purpose guide may provide only a few dozen general recommendations. This type of guide leaves it to the system manager to determine the specific actions needed to implement the recommendations.

Defense-in-depth/layered security

Defense in depth is the use of multiple types of access controls in literal or theoretical concentric circles or layers. This form of layered security helps an organization avoid a monolithic security stance. A monolithic mentality is the belief that a single security mechanism is all that is required to provide sufficient security.

Only through the intelligent combination of countermeasures can you construct a defense that will resist significant and persistent attempts at compromise. Intruders or attackers would need to overcome multiple layers of defense to reach the protected assets.

As with any security solution, relying on a single security mechanism is unwise. Defense in depth, multilayered security, or diversity of defense uses multiple types of access controls in literal or theoretical concentric circles or layers. By having security control redundancy and diversity, an environment can avoid the pitfalls of a single security feature failing; the environment has several opportunities to deflect, deny, detect, and deter any threat. Of course, no security mechanism is perfect. Each individual security mechanism has a flaw or a workaround just waiting to be discovered and abused by a hacker.

Vendor diversity

Vendor diversity is important for establishing defense in depth in order to avoid security vulnerabilities due to one vendor’s design, architecture, and philosophy of security. No one vendor can provide a complete end-to-end security solution that protects against all known and unknown exploitations and intrusions. Thus, to improve the security stance of an organization, it is important to integrate security mechanisms from a variety of vendors, manufacturers, and programmers.

Control diversity

Control diversity is essential in order to avoid a monolithic security structure. Do not depend on a single form or type of security; instead, integrate a variety of security mechanisms into the layers of defense. Using three firewalls is not as secure as using a firewall, an IDS, and strong authentication.

Administrative

Administrative controls typically include security policies as well as mechanisms for managing people and overseeing business processes. It is important to ensure a diversity of administrative controls rather than relying on a single layer or single type of security mechanism.

Technical

Technical controls include any logical or technical mechanism used to provide security to an IT infrastructure. Technical security controls need to be broad and varied in order to provide a robust wall of protection against intrusions and exploit attempts. Single defenses, whether a single layer or repetitions of the same defense, can fall to a singular attack. Diverse and multilayered defenses require a more complex attack approach requiring numerous exploitations to be used in a series, successfully, without detection in order to compromise the target. The concept of attacking with a series of exploits is known as daisy-chaining.

User training

User training is always a key part of any security endeavor. Users need to be trained in how to perform their work tasks in accordance with the limitations and restrictions of the security infrastructure. Users need to understand, believe in, and support the security efforts of the organization; otherwise, they will tend to cause compliance problems, reduce productivity, and possibly sabotage security controls, whether accidentally or intentionally.

Exam Essentials

Be aware of industry-standard frameworks. A security framework is a guide or plan for keeping your organizational assets safe. It provides guidance and a structure to the implementation of security for organizations. Security frameworks may be regulatory, nonregulatory, national, international, and/or industry-specific.

Understand benchmarks. A benchmark is a documented list of requirements that is used to determine whether a system, device, or software solution is allowed to operate within a securely managed environment. Benchmarks may be platform- or vendor-specific or general-purpose.

Define defense in depth. Defense in depth or layered security is the use of multiple types of access controls in literal or theoretical concentric circles or layers. Defense in depth should include vendor diversity and control diversity.

3.2 Given a scenario, implement secure network architecture concepts.

Reliable network security depends on a solid foundation. That foundation is the network architecture. Network architecture is the physical structure of your network, the divisions or segments, the means of isolation and traffic control, whether or not remote access is allowed, the means of secure remote connection, and the placement of sensors and filters. This section discusses many of the concepts of network architecture.

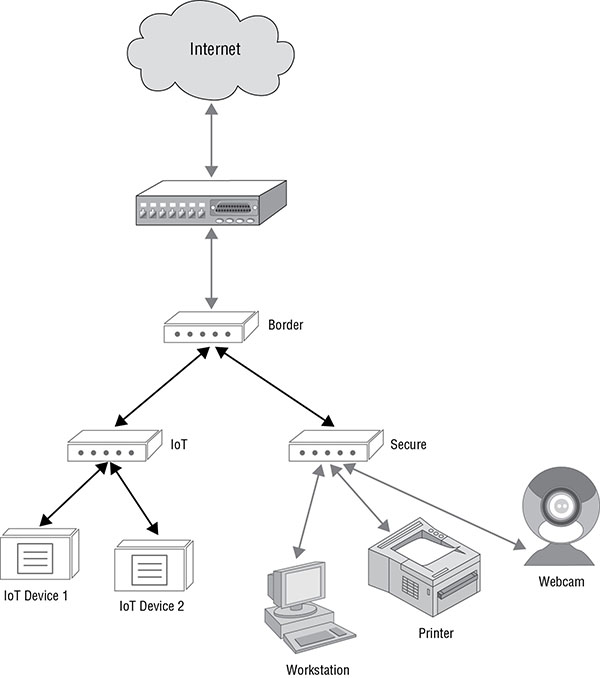

Zones/topologies

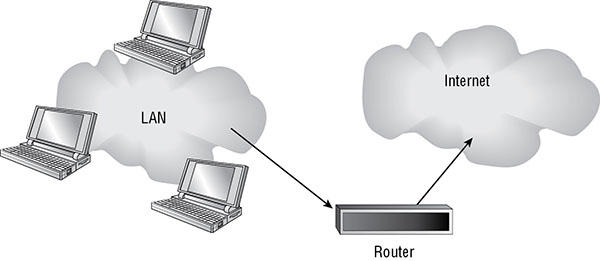

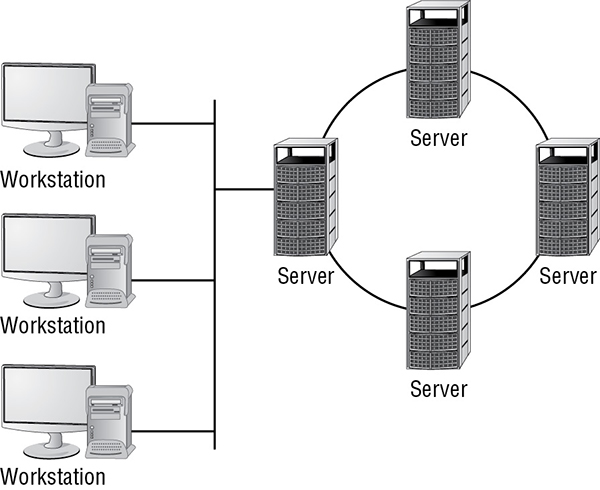

A network zone is an area of a network designed for a specific purpose, such as internal use or external use. Network zones are logical and/or physical divisions or segments of a LAN that allow for supplementary layers of security and control (see Figure 3.2). Each security zone is an area of a network that has a single defined level of security. That security may focus on enabling authorized access, preventing access, protecting confidentiality and integrity, or limiting traffic flow. Different security zones usually host different types of resources with different levels of sensitivity. Zones are often designated and isolated through the use of unique IP subnets and firewalls. Another term for network zone is network topology. There are many types of network zones; several are covered in the next sections.

FIGURE 3.2 A typical LAN connection to the Internet

DMZ

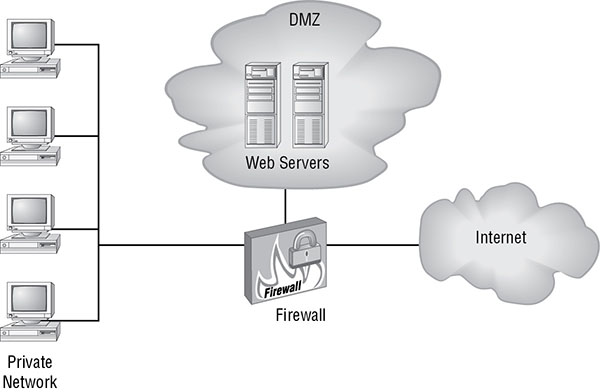

A demilitarized zone (DMZ) is a special-purpose subnet. A network consists of networking components (such as cables and switches) and hosts (such as clients and servers). Often, large networks are logically and physically subdivided into smaller interconnected networks. These smaller networks are known as subnets. Subnets are usually fairly generic, but some have special uses and/or configurations.

A DMZ is an area of a network that is designed specifically for low-trust users to access specific systems, such as the public accessing a web server. If the DMZ (as a whole or as individual systems within the DMZ) is compromised, the private LAN isn’t necessarily affected or compromised. Access to a DMZ is usually controlled or restricted by a firewall and router system.

The DMZ can act as a buffer network between the public untrusted Internet and the private trusted LAN. This implementation is known as a screened subnet. It is deployed by placing the DMZ subnet between two firewalls, where one firewall leads to the Internet and the other to the private LAN.

A DMZ can also be deployed through the use of a multihomed firewall (see Figure 3.3). Such a firewall has three interfaces: one to the Internet, one to the private LAN, and one to the DMZ.

FIGURE 3.3 A multihomed firewall DMZ

A DMZ gives an organization the ability to offer information services, such as web browsing, FTP, and email, to both the public and internal clients without compromising the security of the private LAN.
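The traffic policy that a multihomed firewall enforces around a DMZ can be sketched as a small first-match rule table. This is only an illustrative sketch: the zone names, the ports, and the `decide` function are assumptions made for this example, not the syntax of any real firewall product.

```python
# Hypothetical zone names and service ports, chosen only for illustration.
RULES = [
    # (source zone, destination zone, destination port or None for any, action)
    ("internet", "dmz", 80, "allow"),    # public web access to the DMZ web server
    ("internet", "dmz", 443, "allow"),
    ("internet", "dmz", 25, "allow"),    # inbound email to the DMZ mail server
    ("lan", "dmz", None, "allow"),       # internal clients may use DMZ services
    ("lan", "internet", None, "allow"),  # internal clients may reach the Internet
]


def decide(src_zone, dst_zone, dst_port):
    """Return the action of the first matching rule, or 'deny' if none match."""
    for rule_src, rule_dst, rule_port, action in RULES:
        zones_match = (rule_src, rule_dst) == (src_zone, dst_zone)
        port_matches = rule_port is None or rule_port == dst_port
        if zones_match and port_matches:
            return action
    return "deny"  # anything not explicitly allowed is dropped
```

Because no rule lets traffic originate in the DMZ or on the Internet and terminate in the LAN, a compromised DMZ host in this sketch still cannot open connections into the private network.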

A typical scenario where a DMZ would be deployed is when an organization wants to offer resources, such as a web server, an email server, or a file server, to the general public.

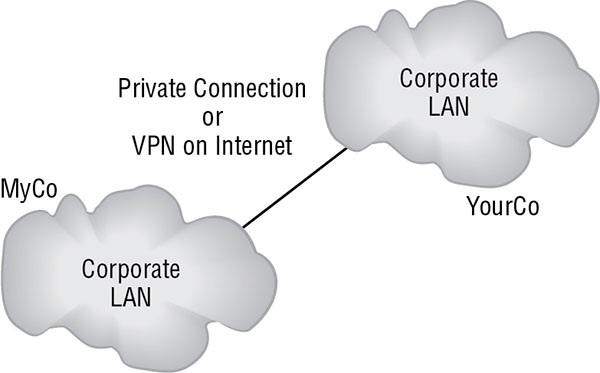

Extranet

An extranet (see Figure 3.4) is a privately controlled network segment or subnet that functions as a DMZ for business-to-business transactions. It allows an organization to offer specialized services to business partners, suppliers, distributors, or customers. Extranets are based on TCP/IP and often use the common Internet information services, such as web browsing, FTP, and email. Extranets aren’t accessible to the general public. They often require outside entities to connect using a VPN. This restricts unauthorized access and ensures that all communications with the extranet are secured. Another important security concern with extranets is that companies that are partners today may be competitors tomorrow. Thus, you should never place data into an extranet that you’re unwilling to let a future competitor have access to.

FIGURE 3.4 A typical extranet between two organizations

A common scenario for use of an extranet is when an organization needs to grant resource access to a business partner or external supplier. This allows the external entity to access the offered resources without exposing those resources to the open Internet and does not allow the external entities access into the private LAN.

Intranet

An intranet is a private network or private LAN. This term was coined in the 1990s when there was a distinction between traditional LANs and those adopting Internet technologies, such as the TCP/IP protocol, web services, and email. Now that most networks use these technologies, the term intranet is no longer distinct from LAN.

All organizations that have a network have an intranet. Thus, any scenario involving a private LAN also involves an intranet.

Wireless

A wireless network is a network that uses radio waves as the communication media instead of copper or fiber-optic cables. A wireless network zone can be isolated using encryption (such as WPA2) and unique authentication (so that only users and devices authorized for a specific network zone are able to log into that wireless zone).

Scenarios where wireless is a viable option include workspaces where portable devices are needed or when running network cables is cost prohibitive.

Guest

A guest zone or a guest network is an area of a private network designated for use by temporary authorized visitors. It allows nonemployee entities to partially interact with your private network, or at least with a subset of strictly controlled resources, without exposing your internal network to unauthorized user threats. A guest network can be a wireless or wired network. A guest network can also be implemented using VLAN enforcement.

Any organization that has a regularly recurring need to grant visitors and guests some level of network access, even if only Internet connectivity, should consider implementing a guest network.

Honeynets

A honeynet consists of two or more networked honeypots used in tandem to monitor or re-create larger, more diverse network arrangements. Often, these honeynets facilitate IDS deployment for the purposes of detecting and catching both internal and external attackers. See the section “Honeynet” in Chapter 2, “Technologies and Tools,” for more information.

NAT

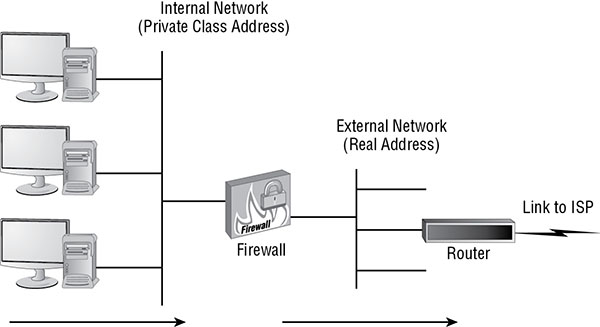

In order for systems to communicate across the Internet, they must have an Internet-capable TCP/IP address. Unfortunately, leasing a sufficient number of public IP addresses to assign one to every system on a network is expensive. Plus, assigning public IP addresses to every system on the network means those systems can be accessed (or at least addressed) directly by external benign and malicious entities. One way around this issue is to use network address translation (NAT) (see Figure 3.5).

FIGURE 3.5 A typical Internet connection to a local network

NAT converts the private IP addresses (see the discussion of RFC 1918) of internal systems found in the header of network packets into public IP addresses. It performs this operation on a one-to-one basis; thus, a single leased public IP address can allow a single internal system to access the Internet. Because Internet communications aren’t usually permanent or dedicated connections, a single public IP address could effectively support three or four internal systems if they never needed Internet access simultaneously. So, when NAT is used, a larger network needs to lease only a relatively small number of public IP addresses.

NAT provides the following benefits:

- It hides the IP addressing scheme and structure from external entities.

- It serves as a basic firewall by only allowing incoming traffic that is in response to an internal system’s request.

- It reduces expense by requiring fewer leased public IP addresses.

- It allows the use of private IP addresses (RFC 1918).
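RFC 1918 reserves three IPv4 ranges for private use: 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16. As a minimal sketch, the following uses Python's standard `ipaddress` module to check whether an address falls in one of those ranges and would therefore need NAT to reach the Internet. The helper name `is_rfc1918` is an assumption made for this example.

```python
import ipaddress

# The three private IPv4 ranges defined by RFC 1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address):
    """Return True if the address lies inside one of the RFC 1918 ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in RFC1918_NETWORKS)
```

The ranges are listed explicitly here because the module's broader `is_private` property also covers other reserved ranges, such as loopback and link-local addresses.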

Closely related to NAT is port address translation (PAT), which allows a single public IP address to host up to 65,536 simultaneous communications from internal clients (a theoretical maximum; in practice, you should limit the number to 100 or fewer in most cases). Instead of mapping IP addresses on a one-to-one basis, PAT uses the Transport layer port numbers to host multiple simultaneous communications across each public IP address.
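The port-based mapping that PAT performs can be illustrated with a small translation table. This is a simplified sketch, not how any particular router implements PAT: the `PatTable` class, its method names, the starting port, and the example addresses are all assumptions made for illustration.

```python
class PatTable:
    """Toy PAT table: many internal (IP, port) pairs share one public IP."""

    def __init__(self, public_ip, first_port=1024, last_port=65535):
        self.public_ip = public_ip
        self._free_ports = iter(range(first_port, last_port + 1))
        self.outbound = {}  # (internal IP, internal port) -> public port
        self.inbound = {}   # public port -> (internal IP, internal port)

    def translate_outbound(self, src_ip, src_port):
        """Map an internal source to (public IP, public port), reusing entries."""
        key = (src_ip, src_port)
        if key not in self.outbound:
            public_port = next(self._free_ports)  # raises StopIteration when exhausted
            self.outbound[key] = public_port
            self.inbound[public_port] = key
        return (self.public_ip, self.outbound[key])

    def translate_inbound(self, public_port):
        """Return the internal endpoint for a reply, or None if unsolicited."""
        return self.inbound.get(public_port)
```

Two internal clients that happen to use the same source port receive different public ports, and an inbound packet addressed to a port with no table entry has no internal destination, which is the basic-firewall behavior listed among the NAT benefits.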

The use of the term NAT in the IT industry has come to include the concept of PAT. Thus, when you hear or read about NAT, you can assume that the material is referring to PAT. This is true for most OSs and services; it’s also true of the Security+ exam.

Another issue to be familiar with is that of NAT traversal (NAT-T). Traditional NAT doesn’t support IPSec VPNs, because of the requirements of the IPSec protocol and the changes NAT makes to packet headers. However, NAT-T was designed specifically to support IPSec and other tunneling VPN protocols, such as Layer 2 Tunneling Protocol (L2TP), so organizations can benefit from both NAT and VPNs across the same border device/interface.

As the conversion from IPv4 to IPv6 takes place, there will be a need for NATing between these two IP structures. V4-to-v6 gateways or NAT servers will become more prevalent as the migration gains momentum, in order to maintain connectivity between legacy IPv4 networks and updated IPv6 networks. Once a majority of systems are using IPv6, the number of v4-to-v6 NATing systems will decline.

Scenarios where NAT implementation is essential include when using private IP addresses from RFC 1918 or when wanting to prevent external initiation of communications to internal devices.

Ad hoc

Ad hoc is a form of wireless network, also known as a peer-to-peer network, in which individual hosts connect directly to each other rather than going through a middleman. For more on this topic, see the Chapter 2 section “WiFi direct/ad hoc.”

Segregation/segmentation/isolation

Network segmentation involves controlling traffic among networked devices. Complete or physical network segmentation occurs when a network is isolated from all outside communications, so transactions can only occur between devices within the segmented network. Logical network segmentation can be imposed with switches using VLANs, or through other traffic-control means, including MAC addresses, IP addresses, physical ports, TCP or UDP ports, protocols, or application filtering, routing, and access control management. Network segmentation can be used to isolate static environments in order to prevent changes and/or exploits from reaching them.

Security layers exist where devices with different levels of classification or sensitivity are grouped together and isolated from other groups with different security levels. This isolation can be absolute or one-directional. For example, a lower level may not be able to initiate communication with a higher level, but a higher level may initiate with a lower level. Isolation can also be logical or physical. Logical isolation requires the use of classification labels on data and packets, which must be respected and enforced by network management, OSs, and applications. Physical isolation requires implementing network segmentation or air gaps between networks of different security levels.
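The difference between absolute and one-directional isolation can be expressed as a simple rule about which side may initiate a connection. The following is a minimal sketch under assumed names: the three levels and the `may_initiate` function are hypothetical and exist only to illustrate the rule described above.

```python
from enum import IntEnum


class Level(IntEnum):
    # Hypothetical security levels, ordered from least to most sensitive.
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2


def may_initiate(src, dst, absolute=False):
    """Decide whether a device at level src may open a connection to level dst."""
    if src == dst:
        return True   # devices within the same security layer communicate freely
    if absolute:
        return False  # absolute isolation: no traffic crosses layers at all
    return src > dst  # one-directional: only the higher level may initiate
```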

Bridging between networks can be a desired feature of network design. Network bridging is self-configuring, is inexpensive, maintains collision-domain isolation, is transparent to Layer 3+ protocols, and avoids the 5-4-3 rule’s Layer 1 limitations (see https://en.wikipedia.org/wiki/5-4-3_rule). However, network bridging isn’t always desirable. It doesn’t limit or divide broadcast domains, doesn’t scale well, can cause latency, and can result in loops. In order to eliminate these problems, you can implement network separation or segmentation. There are two means to accomplish this. First, if communication is necessary between network segments, you can implement IP subnets and use routers. Second, you can create physically separate networks that don’t need to communicate. This can also be accomplished using firewalls instead of routers to implement secured filtering and traffic management.

All networks are involved in scenarios where segregation, segmentation, and isolation are needed. Without establishing a distinction between internal private networks and external public networks, maintaining privacy, security, and control is very challenging for the protection of sensitive data and systems. Network segmentation should be used to divide communication areas based on sensitivity of activities, value of data, risk of data loss or disclosure, level of classification, physical location, or any other distinction deemed important to an organization.

Physical

Physical segmentation occurs when no links are established between networks. This is also known as an air gap. If there are no cables and no wireless connections between two networks, then a physical network segregation/segmentation/isolation has been achieved. This is the most reliable means of prohibiting unwanted transfer of data. However, this configuration is also the most inconvenient for the rare events where communications are desired or necessary.

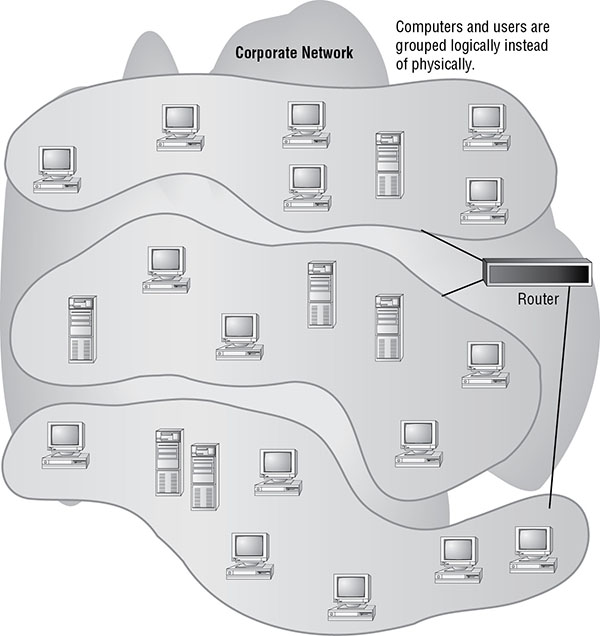

Logical (VLAN)

A virtual local area network (VLAN) is a hardware-imposed network segmentation created by switches. By default, all ports on a switch are part of VLAN 1. But as the switch administrator changes the VLAN assignment on a port-by-port basis, various ports can be grouped together and kept distinct from other VLAN port designations.

VLANs are used for traffic management. Communications between ports within the same VLAN occur without hindrance, but communications between VLANs require a routing function, which can be provided either by an external router or by the switch’s internal software (one reason for the term multilayer switch). VLANs are treated like subnets but aren’t subnets. VLANs are created by switches. Subnets are created by IP address and subnet mask assignments.
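To see how subnets arise purely from address and mask assignments, the following sketch uses Python's standard `ipaddress` module to split one network into four smaller subnets and to check whether two hosts would need a routing function to communicate. The 192.168.0.0/24 network and the helper names `subnet_of` and `needs_router` are assumptions chosen for illustration.

```python
import ipaddress

# One /24 network divided into four /26 subnets by lengthening the mask.
lan = ipaddress.ip_network("192.168.0.0/24")
subnets = list(lan.subnets(new_prefix=26))


def subnet_of(host):
    """Return the /26 subnet containing the host, or None if it is outside the LAN."""
    ip = ipaddress.ip_address(host)
    return next((net for net in subnets if ip in net), None)


def needs_router(host_a, host_b):
    """Hosts in different subnets can communicate only through a routing function."""
    return subnet_of(host_a) != subnet_of(host_b)
```

No switch configuration appears anywhere in this example, which is the distinction the text draws: subnets come from addressing, whereas VLANs come from switch port assignments.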

VLAN management is the use of VLANs to control traffic for security or performance reasons. VLANs can be used to isolate traffic between network segments. This can be accomplished by not defining a route between different VLANs or by specifying a deny filter between certain VLANs (or certain members of a VLAN). Any network segment that doesn’t need to communicate with another in order to accomplish a work task/function shouldn’t be able to do so. Use VLANs to allow what is necessary and to block/deny anything that isn’t necessary. Remember, “deny by default; allow by exception” isn’t a guideline just for firewall rules, but for security in general.
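
The deny-by-default approach to inter-VLAN traffic can be sketched in a few lines of Python. This is only an illustration of the logic, not a switch configuration: the port numbers, VLAN IDs, and the `ALLOWED_ROUTES` set are hypothetical.

```python
# Hypothetical port-to-VLAN assignments; every port starts in VLAN 1 by default.
port_vlan = {port: 1 for port in range(1, 9)}
port_vlan.update({3: 20, 4: 20, 5: 30})  # administrator reassigns ports 3-5

# Inter-VLAN routes that are explicitly permitted (source VLAN, destination VLAN).
# Anything not listed here is denied.
ALLOWED_ROUTES = {(20, 1)}


def may_communicate(src_port: int, dst_port: int) -> bool:
    """Return True if traffic from src_port may reach dst_port."""
    src_vlan, dst_vlan = port_vlan[src_port], port_vlan[dst_port]
    if src_vlan == dst_vlan:
        return True  # same VLAN: switched without hindrance
    return (src_vlan, dst_vlan) in ALLOWED_ROUTES  # otherwise deny by default


print(may_communicate(3, 4))  # same VLAN
print(may_communicate(3, 1))  # VLAN 20 -> VLAN 1, explicitly allowed
print(may_communicate(5, 3))  # VLAN 30 -> VLAN 20, no route defined
```

Because the allow list is the only way across VLAN boundaries, forgetting to add a route fails closed rather than open.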

A VLAN consists of network divisions that are logically created out of a physical network. They’re often created using switches (see Figure 3.6). Basically, the ports on a switch are numbered; each port is assigned the designation VLAN1 by default. Through the switch’s management interfaces, the device administrator can assign ports other designations, such as VLAN2 or VLAN3, in order to create additional virtual networks.

FIGURE 3.6 A typical segmented VLAN

VLANs function in much the same way as traditional subnets. In order for communications to travel from one VLAN to another, the switch performs routing functions to control and filter traffic between its VLANs.

VLANs are used to segment a network logically without altering its physical topology. They’re easy to implement, have little administrative overhead, and are a hardware-based solution (specifically a Layer 3 switch). As networks are being crafted in virtual environments or in the cloud, software switches are often used. In these situations, VLANs are not hardware-based but are instead implemented by the software of a switch, whether that switch is a physical device or a virtual system.

VLANs let you control and restrict broadcast traffic and reduce a network’s vulnerability to sniffers, because a switch treats each VLAN as a separate network division. In order to communicate between segments, the switch must provide a routing function. It’s the routing function that blocks broadcasts between subnets and VLANs, because a router (or any device performing Layer 3 routing functions, such as a Layer 3 switch) doesn’t forward Layer 2 Ethernet broadcasts. This feature of a switch blocks Ethernet broadcasts between VLANs and so helps protect against broadcast storms. A broadcast storm is a flood of unwanted Ethernet broadcast network traffic.

Virtualization

Virtualization technology is used to host one or more OSs in the memory of a single host computer. This mechanism allows virtually any OS to operate on any hardware. It also lets multiple OSs work simultaneously on the same hardware. Common examples include VMware, Microsoft’s Virtual PC or Hyper-V, VirtualBox, and Parallels Desktop for Mac.

Virtualization offers several benefits, such as the ability to launch individual instances of servers or services as needed, real-time scalability, and the ability to run the exact OS version required for an application. Virtualized servers and services are indistinguishable from traditional servers and services from a user’s perspective. Additionally, recovery from damaged, crashed, or corrupted virtual systems is often quick: you simply replace the virtual system’s main hard drive file with a clean backup version, and then relaunch the affected virtual system.

With regard to security, virtualization offers several benefits. It’s often easier and faster to make backups of entire virtual systems rather than the equivalent native hardware installed system. Plus, when there is an error or problem, the virtual system can be replaced by a backup in minutes. Malicious code compromises of virtual systems rarely affect the host OS. This allows for safer testing and experimentation.

Custom virtual network segmentation can be used in relation to virtual machines in order to make guest OSs members of the same network division as that of the host, or guest OSs can be placed into alternate network divisions. A virtual machine can be made a member of a different network segment from that of the host or placed into a network that only exists virtually and does not relate to the physical network media. See the later section “SDN” for more about this technique, known as software-defined networking.

Air gaps

An air gap is another term for physical network segregation, as discussed in the earlier section “Physical.”

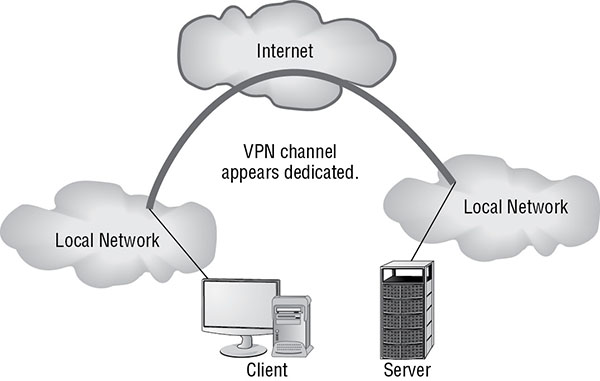

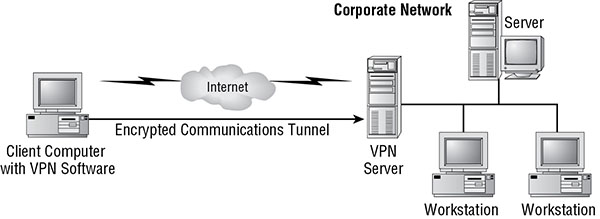

Tunneling/VPN

A virtual private network (VPN) is a communication tunnel between two entities across an intermediary network. In most cases, the intermediary network is an untrusted network, such as the Internet, and therefore the communication tunnel is usually encrypted. Numerous scenarios lend themselves to the deployment of VPNs; for example, VPNs can be used to connect two networks across the Internet (see Figure 3.7) or to allow distant clients to connect into an office local area network (LAN) across the Internet (see Figure 3.8). Once a VPN link is established, the network connectivity for the VPN client is the same as that of a system connected to the LAN by cable. The only difference between a direct LAN cable connection and a VPN link is speed.

FIGURE 3.7 Two LANs being connected using a VPN across the Internet

FIGURE 3.8 A client connecting to a network via a VPN across the Internet

VPNs offer an excellent solution for remote users to access resources on a corporate LAN. They have the following advantages:

- They eliminate the need for expensive dial-up modem banks, including landline and ISDN.

- They do away with long-distance toll charges.

- They allow a user anywhere in the world with an Internet connection to establish a VPN link with the office network.

- They provide security for both authentication and data transmission.

Sometimes VPN protocols are called tunneling protocols. This naming convention is designed to focus attention on the tunneling capabilities of VPNs.

VPNs work through a process called encapsulation. As data is transmitted from one system to another across a VPN link, the normal LAN TCP/IP traffic is encapsulated (encased, or enclosed) in the VPN protocol. The VPN protocol acts like a security envelope that provides special delivery capabilities (for example, across the Internet) as well as security mechanisms (such as data encryption).
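
The envelope idea can be sketched in Python. This is a toy format, not any real VPN protocol: the header layout, the `SHARED_KEY` value, and the function names are assumptions made for illustration. It shows only encapsulation and an integrity tag; a real VPN protocol would normally also encrypt the inner packet.

```python
import hashlib
import hmac
import struct

SHARED_KEY = b"example-tunnel-key"  # hypothetical pre-shared key
HEADER = struct.Struct("!IH")       # tunnel ID (4 bytes) + inner length (2 bytes)
TAG_LEN = hashlib.sha256().digest_size


def encapsulate(tunnel_id: int, inner_packet: bytes) -> bytes:
    """Wrap an inner packet in an outer header and append an integrity tag."""
    header = HEADER.pack(tunnel_id, len(inner_packet))
    tag = hmac.new(SHARED_KEY, header + inner_packet, hashlib.sha256).digest()
    return header + inner_packet + tag


def decapsulate(outer_packet: bytes) -> tuple[int, bytes]:
    """Verify the tag, strip the envelope, and return (tunnel_id, inner_packet)."""
    header = outer_packet[:HEADER.size]
    body, tag = outer_packet[HEADER.size:-TAG_LEN], outer_packet[-TAG_LEN:]
    tunnel_id, length = HEADER.unpack(header)
    expected = hmac.new(SHARED_KEY, header + body, hashlib.sha256).digest()
    if length != len(body) or not hmac.compare_digest(tag, expected):
        raise ValueError("packet failed integrity check")
    return tunnel_id, body


wrapped = encapsulate(7, b"inner LAN traffic")
print(decapsulate(wrapped))
```

The receiving endpoint gets back exactly the original LAN traffic, which is why security tools that need to inspect it must sit where the traffic has already been unwrapped.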

When firewalls, intrusion detection systems, antivirus scanners, or other packet-filtering and -monitoring security mechanisms are used, you must realize that the data payload of VPN traffic won’t be viewable, accessible, scannable, or filterable, because it’s encrypted. Thus, in order for these security mechanisms to function against VPN-transported data, they must be placed outside of the VPN tunnel to act on the data after it has been decrypted and returned back to normal LAN traffic.

VPNs provide the following critical functions:

- Access control restricts unauthorized users from accessing resources on a network.

- Authentication proves the identity of communication partners.

- Confidentiality prevents unauthorized disclosure of secured data.

- Data integrity prevents unwanted changes of data while in transit.

VPN links are established using VPN protocols. There are several VPN protocols, but these are the four you should recognize:

- Point-to-Point Tunneling Protocol (PPTP)

- Layer 2 Tunneling Protocol (L2TP)

- OpenVPN (SSL VPN, TLS VPN)

- Internet Protocol Security (IPsec) (see the Chapter 2 section “IPSec”)

PPTP was originally developed by Microsoft. L2TP was developed by combining features of Microsoft’s proprietary implementation of PPTP and Cisco’s Layer 2 Forwarding (L2F) VPN protocols. Since its development, L2TP has become an Internet standard (RFC 2661) and is quickly becoming widely supported.

Both L2TP and PPTP are based on Point-to-Point Protocol (PPP) and thus work well over various types of remote-access connections, including dial-up. L2TP can support just about any networking protocol. PPTP is limited to IP traffic. L2TP uses UDP port 1701, and PPTP uses TCP port 1723.

PPTP can use any of the authentication methods supported by PPP, including the following:

- Challenge Handshake Authentication Protocol (CHAP)

- Extensible Authentication Protocol (EAP)

- Microsoft Challenge Handshake Authentication Protocol version 1 (MS-CHAP v.1)

- Microsoft Challenge Handshake Authentication Protocol version 2 (MS-CHAP v.2)

- Shiva Password Authentication Protocol (SPAP)

- Password Authentication Protocol (PAP)

Not all implementations of PPTP can provide data encryption. For example, when working with a PPTP VPN between Windows systems, the authentication protocol MS-CHAP v.2 enables data encryption.

L2TP can rely on PPP and thus on PPP’s supported authentication protocols. This is typically referenced as IEEE 802.1x (see Chapter 4, “Identity and Access Management,” and Chapter 6, “Cryptography and PKI,” for their sections on IEEE 802.1x), which is a derivative of EAP from PPP. IEEE 802.1x enables L2TP to leverage or borrow authentication services from any available AAA server on the network, such as RADIUS or TACACS+. L2TP does not offer native encryption, but it supports the use of encryption protocols, such as Internet Protocol Security (IPSec). Although it isn’t required, L2TP is most often deployed using IPsec.

L2TP can be used to tunnel any routable protocol but contains no native security features. When L2TP is used with IPsec, it obtains authentication and data-encryption features because IPsec provides them. The main reason to use L2TP with IPsec instead of IPsec alone is the need to traverse a Layer 2 network that is untrustworthy or whose security is unknown. This can include a telco’s business connection offerings, such as Frame Relay and Asynchronous Transfer Mode (ATM), or the public switched telephone network (PSTN). Otherwise, IPsec can be used without the extra overhead of L2TP.

OpenVPN is based on TLS (formerly SSL) and provides an easy-to-configure but robustly secured VPN option. OpenVPN is an open-source implementation that can use either preshared secrets (such as passwords) or certificates for authentication. Many wireless access points support OpenVPN natively, which allows a home or business WAP to serve as a VPN gateway.

Site-to-site

A site-to-site VPN is a connection between two organizational networks. See the Chapter 2 section “Remote access vs. site-to-site” for more information.

Remote access

A remote-access VPN is a variant of the site-to-site VPN. The difference is that with a remote-access VPN one endpoint is the single entity of a remote user that connects into an organizational network. See the Chapter 2 section “Remote access vs. site-to-site” for more information.

Site-to-site and remote access VPNs are variants of tunnel mode VPN. Another type of VPN is the transport mode VPN, which provides end-to-end encryption and can be described as a host-to-host VPN. In this type of VPN, all traffic is fully encrypted between the endpoints, but those endpoints are only individual systems, not organizational networks.

Security device/technology placement

When designing the layout and structure of a network, it is important to consider the placement of security devices and related technology. The goal of planning the architecture and organization of the network infrastructure is to maximize security while minimizing downtime, compromises, or other interruptions to productivity.

Sensors

A sensor is a hardware or software tool used to monitor an activity or event in order to record information or at least take notice of an occurrence. A sensor may monitor heat, humidity, wind movement, doors and windows opening, the movement of data, the types of protocols in use on a network, when a user logs in, any activity against sensitive servers, and much more.

For sensors to be effective, they need to be located in proper proximity to be able to take notice of the event of concern. This might require the sensor to monitor all network traffic, monitor a specific doorway, or monitor a single computer system.

Collectors

A security collector is any system that gathers data into a log or record file. A collector’s function is similar to the functions of auditing, logging, and monitoring. A collector watches for a specific activity, event, or traffic, and then records the information into a record file. Targets could be, for example, logon events, door opening events, all launches of a specific executable, any access to sensitive files, or all activity on mission-critical servers.

A collector, like any auditing system, needs sufficient space on a storage device to record the data it collects. Such data should be treated as more sensitive than the original data, programs, or systems it was collected from. A collector should be placed where it has the ability to review and retrieve information on the system, systems, or network that it is intended to monitor. This might require a direct link or path to the monitored target, or it may be able to operate on a cloned or mirrored copy of communications, such as the SPAN, audit, mirror, or IDS port of a switch.

Correlation engines

A correlation engine is a type of analysis system that reviews the contents of log files or live events. It is programmed to recognize related events, sequential occurrences, and interdependent activity patterns in order to detect suspicious or violating events. Through a correlation engine’s ability to aggregate and analyze system logs using fuzzy logic and predictive analytics, it may be able to detect a problem or potential problem long before a human administrator would have taken notice.

A correlation engine does not need to be in line on the network or installed directly onto monitored systems. It must have access to the recorded logs or the live activity stream in order to perform its analysis. This could allow it to operate on or near a data warehouse or centralized logging server (which is a system that maintains a real-time cloned copy of all live logs from servers and other critical systems) or off a switch SPAN port.
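
As a rough illustration of recognizing sequential occurrences, the following Python sketch flags a successful logon that follows a burst of failures from the same source. The event format, the 60-second window, and the threshold of three failures are all hypothetical; commercial correlation engines apply far richer rules and analytics than this.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60    # hypothetical correlation window
FAILURE_THRESHOLD = 3  # hypothetical number of failures that makes a success suspicious


def correlate(events):
    """Yield (source, timestamp) for each success preceded by enough recent failures.

    `events` is an iterable of (timestamp, source, outcome) tuples in time order,
    where outcome is "fail" or "success".
    """
    recent_failures = defaultdict(deque)
    for timestamp, source, outcome in events:
        failures = recent_failures[source]
        # Drop failures that have aged out of the window.
        while failures and timestamp - failures[0] > WINDOW_SECONDS:
            failures.popleft()
        if outcome == "fail":
            failures.append(timestamp)
        elif outcome == "success":
            if len(failures) >= FAILURE_THRESHOLD:
                yield source, timestamp
            failures.clear()


log = [
    (0, "10.0.0.5", "fail"),
    (5, "10.0.0.5", "fail"),
    (9, "10.0.0.5", "fail"),
    (12, "10.0.0.5", "success"),  # follows three failures within the window
    (20, "10.0.0.8", "fail"),
    (25, "10.0.0.8", "success"),  # a single mistyped password, not flagged
]
print(list(correlate(log)))
```

The function only reads recorded events, which mirrors the point that a correlation engine needs access to logs or an event stream rather than an inline position on the network.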

Filters

A filter is used to recognize or match an event, address, activity, content, or keyword and trigger a response. In most cases a filter is used to block or prevent unwanted activities or data exchanges. The most common example of a filtering tool is a firewall.

A filter should be located in line along any communication path where control of data communications is necessary. Keep in mind that filters cannot inspect encrypted traffic, so filtering of such traffic must be done just before encryption or just after decryption.
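
A filter's match-and-respond behavior can be sketched as an ordered rule list. The rule set, addresses, and first-match-wins ordering below are assumptions chosen for illustration; actual firewall products differ in rule syntax and evaluation order.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str    # "allow" or "deny"
    source: str    # CIDR block the rule matches
    dst_port: int  # destination port the rule matches


# Hypothetical rule set; rules are evaluated top to bottom, first match wins.
RULES = [
    Rule("deny", "203.0.113.0/24", 443),
    Rule("allow", "0.0.0.0/0", 443),
    Rule("allow", "192.168.10.0/24", 22),
]


def decide(src_ip: str, dst_port: int) -> str:
    """Return the action for a packet, denying anything no rule matches."""
    address = ipaddress.ip_address(src_ip)
    for rule in RULES:
        if address in ipaddress.ip_network(rule.source) and dst_port == rule.dst_port:
            return rule.action
    return "deny"


print(decide("198.51.100.7", 443))
print(decide("203.0.113.9", 443))
print(decide("198.51.100.7", 22))
```

Note that the sketch matches only on header fields. Matching on content or keywords requires access to the unencrypted payload, which is why placement relative to encryption matters.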

Proxies

For an introduction to proxies, see the Chapter 2 section “Proxy.”

The placement or location of a proxy should be between source or origin devices and their destination systems. The location of a transparent proxy must be along the routed path between source and destination, whereas a nontransparent proxy can be located along an alternate or indirect routed path, since source systems will direct traffic to the proxy themselves.

Firewalls

For an introduction to firewalls, see the Chapter 2 section “Firewall.”

The placement or location of a firewall should be at any transition between network segments where there is any difference in risk, sensitivity, security, value, function, or purpose. It is standard security practice to deploy a hardware security firewall between an internal network and the Internet as well as between the Internet, a DMZ or extranet, and an intranet (Figure 3.9).

FIGURE 3.9 A potential firewall deployment related to a DMZ

Software firewalls are also commonly deployed on every host system, both servers and clients, throughout the organizational network.

It may also be worth considering implementing firewalls between departments, satellite offices, VPNs, and even different buildings or floors.

VPN concentrators

For an introduction to VPN concentrators, see the Chapter 2 section “VPN concentrator.”

A VPN concentrator should be located on the boundary or border of the organizational network at or near the primary Internet connection. The VPN concentrator may be located inside or outside of the primary network appliance firewall. If the security policy is that all traffic is filtered entering the private network, then the VPN concentrator must be located outside the firewall. If traffic from remote locations over VPNs is trusted, then the VPN concentrator can be located inside the firewall.

SSL accelerators

For an introduction to SSL/TLS accelerators, see the Chapter 2 section “SSL/TLS accelerators.”

An SSL/TLS accelerator should be located at the boundary or border of the organizational network at or near the primary Internet connection and before the resource server being accessed by those protected connections. Usually the SSL/TLS accelerator is located in line with the communication pathway so that no abusive network access can reach the network segment between the accelerator and the resource host. The purpose of this device or service is to offload the computational burden of encryption in order for a resource host to devote its system resources to serving visitors.

Load balancers

For an introduction to load balancers, see the Chapter 2 section “Load balancer.”

A load balancer should be located in front of a group of servers, often known as a cluster, which all support the same resource. The purpose of the load balancer is to distribute the workload of connection requests among the members of the cluster group, so this determines its location. A load balancer is placed between requesting clients and the cluster or group of servers hosting a resource.
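
One simple way to distribute connection requests is round robin, sketched below in Python. The text does not name a scheduling method, so round robin is an assumption here, and the class and server names are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Hand each new connection request to the next server in the cluster."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("cluster must contain at least one server")
        self._next_server = itertools.cycle(servers)

    def route(self, request_id: str) -> tuple[str, str]:
        """Return (request_id, server) for the server chosen for this request."""
        return request_id, next(self._next_server)


balancer = RoundRobinBalancer(["web1", "web2", "web3"])
for i in range(5):
    print(balancer.route(f"request-{i}"))
```

Every request passes through `route` before reaching a server, which is the code-level equivalent of placing the load balancer between the clients and the cluster.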

DDoS mitigator

A DDoS mitigator is a software solution, hardware device, or cloud service that attempts to filter and/or block traffic related to DoS attacks. See Chapter 1, “Threats, Attacks, and Vulnerabilities,” sections “DoS” and “DDoS” for information about these attacks.

A DDoS mitigator will attempt to differentiate legitimate packets from malicious packets. Benign traffic will be sent toward its destination, whereas abusive traffic will be discarded. Low-end DDoS mitigators may be called flood guards. Flood guarding is often a feature of firewalls. However, such solutions only change the focus of the DDoS attack rather than eliminate it. A low-level DDoS mitigator or flood guard solution will prevent the malicious traffic from reaching the target server, but the filtering system may itself be overloaded. This can result in the DoS event still being able to cut off communications for the network, even when the targeted server is not itself harmed in the process.
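
A basic flood guard can be approximated with a per-source rate limit, as in this Python sketch. The sliding-window approach, the class name, and the thresholds are assumptions for illustration and do not describe any particular product.

```python
from collections import defaultdict, deque


class FloodGuard:
    """Drop packets from any source that exceeds a packet budget per time window."""

    def __init__(self, max_packets: int, window_seconds: float):
        self.max_packets = max_packets
        self.window_seconds = window_seconds
        self._seen = defaultdict(deque)  # source -> timestamps of forwarded packets

    def allow(self, source: str, now: float) -> bool:
        """Return True to forward the packet, False to discard it."""
        timestamps = self._seen[source]
        # Forget packets that have left the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_packets:
            return False
        timestamps.append(now)
        return True


guard = FloodGuard(max_packets=3, window_seconds=1.0)
print([guard.allow("198.51.100.9", t) for t in (0.0, 0.1, 0.2, 0.3)])
```

The guard still has to receive and examine every packet, and its tracking table grows with the number of sources, which illustrates why the filtering system itself can become the point of overload.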

Commercial-grade DDoS solutions, especially those based on a cloud service, operate differently. Instead of simply filtering traffic on the spot, they reroute traffic to the cloud provider’s core filtering network. The cloud-based DDoS mitigator will often use a load balancer in front of 10,000+ virtual machines in order to dilute and distribute the traffic for analysis and filtering. All garbage packets are discarded, and legitimate traffic is routed back to the target network.

A DDoS mitigator should be positioned in line along the pathway into the intranet, DMZ, and extranet from the Internet. This provides the DDoS mitigator with the ability to filter all traffic from external attack sources before it reaches servers or the network as a whole.

Aggregation switches

An aggregation switch is the main or master switch used as the interconnection point for numerous other switches. In the past, this device may have been known as the main distribution frame (MDF), central distribution frame, core distribution frame, or primary distribution frame. In large network deployments, an initial master primary switch is deployed near the demarcation point (which is the point where internal company wiring meets the external telco wiring), and then additional switches for various floors, departments, or network segments are connected off the master primary switch.

Taps and port mirror

A tap is a means to eavesdrop on network communications. In the past taps were physical connections to the copper wires themselves, often using a mechanical means to strip or pierce the insulation to make contact with the conductors. These types of taps were often called vampire taps. Today, taps can be installed in line without damaging the existing cable. To install an inline tap, first the original cable must be unplugged from the switch (or other network management device) and then plugged into the tap. Then the tap is plugged into the vacated original port. A tap should be installed wherever traffic monitoring on a specific cable is required and when a port mirroring function is either not available or undesired.

A port mirror is a common feature found on managed switches; it will duplicate traffic from one or more other ports out a specific port. A switch may have a hardwired Switched Port Analyzer (SPAN) port, which duplicates the traffic for all other ports, or any port can be set as the mirror, audit, IDS, or monitoring port for one or more other ports. Port mirroring takes place on the switch itself.
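
The duplication behavior of a port mirror can be modeled in a few lines of Python. The class below is a hypothetical model of the concept, not a vendor feature or API; the port numbers are arbitrary.

```python
class MirroringSwitch:
    """Forward frames normally and copy monitored traffic to a mirror port."""

    def __init__(self, mirror_port: int, monitored_ports: set):
        self.mirror_port = mirror_port
        self.monitored_ports = set(monitored_ports)

    def deliver(self, ingress_port: int, egress_port: int, frame: bytes):
        """Return the list of (port, frame) deliveries for one frame."""
        deliveries = [(egress_port, frame)]
        if {ingress_port, egress_port} & self.monitored_ports:
            deliveries.append((self.mirror_port, frame))  # duplicate, never divert
        return deliveries


switch = MirroringSwitch(mirror_port=24, monitored_ports={1, 2})
print(switch.deliver(1, 5, b"frame-a"))  # port 1 is monitored: copy sent to port 24
print(switch.deliver(6, 7, b"frame-b"))  # neither port is monitored: no copy
```

The original delivery is never altered, so a monitoring tool attached to the mirror port observes traffic without sitting in line.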

SDN

The concept of OS virtualization has given rise to other virtualization topics, such as virtualized networks. A virtualized network or network virtualization is the combination of hardware and software networking components into a single integrated entity. The resulting system allows for software control over all network functions: management, traffic shaping, address assignment, and so on. A single management console or interface can be used to oversee every aspect of the network, a task that required physical presence at each hardware component in the past. Virtualized networks have become a popular means of infrastructure deployment and management by corporations worldwide. They allow organizations to implement or adapt other interesting network solutions, including software-defined networks, virtual SANs, guest operating systems, and port isolation.

Software-defined networking (SDN) is a unique approach to network operation, design, and management. The concept is based on the theory that the complexities of a traditional network with on-device configuration (routers and switches) often force an organization to stick with a single device vendor, such as Cisco, and limit the flexibility of the network to adapt to changing physical and business conditions. SDN aims at separating the infrastructure layer (hardware and hardware-based settings) from the control layer (network services of data transmission management). Furthermore, this also negates the need for the traditional networking concepts of IP addressing, subnets, routing, and the like to be programmed into or deciphered by hosted applications.

SDN offers a new network design that is directly programmable from a central location, is flexible, is vendor neutral, and is based on open standards. Using SDN frees an organization from having to purchase devices from a single vendor. It instead allows organizations to mix and match hardware as needed, such as to select the most cost-effective or highest throughput–rated devices, regardless of vendor. The configuration and management of hardware are then controlled through a centralized management interface. In addition, the settings applied to the hardware can be changed and adjusted dynamically as needed.

Another way of thinking about SDN is that it is effectively network virtualization. It allows data transmission paths, communication decision trees, and flow control to be virtualized in the SDN control layer rather than being handled on the hardware on a per-device basis.
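
The separation of the control layer from the infrastructure layer can be sketched as two small Python classes. This is a conceptual model only: the class names, the flow-table format, and the addresses are hypothetical and do not correspond to any SDN controller's API.

```python
class Switch:
    """Infrastructure layer: forwards traffic using only the rules it was given."""

    def __init__(self, name: str):
        self.name = name
        self.flow_table = {}  # destination -> output port

    def forward(self, destination: str):
        """Return the output port for a destination, or None if no rule exists."""
        return self.flow_table.get(destination)


class Controller:
    """Control layer: holds the policy and programs every switch centrally."""

    def __init__(self):
        self.switches = {}

    def register(self, switch: Switch) -> None:
        self.switches[switch.name] = switch

    def set_path(self, destination: str, ports_by_switch: dict) -> None:
        """Install or replace the forwarding rule for a destination on each switch."""
        for name, port in ports_by_switch.items():
            self.switches[name].flow_table[destination] = port


controller = Controller()
edge, core = Switch("edge"), Switch("core")
controller.register(edge)
controller.register(core)
controller.set_path("10.0.20.5", {"edge": 2, "core": 7})
print(edge.forward("10.0.20.5"), core.forward("10.0.20.5"))
```

The switches contain no decision logic of their own, so a path can be changed dynamically by calling `set_path` again from the central controller.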

Another interesting development arising out of the concept of virtualized networks is the virtual storage area network (SAN). A SAN is a network technology that combines multiple individual storage devices into a single consolidated network-accessible storage container. A virtual SAN or a software-defined shared storage system is a virtual re-creation of a SAN on top of a virtualized network or an SDN.

A storage area network (SAN) is a secondary network (distinct from the primary communications network) used to consolidate and manage various storage devices. SANs are often used to enhance networked storage devices such as hard drives, drive arrays, optical jukeboxes, and tape libraries so they can be made to appear to servers as if they were local storage.

SANs can offer greater storage isolation through the use of a dedicated network. This makes directly accessing stored data difficult and forces all access attempts to operate against a server’s restricted applications and interfaces.

Exam Essentials

Comprehend network zones. A network zone is an area of a network designed for a specific purpose, such as internal use or external use. Network zones are logical and/or physical divisions or segments of a LAN that allow for supplementary layers of security and control.

Understand DMZs. A demilitarized zone (DMZ) is an area of a network that is designed specifically for public users to access. The DMZ is a buffer network between the public untrusted Internet and the private trusted LAN. Often a DMZ is deployed through the use of a multihomed firewall.

Understand extranets. An extranet is an intranet that functions as a DMZ for business-to-business transactions. Extranets let organizations offer specialized services to business partners, suppliers, distributors, or customers.

Understand intranets. An intranet is a private network or private LAN.

Know about guest networks. A guest zone or a guest network is an area of a private network designated for use by temporary authorized visitors.

Understand honeynets. A honeynet consists of two or more networked honeypots used in tandem to monitor or re-create larger, more diverse network arrangements.

Be aware of NAT. NAT converts the IP addresses of internal systems found in the headers of network packets into public IP addresses. It hides the IP addressing scheme and structure from external entities. NAT serves as a basic firewall by only allowing incoming traffic that is in response to an internal system’s request. It reduces expense by requiring fewer leased public IP addresses, and it allows the use of private IP addresses (RFC 1918).

Understand PAT. Closely related to NAT is port address translation (PAT), which allows a single public IP address to host multiple simultaneous communications from internal clients. Instead of mapping IP addresses on a one-to-one basis, PAT uses the Transport layer port numbers to host multiple simultaneous communications across each public IP address.
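
A PAT translation table can be sketched as follows. The class name, the starting port of 40000, and the addresses are hypothetical; real devices also track protocol, timeouts, and remote endpoints.

```python
import itertools


class PortAddressTranslator:
    """Map many internal (IP, port) pairs onto one public IP using distinct ports."""

    def __init__(self, public_ip: str, first_port: int = 40000):
        self.public_ip = public_ip
        self._ports = itertools.count(first_port)
        self._outbound = {}  # (internal_ip, internal_port) -> public_port
        self._inbound = {}   # public_port -> (internal_ip, internal_port)

    def translate_outbound(self, internal_ip: str, internal_port: int):
        """Return the (public_ip, public_port) that replaces the internal source."""
        key = (internal_ip, internal_port)
        if key not in self._outbound:
            public_port = next(self._ports)
            self._outbound[key] = public_port
            self._inbound[public_port] = key
        return self.public_ip, self._outbound[key]

    def translate_inbound(self, public_port: int):
        """Return the internal endpoint for a reply, or None if unsolicited."""
        return self._inbound.get(public_port)


pat = PortAddressTranslator("203.0.113.10")
print(pat.translate_outbound("192.168.1.20", 51000))
print(pat.translate_outbound("192.168.1.21", 51000))
print(pat.translate_inbound(45000))  # no mapping exists, so nothing is forwarded
```

Two internal clients using the same source port still receive distinct public ports, and inbound traffic with no matching entry has nowhere to go, which is the basic firewall effect described for NAT.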

Know RFC 1918. RFC 1918 defines the ranges of private IP addresses that aren’t routable across the Internet: 10.0.0.0–10.255.255.255 (10.0.0.0 /8 subnet), 1 Class A range; 172.16.0.0–172.31.255.255 (172.16.0.0 /12 subnet), 16 Class B ranges; and 192.168.0.0–192.168.255.255 (192.168.0.0 /16 subnet), 256 Class C ranges.
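
The ranges above can be checked with Python's standard `ipaddress` module. The helper name `is_rfc1918` is hypothetical; the three networks are the ones listed in the text.

```python
import ipaddress

# The three private ranges defined by RFC 1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """Return True if the IPv4 address falls inside an RFC 1918 private range."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in RFC1918_NETWORKS)


# Count the classful blocks contained in the second and third ranges.
class_b_blocks = list(RFC1918_NETWORKS[1].subnets(new_prefix=16))
class_c_blocks = list(RFC1918_NETWORKS[2].subnets(new_prefix=24))

print(is_rfc1918("172.31.255.255"), is_rfc1918("172.32.0.1"))
print(len(class_b_blocks), len(class_c_blocks))
```

Explicit networks are used rather than the module's `is_private` attribute, because that attribute also covers other reserved ranges (such as loopback) that RFC 1918 does not define.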

Understand network segmentation. Network segmentation involves controlling traffic among networked devices. Logical network segmentation can be imposed with switches using VLANs, or through other traffic-control means, including MAC addresses, IP addresses, physical ports, TCP or UDP ports, protocols, or application filtering, routing, and access control management.

Comprehend VLANs. Switches are often used to create virtual LANs (VLANs)—logical creations of subnets out of a single physical network. VLANs are used to logically segment a network without altering its physical topology. They are easy to implement, have little administrative overhead, and are a hardware-based solution.

Understand virtualization. Virtualization technology is used to host one or more OSs within the memory of a single host computer. Related issues include snapshots, patch compatibility, host availability/elasticity, security control testing, and sandboxing.

Understand VPNs. A virtual private network (VPN) is a communication tunnel between two entities across an intermediary network. In most cases, the intermediary network is an untrusted network, such as the Internet, and therefore the communication tunnel is also encrypted.

Know VPN protocols. PPTP, L2TP, OpenVPN, and IPSec are VPN protocols.

Understand PPTP. Point-to-Point Tunneling Protocol (PPTP) is based on PPP, is limited to IP traffic, and uses TCP port 1723. PPTP supports PAP, SPAP, CHAP, EAP, and MS-CHAP v.1 and v.2.

Know L2TP. Layer 2 Tunneling Protocol (L2TP) is based on PPTP and L2F, supports any LAN protocol, uses UDP port 1701, and often uses IPSec for encryption.

Understand OpenVPN. OpenVPN is based on TLS (formerly SSL) and provides an easy-to-configure but robustly secured VPN option.

Realize the importance of security device placement. When designing the layout and structure of a network, it is important to consider the placement of security devices and related technology. The goal of planning the architecture and organization of the network infrastructure is to maximize security while minimizing downtime, compromises, or other interruptions to productivity.

Understand software-defined networking. Software-defined networking (SDN) is a unique approach to network operation, design, and management. SDN aims at separating the infrastructure layer (hardware and hardware-based settings) from the control layer (network services of data transmission management).

3.3 Given a scenario, implement secure systems design.

Any effective security infrastructure is built following the guidelines of a security policy and consists of secure systems. A secure system must be planned and developed with security not just as a feature but as a central core concept. This section discusses some of the important design concepts that contribute to secure systems.

Hardware/firmware security

Security is an integration of both hardware/firmware components and software elements. This section looks at several hardware and firmware security technologies.

FDE/SED

Full-disk encryption (FDE) or whole-disk encryption is often used to provide protection for an OS, its installed applications, and all locally stored data. FDE encrypts all of the data on a storage device with a single master symmetric encryption key. Anything written to the encrypted storage device, including standard files, temporary files, cached data, memory swapped data, and even the remnants of deletion and the contents of slack space, is encrypted when FDE is implemented.

However, whole-disk encryption provides reasonable protection only when the system is fully powered off. If a system is accessed by a hacker while it’s active, there are several ways around hard drive encryption. These include a FireWire direct memory access (DMA) attack, malware stealing the encryption key out of memory, slowing down memory-decay rates with liquid nitrogen, or even just user impersonation. The details of these attacks aren’t important for this exam. However, you should know that whole-disk encryption is only a partial security control.

To maximize the defensive strength of whole-disk encryption, you should use a long, complex passphrase to unlock the system on bootup. This passphrase shouldn’t be written down or used on any other system or for any other purpose. Whenever the system isn’t actively in use, it should be powered down (hibernation is fine, but not sleep mode) and physically locked against unauthorized access or theft. Hard drive encryption should be viewed as a delaying tactic, rather than as a true prevention of access to data stored on the hard drive.
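
The text does not describe how a boot passphrase becomes key material. One common approach, assumed here purely for illustration, is to stretch the passphrase with a key-derivation function such as PBKDF2. The iteration count, salt size, and function name below are hypothetical choices, not recommendations.

```python
import hashlib
import os

ITERATIONS = 200_000  # illustrative work factor, not a recommendation from the text


def derive_unlock_key(passphrase: str, salt: bytes) -> bytes:
    """Stretch a boot passphrase into a 256-bit key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, ITERATIONS, dklen=32
    )


salt = os.urandom(16)  # stored alongside the encrypted volume; it is not secret
key = derive_unlock_key("a long, complex passphrase used nowhere else", salt)
print(len(key))
```

Each guess costs an attacker the full derivation work, so a long, complex passphrase makes offline guessing far more expensive, which fits the view of disk encryption as a delaying tactic.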

Hard drive encryption can be provided by a software solution, as discussed previously, or through a hardware solution. One option is self-encrypting drives (SED). Some hard drive manufacturers offer hard drive products that include onboard hardware-based encryption services. However, most of these solutions are proprietary and don’t disclose their methods or algorithms, and some have been cracked with relatively easy hacks.

Using a trusted software encryption solution can be a cost-effective and secure choice. But realize that no form of hard drive encryption, hardware- or software-based, is guaranteed protection against all possible forms of attack.

USB encryption is usually related to USB storage devices, which can include both USB-connected hard drives as well as USB thumb drives. Some USB device manufacturers include encryption features in their products. These often have an autorun tool that is used to gain access to encrypted content once the user has been authenticated. An example of an encrypted USB device is an IronKey.

If encryption features aren’t provided by the manufacturer of a USB device, you can usually add them through a variety of commercial or open-source solutions. One of the best-known, respected, and trusted open-source solutions is VeraCrypt (Figure 3.10), the revised and secure replacement for its abandoned predecessor, TrueCrypt. This tool can be used to encrypt files, folders, partitions, drive sections, or whole drives, whether internal, external, or USB.

FIGURE 3.10 VeraCrypt encryption dialog box

TPM

The trusted platform module (TPM) is both a specification for a cryptoprocessor and the chip in a mainboard supporting this function. A TPM chip is used to store and process cryptographic keys for a hardware-supported/implemented hard drive encryption system. Generally, a hardware implementation rather than a software-only implementation of hard drive encryption is considered more secure.

When TPM-based whole-disk encryption is in use, the user/operator must supply a password or physical USB token device to the computer to authenticate and allow the TPM chip to release the hard drive encryption keys into memory. Although this seems similar to a software implementation, the primary difference is that if the hard drive is removed from its original system, it can’t be decrypted. Only with the original TPM chip can an encrypted hard drive be decrypted and accessed. With software-only hard drive encryption, the hard drive can be moved to a different computer without any access or use limitations.
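
The binding between an encrypted drive and its original TPM chip can be modeled with a toy example. The Python sketch below is a deliberate simplification and is not how a real TPM is programmed; the class `ToyTPM` and its method `release_key` are hypothetical names, and the keyed hash stands in for the chip's internal key handling. It shows only the core idea: the key depends on a secret that never leaves the chip, so the same passphrase on a different system produces a different, useless key.

```python
import hashlib
import hmac
import os


class ToyTPM:
    """Toy stand-in for a TPM: holds a secret that is never exported."""

    def __init__(self) -> None:
        # Unique per chip; in this model there is no method that returns it.
        self._chip_secret = os.urandom(32)

    def release_key(self, passphrase: str) -> bytes:
        """Return the disk key for this passphrase on this chip only."""
        return hmac.new(
            self._chip_secret, passphrase.encode("utf-8"), hashlib.sha256
        ).digest()


original_system = ToyTPM()
other_system = ToyTPM()  # models moving the drive to a different computer

disk_key = original_system.release_key("boot passphrase")
```

On the original system, the correct passphrase always releases the same key. On the second system, or with a wrong passphrase, the result does not match, which mirrors the statement that the drive cannot be decrypted away from its original TPM chip.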

HSM

A hardware security module (HSM) is a special-purpose cryptoprocessor used for a wide range of potential functions. The functions of an HSM can include accelerated cryptography operations, managing and storing encryption keys, offloading digital signature verification, and improving authentication. An HSM can be a chip on a motherboard, an external peripheral, a network-attached device, or an add-on or extension adapter or card (which is inserted into a device, such as a router, firewall, or rack-mounted server blade). Often an HSM includes tamper protection technology in order to prevent or discourage abuse and misuse even if physical access is obtained by the attacker. One example of an HSM is the TPM (see the previous section).

UEFI/BIOS

Basic input/output system (BIOS) is the basic low-end firmware or software embedded in the hardware’s electrically erasable programmable read-only memory (EEPROM). The BIOS identifies and initiates the basic system hardware components, such as the hard drive, optical drive, video card, and so on, so that the bootstrapping process of loading an OS can begin. This essential system function is a target of hackers and other intruders because it may provide an avenue of attack that isn’t secured or monitored.

The BIOS, along with the complementary metal-oxide-semiconductor (CMOS) settings and device firmware, is becoming a common target of physical hackers as well as of malicious code. If hackers or malware can alter the BIOS, CMOS, or firmware of a system, they may be able to bypass security features or initiate otherwise prohibited activities.

Protection against BIOS attacks requires physical access control for all sensitive or valuable hardware. Additionally, strong malware protection, such as current antivirus software, is important.

A replacement or improvement to BIOS is Unified Extensible Firmware Interface (UEFI). UEFI provides support for all of the same functions as BIOS with many improvements, such as support for larger hard drives (especially for booting), faster boot times, enhanced security features, and even the ability to use a mouse when making system changes (BIOS was limited to keyboard control only). UEFI also includes a CPU-independent architecture, a flexible pre-OS environment with networking support, secure boot (see the next section), and backward and forward compatibility. It also runs CPU-independent drivers (for system components, drive controllers, and hard drives).

Secure boot and attestation

Secure boot is a feature of UEFI that aims to protect the operating environment of the local system by preventing the loading or installing of device drivers or an operating system that is not signed by a preapproved digital certificate. Secure boot thus protects systems against a range of low-level or boot-level malware, such as certain rootkits and backdoors. Secure boot ensures that only drivers and operating systems that pass attestation (the verification and approval process accomplished through the validation of a digital signature) are allowed to be installed and loaded on the local system.
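
The approve-or-reject decision at the heart of secure boot can be sketched in a few lines. Real secure boot validates digital signatures against certificates held in firmware; because Python's standard library offers no public-key signature verification, the sketch below substitutes a list of approved SHA-256 digests. The function names, the sample images, and the `approved` and `revoked` sets are all hypothetical and exist only for illustration.

```python
import hashlib


def digest(image: bytes) -> str:
    """Return the SHA-256 digest of a boot image as a hex string."""
    return hashlib.sha256(image).hexdigest()


def may_load(image: bytes, approved: set[str], revoked: set[str]) -> bool:
    """Allow an image only if it is approved and has not been revoked."""
    d = digest(image)
    return d in approved and d not in revoked


# Hypothetical boot images; real ones would be bootloader or driver binaries.
trusted_bootloader = b"vendor bootloader v1"
tampered_bootloader = b"vendor bootloader v1 + rootkit"

approved = {digest(trusted_bootloader)}
revoked: set[str] = set()
```

With these values, `may_load(trusted_bootloader, approved, revoked)` returns `True`, while the tampered image is rejected because even a small change produces a different digest. Adding a digest to `revoked` blocks an image that was previously approved.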

Although the security benefits of secure boot attestation are important and beneficial to all systems, there is one important drawback to consider: if a system has a locked UEFI secure boot mechanism, it may prevent the system’s owner from replacing the operating system (such as switching from Windows to Linux) or block them from using third-party vendor hardware that has not been approved by the motherboard vendor (which means the third-party vendor did not pay a fee to have their product evaluated and their drivers signed by the motherboard vendor). If there is any possibility of using alternate OSs or changing hardware components of a system, be sure to use a motherboard from a vendor that will provide unlock codes/keys to the UEFI secure boot.

Supply chain

Supply chain security starts from the recognition that most computers are not built by a single entity. In fact, most of the companies we know of as computer manufacturers, such as Dell, HP, Asus, Acer, and Apple, mostly perform the final assembly rather than manufacture all of the individual components. Often the CPU, memory, drive controllers, hard drives, SSDs, and video cards are created by other third-party vendors. Even these vendors are unlikely to have mined their own metals or processed the oil for plastics or etched the silicon of their chips. Thus, any finished system has a long and complex history, known as its supply chain, that enabled it or caused it to come into existence.

A secure supply chain is one in which all of the vendors or links in the chain are reliable, trustworthy, reputable organizations that disclose their practices and security requirements to their business partners (although not necessarily to the public). Each link in the chain is responsible and accountable to the next link in the chain. Each hand-off, from raw materials to refined products to electronics parts to computer components to finished product, is properly organized, documented, managed, and audited. The goal of a secure supply chain is to ensure that the finished product is of sufficient quality, meets performance and operational goals, and provides stated security mechanisms, and that at no point in the process was any element subjected to unauthorized or malicious manipulation or sabotage.
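
One way to picture documented and auditable hand-offs is a tamper-evident log in which each entry is hashed together with the entry before it. The Python sketch below is an illustrative assumption and not an industry-standard format; the record fields, the `GENESIS` value, and the function names are hypothetical. It shows how altering any earlier hand-off record becomes detectable during an audit.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(record: dict, previous_hash: str) -> str:
    """Hash a hand-off record together with the hash of the prior entry."""
    payload = json.dumps(record, sort_keys=True) + previous_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_log(records: list[dict]) -> list[dict]:
    """Chain the records so each entry commits to everything before it."""
    log, previous = [], GENESIS
    for record in records:
        current = entry_hash(record, previous)
        log.append({"record": record, "hash": current})
        previous = current
    return log


def verify_log(log: list[dict]) -> bool:
    """Recompute every hash; any altered record breaks the chain."""
    previous = GENESIS
    for entry in log:
        if entry_hash(entry["record"], previous) != entry["hash"]:
            return False
        previous = entry["hash"]
    return True


handoffs = [
    {"from": "chip fabricator", "to": "board maker", "item": "drive controller"},
    {"from": "board maker", "to": "system assembler", "item": "mainboard"},
]
log = build_log(handoffs)
```

An unmodified log passes `verify_log`. If any field of an earlier record is changed after the fact, verification fails, which is the property an auditor relies on.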

Hardware root of trust

A hardware root of trust is based or founded on a secure supply chain. The security of a system is ultimately dependent upon the reliability and security of the components that make up the computer as well as the process it went through to be crafted from original raw materials. If the hardware that is supporting an application has security flaws or a backdoor, or fails to provide proper HSM-based cryptography functions, then the software is unable to accommodate those failings. Only if the root of the system—the hardware itself—is reliable and trustworthy can the system as a whole be considered trustworthy. System security is a chain of many interconnected links; if any link is weak, then the whole chain is untrustworthy.

EMI/EMP

Electromagnetic interference (EMI) is the noise caused by electricity when used by a machine or when flowing along a conductor. Copper network cables and power cables can pick up environmental noise or EMI, which can corrupt the network communications or disrupt the electricity feeding equipment. An electromagnetic pulse (EMP) is an instantaneous high-level EMI, which can damage most electrical devices in the vicinity.

EMI shielding is important for network-communication cables as well as for power-distribution cables. EMI shielding can include upgrading from UTP (unshielded twisted pair) to STP (shielded twisted pair), running cables in shielding conduits, or using fiber-optic networking cables. EMI-focused shielding can also provide modest protection against EMPs, although that depends on the strength and distance of the EMP compared to the device or cable. Generally, these two types of cables (networking and electrical) should be run in separate conduits and be isolated and shielded from each other. The strong magnetic fields produced by power-distribution cables can interfere with network-communication cables.

Operating systems

Any secure system design requires the use of a secure operating system. Although no operating system is perfectly secure, the selection of the right operating system for a particular task or function can reduce the ongoing burden of security management.

Types

There are many ways to categorize or group operating systems. This section includes several specific examples of OS types, labels, and groupings. In all cases, the selection of an OS should focus on features and capabilities without overlooking the native security benefits. Although security can often be added through software installation, native security features are often superior.

Network

A network operating system (NOS) is any OS that has native networking capabilities and was designed with networking as a means of communication and data transfer. Most OSs today are NOSs, but not all OSs are network capable. There are still many situations where a stand-alone or isolated OS is preferred for function, stability, and security.

Server

A server is a form of NOS. It is a resource host that offers data, information, or communication functions to other requesting systems. Servers are the computer systems on a network that support and maintain the network. They require greater physical and logical security protections than workstations because they represent a concentration of assets, value, and capabilities. End users should be restricted from physically accessing servers, and they should have no reason to log on directly to a server—they should interact with servers over a network through their workstations.

Workstation

A workstation is another form of NOS. A workstation is a resource consumer. A workstation is typically where an end user will log in and then from the workstation reach out across the network to servers to access resources and retrieve data. Workstations are also called clients, terminals, or end-user computers. Access to workstations should be restricted to authorized personnel. One method to accomplish this is to use strong authentication, such as two-factor authentication with a smartcard and a password or PIN.

Appliance

An appliance OS is yet another variation of NOS. An appliance NOS is a stripped-down or single-purpose OS that is typically found on network devices, such as firewalls, routers, switches, wireless access points, and VPN gateways. An appliance NOS is designed around a primary set of functions or tasks and usually does not support any other capabilities.

Kiosk

A kiosk OS is either a stand-alone OS or a variation of NOS. A kiosk OS is designed for end-user use and access. The end user might be an employee of an organization or anyone from the general public. A kiosk OS is locked down so that only preauthorized software products and functions are enabled. A kiosk OS will revert to the locked-down mode each time it is rebooted, and some will even revert if they experience a flaw, crash, error, or any attempt to perform an unauthorized command or launch an unapproved executable. The goal and purpose of a kiosk OS is to provide a robust information service to a user while preventing accidental or intentional misuse of the system. A kiosk OS is often deployed in a public location and thus must be configured to implement security effectively for that situation.
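
The lock-down and revert behavior of a kiosk can be modeled as an allow-list with an automatic reset. The Python sketch below is a toy model and not the mechanism of any real kiosk OS; the class `KioskSession`, its methods, and the program names are hypothetical. Only preauthorized programs may launch, and any attempt to run something else returns the session to its clean, locked-down state.

```python
# Hypothetical set of preauthorized programs for this kiosk.
ALLOWED_PROGRAMS = frozenset({"catalog_browser", "ticket_printer"})


class KioskSession:
    """Toy model of a kiosk that permits only approved programs."""

    def __init__(self) -> None:
        self.running: list[str] = []

    def launch(self, program: str) -> bool:
        """Start an approved program, or reset on any unapproved request."""
        if program not in ALLOWED_PROGRAMS:
            self.reset()
            return False
        self.running.append(program)
        return True

    def reset(self) -> None:
        """Revert to the locked-down baseline with nothing running."""
        self.running.clear()
```

A call such as `KioskSession().launch("catalog_browser")` succeeds, whereas a request for an unlisted program is refused and clears the session.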

Mobile OS