Chapter 4

Identity and Access Management

COMPTIA SECURITY+ EXAM OBJECTIVES COVERED IN THIS CHAPTER INCLUDE THE FOLLOWING:

4.1 Compare and contrast identity and access management concepts.

- Identification, authentication, authorization and accounting (AAA)

- Multifactor authentication

  - Something you are

  - Something you have

  - Something you know

  - Somewhere you are

  - Something you do

- Federation

- Single sign-on

- Transitive trust

4.2 Given a scenario, install and configure identity and access services.

- LDAP

- Kerberos

- TACACS+

- CHAP

- PAP

- MSCHAP

- RADIUS

- SAML

- OpenID Connect

- OAuth

- Shibboleth

- Secure token

- NTLM

4.3 Given a scenario, implement identity and access management controls.

- Access control models

  - MAC

  - DAC

  - ABAC

  - Role-based access control

  - Rule-based access control

- Physical access control

  - Proximity cards

  - Smart cards

- Biometric factors

  - Fingerprint scanner

  - Retinal scanner

  - Iris scanner

  - Voice recognition

  - Facial recognition

  - False acceptance rate

  - False rejection rate

  - Crossover error rate

- Tokens

  - Hardware

  - Software

  - HOTP/TOTP

- Certificate-based authentication

  - PIV/CAC/smart card

  - IEEE 802.1x

- File system security

- Database security

4.4 Given a scenario, differentiate common account management practices.

- Account types

  - User account

  - Shared and generic accounts/credentials

  - Guest accounts

  - Service accounts

  - Privileged accounts

- General Concepts

  - Least privilege

  - Onboarding/offboarding

  - Permission auditing and review

  - Usage auditing and review

  - Time-of-day restrictions

  - Recertification

  - Standard naming convention

  - Account maintenance

  - Group-based access control

  - Location-based policies

- Account policy enforcement

  - Credential management

  - Group policy

  - Password complexity

  - Expiration

  - Recovery

  - Disablement

  - Lockout

  - Password history

  - Password reuse

  - Password length

The Security+ exam will test your knowledge of identity and access management concepts, since they relate to secure networked systems both for the home office and in corporate environments. To pass the test and be effective in implementing security, you need to understand the basic concepts and terminology related to network security as detailed in this chapter. You will also need to be familiar with when and why to use various tools and technologies, given a scenario.

4.1 Compare and contrast identity and access management concepts.

Identity is the concept of uniquely naming and referencing each individual user, program, and system component in order to authenticate, authorize, and audit for the purposes of holding users accountable for their actions. This is also known as “identification followed by authentication.” Access management is the concept of defining and enforcing what can and cannot be done by each identified subject. This is also known as authorization.

Identification, authentication, authorization and accounting (AAA)

It’s important to understand the differences between identification, authentication, and authorization. Although these concepts are similar and are essential to all security mechanisms, they’re distinct and must not be confused.

Identification and authentication are commonly used as a two-step process, but they’re distinct activities. Identification is the assertion of an identity. This needs to occur only once per authentication or access process. Any one of the common authentication factors can be employed for identification. Once identification has been performed, the authentication process must take place. Authentication is the act of verifying or proving the claimed identity. The issue is both checking that such an identity exists in the known accounts of the secured environment and ensuring that the human claiming the identity is the correct, valid, and authorized human to use that specific identity.

A username is the most common form of identification. It’s any name used by a subject in order to be recognized as a valid user of a system. Some usernames are derived from a person’s actual name, some are assigned, and some are chosen by the subject. Using a consistent username across multiple systems can help establish a consistent reputation across those platforms. However, it’s extremely important to keep all authentication factors unique between locations, even when duplicating a username.

Authentication can take many forms, most commonly of one-, two-, or multifactor configurations. The more unique factors used in an authentication process, the more resilient and reliable the authentication itself becomes. If all the proffered authentication factors are valid and correct for the claimed identity, it’s then assumed that the accessing person is who they claim to be. Then the permission- and action-restriction mechanisms of authorization take over to control the activities of the user from that point forward.

Identity proofing—that is, authentication—typically takes the form of one or more of the following authentication factors:

- Something you know (such as a password, code, PIN, combination, or secret phrase)

- Something you have (such as a smartcard, token device, or key)

- Something you are (such as a fingerprint, a retina scan, or voice recognition; often referred to as biometrics, discussed later in this chapter)

- Somewhere you are (such as a physical or logical location); this can be seen as a subset of something you know.

- Something you do (such as your typing rhythm, a secret handshake, or a private knock). This can be seen as a subset of something you know.

The authentication factor of something you know is also known as a Type 1 factor, something you have is also known as a Type 2 factor, and something you are is also known as a Type 3 factor. The factors of somewhere you are and something you do are not given Type labels.

When only one authentication factor is used, this is known as single-factor authentication (or, rarely, one-factor authentication).

Authorization is the mechanism that controls what a subject can and can’t do, access, use, or view. Authorization is commonly called access control or access restriction. Most systems operate from a default authorization stance of deny by default or implicit deny. Then all needed access is granted by exception to individual subjects or to groups of subjects.

Once a subject is authenticated, its access must be authorized. The process of authorization ensures that the requested activity or object access is possible, given the rights and privileges assigned to the authenticated identity (which we refer to as the subject from this point forward). Authorization indicates who is trusted to perform specific operations. In most cases, the system evaluates an access-control matrix that compares the subject, the object, and the intended activity. If the specific action is allowed, the subject is authorized; if it’s disallowed, the subject isn’t authorized.

Keep in mind that just because a subject has been identified and authenticated, that doesn’t automatically mean it has been authorized. It’s possible for a subject to log on to a network (in other words, be identified and authenticated) and yet be blocked from accessing a file or printing to a printer (by not being authorized to perform such activities). Most network users are authorized to perform only a limited number of activities on a specific collection of resources. Identification and authentication are “all-or-nothing” aspects of access control. Authorization occupies a wide range of variations between all and nothing for each individual subject or object in the environment. Examples would include a user who can read a file but not delete it, or print a document but not alter the print queue, or log on to a system but not be allowed to access any resources.
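The subject/object/activity comparison described above, together with the deny-by-default stance, can be sketched as a tiny access-control matrix in Python. This is a minimal illustration under assumed data: the subjects, objects, and action names are hypothetical, and production systems typically hold this information in ACLs or policy stores rather than in a dictionary.

```python
# Hypothetical access-control matrix: (subject, object) -> set of permitted actions.
ACCESS_MATRIX: dict[tuple[str, str], set[str]] = {
    ("alice", "report.docx"): {"read"},
    ("alice", "printer1"): {"print"},
    ("bob", "report.docx"): {"read", "write", "delete"},
    ("bob", "print-queue"): {"manage"},
}


def is_authorized(subject: str, obj: str, action: str) -> bool:
    """Allow an action only if the matrix explicitly grants it (implicit deny)."""
    return action in ACCESS_MATRIX.get((subject, obj), set())


if __name__ == "__main__":
    # alice is already authenticated, yet she may read the report but not delete it.
    print(is_authorized("alice", "report.docx", "read"))    # True
    print(is_authorized("alice", "report.docx", "delete"))  # False
    # alice may print, but has no entry for managing the print queue.
    print(is_authorized("alice", "printer1", "print"))      # True
    print(is_authorized("alice", "print-queue", "manage"))  # False
```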

Multifactor authentication

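Multifactor authentication is the requirement that a user must provide two or more authentication factors in order to prove their identity. Three categories of authentication factors are generally recognized: something you know, something you have, and something you are. Somewhere you are and something you do are additional factors that are sometimes used alongside them.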

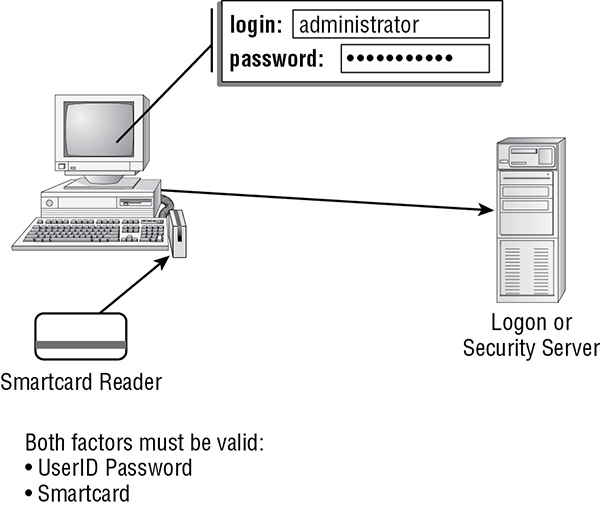

When two different authentication factors are used, the strategy is known as two-factor authentication (see Figure 4.1). If two or more authentication factors are used but some of them are of the same type, it is known as strong authentication. Using different factors (whether two or three) is always a more secure solution than any number of factors of the same authentication type, because with two or more different factors, two or more different types of attacks must succeed to capture all of the authentication factors. With strong authentication, even if 10 passwords are required, only a single type of password-stealing attack needs to be waged to break through the authentication security.

FIGURE 4.1 Two-factor authentication
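To make the distinction between two-factor, multifactor, and strong authentication concrete, the following sketch labels a list of factor categories using the definitions given above. The short category keys (`know`, `have`, `are`, `where`, `do`) are our own shorthand, not standard identifiers.

```python
from collections import Counter

# Shorthand keys (our own) for the factor categories named in this chapter.
FACTOR_TYPES = {"know", "have", "are", "where", "do"}


def classify(factors: list[str]) -> str:
    """Label an authentication scheme using this chapter's terminology."""
    if not factors:
        raise ValueError("at least one factor is required")
    unknown = set(factors) - FACTOR_TYPES
    if unknown:
        raise ValueError(f"unknown factor type(s): {sorted(unknown)}")
    if len(factors) == 1:
        return "single-factor"
    counts = Counter(factors)
    if any(n > 1 for n in counts.values()):
        return "strong"          # two or more factors, some sharing a type
    if len(counts) == 2:
        return "two-factor"      # exactly two different factor types
    return "multifactor"         # three or more different factor types


if __name__ == "__main__":
    print(classify(["know", "have"]))  # two-factor (password + token)
    print(classify(["know", "know"]))  # strong (password + PIN)
```

Under these definitions, adding a PIN to a password-plus-token login is labeled strong rather than three-factor, because two of the factors share the something-you-know type.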

Authentication factors are the concepts used to verify the identity of a subject.

Something you are

Something you are is often known as biometrics. Examples include fingerprints, a retina scan, or voice recognition. See “Biometric factors” later in the chapter for more information.

Something you have

Something you have requires the use of a physical object. Examples include a smartcard, token device, or key.

Something you know

Something you know involves information you can recall from memory. Examples include a password, code, PIN, combination, or secret phrase.

Somewhere you are

Somewhere you are is a location-based verification. Examples include a physical location or a logical address, such as a domain name, an IP address, or a MAC address.
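A logical-location check of the kind described above can be approximated by testing whether a client's IP address falls inside a set of approved networks. This sketch uses Python's standard `ipaddress` module; the network ranges are hypothetical placeholders.

```python
import ipaddress

# Hypothetical trusted networks; substitute your organization's real ranges.
TRUSTED_NETWORKS = [
    ipaddress.ip_network("10.20.0.0/16"),     # e.g., headquarters LAN
    ipaddress.ip_network("192.168.50.0/24"),  # e.g., branch office
]


def from_trusted_location(client_ip: str) -> bool:
    """Return True if the client address lies inside any trusted network."""
    addr = ipaddress.ip_address(client_ip)
    # Membership is simply False (not an error) when IPv4 and IPv6 versions differ.
    return any(addr in net for net in TRUSTED_NETWORKS)


if __name__ == "__main__":
    print(from_trusted_location("10.20.7.15"))   # True
    print(from_trusted_location("203.0.113.9"))  # False
```

Because source addresses can be spoofed or relayed through proxies and VPNs, a check like this is best treated as one factor among several rather than as proof of identity on its own.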

Something you do

Something you do involves some skill or action you can perform. Examples include solving a puzzle, a secret handshake, or a private knock. This concept can also include activities that are biometrically measured and semi-voluntary, such as your typing rhythm, patterns of system use, or mouse behaviors.
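Typing rhythm is usually measured from the timing between keystrokes. The following sketch compares the gaps between key presses in a login attempt against an enrolled sample. The scoring method (mean absolute difference) and the 40 ms tolerance are assumptions for illustration; real keystroke-dynamics products are likely to use richer statistical models.

```python
from statistics import mean


def key_intervals(timestamps_ms: list[float]) -> list[float]:
    """Turn key-press timestamps (milliseconds) into gaps between presses."""
    return [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def rhythm_matches(enrolled_ms: list[float], attempt_ms: list[float],
                   tolerance_ms: float = 40.0) -> bool:
    """Accept if the attempt's typing rhythm is close to the enrolled sample.

    Both lists hold press times for the same passphrase. The score is the mean
    absolute difference between corresponding gaps; the tolerance is hypothetical.
    """
    enrolled, attempt = key_intervals(enrolled_ms), key_intervals(attempt_ms)
    if not enrolled or len(enrolled) != len(attempt):
        return False
    return mean(abs(e - a) for e, a in zip(enrolled, attempt)) <= tolerance_ms


if __name__ == "__main__":
    enrolled = [0, 120, 260, 380, 520]
    print(rhythm_matches(enrolled, [0, 130, 250, 390, 515]))   # True  (mean gap difference 16.25 ms)
    print(rhythm_matches(enrolled, [0, 300, 450, 900, 1000]))  # False (mean gap difference 140 ms)
```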

Federation

Federation or federated identity is a means of linking a subject’s accounts from several sites, services, or entities into a single account. It’s a means to accomplish single sign-on. Federated solutions often implement trans-site authentication using SAML (see the later section “SAML”).

Federation creates authentication trusts between systems in order to facilitate single sign-on benefits. Federation trusts can be one-way or two-way and can be transitive or nontransitive. In a one-way trust, as when system A is trusted by system B, users from A can access resources in both A and B systems, but users from B can only access resources in B. In a two-way trust, such as between system A and system B, users from either side can access resources on both sides. If three systems are trust-linked using two-way nontransitive trusts, such as A links to B which links to C, then A resources are accessible by users from A and B, B resources are accessible by users from A, B, and C, and C resources are accessible by users from B and C. If three systems are trust-linked using two-way transitive trusts, then all users from all three systems can access resources from all three systems.
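Which users can reach which resources under one-way, two-way, transitive, and nontransitive trusts is easy to get backward. The following sketch models each trust as a directed (trusting, trusted) pair and computes, for every system, the set of systems whose resources its users can access. The function names are hypothetical; the results reproduce the A, B, and C examples in the paragraph above, and the transitive case also covers longer chains such as A-B-C-D.

```python
def reachable(trusts: list[tuple[str, str]], transitive: bool) -> dict[str, set[str]]:
    """Map each system to the systems whose resources its users can access.

    Each pair is (trusting, trusted): ("B", "A") means B trusts A, so users
    from A may use resources in B. A two-way trust is simply both pairs.
    """
    systems = {s for pair in trusts for s in pair}
    reach = {s: {s} for s in systems}          # users can always use their own system
    for trusting, trusted in trusts:
        reach[trusted].add(trusting)
    if transitive:
        # Follow trust chains until no system gains any new reach.
        changed = True
        while changed:
            changed = False
            for s in systems:
                extended = set().union(*(reach[t] for t in reach[s]))
                if not extended <= reach[s]:
                    reach[s] |= extended
                    changed = True
    return reach


def two_way(*links: tuple[str, str]) -> list[tuple[str, str]]:
    """Expand two-way links into the pair of one-way trusts they represent."""
    return [pair for a, b in links for pair in ((a, b), (b, a))]


if __name__ == "__main__":
    chain = two_way(("A", "B"), ("B", "C"))
    print(sorted(reachable(chain, transitive=False)["A"]))  # ['A', 'B']
    print(sorted(reachable(chain, transitive=True)["A"]))   # ['A', 'B', 'C']
```

Note that `reachable()` is keyed by the users' home system, whereas the paragraph above describes each system's resources and who may use them; the two views are inverses of each other.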

Single sign-on

Single sign-on (SSO) means that once a user (or other subject) is authenticated into the realm, they don’t need to reauthenticate to access resources on any realm entity. (Realm is another term for domain or network.) This allows users to access all the resources, data, applications, and servers they need to perform their work tasks with a single authentication procedure. SSO eliminates the need for users to manage multiple usernames and passwords, because only a single set of logon credentials is required. Some examples of single sign-on include Kerberos, SESAME, NetSP, KryptoKnight, directory services, thin clients, and scripted access. Kerberos is one of the SSO solution options you should know about for the Security+ exam; it is discussed in the later section “Kerberos.”

Transitive trust

Transitive trust or transitive authentication is a security concern when it allows a block or restriction to be bypassed by going through a third party. A transitive trust is a linked relationship between entities (such as systems, networks, or organizations) where trust from one endpoint crosses over or through middle entities to reach the farthest linked endpoint. For example, if four systems are transitive trust linked, such as A-B-C-D, then entities in A can access resources in B, C, and D thanks to the nature of a transitive trust. It can be thought of as a shared trust.

A real-world example of transitive trust occurs when you order a pizza. The cook makes the pizza and passes it on to the assistant, who packages the pizza in a box. The assistant then hands the pizza to the delivery person, the delivery person brings it to your location to hand it to your roommate, and then your roommate brings the pizza into the kitchen, where you grab a slice to eat. Since you trust each link in the chain, you are experiencing transitive trust.

Keep in mind that transitive trust can be both a beneficial feature of linked systems as well as a source of risk or compromise. If the cook placed pineapple, mushrooms, or anchovies on the pizza that you did not want or order, then the trust is broken.

Attackers often seek out transitive trust situations in order to bypass defenses and blockades against a direct approach. For example, the company firewall prevents the attacker from launching a direct attack against the internal database server. The attacker instead targets a worker who uses social networks. After friending the target, the attacker sends the worker a link that leads to a malware infector. If the worker clicks on the link, their system may become infected by remote-control malware. Then, when the worker takes the compromised system back into the office, it provides the attacker with an access pathway to attack the internal database. Thus, the transitive trust of attacker through social network to worker to company network allowed a security breach to take place.

Exam Essentials

Understand identification. Identification is the act of claiming an identity using just one authentication factor.

Define authentication. Authentication is the act of proving a claimed identity using one or more authentication factors.

Understand multifactor authentication. Multifactor authentication is the requirement that users must provide two or more authentication factors in order to prove their identity.

Know about strong authentication. Strong authentication occurs when two or more authentication factors are used but some of them are of the same type.

Understand two-factor authentication. Two-factor authentication occurs when two different authentication factors are used.

Comprehend federation. Federation or federated identity is a means of linking a subject’s accounts from several sites, services, or entities into a single account.

Understand single sign-on. Single sign-on means that once a user (or other subject) is authenticated into a realm, they need not reauthenticate to access resources on any realm entity.

Know about transitive trust. Transitive trust or transitive authentication is a security concern when it allows a block or restriction to be bypassed by going through a third party. A transitive trust is a linked relationship between entities where trust from one endpoint crosses over or through middle entities to reach the farthest linked endpoint.

4.2 Given a scenario, install and configure identity and access services.

Authentication is the mechanism by which users prove their identity to a system. It’s the process of proving that a subject is the valid user of an account. Often, the authentication process involves a simple username and password, but more complex authentication factors or credential-protection mechanisms can be used to provide stronger protection for the logon and account-verification processes. The authentication process requires that the subject provide an identity and then proof of that identity.

Many systems and technologies are involved with identification, authentication, and access control. Several of these are discussed in this section.

LDAP

Please see the “LDAPS” section in Chapter 2, “Technologies and Tools,” for an introduction to this technology.

LDAP is present in most private networks that use directory services, because it is the primary foundation of network directory services such as Active Directory. LDAP is used to grant access to information about available resources in the network. The ability to view or search network resources can be limited through the use of authorization restrictions.
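As a rough illustration of how authorization restrictions can limit what a directory search returns, the following toy model filters a search by object class and by the subtrees a group may view. It is not an LDAP client: the directory entries, group names, and visibility rules are hypothetical, and real directory servers express such restrictions through their own access-control configuration.

```python
# Hypothetical directory entries, keyed by distinguished name (DN).
DIRECTORY = {
    "cn=printer1,ou=devices,dc=example,dc=com": {"objectClass": "printer"},
    "cn=fileserver,ou=servers,dc=example,dc=com": {"objectClass": "server"},
    "cn=payroll-db,ou=finance,dc=example,dc=com": {"objectClass": "server"},
}

# Hypothetical authorization rule: the subtrees each group is allowed to view.
VISIBLE_SUBTREES = {
    "staff": ["ou=devices,dc=example,dc=com", "ou=servers,dc=example,dc=com"],
    "finance": ["ou=finance,dc=example,dc=com"],
}


def search(group: str, object_class: str) -> list[str]:
    """Return DNs of the requested class that this group is authorized to see."""
    allowed = VISIBLE_SUBTREES.get(group, [])   # unknown groups see nothing
    return sorted(
        dn for dn, attrs in DIRECTORY.items()
        if attrs["objectClass"] == object_class
        and any(dn.endswith("," + base) for base in allowed)
    )


if __name__ == "__main__":
    print(search("staff", "server"))    # ['cn=fileserver,ou=servers,dc=example,dc=com']
    print(search("finance", "server"))  # ['cn=payroll-db,ou=finance,dc=example,dc=com']
```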

Kerberos

Early authentication transmission mechanisms sent logon credentials from the client to the authentication server in clear text. Unfortunately, this solution is vulnerable to eavesdropping and interception, thus making the security of the system suspect. What was needed was a solution that didn’t transmit the logon credentials in a form that could be easily captured, extracted, and reused.

One such method for providing protection for logon credentials is Kerberos, a trusted third-party authentication protocol that was originally developed at MIT under Project Athena. The current version of Kerberos in widespread use is version 5. Kerberos is used to authenticate network principals (subjects) to other entities on the network (objects, resources, and servers). Kerberos is platform independent; however, some OSs require special configuration adjustments to support true interoperability (for example, Windows Server with Unix).

Kerberos is a centralized authentication solution. The core element of a Kerberos solution is the key distribution center (KDC), which is responsible for verifying the identity of principals and granting and controlling access within a network environment through the use of secure cryptographic keys and tickets.

Kerberos is a trusted third-party authentication solution because the KDC acts as a third party in the communications between a client and a server. Thus, if the client trusts the KDC and the server trusts the KDC, then the client and server can trust each other.

Kerberos is also a single sign-on solution. Single sign-on means that once a user (or other subject) is authenticated into the realm, they need not reauthenticate to access resources on any realm entity. (A realm is the network protected under a single Kerberos implementation.)

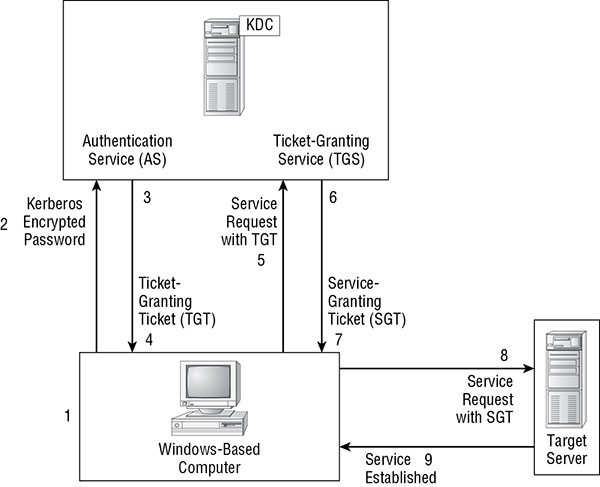

The basic process of Kerberos authentication is as follows:

- The subject provides logon credentials.

- The Kerberos client system encrypts the password and transmits the protected credentials to the KDC.

- The KDC verifies the credentials and then creates a ticket-granting ticket (TGT)—a hashed form of the subject’s password with the addition of a time stamp that indicates a valid lifetime. The TGT is encrypted and sent to the client.

- The client receives the TGT. At this point, the subject is an authenticated principal in the Kerberos realm.

- The subject requests access to resources on a network server. This causes the client to request a service ticket (ST) from the KDC.

- The KDC verifies that the client has a valid TGT and then issues an ST to the client. The ST includes a time stamp that indicates its valid lifetime.

- The client receives the ST.

- The client sends the ST to the network server that hosts the desired resource.

- The network server verifies the ST. If it’s verified, it initiates a communication session with the client. From this point forward, Kerberos is no longer involved.

Figure 4.2 shows the Kerberos authentication process.

FIGURE 4.2 The Kerberos authentication process

The Kerberos authentication method helps ensure that logon credentials aren’t compromised while in transit from the client to the server. The inclusion of a time stamp in the tickets ensures that expired tickets can’t be reused. This prevents replay and spoofing attacks against Kerberos.
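The ticket flow and time-stamp checks described above can be sketched with Python's standard library. In this simplified model the client proves it knows a password-derived key instead of transmitting the password (loosely mirroring Kerberos pre-authentication), and the TGT and service ticket are HMAC-protected records with expiry times. Real Kerberos encrypts tickets, uses its own key-derivation functions, and has a different message format; the lifetimes, skew window, and all names here are hypothetical.

```python
import hashlib
import hmac
import json

TGT_LIFETIME = 8 * 3600   # seconds; hypothetical policy values
ST_LIFETIME = 3600
MAX_SKEW = 300            # hypothetical allowed clock difference, in seconds


def derive_key(password: str, principal: str) -> bytes:
    """Hypothetical stand-in for Kerberos string-to-key (salted with the principal)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), principal.encode(), 10_000)


def _mac(key: bytes, data: bytes) -> str:
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def _seal(key: bytes, body: dict) -> dict:
    """Bind a ticket body to a key. Real Kerberos encrypts; an HMAC only protects integrity."""
    return {"body": body, "mac": _mac(key, json.dumps(body, sort_keys=True).encode())}


def _open(key: bytes, ticket: dict, now: float) -> dict:
    """Check a sealed ticket's integrity and time stamp, then return its body."""
    expected = _mac(key, json.dumps(ticket["body"], sort_keys=True).encode())
    if not hmac.compare_digest(expected, ticket["mac"]):
        raise PermissionError("ticket forged or altered")
    if now >= ticket["body"]["expires"]:
        raise PermissionError("ticket expired")   # stale tickets cannot be reused
    return ticket["body"]


def client_proof(user_key: bytes, timestamp: float) -> str:
    """Client proves it knows the password-derived key; the password never travels."""
    return _mac(user_key, repr(timestamp).encode())


class KDC:
    def __init__(self, tgs_key: bytes, user_keys: dict, service_keys: dict):
        self.tgs_key = tgs_key            # known only to the KDC
        self.user_keys = user_keys        # principal -> password-derived key
        self.service_keys = service_keys  # service -> key shared with that server

    def issue_tgt(self, principal: str, timestamp: float, proof: str, now: float) -> dict:
        key = self.user_keys.get(principal)
        if key is None or abs(now - timestamp) > MAX_SKEW:
            raise PermissionError("authentication failed")
        if not hmac.compare_digest(client_proof(key, timestamp), proof):
            raise PermissionError("authentication failed")
        return _seal(self.tgs_key, {"principal": principal, "expires": now + TGT_LIFETIME})

    def issue_st(self, tgt: dict, service: str, now: float) -> dict:
        principal = _open(self.tgs_key, tgt, now)["principal"]   # TGT must be valid
        return _seal(self.service_keys[service],
                     {"principal": principal, "service": service, "expires": now + ST_LIFETIME})


def server_accept(service_key: bytes, service: str, st: dict, now: float) -> str:
    """Network server verifies the service ticket; after this, Kerberos steps aside."""
    body = _open(service_key, st, now)
    if body["service"] != service:
        raise PermissionError("ticket issued for a different service")
    return body["principal"]


if __name__ == "__main__":
    now = 1_700_000_000.0
    alice_key = derive_key("correct horse", "alice")
    kdc = KDC(b"tgs-secret", {"alice": alice_key}, {"fileserver": b"fs-secret"})
    tgt = kdc.issue_tgt("alice", now, client_proof(alice_key, now), now)
    st = kdc.issue_st(tgt, "fileserver", now)
    print(server_accept(b"fs-secret", "fileserver", st, now))  # alice
```

Unlike a real ticket, the body here is readable by the client; the HMAC only ensures that any change to the principal or expiry time is detected.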

Kerberos supports mutual authentication (client and server identities are proven to each other). It’s scalable and thus able to manage authentication for large networks. Being centralized, Kerberos helps reduce the overall time involved in accessing resources within a network.

TACACS+

Terminal Access Controller Access Control System (TACACS) is another example of an AAA server. TACACS is described in RFC 1492. It uses TCP port 49 and UDP port 49; TACACS+ runs over TCP port 49. XTACACS was the first proprietary Cisco revision of the RFC form. TACACS+ was the second major revision by Cisco of this service into yet another proprietary version. None of these three versions of TACACS is compatible with the others. TACACS and XTACACS are utilized on many older systems but have been all but replaced by TACACS+ on current systems.

TACACS+ differs from RADIUS in many ways. One major difference is that RADIUS combines authentication and authorization (the first two As in AAA), whereas TACACS+ separates the two, allowing for more flexibility in protocol selection. For instance, with TACACS+, an administrator may use Kerberos as an authentication mechanism while choosing something entirely different for authorization. With RADIUS these options are more limited.
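The separation of authentication from authorization described above can be sketched as an AAA service whose two functions are independent, swappable components, with a simple accounting log as the third A. This is a conceptual model only, not the TACACS+ wire protocol; the class, back ends, and sample users are hypothetical.

```python
import hmac
from typing import Callable

Authenticator = Callable[[str, str], bool]   # (user, secret) -> identity proven?
Authorizer = Callable[[str, str], bool]      # (user, request) -> request permitted?


class ModularAAA:
    """Conceptual model: authentication and authorization are separate, pluggable parts."""

    def __init__(self, authenticate: Authenticator, authorize: Authorizer):
        self.authenticate = authenticate
        self.authorize = authorize
        self.accounting: list[tuple[str, str, bool]] = []   # record of activity

    def handle(self, user: str, secret: str, request: str) -> bool:
        if not self.authenticate(user, secret):
            self.accounting.append((user, "login", False))
            return False
        allowed = self.authorize(user, request)
        self.accounting.append((user, request, allowed))
        return allowed


# Hypothetical back ends; either could be swapped without touching the other.
_PASSWORDS = {"netadmin": "s3cret"}          # demo only; never store plaintext passwords
_PERMITTED = {"netadmin": {"show running-config"}}


def password_backend(user: str, secret: str) -> bool:
    expected = _PASSWORDS.get(user)
    return expected is not None and hmac.compare_digest(expected.encode(), secret.encode())


def policy_backend(user: str, request: str) -> bool:
    return request in _PERMITTED.get(user, set())


if __name__ == "__main__":
    aaa = ModularAAA(password_backend, policy_backend)
    print(aaa.handle("netadmin", "s3cret", "show running-config"))  # True
    print(aaa.handle("netadmin", "s3cret", "reload"))               # False: authenticated, not authorized
```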

Scenarios where TACACS+ would be used include any remote access situation where Cisco equipment is present. Cisco hardware is required in order to operate a TACACS+ AAA service for authenticating local or remote systems and users.

CHAP

Challenge Handshake Authentication Protocol (CHAP) is an authentication protocol used over a wide range of Point-to-Point Protocol (PPP) connections (including dial-up, ISDN, DSL, and cable) as a means to provide a secure transport mechanism for logon credentials. It was developed as a secure alternative and replacement for PAP, which transmitted authentication credentials in clear text.

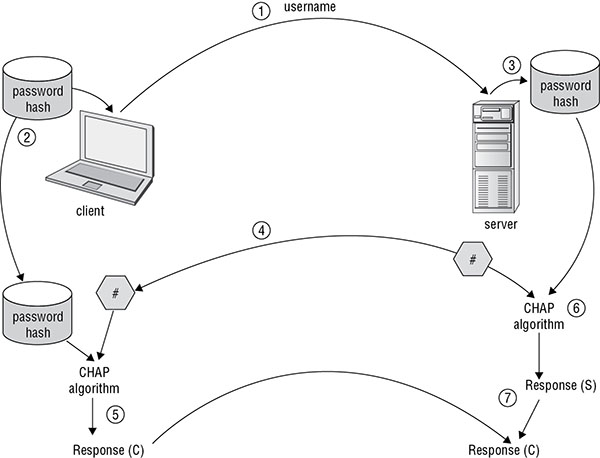

CHAP uses an initial authentication-protection process to support logon and a periodic midstream reverification process to ensure that the subject/client is still who they claim to be. The process is as follows:

- The user is prompted for their name and password. Only the username is transmitted to the server.

- The authentication process performs a one-way hash function on the subject’s password.

- The authentication server compares the username to its accounts database to verify that it is a valid existing account.

- If there is a match, the server transmits a random challenge number to the client.

- The client uses the password hash and the challenge number as inputs to the CHAP algorithm to produce a response, which is then transmitted back to the server.

- The server retrieves the password hash from the user account stored in the account database and then, using it along with the challenge number, computes the expected response.

- The server compares the response it calculated to that received from the client.

If everything matches, the subject is authenticated and allowed to communicate over the connection link. Figure 4.3 shows the CHAP authentication process.

Once the client is authenticated, CHAP periodically sends a challenge to the client at random intervals. The client must compute the correct response to the issued challenge; otherwise, the connection is automatically severed. This post-authentication verification process ensures that the authenticated session hasn’t been hijacked.

FIGURE 4.3 CHAP authentication
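The challenge-response exchange above can be sketched using the response calculation defined in RFC 1994: an MD5 digest over a one-octet identifier, the secret, and the challenge. The text describes the server-side value as a password hash; RFC 1994 simply calls it a shared secret, and this sketch treats whatever bytes the server stores as that secret. The class name and sample values are hypothetical.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """RFC 1994 response value: MD5 over the identifier octet, secret, and challenge."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


class ChapServer:
    """Issues challenges and checks responses, at logon and again mid-session."""

    def __init__(self, user_secrets: dict[str, bytes]):
        self.user_secrets = user_secrets   # username -> secret the server also knows
        self.identifier = 0

    def challenge(self) -> tuple[int, bytes]:
        self.identifier = (self.identifier + 1) % 256   # identifier is one octet
        return self.identifier, os.urandom(16)          # fresh random challenge each time

    def verify(self, username: str, identifier: int, challenge: bytes, response: bytes) -> bool:
        secret = self.user_secrets.get(username)
        if secret is None:                               # not a valid account
            return False
        return hmac.compare_digest(chap_response(identifier, secret, challenge), response)


if __name__ == "__main__":
    server = ChapServer({"alice": b"shared-secret"})
    for label in ("logon", "periodic re-check"):
        ident, chal = server.challenge()
        resp = chap_response(ident, b"shared-secret", chal)    # computed by the client
        print(label, server.verify("alice", ident, chal, resp))  # True each time
```

Only the identifier, challenge, and digest cross the link, so an eavesdropper never sees the secret itself, although MD5 is considered weak by today's standards.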

Whenever a CHAP or CHAP-like authentication system is supported, use it. The only other authentication option that is more secure than CHAP is mutual certificate–based authentication.

PAP

Password Authentication Protocol (PAP) is an insecure plain-text password-logon mechanism. PAP was an early plain old telephone service (POTS) authentication mechanism. PAP is mostly unused today, because it was superseded by CHAP and numerous EAP add-ons. Don’t use PAP—it transmits all credentials in plain text.
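The risk of PAP is easy to see at the byte level. The following sketch builds a PAP Authenticate-Request packet using the field layout from RFC 1334 (Code, Identifier, Length, then the length-prefixed peer ID and password), leaving out the surrounding PPP framing. The username and password are hypothetical.

```python
import struct


def pap_authenticate_request(identifier: int, peer_id: bytes, password: bytes) -> bytes:
    """Build a PAP Authenticate-Request (RFC 1334 layout), minus the PPP framing."""
    data = bytes([len(peer_id)]) + peer_id + bytes([len(password)]) + password
    # Header: Code 1 (Authenticate-Request), Identifier, total Length (big-endian).
    return struct.pack("!BBH", 1, identifier, 4 + len(data)) + data


if __name__ == "__main__":
    print(pap_authenticate_request(7, b"alice", b"hunter2"))
    # b'\x01\x07\x00\x12\x05alice\x07hunter2'  <- the password is plainly visible
```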

MSCHAP

MSCHAP is Microsoft’s customized or proprietary version of CHAP. The original MSCHAPv1 was integrated into the earliest versions of Windows but was dropped with the release of Windows Vista. MSCHAPv2 was originally added to Windows NT 4.0 through Service Pack 4, as well as Windows 95 and Windows 98 with network update packages. MSCHAPv1 and MSCHAPv2 were both available on Windows NT 4.0 through Windows XP and Windows Server 2003. MSCHAP is often associated with the Point-to-Point Tunneling Protocol (PPTP), a VPN protocol, and Protected Extensible Authentication Protocol (PEAP). One of the key differences between MSCHAPv2 and CHAP is support for mutual authentication rather than client-only authentication. MSCHAPv2 uses the NTLM password hash as DES key material to compute its challenge response, and this DES-based exchange is weak and easily cracked. Thus, MSCHAP should generally be avoided and not used in any scenario where other, stronger authentication options are available.

RADIUS

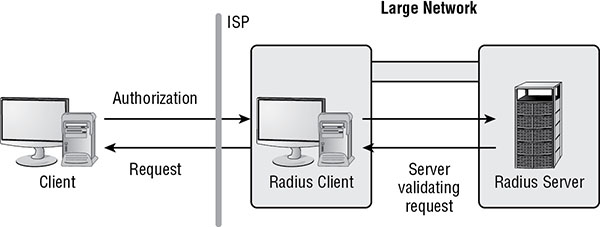

Remote Authentication Dial-In User Service (RADIUS) is a centralized authentication system. It’s often deployed to provide an additional layer of security for a network. By offloading authentication of remote access clients from domain controllers or even the remote access server itself to a dedicated authentication server such as RADIUS, you can provide greater protection against intrusion for the network as a whole. RADIUS can be used with any type of remote access, including dial-up, virtual private network (VPN), and terminal services.

RADIUS is known as an AAA server. AAA stands for authentication, authorization (or access control), and accounting (sometimes referred to as auditing). RADIUS provides for distinct AAA functions for remote-access clients separate from those of normal local domain clients. RADIUS isn’t the only AAA server, but it’s the most widely deployed.

When RADIUS is deployed, it’s important to understand the terms RADIUS client and RADIUS server, both of which are depicted in Figure 4.4. The RADIUS server is obviously the system hosting the RADIUS service. However, the RADIUS client is the remote-access server (RAS), not the remote system connecting to RAS. As far as the remote-access client is concerned, it sees only the RAS, not the RADIUS server. Thus, the RAS is the RADIUS client. RADIUS is a tried-and-true AAA solution, but alternatives include the Cisco proprietary TACACS+ as well as the direct RADIUS competitor Diameter.

FIGURE 4.4 The RADIUS client manages the local connection and authenticates against a central server.
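The relationship between the RADIUS client (the RAS) and the RADIUS server rests on a shared secret that both are configured with. One place this shows up is the User-Password attribute: RFC 2865 (section 5.2) has the RADIUS client hide the password by XORing it with MD5 digests of the shared secret and the 16-octet Request Authenticator, so that only the server can recover it. The following sketch follows that procedure; the secret, authenticator, and password values are hypothetical.

```python
import hashlib


def hide_user_password(password: bytes, secret: bytes, authenticator: bytes) -> bytes:
    """RADIUS client (RAS) side: obscure User-Password as RFC 2865 section 5.2 describes."""
    if len(password) > 128:
        raise ValueError("User-Password is limited to 128 octets")
    padded = password + b"\x00" * (-len(password) % 16)   # null-pad to a 16-octet multiple
    hidden, previous = b"", authenticator                # chain starts with Request Authenticator
    for i in range(0, len(padded), 16):
        keystream = hashlib.md5(secret + previous).digest()
        block = bytes(p ^ k for p, k in zip(padded[i:i + 16], keystream))
        hidden += block
        previous = block                                  # next block chains on this output
    return hidden


def reveal_user_password(hidden: bytes, secret: bytes, authenticator: bytes) -> bytes:
    """RADIUS server side: undo the hiding using the same shared secret."""
    password, previous = b"", authenticator
    for i in range(0, len(hidden), 16):
        block = hidden[i:i + 16]
        keystream = hashlib.md5(secret + previous).digest()
        password += bytes(c ^ k for c, k in zip(block, keystream))
        previous = block
    return password.rstrip(b"\x00")                       # strip the padding added above
```

Only the User-Password attribute is protected this way; the other attributes of the request are not encrypted.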

RADIUS can be used in any remote-access authentication scenario. RADIUS is platform independent and thus does not require any specific vendor’s hardware. Most RADIUS products from any vendor are interoperable with all others. RADIUS is a widely supported AAA service and can be used as the authentication system for most implementations of IEEE 802.1x (see the section “IEEE 802.1x,” later in this chapter), including the ENT authentication option on wireless access points.

SAML

Security Assertion Markup Language (SAML) is an open-standard data format based on XML for the purpose of supporting the exchange of authentication and authorization details between systems, services, and devices. SAML was designed to address the difficulties related to the implementation of single sign-on (SSO) over the web. SAML’s solution is based on a trusted third-party mechanism in which the subject or user (the principal) is verified through a trusted authentication service (the identity provider) in order for the target server or resource host (the service provider) to accept the identity of the visitor. SAML doesn’t dictate the authentication credentials that must be used, so it’s flexible and potentially compatible with future authentication technologies.
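The identity provider and service provider roles described above can be sketched from the service provider's side: parse an assertion, confirm that it came from a trusted identity provider and is within its validity window, then read the asserted identity. The sample assertion, issuer URL, and function names are hypothetical, and the sketch deliberately skips XML signature validation, which a real service provider must perform (Python's built-in XML parser is also not hardened against maliciously constructed input).

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

NS = {"saml": "urn:oasis:names:tc:SAML:2.0:assertion"}
TRUSTED_IDPS = {"https://idp.example.org"}   # hypothetical identity provider(s) the SP trusts

SAMPLE_ASSERTION = (
    '<saml:Assertion xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion"'
    ' ID="_example1" Version="2.0" IssueInstant="2024-01-01T12:00:00Z">'
    "<saml:Issuer>https://idp.example.org</saml:Issuer>"
    "<saml:Subject><saml:NameID>alice@example.org</saml:NameID></saml:Subject>"
    '<saml:Conditions NotBefore="2024-01-01T12:00:00Z" NotOnOrAfter="2024-01-01T12:05:00Z"/>'
    "</saml:Assertion>"
)


def _parse_time(value: str) -> datetime:
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def accept_assertion(xml_text: str, now: datetime) -> str:
    """Service-provider check: return the asserted NameID if the assertion is acceptable.

    Sketch only: real service providers must also validate the XML signature,
    audience, and recipient before trusting an assertion.
    """
    root = ET.fromstring(xml_text)
    issuer = root.findtext("saml:Issuer", namespaces=NS)
    if issuer not in TRUSTED_IDPS:
        raise PermissionError(f"untrusted identity provider: {issuer}")
    conditions = root.find("saml:Conditions", NS)
    if conditions is None:
        raise PermissionError("assertion has no validity window")
    not_before, not_after = conditions.get("NotBefore"), conditions.get("NotOnOrAfter")
    if not_before is None or not_after is None:
        raise PermissionError("assertion has an incomplete validity window")
    if not _parse_time(not_before) <= now < _parse_time(not_after):
        raise PermissionError("assertion is outside its validity window")
    return root.findtext("saml:Subject/saml:NameID", namespaces=NS)
```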

SAML is used to create and support federation of authentication. The success of SAML can be seen online wherever you are offered the ability to use an alternate site’s authentication to access an account. For example, if you visit feedly.com and click the “Get started for free” button, a dialog box appears (Figure 4.5) where you can select to link a new Feedly account to an existing account at Google, Facebook, Twitter, Windows, Evernote, or your own company’s enterprise authentication if you don’t want to use a unique email and password for Feedly.

FIGURE 4.5 An example of a SAML/OAuth single sign-on interface

SAML should be used in any scenario where linking of systems, services, or sites is desired but the authentication solutions are not already compatible. SAML allows for the creation of interfaces between authentication solutions in order to allow federation.

OpenID Connect

OpenID Connect is an Internet-based single sign-on solution. It operates over the OAuth protocol (see next section) and can be used in relation to Web services as well as smart device apps. The purpose or goal of OpenID Connect is to simplify the process by which applications are able to identify and verify users. For more detailed information and programming guidance, please see https://openid.net/connect/.
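OpenID Connect identifies users by issuing an ID token, a JSON Web Token whose claims include the issuer (`iss`), the user's subject identifier (`sub`), the intended audience (`aud`), and an expiration time (`exp`). The following sketch shows the claim checks an application might perform after receiving one. Signature verification is intentionally omitted, and the issuer and client ID values used with it are hypothetical.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode base64url text, restoring the padding that JWTs strip."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def check_id_token_claims(id_token: str, issuer: str, client_id: str, now: float) -> dict:
    """Check the core claims of a JWT-format OpenID Connect ID token.

    Sketch only: a real relying party must first verify the token's signature
    with the provider's published keys; that step is deliberately omitted here.
    """
    _header, payload, _signature = id_token.split(".")
    claims = json.loads(b64url_decode(payload))
    if claims.get("iss") != issuer:
        raise PermissionError("token came from an unexpected issuer")
    audience = claims.get("aud")
    audiences = audience if isinstance(audience, list) else [audience]
    if client_id not in audiences:
        raise PermissionError("token was issued for a different application")
    if now >= claims.get("exp", 0):
        raise PermissionError("token has expired")
    return claims   # claims["sub"] is the provider's stable identifier for the user
```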

OpenID Connect should be considered for use as an authentication solution for any online application or web service. It is a simple solution that may be robust enough for your soon-to-be virally popular digital app.

OAuth

OAuth is an open standard for access delegation, and it is widely used as the basis for authentication federation. OAuth is widely used by websites, web services, and mobile device applications. OAuth is an easy means of supporting federation of authentication between primary and secondary systems. A primary system could be Google, Facebook, or Twitter, and secondary systems are anyone else. OAuth is often implemented using SAML. OAuth can be recognized as being in use when you are offered the ability to use an existing authentication from a primary service as the authentication at a secondary one (see Figure 4.5 earlier).

OAuth can be used in any scenario where a new, smaller secondary entity wants to employ the access tokens from primary entities as a means of authentication. In other words, OAuth is used to implement authentication federation.
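In the common authorization code flow, the secondary application redirects the user to the primary service with a request like the one built below. This sketch uses parameter names from the OAuth 2.0 specification (RFC 6749) and its PKCE extension (RFC 7636); the endpoint URL, client ID, and redirect URI are hypothetical placeholders for values a real application would obtain when registering with the primary service.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlencode

# Hypothetical registration values for a secondary (relying) application.
AUTHORIZE_ENDPOINT = "https://accounts.example.com/authorize"
CLIENT_ID = "example-feed-reader"
REDIRECT_URI = "https://reader.example.net/callback"


def pkce_challenge(code_verifier: str) -> str:
    """PKCE S256 method: BASE64URL(SHA-256(verifier)) without padding."""
    digest = hashlib.sha256(code_verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def build_authorization_request(scope: str) -> tuple[str, str, str]:
    """Return (url, state, code_verifier) for the authorization code flow."""
    state = secrets.token_urlsafe(16)           # checked on return to reject forged callbacks
    code_verifier = secrets.token_urlsafe(48)   # 64 characters, within PKCE's 43-128 limit
    params = {
        "response_type": "code",
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": scope,
        "state": state,
        "code_challenge": pkce_challenge(code_verifier),
        "code_challenge_method": "S256",
    }
    return f"{AUTHORIZE_ENDPOINT}?{urlencode(params)}", state, code_verifier
```

After the user approves, the primary service redirects back with a one-time code and the same `state` value; the client checks `state` and then exchanges the code, together with `code_verifier`, for an access token.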

Shibboleth

Shibboleth is another example of an authentication federation and single sign-on solution. Shibboleth is a standards-based open-source solution that can be used for website authentication across the Internet or within private networks. Shibboleth was developed for use by Internet2 and is now available for use in any networking environment, public or private. Shibboleth is based on SAML.

For more information on Shibboleth, please visit https://shibboleth.net/.

Secure token

A secure token is a protected, possibly encrypted authentication data set that proves a particular user or system has been verified through a formal logon procedure. Access tokens include web cookies, Kerberos tickets, and digital certificates. A secure token is an access token that does not leak any information about the subject’s credentials or allow for easy impersonation.
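A token that proves a verified logon without leaking credentials can be sketched with a server-side HMAC key: the token carries only a user ID and an expiry time, and any alteration invalidates its tag. This is a simplified, hypothetical format (signed but not encrypted, so its contents are readable); real systems commonly rely on established formats such as signed cookies, Kerberos tickets, or JWTs.

```python
import hashlib
import hmac
import secrets

SERVER_KEY = secrets.token_bytes(32)   # hypothetical signing key, held only by the server


def issue_token(user_id: str, now: float, lifetime_s: int = 900) -> str:
    """Issue a token naming the user and an expiry time, never any credential."""
    payload = f"{user_id}|{int(now) + lifetime_s}"
    tag = hmac.new(SERVER_KEY, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}|{tag}"


def validate_token(token: str, now: float) -> str:
    """Return the user ID if the token is authentic and unexpired."""
    user_id, expires, tag = token.rsplit("|", 2)
    expected = hmac.new(SERVER_KEY, f"{user_id}|{expires}".encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, tag):
        raise PermissionError("token was forged or altered")
    if now >= int(expires):
        raise PermissionError("token has expired")
    return user_id
```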

A secure token can also refer to a physical authentication device known as a TOTP or HOTP device (see the “HOTP/TOTP” section later in this chapter).

Secure tokens should be considered for use in any private or public authentication scenario. Minimizing the risk of information leakage or impersonation should be a goal of anyone designing, establishing, or managing authentication solutions.

NTLM

New Technology LAN Manager (NTLM) is a password hash storage system used on Microsoft Windows. NTLM exists in two versions. NTLMv1 is a challenge-response protocol system that, using a server-issued random challenge along with the user’s password (in both LM hash and MD4 hash), produces two responses that are sent back to the server (this assumes a password with 14 or fewer characters; otherwise only an MD4 hash-based response is generated). NTLMv2 is also a challenge-response protocol system, but it uses a much more complex process that is based on HMAC-MD5. Both versions of NTLM use a challenge response–based hashing mechanism whose result is nonreversible and thus much more secure than LM hashing. However, password-cracking tools can ultimately reveal NTLMv1 or NTLMv2 passwords if the passwords are relatively short (under 15 characters) and the attacker is given enough processing power and time.
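To make the hashing concrete, the following Python sketch computes the NT hash that Windows stores (MD4 over the UTF-16LE encoding of the password, with no salt) and a simplified NTLMv2-style proof built with HMAC-MD5. MD4 is implemented by hand because many current Python builds no longer expose it through `hashlib`. The response function is a simplification and should be read as an assumption: the real protocol (Microsoft’s MS-NLMP specification) mixes a timestamp and other fields into the client data.

```python
import hashlib
import hmac
import struct

MASK = 0xFFFFFFFF

def _rotl(x: int, n: int) -> int:
    x &= MASK
    return ((x << n) | (x >> (32 - n))) & MASK

def md4(data: bytes) -> bytes:
    """Pure-Python MD4 (RFC 1320)."""
    msg = bytearray(data) + b"\x80"
    msg += b"\x00" * ((56 - len(msg) % 64) % 64)          # pad to 56 mod 64
    msg += struct.pack("<Q", (8 * len(data)) & 0xFFFFFFFFFFFFFFFF)
    h = [0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476]
    for off in range(0, len(msg), 64):
        x = struct.unpack("<16I", bytes(msg[off:off + 64]))
        a, b, c, d = h
        # Round 1: F = (b AND c) OR (NOT b AND d)
        for i in range(16):
            t = a + ((b & c) | (~b & d)) + x[i]
            a, b, c, d = d, _rotl(t, (3, 7, 11, 19)[i % 4]), b, c
        # Round 2: majority function plus constant
        for i, k in enumerate((0, 4, 8, 12, 1, 5, 9, 13, 2, 6, 10, 14, 3, 7, 11, 15)):
            t = a + ((b & c) | (b & d) | (c & d)) + x[k] + 0x5A827999
            a, b, c, d = d, _rotl(t, (3, 5, 9, 13)[i % 4]), b, c
        # Round 3: XOR function plus constant
        for i, k in enumerate((0, 8, 4, 12, 2, 10, 6, 14, 1, 9, 5, 13, 3, 11, 7, 15)):
            t = a + (b ^ c ^ d) + x[k] + 0x6ED9EBA1
            a, b, c, d = d, _rotl(t, (3, 9, 11, 15)[i % 4]), b, c
        h = [(v + w) & MASK for v, w in zip(h, (a, b, c, d))]
    return struct.pack("<4I", *h)

def nt_hash(password: str) -> bytes:
    """The stored NT hash: unsalted MD4 over the UTF-16LE password."""
    return md4(password.encode("utf-16-le"))

def ntlmv2_style_response(password: str, user: str, domain: str,
                          server_challenge: bytes, client_challenge: bytes) -> bytes:
    """Simplified NTLMv2-style proof: the hash is used as a key, never sent."""
    key = hmac.new(nt_hash(password), (user.upper() + domain).encode("utf-16-le"),
                   hashlib.md5).digest()
    return hmac.new(key, server_challenge + client_challenge, hashlib.md5).digest()
```

Because the stored hash is unsalted, the same password produces the same NT hash on every system, which is part of why short passwords fall to brute-force and precomputed attacks.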

LANMAN, or what is typically referred to as LM or LAN Manager, is a legacy storage mechanism developed by Microsoft to store passwords. LM was replaced by NTLM on Windows NT 4.0; it should remain disabled (as it usually is by default) and be avoided on all current versions of Windows.

One of the most significant issues with LM is that it limited passwords to a maximum of 14 characters. Shorter passwords were padded out to 14 characters using null characters. The 14 characters of the password were converted to uppercase and then divided into two seven-character sections. Each seven-character section was then used as a DES encryption key to encrypt the static ASCII string “KGS!@#$%”. The two results were recombined to form the LM hash. Obviously, this system is fraught with problems. Specifically, the process is reversible and not truly a one-way hash, and all passwords are ultimately no stronger than seven characters.
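The preprocessing steps just described can be sketched in Python as follows. DES is not in the Python standard library, so the sketch stops before the encryption step and instead shows the arithmetic behind “no stronger than seven characters.” The ASCII encoding and the 69-character alphabet (printable ASCII minus the 26 lowercase letters, which fold into uppercase) are simplifying assumptions.

```python
def lm_halves(password: str) -> tuple[bytes, bytes]:
    """LM preprocessing only: uppercase, null-pad to 14 bytes, split 7/7.
    In real LM each half then becomes a DES key that encrypts "KGS!@#$%"."""
    if len(password) > 14:
        raise ValueError("passwords longer than 14 characters have no LM form")
    padded = password.upper().encode("ascii").ljust(14, b"\x00")  # ASCII assumed
    return padded[:7], padded[7:]

# Each half can be attacked on its own, so the work adds instead of multiplying.
ALPHABET = 95 - 26                 # assumed alphabet size after case folding
lm_work = 2 * ALPHABET ** 7        # two independent 7-character searches
one_piece_work = ALPHABET ** 14    # a true 14-character search
print(lm_halves("Summer2019!"))
print(f"LM needs {one_piece_work / lm_work:.2e} times less work")
```

Note also that any password of seven or fewer characters yields an all-null second half, so short passwords are recognizable from the hash alone.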

As a user, you can completely avoid LM by using passwords of at least 15 characters. LM has been disabled by default on all versions of Windows since Windows 2000. However, this disabling only addresses the initial request for and the default transmission of LM for the authentication process. The Security Accounts Manager (SAM) still contains an LM equivalent of all passwords with 14 or fewer characters through Windows Vista, at least by default. Windows 7 and later versions of Windows do not even create the LM version of user passwords to store in the user account database by default. Settings are available in the Registry and Group Policy Objects to turn on this backward-compatibility feature.

You should leave LM disabled where it already is and disable it where it isn’t. If you need LM to support a legacy system, you should find a way to upgrade the legacy system rather than continue to use LM. The use of LM is practically equivalent to using only plain text.

NTLM is used in nearly every scenario of Windows-to-Windows authentication. Although it is not the most robust or secure form of authentication, it is secure enough in most circumstances. When NTLM is deemed insufficient or incompatible (such as when connecting to non-Windows systems), then digital certificate-based authentication should be used.

Exam Essentials

Understand Kerberos. Kerberos is a trusted third-party authentication protocol. It uses encryption keys as tickets with time stamps to prove identity and grant access to resources. Kerberos is a single sign-on solution employing a key distribution center (KDC) to manage its centralized authentication mechanism.

Know about TACACS+. TACACS is a centralized remote access authentication solution. It was documented in RFC 1492, and Cisco’s proprietary implementations, XTACACS and now TACACS+, have quickly gained popularity as RADIUS alternatives.

Understand CHAP. The Challenge Handshake Authentication Protocol (CHAP) is an authentication protocol used primarily over dial-up connections (usually PPP) as a means to provide a secure transport mechanism for logon credentials. CHAP uses a one-way hash to protect passwords and periodically reauthenticate clients. A good example of CHAP usage is a point-to-point link between two corporate routers.

Define PAP. Password Authentication Protocol (PAP) is an insecure plain-text password-logon mechanism. PAP was an early plain old telephone service (POTS) authentication mechanism.

Understand MSCHAP. MSCHAP is Microsoft’s customized or proprietary version of CHAP. One of the key differences between MSCHAP and CHAP is that MSCHAP supports mutual authentication, rather than client-only authentication. MSCHAPv2 relies on DES encryption keyed with the NTLM password hash, which is weak and easily cracked.

Comprehend RADIUS. RADIUS is a centralized authentication system. It’s often deployed to provide an additional layer of security for a network.

Understand SAML. Security Assertion Markup Language is an open-standard data format based on XML for the purpose of supporting the exchange of authentication and authorization details between systems, services, and devices.

Know about OpenID Connect. OpenID Connect is an Internet-based single sign-on solution. It operates over the OAuth protocol and can be used in relation to web services as well as smart device apps.

Understand OAuth. OAuth is an open standard for authentication and access delegation (federation). OAuth is widely used by websites/services and mobile device applications.

Define Shibboleth. Shibboleth is another example of an authentication federation and single sign-on solution. Shibboleth is a standards-based, open-source solution that can be used for website authentication across the Internet or within private networks.

Understand secure tokens. A secure token is a protected, possibly encrypted authentication data set that proves a particular user or system has been verified through a formal logon procedure. Access tokens include web cookies, Kerberos tickets, and digital certificates. A secure token is an access token that does not leak any information about the subject’s credentials or allow for easy impersonation.

Know about NTLM. New Technology LAN Manager (NTLM) is a password hash storage system used on Microsoft Windows. It’s a challenge-response protocol system that is nonreversible and thus much more secure than LM hashing. One place where NTLM is frequently used is in Microsoft Active Directory for user logon authentication in lieu of a RADIUS or TACACS solution.

4.3 Given a scenario, implement identity and access management controls.

Authorization is the second element of AAA services, so authorization (access control) is an essential part of security throughout an organization. Understanding the variations and options for identity verification and access control management is important for security management.

Access control models

The mechanism by which users are granted or denied the ability to interact with and use resources is known as access control. Access control is often referred to using the term authorization. Authorization defines the type of access to resources that users are granted—in other words, what users are authorized to do. Authorization is often considered the next logical step immediately after authentication. Authentication is proving your identity to a system or the act of logging on. With proper authorization or access control, a system can properly control access to resources in order to prevent unauthorized access.

There are three common access control methods:

- Mandatory access control (MAC)

- Discretionary access control (DAC)

- Role-based access control (RBAC)

These three models are widely used in today’s IT environments. Familiarity with these models is essential for the Security+ exam.

In most environments, DAC is a sufficient authorization mechanism to use to control and manage a subject’s access to and use of resources. Most operating systems are DAC by default. In government or military environments, where classifications are deemed an essential control mechanism, MAC should be used to directly enforce and restrict access based on a subject’s clearance. RBAC is a potential alternative in many environments, but it is most appropriate in those situations where there is a high rate of employee turnover.

MAC

Mandatory access control (MAC) is a form of access control commonly employed by government and military environments. MAC specifies that access is granted based on a set of rules rather than at the discretion of a user. The rules that govern MAC are hierarchical in nature and are often called sensitivity labels, security domains, or classifications. MAC environments define a few specific security domains or sensitivity levels and then use the associated labels from those domains to impose access control on objects and subjects.

A government or military implementation of MAC typically includes the following five levels (in order from least sensitive to most sensitive):

- Unclassified

- Sensitive but unclassified

- Confidential

- Secret

- Top secret

Objects or resources are assigned sensitivity labels corresponding to one of these security domains. Each specific security domain or level defines the security mechanisms and restrictions that must be imposed in order to provide protection for objects in that domain.

MAC can also be deployed in private sector or corporate business environments. Such cases typically involve the following four security domain levels (in order from least to most sensitive):

- Public

- Sensitive

- Private

- Confidential/Proprietary

The primary purpose of a MAC environment is to prevent disclosure: the violation of the security principle of confidentiality. When an unauthorized user gains access to a secured resource, it is a security violation. Objects are assigned a specific sensitivity label based on the damage that would be caused if disclosure occurred. For example, if a top-secret resource was disclosed, it could cause grave damage to national security.

A MAC environment works by assigning subjects a clearance level and assigning objects a sensitivity label—in other words, everything is assigned a classification marker. The name of the clearance level is the same as the name of the sensitivity label assigned to objects or resources. A person (or other subject, such as a program or a computer system) must have the same or greater assigned clearance level as the resources they wish to access. In this manner, access is granted or restricted based on the rules of classification (that is, sensitivity labels and clearance levels).

MAC is so named because the access control it imposes on an environment is mandatory. Its assigned classifications and the resulting granting and restriction of access can’t be altered by users. Instead, the rules that define the environment and judge the assignment of sensitivity labels and clearance levels control authorization.

MAC isn’t a security environment with very granular control. An improvement to MAC includes the use of need to know: a security restriction in which some objects (resources or data) are restricted unless the subject has a need to know them. The objects that require a specific need to know are assigned a sensitivity label, but they’re compartmentalized from the rest of the objects with the same sensitivity label (in the same security domain). The need to know is a rule in itself, which states that access is granted only to users who have been assigned work tasks that require access to the cordoned-off object. Even if users have the proper level of clearance, without need to know, they’re denied access. “Need to know” is the MAC equivalent of the principle of least privilege from DAC (described in the following section).
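The clearance rule and need to know can be combined into a single read-access check. In this Python sketch the level names come from the government list above, while the compartment names and the `can_read` function are hypothetical; write rules and other MAC refinements are not modeled.

```python
from dataclasses import dataclass

# Government levels from the list above, least to most sensitive.
LEVELS = ["Unclassified", "Sensitive but unclassified", "Confidential", "Secret", "Top secret"]

@dataclass(frozen=True)
class Label:
    level: str
    compartments: frozenset = frozenset()  # need-to-know compartments (hypothetical names)

def can_read(subject: Label, obj: Label) -> bool:
    """Clearance must be at least the object's level AND cover all its compartments."""
    if LEVELS.index(subject.level) < LEVELS.index(obj.level):
        return False  # clearance too low
    return obj.compartments <= subject.compartments  # need to know

project_file = Label("Secret", frozenset({"PROJECT-X"}))
print(can_read(Label("Secret", frozenset({"PROJECT-X"})), project_file))
print(can_read(Label("Top secret"), project_file))  # high clearance, no need to know
```

The second check fails even though Top secret outranks Secret, which is exactly the need-to-know restriction described above.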

DAC

Discretionary access control (DAC) is the form of access control or authorization that is used in most commercial and home environments. DAC is user-directed or, more specifically, controlled by the owner and creators of the objects (resources) in the environment. DAC is identity-based: access is granted or restricted by an object’s owner based on user identity and on the discretion of the object owner. Thus, the owner or creator of an object can decide which users are granted or denied access to their object. To do this, DAC uses ACLs.

An access control list (ACL) is a logical security mechanism attached to every object and resource in the environment. It defines which users are granted or denied the various types of access available based on the object type. Individual user accounts or user groups can be added to an object’s ACL and granted or denied access.

If your user account isn’t granted access through an object’s ACL, then often your access is denied by default (note: not all OSs use a deny-by-default approach). If your user account is specifically granted access through an object’s ACL, then you’re granted the specific level or type of access defined. If your user account is specifically denied access through an object’s ACL, then you’re denied the specific level or type of access defined. In some cases (such as with Microsoft Windows), a Denied setting in an ACL overrides all other settings. Table 4.1 shows an access matrix for a user who is a member of three groups, and the resulting access to specific files within a folder on a network server. As you can see, the presence of the Denied setting overrides any other access granted from another group. Thus, if your membership in one user group grants you write access over an object, but another group specifically denies you write access to the same object, then you’re denied write access to the object.

TABLE 4.1 Cumulative access based on group memberships

| Sales group | User group | Research group | Resulting access | Filename |
| --- | --- | --- | --- | --- |
| Change | Read | None specified | Change | SalesReport.xls |
| Read | Read | Change | Change | ProductDevelopment.doc |
| None specified | Read | Denied | Denied | EmailPolicy.pdf |
| Full control | Denied | None specified | Denied | CustomerContacts.doc |
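The deny-overrides logic behind Table 4.1 can be sketched in a few lines of Python. This is a simplification that treats each entry as a single access level, as the table does; real Windows ACLs evaluate individual permissions (read, write, and so on) separately.

```python
# Access levels used in Table 4.1, weakest to strongest.
LEVELS = ["None specified", "Read", "Change", "Full control"]

def resulting_access(entries: list[str]) -> str:
    """Combine the entries from all of a user's groups for one file."""
    if "Denied" in entries:
        return "Denied"                    # an explicit deny overrides any grant
    return max(entries, key=LEVELS.index)  # otherwise the strongest grant wins

# Rows of Table 4.1: (Sales group, User group, Research group) entries per file.
table = {
    "SalesReport.xls": ["Change", "Read", "None specified"],
    "ProductDevelopment.doc": ["Read", "Read", "Change"],
    "EmailPolicy.pdf": ["None specified", "Read", "Denied"],
    "CustomerContacts.doc": ["Full control", "Denied", "None specified"],
}
for filename, entries in table.items():
    print(filename, resulting_access(entries))
```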

User-assigned privileges are permissions granted or denied on a specific individual user basis. This is a standard feature of DAC-based OSs, including Linux and Windows. All objects in Linux have an owner assigned. The owner (an individual) is granted specific privileges. In Windows, an access control entry (ACE) in an ACL can focus on an individual user to grant or deny permissions on the object.

In a DAC environment, it is common to use groups to assign access to resources in aggregate rather than only on an individual basis. This often results in users being members of numerous groups. In these situations, it is often important to determine the effective permissions for a user. This is accomplished by accumulating all allows or grants of access to a resource, and then subtracting or removing any denials for that resource.
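The “accumulate all allows, then subtract denials” calculation maps directly onto set operations. In this Python sketch the principal names, permission names, and the `ACE` class are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ACE:
    """One access control entry: who it applies to and what it allows or denies."""
    principal: str
    allow: frozenset = frozenset()
    deny: frozenset = frozenset()

def effective_permissions(user: str, groups: set[str], acl: list[ACE]) -> set[str]:
    principals = {user} | groups
    allowed: set[str] = set()
    denied: set[str] = set()
    for ace in acl:
        if ace.principal in principals:
            allowed |= ace.allow  # accumulate every grant
            denied |= ace.deny    # and every denial
    return allowed - denied       # denials are removed last

acl = [
    ACE("Sales", allow=frozenset({"read", "write"})),
    ACE("Research", deny=frozenset({"write"})),
    ACE("alice", allow=frozenset({"delete"})),
]
print(sorted(effective_permissions("alice", {"Sales", "Research"}, acl)))
```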

ABAC

Attribute-based access control (ABAC) is a mechanism for assigning access and privileges to resources through a scheme of attributes or characteristics. The attributes can be related to the user, the object, the system, the application, the network, the service, time of day, or even other subjective environmental concerns. ABAC access is then determined through a set of Boolean logic rules, similar to if-then programming statements, that relate who is making a request, what the object is, what type of access is being sought, and the results the action would cause. ABAC is a dynamic, context-aware authorization scheme that can modify access based on risk profiles and changing environmental conditions (such as system load, latency, whether or not encryption is in use, and whether the requesting system has the latest security patches). ABAC is also known by the terms policy-based access control (PBAC) and claims-based access control (CBAC).
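Here is a minimal Python sketch of ABAC’s if-then style, assuming a deny-by-default policy expressed as Boolean functions over subject, resource, action, and environment attributes. The attribute names and rules are hypothetical examples, not a standard policy language such as XACML.

```python
from dataclasses import dataclass
from datetime import time
from typing import Callable

@dataclass
class Request:
    subject: dict   # who is asking, e.g. {"dept": "finance", "role": "manager"}
    resource: dict  # what the object is, e.g. {"type": "report", "owner_dept": "finance"}
    action: str     # what type of access is sought, e.g. "read"
    env: dict       # context, e.g. {"time": time(10, 0), "encrypted": True, "patched": True}

Rule = Callable[[Request], bool]

POLICY: list[Rule] = [
    # If same-department staff read a report during business hours
    # over an encrypted link from a patched system, then allow.
    lambda r: (r.action == "read"
               and r.resource["type"] == "report"
               and r.subject["dept"] == r.resource["owner_dept"]
               and time(8, 0) <= r.env["time"] <= time(18, 0)
               and r.env["encrypted"] and r.env["patched"]),
    # If a manager edits a resource owned by their department, then allow.
    lambda r: (r.action == "write"
               and r.subject.get("role") == "manager"
               and r.subject["dept"] == r.resource["owner_dept"]),
]

def abac_allows(request: Request, policy: list[Rule] = POLICY) -> bool:
    """Deny by default; allow only if some rule's condition evaluates to True."""
    return any(rule(request) for rule in policy)
```

Changing an environmental attribute, such as the time of day or patch status, changes the decision without touching any user or group assignment.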

Role-based access control

Role-based access control (RBAC) is another strict form of access control. It may be grouped with the nondiscretionary access control methods along with MAC. The rules used for RBAC are basically job descriptions: users are assigned a specific role in an environment, and access to objects is granted based on the necessary work tasks of that role. For example, the role of backup operator may be granted the ability to back up every file on a system to a tape drive. The user given the backup operator role can then perform that function.

RBAC is most suitable for environments with a high rate of employee turnover. It allows a job description or role to remain static even when the user performing that role changes often. It’s also useful in industries prone to privilege creep, such as banking.
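The following Python sketch shows why turnover is easy to handle under RBAC: permissions attach to roles, so replacing the person in a role changes one assignment and leaves the role definition untouched. The backup operator role comes from the example above; the other role names, permission names, and functions are hypothetical.

```python
# Permissions attach to roles, not to individual people.
ROLE_PERMISSIONS = {
    "backup_operator": {"back_up_files"},
    "teller": {"view_account", "post_deposit"},        # hypothetical role
    "loan_officer": {"view_account", "approve_loan"},  # hypothetical role
}

# Which roles each user currently holds.
user_roles: dict[str, set[str]] = {}

def assign_role(user: str, role: str) -> None:
    if role not in ROLE_PERMISSIONS:
        raise KeyError(f"unknown role: {role}")
    user_roles.setdefault(user, set()).add(role)

def revoke_role(user: str, role: str) -> None:
    user_roles.get(user, set()).discard(role)

def is_permitted(user: str, permission: str) -> bool:
    return any(permission in ROLE_PERMISSIONS[r] for r in user_roles.get(user, set()))

# Turnover: Dana leaves and Eli takes over the backup duties.
assign_role("dana", "backup_operator")
revoke_role("dana", "backup_operator")
assign_role("eli", "backup_operator")
print(is_permitted("dana", "back_up_files"), is_permitted("eli", "back_up_files"))
```

Revoking the role also removes every permission that came with it, which is one way RBAC limits privilege creep.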

Rule-based access control

Rule-based access control (RBAC or rule-BAC) is typically used in relation to network devices that filter traffic based on filtering rules, as found on firewalls and routers. Rule-based access control (RBAC) systems enforce rules independent of the user or the resource, as the rules are the rules. If a firewall rule sets a port as closed, then it is closed regardless of who is attempting to access the system. These filtering rules are often called rules, rule sets, filter lists, tuples, or ACLs. Be sure you understand the context of the Security+ exam question before assuming role or rule when you see RBAC.
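A rule-based filter can be sketched as an ordered list of rules checked against traffic attributes only, never against the identity of the user. This Python sketch assumes first-match semantics with a default deny, which many firewalls use but not all; the rules themselves are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional

@dataclass(frozen=True)
class FilterRule:
    action: str                 # "allow" or "deny"
    protocol: str               # "tcp" or "udp"
    dst_port: Optional[int]     # None matches any port
    src_net: str = "0.0.0.0/0"  # source network in CIDR notation

RULES = [
    FilterRule("deny", "tcp", 23),                 # Telnet closed for everyone
    FilterRule("allow", "tcp", 22, "10.0.0.0/8"),  # SSH only from the internal network
    FilterRule("allow", "tcp", 443),               # HTTPS from anywhere
]

def evaluate(protocol: str, src_ip: str, dst_port: int,
             rules: list[FilterRule] = RULES, default: str = "deny") -> str:
    """First matching rule wins; nothing here asks who the user is."""
    for rule in rules:
        if (rule.protocol == protocol
                and rule.dst_port in (None, dst_port)
                and ip_address(src_ip) in ip_network(rule.src_net)):
            return rule.action
    return default
```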

Physical access control

Often overlooked when considering IT security is the need to manage physical access. Physical access controls are needed to restrict physical access violations, whereas logical access controls are needed to restrict logical access violations.

Physical access controls should be implemented in any scenario in which there is a difference in value, risk, or use between one area of a facility and another. Wherever it would make sense to have a locked door, technology-managed physical access controls should be implemented.

Proximity cards

In addition to smart and dumb cards, proximity devices can be used to control physical access. A proximity device or proximity card can be a passive device, a field-powered device, or a transponder. The proximity device is worn or held by the authorized bearer. When it passes a proximity reader, the reader is able to determine who the bearer is and whether they have authorized access. A passive device reflects or otherwise alters the electromagnetic field generated by the reader. This alteration is detected by the reader.

The passive device has no active electronics; it is just a small magnet with specific properties (like antitheft devices commonly found on DVDs). A field-powered device has electronics that activate when the device enters the electromagnetic (EM) field that the reader generates. Such devices generate electricity from an EM field to power themselves (such as card readers that only require the access card be waved within inches of the reader to unlock doors). A transponder device is self-powered and transmits a signal received by the reader. This can occur continuously or only at the press of a button (like a garage door opener or car alarm key fob).

In addition to smart/dumb cards and proximity readers, physical access can be managed with radio frequency identification (RFID) or biometric access-control devices.

Smart cards

See the later section “PIV/CAC/smart card” in the discussion of implementing certificate-based authentication.

Biometric factors

Biometrics is the term used to describe the collection of physical attributes of the human body that can be used as an identification or authentication factor. Biometrics fall into the authentication factor category of something you are: you, as a human, have the element of identification as part of your physical body.

Numerous biometric factors can be considered for identification and authentication purposes. Several of these options are discussed in the following sections.

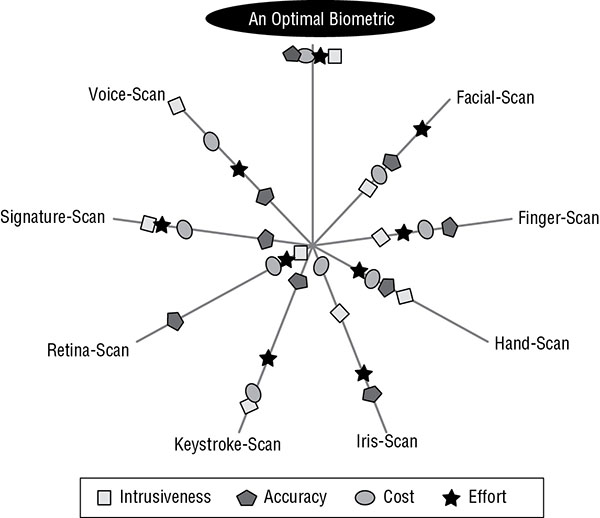

When an organization decides to implement a biometric factor, it is important to evaluate the available options in order to select a biometric solution that is most in line with the organization’s security priorities. One method to accomplish this is to consult a Zephyr analysis chart (Figure 4.6). This type of chart presents the relative strengths and weaknesses of various characteristics of biometric factor options. The specific example shown in Figure 4.6 evaluates eight biometric types on four characteristics (intrusiveness, accuracy, cost, and effort). The security administrator should select a form of biometric based on their organization’s priorities for the evaluated characteristics.

FIGURE 4.6 A Zephyr analysis chart

Once the type of biometric is selected, then a specific make and model needs to be purchased. Finding the most accurate device to implement is accomplished using a crossover error rate analysis (see the section “Crossover error rate” later in this chapter).

Biometric factor devices or biometric scanners should be used as an element in multifactor authentication. Any scenario in which there is sensitive data carries a corresponding need for greater security. One element of stronger security is more robust authentication. Any form of multifactor authentication is stronger than a single-factor authentication solution.

Fingerprint scanner

A fingerprint scanner is used to analyze the visible patterns of skin ridges on the fingers and thumbs of people. Fingerprints are thought to be unique to an individual and have been used for decades in physical security for identification, and are now often used as an electronic authentication factor as well. Fingerprint readers are now commonly used on laptop computers, smartphones, and USB flash drives as a method of identification and authentication. Although fingerprint scanners are common and seemingly easy to use, they can sometimes be fooled by photos of fingerprints, black-powder and tape-lifted fingerprints, or gummy re-creations of fingerprints.

Retinal scanner

Retinal scanners focus on the pattern of blood vessels at the back of the eye. Retinal scans are the most accurate form of biometric authentication and are able to differentiate between identical twins. However, they are the least acceptable biometric scanning method for employees because they can reveal medical conditions, such as high blood pressure and pregnancy. Older retinal scans blew a puff of air into the user’s eye (which is uncomfortable), but newer ones typically use an infrared light instead. Retinal patterns can also change as people age and retinas deteriorate.

Iris scanner

Iris scanners focus on the colored area around the pupil. They are the second most accurate form of biometric authentication. Iris scans are often recognized as having a longer useful authentication life span than other biometric factors because the iris remains relatively unchanged throughout a person’s life (barring eye damage or illness). Iris scans are considered more acceptable by general users than retina scans because they don’t reveal personal medical information. However, some scanners can be fooled with a high-quality image in place of an actual person’s eye; sometimes a contact lens can be placed on the photo to improve the subterfuge. Additionally, accuracy can be affected by changes in lighting.

Voice recognition

Voice recognition is a type of biometric authentication that relies on the characteristics of a person’s speaking voice, known as a voiceprint. The user speaks a specific phrase, which is recorded by the authentication system. To authenticate, the user repeats the same phrase and it is compared to the original. Voice pattern recognition is sometimes used as an additional authentication mechanism but is rarely used by itself.

Facial recognition

Facial recognition is based on the geometric patterns of faces for detecting authorized individuals. Face scans are used to identify and authenticate people before accessing secure spaces, such as a secure vault. Many photo sites now include facial recognition, which can automatically recognize and tag individuals once they have been identified in other photos.

False acceptance rate

As with all forms of hardware, there are potential errors associated with biometric readers. Two specific error types are a concern: false rejection rate (FRR) or Type I errors and false acceptance rate (FAR) or Type II errors. The FRR is the rate at which valid subjects fail to authenticate at a given device sensitivity, whereas the FAR is the rate at which invalid subjects are accepted at a given device sensitivity.
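Expressed as ratios, with both rates measured at the same sensitivity setting, the two error rates are:

$$
\text{FRR} = \frac{\text{valid subjects rejected}}{\text{valid authentication attempts}}, \qquad
\text{FAR} = \frac{\text{invalid subjects accepted}}{\text{invalid authentication attempts}}
$$

For example, using hypothetical test counts, a reader that rejects 20 of 1,000 valid attempts and accepts 5 of 1,000 impostor attempts has an FRR of 20/1000 = 2% and an FAR of 5/1000 = 0.5%.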

False rejection rate

Discussed in the previous section.

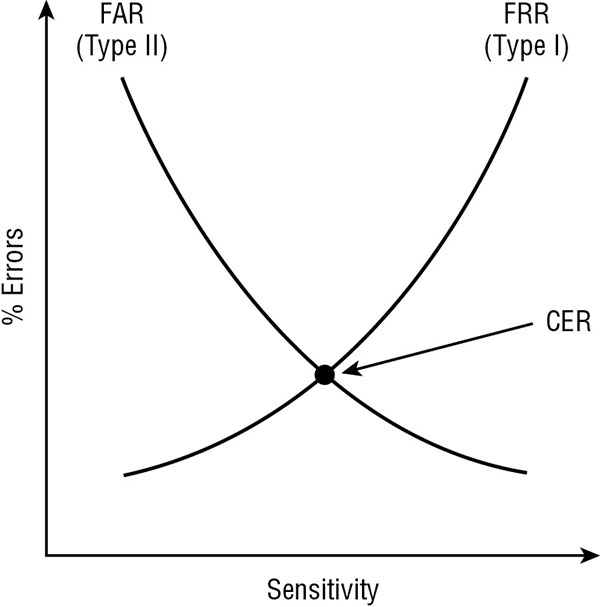

Crossover error rate

The two error measurements of biometric devices (FRR and FAR) can be mapped on a graph comparing sensitivity level to rate of errors. The point on this graph where these two rates intersect is known as the crossover error rate (CER); see Figure 4.7. Notice how the number of FRR errors increases with sensitivity, whereas FAR errors decrease with an increase in sensitivity. The CER point (as measured against the error scale) is used to determine which biometric device for a specific body part, from various vendors or of various models, is the most accurate. The device with the lowest CER is the most accurate biometric device for the relevant body part.
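A crossover analysis can be approximated in Python by sweeping a match threshold over recorded match scores and finding where FRR and FAR meet. The scores for both devices are made up for illustration, and raising the threshold plays the role of raising sensitivity; the function names are hypothetical.

```python
def error_rates(genuine: list[float], impostor: list[float], threshold: float) -> tuple[float, float]:
    """Accept when score >= threshold. Returns (FRR, FAR) at that threshold."""
    frr = sum(s < threshold for s in genuine) / len(genuine)     # valid users rejected
    far = sum(s >= threshold for s in impostor) / len(impostor)  # impostors accepted
    return frr, far

def crossover_error_rate(genuine, impostor, thresholds):
    """Return (threshold, rate) where FRR and FAR are closest; rate is their mean."""
    best_t, best_gap, best_rate = None, float("inf"), None
    for t in thresholds:
        frr, far = error_rates(genuine, impostor, t)
        if abs(frr - far) < best_gap:
            best_t, best_gap, best_rate = t, abs(frr - far), (frr + far) / 2
    return best_t, best_rate

THRESHOLDS = [i / 100 for i in range(101)]
# Hypothetical match scores (1.0 = perfect match) for two competing devices.
device_a_genuine = [0.91, 0.85, 0.78, 0.88, 0.95, 0.70, 0.82, 0.90, 0.87, 0.93]
device_a_impostor = [0.30, 0.45, 0.52, 0.61, 0.25, 0.40, 0.72, 0.35, 0.50, 0.20]
device_b_genuine = [0.80, 0.65, 0.72, 0.58, 0.90, 0.62, 0.77, 0.69, 0.85, 0.74]
device_b_impostor = [0.40, 0.55, 0.63, 0.48, 0.70, 0.35, 0.60, 0.66, 0.52, 0.45]

t_a, cer_a = crossover_error_rate(device_a_genuine, device_a_impostor, THRESHOLDS)
t_b, cer_b = crossover_error_rate(device_b_genuine, device_b_impostor, THRESHOLDS)
print(f"Device A: CER {cer_a:.0%} at threshold {t_a:.2f}")
print(f"Device B: CER {cer_b:.0%} at threshold {t_b:.2f}")
```

With these sample scores, device A crosses over at a lower error rate than device B, so device A would be the more accurate choice.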

FIGURE 4.7 A graphing of FRR and FAR, which reveals the CER

Tokens

A token is a form of authentication factor that is something you have. It’s usually a hardware device, but it can be implemented in software as a logical token. A token is used to generate temporary single-use passwords for the purpose of creating stronger authentication. In this way, a user account isn’t tied to a single static password. Instead, the user must be in physical possession of the password-generating device. Users enter the currently valid password from the token as their password during the logon process.

There are several forms of tokens. Some tokens generate passwords based on time (see Figure 4.8 later in the chapter), whereas others generate passwords based on challenges from the authentication server. In either case, users can use (or attempt to use) the generated password just once before they must either wait for the next time window or request another challenge. Passwords that can be used only once are known as one-time passwords (OTP). This is the most secure form of password, because regardless of whether its use results in a successful logon, that one-use password is never valid again. One-time passwords can be employed only when a token is used, due to the complexity and ever-changing nature of the passwords. However, a token need not be a device; there are paper-based options as well as smartphone app–based solutions.

FIGURE 4.8 The RSA SecurID token device

A token may be a device (Figure 4.8), like a small calculator with or without a keypad. It may also be a high-end smartcard. When properly deployed, a token-based authentication system is more secure than a password-only system.

A token should be used in any scenario in which multifactor authentication is needed or warranted. Almost every authentication event would be improved by implementing a multifactor solution as opposed to remaining with single-factor authentication.

Hardware

An authentication token can be a hardware device that must be present each time the user attempts to log on. Often hardware tokens are designed to be small and attach to a keychain or lanyard. They are often referred to as keychain tokens or key fobs.

Software

An authentication token can be a software solution, such as an app on a smart device. Since many of us carry a smartphone with us almost everywhere we go, having an app that provides OTP when necessary can eliminate the need for carrying around another hardware device or physical token. Software token apps are widely available and implemented on many Internet services; thus, they are easy to adopt for use as an authentication factor for a private network.

HOTP/TOTP

HMAC-based one-time password (HOTP) tokens, or asynchronous dynamic password tokens, are devices or applications that generate passwords based not on fixed time intervals but on a one-way function applied to an incrementing counter, such as a hash or hash-based message authentication code (HMAC, a type of hash that uses a symmetric key in the hashing process) operation. These tokens often generate a password after the user enters a PIN into the token device. The authentication process commonly includes a challenge and a response in which a server sends the user a PIN and the user enters the PIN to create the password. These tokens have a unique seed (or random number) embedded along with a unique identifier for the device. See the earlier section “CHAP” for a description of this operation.

There is a potential downside to using HOTPs, known as the off-by-one problem. If the counter values held by the token and the authentication server become desynchronized (for example, because the user generated passwords without submitting them), the client may be calculating a value that the server has already tossed or has not yet generated. This requires the device to be resynced with the authentication server.
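The HOTP algorithm is standardized in RFC 4226: an HMAC-SHA1 over a moving counter, truncated to a short decimal code. The Python sketch below follows that RFC and adds a server-side look-ahead window, one common way to recover from the off-by-one problem without a manual resync. The window size of 5 and the `HotpVerifier` class are illustrative assumptions.

```python
import hashlib
import hmac
import struct

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226 HOTP: HMAC-SHA1 over an 8-byte counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                      # low 4 bits pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

class HotpVerifier:
    """Server side: tolerate a token that has run ahead by up to `window` values."""
    def __init__(self, secret: bytes, window: int = 5):
        self.secret, self.window, self.counter = secret, window, 0

    def verify(self, submitted: str) -> bool:
        for c in range(self.counter, self.counter + self.window + 1):
            if hmac.compare_digest(hotp(self.secret, c), submitted):
                self.counter = c + 1  # resynchronize; this and older values are now dead
                return True
        return False

verifier = HotpVerifier(b"12345678901234567890")  # RFC 4226 test secret, not for real use
```

Because the server advances past every accepted value, a captured password cannot be replayed, which is the one-time property described in the “Tokens” section.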

Time-based one-time password (TOTP) tokens, or synchronous dynamic password tokens, are devices or applications that generate passwords at fixed time intervals, such as every 60 seconds. Time-interval tokens must have their clocks synchronized to an authentication server. To authenticate, the user enters the password shown along with a PIN or passphrase as a second factor of authentication. The generated one-time password provides identification, and the PIN/passphrase provides authentication.
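TOTP (RFC 6238) is HOTP with the counter replaced by the number of time steps since the Unix epoch, which is why clocks must be synchronized. This self-contained Python sketch uses the RFC’s default 30-second step (pass `step=60` for the 60-second interval mentioned above) and accepts one step of clock drift in either direction, a tolerance chosen here as an assumption.

```python
import hashlib
import hmac
import struct
import time

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226 HOTP (HMAC-SHA1 plus dynamic truncation)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP: the HOTP counter is the number of elapsed time steps."""
    return hotp(secret, int(unix_time // step), digits)

def verify_totp(secret: bytes, submitted: str, unix_time: float,
                step: int = 30, digits: int = 6, drift_steps: int = 1) -> bool:
    """Accept the code for the current step or up to `drift_steps` steps either side."""
    current = int(unix_time // step)
    for counter in range(current - drift_steps, current + drift_steps + 1):
        if counter >= 0 and hmac.compare_digest(hotp(secret, counter, digits), submitted):
            return True
    return False

# Token and server each compute the code for "now" from the shared secret.
secret = b"12345678901234567890"  # RFC 6238 test secret; real secrets are random
print(totp(secret, time.time()))
```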

Certificate-based authentication

Certificates or digital certificates are a trusted third-party authentication technology derived from asymmetric public key cryptography. Please see Chapter 6 for detailed coverage of digital certificates.

Certificate-based authentication is often a reliable mechanism for verifying the identity of devices, systems, services, applications, networks, and organizations. However, certificates alone are insufficient to identify or authenticate individuals, since the certificate is a digital file and can be lost, stolen, or otherwise abused for impersonation attacks. However, when implemented as a multifactor authentication process, certificates can be a significant improvement in logon security for a wide range of scenarios.

PIV/CAC/smart card

Smartcards (Figure 4.8) are credit card–sized IDs, badges, or security passes with embedded integrated circuit chips. They can contain information about the authorized bearer that can be used for identification and/or authentication purposes. Some smartcards can even process information or store reasonable amounts of data in a memory chip. Many smartcards are used as the means of hardware-based removable media storage for digital certificates. This enables users to carry a credit card–sized device on their person, which is then used as an element in multifactor authentication, specifically supporting certificate authentication as one of those factors.

A smartcard may be known by several terms:

- An identity token containing integrated circuits (ICs)

- A processor IC card

- An IC card with an ISO 7816 interface

Smartcards are often viewed as a complete security solution, but they should not be considered complete by themselves. Like any single security mechanism, smartcards are subject to weaknesses and vulnerabilities. They can fall prey to physical attacks, logical attacks, Trojan horse attacks, or social engineering attacks.

Memory cards are machine-readable ID cards with a magnetic strip or a read-only chip, like a credit card, a debit card, or an ATM card. Memory cards can retain a small amount of data but are unable to process data like a smartcard. Memory cards often function as a type of two-factor control: the card is something you have, and its PIN is something you know. However, memory cards are easy to copy or duplicate and are insufficient for authentication purposes in a secure environment.

The Common Access Card (CAC) is the name the U.S. Department of Defense (DoD) gives to the smartcard its military and civilian personnel use for authentication purposes. Although the CAC name is specific to the DoD, the same technology is widely used in commercial environments. This smartcard hosts credentials, specifically digital certificates, that can be used to grant access to a facility or to a computer terminal.

Personal Identity Verification (PIV) cards, such as badges, identification cards, and security IDs, are forms of physical identification and/or electronic access control devices. A badge can be as simple as a name tag indicating whether you’re a valid employee or a visitor. Or it can be as complex as a smartcard or token device that employs multifactor authentication to verify and prove your identity and provide authentication and authorization to access a facility, specific rooms, or secured workstations. Badges often include pictures, magnetic stripes with encoded data, and personal details to help a security guard verify identity.

Badges can be used in environments in which physical access is primarily controlled by security guards. In such conditions, the badge serves as a visual identification tool for the guards. They can verify your identity by comparing the photo on the badge to your face and consult a printed or electronic roster of authorized personnel to determine whether you have valid access.

Badges can also serve in environments guarded by scanning devices rather than security guards. In such conditions, a badge can be used either for identification or for authentication. When a badge is used for identification, it’s swiped in a device, and then the badge owner must provide one or more authentication factors, such as a password, passphrase, or biological trait (if a biometric device is used). When a badge is used for authentication, the badge owner provides an ID, username, and so on, and then swipes the badge to authenticate.
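The two roles a badge can play at an automated checkpoint can be sketched as follows. This is a hypothetical Python model, not the API of any real access-control product: the badge ID, the enrolled user, the PIN, and the function names are illustrative assumptions. A real reader would also verify a cryptographic credential on the card itself, because a bare badge number is as easy to copy as a memory card.

```python
import hashlib
import hmac


def _hash_pin(pin: str, salt: bytes) -> bytes:
    # PBKDF2 slows down PIN guessing; the iteration count is an illustrative choice.
    return hashlib.pbkdf2_hmac("sha256", pin.encode(), salt, 100_000)


SALT = b"demo-salt"  # illustrative only; real systems use a random salt per record

# Hypothetical enrollment records: badge ID -> (username, salt, PIN hash).
ENROLLED = {
    "BADGE-1001": ("jsmith", SALT, _hash_pin("4821", SALT)),
}


def badge_as_identification(badge_id: str, pin: str) -> bool:
    """The badge swipe claims an identity; the PIN (something you know) proves it."""
    record = ENROLLED.get(badge_id)
    if record is None:
        return False
    _user, salt, stored = record
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(stored, _hash_pin(pin, salt))


def badge_as_authentication(username: str, badge_id: str) -> bool:
    """The username claims an identity; possession of the badge (something you have) proves it."""
    record = ENROLLED.get(badge_id)
    return record is not None and record[0] == username
```

In the first mode the badge answers "who are you?"; in the second it answers "prove it." Either way, combining the badge with a second factor is what makes the check multifactor.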

IEEE 802.1x

IEEE 802.1x is a port-based authentication mechanism. It’s based on Extensible Authentication Protocol (EAP) and is commonly used in closed-environment wireless networks. However, 802.1x isn’t exclusively used on wireless access points (WAPs); it can also be used on firewalls, proxies, VPN gateways, and other locations where an authentication handoff service is desired. Think of 802.1x as an authentication proxy. When you wish to use an existing authentication system rather than configure another, 802.1x lets you do that.

When 802.1x is in use, it makes a port-based decision about whether to allow or deny a connection based on the authentication of a user or service. 802.1x was initially used to compensate for the weaknesses of Wired Equivalent Privacy (WEP), but today it’s often used as a component in more complex authentication and connection-management systems, including Remote Authentication Dial-In User Service (RADIUS), Diameter, Cisco Systems’ Terminal Access Controller Access-Control System Plus (TACACS+), and Network Access Control (NAC).

Like many technologies, 802.1x is vulnerable to man-in-the-middle and hijacking attacks because authentication occurs only when the connection is established; traffic sent afterward is not re-verified.

802.1x is a standard port-based network-access control that ensures that clients can’t communicate with a resource until proper authentication has taken place. Effectively, 802.1x is a handoff system that allows any device to use the existing network infrastructure’s authentication services. Through the use of 802.1x, other techniques and solutions such as RADIUS, TACACS, certificates, smartcards, token devices, and biometrics can be integrated into any communications system. 802.1x is most often associated with wireless access points, but its use isn’t limited to wireless.
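The handoff behavior described above can be modeled as a small state machine. This is a simplified, hypothetical Python sketch rather than an implementation of the standard: the class and method names are invented, and the `auth_server` callable stands in for whatever back-end service (such as a RADIUS server) makes the real decision. The one protocol detail it relies on is that EAP over LAN (EAPOL) frames use EtherType 0x888E, which is the only traffic an unauthorized port lets through.

```python
from dataclasses import dataclass
from typing import Callable

EAPOL_ETHERTYPE = 0x888E  # EtherType of EAP over LAN (EAPOL) frames


@dataclass
class Frame:
    ethertype: int
    payload: bytes = b""


@dataclass
class AuthenticatorPort:
    """Simplified 802.1x authenticator, such as a switch port or WAP.

    auth_server is a hypothetical stand-in for the existing authentication
    service (for example, RADIUS); it returns True to mean "accept".
    """

    auth_server: Callable[[str, str], bool]
    authorized: bool = False

    def authenticate(self, identity: str, credential: str) -> bool:
        # The port only relays credentials; the back-end service decides.
        self.authorized = self.auth_server(identity, credential)
        return self.authorized

    def forward(self, frame: Frame) -> bool:
        # Before authentication, only EAPOL frames may pass the port.
        if frame.ethertype == EAPOL_ETHERTYPE:
            return True
        # After authentication, frames are NOT re-checked, which is why a
        # hijacked session on an authorized port goes unnoticed.
        return self.authorized

    def disconnect(self) -> None:
        # The decision is tied to the connection; a new link must re-authenticate.
        self.authorized = False
```

Note that `forward` never asks who sent a frame once the port is authorized; that design mirrors the connection-time-only check that leaves 802.1x open to hijacking.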

File system security

Filesystem security is usually focused on authorization rather than authentication. To protect a filesystem, either lock down the computer through which the storage device is accessed so that anyone not specifically authorized is denied (for example, by requiring multifactor authentication), or encrypt the storage device to block access by all but intentionally authorized users. See the section “Access control models” earlier in this chapter for details on authorization control via DAC, MAC, RBAC, and others.

Filesystem security should be used in all scenarios to define what access users have. Such access should be granted based on users’ work responsibilities, enabling them to complete their tasks without exposing the organization to significant additional and unwarranted risk. This concept is known as the principle of least privilege, and it should be adopted and enforced across all means of resource access management.
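One practical way to apply least privilege is to compare what each account has been granted against what its job role actually needs and flag the difference. The Python sketch below assumes a hypothetical role-to-permission mapping; the role names, paths, and permission labels are invented for illustration and do not correspond to any particular operating system’s ACL format.

```python
from typing import Dict, Set, Tuple

Grant = Tuple[str, str]  # (path, permission), e.g. ("/logs", "read")

# Hypothetical job roles and the filesystem access each one actually needs.
ROLE_NEEDS: Dict[str, Set[Grant]] = {
    "payroll_clerk": {("/finance/payroll", "read"), ("/finance/payroll", "write")},
    "auditor": {("/finance/payroll", "read"), ("/logs", "read")},
}


def excess_permissions(role: str, granted: Set[Grant]) -> Set[Grant]:
    """Return grants beyond what the role requires (least-privilege violations).

    An unrecognized role needs nothing, so every grant it holds is excess.
    """
    return granted - ROLE_NEEDS.get(role, set())
```

For example, a payroll clerk who was also given read access to `/hr/reviews` would have that single grant reported as excess, which is exactly the kind of finding a permission review should remove.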

Database security

Database security is an important concern for any organization that uses large sets of data as an essential asset. Without database security efforts, business tasks can be interrupted and confidential information disclosed. The wide array of topics that are part of database security includes aggregation, inference, data mining, data warehousing, and data analytics.

Structured Query Language (SQL), the language used to interact with most databases, provides a number of functions that combine records from one or more tables to produce potentially useful information. This process, known as aggregation, is not without its security vulnerabilities. Aggregation attacks are used to collect numerous low-level security items or low-value items and combine them to create something of a higher security level or value.

For example, suppose a low-level military records clerk is responsible for updating records of personnel and equipment as they are transferred from base to base. As part of his duties, this clerk may be granted the database permissions necessary to query and update personnel tables.

The military might not consider an individual transfer request (in other words, Sergeant Jones is being moved from Base X to Base Y) to be classified information. The records clerk has access to that information because he needs it to process Sergeant Jones’s transfer. However, with access to aggregate functions, the records clerk might be able to count the number of troops assigned to each military base around the world. These force levels are often closely guarded military secrets, but the low-ranking records clerk could deduce them by using aggregate functions across a large number of unclassified records.

For this reason, it’s especially important for database security administrators to strictly control access to aggregate functions and adequately assess the potential information they may reveal to unauthorized individuals.
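The records clerk’s aggregation can be sketched with Python’s built-in `sqlite3` module and an in-memory database. The table layout, names, and bases are invented for illustration. The point is that the same rows that answer a routine, unclassified lookup also answer a sensitive question once `COUNT()` and `GROUP BY` are available.

```python
import sqlite3

# Hypothetical personnel table; each row on its own is an unclassified transfer record.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE personnel (name TEXT, rank TEXT, base TEXT)")
conn.executemany(
    "INSERT INTO personnel VALUES (?, ?, ?)",
    [
        ("Jones", "Sergeant", "Base Y"),
        ("Garcia", "Corporal", "Base X"),
        ("Lee", "Private", "Base Y"),
        ("Patel", "Private", "Base Z"),
        ("Kim", "Sergeant", "Base Y"),
    ],
)

# The clerk's routine task: look up one transfer record he legitimately needs.
print(conn.execute("SELECT base FROM personnel WHERE name = ?", ("Jones",)).fetchone())
# ('Base Y',)

# The aggregation: COUNT() with GROUP BY turns the same rows into force levels per base.
force_levels = conn.execute(
    "SELECT base, COUNT(*) FROM personnel GROUP BY base ORDER BY base"
).fetchall()
print(force_levels)  # [('Base X', 1), ('Base Y', 3), ('Base Z', 1)]
```

Neither query touches a “classified” column; only the second query’s use of an aggregate function produces sensitive information, which is why access to such functions deserves its own control.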

The database security issues posed by inference attacks are very similar to those posed by the threat of data aggregation. Inference attacks involve combining several pieces of nonsensitive information to gain access to information that should be classified at a higher level. However, inference makes use of the human mind’s deductive capacity rather than the raw mathematical ability of modern database platforms.

A commonly cited example of an inference attack is that of the accounting clerk at a large corporation who is allowed to retrieve the total amount the company spends on salaries for use in a top-level report but is not allowed to access the salaries of individual employees. The accounting clerk often has to prepare those reports with effective dates in the past and so is allowed to access the total salary amounts for any day in the past year. Say, for example, that this clerk must also know the hiring and termination dates of various employees and has access to this information. This opens the door for an inference attack. If an employee was the only person hired on a specific date, the accounting clerk can now retrieve the total salary amount on that date and the day before and deduce the salary of that particular employee—sensitive information that the user would not be permitted to access directly.
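The salary inference above amounts to subtracting two permitted aggregate results. The Python sketch below uses invented employees, salaries, and dates; `total_salary_on` is a hypothetical stand-in for the report query the clerk is allowed to run.

```python
from datetime import date, timedelta

# Hypothetical payroll data: (employee, annual salary, hire date, termination date or None).
EMPLOYEES = [
    ("A. Rivera", 72_000, date(2019, 3, 1), None),
    ("B. Chen", 95_000, date(2020, 7, 15), None),
    ("C. Okafor", 88_000, date(2023, 5, 8), None),  # the only hire on this date
]


def total_salary_on(day: date) -> int:
    """The aggregate the clerk IS allowed to see: total payroll as of a given day."""
    return sum(
        salary
        for _name, salary, hired, left in EMPLOYEES
        if hired <= day and (left is None or left > day)
    )


# The clerk also knows hire dates, so two permitted queries reveal one private salary.
hire_day = date(2023, 5, 8)
inferred = total_salary_on(hire_day) - total_salary_on(hire_day - timedelta(days=1))
print(inferred)  # 88000
```

Each query is individually authorized; the sensitive value appears only when the clerk combines the two results with outside knowledge of the hire date.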

As with aggregation, the best defense against inference attacks is to maintain constant vigilance over the permissions granted to individual users. Furthermore, intentional blurring of data may be used to prevent the inference of sensitive information. For example, if the accounting clerk were able to retrieve only salary information rounded to the nearest million, he would probably not be able to gain any useful information about individual employees. Finally, you can use database partitioning, dividing a single database into multiple distinct databases according to content value, risk, and importance, to help thwart these attacks.
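Blurring by rounding can be sketched in a few lines of Python. The payroll totals here are invented, and `rounded_total` is a hypothetical helper that rounds half up to the nearest million. With blurring in place, the difference between the day-before and hire-day totals no longer reveals the new hire’s salary.

```python
def rounded_total(total: int, granularity: int = 1_000_000) -> int:
    """Return a non-negative payroll total rounded half up to the nearest `granularity`."""
    return (total + granularity // 2) // granularity * granularity


# Hypothetical totals the day before and the day of a single hire (an 85,000 salary).
before, after = 48_250_000, 48_335_000
print(rounded_total(after) - rounded_total(before))  # 0
```

Blurring reduces precision rather than eliminating leakage: if a hire pushes the total across a rounding boundary, the difference jumps by a full million, which hints that something changed without disclosing the exact salary.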

Many organizations use large databases, known as data warehouses (a predecessor to the idea of big data), to store large amounts of information from a variety of databases for use with specialized analysis techniques. These data warehouses often contain detailed historical information not normally stored in production databases because of storage limitations or data security concerns.

A data dictionary is commonly used for storing critical information about data, including usage, type, sources, relationships, and formats. A database management system (DBMS) reads the data dictionary to determine access rights for users attempting to access data.
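A DBMS consulting its data dictionary before releasing a column can be modeled as follows. Real database products keep this metadata in their own system catalogs (the SQL standard’s `INFORMATION_SCHEMA` views are one example), so the structure, table, column, and role names below are hypothetical and chosen only to show the lookup.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ColumnEntry:
    """One data dictionary entry: metadata about a column, including who may read it."""

    data_type: str
    source: str
    description: str
    readers: FrozenSet[str]


# Hypothetical data dictionary keyed by (table, column).
DATA_DICTIONARY = {
    ("employees", "name"): ColumnEntry(
        "TEXT", "HR system", "Legal name", frozenset({"hr", "payroll"})
    ),
    ("employees", "salary"): ColumnEntry(
        "INTEGER", "Payroll system", "Annual salary in USD", frozenset({"payroll"})
    ),
}


def can_read(role: str, table: str, column: str) -> bool:
    """Check the data dictionary before returning a column; undescribed columns are denied."""
    entry = DATA_DICTIONARY.get((table, column))
    return entry is not None and role in entry.readers
```

Denying any column that has no dictionary entry is a deliberate default-deny choice; it keeps a newly added, undocumented column from being exposed by accident.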

Data mining techniques allow analysts to comb through data warehouses and look for potential correlated information. For example, an analyst might discover that the demand for lightbulbs always increases in the winter months and then use this information when planning pricing and promotion strategies. Data mining techniques result in the development of data models that can be used to predict future activity.

The activity of data mining produces metadata—information about data. Metadata is not exclusively the result of data mining operations; other functions or services can produce metadata as well. Think of metadata from a data mining operation as a concentration of data. It can also be a superset, a subset, or a representation of a larger data set. Metadata can be the important, significant, relevant, abnormal, or aberrant elements from a data set.

One common security example of metadata is a security incident report. An incident report is the metadata extracted from a data warehouse of audit logs through the use of a security auditing data mining tool. In most cases, metadata is of greater value, or more sensitive if disclosed, than the bulk of the data in the warehouse. Thus, metadata is stored in a more secure container known as the data mart.

Data warehouses and data mining are significant to security professionals for two reasons. First, as previously mentioned, data warehouses contain large amounts of potentially sensitive information vulnerable to aggregation and inference attacks, and security practitioners must ensure that adequate access controls and other security measures are in place to safeguard this data. Second, data mining can actually be used as a security tool when it’s used to develop baselines for statistical anomaly–based intrusion detection systems.
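The second use, mining historical data to build a baseline for statistical anomaly–based detection, can be sketched with Python’s `statistics` module. The hourly failed-login counts are invented, and the three-standard-deviation threshold is an illustrative assumption; production intrusion detection systems use far richer models.

```python
from statistics import mean, stdev

# Hypothetical baseline mined from historical audit logs: failed logins per hour.
BASELINE = [3, 5, 4, 6, 2, 5, 4, 3, 5, 4]

MU = mean(BASELINE)
SIGMA = stdev(BASELINE)


def is_anomalous(observed: int, threshold: float = 3.0) -> bool:
    """Flag observations more than `threshold` standard deviations from the baseline mean."""
    return abs(observed - MU) / SIGMA > threshold
```

With this baseline (mean 4.1), an hour with 40 failed logins is flagged, while an hour with 6 is treated as normal variation.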

Data analytics is the science of examining raw data with the goal of extracting useful information from the bulk information set. The results of data analytics could focus on important outliers or exceptions to normal or standard items, a summary of all data items, or some focused extraction and organization of interesting information. Data analytics is a growing field as more organizations gather an astounding volume of data from their customers and products. The sheer volume of information to be processed has demanded a whole new category of database structures and analysis tools. These massive collections of data have even picked up the nickname “big data.”

Big data refers to collections of data that have become so large that traditional means of analysis or processing are ineffective, inefficient, and insufficient. Big data involves numerous difficult challenges, including collection, storage, analysis, mining, transfer, distribution, and results presentation. Such large volumes of data have the potential to reveal nuances and idiosyncrasies that smaller, more mundane data sets cannot. The potential to learn from big data is tremendous, but the burdens of dealing with big data are equally great. As the volume of data increases, the complexity of data analysis increases as well. Big data analysis requires high-performance analytics running on massively parallel or distributed processing systems. With regard to security, organizations are endeavoring to collect an ever more detailed and exhaustive range of event data and access data. This data is collected with the goal of assessing compliance, improving efficiencies, improving productivity, and detecting violations.

A relational database organizes and structures data in flat, two-dimensional tables. This row-and-column organizational scheme is widely used, but it isn’t always the best solution. Relational databases can become difficult to manage and use when they grow extremely large, especially if they’re poorly designed and managed. Their performance can slow when significant numbers of simultaneous users perform queries. And they might not support the data mapping needed by modern complex programming techniques and data structures. In the past, most deployments of relational database management systems (RDBMSs) did not experience any of these potential downsides. However, in today’s era of big data and services the size of Google, Amazon, Twitter, and Facebook, RDBMSs aren’t sufficient solutions to some data-management needs.