Chapter 4

The Information Domain

Intuition is useful in business. But...it isn’t enough.

The Wall Street Journal, October 23, 2007

From the first discussions of enterprise architecture, information management has been the lynchpin of every major strategy to gain control over the increasing complexity of managing IT functionality. Spewak and NIST, TOGAF and Zachman, all began with the management of information.

I use the term information, not data. Originally, the terms were interchangeable, but two factors are changing that:

- First, as the discipline matures, we’ve realized that there’s a great deal of difference between simple, raw data like transaction amounts, and complex, derived data liked predictive analytic outcomes. To call them all data would miss important nuances that are critical to understand in order to manage all types of information successfully. See page 99 for more details.

- Furthermore, another group of professionals who specialize in the visualization of data on screen or page has taken to calling themselves data architects, creating LinkedIn groups and conferences under that name. That’s a very useful specialty, but things get confusing when both trades take the same name.

To avoid any ambiguity, the practice today is to use the term information to describe the full range of business data, from simple to complex, both at rest in physical repositories or in flight using batch files and web services. We refer to this area of IT as the information domain. Information management refers to the architecture and governance processes we use to administrate this domain. The people who set up these management structures are information architects. We try to only use the term “data” when discussing the simplest type of information: raw data created and maintained by operational processes in order to provide core business functionality.

In this book, you’ll find a great deal more discussion of the information domain than of the application or technology domains. There’s a reason for this. As I said in the introduction, I’m not trying to teach anyone how to perform any of the functions in any of the domains. I have to assume you know, or have people who know how to perform these functions: how to model data, how to develop software, how to upgrade hardware, and so forth. The purpose of this book is to tell you how to coordinate all those different efforts into an EAG program that checks two key boxes:

- Spans all the information systems you support (i.e. how to model data at the enterprise level, rather than project by project)

- Integrates with all the other functions (i.e. how your data modeling function needs to integrate with your data security function and application development function)

In the discussion that follows, we aren’t going to try to teach you how to perform the basic functions; there are already whole books dedicated to each with far more information and expertise than I can provide. Instead, we are going to focus on the aspects of each IT function that need special attention when you take those efforts from isolated silos of expertise and make them instead components of an enterprise architecture and governance (EAG) functional framework that spans all functions and all information systems in the enterprise.

In that context, I have more to say about the management of information than about applications or technology. Although the entire focus of computer technology in business is to improve the efficiency of managing information, most companies have been focusing on the hardware and software, not the information that the hardware and software was supposed to be supporting.

The information domain is the weakest link in corporate IT today. Many companies have great EAG programs in place to manage hardware and software, but still practically ignore information management as a discipline.

This is why I feel driven to speak at such length in the pages ahead about the information domain; because most of us are doing a poor job of it. There are far more gaps to address in the information domain than the other two domains put together.

Information architecture in an EAG program

In the context of enterprise architecture and governance (EAG), information architecture suffers from a bit of an identity problem.

Unfortunately, information architecture is still often treated as an IT-centric initiative. When you focus on data for data’s sake, you’re missing the bigger picture. Information is only as valuable as the business processes and decisions it improves. The ultimate goal of an information management program is to generate the greatest return from corporate information assets.

In an EAG program, the information architecture should be second only to business architecture. Once the business has identified a problem or goal, the first questions shouldn’t be about the hardware the solution will sit on. Your first questions should target the information required by the business. These could include:

- How should the data be represented in the enterprise logical data model (entities, attributes, data type and length, relationships, and constraints)?

- What’s the data volume?

- What’s the system of record for this data?

- Is any of the data sensitive?

Only after you understand the information needs should you consider the application requirements needed to collect, maintain, and deliver the information and the technology needed to support those application requirements.

As late as the 1990’s, very few companies formally recognized information as an independent domain equal to the application and technology domains. The application domain rolled up to the CIO, and the technology domain rolled up to the CTO. However, the information domain, if it existed at all, was usually subservient to one of those two in the org structure. In some cases, the developers in the application domain might perform their own information architecture functions within the scope of the project they’re working on, or might employ a dedicated project-level data modeler. In other cases, the DBAs in the technology domain might serve as data modelers. However, in all these cases, information management was a project-level tactical function, not an enterprise-level strategic one.

I once worked for a large company that developed banking software. One of the products I was responsible for was a Customer Relationship Management (CRM) system that was the golden copy of the customer name and address information across all our product lines. When developing features for this product, each project followed the same flow:

- The business would give us requirements for new functionality.

- The data modelers would model the required data through several layers of abstraction, eventually producing an updated physical model. This physical model was instantiated as both DDL to create tables, and XML to give to the application developers.

- The first team of application developers would create simple data maintenance CRUD (Create, Read, Update, and Delete) services. These simple data access and maintenance services are commonly and collectively known as Data as a Service, or DaaS.

- Then a second team of developers would develop front-end applications which orchestrated those DaaS services together into complex business process services (Software as a Service, or SaaS).

- A third team would then expose the functionality to our users through a web-based user interface.

- Technology architects would address issues with the Enterprise Service Bus (ESB), network access, firewalls, server capacity, and the like.

In this example, the business gives the initial direction, but, true to Spewak’s vision, everything else is driven by information.

Even today, this isn’t often the way things work at most companies. I think the reason for this has to do with how companies were organized and how they functioned before the advent of EAG. Data had been around for thousands of years and was considered just part of the business. However, when computers came on the scene, we suddenly needed specialized roles to manage all the new hardware and software that came with them. Suddenly all the interest was focused on the shiny new computers, not the information those computers existed to support.

I liken this to someone who buys an expensive sports car, then keeps it locked up in a garage where they can admire it, enhance it with more powerful parts and keep it polished. The purpose of a car isn’t to sit in the garage; a car exists to take you places you want to go. The business doesn’t exist so that IT can assemble the latest technology; the technology is assembled to manage the information assets of the business. Without information, hardware and software have no purpose.

Unfortunately, for most IT organizations, the Information Age isn’t about information. From the beginning, the IT department was supposed to be all about the information, never the computer. The term Information Technology (IT) described the original intent that all this technology existed to support information.

Until recently, the process flow at most companies was that a business request would be routed straight to an application developer with no real guidance regarding enterprise information standards and interoperability. The developer pretty much had free rein to implement the information in any way they thought best. Many of these developers were talented, intelligent people who wanted to write the best code they could for their company, but they were hampered by:

- Lack of experience with information management disciplines

- Lack of an articulated enterprise vision for information

- Lack of guidelines regarding how to best manage information

- Lack of communication with developers from other teams across the enterprise

- Lack of time and money to spend worrying about the bigger picture

As such, any consideration of information architecture was left to the hardware and software professionals. Information Management seldom rose above project-level planning and scope. A data modeler (if they existed at all) reported to the project developer and implemented the project team’s decisions.

Although most companies followed established best practices and defined information architecture as a separate domain of EAG from the very beginning, many remain under the assumption that information architecture is a sub-function of the application or technology domains. They did not create an organization structure with a separate domain for information. This has been changing since 2000, slowly at first, but accelerating each year as companies realize that the central purpose of information management had somehow been lost. Somehow, IT had become all about the hardware and software.

Consider this. Every organization, regardless of size or industry, has a finance function. There’s some person in the organization whose responsibility includes protecting and optimizing the value of the organization’s financial assets. This person is usually the CFO, a direct report to the CEO, and often sits on the board of directors. Likewise, every organization has a similar role that is responsible for technology assets, the CTO. While the financial assets do rely on supporting and enabling technology, the financial assets aren’t a technology domain responsibility. In the same manner, the human assets of a company rely on the technology infrastructure, but HR isn’t a technology domain responsibility.

The growing recognition of the importance of information assets has led to the establishment of a dedicated C-level executive role to manage information assets, the Chief Data Officer (CDO). The CDO manages the information assets. The Chief Analytics Officer (or CAO) manages the things that are done to those information assets to provide insight and make decisions. It took thirty years, but the work of NIST and Spewak is finally beginning to bear fruit in the boardroom.

Why now? I believe that the progress we have seen in the steady rise of the influence of the information domain within enterprise-level IT management is due to more than thirty-year-old books and theories. Whether or not they’ve acted on the knowledge, most companies have recognized the need for enterprise architecture and governance for at least two decades now. But only in the last five years has mainstream IT culture suddenly become interested in information architecture. What’s going on?

I believe the answer lies in looking at how corporate competition has evolved over time.

Information as a competitive differentiator

I believe businesses today are feeling more pressure than ever before to manage their information assets strategically to create a competitive advantage. I see this as the inevitable evolution in the nature of competition in the information age. I have a theory. I believe that every industry follows a standard evolutionary path.

Phase 1 - competing on infrastructure

In the early stages of any industry, companies compete by building out infrastructure. During this phase, financial institutions are rushing to build branch banks on every street corner, car manufacturers are building car lots and showrooms, and telecoms are buying up the rights to put up cell towers. During this phase, you aren’t so much competing against other companies as you are staking your claims on the unwashed masses.

I imagine this phase of competition as a reenactment of the great land rush in Oklahoma. On April 22 of 1889, an estimated 50,000 people waited at a starting line for the noon gun that would release them to rush out and claim up to 160 acres of some of the most valuable land in the United States. Regardless of how you feel about the morality of the Indian Appropriations Act parceling out this “unoccupied” land, this vivid image of a rush to stake a claim is a perfect picture of the earliest phase of competition in every developing industry.

If you have a young niece or nephew just beginning to pursue a degree in computer science who comes to you for advice, this is when you would advise them to focus their studies on technology and infrastructure. “Learn as much as you can about security, LAN/WAN and NAS/SAN.” “Get your Cisco certification and buy a wheelbarrow to take all your money to the bank.” You want to work in the technology domain.

Technological advances can still return a company to this phase of competition overnight. When 4G cellular service technology became widely available and the Feds opened up bidding on the new frequencies, telco carriers suddenly found themselves in another land rush to stake out their claims to 4G tower locations across the country.

Phase 2 - competing on feature/function

At some point, though, the infrastructure rollout saturates the marketplace. There’s a branch bank and car lot on every corner and no more cell tower licenses are available for purchase. No unclaimed customers remain, so you have to figure out how to steal customers from your competitors while protecting your own customer base. At this point, companies begin to compete on feature/function and it becomes an application development arms race. At one time, the only way to access your bank account was to drive to a branch during business hours, park, get out of your car, and walk in to talk to a teller. Then one bank began offering drive through windows where customers didn’t have to get out of their cars. All the customers would leave the bank across the street and rush over to take advantage of this cutting edge feature. Remember those amazing pneumatic chutes? The bank they left would not only have to even the playing field by getting drive through windows, but they would then have to one-up the other bank by rolling out ATMs. And so on. ATMs, telebanking, internet banking – each bank would have to bring out some attractive new feature function in order to up the ante and regain the competitive advantage.

This is the point where you tell your niece or nephew to get into application development. “Learn C++.” “Learn HTML.” “Learn Java and you can name your price.” Companies are always willing to invest their money in the people who are most critical to the work that will make the company competitive. Steer them toward the application domain.

At this point in the competitive landscape, the infrastructure just gets you in the game. You have to have it, but it isn’t a competitive differentiator. No matter what your industry is, you need to ante into the game with technology before you can increase the bid with feature/function. At some point, the technology playing field is level, and you must turn to feature/function to remain competitive.

As with technology, there are times when new functionality becomes available and disrupts the industry. Companies who thought they were past all this suddenly find themselves competing over feature/function again. Blockchain, for example, is a disruptive game changer. Once the functionality overcomes the performance and scale issues facing that technology today, companies in every industry will find themselves fighting to roll out blockchain-enabled features that will differentiate them from their competition.

However, you can’t depend forever on your ability to keep coming up with the next killer app. At some point, the feature/function landscape becomes a level playing field. My little community bank has a great internet banking site. How do you remain competitive? Do you desperately throw your corporate investment into finding the next killer app? Or do you change tactics once again?

Phase 3 - competing on information

Eventually, in every industry, the focus shifts again and companies begin to compete on information. It’s not enough to know your product; you have to know your customer:

- You must know more about your customers and prospects than your competitors and you must know it faster.

- You must be able to apply that knowledge to generate business decisions.

- You must offer individualized products and services that appeal to your target market while maximizing your profit.

- You move from one-size-fits-all to products and services customized for a customer segment of one.

This is the point when you would encourage your niece or nephew to learn data modeling, or learn statistical analysis. “Whatever you do, do it in the information domain because the future is in information, not application or technology.” Forbes consistently lists data scientist salaries well above the highest developer salaries15 and Harvard Business Review recently named “data scientist” the sexiest job of the twenty-first century.16

Companies are outsourcing more and more these days. Hardware and software infrastructure are quickly becoming cloud-based virtual commodities. Application development is being outsourced to offshore contractors who do great work for a quarter of what you’ll have to pay locally. In any stage of competition, businesses are going to try to hang on to those skills that they perceive as enabling their competitive differentiators. If a company believes that the key to their success is their ability to cleanse, integrate, and mine information, then that’s where they’re going to be offering the most competitive salaries in an attempt to attract and retain the best people.

When I was working in the telecommunications industry, I thought it was very interesting to compare the advertising strategies of the big carriers:

- Verizon focused their advertising on technology. Consider their advertising message, “Can you hear me now?” They very intentionally focused on telling the public that Verizon had the best network infrastructure. They were competing on technology and infrastructure. A phone was a piece of hardware.

- Sprint and AT&T had advertising that seemed to focus on feature/function. You could watch the game, manage your stock portfolio, and listen to music all on one device. They were competing on applications. A phone was an application platform.

- Alltel’s campaign focused on customer-centricity and flexibility. Rollover minutes, discounted rates on calls to your friends and family, and no fees for early termination. Their tagline – “Come and get your love” – was intended to communicate that they will treat you like a person, not a number. A phone was a social connection. If I traveled to the other side of the country, I would see exactly the same Verizon commercial that aired back home, but the Alltel commercials would be completely customized for the local region. Alltel was competing with information.

Today, information is the key to competition. You must have a strong information architecture team and you must build an information-centric IT infrastructure to ante up. Companies that don’t have strong information management programs are steadily losing ground in the market, wondering why everyone else seems to be playing a different game.

Phase 4 – What’s next?

I don’t know what the next phase of competition will look like. The future will certainly bring more disruptive technological advances like the coming flood of internet connected devices or quantum computing, and there will be application breakthroughs like blockchain that create new opportunities for companies to differentiate themselves and grow their customer base. Those aren’t new phases of competition so much as brief returns to a previous phase until the playing field is level once more.

If technology infrastructure were required before we could begin competing on application feature/function, and applications were required before we could begin competing on information, then it would seem that the next phase of game-changing corporate competition would be built on top of well-managed information. Personally, I have my eyes on artificial intelligence and machine learning. I think they’re much more than a new application. They’re a completely new kind of competitive advantage.

However, like all the previous phases of competition, each new phase will require a solid mastery of what’s come before. Whatever comes next, you won’t be able to jump on board until you first master competing on information. I can’t predict the future, but I can predict that it’s going to require rock-solid information management.

Recommended Reading:

Competing on Analytics: The New Science of Winning, by Thomas H. Davenport and Jeanne G. Harris, 2007, Harvard Business School Publishing.

Two kinds of people – knowledge sharers versus knowledge hoarders

There are two kinds of people in this world: those who think knowledge is more valuable when shared, and those who think knowledge is more valuable when withheld.

We’ve all seen people who are undisputed experts at what they do, yet still become a serious bottleneck in your organization because they can’t seem to pass their knowledge and experience on to others. They insist that it will be faster for them to do the task themselves than to take the time to teach someone else, especially when the less experienced person will inevitably make mistakes which the more experienced person will then have to spend even more time correcting.

Architects don’t spring forth fully formed from the forehead of God. Every architect started in another role. This is a good thing – you don’t want someone in an architect role who has no real-world experience and expertise. Architects should always be prepared to share their knowledge and experience. The primary responsibility of an architect is creating and communicating a shared vision. It’s a teaching position as much as anything else.

It’s quite common for a developer to do a great job applying their expertise, but be very poor at sharing it. In a developer position, it would certainly be better if your senior resources mentored others, but they can provide value to the company even if they have zero communication skills. But an architect that can’t mentor others isn’t an architect at all – just someone who wants the power and pay grade but isn’t willing or able to do the job.

Some people seem to have more difficulty letting go of control than others. In the end, it may come down to an issue of trust. Do you trust the people who are supposed to do that job to get it done without your help? They may struggle with it and cause you additional work for a while, but eventually they’ll catch on. This is better for everyone. Not only is there more bandwidth and backup for critical functions, but the day-to-day stuff can be handled by the people who are actually being paid to do that job, allowing the architect to focus on the tasks they are being paid to focus on.

For myself, I’m far too easily bored to keep all that knowledge close to the vest. If I don’t make it easy for someone else to do this job, then I’ll be stuck with it forever, and I’ll never get to do the exciting new stuff.

Trends

Before we begin discussing how to manage the business’ information at the enterprise level, it’s important to understand a couple of trends that are shaping how we manage that information.

The coupling of operational and analytical

The functions that comprise the lifecycle of information are, at a high level, the same for both operational systems which process data in many small transactions (Online Transaction Processing, or OLTP) and for analytical data marts and data warehouses that process many rows at a time in a few large batches (Online Analytical Processing, or OLAP). While operational systems are responsible for the day-to-day operations of the business, they are, in the end, little more than data maintenance applications, adding, updating, and deleting records. The resulting data produced by these systems is the raw, unrefined source material for analytical systems.

That sounds as if I’m saying that the operational systems are trivial to develop, and the true work is happening in the analytical systems like the data marts and the data warehouse. In the information domain, there’s some truth to this. Other than the initial data modeling, the bulk of the complexity of developing an operational system lies in the business logic, which is the responsibility of the application domain. Honestly, very few systems have ever been purely operational or analytical. Most of our core operational systems have always had some form of reporting and analytics. In fact, there has been a little-discussed trend in the last fifteen years to break down the barriers between operational and analytical. We’re blurring lines.

Back in the day, there were recognized best practices in place to keep operational systems separate from analytical systems. The operational system was the business. It was dedicated to transactional performance and availability. Analytical systems were seen as just the opposite: poorly performing resource hogs not critical to business operations. All analytical functions were deliberately separated from operational functions so that they didn’t impact the critical business systems. Analytical systems were typically lower tier environments, often without redundancy and failover, with large scheduled maintenance windows when the analytical systems were completely unavailable. The performance expectations were quite low, and the system itself wasn’t considered mission critical. Often analytical systems weren’t even part of the disaster recovery plan because, in the days before competing on information, the business could get along quite easily without analytical platforms until things got back to normal. Because of these factors, you didn’t want your analytical processes to be in the middle of an operational process flow. Operational processes could feed data into an analytical process, but you didn’t want an operational process depending on the output of an analytical process.

But over the years, as companies have had to rely more and more on analytically derived information to compete, the analytical systems have become much more closely coupled with the operational. We no longer deem it poor practice to intertwine the two in the same process flow, provided both are designed with acceptable performance, availability, and latency.

One example of the power of this trend is in making real-time decisions. At the telecom company where I worked as an EA architect, we were investigating the potential of combining operational and analytical information into a real-time offer generation solution. Our enterprise architecture team looked at several commercial products and made a recommendation to the business executives. The solution, with hardware, software, and the development necessary to integrate it into our environment was going to cost upwards of three million dollars. We made a compelling case for the business investment, but before signing the check, the executives wanted us to put together a proof of concept (POC).

At the time, if one of our customers called our automated support line and chose all the correct menu options to navigate to the “I hate your company and want to drop my contract” selection, they got routed to a call center in San Antonio, Texas. In that call center, every time the phone rang it was someone who was in a very bad mood and hated our company. The call center had a very high turnover and none of the operators lasted long enough to be trusted to make decisions about granting bill credits or discounts on a new phone. At best, they could put the customer on hold while they went and asked their supervisor to investigate. At that time, the call center was able to save about 1000 calls per month. “Save” in this context meant that the customer hung up without dropping their service. They might call back tomorrow, but in this call, they were saved.

To demonstrate the power of real-time decisions, we spun up a little project where one Java developer wrote some code in about two weeks to combine analytically derived information like profitability, customer segment, and risk score with real-time information entered by the operator to auto-generate a pre-approved decision. If the customer was calling about a problem with their phone, they’ve been a profitable customer for ten years, and are only two months away from the contract renewal date when we would have given them a brand new phone for renewing their contract, then just give them a new phone! Tell them to go down to the store and pick one out, on you. If they weren’t a profitable customer, then thank them for their business and give them the phone number of our competition.

We defined about five different decision rules for things like billing issues, network problems, and competitor offers. We figured that almost all of these people were going to leave the company anyway, so there was very little risk to implementing the solution. With a development investment of only 80 hours, the POC cost the business very little.

Two months after implementing the POC, the number of saves had increased from 1000 to 11,000 customers a month, almost all of which were permanent saves. This little two-week POC was saving 10,000 additional customers per month! At that time, the way the telecom industry worked was that if a customer signed a two-year contract, the company would subsidize about $300 of the cost of a new phone. The company would go in the hole $300 in order to bring a new customer on board, on the premise that they’ll remain a customer long enough to eventually break even and become profitable. There are lots of subtle costs for replacing lost customers, but at the very least, it was going to cost that $300. So saving 10,000 customers a month translated to an easily demonstrated reduction in $3 million dollars in expenses – every month! All for a POC developed by one developer in two weeks. This is the power of combining operational and analytical information. This is competing on information.

We got the funding. Nevertheless, they never did let us take down that little POC. It became a permanent application, with many enhancements over the years.

In the discussion of information lifecycle functions later in this book, we’re going to combine the discussion of operational and analytical functions. For the information lifecycle, the functions you perform on analytical data are a superset of functions you perform on operational data, so the discussion may appear a little analytics-heavy. Get used to it. If you haven’t realized it yet, go back and read about the three phases of competition again (page 91). The entire IT industry is racing to compete on information. Knowledge is power, my friend!

Data monetization

Data monetization has been gaining more and more attention in recent years, changing the way we think about and manage information assets. InformationWeek (January 2016) claims that, for many companies, data is their single most valuable asset.17 If so, then how much is data worth? If data is a corporate asset, does it belong on the financial statement alongside hardware assets and cash reserves?

There’s no doubt that information has monetary value. Companies buy and sell information every day. There are very successful companies whose entire business model is based on buying and selling information.

Unfortunately, Generally Accepted Accounting Principles (GAAP) doesn’t currently specify how to measure the value of information as a corporate asset. However, while there’s no standard, there’s also no specific ruling against the practice, and many companies are already listing information as an asset on their corporate balance sheets.

If we treated data as a corporate asset in the same sense as financial assets, the business would be much more concerned with where data assets were located and who was using them for what purposes. How are we investing in those assets to improve their value? How are we leveraging those assets to increase revenue, reduce expenses, and grow the company? Data monetization is changing the way we think about information assets and how we manage information-related IT functions.

The companies that are changing the way we do business are the ones who are thinking about data differently, such as Google, Amazon, and Facebook. It isn’t a matter of collecting data for data’s sake. It’s a matter of understanding the problems facing the business, and knowing how to use information to solve those problems. Information only has value to the degree that it impacts your company. If it isn’t being used by the business, information has no actual value.

In the context of this book, in order to efficiently and effectively manage the complexity of IT functionality on behalf of the business, it’s important that you realize that the business’ data is a valuable asset that IT is helping to manage. The functional framework will help us to not forget the information lifecycle functions in the press of other demands.

Unfortunately, for most organizations, making information a true corporate asset is a major cultural shift. But that shift is coming whether you’re ready for it or not. An IT organization that is truly serving its business will help, not hinder this transformational shift.

Information lifecycle functions

In the last couple of decades, most companies have realized the strategic importance of being able to use their data competitively and are trying to put together an information management program for the enterprise. Frankly, companies who haven’t realized the importance of their information aren’t surviving. Information management “is the gas powering the operating model’s engine, enabling organizations to more effectively communicate and reach their specific goals.”18

The information domain of the functional framework addresses the infrastructure necessary to compete on information, breaking down the complexities of managing information into a series of simple functions.

At the detail level, there’s no one functional framework that can be dropped in to every corporation’s information management program. Different organizations will have different drivers for data security, accuracy, latency, and for how information is delivered. They’ll have different requirements for data volume and performance. Different organization will begin their journey with the components of their functional framework at different levels of maturity. In most cases, the real world includes accommodating a legacy infrastructure that will impact one or more components of the functional framework for years to come.

Throughout the remainder of this book, in the discussion of all three IT domains, I am not attempting to replace or even summarize the many very fine resources available on each separate IT function. Instead, we’ll discuss these functions from the standpoint of how they must be coordinated and configured in order to fit into the overall vision of a company’s IT management program. We have to assume you already know how to perform these functions. This book will focus on the higher-level task of integrating these practices with other functions in a larger picture that spans functions and spans departments across your organization in a coordinated enterprise strategy for managing IT complexity, as dictated by your functional framework. Knowing how to perform a function within the scope of one information system is one thing. It’s another thing to manage that function across information systems, and integrate that function into other functions. The rest of this book will focus on these latter challenges.

In one sense, the central purpose of this book has already been achieved – the introduction of the functional framework. The rest of this book is really just my attempt to highlight some of the areas where I think IT is struggling today, and illustrate by example how the functional framework helps clarify and organize the thought process, simplifying the process of controlling the chaos that is IT.

So, let’s talk about the information domain of the framework. First, what’s the best way to organize information management functions? Or, for that matter, the best way to organize functions within any domain of the framework? We want a framework that checks the following boxes:

- A framework that is reduced to an easily graspable concept. Everyone in the organization should be able to easily sketch and understand the EAG napkin drawing.

- A framework that is flexible enough to adapt to changing requirements, best practices, and technology.

- A framework whose organization that reflects the real-world, to instill confidence that the framework encompasses the complete picture. The framework shouldn’t look as if someone loaded a random list of functions into a shotgun and blasted them onto a wall. The organization should reflect some tangible, familiar concept. The organization should be meaningful.

You can use any organization of information management functions you want, but I find that the most easily grasped, flexible, comprehensive organization is to arrange the information domain functions so that they reflect the management of an information lifecycle. Just like hardware and software assets, information has a lifecycle that begins with creation/acquisition, and includes integration, inventory, and management of updates, security, and so forth, until the information asset reaches an end-of-life.

If you had organized your information functions alphabetically, the organization wouldn’t be a reflection of the real world. There’s no way you could be confident that you had captured all the functions, and if you did later discover a function you had missed, it could just as easily go in several different places in the organization depending which of several interchangeable words you used to describe it. The information lifecycle organization, however, is an easily grasped reflection of the real world. Looking at the organization, any big gaps would be readily apparent, and the proper place of any missing function would be pretty obvious. That’s the kind of organization structure we are looking for.

The information domain functions don’t just happen – you have to manage them as an information lifecycle. How? You manage them by setting up that lifecycle of functions in your functional framework.

Before we go much further, let’s dig a little deeper into the meaning of “information” that we touched on briefly earlier in this chapter. What is this information we are managing? Information comes in many flavors. One categorization that’s going to be very useful for the purpose of the discussion in this book is the following breakdown:

Raw data is the names, addresses, account numbers, purchases, etc. that you manipulate during the normal course of operating your business. It’s the core information you collect, generate, and maintain in order to operate your business every day. It doesn’t include data collected purely for analytical purposes.

Contextual data is information that puts the raw data in context. This information is often purchased (i.e. retail demographics), but it can also be collected directly. Like raw data, contextual data is just basic facts. The main difference is that contextual information isn’t critical to running your business; it’s critical to running the business smarter and more efficiently.

An insurance company wouldn’t normally need to know a person’s education level in order to pay an insurance claim. Education level just adds context that the business finds useful, perhaps as a predictor of lifestyle or attitude. For the insurance industry, then, education level would be contextual information, not raw operational data. For a university, a person’s education level may indeed be raw data needed to run their business, perhaps a pre-requisite to certain courses. Gender may be core business data to a health insurance company, necessary to pay certain types of claims, but gender may be contextual data at a banking institution, whose checking and savings products are not gender specific.

Contextual data is appended to raw data, but isn’t derived from it. Things like income level, marital status, education level, age, and gender are contextual if they aren’t included in your operational raw business data, and have to be acquired and appended.

Knowledge is information that is derived from the raw and contextual information. Customer profitability scores, churn risk scores, and customer segmentation. An enterprise-wide unique person identifier generated by a CDI/MDM (Customer Data Integration/Master Data Management) tool would be derived knowledge.

Decisions are actions taken based upon information. Examples of decisions include generated customer offers, equipment renewal orders, automated account blocks for suspected fraud, or patient risk-based outreach lists. Decisions are actions based upon raw data, contextual data, and knowledge. We then feed the results of these decisions into applications such as vendor order management processes or employee work queues.

The information lifecycle function Enhancing information (page 135) will discuss these different types of information in more detail, but I wanted to introduce them now because the terms are necessary to the discussion of the modeling and consuming information functions.

Modeling information

If you’ve reached the maturity level where your corporation is competing on information, then it’s not your servers or your software that define your company; it’s your information and what you do with it. The two most important assets your company has are its employees and its information. Vendors can replace applications and technology, but they can’t replace your accumulated corporate information assets.

When introducing the information domain earlier, I said that I would be giving it the most attention of the three EAG IT domains, because, despite thirty years of best practice insisting that IT architecture and governance must begin with information management, it’s the least-well managed domain within most companies today. I’m going to spend most of the discussion of the information domain talking about data modeling for the same reason. You can’t manage what you aren’t tracking. If you want to manage enterprise information, you have to understand it, and that begins with data modeling.

Previously, when discussing data monetization, we talked about information assets being as important as financial assets. Just as you would for your financial assets, you want to keep track of your information assets: where they are, how they are being used, which are returning value, and who has access to them. Tracking your information assets begins with data modeling.

Data modeling is the name given to the processes and techniques that are used to document information assets. Modern data modeling techniques have been around since 1970. They bring clarity and understanding to the information structures within database repositories and web services, and optimize those structures for efficient support of information access.

This book isn’t intended to teach or replace any of those techniques. Instead, this is a discussion of where those data modeling techniques fit into your overall program to manage IT complexity at the enterprise level. Assuming you know the techniques of data modeling, what else do you need to be thinking about when you ramp data modeling up from a project or departmental effort to an enterprise-wide function, part of a holistic effort to manage all aspects of your IT infrastructure across the enterprise?

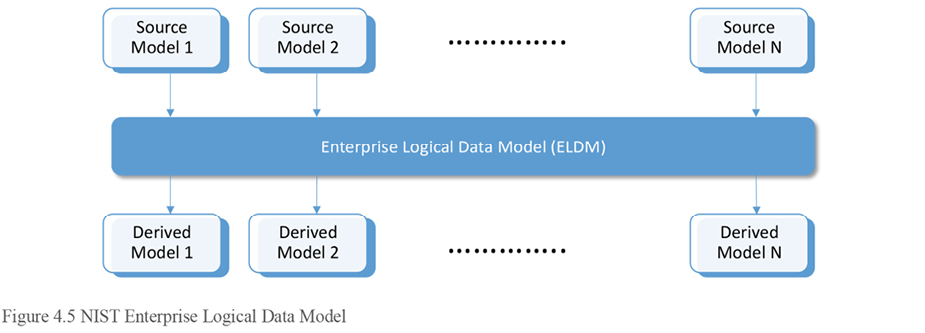

In 1989, The National Institute of Standards and Technology (NIST) introduced the idea of an Enterprise Logical Data Model (ELDM). The ELDM wasn’t about making a data model for any one repository. Instead, it was about building a conceptual data model for all the information across the entire enterprise. The ELDM was the centerpiece of NIST’s concept of a three-tiered modeling approach.

In this approach, the enterprise data architect would first gather all of the pre-existing source data models, including both internal and external applications. From those disparate sources, and from discussions with the business regarding how they conceptualize the company’s information assets, one master data model of the company’s information would be created and maintained.

The ELDM was never designed to be a physical repository. Its purpose isn’t focused on a single project or information system, but on the enterprise. It’s a single data model that shows how the business understands the entities and attributes in single holistic picture, as if all the applications shared one, single, all-encompassing enterprise database. The intent was that this ELDW would let you design one logical model making modeling decisions in one place rather than repeatedly in each repository.

If you try to manage all the different data models in your company separately, it’s simply too overwhelming, and conceptual differences will be introduced that will cause ongoing problems until they’re resolved. By creating one master data model in one place, when you need to implement a model for a project or system, you simply derive it from the ELDM, copying out the subset that you need. There are some segments of our industry today which call the ELDM a Target Information Model (or TIM), but I think that’s misleading, as the intention is for it to become the source, from which many targets are instantiated. I prefer the original ELDM terminology. Of course, the sources and targets of the three-tier NIST model are source and targets only in terms of model building, not information flow.

These derived models will still need modification in order to support application-specific data needs. However, we can do most of the business-related modeling in one consistent model, using consistent terminology, relationships, constraints, and reference data.

Of course, creating a master model of the entire enterprise is a huge task, one that would take many years to complete. This dedicated effort is unlikely to ever be justified in terms of business value. The architectural goal is an enterprise logical model. b Raw data is the names, addresses, account numbers, and purchases that you collect during the normal course of operating your business. It’s the core information you collect, generate, and maintain in order to operate your business every data. It doesn’t include data collected purely for analytical purposes.

Contextual data is information that puts the raw data in context. This information is often purchased (i.e. retail demographics), but it can also be collected directly. Like raw data, contextual data is just basic facts. The main difference is that contextual information isn’t critical to running your business; it’s critical to running the business smarter and more efficiently. But like all strategic goals, we implement that goal over time, in a series of stages consisting of smaller projects that align with business priorities and provide immediate value. Start your enterprise logical model with the entities needed by active business projects, and add to it over time. Like all of IT, the ELDM should only be implemented as it provides real business value, not as an end in itself.

In a company where the technology domain dominates the decision-making, you may find that the data modeling function is the responsibility of the technology team DBAs. There’s no real thought to enterprise data modeling. The modeling that does occur is very technology-centric, with a scope suited to the technology domain focus on server performance, efficiency of disk space utilization, etc. The technology domain isn’t focused on enterprise information management; they are focused on managing pieces of technology.

In other cases, people only model data within the scope of an application development project. In these cases, data may be modeled by the application developer. However, application developers are also not focused on information. Their focus, and rightly so, is on the implementation of business logic in their applications. They aren’t going to make the information easy to manage; they are going to make the applications easy to write. Document databases like MongoDB are especially well adapted to this mentality.

But enterprise information architecture isn’t supposed to be a sub-function of any of the other domains of enterprise architecture. It requires the attention of resources with specific training and a focus on information assets.

I’ve been a fan of IBM’s Rational Data Architect (now renamed Infosphere Data Architect) for many years. From the beginning it had these features:

- The ability to work with multiple data models at once, rather than individually, in isolation.

- Two completely different models could be mapped to each other. This let you reverse engineer the repositories in your legacy sources into models within the tool, and, when you built the enterprise logical data model (ELDM) in another model, document which element in the ELDM model maps to which element in each of these information system models. Between derived models and mapped models, all of your information across the enterprise can map to a single ELDM. The tool didn’t work with a single model at a time, but allowed you to work, simultaneously, with all of the models and with the relationships between them. You could easily use this to trace inheritance back to see where specific ELDM attributes originated.

- Likewise, when you derive new implementation models from the ELDM, the tool could document how they map, so that all the data elements these derived models tie back to the same master ELDM information concepts. You could perform impact analysis when you make a conceptual change to the ELDM in order to identify potential impacts in the derived information system repositories.

- The tool could be used to generate both DDL (for implementing physical repositories) and XML (for implementing data services), resulting in consistency of information representation and exchange throughout the enterprise. It was designed from the beginning to exchange these structures with the application development tools and the technology team database management tools using the Zachman model artifacts.

- The data model could be shared between multiple data modelers and data architects. Most major modeling tools these days have the capability of allowing multiple modelers to work on the same model at the same time, but this is usually separately-purchased functionality. Typically, this works like a code repository, where the modeler makes changes locally, then checks them in to a central repository, where they are merged and distributed.

When this suite of products first came out, it was the only major data modeling tool to have these features. Since that time, other data modeling tools are also adopting more of an enterprise data modeling approach, allowing the modelers to work with multiple models at once, coordinating, and mapping the data attributes across them.

These functions separate an application-level or project-level data modeling tool from an enterprise-level data modeling tool. If you want to implement an information management program to help your enterprise manage complexity, you have to start thinking at the enterprise level of abstraction, eliminating silos of redundant, inconsistent data modeling effort. If you aren’t managing your enterprise data with an enterprise logical data model, then you’ll be overwhelmed by the effort of trying to manually:

- Maintain consistency across physical repositories

- Document the data dictionary over and over within each of your information system models

- Make the same modeling decisions over and over again within each of your information system data models

- Deal with inconsistent, locally-managed reference data

- Maintain consistency across information exchange structures, and consistency between exchange structures and the physical repositories.

- Attempt to manage data at the enterprise level using tools that manage it at the application level.

The data modeling function must integrate with the:

- Data quality function, so that new entities and attributes created in the model can also be added to the metadata repository

- Security function, to identify sensitive data elements consistently across information systems

- Software development functions, to coordinate and simplify the integration of information domain data structures and application domain business logic

The functional framework is a great tool for considering one IT function in light of all the others.

Big data

A big data repository, also known as a data lake, is an array of servers working together as a single distributed solution, using very low cost, commodity hardware and software. It can be used to store both structured and unstructured data. You can use many different tools to manipulate data in a data lake. Some of these tools are similar to tools for relational databases. Other tools are unique to data lakes. Big data repositories are finding their way into more and more business solutions as the products continue to mature.

Why mention big data in a chapter on data modeling? Because big data repositories (by their very design) don’t require data modeling. When the data is initially inserted into a relational database, the data structure must be defined. With a big data repository, modelers just pour raw data into the lake. It’s up to the consumer to interpret the data when the data is read. We describe this difference as “schema-on-insert” versus “schema-on-read.” This difference will impact your long-term vision for enterprise data modeling (and information delivery, data quality, and data security).

There are pros and cons to both approaches. Relational databases typically run on much more expensive hardware and software, and require significant time and development effort to integrate new types of data. However, once the data is loaded into a well-designed data model, it has a great deal of integrity and can be safely, easily and efficiently consumed by end users who aren’t necessarily data experts using many off-the-shelf business tools.

A data lake, on the other hand, is orders of magnitude faster to on-board new types of data. The cost to the business of adding new data is very low, and the time required is almost negligible. One of the major challenges of such a solution, however, is how well the data can be trusted and how easily it can be integrated. It’s up to the user to collect, collate, and interpret the raw data. It is unwise to turn the average end-user loose in a data lake for ad-hoc reporting. When users who are not data experts use poor-quality data to make business decisions, there exists serious risk.

Think of the differences between the two as the differences between using an encyclopedia and using the internet when writing a scholarly paper about an historical event. The encyclopedia set is vetted by experts, but has limited information. It can take years to add new information. On the other hand, lots of lay people (who are not experts) are constantly adding things to the internet. The internet has a lot more information, is almost constantly updated, and it costs a lot less than an encyclopedia, but you really have to use a lot more judgment when dealing with the information. You have to know how much you can trust the different sources it contains and may need to work harder to integrate the different styles of content.

Big data solutions like data lakes are growing more and more broadly useful each year. If you aren’t working with them already, you will be soon. It’s an amazingly useful technology, but I don’t think big data is ever going to completely replace relational databases, at least in the foreseeable future. Relational databases are simply too useful at cleansing, analyzing, and reporting data with defined attribute properties and relationships.

The following two business cases for big data are common, given the current state of technology:

- Big data can be used to offload the kinds of work that relational databases don’t do well, such as working with free-form text or very sparsely populated, dynamically defined data elements. Relational databases do many things very well, but they don’t do everything. There have always been some types of transforms and types of analysis that are better done outside of a database, and the results plugged back in when the outsourced work is done. There have always been some kinds of data that are difficult to store in a rigid schema. Analysis of free form text or of data streams that dynamically define new elements on the fly is better done in a big data solution.

- Big data can serve as a fast-to-fail proof of concept (POC) environment for testing new ideas without going through the delays and overhead of moving data that may never prove useful into a more rigorously designed relational repository. If the idea proves valuable in the POC, then it can be hardened with the data model and data quality inherently found in a relational database. Big data can be a development sandbox for expert business users to trial new ideas. Just be aware that you need to limit the users to those who truly understand the data, and limit the rollout to the development environment only, not to production.

Today, relational databases are the clear default for supporting business solutions, with data lakes being used primarily for tasks that relational databases don’t do well. Some industry experts foresee data lakes gradually becoming more and more the default platform for data storage. Vendors who sell applications for big data platforms certainly have a vested interest in convincing you that this is true. These pundits believe that there’ll be a tipping point where data lakes will become the default, and relational databases will only be used for the things that data lakes don’t do well. Moreover, the list of things that data lakes do well is growing every year. Even heavy-duty statistical analysis tools such as SA, R, and MADlib already support Hadoop big data natively.

However, my opinion is that the future will be less a choice between the two technologies than a merging of the two technologies. One of the primary challenges of using a data lake in a production environment is the lack of a defined schema. We’re already seeing products like Hive allow the imposition of relational database constraints on data lakes, blurring the distinction between the two. More than likely, big data and relational database technologies will merge over time, with the result containing features of both.

It wouldn’t be unusual for database vendors to adopt and incorporate emerging technologies. Several modern relational databases have the following features:

- Can be configured as either Symmetric Multi-Processing (SMP) or Massively Parallel Processing (MPP)

- Can have some tables in the repository defined as row-based and other tables as columnar

- Can leverage solid state disks and in-memory implementations

- Are rolling out implementations that leverage arrays of low-cost commodity servers

The major relational database vendors have consistently realized that no one underlying technology is going to be ideal for all purposes. Rather than lose revenue to another vendor, these databases assimilate the technological advances into their own infrastructure. If big-name database manufacturers begin losing significant revenue to big data solutions, how long do you think it will take them to begin incorporating big data functionality? I predict that relational databases will expand their infrastructure once again to include big data functionality, just as we are seeing big data expand its functionality to include things like relational table modeling. As the two technologies grow, I believe they will inevitably merge. I predict that all the major database vendors like Oracle and IBM will be purchasing big data companies and their intellectual property within the next five years, in the same way they’ve acquired and incorporated columnar, in memory, and XML web service technology. In the future, I predict that some data will be schema-less, and other data in the same repositories will have all the formal constraints of today’s relational databases.

I also predict that the current big data hype will deflate as it comes face-to-face with the cold, hard reality of supporting large-scale, real-world business systems rather than niche solutions targeted for the sweet spot of the current state of big data technology. As more relational capability is adopted by big data platforms, the commodity nodes in a data lake will function very much like nodes in a relational MPP environment such as Teradata, Netezza, or DB2 DPF . As an MPP database solution, data lakes will need to find a way to overcome the same kinds of challenges that MPP relational databases face. An MPP solution is excellent at operational and analytical operations over a single table and over tables where related data can be guaranteed to be collocated on the same node. However, the solutions don’t perform nearly as well in situations where data in two different tables that aren’t co-located must be united (see in depth discussion of this in the hardware domain chapter, page 234). Other emerging technologies that distribute data over an array of servers (including NoSQL databases such as MongoDB) have this same co-location challenge, making the technology difficult to use for large-scale enterprise analytical applications.

Regardless of what component lies at the center of your infrastructure, both big data and relational databases are here for the foreseeable future. You need to determine at the enterprise level how they will be used. What are your policies for big data? How do you secure sensitive data in a schema-less data lake? Perhaps the most important to deal with immediately, what kind of review and approval is needed before standing up a data lake? I’ve seen individual departments stand up data lake and NoSQL solutions with no other justification than that the solution let the application development teams bypass the use of DBAs and data architects entirely, and let their developers create corporate applications without any information management oversight. Get a handle on your big data strategy before it becomes a problem, or it’ll quickly be out of your control.

Zones of information within an enterprise repository

Most application repositories, whether operational or analytical, have several zones of information, though they may not be formally recognized as such. Figure 4.6 demonstrates one way in which information zones within a single repository might be defined in order to create policies and standards specific to different types of information.

You can use this same classification in repositories across the enterprise, allowing you to make your architectural and governance much simpler and more consistent. Thinking about information zones in this way allows you to make fewer, broader, more consistent decisions about architectural vision and governance standards, resulting in reduced complexity, greater uniformity, and increased efficiency.

The following table contains a few simple policies, showing how the division of information into zones reduces the amount of documentation and ensures consistency.

If you set up your architecture and governance around these information zones, then you have far fewer decisions to make than if you made your decisions table by table, repository by repository, and the result will be more consistent across the enterprise. With clear, simple guidelines, your development teams will have far fewer questions, and will tend to do the right thing without much oversight.

Layers of information across enterprise repositories

There is a sense in which data flows through these zones, from temp/working zones, to operational zones, to analytical zones. These zones don’t exist solely within a repository; to some degree, they exist across the information infrastructure of the larger enterprise.

Data flows from repository to repository, being consumed, transformed, and enhanced along the way. In a large organization, there can be thousands of databases. Managing that complexity is impossible without some sort of organization – some way of grouping them into categories that can be managed similarly. You need a napkin drawing that explains your enterprise data repository strategy in a few simple terms. Unlike the application and technology domains, few companies have an enterprise-wide strategy for managing information. Oh, they manage information, but often this happens more intuitively than systematically.

Figure 4.7 represents an enterprise-wide organization of information structures. Note that this is the structure of information repositories, not the structure of application software or the hardware that supports them.

There are several layers of repositories in the conceptual picture. At the top are your operational repositories, where the raw data that runs the business is created and maintained. Beneath that are two transport layers for exchanging data between information systems. The first transport layer is the enterprise bus, over which data is exchanged using real-time web service transactions. The second is the secure file transport area, through which batch data is exchanged using files. At the bottom of the picture are the analytical repositories, the data marts, and the data warehouse.

The advantage of thinking of your company’s information assets in these layers is, again, that you can make broad decisions for each layer, rather than have to plan and document repository by repository. In order to simplify and reduce the complexity of managing the company’s IT functions, the EAG architectural vision for the information modeling function in the information domain of the functional framework should include some such high-level information model. All the repositories should be classified by layer, and all the tables within each repository classified by information zone, such as user-facing tables, reference tables, and temp tables.

The next few sections will discuss these information asset layers in a little more detail.

Operational layer

Operational Repositories contain the raw transactional data that runs the enterprise. This is the information created and maintained by customer systems, account systems, web portals, HR systems, and so forth. These contain business data that is maintained, for the most part, by inserting, updating, and deleting a single record at a time. From a data management standpoint, it doesn’t matter if these repositories are within your firewall or are cloud-based services. These repositories are the System of Record (SoR) for the business data they create and maintain. In an integrated IT infrastructure, information systems will need access to all of the enterprise business data spread across dozens of applications, each of which is the system of record for some subset of the ELDM.

What you do not want to do is distribute complete copies of data throughout the enterprise so that each operational layer information system has its own local copy. This wastes disk space, network bandwidth, and developer time. It causes synchronization and data integrity issues. Each local copy of the data is another potential security breach, and another component that must be managed in a disaster recovery environment.

Instead, the information in these operational repositories should be exposed through service interfaces to your application infrastructure, a practice known as Data as a Service (DaaS). These data services allow the data to be loosely coupled, meaning that the data could be accessed in the same manner by any application that called the correct services interface, and that the application could access any data, provided the data was exposed using the same services interface. Loosely coupled data makes your infrastructure more flexible, easier to enhance, and faster to adapt to changing requirements. Loosely coupled data can be moved to a different data storage technology, or even to the cloud with no impact, so long as the service interface, security, and performance are sufficient. Any application you build or buy today should include the capacity to expose data through transactional web services. Applications should expose data not only internally to their own business logic, but also externally to the rest of the corporate infrastructure. Build or buy with this extra volume requirement in mind.

In some legacy software, the system data is housed in a proprietary storage format or is constrained in some other way such that it can’t be accessed directly even though it is the system of record. In those cases only, you may be forced to stand up an Operational Data Store (ODS) – a copy of your source data with as little latency as possible, which can be used in turn to provide DaaS to the rest of your application infrastructure. The ODS serves as the services interface that the application is unable to provide. When the system forces you to set up an operational data store, that ODS becomes the System of Access (SoA) for operational reads, but the OLTP repository is still the system of record. An ODS typically only exposes read-only services to other applications. Any maintenance of the data must go back through the real source system, after which it will naturally flow down and update the ODS. Since most intra-application DaaS service calls are select statements, this approach works well for most business needs.

When evaluating any new third party software, one of the information domain requirements should be that the application exposes its data externally through services. No new application you build or buy should require an operational data store in order for other applications to access its information via data services. An ODS is only justified for legacy software in your infrastructure. Figure 4.8 shows an ODS in every operational information system. Hopefully, this won’t be the case in your environment!

Information exchange layer

Ideally, information is exchanged between applications in only two ways:

- On a transactional basis, via data services

- In bulk, through secure file transfer

Your enterprise service bus coordinates the exchange of services. The secure file transfer area coordinates the exchange of files.

No single project is likely to want to bear the expense of setting up these areas of your information exchange layer. The cost and complexity is simply too much for one project to bear. Your EAG team will have to build this layer. This may be one of those cases where the EAG team must go request money for an IT-centric project. However, the information exchange layer isn’t a case of IT wanting to play with the latest toys. These information transport areas provide significant value to the business in the long term.

Enterprise Service Bus

An Enterprise Service Bus (ESB) is a means of passing a very high volume of small chunks of transactional data through your network in real time, without having to stage data through files. ESB’s support both software as a service (SaaS) and data as a service (DaaS).

It’s possible to implement services without an ESB using a point-to-point architecture. While better than no services interface at all, a point-to-point architecture forces the source and target to handle a great deal of the overhead of configuring connection points, authenticating the service, orchestrating services into larger business processes and translating from one service protocol to another. You may implement point-to-point as a tactical step toward your strategic vision for managing your corporation’s information assets, but you should make plans to move to a service bus as soon as it can be justified. The business isn’t paying IT’s salary so IT can develop software in-house that could have been purchased off the shelf for less money with more features.

Ideally, in a Services Oriented Architecture (SOA), all data will be accessed via services. This is true even for a software application accessing its own data inside the information system.

Accessing data via services is what creates the loosely coupled architecture that insulates the applications from changes to the repository implementation and vice versa. If a new field is added to a table, applications can still read data with the old service. Likewise, applications can be upgraded or replaced with limited impact, as long as the new/updated application supports the same data service interfaces. For example, you may initially develop a customer data hub in-house to assign a master ID to every customer . As long as all of your operational systems access that information via services, you may one day be able to replace that homegrown functionality with a commercial product, provided you can translate your homegrown interface services to and from those required by the commercial software.

DaaS Services developed for one use are immediately available for any other information system that needs the same data. DaaS services initially developed for the web presentation business logic can be reused by the mobile device presentation logic. This speeds development time and reduces testing.

Data within these services should be modeled on your ELDM model. In a third-party product with a proprietary data model, the data services within the application will reflect the native third-party model. But in this case those data services should still be translated to reflect your ELDM before exposing that information outside the system. Your data modeling tool used to generate the ELDM should be able to generate both DDL to build physical repositories and XML to build data services. This way, you design the information once in the ELDM, then, from that ELDM model, generate consistent representations for various subsets of information at rest and in motion throughout the enterprise.

Most ESB appliance solutions today have the capacity to translate service protocols, for example, from SOAP to REST. One information system can expose data in one format, and, through the ESB, another information system can consume the data in another format. From an information management standpoint, one of the most useful ESB translations is the ability to take a database-stored procedure and translate that procedure name, input, and output into SOAP or REST services. This is a very useful approach for several reasons:

- You don’t have to stand up dedicated web servers to support Java DaaS services developed in-house. You can create a simple stored procedure in the database and let the ESB translation tool expose it as a service. Fewer components means the solution is easier to manage, faster, and your disaster recovery plan is simpler.

- By forcing database access to go through stored procedures, you’re limiting the type of data access that can be done, mitigating the risk of inappropriate access. You can grant a user access to a stored procedure without granting them access to the data in the repository that the stored procedure accesses.

- The stored procedure can be developed to include embedded logging, insuring that there’s an audit trail for all service-based data access. Auditors like an architecture where data can’t be accessed without generating an audit trail.

- When you alter the database structure, you use the same people and tools to modify table structures and to modify the stored procedure. This minimizes the number of resources needed to implement the change. You don’t have to get a DBA and a Java developer to make the database change and the associated service change.

There are several considerations to keep in mind when building data services. Data services should be quite small in size. I once worked at a company with a very powerful ESB infrastructure, yet the system was always bogged down, performing poorly because the developers had coded a one-stop shop “get me everything I could possibly need” service, so that they only had to call a single get_account_info( ) service. Unfortunately, this account information included all the customer information, all the product information, and all the transaction information, all in one multi-megabyte service.