7

Design of Stretched Cluster on VxRail

In the previous chapter, you learned about the design of the VxRail vSAN Standard and two-node cluster, including the network, hardware, and software requirements, and some failure scenarios. When you plan to design a disaster recovery solution or a small-sized VMware environment, the VxRail vSAN two-node cluster is a great choice.

Disaster recovery is a critical factor for all systems and virtual infrastructures. VxRail Appliance delivers different disaster recovery solutions. VxRail Stretched Cluster is one of these options; it can provide the synchronous replication of data across two sites located at separate geographical locations. This solution allows the management of the failure of an entire site and supports the different Failure Tolerance Methods (FTMs). In this chapter, you will see an overview of VxRail Stretched Cluster, the planning and designing of VxRail Stretched Cluster, and the different failure scenarios.

This chapter includes the following main topics:

- Overview of VxRail Stretched Cluster

- Design of VxRail Stretched Cluster

- A scenario using VxRail Stretched Cluster

- Failure scenarios of VxRail Stretched Cluster

Overview of VxRail Stretched Cluster

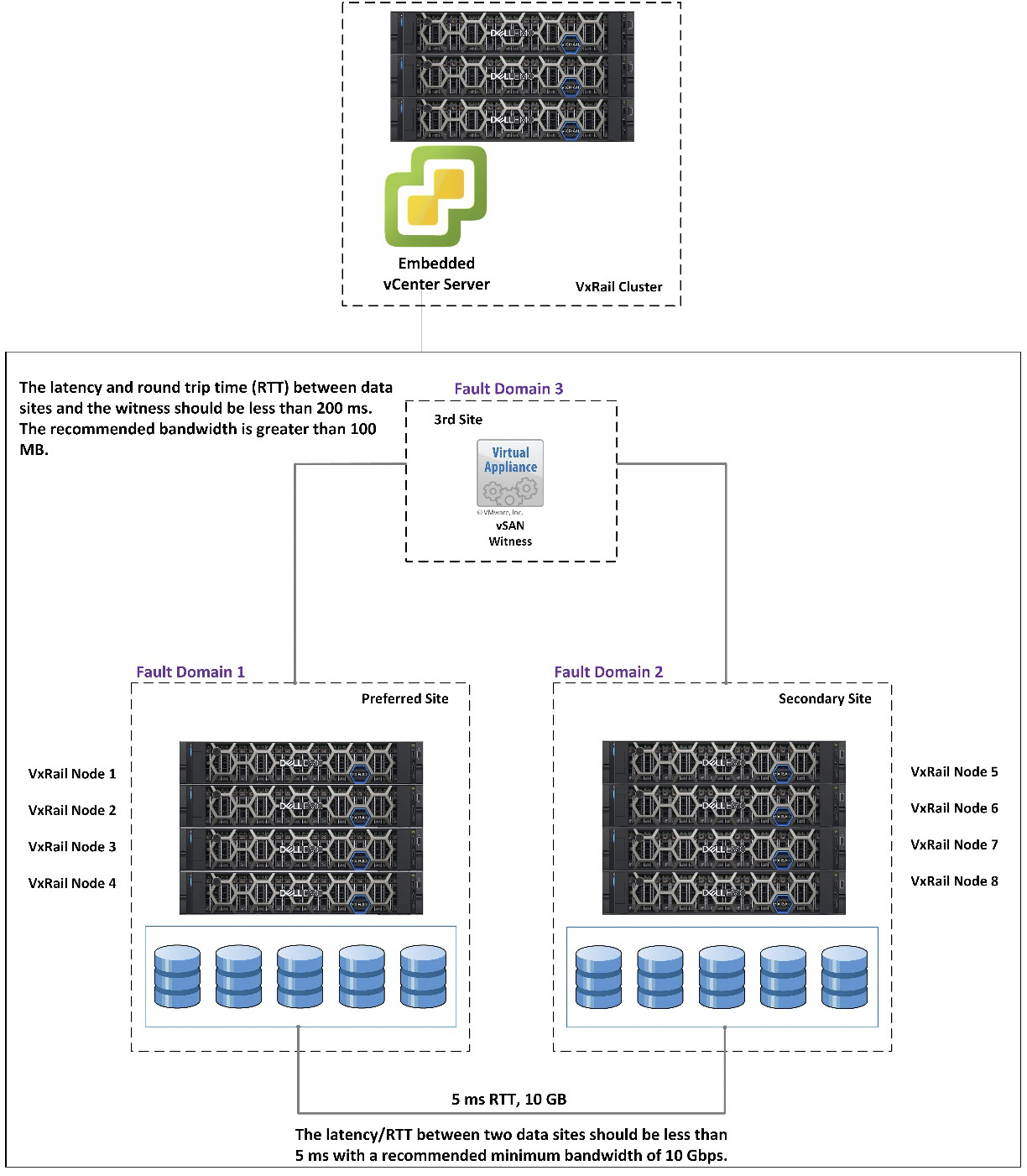

VxRail Stretched Cluster is designed for an active-active data center solution, and it can provide redundancy and failure protection across two separate physical sites. In Figure 7.1, VxRail Stretched Cluster is built across three sites: two data sites (Preferred Site and Secondary Site) and one witness site. The witness host is deployed at the third site and holds the witness components of the VM objects. The VMs can continue to provide services when one of the data sites fails, which is the main feature of VxRail Stretched Cluster:

Figure 7.1 – VxRail Stretched Cluster with eight nodes

If you choose the deployment of VxRail Stretched Cluster, you need to consider the following:

- The minimum supported configuration for VxRail Stretched Cluster is 1+1+1 (two VxRail nodes plus one witness). The maximum supported configuration is 15+15+1 (30 VxRail nodes plus 1 witness).

- VxRail Stretched Cluster must be deployed across two individual physical sites in an active-active configuration.

- Starting from VxRail version 4.7.0, the configurations of 2+2+1 (four VxRail nodes plus one witness) are supported.

- Starting from VxRail version 7.0, either a VxRail vCenter Server or a customer-supplied vCenter Server instance can be used for VxRail Stretched Cluster, but a customer-supplied vCenter Server instance is recommended.

- The vSAN witness appliance or a physical ESXi host can be used as a witness. The vSAN witness appliance includes a vSphere license. The physical host requires a vSphere license.

- The vSAN witness cannot be a part of VxRail Stretched Cluster. Also, it must have one VMkernel adapter with vSAN traffic enabled and connected to all nodes in VxRail Stretched Cluster. The witness uses a separate VMkernel adapter for management traffic.

- Both Layer 2 and Layer 3 are supported for the vSAN connectivity between the data nodes across the two sites. Static routes are required for Layer 3 (routed) connectivity; they are not required when the vSAN network is stretched at Layer 2.

- The maximum supported Round Trip Time (RTT) between the data nodes and the vSAN witness is 200 milliseconds. For configurations up to 10+10+1, an RTT less than or equal to 200 milliseconds is acceptable. For configurations larger than 10+10+1, an RTT less than or equal to 100 milliseconds is required.

- The maximum supported RTT among the data nodes is 5 milliseconds.

- A bandwidth of 10 Gbps between the data sites is recommended.

- A bandwidth of 100 Mbps between the data sites and the witness site is recommended.
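The node-count and latency limits in the preceding list can be expressed as a simple pre-deployment check. The following is a minimal sketch, not a Dell or VMware tool; the `StretchedClusterDesign` class and `check_design` function are hypothetical names introduced here for illustration, and only the limits stated above are checked:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StretchedClusterDesign:
    preferred_nodes: int      # data nodes in the preferred site
    secondary_nodes: int      # data nodes in the secondary site
    data_site_rtt_ms: float   # RTT between the two data sites
    witness_rtt_ms: float     # RTT between the data nodes and the vSAN witness


def check_design(design: StretchedClusterDesign) -> List[str]:
    """Return the violations of the limits listed above (empty if none)."""
    problems = []
    for site, count in (("preferred", design.preferred_nodes),
                        ("secondary", design.secondary_nodes)):
        # Supported range is 1+1+1 up to 15+15+1.
        if not 1 <= count <= 15:
            problems.append(f"{site} site has {count} nodes; supported range is 1 to 15")
    if design.data_site_rtt_ms > 5:
        problems.append("RTT between data sites exceeds 5 ms")
    # Up to 10+10+1 tolerates 200 ms to the witness; larger clusters need 100 ms.
    largest_site = max(design.preferred_nodes, design.secondary_nodes)
    witness_limit_ms = 200 if largest_site <= 10 else 100
    if design.witness_rtt_ms > witness_limit_ms:
        problems.append(f"RTT to witness exceeds {witness_limit_ms} ms")
    return problems


print(check_design(StretchedClusterDesign(4, 4, 2.0, 150.0)))    # no violations
print(check_design(StretchedClusterDesign(12, 12, 2.0, 150.0)))  # witness RTT too high
```

A 4+4+1 design with a 150 ms witness RTT passes, while the same RTT on a 12+12+1 design is flagged because the stricter 100 ms limit applies.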

Next, let’s look at its architecture.

The architecture of VxRail Stretched Cluster

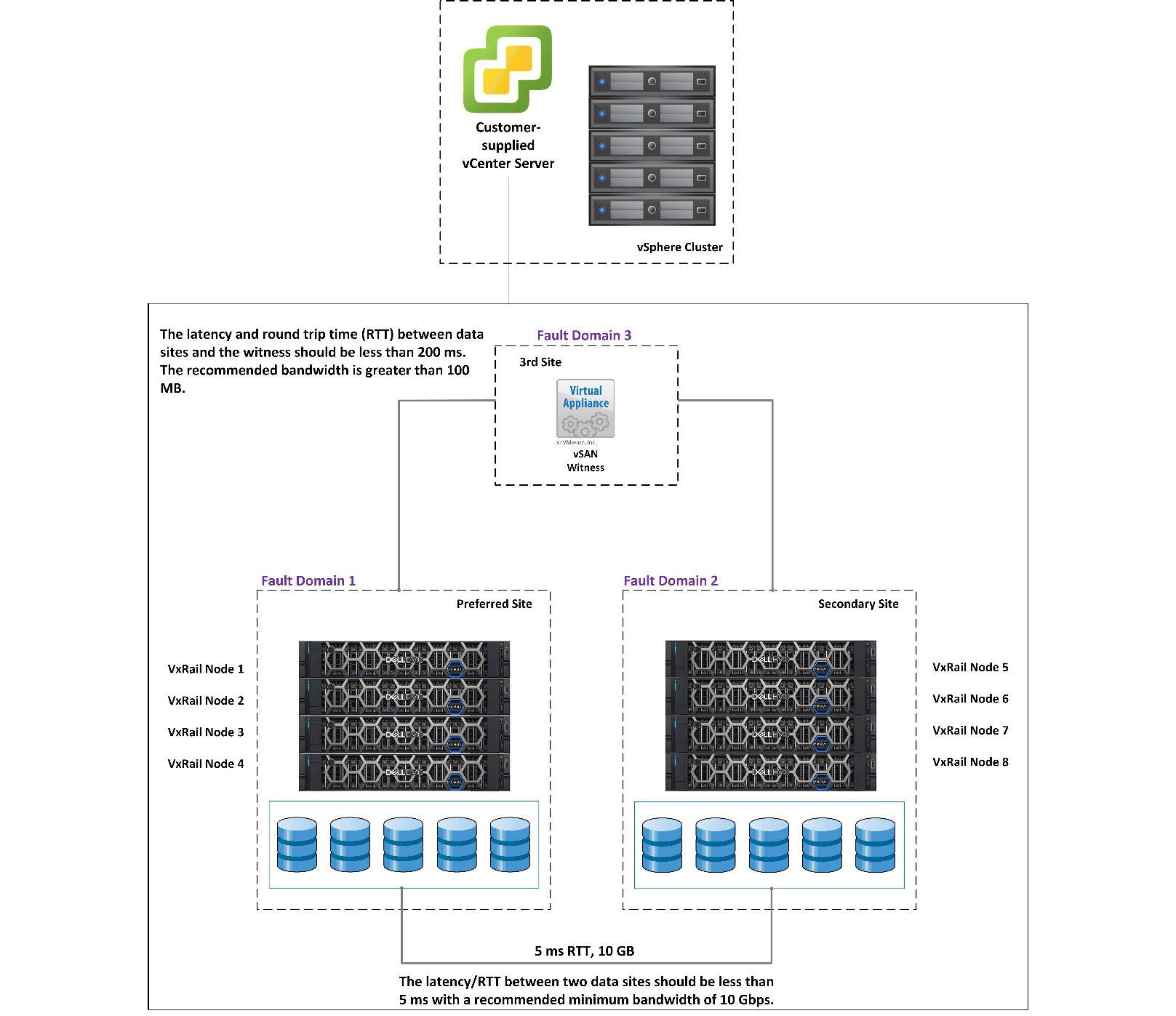

The architecture of VxRail Stretched Cluster includes the data nodes and one witness node. In Figure 7.2, each site is configured as a Fault Domain (FD); VxRail nodes 1, 2, 3, and 4 are assigned to Fault Domain 1 (preferred), VxRail nodes 5, 6, 7, and 8 are assigned to Fault Domain 2 (secondary), and the vSAN witness is assigned to Fault Domain 3. The cluster supports three types of site disaster tolerance: dual-site mirroring, keeping data in the preferred site, and keeping data in the secondary site:

Figure 7.2 – Three FDs of VxRail Stretched Cluster

When three FDs are available and the Failures to Tolerate (FTT) parameter is set to 1 failure - RAID-1 (Mirroring), a VM created on VxRail Stretched Cluster has mirror protection: one copy of the data is placed on FD 1 and the second copy is placed on FD 2. The witness component is placed on the vSAN witness.
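The reason for placing a witness component in a third FD is quorum: vSAN keeps an object accessible only while a full replica and more than half of the object's votes are reachable. The following simplified sketch illustrates this for the three-FD layout described above. It assumes one vote per component, which is a simplification; the function name `object_accessible` is hypothetical:

```python
def object_accessible(available: set) -> bool:
    """Simplified quorum check for a RAID-1 object in a stretched cluster.

    `available` is the set of fault domains that can still reach each other.
    Components: one replica in "FD1", one replica in "FD2", and one witness
    component in "witness". Assumption: each component carries one vote.
    """
    votes = {"FD1": 1, "FD2": 1, "witness": 1}
    replicas = {"FD1", "FD2"}
    available_votes = sum(votes[fd] for fd in available)
    has_full_replica = bool(available & replicas)
    # Accessible only with a full replica and a strict majority of votes.
    return has_full_replica and available_votes * 2 > sum(votes.values())


print(object_accessible({"FD1", "witness"}))  # True: one site lost
print(object_accessible({"FD1", "FD2"}))      # True: witness lost
print(object_accessible({"FD1"}))             # False: no majority
```

Under this model, losing any single FD leaves the object accessible, which matches the behavior in the failure scenarios later in this chapter.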

The next section will discuss the types of site disaster tolerance for VxRail Stretched Cluster.

Site disaster tolerance

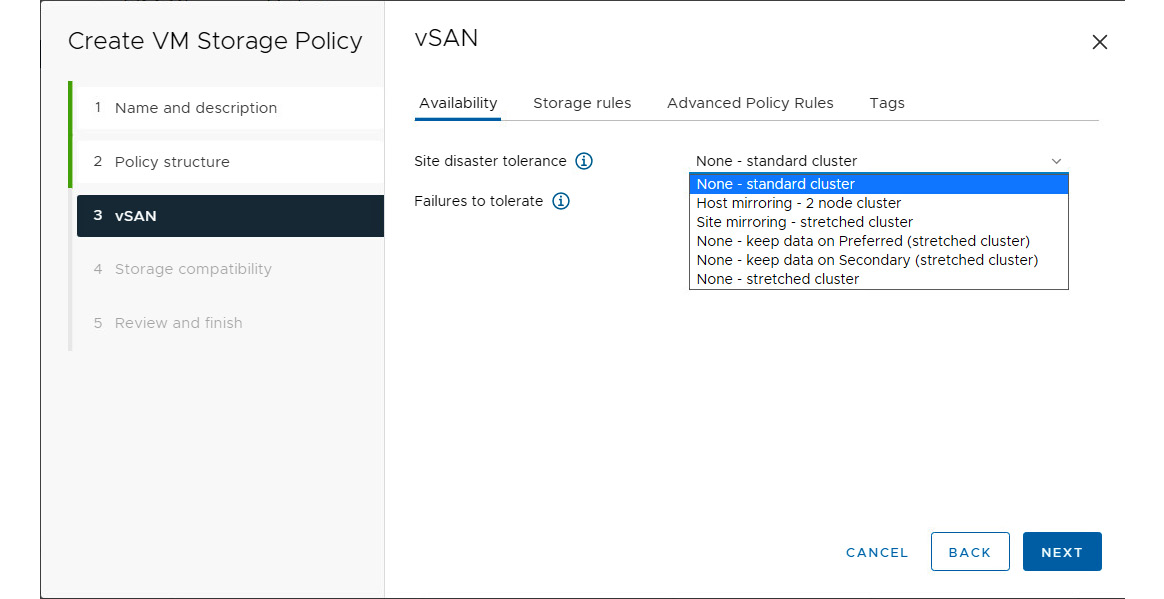

When you create a storage policy for VxRail Stretched Cluster, two parameters are required: site disaster tolerance and FTT. For VxRail Stretched Cluster, you can choose the following options from the Site disaster tolerance menu:

- Site mirroring - stretched cluster: This enables data protection across the preferred and secondary sites

- None - keep data on Preferred (stretched cluster): This keeps the data on the preferred site only and there is no cross-site protection

- None - keep data on Secondary (stretched cluster): This keeps the data on the secondary site only and there is no cross-site protection

If you do not deploy VxRail Stretched Cluster, you can choose the following options from the Site disaster tolerance menu:

- None - standard cluster: This is used to deploy a standalone VxRail cluster

- Host mirroring - 2 node cluster: This is used to deploy the VxRail vSAN two-node cluster

- None - stretched cluster: This is used to deploy the non-stretched cluster

You can see an overview of these parameters in Figure 7.3:

Figure 7.3 – Site disaster tolerance parameters

The next section will discuss the Failures to tolerate options for VxRail Stretched Cluster.

Failures to tolerate

VxRail Stretched Cluster can support different types of protection; these parameters are used to define the protection methodologies. You can select the following options from the Failures to tolerate menu:

Figure 7.4 – Failures to tolerate parameters

Here are brief descriptions of what these options mean:

- No data redundancy: No data protection in either the preferred or the secondary site.

- No data redundancy with host affinity: No data protection in the preferred site or secondary site; data placement is controlled by host affinity rules.

- 1 failure - RAID-1 (Mirroring): A mirrored volume that gives good performance and full redundancy, with 200% capacity usage per site (400% when mirrored across the preferred and secondary sites).

- 1 failure - RAID-5 (Erasure Coding): A striped volume with parity. It has good performance with redundancy and requires a minimum of four nodes per site.

- 2 failures - RAID-1 (Mirroring): A mirrored volume that gives good performance and full redundancy, with 300% capacity usage per site (600% when mirrored across the preferred and secondary sites).

- 2 failures - RAID-6 (Erasure Coding): A striped volume with double parity. It has good performance with redundancy and requires a minimum of six nodes per site.

- 3 failures - RAID-1 (Mirroring): A mirrored volume that gives good performance and full redundancy, with 400% capacity usage per site (800% when mirrored across the preferred and secondary sites).

The following sections will discuss sample configurations of a VM storage policy in VxRail Stretched Cluster, including non-dual-site mirroring, RAID-1, and RAID-5 configurations.

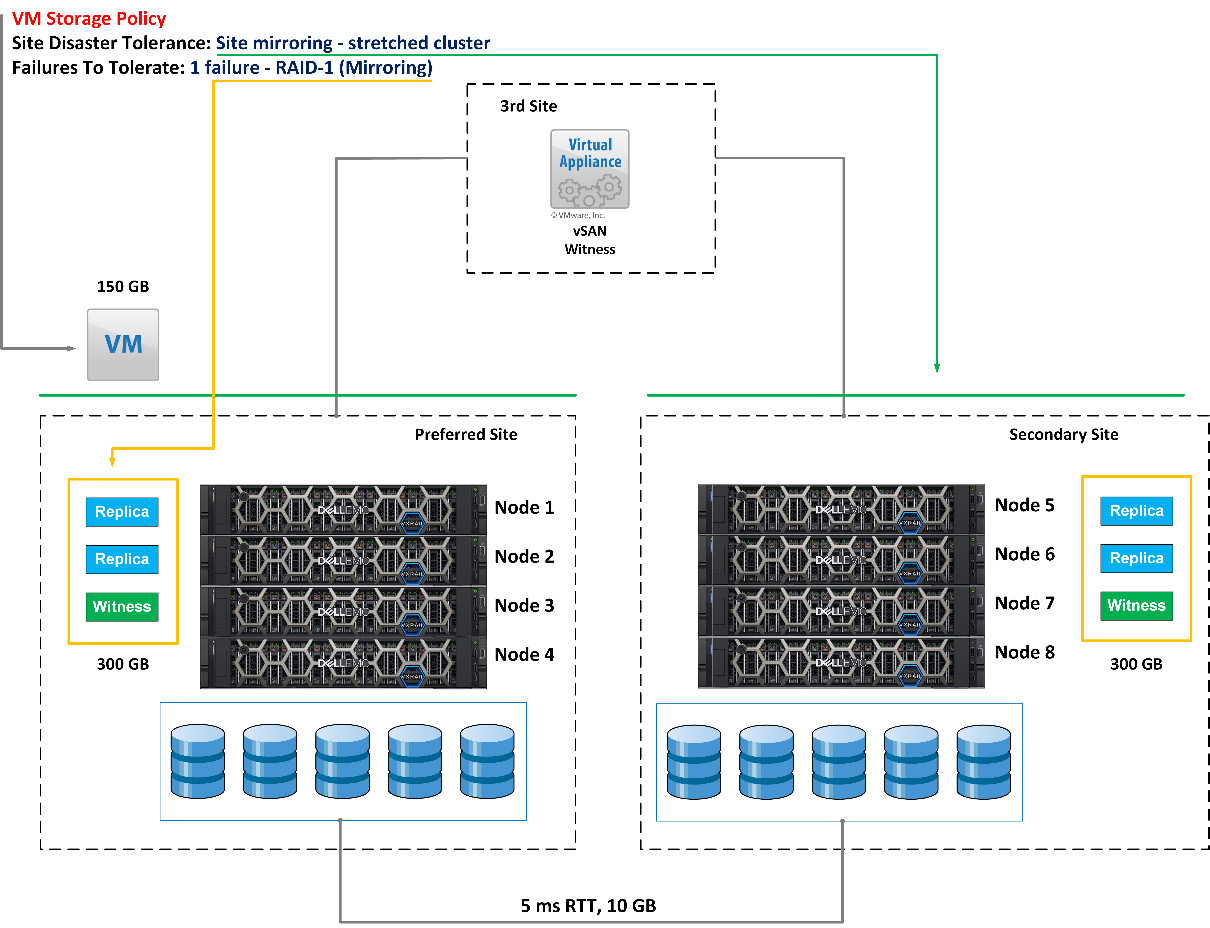

Site mirroring with RAID-1

Figure 7.5 shows VxRail Stretched Cluster with eight nodes; nodes 1, 2, 3, and 4 are installed in the preferred site and nodes 5, 6, 7, and 8 are installed in the secondary site. One VM (100 GB storage) is running in the preferred site, and a VM storage policy with the following parameters is applied to this VM:

- Site Disaster Tolerance: Site mirroring - stretched cluster

- Failures To Tolerate: 1 failure - RAID-1 (Mirroring)

Figure 7.5 – Site mirroring with RAID-1 in VxRail Stretched Cluster

In the example of Figure 7.5, because the Site Disaster Tolerance parameter is set to Site mirroring - stretched cluster, the VM is protected by RAID-1 mirroring across the preferred and secondary sites. Because the Failures to tolerate parameter is set to 1 failure - RAID-1 (Mirroring), the VM is also protected by RAID-1 mirroring within each site.

With this VM storage policy, a full replica of the VM remains available if one of the sites fails, and a full data copy remains available within each site if one of its four nodes fails. This option delivers site resilience plus mirroring protection per site, but it consumes four times the storage capacity of the protected VM. In this example, the storage capacity of the VM is 100 GB, so it requires 400 GB of storage capacity in VxRail Stretched Cluster when you apply this storage policy to the VM. In the next section, we will discuss site mirroring with RAID-5 protection in VxRail Stretched Cluster across two sites.

Site mirroring with RAID-5

Figure 7.6 shows VxRail Stretched Cluster with eight nodes; nodes 1, 2, 3, and 4 are installed in the preferred site and nodes 5, 6, 7, and 8 are installed in the secondary site. One VM (100 GB storage) is running in the preferred site, and a VM storage policy with the following parameters is applied to this VM:

- Site Disaster Tolerance: Site mirroring - stretched cluster

- Failures To Tolerate: 1 failure - RAID-5 (Erasure Coding)

Figure 7.6 – Site mirroring with RAID-5 in VxRail Stretched Cluster

In this example, because the Site Disaster Tolerance parameter is set to Site mirroring - stretched cluster, the VM is protected by RAID-1 mirroring across the preferred and secondary sites. Because the Failures To Tolerate parameter is set to 1 failure - RAID-5 (Erasure Coding), the VM is also protected by RAID-5 within each site.

With these VM storage policy settings, a full replica of the VM remains available if one of the sites fails, and the VM keeps RAID-5 protection within each site if one of its four nodes fails. This option delivers site resilience plus RAID-5 protection per site, and it consumes 2.66 times the storage capacity of the protected VM. In this example, the storage capacity of the VM is 100 GB, so it requires 266 GB of storage capacity in VxRail Stretched Cluster when you apply this VM storage policy to the VM. Compared to the example in Figure 7.5, the storage capacity requirement is lower than with RAID-1 mirroring. In the next section, we will discuss site affinity rules in VxRail Stretched Cluster across two sites.

Important note

RAID-5 (Erasure Coding) and RAID-6 (Erasure Coding) are supported only on VxRail All-Flash Stretched Cluster.

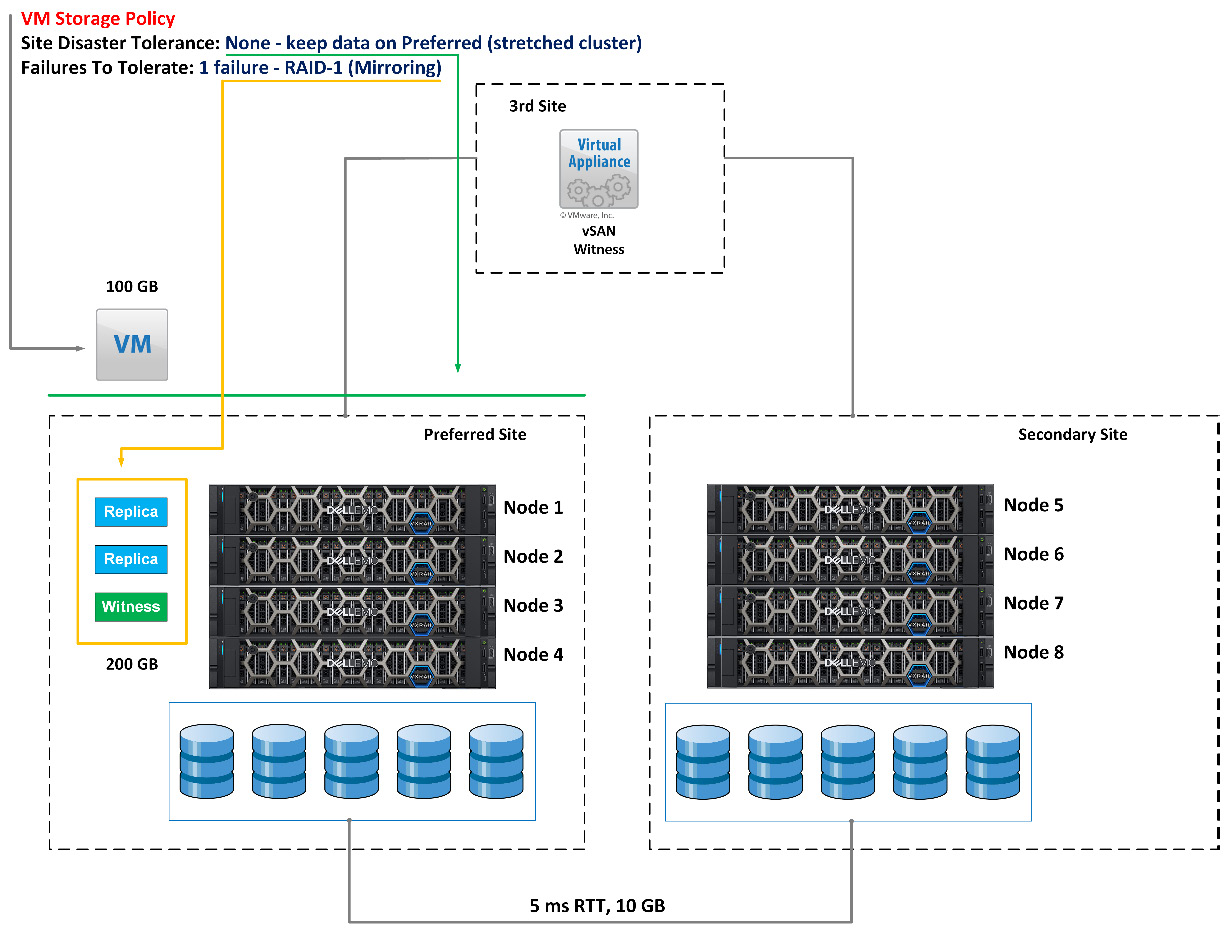

Keeping the data in the preferred site

Figure 7.7 shows VxRail Stretched Cluster with eight nodes; nodes 1, 2, 3, and 4 are installed in the preferred site and nodes 5, 6, 7, and 8 are installed in the secondary site. One VM (100 GB storage) is running in the preferred site, and a VM storage policy with the following parameters is applied to this VM:

- Site Disaster Tolerance: None - keep data on Preferred (stretched cluster)

- Failures To Tolerate: 1 failure - RAID-1 (Mirroring)

Figure 7.7 – Site affinity rules in VxRail Stretched Cluster

In this example, because the Site Disaster Tolerance parameter is set to None - keep data on Preferred (stretched cluster), the VM's data is stored only in the preferred site. Because the Failures To Tolerate parameter is set to 1 failure - RAID-1 (Mirroring), the VM is also protected by RAID-1 within the preferred site.

With these VM storage policy settings, a full data copy of the VM is stored on the nodes in the preferred site, and the VM keeps RAID-1 protection if one of the four nodes in the preferred site fails. This option does not deliver site resilience; it only provides RAID-1 protection in one site based on the affinity rule settings, and it consumes twice the storage capacity of the protected VM. In this example, the storage capacity of the VM is 100 GB, so it requires 200 GB of storage capacity in VxRail Stretched Cluster when you apply this VM storage policy to the VM. Compared to the examples in Figure 7.5 and Figure 7.6, the storage capacity requirement is lower in this scenario. The preceding examples help you understand VM storage policies in VxRail Stretched Cluster.

Table 7.1 shows a summary of all VM storage policy settings in VxRail Stretched Cluster, assuming the VM’s storage capacity is 100 GB:

| Site Availability | Capacity Requirement in Preferred Site | Capacity Requirement in Secondary Site | Total Capacity Requirement |
| --- | --- | --- | --- |
| Dual-site mirroring without redundancy | 100 GB | 100 GB | 200 GB (2x) |
| Dual-site mirroring with RAID-1 (one failure) | 200 GB | 200 GB | 400 GB (4x) |
| Dual-site mirroring with RAID-1 (two failures) | 300 GB | 300 GB | 600 GB (6x) |
| Dual-site mirroring with RAID-1 (three failures) | 400 GB | 400 GB | 800 GB (8x) |
| Dual-site mirroring with RAID-5 (one failure) | 133 GB | 133 GB | 266 GB (2.66x) |
| Dual-site mirroring with RAID-6 (two failures) | 150 GB | 150 GB | 300 GB (3x) |
| Preferred site only with RAID-1 (one failure) | 200 GB | 0 | 200 GB (2x) |
| Preferred site only with RAID-1 (two failures) | 300 GB | 0 | 300 GB (3x) |
| Preferred site only with RAID-1 (three failures) | 400 GB | 0 | 400 GB (4x) |
| Preferred site only with RAID-5 (one failure) | 133 GB | 0 | 133 GB (1.3x) |
| Preferred site only with RAID-6 (two failures) | 150 GB | 0 | 150 GB (1.5x) |
| Secondary site only with RAID-1 (one failure) | 0 | 200 GB | 200 GB (2x) |
| Secondary site only with RAID-1 (two failures) | 0 | 300 GB | 300 GB (3x) |
| Secondary site only with RAID-1 (three failures) | 0 | 400 GB | 400 GB (4x) |
| Secondary site only with RAID-5 (one failure) | 0 | 133 GB | 133 GB (1.3x) |
| Secondary site only with RAID-6 (two failures) | 0 | 150 GB | 150 GB (1.5x) |

Table 7.1 – Summary of all VM storage policy settings in VxRail Stretched Cluster
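The values in Table 7.1 follow one rule: multiply the VM size by a per-site factor for the chosen Failures to tolerate option, then double the result when site mirroring is enabled. The following sketch reproduces that arithmetic. The dictionary keys and the function name `required_capacity_gb` are hypothetical labels chosen for this example, and the calculation ignores any additional vSAN overhead:

```python
# Per-site capacity multiplier for each Failures to tolerate option,
# derived from Table 7.1 (RAID-5 is 4/3, which the table truncates to 133 GB).
PER_SITE_MULTIPLIER = {
    "none": 1.0,
    "raid1_ftt1": 2.0,
    "raid1_ftt2": 3.0,
    "raid1_ftt3": 4.0,
    "raid5_ftt1": 4 / 3,
    "raid6_ftt2": 1.5,
}


def required_capacity_gb(vm_size_gb: float, protection: str, site_mirroring: bool) -> float:
    """Return the total capacity a VM consumes across the stretched cluster."""
    per_site = vm_size_gb * PER_SITE_MULTIPLIER[protection]
    sites = 2 if site_mirroring else 1  # site mirroring keeps a copy in both sites
    return per_site * sites


print(required_capacity_gb(100, "raid1_ftt1", site_mirroring=True))        # 400.0
print(int(required_capacity_gb(100, "raid5_ftt1", site_mirroring=True)))   # 266
print(required_capacity_gb(100, "raid6_ftt2", site_mirroring=False))       # 150.0
```

The same function can be used to size a different VM by changing the first argument.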

The following section will discuss the design of VxRail Stretched Cluster, including Witness Traffic Separation (WTS) and vCenter Server options.

Design of VxRail Stretched Cluster

When you design VxRail Stretched Cluster, network infrastructure is an important factor for its deployment. This section will discuss WTS, which is an optional network configuration, and compare VxRail Stretched Cluster with and without WTS. WTS provides a flexible network configuration by separating the vSAN traffic between data nodes from the traffic between the data nodes and the witness. All operational tasks of VxRail Stretched Cluster are performed through a vCenter Server instance, which supports two deployment options. The following sections will discuss the design of WTS and the VMware vCenter Server deployment options.

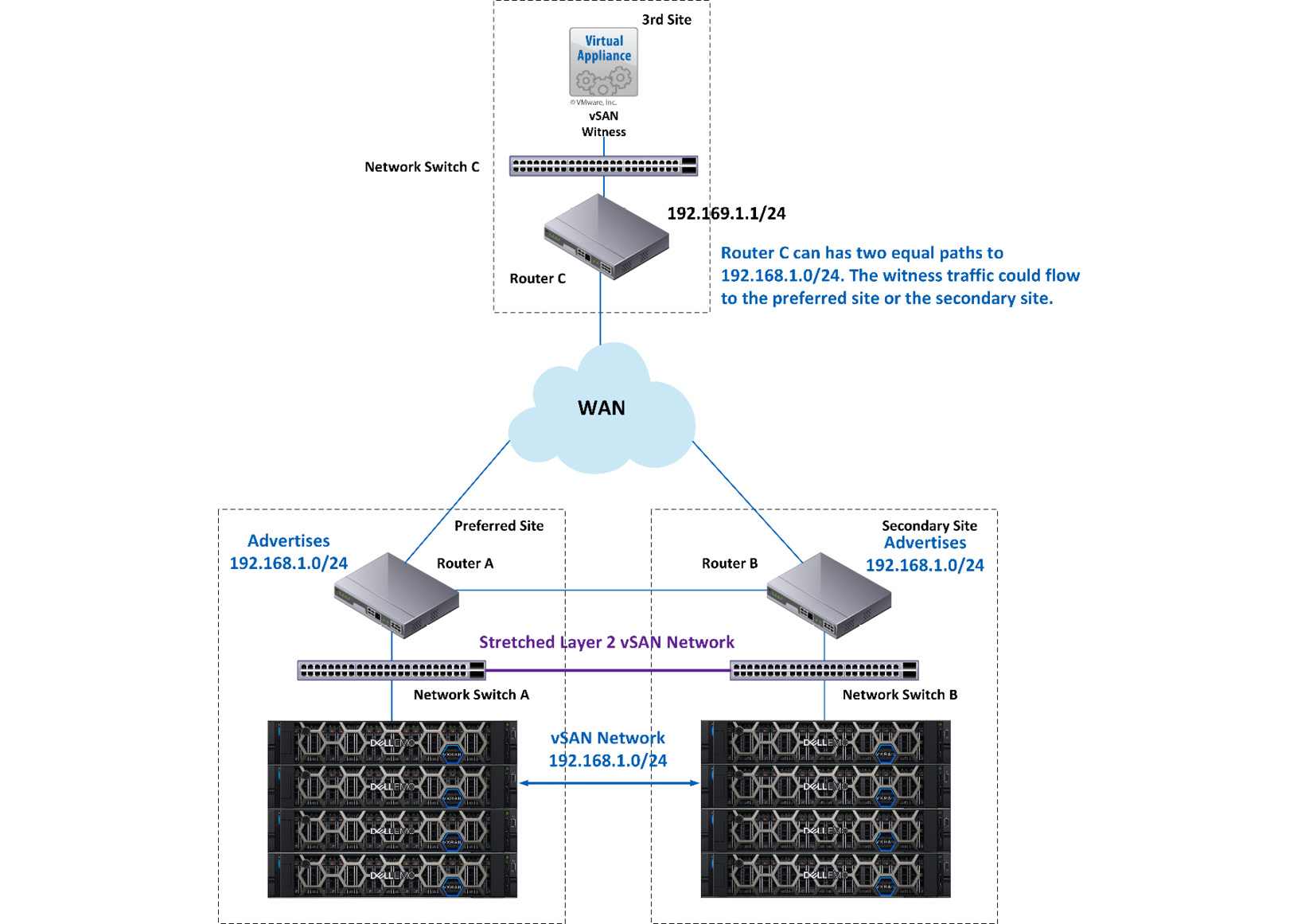

VxRail Stretched Cluster without WTS

Figure 7.8 shows a standard VxRail Stretched Cluster configuration without WTS. There are four data nodes installed in each of the preferred and secondary sites. The data nodes are connected through a stretched Layer 2 vSAN network across the preferred and secondary sites. The vSAN communication between the data sites and the witness is routed over Layer 3 to the third site. In this configuration, the data nodes use a static route from the vSAN VMkernel interface to the vSAN witness VMkernel interface that is tagged for vSAN traffic:

Figure 7.8 – VxRail Stretched Cluster without WTS

In this configuration, the witness traffic cannot be separated from the vSAN traffic. The witness traffic can flow to either the preferred or secondary site through the 192.168.1.0/24 network. This configuration is not recommended: Router C in the third site has two equal-cost paths to the 192.168.1.0/24 network, so traffic returning from the third site may arrive at a different data site than the one that sent it. This can cause issues if stateful firewall rules exist on each site. VxRail Stretched Cluster with WTS is recommended, which will be discussed in the following section.

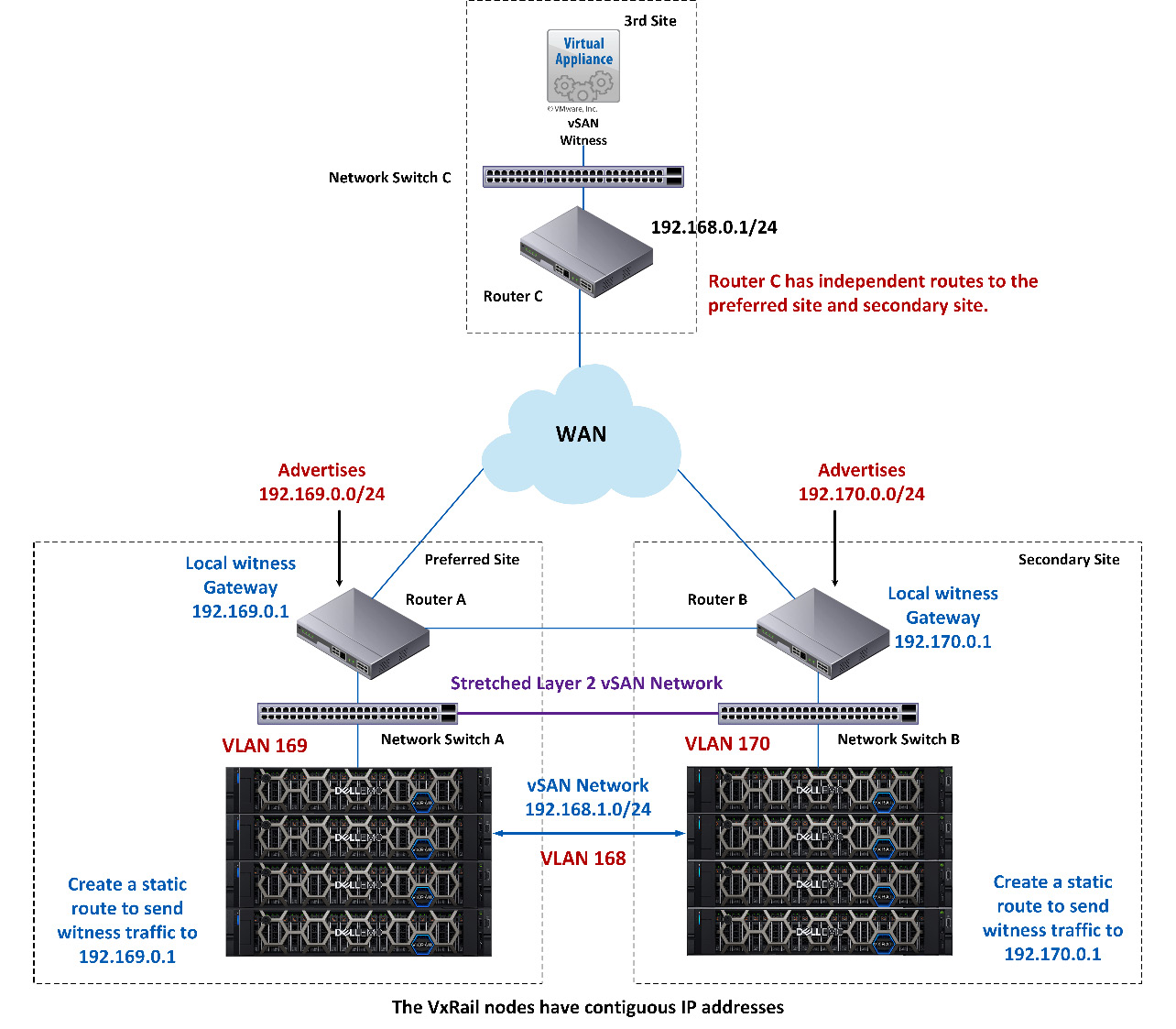

VxRail Stretched Cluster with WTS

Now, we will discuss VxRail Stretched Cluster with WTS. Figure 7.9 shows a standard VxRail Stretched Cluster configuration with WTS:

Figure 7.9 – VxRail Stretched Cluster with WTS

In this configuration, you need to create a distributed port group tagged as VLAN 169 in the preferred site and a port group tagged as VLAN 170 in the secondary site. You also create a new witness VMkernel interface on each data node in each site. Each site uses its local witness gateway to route the witness traffic from its data nodes to the vSAN witness. The preferred site advertises the 192.169.0.0/24 network to the third site, and the secondary site advertises the 192.170.0.0/24 network to the third site. You can then create static routes on the data nodes to send and receive witness traffic between the data nodes and the vSAN witness. With this configuration, the witness traffic is on a separate VLAN from the vSAN network, the third site has an independent route to each of the preferred and secondary sites, and you can use contiguous vSAN IP addresses on the data nodes across the preferred and secondary sites.
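On each data node, the WTS configuration described above comes down to two ESXi shell commands: one that tags the new VMkernel interface for witness traffic and one that adds the static route to the witness network. The following sketch only builds the command strings so that they can be reviewed before use; it does not run them. The witness network (192.168.110.0/24), the gateway address (192.169.0.1), and the interface name `vmk5` are assumptions for illustration, and `wts_commands` is a hypothetical helper name:

```python
import ipaddress


def wts_commands(witness_vmk, witness_network, local_gateway, site_witness_subnet):
    """Build the ESXi shell commands for one data node (nothing is executed)."""
    network = ipaddress.ip_network(witness_network)
    gateway = ipaddress.ip_address(local_gateway)
    # The local witness gateway must sit on the site's own witness subnet.
    if gateway not in ipaddress.ip_network(site_witness_subnet):
        raise ValueError("gateway must be on the site's witness subnet")
    return [
        # Tag the VMkernel interface for witness traffic.
        f"esxcli vsan network ip add -i {witness_vmk} -T=witness",
        # Route traffic for the witness network through the local witness gateway.
        f"esxcli network ip route ipv4 add -n {network} -g {gateway}",
    ]


# Preferred-site data node; all addresses except the site subnet are assumed values.
for command in wts_commands("vmk5", "192.168.110.0/24", "192.169.0.1", "192.169.0.0/24"):
    print(command)
```

For a secondary-site node, the same helper would be called with a gateway on the 192.170.0.0/24 network.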

With the preceding two examples, you will now understand the difference between VxRail Stretched Cluster with WTS and without WTS.

The following section will discuss the design of the embedded vCenter Server instance and customer-supplied vCenter Server instance.

VMware vCenter Server options

During the deployment of VxRail Stretched Cluster, we have two options for the vCenter Server instance—that is, embedded vCenter Server (internal vCenter Server) and customer-supplied vCenter Server (external vCenter Server). An embedded vCenter Server is deployed on the VxRail cluster during VxRail’s initial installation and a customer-supplied vCenter Server is deployed in your VMware environment. Customer-supplied vCenter Server is the recommended configuration.

In Figure 7.10, VxRail Stretched Cluster is managed by an embedded vCenter Server instance. In this deployment, the embedded vCenter Server instance can only manage a VxRail cluster, for example, a VxRail standard cluster, VxRail vSAN two-node cluster, or VxRail Stretched Cluster. With this deployment, the vCenter Server instance is also covered by VxRail lifecycle management. Starting with VxRail 7.0, either an embedded vCenter Server or a customer-supplied vCenter Server can be used for VxRail Stretched Cluster.

Figure 7.10 shows VxRail Stretched Cluster with an embedded vCenter Server instance:

Figure 7.10 – VxRail Stretched Cluster with an embedded vCenter Server instance

In Figure 7.11, VxRail Stretched Cluster is managed by a customer-supplied vCenter Server instance. In this deployment, the customer-supplied vCenter Server instance can manage both a VxRail cluster and a non-VxRail cluster—for example, a VxRail standard cluster, a vSphere cluster, or a VxRail Stretched Cluster. If you are using this deployment, it has the following advantages:

- It can provide central management of VxRail clusters and non-VxRail clusters.

- You can easily migrate the VM’s workload between the VxRail cluster and the non-VxRail cluster.

- It can manage different types of VxRail clusters—that is, a VxRail All-Flash cluster and a hybrid cluster.

- Each VxRail cluster with a customer-supplied vCenter Server instance has its own VxRail Manager.

Figure 7.11 shows VxRail Stretched Cluster with the customer-supplied vCenter Server instance:

Figure 7.11 – VxRail Stretched Cluster with the customer-supplied vCenter Server instance

With Figure 7.10 and Figure 7.11, you now understand the differences between both vCenter Server instances. Table 7.2 shows a comparison of vCenter features:

| Feature | Embedded vCenter Server | Customer-supplied vCenter Server |
| --- | --- | --- |
| vCenter Server License | The vCenter Server Standard license is bundled with VxRail Appliance. | It requires the optional vCenter Server Standard license. |
| Compatibility | It is bundled with VxRail software that is compatible with VxRail Manager and vSphere software. | You need to verify the VxRail and external vCenter interoperability matrix. |
| Deployment | It will deploy automatically into the VxRail cluster during VxRail initialization. | You need to deploy it manually into your VMware environment. |
| Lifecycle management | VxRail’s one-click upgrade includes this feature. | It does not include this feature. |
| vCenter migration | It cannot be migrated from the VxRail cluster. | It can be migrated to either a VxRail cluster or a non-VxRail cluster. |
| Cluster migration | It can be migrated to a customer-supplied vCenter Server. | It cannot be migrated to an embedded vCenter Server. |
| Multihoming | It does not support multihoming. | It can support multihoming. |
| Single sign-on domain | It only supports a single vsphere.local single sign-on (SSO) domain. | It has no domain restrictions. |

Table 7.2 – vCenter feature comparison

When designing VxRail Stretched Cluster, you can choose which configuration is suitable for your environment. The next section will discuss a sample configuration of VxRail Stretched Cluster.

A scenario using VxRail Stretched Cluster

This section will discuss a sample configuration of VxRail Stretched Cluster, shown in Figure 7.12. Now, we will discuss VxRail Stretched Cluster with eight nodes, including the requirements, the design of the network configuration, and the VM storage policy configuration. When you design the deployment of VxRail Stretched Cluster, you need to consider all hardware and software requirements; you can refer to the Overview of VxRail Stretched Cluster section in this chapter for more details:

Figure 7.12 – VxRail Stretched Cluster with eight nodes

This configuration is a standard VxRail Stretched Cluster with eight nodes. Table 7.3 shows the hardware configuration of each VxRail node. Each P670F node has one 800 GB SSD (cache tier), four 7.68 TB SSDs (capacity tier), and one quad-port 10 GbE adapter installed:

| Component | Specification |
| --- | --- |
| VxRail model | VxRail P670F |
| CPU model | 2 x Intel Xeon Gold 5317 3G, 12C/24T |
| Memory | 512 GB (8 x 64 GB) |
| Network adapter | Intel Ethernet X710 Quad Port 10GbE SFP+, OCP NIC 3 |
| Cache drive | 1 x 800 GB SSD SAS drive |
| Capacity drive | 4 x 7.68 TB SSD SAS drive |

Table 7.3 – A sample configuration of VxRail P670F

Each VxRail P670F model includes the following software; Table 7.4 shows the software edition of each VxRail and VMware component:

| Software | Version |
| --- | --- |
| VxRail software release | 7.0.370 build 27485531 |
| VMware ESXi edition | 7.0 Update 3d |
| VMware vCenter Server | 7.0 Update 3d |
| VMware vSAN | 7.0 Update 3d |
| VMware vSAN witness | 7.0 Update 3d |

Table 7.4 – VxRail software releases

The next section will discuss the network design of the scenario in Figure 7.12.

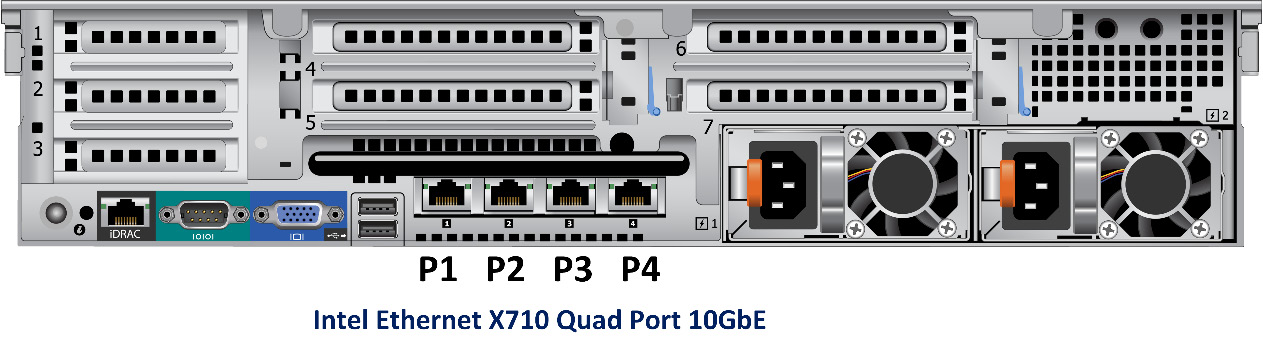

Network settings

Each VxRail P670F node has four 10 GbE network ports, as shown in Figure 7.13. The P1 and P2 ports are used for the ESXi and VxRail management networks and witness traffic. The P3 and P4 ports are used for the vSAN and vMotion networks:

Figure 7.13 – Rear view of VxRail P670F

For the network design of VxRail Stretched Cluster, you can refer to the following table. Table 7.5 shows a network layout used for VxRail Stretched Cluster with WTS:

| Network Traffic | NIOC Shares | VMkernel ports | VLAN | P1 | P2 | P3 | P4 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Management network | 40% | vmk2 | 101 | Standby | Active | Unused | Unused |
| vCenter Server management network | N/A | N/A | 101 | Standby | Active | Unused | Unused |
| VxRail management network | N/A | vmk1 | 101 | Standby | Active | Unused | Unused |
| vSAN network | 100% | vmk3 | 200 | Unused | Unused | Active | Standby |
| vMotion network | 50% | vmk4 | 100 | Unused | Unused | Standby | Active |
| Witness traffic | N/A | vmk5 | 102 | Active | Standby | Unused | Unused |
| Virtual machines | 60% | N/A | N/A | Active | Standby | Unused | Unused |

Table 7.5 – Network layout of VxRail Stretched Cluster with WTS
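A layout such as Table 7.5 is easy to mistype when it is transferred into a design document or a deployment worksheet. The following sketch transcribes the uplink roles from the table and checks that every traffic type has exactly one active and one standby uplink. The helper names `teaming_errors` and `active_traffic` are hypothetical and introduced only for this example:

```python
PORTS = ("P1", "P2", "P3", "P4")

# Uplink roles per traffic type, transcribed from Table 7.5 (order: P1..P4).
LAYOUT = {
    "Management network": ("Standby", "Active", "Unused", "Unused"),
    "vCenter Server management network": ("Standby", "Active", "Unused", "Unused"),
    "VxRail management network": ("Standby", "Active", "Unused", "Unused"),
    "vSAN network": ("Unused", "Unused", "Active", "Standby"),
    "vMotion network": ("Unused", "Unused", "Standby", "Active"),
    "Witness traffic": ("Active", "Standby", "Unused", "Unused"),
    "Virtual machines": ("Active", "Standby", "Unused", "Unused"),
}


def teaming_errors(layout):
    """Return traffic types that lack exactly one Active and one Standby uplink."""
    return [traffic for traffic, roles in layout.items()
            if roles.count("Active") != 1 or roles.count("Standby") != 1]


def active_traffic(layout, port):
    """Return the traffic types whose active uplink is the given port."""
    index = PORTS.index(port)
    return [traffic for traffic, roles in layout.items() if roles[index] == "Active"]


print(teaming_errors(LAYOUT))        # [] means every traffic type has a failover uplink
print(active_traffic(LAYOUT, "P3"))  # ['vSAN network']
```

Listing the active traffic per port also shows how the layout keeps vSAN and vMotion active on different uplinks (P3 and P4) during normal operation.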

Table 7.5 helps you understand VxRail Stretched Cluster’s network layout. The next section will discuss the storage design of the scenario in Figure 7.12.

Storage settings

Each VxRail P670F node has one 800 GB SSD and four 7.68 TB SSDs installed; you can create a vSAN disk group with the 800 GB SSD for the cache tier and the four 7.68 TB SSDs for the capacity tier. For details on expanding the disk groups, you can refer to the Design of disk groups on VxRail P-Series section in Chapter 5. Since P670F is an All-Flash model, it can support RAID-1, RAID-5, and RAID-6 site protection. In the VM storage policy, make sure the Site Disaster Tolerance parameter is configured to Site mirroring - stretched cluster and the Failures to tolerate parameter is configured to 1 failure - RAID-1 (Mirroring). For the other types of site protection, you can refer to the Overview of VxRail Stretched Cluster section in this chapter. The next section will discuss the required software licenses for this scenario.
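As a rough sizing check for this scenario, the raw capacity and the amount of VM data that fits under the chosen policy can be estimated as follows. This is a back-of-the-envelope sketch: it counts only the capacity-tier drives and ignores vSAN on-disk overhead, slack space, and any space-efficiency features, so real usable capacity will be lower:

```python
NODES_PER_SITE = 4
SITES = 2
CAPACITY_DRIVES_PER_NODE = 4
CAPACITY_DRIVE_TB = 7.68
# Site mirroring (2x) combined with 1 failure - RAID-1 (2x per site) gives 4x.
POLICY_MULTIPLIER = 4

raw_per_node_tb = CAPACITY_DRIVES_PER_NODE * CAPACITY_DRIVE_TB
raw_cluster_tb = raw_per_node_tb * NODES_PER_SITE * SITES
max_vm_data_tb = raw_cluster_tb / POLICY_MULTIPLIER

print(f"Raw capacity per node: {raw_per_node_tb:.2f} TB")    # 30.72 TB
print(f"Raw capacity of cluster: {raw_cluster_tb:.2f} TB")   # 245.76 TB
print(f"VM data at 4x overhead: {max_vm_data_tb:.2f} TB")    # 61.44 TB
```

Changing `POLICY_MULTIPLIER` to one of the other multipliers from Table 7.1 shows how the choice of storage policy affects how much VM data the same hardware can hold.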

Software licenses

When you deploy VxRail Stretched Cluster, it requires the following VMware licenses. Table 7.6 shows a summary of all the bundled software licenses on VxRail P670F:

| Software Name | License Edition | Quantity | Remark |
| --- | --- | --- | --- |
| VMware vSphere | VMware vSphere Enterprise Plus per CPU | 8 | N/A |
| VMware vSAN | VMware vSAN Enterprise per CPU | 8 | You can choose the vSAN Enterprise or Enterprise Plus edition. |
| VMware vCenter Server | VMware vCenter Server Standard instance | 1 | Suggest deploying the customer-supplied vCenter Server. It requires an optional vCenter Server Standard license. |
| VMware vRealize Log Insight | Bundled with VxRail Appliance | 1 | N/A |
| VMware Replication | Bundled with VxRail Appliance | N/A | N/A |
| Dell EMC RecoverPoint for Virtual Machines | Bundled with VxRail Appliance | N/A | Five VM licenses per node |

Table 7.6 – Summary of all bundled software licenses on VxRail P670F

With the preceding information, you understand the requirements of VxRail Stretched Cluster in Figure 7.12.

Failure scenarios of VxRail Stretched Cluster

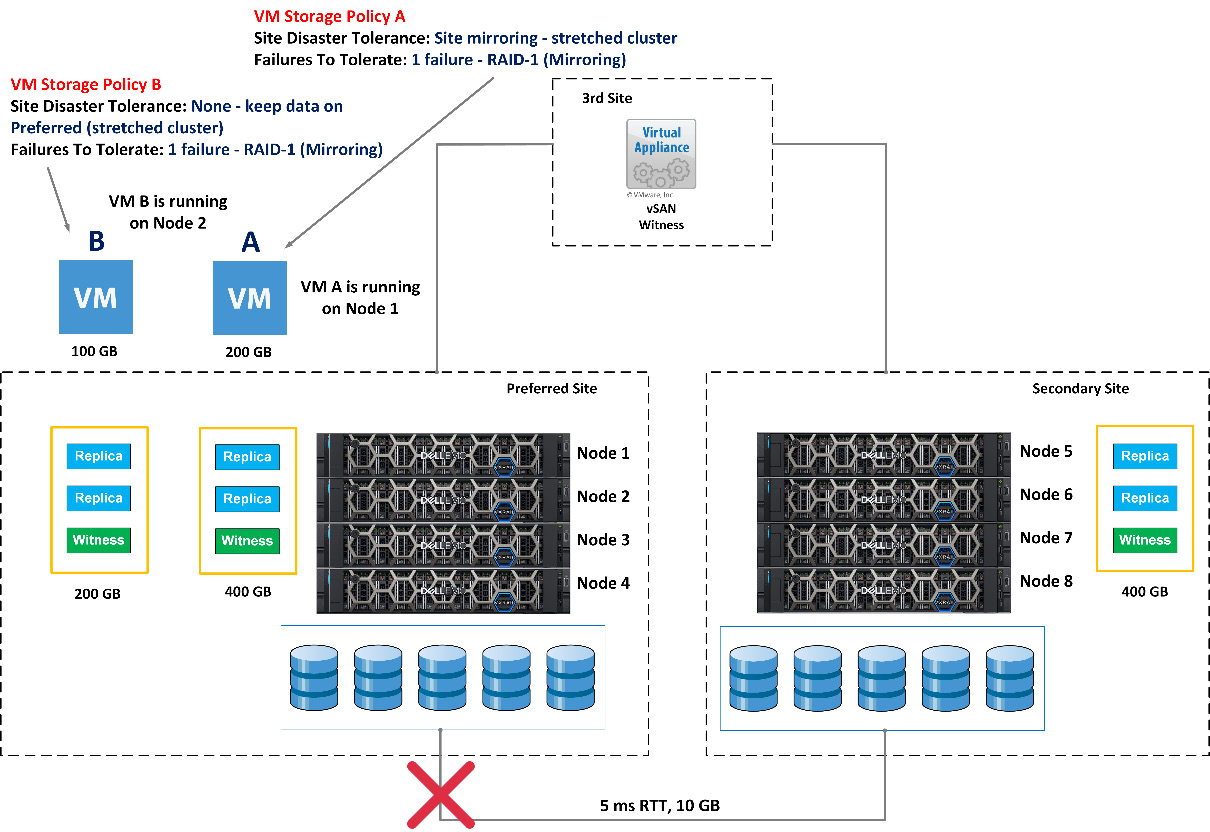

This section will discuss some failure scenarios of VxRail Stretched Cluster. The VMs running on VxRail Stretched Cluster behave differently depending on which hardware failure occurs in the cluster (for example, a failed VxRail node, vSAN witness, drive, or network uplink). In Figure 7.14, two VMs (VM A and VM B) are running on this VxRail Stretched Cluster instance; both VMs are located on the preferred site, and a different VM storage policy is assigned to each VM. VM A is configured with VM Storage Policy A, and VM B is configured with VM Storage Policy B:

Figure 7.14 – VxRail Stretched Cluster with eight nodes

Now, we will discuss each failure scenario.

Failure scenario one

In Figure 7.15, what status will the VMs trigger if the vSAN communication is disconnected between the preferred and secondary sites?

Figure 7.15 – Failure scenario one of VxRail Stretched Cluster

VM A and VM B keep running in this scenario, but the vSAN object status of VM A will be degraded because VM Storage Policy A is configured to Site mirroring - stretched cluster. The vSAN object status of VM B is healthy because VM Storage Policy B is configured to None - keep data on Preferred (stretched cluster). When the data nodes (preferred and secondary sites) cannot communicate and the vSAN witness is available, the VMs allocated on the secondary site will shut down and restart in the preferred site.

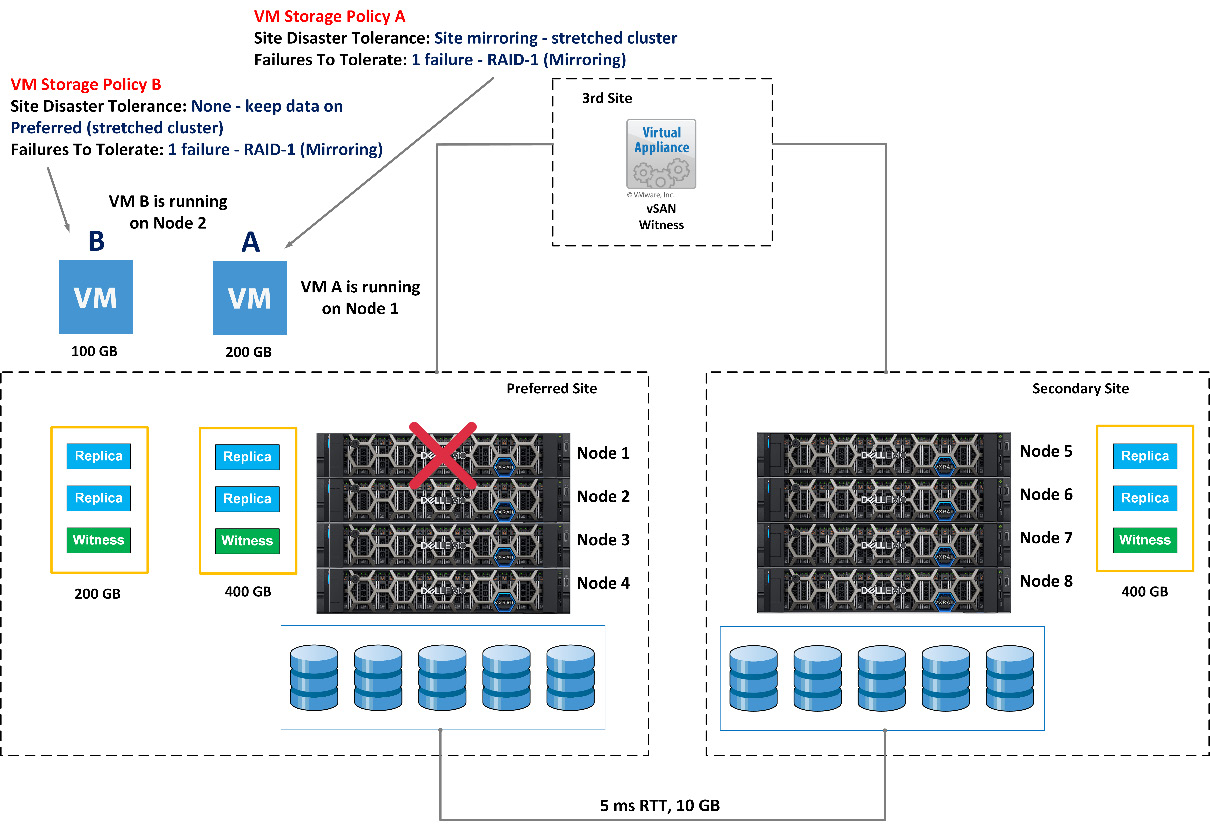

Failure scenario two

In Figure 7.16, what status will the VMs trigger if Node 1 fails in VxRail Stretched Cluster?

Figure 7.16 – Failure scenario two of VxRail Stretched Cluster

VM B keeps running, but the vSAN object status of VM B will be degraded, and one of its replicas will be rebuilt on Node 4 because VM Storage Policy B is configured to 1 failure - RAID-1 (Mirroring). VM A will shut down and vSphere High Availability will restart it on VxRail Node 4, and one of its replicas will be rebuilt on Node 4. This is a normal hardware failure case that triggers vSphere High Availability.

Failure scenario three

In Figure 7.17, what status will the VMs trigger if the communication of the vSAN witness and VxRail’s data nodes is disconnected?

Figure 7.17 – Failure scenario three of VxRail Stretched Cluster

All VMs (VM A and VM B) keep running in VxRail Stretched Cluster because the data nodes in the preferred and secondary sites can still communicate with each other.

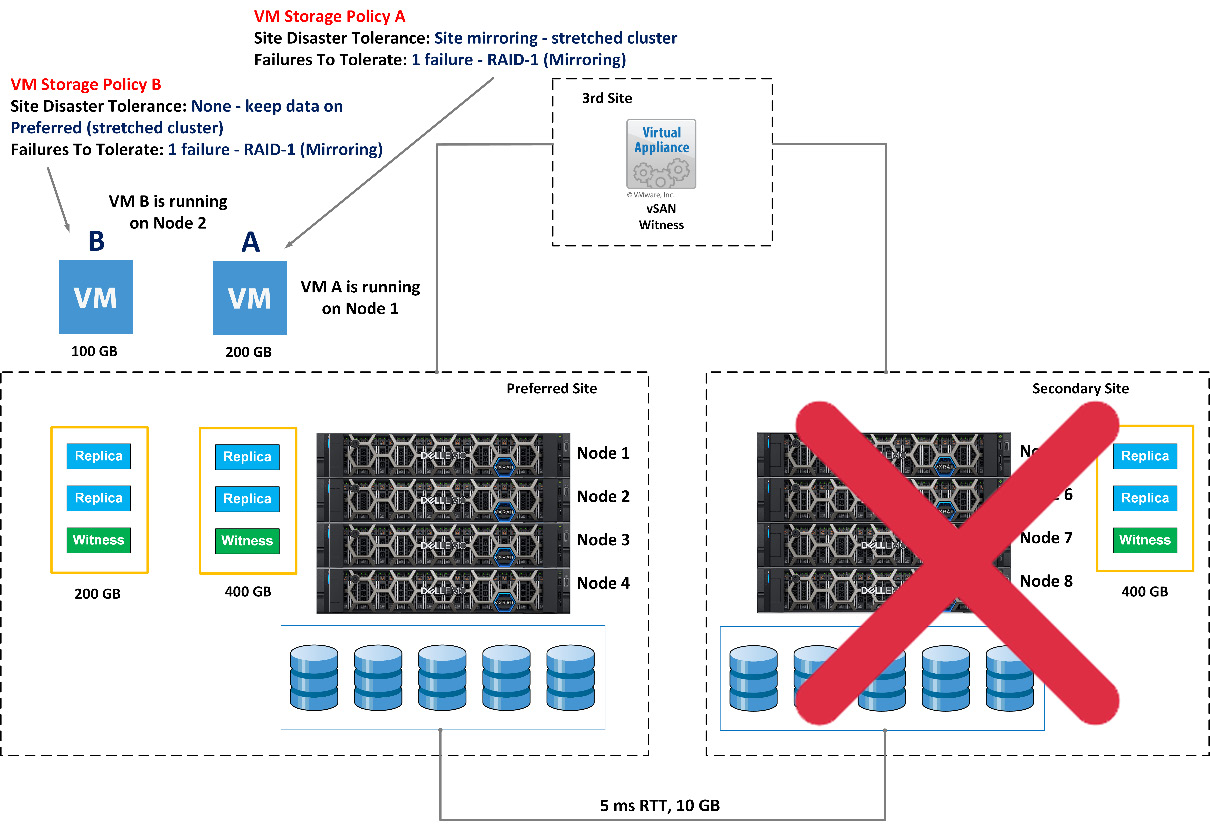

Failure scenario four

In Figure 7.18, what status will the VMs trigger if the preferred site fails in VxRail Stretched Cluster?

Figure 7.18 – Failure scenario four of VxRail Stretched Cluster

VM A will shut down and vSphere High Availability will restart it on the VxRail nodes in the secondary site. The vSAN object status of VM A will be degraded because VM Storage Policy A is configured to Site mirroring - stretched cluster. This is a normal hardware failure case that triggers vSphere High Availability. VM B will shut down and cannot be restarted by vSphere High Availability because VM Storage Policy B is configured to None - keep data on Preferred (stretched cluster), so its data exists only in the failed site.

Failure scenario five

In Figure 7.19, what status will the VMs trigger if the secondary site fails in VxRail Stretched Cluster?

Figure 7.19 – Failure scenario five of VxRail Stretched Cluster

VM A and VM B keep running in this scenario, but the vSAN object status of VM A will be degraded because VM Storage Policy A is configured to Site mirroring - stretched cluster. The vSAN object status of VM B is healthy because VM Storage Policy B is configured to None - keep data on Preferred (stretched cluster).

With the preceding scenarios, you now understand the expected results of VxRail Stretched Cluster.
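The five scenarios can be summarized as a lookup table that is convenient for runbooks or test plans. The following sketch records only what the scenario descriptions in this section state for VM A and VM B; where the text does not state the vSAN object status, the value is `None`. The names `Failure`, `Outcome`, and `expected_outcome` are hypothetical and exist only for this example:

```python
from enum import Enum
from typing import NamedTuple, Optional


class Failure(Enum):
    INTER_SITE_LINK = "scenario one"
    NODE_1 = "scenario two"
    WITNESS_LINK = "scenario three"
    PREFERRED_SITE = "scenario four"
    SECONDARY_SITE = "scenario five"


class Outcome(NamedTuple):
    vm_state: str
    object_state: Optional[str]  # None where the text does not state it


# VM A uses site mirroring; VM B keeps its data on the preferred site only.
EXPECTED = {
    (Failure.INTER_SITE_LINK, "A"): Outcome("running", "degraded"),
    (Failure.INTER_SITE_LINK, "B"): Outcome("running", "healthy"),
    (Failure.NODE_1, "A"): Outcome("restarted by vSphere HA", None),
    (Failure.NODE_1, "B"): Outcome("running", "degraded"),
    (Failure.WITNESS_LINK, "A"): Outcome("running", None),
    (Failure.WITNESS_LINK, "B"): Outcome("running", None),
    (Failure.PREFERRED_SITE, "A"): Outcome("restarted by vSphere HA", "degraded"),
    (Failure.PREFERRED_SITE, "B"): Outcome("powered off", None),
    (Failure.SECONDARY_SITE, "A"): Outcome("running", "degraded"),
    (Failure.SECONDARY_SITE, "B"): Outcome("running", "healthy"),
}


def expected_outcome(failure: Failure, vm: str) -> Outcome:
    """Look up the documented outcome for VM "A" or VM "B"."""
    return EXPECTED[(failure, vm)]


print(expected_outcome(Failure.PREFERRED_SITE, "B").vm_state)  # powered off
```

The only case in which a VM stays down is VM B during a preferred-site failure, because its storage policy keeps no copy of the data in the secondary site.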

Summary

In this chapter, you saw an overview and learned about the design of VxRail Stretched Cluster, including the network, hardware, and software requirements, and some failure scenarios. When you plan to design an active-active data center or site failure solution, VxRail Stretched Cluster is a good option.

In the next chapter, you will learn about the design of VxRail with VMware SRM and the best practices for implementing this solution.

Questions

The following is a short list of review questions to help reinforce your learning and help you identify areas that require some improvement.

1. Which network bandwidth can be supported on VxRail Stretched Cluster?
   - 1 GB network bandwidth
   - 1 GB and 10 GB network bandwidth
   - 10 GB network bandwidth only
   - 10 GB and 25 GB network bandwidth
   - 25 GB network bandwidth only
   - All of these

2. What is the minimum number of nodes configuration supported with VxRail Stretched Cluster?
   - 1+0+1
   - 1+1+1
   - 2+2+1
   - 3+3+1
   - 4+4+1
   - 5+5+1

3. What is the maximum number of nodes configuration supported with VxRail Stretched Cluster?
   - 5+5+1
   - 8+7+1
   - 10+10+1
   - 12+13+1
   - 15+15+1
   - 20+20+1

4. Which VxRail software releases can support VxRail vCenter Server on VxRail Stretched Cluster?
   - VxRail 4.7.200
   - VxRail 4.7.300
   - VxRail 4.7.410
   - VxRail 7.0.240
   - VxRail 7.0.300
   - All of these

5. What is the maximum supported RTT between VxRail Stretched Cluster and the vSAN witness?
   - 100 milliseconds
   - 200 milliseconds
   - 300 milliseconds
   - 400 milliseconds
   - 500 milliseconds
   - All of these

6. How many FDs need to be created in VxRail Stretched Cluster?
   - One FD
   - Two FDs
   - Three FDs
   - Four FDs
   - Five FDs
   - Six FDs

7. Which network setting is used to separate the vSAN network traffic and witness network traffic?
   - NIOC
   - WTS
   - vSphere Standard Switch
   - vSphere Distributed Switch
   - DRS
   - None of these

8. Which setting is supported with site mirroring in the VM storage policy?
   - None - keep data on Preferred
   - None - keep data on Secondary
   - Site mirroring - stretched cluster
   - None - standard cluster
   - None - stretched cluster
   - None of these

9. Which setting can support three vSAN nodes’ failures that can be selected in the VM storage policy? Assume the three nodes are in a single site.
   - RAID-1 (Mirroring)
   - RAID-0
   - RAID-5 (Erasure Coding)
   - RAID-6 (Erasure Coding)
   - RAID-10 (Mirroring)
   - RAID-5/6 (Erasure Coding)

10. What status will the VMs trigger if the vSAN communication is disconnected between the preferred and secondary sites?
    - The VMs keep running.
    - All of the VMs shut down.
    - The VMs on the preferred FD will shut down and power on the secondary FD.
    - The VMs on the secondary FD will shut down and power on the preferred FD.
    - None of these.

11. What status will the VMs trigger if the vSAN communication is disconnected between data nodes (preferred and secondary sites) and the witness in VxRail Stretched Cluster?
    - The VMs keep running.
    - All of the VMs shut down.
    - The VMs on the preferred FD will shut down and power on the secondary FD.
    - The VMs on the secondary FD will shut down and power on the preferred FD.
    - None of these.

12. Which vSAN license edition/s supports VxRail Stretched Cluster?
    - vSAN Standard edition
    - vSAN Advanced edition
    - vSAN Enterprise edition only
    - vSAN Enterprise and Enterprise Plus editions
    - vSAN Enterprise Plus only
    - All of these