Hour 20

Feedback for Continuous Improvement

What You’ll Learn in This Hour:

Summary and Case Study


In this hour, we focus on three simple techniques and two more strategic techniques useful for collecting and learning from the feedback necessary for continuous product and solution improvement. From Looking Back and Testing Feedback, to Gathering Silent Design Feedback, to running Context Mapping and instrumenting our products and solutions for continuous feedback, we have many opportunities throughout the Design Thinking Cycle for Progress to learn and iterate. A “What Not to Do” reflecting the impact of waiting on late feedback concludes Hour 20.

Simple Feedback Techniques

In life and at work, no matter the source or frequency, feedback helps us learn. Feedback helps us improve who we are, how our teams deliver, what we deliver, and how we can improve what we deliver. From general big-picture feedback to the feedback we instrument across our Design Thinking Model for Tech, feedback keeps us on the road to ongoing reflection, continuous improvement, and fewer surprises.

Note

The Principle of No Surprises

Consider what it means to delight users and stakeholders. When it comes to designs, user interfaces, artifacts, standard documents, status reports, feedback mechanisms, and other outcomes, we should not have to struggle with how to use, read, or otherwise consume them. Design should inspire, delight, be intuitive, and drive clarity rather than surprise.

Design Thinking in Action: Looking Back

Consider the analogy of driving a car, where we must check the rearview mirror now and then to see what has happened and what might be catching up with us. It’s important to look back occasionally, though of course we cannot drive for long and make progress while looking back.

The most general and big-picture technique for acquiring feedback is to simply look back at what’s taken place in the recent past. What have we done well? What should we stop doing? What might we do differently and better? These questions are fundamental to Looking Back, an umbrella technique that comprises numerous other techniques and exercises. Common techniques under the umbrella of Looking Back include

The Retrospective. Run a classic Retrospective with a team at the conclusion of a sprint or release using the Good, the Bad, and the Ugly approach. Discuss what the team accomplished and what still remains to be accomplished. Think about why things aren’t moving fast enough and in other cases why we have achieved a reasonable velocity. What are the difference makers, and where can we repeat the good, improve the bad, and totally eliminate the ugly?

Perform a twist on the classic Retrospective by using the Retrospective Board, a 2×2 matrix organized into four quadrants for discussion and reflection. The four quadrants include

Things we will continue doing

Things we will do differently next time

Things we want to try out

Things that are no longer relevant

Lessons Learned. Run a Lessons Learned session organized around the broad-based successes, opportunities for improvement, failures that resulted in learnings, and the misses or missteps of a project, initiative, or significant period of time (such as a six-month stretch of prototyping, testing, and iterating). To be of later use to others, ensure these learnings are captured regularly in a Lessons Learned register or knowledgebase throughout (rather than exclusively at the end of) a project or initiative.

Postmortem. Run a deeper Postmortem on the misses or outright failures regardless of where they exist within the project or initiative lifecycle. View these through the lens of both a Growth Mindset and what we would do differently if we had a do-over. Remember that Postmortems do not need to be exclusively dedicated to dead things! Consider running a Postmortem on our greatest victories and accomplishments too. Identify and celebrate the things we did right—and therefore absolutely need to keep doing—which led to these victories.

Consider how each of these feedback mechanisms tends to occupy a different part of the timeline (see Figure 20.1). Our place in the Design Thinking process is less important in these cases than our place in a project’s or initiative’s lifecycle.

FIGURE 20.1

Looking Back naturally occurs at different phases or stages within the project or initiative lifecycle.

To ensure we actually do the work of Looking Back, use a Forcing Function (as covered in Hour 16) and calendar these Retrospectives, Lessons Learned sessions, and Postmortems. Reserve 30 minutes on our calendars each month. Include others who can help us see what went well and what did not. And document these findings and insights. Reviewing the lessons we learn along the way is critical to understanding how and where we ran into problems, and to avoiding those problems in the future. Look back to learn, to reflect, and to consider.

Design Thinking in Action: Testing Feedback

As we covered extensively in Hour 19, testing is intended to provide us with feedback. Each of the various forms of testing, spanning traditional as well as our Design Thinking-inspired testing types, gives us a particular form of feedback. For starters, consider the feedback gained through the five traditional testing types:

Unit Testing, which provides feedback on code quality and how well we understand our users’ requirements, their use cases, or their user scenarios

Process Testing, which provides feedback on how well our developers and testers are adhering to standards, talking to one another (in cases where different developers code different components of a process), and so on

End-to-End Testing, which provides feedback on how well we understand key functional areas and their mega-processes

System Integration Testing, which provides feedback on how well we have considered external endpoints, integrations to other systems, and orchestrated regression testing

User Acceptance Testing, which provides us feedback from our users along the lines of fit-for-use, directional status, and acceptance

Additionally, the three types of traditional performance testing provide feedback in the following ways:

Performance Testing, which provides good data around the performance and user experience of a transaction or process

Scalability Testing, which provides the technology and architecture teams with feedback on how well our system scales as demand grows

Load and Stress Testing, which provides feedback around where our system will break under load and which components are likely to break first, second, and third

Finally, the five types of Design Thinking test approaches help us fill in gaps in the feedback we receive through traditional testing:

A/B Testing provides user feedback on two alternatives.

Experience Testing provides user experience feedback.

Structured Usability Testing provides user feedback across a breadth of functional areas, user experience, and more.

Solution Interviewing provides a final bit of valuable user feedback prior to promoting a product or solution into production.

Velocity-inspired Automated Regression Testing provides the functional teams, developers, and testers with insights related to their work and in particular the quality and understanding of proposed bug fixes.
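A/B Testing feedback usually comes down to a comparison between two alternatives. The sketch below shows one common way to read that feedback: a two-proportion z-test on conversion counts. Several details are assumptions for illustration rather than a method prescribed in this hour:

- the function name `ab_feedback`;
- the choice of "conversions" as the metric;
- the choice of this particular statistical test.

```python
import math


def ab_feedback(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare how often users 'convert' on alternative A versus alternative B.

    Uses a two-proportion z-test (one common choice, assumed here).
    Returns (rate of B minus rate of A, two-sided p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that A and B actually perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return p_b - p_a, 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    # Two-sided p-value for a standard normal z: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, p_value


# Hypothetical feedback: 100 of 1,000 users completed the task with A, 150 of 1,000 with B.
diff, p = ab_feedback(100, 1000, 150, 1000)
print(f"B - A = {diff:.3f}, p = {p:.4f}")
```

A small p-value suggests the gap between the two alternatives is unlikely to be noise. With small samples, the result is better treated as directional feedback than as a verdict.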

All of the aforementioned feedback capabilities are useful prior to promoting a product or solution to production. How might we benefit from our users’ feedback after our product or solution is already in productive use, though? Let us turn our attention to another technique for gathering feedback called Silent Design.

Design Thinking in Action: Gathering Silent Design Feedback

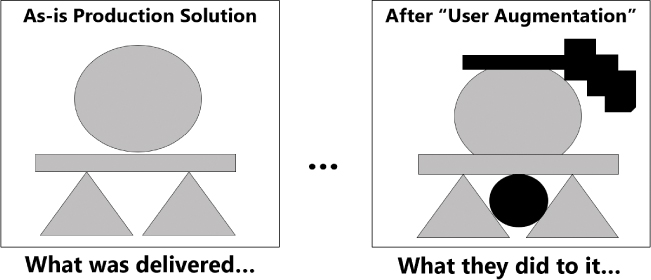

Silent Design is an important source of feedback from the users of products and services that are already in production (and therefore already being used). Based on research by Peter Gorb and Angela Dumas in the 1980s, Silent Design reflects the changes that end users make to our products and services after we deploy them to production (see Figure 20.2). These user augmentations represent valuable sources of feedback.

FIGURE 20.2

Consider how the changes that our user communities make to our production systems represent yet another opportunity to collect and use their feedback for the continuous improvement and sustainment of our products and solutions.

Learning from Silent Design can make the products and solutions we have already deployed even more usable. Therefore, we need to treat user-derived changes to our products and solutions like user feedback, because such changes are indeed feedback. Further, we need to pursue these kinds of insights regularly and repeatably. After all, the easiest additions we can make to our backlog are changes proposed by a user community that already uses our products and services and has found ways to improve them.
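One way to pursue Silent Design feedback regularly and repeatably is to tally the user-made changes we discover in production. We can then promote the most widely adopted changes to backlog candidates. This is a minimal sketch under several assumptions, all hypothetical:

- each change has been recorded as a `(user_id, change)` pair;
- the function name `silent_design_candidates` is invented for illustration;
- the `min_users` threshold is invented for illustration.

```python
from collections import defaultdict


def silent_design_candidates(changes, min_users=3):
    """Surface user-made changes adopted by at least `min_users` distinct users.

    `changes` is an iterable of (user_id, change) pairs, where `change` is a short
    description of a customization found in production (a hypothetical format).
    Returns (change, user_count) pairs, most widely adopted first.
    """
    adopters = defaultdict(set)
    for user_id, change in changes:
        adopters[change].add(user_id)  # a set counts each user once per change
    candidates = [
        (change, len(users)) for change, users in adopters.items() if len(users) >= min_users
    ]
    # Most widely adopted first; ties broken alphabetically for a stable backlog order.
    return sorted(candidates, key=lambda item: (-item[1], item[0]))


# Hypothetical observations gathered from a production reporting tool.
observed = [
    ("u1", "region column on report"),
    ("u2", "region column on report"),
    ("u3", "region column on report"),
    ("u1", "export macro"),
    ("u2", "export macro"),
    ("u2", "export macro"),  # the same user again does not add weight
    ("u4", "export macro"),
    ("u5", "export macro"),
    ("u5", "renamed submit button"),
]
print(silent_design_candidates(observed))
# [('export macro', 4), ('region column on report', 3)]
```

Counting distinct users rather than raw occurrences keeps one enthusiastic user from outweighing a change that many users made independently.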

Strategic Feedback and Reflection Techniques

While the previous techniques are simple, they are effective. If we have a bit more time and budget, though, or simply wish to approach feedback and reflection differently and more deeply than we might be accustomed to, then consider Context Building and Mapping and Instrumenting for Continuous Feedback. Each of these techniques is covered next.

Design Thinking in Action: Context Building and Mapping

Another way to gather feedback is to flip this reflection method on its head. Instead of asking a group of end users for their thoughts on how they work and what they do, physically or virtually travel to where they work today. Then passively watch and learn how they use their current product or service (or alternatively how they use the prototype or MVP we may be targeting as a replacement for their current product or solution). Pay attention to the surroundings and context in which they get their work done, building and mapping context along the way.

Organize this context into various Affinity Clusters or groups. Some like to use the STEEP acronym used by the Futures Wheel and Possible Futures Thinking (Hour 13) or the AEIOU for Questioning warm-up technique and taxonomy (covered in Hour 10). Still others might prefer to create a custom context taxonomy composed of the Design Thinking phases or a set of dimensions such as environment, challenges, economy, politics and systems, uncertainties, needs, and so on. Consider organizing these into a circle with five sections or a daisy with eight petals (à la the Golden Ratio Thinking explored in Hour 12).

This Design Thinking feedback technique is a bit like rolling Journey Mapping, “Day in the Life of” Analysis, and Empathy Immersion into one. Context Building and Mapping reflects research, observation, understanding, and empathy. Of course, in this case our learnings and empathy come through passively watching others rather than actively and personally doing. Still, it’s a powerful tool for retrospectively learning and truly understanding why people want what they say they want.

Design Thinking in Action: Instrumenting for Continuous Feedback

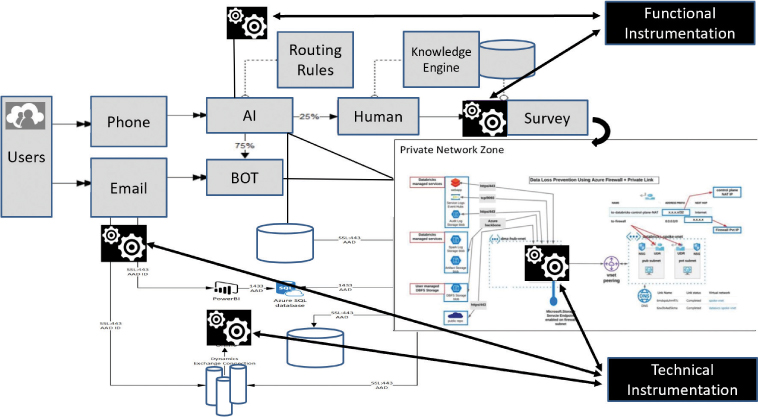

Borrowed from the worlds of engineering and electronics, instrumenting our systems and solutions for continuous feedback is also known as creating a closed-loop or feedback control system. The premise is simple: build feedback mechanisms into our technology and/or our system’s functionality so we and our systems learn over time and make smarter user experience-based or satisfaction-based decisions.

Technology-instrumented feedback loop: This is the easier of the two options. The idea is to establish smart thresholds within our technology stack through systems management tooling and automated cloud DevOps. In this way, if our technology stack experiences high demand, for example, it will automatically provision additional compute, memory, or storage as needed. This kind of feedback is intended to preserve user experience from a performance or throughput perspective despite the peaks and valleys of demand.

Functionality-instrumented feedback loop: This is the more difficult of the two options. The idea is to build artificial intelligence (AI) or machine learning (ML) into the functionality itself. In this way, if we see that a particular type of user or persona tends to follow a prescribed user journey, we can onboard users who begin to match that persona profile into that user journey earlier, presumably to drive greater user or customer satisfaction. And if another type of user tends to need or purchase Y after obtaining X, the system can be instrumented to look for such trends and more quickly offer Y to our X customers, again to improve the user’s satisfaction and experience.
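The technology-instrumented loop comes down to three steps, repeated: measure demand, compare it to thresholds, and adjust capacity. The sketch below shows one pass of that closed loop, under these assumptions:

- CPU utilization is the measured signal;
- the threshold values and instance limits are hypothetical;
- the names `ScalingPolicy` and `desired_instances` are invented for illustration.

Managed cloud autoscaling services provide this kind of loop as a built-in feature. The sketch only shows the underlying logic.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical thresholds; real values would come from our systems management tooling."""

    scale_out_above: float = 0.75  # average CPU utilization that triggers adding capacity
    scale_in_below: float = 0.30   # utilization low enough to release capacity
    min_instances: int = 2         # floor that protects baseline user experience
    max_instances: int = 10        # ceiling that protects the budget


def desired_instances(current: int, avg_cpu: float, policy: ScalingPolicy) -> int:
    """One pass of the feedback loop: compare measured demand to thresholds and adjust."""
    if avg_cpu > policy.scale_out_above:
        target = current + 1
    elif avg_cpu < policy.scale_in_below:
        target = current - 1
    else:
        target = current  # inside the comfort band: leave capacity alone
    # Clamp so the loop never scales below the floor or above the ceiling.
    return max(policy.min_instances, min(policy.max_instances, target))


policy = ScalingPolicy()
print(desired_instances(4, 0.90, policy))  # 5: a demand peak adds an instance
print(desired_instances(4, 0.10, policy))  # 3: a demand valley releases one
```

The gap between the two thresholds is deliberate. It keeps the loop from flapping between scaling out and scaling in when demand hovers near a single cutoff.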

Combinations of instrumented technology and functionality can help automate repetitive end user tasks so those users benefit from greater process throughput or get to the right “place” within our systems faster than otherwise possible. For example, we might automatically route emails that users send to a customer-service function so that each class of users or personas receives the best functionality or greatest responsiveness.
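To make email routing a feedback loop rather than a fixed rule, the router can learn from measured responsiveness. This is a minimal sketch under several assumptions, all hypothetical:

- each persona's next email goes to the queue with the lowest observed average response time;
- the class name `FeedbackRouter` and the queue names are invented for illustration;
- the routing rule itself is an assumption.

A production system might use a trained ML model instead of averages.

```python
from collections import defaultdict
from statistics import mean


class FeedbackRouter:
    """Routes each persona's emails to the queue that has responded fastest so far."""

    def __init__(self, queues, default_queue):
        self.queues = list(queues)
        self.default_queue = default_queue
        # (persona, queue) -> observed response times, in hours
        self.history = defaultdict(list)

    def record(self, persona, queue, response_hours):
        """Feed back how long a queue took to respond to an email from this persona."""
        self.history[(persona, queue)].append(response_hours)

    def route(self, persona):
        """Pick the queue with the lowest average response time for this persona."""
        averages = {}
        for queue in self.queues:
            times = self.history.get((persona, queue))
            if times:
                averages[queue] = mean(times)
        if not averages:
            return self.default_queue  # no feedback yet for this persona
        return min(averages, key=averages.get)


router = FeedbackRouter(["business-desk", "general-support"], default_queue="general-support")
router.record("small_business", "general-support", 8.0)
router.record("small_business", "business-desk", 2.0)
router.record("small_business", "business-desk", 4.0)
print(router.route("small_business"))  # business-desk (3.0 hours on average versus 8.0)
```

As written, the router only ever chooses among queues it has already measured. A real loop would also send a small share of traffic to untried queues so it keeps learning.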

Other forms of automation can be used to drive mini-surveys of users navigating our systems so we can learn more about why those users are making particular choices. We might also inject A/B Testing (as we covered in Hour 19) into our workflows, for example, to gather a sample of feedback while in production. Similarly, we can inject user experience–related AI into different aspects of a user’s customer journey, automate a subset of Structured Usability Testing within our systems, or configure our survey mechanism to auto-survey every 100th user trying a new feature.
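Two of these instruments are small enough to sketch: auto-surveying every Nth user who tries a new feature, and assigning production users to A/B variants. This is a minimal sketch under several assumptions, all hypothetical:

- only a user's first use of the feature counts toward the sample;
- variant assignment hashes the experiment name together with the user ID;
- the names `NewFeatureSurvey` and `ab_variant` are invented for illustration.

```python
import hashlib


class NewFeatureSurvey:
    """Flags every Nth distinct user who tries a new feature for a mini-survey."""

    def __init__(self, every_n: int = 100):
        self.every_n = every_n
        self.seen: set[str] = set()

    def record_use(self, user_id: str) -> bool:
        """Return True when this user's first use makes them the Nth, 2Nth, ... user."""
        if user_id in self.seen:
            return False  # repeat visits do not count toward the sample
        self.seen.add(user_id)
        return len(self.seen) % self.every_n == 0


def ab_variant(user_id: str, experiment: str) -> str:
    """Deterministically assign a user to variant A or B for an in-production A/B test.

    Hashing (experiment, user_id) keeps each user in the same variant on every
    visit, so one user's feedback is never split across both alternatives.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


survey = NewFeatureSurvey(every_n=100)
flags = [survey.record_use(f"user{i}") for i in range(1, 301)]
print(sum(flags))  # 3 users (the 100th, 200th, and 300th) are invited to the survey
```

In production, the set of users already seen would live in a shared data store rather than in one process's memory.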

Instrumenting our platforms and solutions from a technical as well as a functional perspective therefore provides us with a steady stream of feedback upon which to make subsequent decisions and derive other insights (see Figure 20.3).

FIGURE 20.3

Note how we might instrument our solution from a technical as well as functional perspective to provide us with a steady stream of feedback and other insights.

The more we understand our users and their needs, the better and faster we can serve them, all with an eye toward improving their user experience and ultimate satisfaction. In turn, greater satisfaction helps organizations achieve the kinds of improved revenue flows, profitability, and other financial and value-based outcomes tied to the organization’s Objectives and Key Results (OKRs), as we outlined in Hour 17.

What Not to Do: Wait for Late Feedback

While feedback in any form and at any time is useful, waiting for weeks on feedback prior to deploying a prototype or updating an MVP isn’t a good idea. But a financial services firm did just that. The firm waited to update its bare-bones MVP while feedback from an early round of Structured Usability Testing was being collected and analyzed. Then the firm waited for a sprint that promised new capability to be completed…and waited on sprint feedback from its product owner and consulting manager…and waited a bit longer for yet another sprint promising even more capabilities to be completed.

In the meantime, the firm’s bare-bones MVP just sat there, static, serving the needs of a few users but doing nothing to help an even broader community of additional users waiting in the wings for the chance to weigh in.

By keeping the broader community on ice, waiting on something to get their hands on, the firm missed three months’ worth of opportunities to learn early on that the prototype was still missing some pretty important additional features that would take another few months to develop. The firm missed the chance to introduce new users to its work-in-progress too, which would later hurt the MVP’s adoption.

The lesson is simple. Waiting for feedback is fine when that feedback loop runs in parallel with other work we’re completing. But when we are ready to deploy something, be it a prototype or MVP or updates to our MVP, just deploy. There will be time for more feedback soon enough. Don’t let perfection be the enemy of learning, of progress. And don’t let the fear of a bit of rework keep us from moving forward either. Rework is just another word for iterating, and that’s the key as we apply Design Thinking and its techniques and exercises to our design, development, and deployment work.

If we ever intend to maintain any kind of velocity or stick to a plan, we need to leave late feedback behind, to be used for the next round of feature updates. Treat it like an unexpected gift for the product or solution backlog and move ahead!

Summary

In this hour, we focused on three simple techniques for collecting feedback, including the umbrella technique called Looking Back, various forms of Testing Feedback, and Gathering Silent Design Feedback for continuous improvement. Then we turned our attention to two strategic techniques for regular feedback and reflection, including Context Building and Mapping and Instrumenting our products and solutions for Continuous Feedback. A “What Not to Do” reflecting the impact of waiting around for feedback concluded Hour 20.

Workshop

Case Study

Consider the following case study and questions. You can find the answers to the questions related to this case study in Appendix A, “Case Study Quiz Answers.”

Situation

BigBank’s Chief Digital Officer, Satish, has always been a huge fan of feedback. He built his career around meaningful feedback and deployed quite a few transformational business programs and large systems well before joining BigBank.

So when Satish asked for your assistance to improve how the bank was obtaining and using feedback in support of the dozen OneBank initiatives, and to improve the velocity of some of those initiatives, you knew there must be a pretty big problem. As it turns out, Satish needs new tools in his tool bag of feedback techniques. He has questions, too, and needs your fresh perspective.

Quiz

1. What is the name of the technique covered in this hour that reflects feedback gained from users and how they may have made changes to a product or solution after it has been in production?

2. Which broad-based technique reflects feedback and other learnings from the breadth of traditional and newer Design Thinking testing techniques?

3. What are the three specific feedback techniques or methods underneath the umbrella of the Looking Back technique?

4. Which technique reflects an ongoing review we should conduct with our design and development teams or other sprint teams on a regular cadence?

5. What might we tell Satish about the timing of feedback, including how to treat late feedback?