Hour 19

Testing for Validation

What You’ll Learn in This Hour:

Summary and Case Study

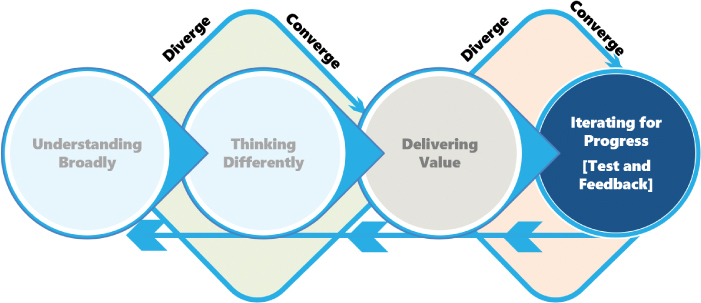

Hour 19 commences the final six hours in Part V, “Iterating for Progress,” where we focus on Phase 4 of our Design Thinking Model for Tech, including testing and the feedback loops between phases (see Figure 19.1). Here in Hour 19, we look at the Testing Mindset, set the stage with traditional types of testing, and then detail five Design Thinking testing techniques for learning more about and validating user needs. We conclude with a look at two feedback testing tools and a “What Not to Do” focused on negative ROI from automating too much regression testing.

FIGURE 19.1

Phase 4 of our Design Thinking Model for Tech.

The Testing Mindset

It has been said that the flip side of thinking is testing. We test to prove our theories and ideas. This kind of Testing Mindset is integral to problem solving as we validate what we think we know, discover gaps as we uncover what we don’t know, and generally learn more throughout the testing process. In the context of prototyping and building a solution, testing is central as well. We prototype to test our theories and learn in the process, and we execute POCs to validate our own thinking. And we run MVPs (Minimum Viable Products) and Pilots to validate with our users that a proposed solution is directionally on track (as we covered in Hour 17).

Testing is also an early form of doing in the sense that we have a first-hand chance to quickly learn so we can avoid the wasted time associated with incorrectly designing, developing, or building. Done early enough, testing helps us avoid dead-end ideas and theories too. Finally, testing gives us the early feedback we desperately need from others and through what we observe and learn.

And it is this feedback that helps us refine how we are empathizing, thinking, prototyping, retesting, and building our solutions. The Testing Mindset gives us new insights we can bake into how we work through problems and situations to realize value. Testing therefore gets us closer and closer to something that truly solves our problems. We might Build to Think, but we test to learn, do, and solve.

Traditional Types of Testing

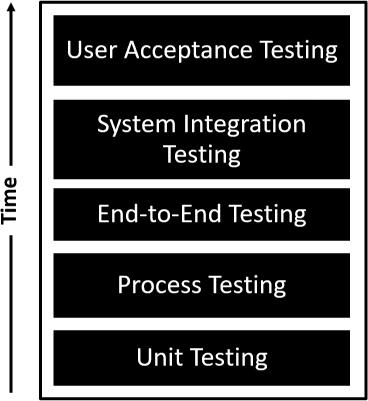

There are many types of testing as we see illustrated in Figure 19.2, and most of these types are focused on validating that what we have designed or prototyped or built does what we expect. While outside of the scope of this book, these types of testing span the prototyping and solutioning lifecycle. We run some of these tests very early and others much later and with a subset of our users. Let us set the stage with these traditional testing approaches:

Unit Testing. We need to test that a specific Proof of Concept or atomic user transaction or bit of customized code that we have developed does what we expect it to do.

Process Testing. We need to build on Unit Testing to validate that a string of developed code or string of previously tested user transactions works together as expected in the form of a process (oftentimes synonymous with a business process).

End-to-End Testing. We need to build on Process Testing to ensure that a collection of related processes works together to deliver an end-to-end business capability or feature, such as Order-to-Cash or Procure-to-Pay.

System Integration Testing. We need to test that our collection of capabilities and features (our solution) holistically works together as a fully integrated system. In particular, we need to ensure that one process doesn’t break another and that all processes receive their inputs as expected, execute as expected, and deliver their outputs as expected.

User Acceptance Testing (UAT). Finally, we need to give a subset of our users the chance to exercise and test our solutions before those solutions are finally promoted to production and made available to the whole of the community. Properly executed UAT validates not only that the system works as expected (a la happy path testing), but that it also reacts as expected when users use the system in unconventional or unexpected ways.

FIGURE 19.2

Traditional testing comprises Unit Testing, Process Testing, End-to-End Testing, System Integration Testing, and User Acceptance Testing.
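To make the first two levels of traditional testing concrete, consider the minimal Python sketch below. The order-handling functions (price_order, create_invoice, and order_to_invoice) are hypothetical and exist only for illustration. The first two tests exercise a single atomic transaction (Unit Testing, including one unhappy path), and the third validates that the transactions work together as a process (Process Testing).

```python
# Hypothetical order-handling functions, used only to illustrate test levels.
def price_order(quantity, unit_price):
    """Atomic transaction: compute the total for one order line."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return quantity * unit_price


def create_invoice(order_total, tax_rate):
    """Atomic transaction: add tax to an order total."""
    return round(order_total * (1 + tax_rate), 2)


def order_to_invoice(quantity, unit_price, tax_rate):
    """Process: string the two transactions together."""
    return create_invoice(price_order(quantity, unit_price), tax_rate)


def test_unit_price_order():
    # Unit test: one transaction, happy path.
    assert price_order(3, 10.0) == 30.0


def test_unit_rejects_bad_quantity():
    # Unit test: the transaction reacts as expected to unexpected input.
    try:
        price_order(0, 10.0)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a zero quantity")


def test_process_order_to_invoice():
    # Process test: the previously tested transactions work together.
    assert order_to_invoice(3, 10.0, 0.10) == 33.0


if __name__ == "__main__":
    test_unit_price_order()
    test_unit_rejects_bad_quantity()
    test_process_order_to_invoice()
    print("all tests passed")
```

End-to-End and System Integration Testing follow the same pattern at a larger scale, chaining whole processes rather than individual transactions.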

User Acceptance Testing does not represent the final form of traditional testing, however. As our solution is hardened or made ready for production, we also need to consider how that solution will perform under the weight of many users concurrently accessing the system, or reports being generated, or long-running batch processes or jobs being run behind the scenes. How will the system perform under these loads? Where are the system’s weak spots? What are the user community’s expectations around performance and availability? How might these expectations need to be reset to accommodate business or seasonal peaks or simply the capabilities of the system? How might we test and validate performance and scalability under different loads or conditions?

With so many questions to answer, it is paramount to consider three more performance-related types of testing and the questions posed here for each:

Performance Testing. How well does a single user transaction or a single process perform with no other load on the system? How does that single user transaction’s performance degrade as additional transactions are placed on the system?

Scalability Testing (also called usability testing at scale). How well does the system perform when the full user community is using the system as intended?

Load and Stress Testing. How well does the system perform under load or stress? What level of performance degradation might the user community experience at peak times in their season or business calendar? How might we set expectations with the community regarding performance in light of varying loads or various points in time?

Note that performance, scalability, and load and stress testing are prepped for as early as practical and actually performed once the solution is stabilized and ready for that particular type of testing. These final three performance-related testing approaches (along with others related to specific attributes of performance as well as security testing, user profile testing, and more) complete what we call our Traditional Testing Framework, as illustrated in Figure 19.3. Again, none of this is necessarily Design Thinking–inspired but rather simply a good set of user-centric practices for responsibly testing products and solutions prior to deploying those products and solutions for productive use.

FIGURE 19.3

The Traditional Testing Framework represents all eight traditional testing types (and others not specifically outlined here).

Finally, to validate and quantitatively measure our system’s performance, we need to instrument our system for performance and availability monitoring (which is yet again out of the scope of this book but important to share in the context of testing). What kinds of performance monitors and thresholds should we establish as part of Service Reliability Engineering to help us understand and manage our solution day to day and under load? What do we need to carefully watch, and what can we potentially automate so that we’re alerted before our solution runs into major performance problems?
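As one way to picture a performance monitor with thresholds, the Python sketch below classifies a batch of response times against a warning level and a critical level. The function names, the use of the 95th percentile, and the threshold values in the usage note are all assumptions made for illustration; real monitoring tools and service targets will differ.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_thresholds(latencies_ms, warn_ms, critical_ms, pct=95):
    """Classify observed response times against two alert thresholds.

    Returns a (status, value) pair, where value is the chosen percentile
    and status is "ok", "warning", or "critical".
    """
    value = percentile(latencies_ms, pct)
    if value >= critical_ms:
        status = "critical"
    elif value >= warn_ms:
        status = "warning"
    else:
        status = "ok"
    return status, value
```

Calling check_thresholds(samples, warn_ms=200, critical_ms=500) on the response times gathered during a load test, for example, would tell us whether we are drifting toward a problem before our users feel it. Setting the warning level well below the critical level is what buys us that early alert.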

Note

What Is SRE?

SRE, or Service Reliability Engineering (more widely known in the industry as Site Reliability Engineering), comprises the engineering, technical, and change control methodologies and procedures necessary to manage the reliability of a system and resolve reliability operations and infrastructure issues (often in an automated or self-healing kind of way). SRE is as much a culture or mindset of service reliability as it is a discipline. Service reliability engineers work to automate what can be responsibly automated as a way to avoid manually introduced issues (in the same way that we seek to automate perhaps 80 percent of our system’s regression testing as we make changes to that system, covered later this hour).

With our Traditional Testing Framework and the notion of Service Reliability Engineering in place, let us turn our attention to the Design Thinking techniques and tools that build on and add value to these long-standing mainstays of testing and monitoring.

Testing Techniques for Learning and Validating

Beyond the many types of traditional testing outlined previously, we also can lean on several Design Thinking-inspired testing techniques. Of course, Prototyping in all of its forms (covered in Hour 16) is one of our earliest testing methods. We prototype to test our ideas and test our partial solutions, after all. Beyond prototyping, we also test and validate our ideas and solutions through POCs, MVPs, and Pilots, too, as we covered in Hour 17.

But how might we better engage our users to prototype and test more deeply? What can we do differently to better ensure our solution meets their spoken and perhaps even unspoken needs? How might we learn those unspoken needs before our solution is ever even turned into an MVP or Pilot?

The answer to these questions lies with several techniques outlined next. Most of these Design Thinking techniques are associated with the iterative testing we do surrounding prototyping, but indeed every one of these techniques is useful throughout solutioning and even afterward when we find ourselves iterating on a solution already in production.

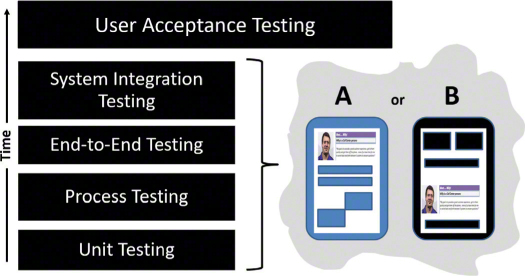

Design Thinking in Action: A/B Testing

A/B Testing is one of the simplest and yet most valuable testing techniques at our disposal. When we want to validate a preference around two features, or two aspects of an interface, or perhaps two different approaches to solving a problem, we can turn to A/B Testing. As the name implies, the idea is to test one alternative against another alternative, as we see in Figure 19.4. Users sometimes prefer this approach of comparing one feature to another feature, for example, rather than trying to explain why they don’t like a particular feature.

FIGURE 19.4

Consider how A/B Testing may be employed alongside traditional testing to determine a user community’s preference for one alternative over another.

Because it can be easily structured and rapidly executed, A/B Testing lends itself to quantitative evaluation. That is, from a user community of tens or hundreds or thousands, we can quickly determine where to next spend our time based on the likes and other feedback from the community. Use this technique early in prototyping and continue using this technique throughout testing and well after a product or solution has been deployed to production. A/B Testing is great for evaluating minor proposed changes to our production solution—for example, in the name of continuous feature testing and improvement.
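To illustrate the quantitative side of A/B Testing, the Python sketch below compares the share of users who liked alternative A against the share who liked alternative B using a standard two-proportion z-test. The function name and inputs are hypothetical, and the sketch assumes each user saw only one alternative and responded independently. Treat it as a rough guide, not a substitute for a proper experiment design.

```python
import math


def ab_preference(likes_a, shown_a, likes_b, shown_b):
    """Compare like rates for two alternatives with a two-proportion z-test."""
    rate_a = likes_a / shown_a
    rate_b = likes_b / shown_b
    # Pooled rate under the assumption that A and B are equally liked.
    pooled = (likes_a + likes_b) / (shown_a + shown_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / shown_a + 1 / shown_b))
    z = (rate_b - rate_a) / std_err if std_err > 0 else 0.0
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return {"rate_a": rate_a, "rate_b": rate_b, "z": z, "p_value": p_value}


if __name__ == "__main__":
    # Hypothetical counts: 40 of 200 users liked A, 70 of 200 liked B.
    print(ab_preference(40, 200, 70, 200))
```

A small p_value suggests the observed preference is unlikely to be chance alone. With only tens of users, the difference between A and B needs to be large before the numbers can be trusted, which is a good reason to pair the counts with the other feedback our users provide.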

Design Thinking in Action: Experience Testing

When we need valuable prototype insights from the only people who really matter—prospective users—turn to Experience Testing. This type of testing often overlaps with Structured Usability Testing (covered next), and it may be used as part of traditional End-to-End and System Integration Testing to assess a user’s experience with our prototype or proposed product or solution. Wireframes, rough and ready prototypes, and even simple line drawings can also serve as a source for Experience Testing.

Again, the idea is to gain early feedback from the very people who will presumably use our product or solution one day. Encourage these people to vocalize their likes, dislikes, and what they might change. Good products and solutions are generally viewed as intuitive to use. Confirm the extent to which this intuitiveness holds true, and capture what has been missed in design or the implementation of that design.

Practice Experience Testing early on. A product’s or solution’s intuitiveness can often be assessed very early in the design and development phases. As a product or solution matures, other modalities and methods can be used to gather such feedback too, ranging from traditional post-sprint or post-release User Acceptance Testing to the other Design Thinking techniques outlined this hour and techniques such as Silent Design outlined later in Hour 20.

Design Thinking in Action: Structured Usability Testing

To test and validate our prototypes early on with our users, turn to Structured Usability Testing. The idea is to confirm that a product or solution works effectively and is indeed usable. The difference between this kind of testing and traditional User Acceptance Testing is in the timing; Structured Usability Testing occurs well beforehand, when we have time to make fundamental product and solution changes.

The key to Structured Usability Testing lies in creating a uniform and repeatable environment for this testing. By establishing a plan that includes level-setting each user about the test’s purpose and goals, along with a sequenced set of test cases or scenarios to execute, our earliest users can help us accomplish a number of objectives:

Gain an early perspective from the very people who will use our product or solution one day, including how well the different personas interact with our early prototypes. Do some personas naturally understand our prototype better than others?

Validate our assumptions and early direction about the product or solution. Are we on the right track?

Validate the effectiveness of the inputs, processing, and outputs required by the product or solution. Are we missing something?

Confirm how intuitive our interface or design is based on questions asked or time spent by our users to navigate our product or solution. Did our users have to ask a lot of questions to actually use our prototype?

Consider product or solution performance including individual users’ thoughts and expectations. Is the system snappy, slow, or somewhere in between? Are certain aspects of the experience faster or slower than others?

Rate each user’s overall experience on a scale of 1 (deeply intuitive and satisfied) to 10 (unclear and unsatisfied), for example. Would additional context, user preparation, or training be effective?

In these ways, we can quickly pivot and change our design, our interface, the underlying technology, or even the fundamental nature of the product or solution based on quasi real-world experiences and feedback. Doing so earlier rather than later saves cost and time while creating much-needed clarity early on. The test lead or a test facilitator should consider videoing or in some other way recording these structured usability tests too so that the whole team can benefit from these early learnings. Such learnings constitute a portion of our user engagement metrics too.
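Because Structured Usability Testing is uniform and repeatable, its results lend themselves to simple roll-ups. The Python sketch below groups session results by persona and averages the overall rating (on the 1-to-10 scale described earlier, where lower is better) and the number of questions each user had to ask. The field names and the persona labels in the test data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def summarize_sessions(sessions):
    """Roll up usability sessions by persona.

    Each session is a dict with hypothetical keys: "persona", "rating"
    (1 = deeply intuitive, 10 = unclear), and "questions_asked".
    """
    by_persona = defaultdict(list)
    for session in sessions:
        by_persona[session["persona"]].append(session)

    summary = {}
    for persona, rows in by_persona.items():
        summary[persona] = {
            "users": len(rows),
            "avg_rating": mean(r["rating"] for r in rows),
            "avg_questions": mean(r["questions_asked"] for r in rows),
        }
    return summary
```

A persona with a noticeably higher average rating or question count is a signal that our prototype is less intuitive for that group, which answers the earlier question of whether some personas naturally understand our prototype better than others.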

Note

What Are User Engagement Metrics?

As we work through testing our products and solutions (and eventually deploying and operationalizing them), we need to track from our users how well they believe we are engaging them. Called User Engagement Metrics, this direct feedback and knowledge help us understand what we might need to do differently to make our testing more user-centric and effective.

Design Thinking in Action: Solution Interviewing

After we conclude traditional User Acceptance Testing, which is oftentimes the final type of testing we conduct prior to promoting a product or solution to production status, we need to confirm in another important way that our product or solution is truly “accepted.” We can accomplish this final checkpoint through Solution Interviewing.

Solution Interviewing builds on the static pass/fail results we tend to get from UAT. Users validating pass/fail for the transactions and business processes they will execute as part of their “Day in the Life of” is important feedback, to be sure. But it’s dry feedback. On the other hand, Solution Interviewing gives us the rich feedback we need to make smart updates to our products and solutions even if they are accepted.

Be sure to interview a breadth of users, and group or apply Affinity Clustering to key subsets or personas within the user community. Our goal is to determine first-hand and verbally what a user likes, dislikes, would like to be changed, and so on. Create a list of such questions organized around product features, solution capabilities, and so on.

Above all, ensure we listen much more than we talk. Lean on our listening and understanding skills acquired in Hour 6, using Active Listening, Silence by Design, Supervillain Monologuing, and Probing for Better Understanding techniques. Ideally, we should be able to create a backlog of new ideas and features that the team can consider, prototype, and iterate against.

One final point: Solution Interviewing is ideal for providing confirmation for soon-to-become production systems, but we should seek this confirmation prior to deploying MVPs and Pilots too. Use Solution Interviewing to build on the feedback obtained not just from preproduction UAT but from our earlier testing conducted prior to releasing MVPs and Pilots. In these ways we can learn and iterate earlier.

Design Thinking in Action: Automating for Regression Velocity

Automation is about velocity. We need to automate what we can, especially repetitive complex business processes subject to manual testing errors. Much has been written in blogs and other books about the need to automate something like 80 to 90 percent of our regression tests. The upfront investment in tools and scripting pays dividends when we’re 10 sprints into a release and need to quickly test changes and validate that bug fixes haven’t broken yet another part of our system.

Note

What Is Regression Testing?

After we make any kind of substantive change (and some would rightly argue any change) to a prototype, MVP, Pilot, or production system, we should run a series of functional and nonfunctional tests to validate that our new change didn’t break our existing system or any of our existing functionality. Such tests are combined together and labeled Regression Testing, as we are testing our system to validate that it still works as expected and has not regressed. If an existing feature “breaks” in light of our change, we say it has experienced a regression. To be clear, the problem might lie with our change, but it might also reflect a deficiency in our existing feature or code. Either way, something needs to be remediated.

It sounds tempting to fully automate our regression tests. But we need to take care not to automate every regression test case. Diminishing returns arrive quickly once we exceed 80 to 90 percent of our test surface area. Beyond that range, each new sprint requires script maintenance and each bug fix potentially breaks our scripts (sometimes erroneously, if the bug fix fails to actually remediate what it was intended to fix), so we eventually wind up doing more manual script maintenance than regression testing. Automate the easy test cases, automate the test cases especially prone to manual human error, and seek to automate complex test cases as much as feasible.
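At its simplest, an automated regression check reruns a set of known test cases after a change and compares the results against a recorded baseline. The Python sketch below shows that idea under stated assumptions: run_regression, the two discount functions, and the baseline values are all hypothetical, and a real suite would typically rely on a test framework and far richer test data.

```python
def run_regression(cases, baseline):
    """Return the names of test cases whose result differs from the baseline.

    cases is a list of (name, function, args) tuples; baseline maps each
    case name to the result recorded before the change was made.
    """
    regressions = []
    for name, func, args in cases:
        try:
            result = func(*args)
        except Exception as exc:
            # Treat a crash as a regression instead of stopping the run.
            result = f"error: {type(exc).__name__}"
        if result != baseline.get(name):
            regressions.append(name)
    return regressions


def apply_discount(total, percent):
    """Existing (hypothetical) feature: take a percentage off a total."""
    return round(total * (1 - percent / 100), 2)


def apply_discount_changed(total, percent):
    """Hypothetical change that accidentally caps discounts at 10 percent."""
    return round(total * (1 - min(percent, 10) / 100), 2)


# Results recorded from the system before the change.
BASELINE = {"ten_percent": 90.0, "quarter_off": 75.0}


def build_cases(func):
    return [
        ("ten_percent", func, (100, 10)),
        ("quarter_off", func, (100, 25)),
    ]


if __name__ == "__main__":
    print(run_regression(build_cases(apply_discount), BASELINE))
    print(run_regression(build_cases(apply_discount_changed), BASELINE))
```

Note that the baseline itself is something we have to maintain. Every intentional change in behavior means updating recorded results, which is one source of the script maintenance cost described above.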

Testing Tools for Feedback

Though we cover feedback in detail in the next hour, two user feedback capture tools are worth exploring here. These Design Thinking tools are used in the context of testing and can be useful across both traditional and Design Thinking-inspired types of testing.

The Testing Sheet. As the name implies, this is typically a one-page template useful for consistently gathering the kind of feedback we need to iterate on a prototype, MVP, or product or solution as is. We are looking for the learnings and insights our users have as they interact with our work-in-progress. Use this tool in conjunction with any of the traditional or Design Thinking–inspired techniques covered this hour. See Figure 19.5 for a sample Testing Sheet.

FIGURE 19.5

Consider this sample template to create and customize a Testing Sheet for our prototype, MVP, Pilot, or other product or solution.

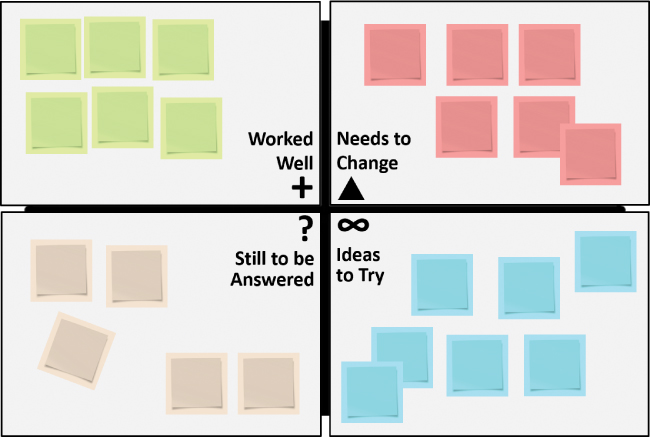

The Feedback Capture Grid. This simple 2×2 matrix tool provides the structure necessary for users to quickly test and record their findings, observations, and other learnings. Because it is pretty general in nature, the Feedback Capture Grid can also be used by attendees to capture their feedback after concluding a meeting, workshop, Design Thinking exercise, and so on. See Figure 19.6 for a sample Feedback Capture Grid.

FIGURE 19.6

Use a simple 2×2 Feedback Capture Grid to quickly record test feedback organized by what worked well, needs to change, still needs to be answered, and ideas to try.

Remember to capture customer and user verbatims and other feedback as accurately as possible. If we find it necessary to add our own details and comments alongside our users’ feedback, make it clear who exactly provided what feedback.
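For teams that capture feedback electronically, the Feedback Capture Grid maps naturally onto a small data structure. The Python sketch below is one possible shape, not a prescribed format: the class name, field names, and quadrant keys are assumptions. It stores every entry with its source and a flag showing whether the text is a user verbatim or our own added comment, so it stays clear who provided what.

```python
from dataclasses import dataclass, field

# The four quadrants of the grid, using hypothetical key names.
QUADRANTS = ("worked_well", "needs_change", "open_questions", "ideas_to_try")


@dataclass
class FeedbackGrid:
    session: str
    entries: dict = field(
        default_factory=lambda: {quadrant: [] for quadrant in QUADRANTS}
    )

    def add(self, quadrant, text, source, verbatim=True):
        """Record one piece of feedback along with who provided it."""
        if quadrant not in QUADRANTS:
            raise ValueError(f"unknown quadrant: {quadrant}")
        self.entries[quadrant].append(
            {"text": text, "source": source, "verbatim": verbatim}
        )

    def by_source(self, source):
        """List (quadrant, text) pairs contributed by one person."""
        return [
            (quadrant, entry["text"])
            for quadrant in QUADRANTS
            for entry in self.entries[quadrant]
            if entry["source"] == source
        ]
```

Marking our own notes with verbatim=False keeps them visibly separate from what users actually said.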

What Not to Do: Automate Everything

We might be tempted to fully automate our regression tests as time goes on and we see the repercussions from poorly executed manual testing. In the wake of a series of regression “misses” in their complex ERP (Enterprise Resource Planning) and web landscape, a well-known petrochemical company invested heavily in automated regression testing. The company intended to find bugs and validate the functionality of its existing features in the wake of regularly introduced updates well before its user community found issues in production.

But there’s a fine line between automating too little and automating too much, and this petrochemical company learned the hard way the cost of trying to automate everything.

First, its automation test tool didn’t support all of the user interface actions in the primary UI and provided even worse coverage on the mobile interface. So the time invested in automating perhaps 10 percent of the overall regression test cases was wasted. Second, as the UI technology itself was updated several times a year, the automation scripts would break. Each instance required time to troubleshoot and remediate another 10 percent of the scripts that didn’t survive those UI updates. The company found that the test tool provider’s annual update broke another subset of the scripts as well. These were easy fixes but required combing through the full regression test suite and making another round of script updates. And finally, as everyone fully expected, the regular feature updates and bug fixes coming every four weeks as scheduled required the team to do a good amount of script maintenance as well. The expected time and cost in this case were not a surprise, but combined with the other issues noted here, the expected return on investment in automated regression testing would be impossible to realize.

Summary

In Hour 19, we explored the Testing Mindset, eight traditional types of testing, and Service Reliability Engineering. With this foundation in place, we then turned our attention to five Design Thinking testing techniques for learning more about and validating our users’ needs. These techniques included Structured Usability Testing, A/B Testing, Experience Testing, Solution Interviewing, and Automating for Regression Velocity. Next, we explored the Testing Sheet and the Feedback Capture Grid, two tools for gathering richer feedback during testing. We concluded the hour with a “What Not to Do” focused on the misconception that automating everything is actually possible or financially prudent.

Workshop

Case Study

Consider the following case study and questions. You can find the answers to the questions related to this case study in Appendix A, “Case Study Quiz Answers.”

Situation

As each OneBank initiative engages its respective users in testing, Satish has noticed little consistency in how feedback is given or documented. Worse, he is seeing no consistency in how the testing is conducted and has asked you to look into this matter. Satish sees opportunities to bring people and teams together more effectively across the business and the technology organizations to test smarter. For starters, though, you have pulled together the test leads from most of the initiatives to level-set as well as share new approaches.

Quiz

1. What are the five traditional types of testing and the three performance-related types of testing that should be organized by every initiative’s test leads?

2. How would you explain the Testing Mindset?

3. What are the five Design Thinking testing techniques covered in this hour?

4. How is Structured Usability Testing similar to or different from a traditional type of testing?

5. What are the two Design Thinking testing tools we might use for capturing user feedback?

6. In terms of overall percentage of regression test cases, what number should the test leads target for Automated Regression Testing?