10

Positioning Event Processing in the IT World

In this chapter, we’ll explain how event processing contributes to business process management (BPM) initiatives. We’ll also look at the relationship between traditional business intelligence (BI) and business activity monitoring (BAM) systems. Finally, we’ll compare and contrast complex event processing (CEP) technology with rule engines and business rule management systems (BRMSs).

Events in Business Process Management

BPM projects are more successful when they use event-driven architecture (EDA) and CEP appropriately. BPM encompasses two related concepts:

In some contexts, it is a discipline for designing, simulating, monitoring, and optimizing systems in a deliberate and systematic way that is conscious of end-to-end business processes.

Elsewhere, it refers to the use of BPM software, such as orchestration engines and workflow engines, at run time to direct the sequence of execution of software components and human activity steps in a process.

Both concepts of BPM always involve events, but they don’t always involve event objects or the discipline of event processing. The next two sections explain the role of events in BPM initiatives.

The BPM Discipline

The BPM discipline is a collection of methods, policies, metrics, management practices, and tools used to design, run, and manage systems that support a company’s business processes. Business managers, users, business analysts, and software engineers all have roles to play when using a BPM approach.

The life cycle of a business process begins with process discovery, analysis, and design. Process modelers develop an understanding of how the business should work, not just how it currently works. The goal is to avoid re-creating a suboptimal process in new technology (“paving the cow paths”). Business processes and data are modeled to develop a “to be” business architecture—a conceptual view of how things will be done in the future. A process modeler, usually a business analyst or system analyst, will take the lead in developing and documenting the logical rules for the sequence of activities and data flows. This is the basis for determining which aspects of the process should be automated in application software and which should be done manually or in a machine of some kind. This leads to subsequent steps in the development cycle, including detailed design, coding, testing, and deployment, and then maintaining application systems and databases.

Note: The maxims that system design should begin at a business level and that it should reflect a broad, end-to-end view of the process have been part of good IT practices for many decades.

The origin of systematic approaches to business process design can be traced to Frederick Winslow Taylor, the inventor of scientific management and the time-and-motion study, who did his major work between 1880 and 1915. Scientific management provided an early foundation for the much-later business process re-engineering (BPR) movement that was inspired by Hammer and Champy’s seminal 1993 book Reengineering the Corporation: A Manifesto for Business Revolution (see Appendix A).

The underlying principles of modern BPM reflect the insights of scientific management and BPR. However, modern BPM puts more emphasis on continuous change and ad hoc variability. The process and the business environment in which the process lives are constantly monitored and measured. Business processes are adjusted frequently, and hence the terms “continuous process improvement” and “business process improvement” have become goals for many advanced development organizations. Processes are becoming better able to support instance agility because they allow certain flow decisions to be made by a person dynamically at run time. Activities may be skipped or executed out of order, or additional activities may be inserted into the process. This is especially important for semistructured business processes that cannot be fully planned in advance.

Software vendors offer a variety of sophisticated tools for business process analysis, modeling, and simulation. However, many BPM initiatives are still carried out with manual analysis and simple drawing tools.

BPM Software

BPM software manages the flow of business processes at run time. It keeps track of each instance of a business process, using rules to evaluate events as they occur and programmatically activating the next step for each instance at the appropriate time. Vendors offer several types of run-time BPM software products, including orchestration engines, workflow products, composite application tools, and other BPM tools. Orchestration tools primarily control activities that are executed by software agents. Workflow tools primarily control activities that are executed by people.

BPM software is sold as a separate product or bundled into a business process management suite (BPMS), enterprise service bus (ESB), packaged application suite, or other application infrastructure product. A BPMS is the most complete of the product types that offer run-time BPM software. It includes tools for all of the aspects of the BPM discipline, including process modeling, analysis, simulation, and run-time monitoring and reporting.

The sequence of execution and the rules for controlling the conditional flow of a process are mostly or entirely specified at development time by a software engineer or a business analyst. Most process development tools have a graphical interface, although sometimes a scripting language or traditional programming language can be used. Modern BPM also seeks to make it possible for power users and managers outside the IT department to directly modify a business process in certain, limited ways without the direct involvement of IT staff.

The BPM discipline doesn’t require the use of BPM software at run time. A process can be designed with a formal BPM methodology and modeled and simulated with BPM design tools, but still be instantiated as an application system that doesn’t use run-time BPM software. When run-time BPM software is not used, the process flow is controlled by scripts, application programs, or direct human control. Conversely, run-time BPM software can be used without a formal BPM design program. However, the trend in application development projects is toward using both.

BPM is naturally complementary to service-oriented architecture (SOA). SOA’s modular nature and well-documented interfaces can reduce the effort required to modify or add activities in a business process. SOA applications are more likely to use BPM engines at run time than traditional (non-SOA) applications are.

Note: SOA can be successful without using either development-time BPM design tools or run-time BPM orchestration or workflow software. However, you should never develop an SOA application without considering the architecture of the business process and considering the end-to-end business process model prior to development.

BPM and EDA

Business processes always involve business events in a general sense. However, they aren’t event-driven in every aspect, nor do they use EDA for every interaction. As you know, most processes are a mix of event-, request-, and time-driven interactions. We’ll describe the relationship between BPM and EDA in this section. The next section describes the role of CEP in BPM.

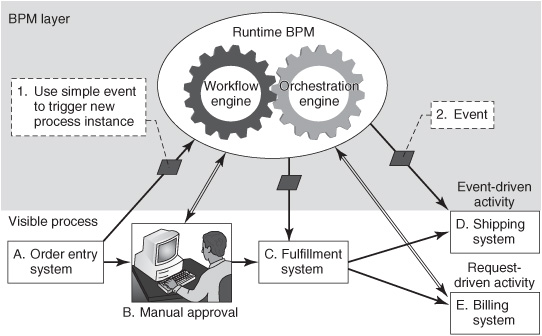

The disciplines of event processing and BPM sometimes use different terms to describe the same phenomena. Consider an order-to-cash business process consisting of the five steps shown in the visible process at the bottom of Figure 10-1:

A. Capturing a customer’s order

B. Performing a manual approval if the order has some unusual characteristics

C. Filling the order

D. Shipping the goods

E. Issuing a bill (concurrently with shipping)

Figure 10-1: Using EDA with BPM.

In event-processing terminology, running through this whole process once to implement a specific action such as “Fred Smith buys a laptop” would be a coarse-grained business event. In BPM terminology, this would be a “process instance” or “business transaction.”

In both BPM and event-processing terms, the process instance is initiated by a business event: capturing Fred’s laptop order in the order entry system (A). The order-capture event is fine-grained. It is only one of many business events that take place within the larger “Fred Smith buys a laptop” event or process instance. In event-processing terms, manual approval (B), order fulfillment (C), shipping the goods (D), and issuing a bill (E) would also be business events. Within each, additional layers of more-detailed events might be identified, studied, and implemented. In BPM terms, each of these steps is an “activity.” The event-processing perspective describes the change that occurs, whereas the BPM perspective describes the actions that carry out the change, but they’re referring to the same part of the same process.

The relationships among agents that implement this business process can be structured in an event- or request-driven manner, or a combination of the two. Assume that BPM software is being used to orchestrate the flow of this process. The order-entry application (A) can inform the run-time BPM software that a new order has arrived by sending a notification containing an event object that says “Fred Smith just submitted an order for a laptop” (see “1. Use simple event to trigger new process instance” in Figure 10-1). Each event in an event stream from application A would trigger a new process instance (a separate order). Alternatively, application A could have triggered the start of a new process instance in a non-event-driven way by invoking a request/reply web service that instructed the BPM engine to “enter new laptop order for Fred Smith and send a reply back to acknowledge that the order had been received.” Either way, the BPM software would create a new process instance and assume control of the process, triggering steps B, C, D, and E in succession until the process instance for Fred’s purchase had completed.

The choice between an EDA or a request-driven style of interaction between application A and the run-time BPM engine has implications for the looseness of the coupling and the agility of the application. But either technique accomplishes the goal of signaling the occurrence of a business event that initiates a new business process instance. Similarly, the BPM software can trigger each subsequent activity step by issuing a request (steps B and E) or by sending event notifications (steps C and D). The notification that triggers step D is labeled “2. Event” in Figure 10-1. In some cases, an activity or the whole process can be time-driven rather than request- or event-driven (not shown in Figure 10-1).

The run-time BPM software (workflow engine or orchestration engine) must be aware of certain events to know when to start or stop activities. For example, it must learn that a previous step has concluded before it starts the next step. Again, an EDA-style notification can be used to convey this information within the run-time BPM software, or a request-driven mechanism may be used.
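The two ways of starting a process instance described in this section can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: `ToyBpmEngine`, `on_event`, `start_process`, and the step names are hypothetical and do not correspond to any product's API. The steps run synchronously and in sequence, so the concurrency of shipping and billing is not modeled.

```python
from dataclasses import dataclass, field
from itertools import count

# Hypothetical names for steps B through E of the order-to-cash example.
FOLLOW_ON_STEPS = ["manual_approval", "fill_order", "ship_goods", "issue_bill"]


@dataclass
class ProcessInstance:
    instance_id: int
    order: dict
    # Step A (order capture) has already happened in the order-entry application.
    completed: list = field(default_factory=lambda: ["capture_order"])


class ToyBpmEngine:
    """Illustrative stand-in for run-time BPM software, not a real product API."""

    def __init__(self):
        self._ids = count(1)
        self.instances = {}

    def _create_and_run(self, order):
        instance = ProcessInstance(next(self._ids), order)
        self.instances[instance.instance_id] = instance
        for step in FOLLOW_ON_STEPS:
            # Step B applies only to orders flagged as unusual.
            if step == "manual_approval" and not order.get("unusual", False):
                continue
            instance.completed.append(step)
        return instance

    def on_event(self, event):
        """Event-driven start: the sender emits a notification and expects no reply."""
        if event.get("type") == "order_submitted":
            self._create_and_run(event["order"])

    def start_process(self, order):
        """Request-driven start: the caller gets an acknowledgment back."""
        instance = self._create_and_run(order)
        return {"status": "received", "instance_id": instance.instance_id}
```

Either entry point creates the same kind of process instance. The difference is in the coupling: the sender of the event does not wait for, or depend on, a reply, whereas the requester does.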

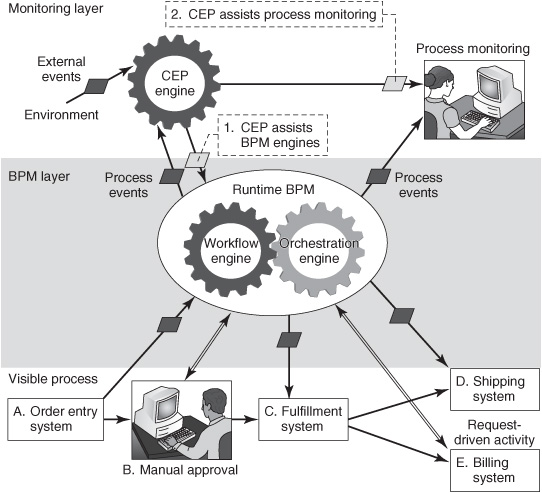

BPM and CEP

Conventional BPM engines control the process flow by applying rules that refer to things that have happened to the application software that is implementing the business process (in this case, it would be steps A through E). A recent advance in BPM technology involves the use of CEP software to augment the intelligence of run-time BPM software (see “1. CEP assists BPM engines” in Figure 10-2). The CEP engine gets information about events that occur outside this business process from sources such as sensors, the Web, or other application systems. It also gets information about events that are occurring within the business process from the BPM software. From these base events, it can synthesize complex events. These are forwarded to the BPM software to enable sophisticated, context-dependent, situation-aware flow decisions. Only a few BPM engines have this capability today, but we expect this to become more common in the future.
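As a rough illustration of how a CEP engine might synthesize a complex event from base events, the following Python sketch correlates an internal process event with an external one. The event types (`order_filled`, `storm_warning`, `shipment_at_risk`), the one-hour window, and the class name are all assumptions made for this example. It also assumes that events arrive in time order.

```python
from collections import deque

WINDOW_SECONDS = 3600  # assumed correlation window


class ToyCepEngine:
    """Illustrative only: pairs an internal event with an external one."""

    def __init__(self, window=WINDOW_SECONDS):
        self.window = window
        self.recent = deque()  # base events still inside the window

    def submit(self, event):
        """Accept one base event and return any complex events it completes."""
        now = event["time"]
        # Discard base events that have aged out of the window.
        while self.recent and now - self.recent[0]["time"] > self.window:
            self.recent.popleft()
        complex_events = []
        for earlier in self.recent:
            types = {earlier["type"], event["type"]}
            same_region = earlier["region"] == event["region"]
            if types == {"order_filled", "storm_warning"} and same_region:
                order = earlier if earlier["type"] == "order_filled" else event
                complex_events.append(
                    {"type": "shipment_at_risk", "order_id": order["order_id"], "time": now}
                )
        self.recent.append(event)
        return complex_events
```

A BPM engine that received the hypothetical `shipment_at_risk` event could then choose a different path for that process instance, such as rerouting the shipment.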

Most commercial run-time BPM software products have a BAM dashboard feature for process monitoring (this is sometimes called “process intelligence”). Run-time BPM software emits notifications to report events that occur in the life of each process instance. The monitoring software captures these events, tracks the history of each instance, calculates average process duration and other statistics, and reports on the health of the operation through the dashboard and other notification mechanisms. The monitor can give early warnings of anomalies for individual process instances and for aggregates (groups of instances, such as all orders for computers submitted in the past week). Process-monitoring dashboards generally enable the user to drill down into the detailed history of an individual process instance when necessary.
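The monitoring behavior described above can be sketched as follows. This is a minimal example, assuming hypothetical notification types (`instance_started`, `instance_completed`) and a single fixed warning threshold; real process monitors track far more detail.

```python
from statistics import mean


class ToyProcessMonitor:
    """Illustrative monitor fed by notifications from run-time BPM software."""

    def __init__(self, warn_after):
        self.warn_after = warn_after  # assumed threshold, in seconds
        self.started = {}             # instance_id -> start time of open instances
        self.durations = []           # durations of completed instances

    def on_notification(self, event):
        if event["type"] == "instance_started":
            self.started[event["instance_id"]] = event["time"]
        elif event["type"] == "instance_completed":
            start = self.started.pop(event["instance_id"])
            self.durations.append(event["time"] - start)

    def average_duration(self):
        """Aggregate statistic across completed instances."""
        return mean(self.durations) if self.durations else None

    def overdue(self, now):
        """Early warning: open instances that have run longer than the threshold."""
        return sorted(i for i, t in self.started.items() if now - t > self.warn_after)
```

A dashboard could poll `average_duration` for the aggregate view and use `overdue` to flag individual instances for drill-down.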

All process monitors implement a basic form of CEP in the sense that they apply rules and perform computations on multiple event objects to calculate what is happening in a business process. However, process monitors are not general-purpose CEP engines, and most process monitors don’t listen to events from outside the managed business process (that is, all of their input is derived from internal process events happening within applications A through E). That said, a few leading-edge projects have linked CEP engines with process-monitoring tools (see “2. CEP assists process monitoring” in Figure 10-2) to provide a broad, robust, situation-awareness capability that encompasses both internal process events and external business events. We expect that this will become more common in the future.

Figure 10-2: Using CEP with BPM.

Process monitoring can also be implemented in scenarios where there is no run-time BPM software (not shown in Figure 10-2). Business processes that are not controlled by run-time BPM software are more common than those that are. Businesspeople still want visibility into what is happening in those processes, so they may deploy a process monitor and BAM dashboard. Supply chain management is a type of process monitoring that usually is implemented without run-time BPM software, or with only small parts of the supply chain under the control of run-time BPM software.

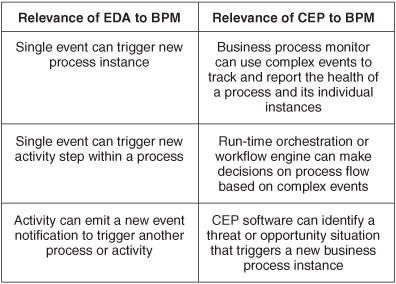

Summary of Event Processing in BPM

The BPM and event-processing disciplines evolved independently, but they are beginning to influence each other more heavily as CEP moves beyond technical domains to support business applications, and as BPM becomes more sophisticated and dynamic. The relevant technologies are a good fit with each other, and the resulting synergy produces better process management and business decisions (see Figure 10-3). A combined discipline is forming under the label “Event-Driven Business Process Management” (EDBPM) encompassing a body of work on technology and best practices.

Figure 10-3: Summary of event processing in BPM.

Event Processing in Business Intelligence

Enterprises use business intelligence (BI) systems to make better decisions. BI applications can be categorized as analyst-driven, process-driven, or strategy-driven. Analyst-driven systems, the most common type of BI applications, rarely use event-driven CEP. Some process-driven BI systems deal with near-real-time information; these are known as business activity monitoring (BAM) systems. BAM systems leverage event-driven CEP extensively. Strategy-driven BI systems, including performance management systems, occasionally use event-driven CEP. This section explains these three kinds of BI in more detail and then describes the relationship between BI and event processing.

Analyst-Driven BI

Analyst-driven BI applications primarily serve analysts and knowledge workers. They provide in-depth, domain-specific analysis and information delivery using ad hoc queries, periodic reports, data mining, and statistical techniques. This style of BI answers questions such as:

Do we have enough account executives on the street to meet our sales quota?

Which customer segments are buying the most?

Should we retire a product line because it is underperforming?

BAM

BAM provides situation awareness and access to current business performance indicators to improve the speed and effectiveness of operations. It’s a type of continuous intelligence application and it uses CEP in most usage scenarios.

BAM differs from analyst-driven BI in fundamental ways:

BAM systems are typically used by line managers or other businesspeople charged with making immediate operational decisions. In contrast, analyst-driven BI systems are used mostly by staff people preparing recommendations for strategic or tactical decisions.

Much of the input for BAM is event data that has arrived within the past few seconds or minutes. Historical data is used to put the new data in context and to enrich the information before it is distributed. The event data is often in a fairly raw form: observational notifications, sometimes with missing, out-of-order, or unverified messages. BAM data is often held in memory for immediate processing, and in some cases, it isn’t saved on disk. However, some BAM applications need access to vast amounts of historical data. They may use in-memory databases with specialized data models such as vector database management systems (DBMSs) or real-time, in-memory variants of online analytical processing (OLAP) technology. In contrast, analyst-driven BI relies mostly on historical data from previous days, weeks, months, or years. Analyst-driven BI data is a mix of event, state, and reference data. Most data used in analyst-driven BI has been validated, reformatted into a database schema that is optimized for queries (such as conventional OLAP), and stored in a data warehouse or data mart on disk.

Most BAM systems run continuously, listening to incoming events and communicating with businesspeople through dashboards, e-mail, or other channels. BAM systems typically use a mix of event-driven (push) and request-driven (pull) communication to interact with users. The event-driven communication alerts people when something significant happens. Users then make requests to drill down into root causes or look up information needed to formulate a response. In contrast, most analyst-driven BI is request-driven, where the end user submits ad hoc queries or asks “what if” questions through a spreadsheet that sits in front of a database. Alternatively, analyst-oriented BI is sometimes time-driven, using periodic batch reports.

Some BAM systems execute a response through a computer system, actuator, or other fully automated mechanism. In contrast, analyst-driven BI always involves a human decision maker.

Strategy-Driven BI

Strategy-driven BI, the third category of BI, is used to measure and manage overall business performance against strategic and tactical plans and objectives. These applications typically serve corporate performance management (CPM) and other performance management purposes. They provide visibility into such issues as:

Are we on track to meet our monthly sales targets?

Will any of our operating divisions overspend their capital budgets this quarter?

Formal performance management applications are less common than analyst-driven BI or BAM systems. Most managers still use less-systematic approaches to performance management, such as spreadsheets or manual analysis of traditional reports. However, performance management systems for sales, marketing, supply chain, HR, and other areas are becoming more common for the same reason that operational decision-making BAM is becoming more common: the growing amount of data available in electronic form.

Performance management systems differ from BAM systems in several ways:

Performance management system users are typically at a higher level in the organization than BAM system users. Executives, senior managers, and department heads use performance management systems, whereas lower-level line managers or functional decision makers are more likely to use BAM systems.

Performance management dashboards differ drastically from BAM dashboards, although most of the input data for both kinds of dashboards are event data. Performance management dashboards generally show summary-level data from one or more business units collected over many days or weeks. Totals and averages are compared against targets, often using scorecard-style displays. In contrast, most data used in BAM systems is only a few seconds or minutes old and the data is summarized at a lower level.

Decisions made on the basis of performance management systems typically have a medium-term time horizon. If nothing is done for a few hours or days, it often doesn’t matter. In contrast, BAM systems are generally targeted at more-urgent, although often narrower and less consequential, decisions. Decisions made on the basis of BAM may only affect a small part of the company’s work, such as one or two customers or one day’s results.

Performance management systems are used by people. The issues tend to be complex and require human judgment. In contrast, some BAM decisions are simple enough to fully automate. The majority of BAM systems still involve human users too, but automated decisions and responses are becoming more common over time.

Positioning BI and Event Processing

In the language of event processing, all three kinds of BI systems perform CEP because they compute complex business events from simpler base events. However, BI users and developers rarely think of events or CEP in a formal or explicit manner. They are aware that they are dealing with things that happen, such as business transactions, but they don’t think of them as “event notifications,” “business events,” or “complex events.”

Note: Typical Interaction Patterns Used in Business Intelligence Systems

Analyst-driven BI is usually request-driven, but occasionally time-driven.

BAM, the near-real-time aspect of process-driven BI, relies heavily on event-driven CEP for data collection and the initial notification, and then supports request-driven inquiries for subsequent drilldown.

Performance management and other strategy-driven BI uses time-driven, periodic reports as its primary mode of interaction.

BAM is the only type of BI that makes heavy use of message-oriented middleware (MOM), low-latency event-stream processing (ESP) engines, real-time, in-memory DBMSs, and other trappings of event-driven CEP. Other kinds of BI are more likely to leverage traditional BI analytical tools and DBMS technology that can support very large databases and OLAP data models. Those technologies provide rich analytical capabilities for ad hoc inquiries into historical event data and other data.

Companies that invest in formal, systematic BI programs generally try to standardize BI data models, metrics, and tools across a broad swath of their business units and management levels. However, BAM initiatives are generally outside the scope of such programs. Most BAM implementations are “stovepiped”—they are associated with a single functional area, process, or application system. The most common way of acquiring BAM capabilities is to buy a packaged application that includes a dashboard as an accessory. The second most common way of acquiring BAM is to implement a dashboard or other monitoring capability as part of a custom application development project.

Piecemeal, stovepiped BAM can be quite valuable because it provides timely visibility into the key metrics that matter to a particular task. However, it falls short of providing the comprehensive situation awareness that is helpful for certain decisions. Even today, some businesspeople use two or three different BAM systems as part of their job. As the number of stovepiped BAM systems grows within a company, the company naturally acquires MOM, CEP suites, visualization tools, analytical tools, and, most importantly, experience in implementing BAM. This provides the foundation for improved, more holistic BAM systems. Over time, we expect that BAM stovepipes will become somewhat more integrated. It will be more common to see a single BAM dashboard (or “cockpit”) provide monitoring that spans multiple application systems and business units.

This doesn’t mean that one dashboard will satisfy the needs of everyone in a company. BAM dashboards must implement very different views of the situation for each business role. The view of a supply chain will look different on a BAM dashboard on the loading dock than it does on the sales manager’s BAM dashboard or the fleet manager’s BAM dashboard. And the performance management dashboards for mid- and upper-level managers will inherently differ from the BAM dashboards used by lower-level managers and individual contributors.

BI professionals and BAM developers will need to collaborate more often in the future. Businesspeople are pressing BI teams to provide more-current data, and they’re also pressing BAM developers to use more historical data to put real-time event data into context. Most of the underlying business-event objects and other data are the same for conventional BI and BAM systems. Both kinds of systems ultimately draw from the notifications that are generated in transactional applications. Architects and data administrators will be able to improve both types of applications by developing their understanding of the flow of transactional and observational notification data between applications. Conventional BI and BAM won’t merge, because the kinds of decisions and the end-user interaction patterns are inherently different. However, they will need to exchange data more often and cooperate more closely on defining data semantics.

Rule Engines and Event Processing

In Chapter 1 we observed that CEP software is a type of rule engine. In this section, we’ll clarify the similarities and differences between CEP software and other kinds of rule engines used in business applications.

Business Rule Engines

A business rule engine (BRE) is a software component that executes business rules that have been segregated from the rest of the application software. Most rules are expressed declaratively rather than procedurally, which implies that the developer has provided a description of what is supposed to be done rather than providing a step-by-step sequential algorithm for how to perform the computation. The BRE maps the declarative rules into the computer instructions that will implement the logic. A BRE can be physically bound into the application program or it can run independently and communicate with the application by exchanging messages at run time (regardless of whether it runs on the same computer or elsewhere in the network). BREs evolved from expert systems that originally appeared in the 1980s.
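The idea of rules kept apart from application logic and stated as conditions rather than as a step-by-step algorithm can be illustrated with a small sketch. The `Rule` type, the two sample rules, and the `evaluate` function are hypothetical. A real BRE would typically offer its own rule language and more efficient matching than this linear scan.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    when: Callable[[dict], bool]  # condition over the facts
    then: str                     # name of the action to recommend


# Hypothetical rules, held as data apart from the application code.
RULES = [
    Rule("large_order_needs_approval",
         lambda facts: facts["amount"] > 1000,
         "route_to_manual_approval"),
    Rule("platinum_gets_free_shipping",
         lambda facts: facts["tier"] == "platinum",
         "waive_shipping_fee"),
]


def evaluate(rules, facts):
    """Toy engine: return the actions of every rule whose condition holds."""
    return [rule.then for rule in rules if rule.when(facts)]
```

The application only supplies facts and receives recommended actions. Adding or changing a rule means editing the rule list, not the calling code.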

Business Rule Management Systems

BREs are often packaged into larger, more-comprehensive BRMS products that incorporate a variety of complementary features. A BRMS is more than a rule execution engine and development environment. It facilitates the creation, registration, classification, verification, and deployment of rules. It incorporates support for:

- A rule repository
- An integrated development environment (IDE)
- Rule-model simulation
- Monitoring and analysis
- Management and administration
- Rule templates

A BRMS contains development tools to help power users, business analysts, and software engineers specify and classify the business rules. It stores rules and checks them for logical consistency. For example, a rule that says “if the credit verification system is down, no purchase over $100 can be approved” would conflict with another rule that says “if the customer is rated as platinum level, they can buy up to $500 of merchandise without credit verification.” In practice, most companies’ business rules, whether on paper, in computer systems, or just in people’s heads, are rife with logical inconsistencies of this type. One of the major benefits of a BRMS is that it can identify the relationships among rules, expose many of the inconsistencies, and make it easier for users to resolve them.
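To make the credit-verification example concrete, here is a minimal sketch of a consistency check. It is not how any particular BRMS works: it simply enumerates sample scenarios and reports those in which two rules fire with opposite verdicts. The `Rule` class, the field names (`verification_down`, `tier`, `amount`), and the sample values are all hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List, Tuple

Scenario = Dict[str, object]

@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[Scenario], bool]  # condition over a purchase scenario
    approve: bool                        # verdict when the condition holds

# Hypothetical encodings of the two rules quoted in the text.
RULES = [
    Rule("verification-down-limit",
         lambda s: s["verification_down"] and s["amount"] > 100,
         approve=False),
    Rule("platinum-allowance",
         lambda s: s["tier"] == "platinum" and s["amount"] <= 500,
         approve=True),
]

def sample_scenarios() -> List[Scenario]:
    """Enumerate a small grid of scenarios around the rule thresholds."""
    return [
        {"verification_down": down, "tier": tier, "amount": amount}
        for down, tier, amount in product(
            [True, False], ["standard", "platinum"], [50, 100, 101, 500, 501])
    ]

def find_conflicts(rules: List[Rule],
                   scenarios: List[Scenario]) -> List[Tuple[Scenario, List[str]]]:
    """Return scenarios in which the fired rules disagree on the verdict."""
    conflicts = []
    for s in scenarios:
        fired = [r for r in rules if r.applies(s)]
        if len({r.approve for r in fired}) > 1:
            conflicts.append((s, [r.name for r in fired]))
    return conflicts

conflicts = find_conflicts(RULES, sample_scenarios())
for scenario, names in conflicts:
    print(scenario, "->", names)
```

The conflicts surface only for platinum customers buying between $100 and $500 while verification is down, which is exactly the overlap a user would need to resolve, for instance by giving one rule priority.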

A BRMS is based on the premise that business rules usually change more often than the rest of the application, so the application will be easier to modify if the rules are managed and stored separately from the other logic. When business conditions change, a rule can be modified on the fly without recompiling the rest of the application system. Moreover, the rules are shareable among multiple applications, so that when the rules change, all applications that use the same rules automatically begin using the new version.
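The idea of keeping rules outside the application can be illustrated with a small sketch. Here the rule is held as data in a JSON document and reloaded at run time, so the application function never changes. The `RuleStore` class and the `max_unverified_purchase` parameter are hypothetical, and a real BRMS repository offers far more (versioning, verification, deployment control).

```python
import json

# Two versions of an externally managed rule document (hypothetical format).
RULES_V1 = '{"max_unverified_purchase": 100}'
RULES_V2 = '{"max_unverified_purchase": 250}'

class RuleStore:
    """Holds rule parameters separately from application logic."""

    def __init__(self, document: str):
        self.load(document)

    def load(self, document: str) -> None:
        # Replace the active rule set without touching application code.
        self._rules = json.loads(document)

    def get(self, name: str):
        return self._rules[name]

def can_approve(store: RuleStore, amount: float) -> bool:
    """Application logic: unchanged no matter which rule version is active."""
    return amount <= store.get("max_unverified_purchase")

store = RuleStore(RULES_V1)
before = can_approve(store, 200)

store.load(RULES_V2)   # business conditions changed; only the rule is updated
after = can_approve(store, 200)
```

Any other application that shares the same `RuleStore` instance would pick up the new limit at the same moment.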

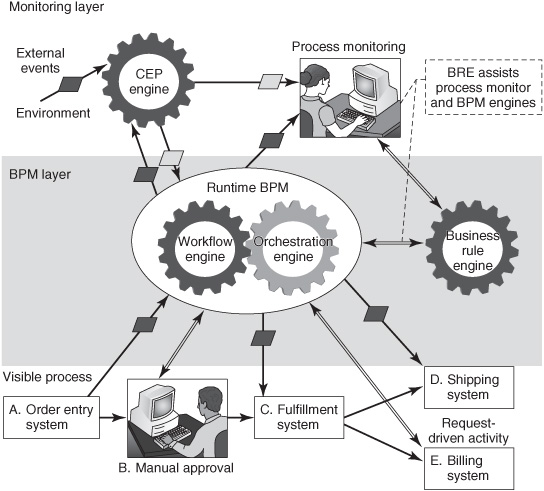

BRMS and CEP Engine Similarities

CEP software has many characteristics in common with BRMS BREs. Both are rule engines that externalize business rules and both are commonly packaged as discrete software components. Much of the data that is processed by a BRE or CEP engine is event data. Businesspeople must be involved in preparing the rules for both types of engines, although BRMS products have a longer track record for providing development tools that are used directly by power users. Both types of engines can be used to support human decision making or to compute fully automated decisions. Both have database adaptors that allow them to look up historical or reference data as part of their processing logic. BREs are sometimes used to complement the operation of run-time BPM engines in a manner that is similar to the way CEP engines complement them (see Figure 10-4). However, because BREs and CEP engines serve different purposes, they use different algorithms and are constructed differently.

Figure 10-4: Using a BRE and CEP engine with run-time BPM.

Request-Driven vs. Event-Driven

BREs are typically request-driven. An application program working on a business transaction needs to make a decision on how to proceed. Instead of having the business rules embedded in its application code, the application invokes the BRE to derive a conclusion from a set of premises. The BRE, called a production system or inference engine, then swings into action to perform the computation and return the result to the application. The general model for a BRE rule is “If <some condition> then <do action X> or else <do action Y>.” In many applications, multiple if-then-else rules must be resolved to make one decision—in some cases, hundreds of such rules.
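A minimal sketch of the request-driven pattern follows. The application calls `decide` with the facts for one transaction and gets one answer back. The rule names, fact fields, and actions are hypothetical, and the rules are simply evaluated in order, which is far cruder than the matching done by a real inference engine.

```python
from typing import Callable, Dict, List, Tuple

Facts = Dict[str, object]
# (rule name, condition, action if true, action if false)
Rule = Tuple[str, Callable[[Facts], bool], str, str]

RULES: List[Rule] = [
    ("card-status", lambda f: bool(f["card_active"]), "continue", "decline"),
    ("amount-limit", lambda f: f["amount"] <= 500, "continue", "refer"),
]

def decide(facts: Facts) -> str:
    """Invoked by the application; resolves the if-then-else rules for one request."""
    for _name, condition, then_action, else_action in RULES:
        action = then_action if condition(facts) else else_action
        if action != "continue":
            return action          # first rule that settles the matter wins
    return "approve"

print(decide({"card_active": True, "amount": 120}))
```

Nothing happens inside `decide` until an application asks for a decision, and no state is kept between calls.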

By contrast, CEP engines are event-driven. They run continuously, processing notification messages as soon as they arrive, in accordance with the principles of EDA. Recent events are stored in the CEP engine, and additional input event data may arrive every second or minute. The notion of automatic, implicit inference does not exist in most CEP engines. However, a CEP engine can compute a complex event that is then fed into another CEP computation to implement a type of explicit forward chaining. The counterpart to a BRE if-then-else rule is a CEP when-then rule: “When <something happens or some condition is detected> then <do action X>.” The counterpart to a BRE “else” clause is a CEP clause that says “When <something has not happened in a specified time frame> then <do action Y>.”
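The when-then form, including the time-based counterpart of an “else” clause, can be sketched as follows. This is an illustration under assumed names (`OrderMonitor`, the `order_placed` and `order_shipped` event kinds, a shipping deadline in seconds), not the rule language of any CEP product. The monitor keeps recent events in memory and reacts as each notification arrives.

```python
from typing import Dict, List, Tuple

class OrderMonitor:
    """Hypothetical event-driven monitor with a presence rule and an absence rule."""

    def __init__(self, ship_deadline: float):
        self.ship_deadline = ship_deadline
        self.pending: Dict[str, float] = {}        # order id -> time it was placed
        self.actions: List[Tuple[str, str]] = []   # (action, order id) emitted so far

    def on_event(self, kind: str, order_id: str, ts: float) -> None:
        self.advance_clock(ts)
        if kind == "order_placed":
            self.pending[order_id] = ts
        elif kind == "order_shipped":
            # When <shipment arrives for a pending order> then <confirm>.
            if self.pending.pop(order_id, None) is not None:
                self.actions.append(("confirm", order_id))

    def advance_clock(self, now: float) -> None:
        # When <no shipment within the deadline> then <escalate>.
        overdue = [oid for oid, placed in self.pending.items()
                   if now - placed > self.ship_deadline]
        for oid in overdue:
            del self.pending[oid]
            self.actions.append(("escalate", oid))

monitor = OrderMonitor(ship_deadline=60)
monitor.on_event("order_placed", "A", ts=0)
monitor.on_event("order_placed", "B", ts=10)
monitor.on_event("order_shipped", "A", ts=30)
monitor.advance_clock(100)   # time passes with no shipment for B
print(monitor.actions)
```

The absence rule needs a notion of passing time, so the sketch advances its clock on every event and can also be ticked explicitly.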

The internal design of a BRE is optimized for its request-driven mode of operation. A BRE functions as a service to applications that perform the input and output (I/O) to messaging systems or people. By contrast, CEP systems maintain their own view of the world, independent of an invoking application. Because they directly handle I/O to and from messaging systems, they run faster and are more efficient at receiving and handling notifications. CEP systems can listen to multiple event channels (for example, multiple messaging systems) to get input from diverse sources. CEP systems may also handle a larger number of independent rules than a typical rule engine handles. The event data in notification messages determines which rules are executed.

BREs and CEP engines are not always used online as part of an immediate business transaction or operational decision. Both kinds of engines are sometimes used offline to process data at rest in a file or database.

Temporal Support

As we described in Chapter 7, time is a central focus in many CEP systems. A CEP system can look for situations such as “If the number of hits on this web page in a 5-minute period exceeds the daily average by more than 50 percent, bring up a second web server and notify the marketing department.” The 5-minute window is continuously sliding or jumping forward into the future. BREs can apply rules to data that is selected according to time windows, although this type of operation is generally cumbersome to specify at development time in a BRE. Moreover, BREs typically have more overhead (and thus higher latency) for handling incoming messages. This makes them less applicable for demanding ESP applications that must carry out computations on large sets of event messages in a few milliseconds. Some BREs demonstrate high throughput (they can scale up to many hundreds of rule requests per second and tens of millions of requests per day), but their latency is not as low as in purpose-built CEP engines for this type of application.
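The web-hit rule can be sketched with a sliding time window. We assume here that the “daily average” has already been expressed as an average number of hits per window and is supplied as `baseline_hits_per_window`. The class name and parameters are hypothetical, and the follow-up actions (starting a server, notifying marketing) are left to the caller.

```python
from collections import deque

class HitRateMonitor:
    """Counts hits in a sliding window and flags spikes above a baseline."""

    def __init__(self, window_seconds: float,
                 baseline_hits_per_window: float,
                 threshold: float = 1.5):
        self.window_seconds = window_seconds
        self.limit = threshold * baseline_hits_per_window  # 1.5 = 50 percent above
        self.hits = deque()                                 # timestamps inside the window

    def on_hit(self, ts: float) -> bool:
        """Record one hit; return True if the windowed count exceeds the limit."""
        self.hits.append(ts)
        # Slide the window forward: drop hits that are too old.
        while self.hits and self.hits[0] <= ts - self.window_seconds:
            self.hits.popleft()
        return len(self.hits) > self.limit

monitor = HitRateMonitor(window_seconds=300, baseline_hits_per_window=4)
results = [monitor.on_hit(t) for t in [0, 50, 100, 150, 200, 250, 290]]
print(results)
```

Because old timestamps are evicted as new ones arrive, each hit is processed in roughly constant time, which is the kind of incremental computation that keeps latency low.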

Individual vs. Set-Based Processing

BREs are designed to operate on a working set of data. The input data is passed in as part of a transaction, or is retrieved from a database (it is not stored in the BRE between requests). BREs are commonly used to apply known insights and data to transactional business processes with a modest amount of data that applies to each rule.

For example, about 90 percent of all credit card transactions in the United States are checked for fraud as they take place. A BRE validates the card number and amount against a database of account numbers to determine if the card is good or bad. The databases are typically updated nightly. BREs are also used in online, event-based “precision marketing” strategies where cross-selling and upselling opportunities are identified as the customer interaction is taking place. BREs are sometimes used immediately after a transaction has executed to check for regulatory compliance. Any of these applications may involve hundreds or even thousands of individual transactions per second, and the logic must execute within a second or two. However, each transaction is logically independent, so the work can be spread across dozens of copies of the BRE application on dozens of systems.

CEP engines are appropriate when large amounts of potentially related data must be quickly manipulated as a set. For example, CEP engines are used in context brokers for location-aware applications that monitor the movement of thousands or tens of thousands of people. The CEP system can track where each person is by tapping into the cell phone network. It ingests thousands of messages per second and correlates the event data so that it can notify a person if any member of his or her personal network is within one-quarter mile of his or her current location. This involves immediate pattern detection across a huge set of data—it cannot be accomplished by viewing data items individually.
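A simplified sketch of the location-aware scenario is shown below. Each location event is correlated against the retained positions of the person's network, which is why the events cannot be evaluated one at a time in isolation. The `ProximityBroker` class, the shape of the `friends` mapping, and the use of the haversine great-circle formula are our assumptions. A production context broker would also need spatial indexing to cope with tens of thousands of people.

```python
import math
from typing import Dict, List, Set, Tuple

QUARTER_MILE_M = 402.336          # one-quarter mile in meters
EARTH_RADIUS_M = 6_371_000.0

def distance_m(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points, haversine formula."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))

class ProximityBroker:
    """Keeps the latest position of every person and correlates new events."""

    def __init__(self, friends: Dict[str, Set[str]],
                 radius_m: float = QUARTER_MILE_M):
        self.friends = friends
        self.radius_m = radius_m
        self.positions: Dict[str, Tuple[float, float]] = {}

    def on_location(self, person: str, lat: float, lon: float) -> List[str]:
        """Handle one location event; return network members now within range."""
        self.positions[person] = (lat, lon)
        nearby = []
        for other in self.friends.get(person, ()):
            pos = self.positions.get(other)
            if pos is not None and distance_m((lat, lon), pos) <= self.radius_m:
                nearby.append(other)
        return sorted(nearby)

broker = ProximityBroker({"ann": {"bob", "cy"}, "bob": {"ann"}, "cy": {"ann"}})
broker.on_location("bob", 40.000, -75.0)
broker.on_location("cy", 40.050, -75.0)     # several kilometers away
print(broker.on_location("ann", 40.001, -75.0))
```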

The distinction between individual and set-based processing extends to offline applications as well. BREs are used in nightly batch systems that check each transaction from the previous day for regulatory compliance, fraud, opportunities for personalized, follow-up marketing activities, or other transaction analytics. CEP systems may also be used offline, but they still deal with large data sets simultaneously rather than individually. Offline CEP applications may replay the event log from a previous day to detect correlations and patterns that were overlooked earlier by the online CEP application. In this scenario, the CEP engine is still seemingly event-driven by the notifications as they arrive, although the event data may represent things that happened at a much earlier time.

Overlap

The technology that is used in typical BRMSs is inherently different from that used in most CEP engines, although they are both rule engines. Nothing prevents a vendor from creating a single BRE/CEP product that is good at both kinds of rule processing. However, it needs to implement both sets of algorithms and technical architectures.

A few anti-money-laundering, fraud-detection, pretrade-checking, network-monitoring, and other applications can be implemented with either kind of rule engine, so there is a minor overlap in their usage scenarios. In general, BRMSs and CEP systems are complementary notions. Together, they are the core technology needed to implement intelligent decision management (IDM) programs (sometimes called enterprise decision management [EDM] programs). IDM programs are, as their name implies, systematic ways to provide computer support for making better business decisions. The terms IDM and EDM originated in the rule engine market, but they can encompass BI (including BAM and CEP) because they all are ways to use computers in decision making.

Summary

The discipline of event processing is related to several other major IT-based initiatives found in many companies. Business events are inherent in BPM, although event objects and notifications are not always used when BPM tools deal with business events. CEP technology is used for process monitoring and for some sophisticated, new types of run-time BPM flow management. CEP has introduced a new decision management technology that complements other types of BI tools and rule engines.