Chapter 1. Converging Forces

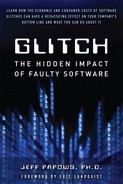

On July 15, 2009, 22-year-old New Hampshire resident Josh Muszynski swiped his debit card at a local gas station to purchase a pack of cigarettes. A few hours later, while checking his bank balance online, Muszynski discovered that he had been charged $23,148,855,308,184,500.00 for the cigarettes, as shown in Figure 1.1.1

Figure 1.1 Josh Muszynski’s bank statement

That’s twenty-three quadrillion, one hundred forty-eight trillion, eight hundred fifty-five billion, three hundred eight million, one hundred eighty-four thousand, five hundred dollars. Plus the $15 overdraft fee charged by the bank. This is about 2,007 times the U.S. national debt.

According to a statement from Visa, the issuer of Muszynski’s debit card, what happened to Muszynski, along with “fewer than 13,000 prepaid transactions,” resulted from a “temporary programming error at Visa Debit Processing Services ... which caused some transactions to be inaccurately posted to a small number of Visa prepaid accounts.” In a follow-up statement, customers were assured “that the problem has been fixed and that all falsely issued fees have been voided. Erroneous postings have been removed ... this incident had no financial impact on Visa prepaid cardholders.”

No financial impact. It’s hard to believe that this incident had no impact on Muszynski, considering that he had to go back and forth between the debit card issuer and the bank to address the situation.

Although the issue was settled after a few days, Muszynski was never formally notified of the cause of the error aside from the information he discovered by doing an Internet search. He saw that the $23 quadrillion cigarette charge and overdraft fee had been corrected only after he continuously checked the balance himself.

When I spoke with Muszynski about the situation,2 he said, “I was surprised that they even let it go through at first. Then, after it happened, I was even more surprised that I was the one who had to notify them about it.” Muszynski is still a customer of the bank, but now he checks his account balance every day. As another result of this incident, he has shifted his spending behavior to a more old-fashioned approach: “I now pay for things using cash. I used to rely on my debit card, but it’s just easier and safer for me to go to the bank and take cash out for what I need.”

Now that’s something every bank that’s making a killing in ATM transaction fees wants to hear—a customer would rather stand in line for face-to-face service than risk being on the receiving end of another glitch.

Glitches Are More Than Inconveniences

Barely a day goes by when we don’t hear about one or more computer errors that affect tens of thousands of people all over the world. In the context of this book, when we address glitches, it is from the perspective of software development and its impact on businesses and consumers. More specifically, it’s about the way that software is developed and managed and how inconsistent approaches and methodologies can lead to gaps in the software code that compromise the quality of the products or services ultimately delivered to the customer.

Unless these types of glitches affect us directly, we tend to shrug and write them off. For consumers who are grounded in an airport for several hours or who are reported as being late on their mortgage payments, these types of computer errors have a much longer and far more public impact thanks to the rise of social media tools such as Twitter and Facebook. A quick Google search revealed that the story of the $23 quadrillion pack of cigarettes appeared in more than 44,800 media outlets, including newspapers, blogs, radio, and television.

For the businesses servicing these customers, the cost of addressing these types of issues goes beyond brand damage control. This is because what can initially appear to be an anomaly can actually be a widespread error that is staggeringly expensive to fix. These unanticipated costs can include extensive software redevelopment or the need to bring down systems for hours, if not days, to uncover the underlying causes and to halt further mistakes.

Although charts and tables can try to estimate the cost of downtime to an organization, it’s difficult to quantify the impact because of many variables such as size of company, annual revenues, and compliance violation fines.

According to a research note written by IT industry analyst Bill Malik of Gartner, a Stamford, Connecticut-based technology analyst firm, “Any outage assessment based on raw, generic industry averages alone is misleading.” The most effective way to gauge the cost of downtime, according to Gartner, is to estimate the cost of an outage to your firm by calculating lost revenue, lost profit, and staff cost for an average hour—and for a worst-case hour—of downtime for each critical business process.3
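The per-process estimate described above can be sketched in a few lines of Python. This is a minimal illustration, not Gartner's own model: the class names, the function name, and every dollar figure are hypothetical, and scaling losses linearly with outage length is a simplifying assumption. The three figures are reported separately rather than summed, since lost profit is typically part of lost revenue.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class HourlyImpact:
    """Estimated losses for one hour of downtime, in dollars."""
    lost_revenue: float
    lost_profit: float
    staff_cost: float

    def scaled(self, hours: float) -> "HourlyImpact":
        """Scale each figure linearly by outage length (a simplifying assumption)."""
        return HourlyImpact(
            self.lost_revenue * hours,
            self.lost_profit * hours,
            self.staff_cost * hours,
        )


@dataclass(frozen=True)
class BusinessProcess:
    """One critical business process with average and worst-case hourly impact."""
    name: str
    average_hour: HourlyImpact
    worst_case_hour: HourlyImpact


def outage_estimate(
    processes: Iterable[BusinessProcess], hours: float
) -> Dict[str, Tuple[HourlyImpact, HourlyImpact]]:
    """Map each process name to its (average-case, worst-case) impact for the outage."""
    return {
        p.name: (p.average_hour.scaled(hours), p.worst_case_hour.scaled(hours))
        for p in processes
    }


# Hypothetical figures for illustration only.
example_processes = [
    BusinessProcess(
        "online ordering",
        average_hour=HourlyImpact(12_000.0, 3_000.0, 800.0),
        worst_case_hour=HourlyImpact(40_000.0, 10_000.0, 800.0),
    ),
]

for name, (average, worst) in outage_estimate(example_processes, hours=3).items():
    print(name, "average:", average, "worst case:", worst)
```

Keeping the average and worst-case hours side by side makes it harder to fall back on a single generic industry average, which is the practice the research note warns against.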

What’s Behind the Glitches?

Given how much technology has evolved, we have to ask why these software glitches continue to happen. Why are we seeing more glitches instead of fewer? And now that technology is pervasive in our business and personal lives, how do we make sure that we’re not the cause or the victim of one of these hidden threats that may take days, weeks, or months to unravel?

Flight delays, banking errors, and inaccurate record keeping are just some of the millions of glitches that affect consumers and businesses all over the world. However, when you consider the amount of technology that’s in place at companies of every size all over the world, it’s easy to see how these glitches make their way into our computer systems. We’ve already seen the effects of these types of glitches. What will be different in the future is that they will be more widespread and affect more people due to our increased connectedness and reliance on technology. The result will be more costly and time-consuming outages because the glitches will be harder to detect among the millions of lines of software code that allow us to connect to the office and with our friends all over the globe.

How did we get to this point, and why am I convinced that these issues and many like them will, in fact, happen? The simple answer is that many IT professionals have unwittingly created an industry so hyper-focused on the next big thing that we have taken some shortcuts in the creation of solid foundations—otherwise known in the IT world as infrastructures—that will firmly support us in the future. In these situations, the actions that lead to these glitches are not deliberate. What typically happens is that shortcuts are taken during the software development process to accelerate product release cycles with the goal of boosting revenues. While that’s the simple explanation, the issue obviously is more complex.

Three of the most pressing drivers are

• Loss of intellectual knowledge

• Market consolidation

• The ubiquity of technology

Although at first these may appear to be disparate forces, they actually are intertwined.

Loss of Intellectual Knowledge

First, let’s cover the basics of the intellectual knowledge issue. As computers became part of the business landscape, the mainframe was the de facto standard for financial institutions, government agencies, and insurance companies. These entities continue to rely on these fast and stable workhorses to keep their operations running. Software developers programmed mainframes using a language called COBOL (common business-oriented language).

Half a century later, COBOL is still going strong, because mainframes are still the backbone of 75 percent of businesses and government agencies according to industry analysts at Datamonitor.4 Of that 75 percent, 90 percent of global financial transactions are processed in COBOL. Yet as different and more modern languages were introduced to reflect the evolution of technology, there was less emphasis and, frankly, less incentive for the next generation to learn COBOL.

As the lines of COBOL code have continued to grow over the years, the number of skilled programmers has steadily declined. It’s difficult to quantify the number of active COBOL programmers currently employed. In 2004, the last time Gartner tried to count COBOL programmers, the consultancy estimated that there were approximately two million worldwide and the number was declining at 5 percent annually.5

The greatest impact of COBOL programmers leaving the workforce will be felt from 2015 through 2029. This makes sense when you realize that the oldest of the baby boomer generation are those born between 1946 and 1950 and that this generation will approach the traditional retirement age of 65 between the years 2011 and 2015.6

Whether these workers are preparing for retirement or belong to a generation that doesn’t stay at one company for an entire career, last-minute scrambles to capture a career’s worth of programming expertise, and how it has been applied to company-specific applications, are challenging.

However, efforts are under way to address this issue. They include IBM’s Academic Initiative and the Academic Connection (ACTION) program from Micro Focus International plc, a software company that helps modernize COBOL applications. Both programs are actively involved in teaching COBOL skills at colleges and universities worldwide. However, very little was done to document the knowledge of these skilled workers in the real world while they were on the job.

The COBOL Skills Debate

It’s debatable whether a COBOL skills shortage actually exists. To some degree, the impact of the dearth of skilled workers will depend on how many of a company’s applications rely on COBOL.

For companies that will be affected, industry analysts at Gartner published a report in 2010 titled “Ensuring You Have Mainframe Skills Through 2020.”7 In the report, Gartner analysts advise companies that depend on mainframes how to prepare for the impending skills shortage. The report says companies should work closely with human resources professionals to put a comprehensive plan in place that will help guide them through the next decade, when the mainframe skills shortage will be more prominent.

Mike Chuba, a vice president at Gartner and author of the report, wrote, “Many organizations have specialists with years of deep knowledge—a formal plan to capture and pass on that knowledge via cross-training or mentoring of existing personnel is critical.”

From a day-to-day perspective, the inability to sufficiently support the mainframe could have a significant impact on the economy. Micro Focus International plc has found that COBOL applications are involved in transporting up to 72,000 shipping containers, caring for 60 million patients, processing 80 percent of point-of-sale transactions, and connecting 500 million mobile phone users.8

According to Kim Kazmaier, a senior IT architect with over 30 years of industry experience, this skills challenge is the result of a combination of factors: “The demographics have changed. You are unlikely to find many people who remain in one company, industry, or technology focus for long periods of time, unlike previous generations of IT professionals. Also, the sheer volume and complexity of technology make it virtually impossible for any individual to master the information about all the technology that’s in use within a large IT organization. It used to be that an IT professional would literally study manuals cover to cover, but those days have been replaced by just-in-time learning.”

“To be fair, the information that once filled a bookcase would now fill entire rooms. We simply don’t have the luxury to master that much information individually, so we rely on information mash-ups provided by collaboration, search engines, metadata repositories, and often overstretched subject-matter experts.”

How to Prepare for Any Pending Skills Drought

This issue adds up to fewer skilled IT workers to handle the increasing issues of developing and managing software, which results in the proliferation of glitches.

How can organizations address this issue in a logical and realistic way? The most practical approach—and one that can be applied to nearly any challenge of this nature—is to first fully understand the business issue, and then figure out how your people can help address it through technology.

The first step is to conduct an IT audit by taking a comprehensive inventory of all the technology in the infrastructure. Along with tracking all the COBOL-specific applications, you should understand which non-COBOL applications intersect with COBOL applications. Given that we are more connected every day, applications are no longer relegated to specific departments or companies. Because COBOL is behind a significant number of business transactions, there is a strong likelihood that the non-COBOL applications being created today will also pass through a mainframe. The inventory process is actually not as arduous as it may initially seem, given the amount of available technology resources that can accelerate this step.

The next step is to get an update on the percentage of COBOL expertise in your company versus other technologies, as well as tenure and retirement dates. Based on an IT audit and staff evaluation, you can get a clear picture of just how much of a risk the COBOL situation is to your organization.
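As a rough illustration of these two steps, the sketch below models an application inventory and a staff roster in Python. Everything here is hypothetical: the record fields, the function names, and the sample data are assumptions made for the example, and a real audit would draw on whatever inventory tooling the organization already has.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Set, Tuple


@dataclass
class Application:
    name: str
    language: str
    depends_on: List[str] = field(default_factory=list)  # names of applications it calls


@dataclass
class Developer:
    name: str
    skills: Set[str]
    expected_retirement: date


def apps_touching_cobol(apps: List[Application]) -> List[str]:
    """Names of non-COBOL applications that depend, directly or indirectly, on a COBOL one."""
    by_name = {a.name: a for a in apps}
    reaches = {a.name for a in apps if a.language == "COBOL"}
    changed = True
    while changed:  # repeat until no new application is pulled into the set
        changed = False
        for a in apps:
            if a.name not in reaches and any(d in reaches for d in a.depends_on):
                reaches.add(a.name)
                changed = True
    return sorted(n for n in reaches if by_name[n].language != "COBOL")


def cobol_staff_summary(staff: List[Developer], horizon: date) -> Tuple[float, List[str]]:
    """Share of staff with COBOL skills, and which of them retire on or before the horizon."""
    cobol = [d for d in staff if "COBOL" in d.skills]
    share = len(cobol) / len(staff) if staff else 0.0
    retiring = sorted(d.name for d in cobol if d.expected_retirement <= horizon)
    return share, retiring


# Hypothetical inventory and roster for illustration only.
inventory = [
    Application("ledger", "COBOL"),
    Application("billing-api", "Java", depends_on=["ledger"]),
    Application("web-portal", "JavaScript", depends_on=["billing-api"]),
    Application("wiki", "PHP"),
]
roster = [
    Developer("Avery", {"COBOL", "JCL"}, date(2014, 6, 30)),
    Developer("Blake", {"Java"}, date(2040, 1, 1)),
]

print(apps_touching_cobol(inventory))
print(cobol_staff_summary(roster, horizon=date(2015, 12, 31)))
```

The indirect dependencies matter most here: an application that never calls the mainframe itself can still be exposed if something it relies on does.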

If you determine that your company is at risk due to a lack of COBOL expertise, consider the following recommendations:

• Be realistic about knowledge transfer

• Cross-train staff

• Automate as much as possible

Be Realistic About Knowledge Transfer

A logical course of action would be to suggest knowledge transfer, but that won’t completely resolve the situation because of two significant issues. The first is that the applications that were put in place decades ago have been consistently tweaked, updated, and enhanced through the years. It would be impossible to review every change made along the way to pick up exactly where a COBOL expert with 30 years of experience left off. This won’t be a showstopper, but it can result in longer-than-expected cycles to identify the source of a glitch.

This leads to the second issue—experience. It’s simply not possible to do a Vulcan mind meld with the retiring workforce and expect a new team of developers to be as conversant in COBOL as people who have dedicated their careers to it. Although knowledge transfer is important, companies should be realistic in their expectations.

Cross-Train Staff

There’s no reason that the IT job of the future couldn’t or shouldn’t be a hybrid of various kinds of technology expertise. For example, you could offer positions that mix different skill sets such as Flash programming and COBOL and offer additional salary and benefits to the cross-trained developers. This would result in greater expertise across the company and would help you avoid creating knowledge silos.

Automate Where Possible

If your company is facing a skills shortage, consider using technology to automate as many tasks as possible. You can never fully replace intellectual knowledge, but this step can help alleviate the time-consuming and less-strategic functions that still need to happen on a regular basis.

Computer Science Is Cool Again

Whether or not you believe that a COBOL skills drought is imminent, you can’t deny that there is a demand for IT skills across the board. The U.S. Bureau of Labor Statistics (BLS) estimates that by 2018, the information sector will create more than one million jobs. The BLS includes the following areas in this category: data processing, web and application hosting, streaming services, Internet publishing, broadcasting, and software publishing.9 Although this represents great opportunities for the next generation, we will face a supply-and-demand issue when it comes to building and maintaining the technology that runs our businesses.

This is due to the fact that the number of students studying computer science and related disciplines at the college and university level is just now on the upswing after steadily declining from 2000 to 2007, largely as a result of the dotcom collapse. In March 2009, The New York Times10 reported on the Computing Research Association Taulbee Survey. It found that as of 2008, enrollment in computer science programs increased for the first time in six years, by 6.2 percent.11 But the gap will still exist from nearly a decade of students who opted out of studying the fundamentals associated with software design, development, and programming. Adding to this is the evidence that students studying computer science today are more interested in working with “cooler” front-end application technologies—the more visible and lucrative aspects in the industry. They’re not as interested in the seemingly less-exciting opportunities associated with the mainframe. The Taulbee Survey found that part of the resurgence in studying computer science is due to the excitement surrounding social media and mobile technologies.

Market Consolidation

The next factor that is creating this perfect storm is mergers and consolidation. Although market consolidation is part of the natural ebb and flow of any industry, a shift has occurred in the business model of mergers and consolidation. It is driven by larger deals and the need to integrate more technology.

From an IT perspective, when any two companies merge, the integration process is often much lengthier and more time-consuming than originally anticipated. This holds true regardless of the merger’s size. Aside from the integration of teams and best practices, there is the very real and potentially very costly process of making the two different IT infrastructures work together.

One of the biggest “hurry up” components of mergers is the immediacy of combining the back-office systems of the new collective entity. At a minimum, similar if not duplicate applications will be strewn throughout the infrastructure of both companies. With all these variations on the same type of application, such as customer accounts, sales databases, and human resources files, there will undoubtedly be inconsistencies in how the files were created. These inconsistencies become very apparent during the integration process. For example, John Q. Customer may be listed as both J.Q. Customer and Mr. John Customer and might have duplicate entries associated with different addresses and/or accounts, yet all of those accounts represent one customer.
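To make the John Q. Customer example concrete, here is a minimal Python sketch of how an integration team might flag likely duplicates. The matching rule (surname plus first initial, with common titles removed) and all names in the code are assumptions chosen for illustration. It is deliberately coarse: it would also group a Jane Customer with John, so its output is a list of candidates for human review, not a list of confirmed duplicates.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Honorifics to ignore when comparing names (an illustrative, incomplete list).
TITLES = {"mr", "mrs", "ms", "dr"}


def match_key(full_name: str) -> Tuple[str, str]:
    """Reduce a name to (surname, first initial), ignoring case, periods, and titles."""
    tokens = [t for t in re.split(r"[\s.]+", full_name.lower()) if t]
    tokens = [t for t in tokens if t not in TITLES]
    if not tokens:
        raise ValueError(f"no usable name in {full_name!r}")
    return tokens[-1], tokens[0][0]


def duplicate_candidates(
    records: Iterable[Tuple[int, str]]
) -> Dict[Tuple[str, str], List[int]]:
    """Group record IDs whose names share a match key; keep groups with more than one ID."""
    groups: Dict[Tuple[str, str], List[int]] = defaultdict(list)
    for record_id, name in records:
        groups[match_key(name)].append(record_id)
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


# Hypothetical customer records from the two merged systems.
customers = [
    (1, "John Q. Customer"),
    (2, "J.Q. Customer"),
    (3, "Mr. John Customer"),
    (4, "Mary Smith"),
]
print(duplicate_candidates(customers))
```

In practice a team would compare addresses and account details as well before merging any records, since a name alone is weak evidence that two entries refer to one person.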

Along with trying to streamline the number of applications is the challenge of integrating the various technologies that may or may not adhere to industry standards. Getting all the parts of the orchestra to play the right notes at the right time presents a significant challenge for even the most talented IT professionals.

From a more mainstream point of view, spotty efforts to merge infrastructures can have a very real impact on consumers. For example, according to the Boston, Massachusetts television station and NBC affiliate WHDH, as well as the local Boston CW news affiliate, when tuxedo retailers Mr. Tux and Men’s Wearhouse merged in 2007, a computer glitch didn’t properly track inventory and customer orders.12 This resulted in wedding parties and others on their way to formal events without their preordered tuxedos. Since you can’t change the date of such events, many customers had to incur additional expenses by going to another vendor to ensure they were properly attired for their big day.

Before you start chuckling at the thought of groomsmen wearing tuxedo T-shirts instead of formal wear, keep in mind that the U.S. wedding industry represents $86 billion annually13 and that men’s formal wear represents $250 million annually.14 Talk about a captive audience—you can well imagine the word-of-mouth influence of an entire wedding reception.

Hallmarks of Successful Mergers

Every company that’s been through a merger can share its own tales of what went right, what went wrong, and what never to do again. Yet I believe that successful mergers have consistent hallmarks:

• A cross-functional team: This group is dedicated to the success of the merger. It needs to represent all the different functions of the newly formed organization and should work on the integration full time.

• A realistic road map: When millions of dollars are at stake, especially if one or both of the companies are publicly traded, there may be a tendency to set aggressive deadlines to accelerate the integration. Don’t sacrifice the quality of the efforts and the customer experience for short-term financial gains. For example, if your senior-level IT staff tells you the deadlines are unrealistic, listen carefully and follow their lead.

• Humility: Don’t assume that the acquiring organization has the better infrastructure and staff. Part of the responsibility of the integration team is to take a closer look at all the resources that are now available to create the strongest company possible.

• Technology overlap: Whether a successful integration takes three months or three years, do not shut off any systems until the merger is complete from an IT perspective. You may need to spend more resources to temporarily keep simultaneous systems running, but this is well worth the investment to avoid any disruptions in service to customers.

• Anticipate an extensive integration process: The biggest mistake an acquiring company can make is to assume that the integration will be complete in 90 days. Although it may be complete from a legal and technical standpoint, don’t overlook the commitment required from a cultural perspective or you may risk degrading the intellectual value of the acquisition.

The Ubiquity of Technology

The third force that is contributing to the impending IT storm is the sheer volume and ubiquity of technology that exists among both businesses and consumers. It’s difficult to overstate the scale at which the IT industry has transformed productivity, stimulated economic growth, and forever changed how people work and live.

It’s hard to overlook the contributions of the information technology sector to the gross domestic product (GDP). Even with the natural expansions and contractions in the economy, the IT sector continues to be a growth engine. In 2007, U.S. businesses spent $264.2 billion on information and communication technology (ICT) equipment and computer software, representing a 4.4 percent increase over the year 2006.15

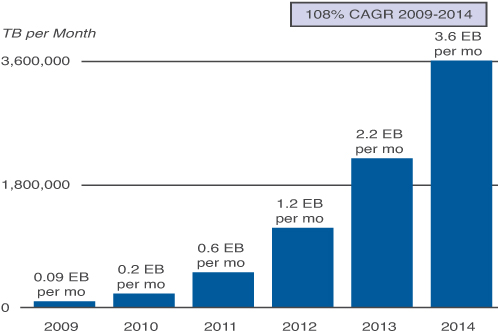

The next decade shows no signs of stopping. A February 2010 Internet and mobile growth forecast jointly developed by IT networking vendor Cisco and independent analysts said that global Internet traffic is expected to reach 56 exabytes (EB) per month. The various forms of video, including TV, video on demand (VOD), Internet video, and peer-to-peer (P2P), will exceed 90 percent of global consumer traffic. This will all happen by the year 2013. The same report forecast that the mobile industry will realize a compound annual growth rate of 108 percent through the year 2014. Figure 1.2 shows Cisco’s forecast for mobile data growth in terabytes (TB) and exabytes.16

Figure 1.2 Cisco VNI mobile growth

Source: Cisco VNI Mobile, 2010
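To get a feel for what a 108 percent compound annual growth rate means, the short Python sketch below compounds it over time. The function name is hypothetical, and treating 2009 through 2014 as five compounding periods is an assumption based on the date range in the forecast's title.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Overall multiple after compounding annual_rate for the given number of years.

    annual_rate is a fraction, so 1.08 means 108 percent growth per year.
    """
    return (1.0 + annual_rate) ** years


# Assumed: a 2009 baseline compounded through 2014, i.e. five periods.
multiple = growth_multiple(1.08, 5)
print(f"Traffic multiple after five years: {multiple:.1f}x")
```

Growing by 108 percent means slightly more than doubling every year, which compounds to roughly a 39-fold increase over five years under these assumptions.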

I often equate technology with fire: It can warm your hands on a cold night, or it can burn down your house. The innovations of the past few decades illustrate this point. They’ve resulted in a massive amount of software and devices that, if not properly developed and managed, can bring down a network and shut out those who rely on it.

The irony is that the technology industry has created and perpetuated the ubiquity of its products, which is leading to this potential perfect storm of sorts.

Does this mean that a technology Armageddon is under way? Well, that’s a bit dramatic, but it’s not too far from what could potentially happen if we continue to allow these technology glitches to fester in our infrastructures.

Endnotes

1. Bank of America Statement. Josh Muszynski.

2. Interview with Josh Muszynski by Kathleen Keating and Barbara McGovern. December 2009.

3. Gartner. “Q&A: How Much Does an Hour of Downtime Cost?” Bill Malik. September 29, 2009.

4. Datamonitor. “COBOL: Continuing to Drive Value in the 21st Century.” Alan Roger, et al. November 2008.

5. Computerworld. “Confessions of a COBOL Programmer.” Tam Harbert. February 20, 2008. http://www.computerworld.com/s/article/print/9062478/Confessions_of_a_Cobol_programmer?taxonomyName=Web+Services&taxonomyId=61.

6. Forrester Research. “Academic Programs Are Beginning to Offset Anticipated Mainframe Talent Shortages.” Phil Murphy with Alex Cullen and Tim DeGennaro. March 19, 2008.

7. Gartner. “Ensuring You Have Mainframe Skills Through 2020.” Mike Chuba. April 5, 2010.

8. Micro Focus International plc. http://www.itworldcanada.com/news/action-program-makes-cobol-cool-again/140364.

9. Bureau of Labor Statistics. Occupational Outlook Handbook, 2010–2011 Edition. Overview of the 2008–2018 Projections. December 17, 2009. http://www.bls.gov/oco/oco2003.htm#industry.

10. The New York Times. “Computer Science Programs Make a Comeback in Enrollment.” John Markoff. March 16, 2009. http://www.nytimes.com/2009/03/17/science/17comp.html.

11. Computing Research Association. Taulbee Survey. http://www.cra.org/resources/taulbee/.

12. 7News WHDH. “Company Merger and Computer Glitch Delays Tuxedos.” October 13, 2007. http://www1.whdh.com/news/articles/local/BO64447/.

13. Association for Wedding Professionals International, Sacramento, CA. Richard Markel.

14. International Formalwear Association, Galesburg, IL. Ken Pendley, President.

15. U.S. Census Bureau. Press release. Business Spending on Information and Communication Technology Infrastructure Reaches $264 Billion in 2007. February 26, 2009. http://www.census.gov/Press-Release/www/releases/archives/economic_surveys/013382.html.

16. Cisco Visual Networking Index: Global Mobile Data Traffic Forecast Update 2009–2014. http://www.cisco.com/en/US/solutions/collateral/ns341/ns525/ns537/ns705/ns827/white_paper_c11-520862.html.