Chapter 3. The Test Engineer

Testability and long-term viability of test automation infrastructure are the purview of the Software Engineer in Test (SET). The Test Engineer (TE) plays a related but different role where the focus is on user impact and risk to the overall mission of the software product. Like most technical roles at Google, the TE role involves some coding, but it is by far the broadest of all the engineering roles. The TE contributes to the product, but many of the tasks a TE takes on require no coding.1

A User-Facing Test Role

In the prior chapter, we introduced the TE as a “user-developer” and this is not a concept to take lightly. The idea that all engineers on a product team fit the mold of a developer is an important part of keeping all stakeholders on an equal footing. At companies like Google where honoring “the writing of code” is such an important part of our culture, TEs need to be involved as engineers to remain first-class citizens. The Google TE is a mix of technical skills that developers respect and a user-facing focus that keeps developers in check. Talk about a split personality!

The TE job description is the hardest to nail down as one size definitely does not fit all. TEs are meant to be overseers of all things quality as the various build targets come together and ultimately comprise the entire product. As such, most TEs get involved in some of this lower-level work where another set of eyes and more engineering expertise is needed. It’s a matter of risk: TEs find and contribute to the riskiest areas of the software in whatever manner makes the most sense for that particular product. If SET work is the most valuable, then that’s what a TE does; if code reviews are the most valuable, then so be it. If the test infrastructure is lacking, then it gets some TE attention.

These same TEs can then lead exploratory testing sessions at some other point in a project or manage a dogfood or beta testing effort. Sometimes this is time-driven as early phase work means far more SET-oriented tasks are needed, and later in the cycle, TE-oriented work is prevalent. Other cases are the personal choice of the TEs involved and there are a number of cases where engineers convert from one of these roles to another. There are no absolutes. What we describe in the following section is essentially the ideal case.

The Life of a TE

The TE is a newer role at Google than either Software Engineers (SWEs) or SETs. As such, it is a role still in the process of being defined. The current generation of Google TEs is blazing a trail that will guide the next generation of new hires for this role. Here we present the latest emerging TE processes at Google.

Not all products require the attention of a TE. Experimental efforts and early-stage products without a well-defined mission or user story are certainly projects that won’t get a lot of (or any) TE attention. If the product stands a good chance of being cancelled (in the sense that as a proof of concept, it fails to pass muster) or has yet to engage users or have a well-defined set of features, testing is largely something that should be done by the people writing the code.

Even if it is clear that a product is going to get shipped, TEs often have little testing to do early in the development cycle when features are still in flux and the final feature list and scope are undetermined. Overinvesting in test engineering too early, especially if SETs are already deeply engaged, can mean wasted effort. Testing collateral that gets developed too early risks being cast aside or, worse, maintained without adding value. Likewise, early test planning requires fewer TEs than later-cycle exploratory testing when the product is close to final form and the hunt for missed bugs has a greater urgency.

The trick in staffing a project with TEs has to do with risk and return on investment. Risk to the customer and to the enterprise means more testing effort and requires more TEs, but that effort needs to be in proportion with the potential return. We need the right number of TEs and we need them to engage at the right time and with the right impact.

After they are engaged, TEs do not have to start from scratch. There is a great deal of test engineering and quality-oriented work performed by SWEs and SETs, which becomes the starting point for additional TE work. The initial engagement of the TE is to decide things such as:

• Where are the weak points in the software?

• What are the security, privacy, performance, reliability, usability, compatibility, globalization, or other concerns?

• Do all the primary user scenarios work as expected? Do they work for all international audiences?

• Does the product interoperate with other products (hardware and software)?

• In the event of a problem, how good are the diagnostics?

Of course these are only a subset. All of this combines to speak to the risk profile of releasing the software in question. TEs don’t necessarily do all of this work, but they ensure that it gets done and they leverage the work of others in assessing where additional work is required. Ultimately, TEs are paid to protect users and the business from bad design, confusing UX, functional bugs, security and privacy issues, and so on. At Google, TEs are the only people on a team whose full-time job is to look at the product or service holistically for weak points. As such, the life of a TE is much less prescriptive and formalized than that of an SET. TEs are asked to help on projects in all stages of readiness: everything from the idea stage to version 8 or even watching over a deprecated or “mothballed” project. Often, a single TE even spans multiple projects, particularly those TEs with specialty skill sets such as security, privacy, or internationalization.

Clearly, the work of a TE varies greatly depending on the project. Some TEs spend much of their time programming, but with more of a focus on medium and large tests (such as end-to-end user scenarios) rather than small tests. Other TEs take existing code and designs to determine failure modes and look for errors that can cause those failures. In such a role, a TE might modify code but not create it from scratch. TEs must be more systematic and thorough in their test planning and completeness with a focus on the actual usage and systemwide experience. TEs excel at dealing with ambiguity in requirements and at reasoning and communicating about fuzzy problems.

Successful TEs accomplish all this while navigating the sensitivities and, sometimes, strong personalities of the development and product team members. When weak points are found, TEs happily break the software and drive to get these issues resolved with the SWEs, PMs, and SETs. TEs are generally some of the best-known people on a team because of the breadth of interactions their jobs require.

Such a job description can seem like a frightening prospect given the mix of technical skill, leadership, and deep product understanding required. Indeed, without proper guidance, it is a role in which many would expect to fail. However, at Google, a strong community of TEs has emerged to counter this. Of all job functions, the TE role is perhaps the best peer-supported role in the company. The insight and leadership required to perform the TE job successfully means that many of the top test managers in the company come from the TE ranks.

There is fluidity to the work of a Google TE that belies any prescriptive process for engagement. TEs can enter a project at any point and must assess the state of the project, code, design, and users quickly and decide what to focus on first. If the project is just getting started, test planning is often the first order of business. Sometimes TEs are pulled in late in the cycle to evaluate whether a project is ready to ship or if there are any major issues before an early “beta” goes out. If they are brought into a newly acquired application or one in which they have little prior experience, they often start doing some exploratory testing with little or no planning. Sometimes projects haven’t been released for quite a while and just need some touchups, security fixes, or UI updates, which calls for yet another approach.

One size rarely fits all for TEs at Google. We often describe a TE’s work as “starting in the middle” in that a TE has to be flexible and integrate quickly into a product team’s culture and current state. If it’s too late for a test plan, don’t build one. If a project needs tests more than anything else, build just enough of a plan to guide that activity. Starting at “the beginning” according to some testing dogma is simply not practical.

Following is the general set of practices we prescribe for TEs:

• Test planning and risk analysis

• Review specs, designs, code, and existing tests

• Exploratory testing

• User scenarios

• Executing test cases

• Crowd sourcing

• Usage metrics

• User feedback

Of course, TEs with a strong personality and excellent communication skills are the ones who do all these things to maximum impact.

Test Planning

Developers have a key advantage over testers in that the artifact they work on is one that everyone cares about. Developers deal with code and because that code becomes the application that users covet and that makes profit for the company, it is by definition the most important document created during project execution.

Testers, on the other hand, deal with documents and artifacts of a far more temporal nature. In the early phases of a project, testers write test plans; later, they create and execute test cases and create bug reports. Still later, they write coverage reports and collect data about user satisfaction and software quality. After the software is released and is successful (or not), few people ask about testing artifacts. If the software is well loved, people take the testing for granted. If the software is poor, people might question the testing, but it is doubtful that anyone would want to actually see it.

Testers cannot afford to be too egotistical about test documentation. In the throes of the coding, reviewing, building, testing, rinsing, and repeating cycle that is software development, there is little time to sit around and admire a test plan. Poor test cases rarely achieve enough attention to be improved; they simply get thrown out in favor of those that are better. The attention is focused on the growing codebase and as the only artifact that actually matters, this is as it should be.

As test documentation goes, test plans have the briefest actual lifespan of any test artifact.2 Early in a project, there is a push to write a test plan (see Appendix A, “Chrome OS Test Plan,” for an early Chrome OS test plan). Indeed, there is often an insistence among project managers that a test plan must exist and that writing it is a milestone of some importance. But, once such a plan is written, it is often hard to get any of those same managers to take reviewing and updating it seriously. The test plan becomes a beloved stuffed animal in the hands of a distracted child. We want it to be there at all times. We drag it around from place to place without ever giving it any real attention. We only scream when it gets taken away.

Test plans are the first testing artifact created and the first one to die of neglect. At some early point in a project, the test plan represents the actual software as it is intended to be written, but unless that test plan is tended constantly, it soon becomes out of date as new code is added, features veer from their preplanned vision, and designs that looked good on paper are reevaluated as they are implemented and meet feedback from users. Maintaining a test plan through all these planned and unplanned changes is a lot of work and only worthwhile if the test plan is regularly consulted by a large percentage of the project’s stakeholders.

This latter point is the real killer of the idea of test planning: How much does a test plan actually drive testing activity throughout the entire lifecycle of a product? Do testers continually consult it as they divvy up the features among them so as to divide and conquer the app? Do developers insist the test plan be updated as they add and modify functionality? Do development managers keep a copy open on their desktop as they manage their to-do list? How often does a test manager refer to the contents of a test plan in status and progress meetings? If the test plan is actually important, all of these things would be happening every day.

Ideally, the test plan should play such a central role during project execution. Ideally, it should be a document that lives as the software lives, getting updates as the codebase gets updates and representing the product as it currently exists, not as it existed at the start of a project. Ideally, it should be useful for getting new engineers up to speed as they join a project already in progress.

That’s the ideal situation. It’s also a situation few testers have actually achieved here at Google or anywhere else for that matter.

Here are some features we want in a test plan:

• It is always up to date.

• It describes the intent of the software and why it will be loved by its users.

• It contains a snapshot of how the software is structured and the names of the various components and features.

• It describes what the software should do and summarizes how it will do it.

From a purely testing point of view, we have to worry about the test plan being relevant while not making its care and feeding such a burden that it becomes more work than it is worth:

• It cannot take long to create and must be readily modifiable.

• It should describe what must be tested.

• It should be useful during testing to help determine progress and coverage gaps.

At Google, the history of test planning is much the same as at other companies we’ve experienced. Test planning was a process determined by the people doing it and executed according to local (meaning the individual team) custom. Some teams wrote test plans in Google Docs (text documents and spreadsheets), shared with their engineering team but not in a central repository. Other teams linked their test plans on their product’s home page. Still others added them to the internal Google Sites pages for their projects or linked to them from the engineering design documents and internal wikis. A few teams even used Microsoft Word documents, sent around in emails to the team in a proper old-school way. Some teams had no test plans at all, just test cases whose sum total, we must suppose, represented the plan.

The review path for these plans was opaque and it was hard to determine the authors and reviewers. Far too many of the test plans had a time and date stamp that made it all too clear that they had been written and long forgotten like the sell-by date on that old jar of jam in the back of the refrigerator. It must have been important to someone at some time, but that time has passed.

There was a proposal floated around Google to create a central repository and template for all product test plans. This was an interesting idea that has been tried elsewhere, but one clearly contrary to Google’s inherently distributed and self-governed nature where “states’ rights” was the norm and big government a concept that brought derision.

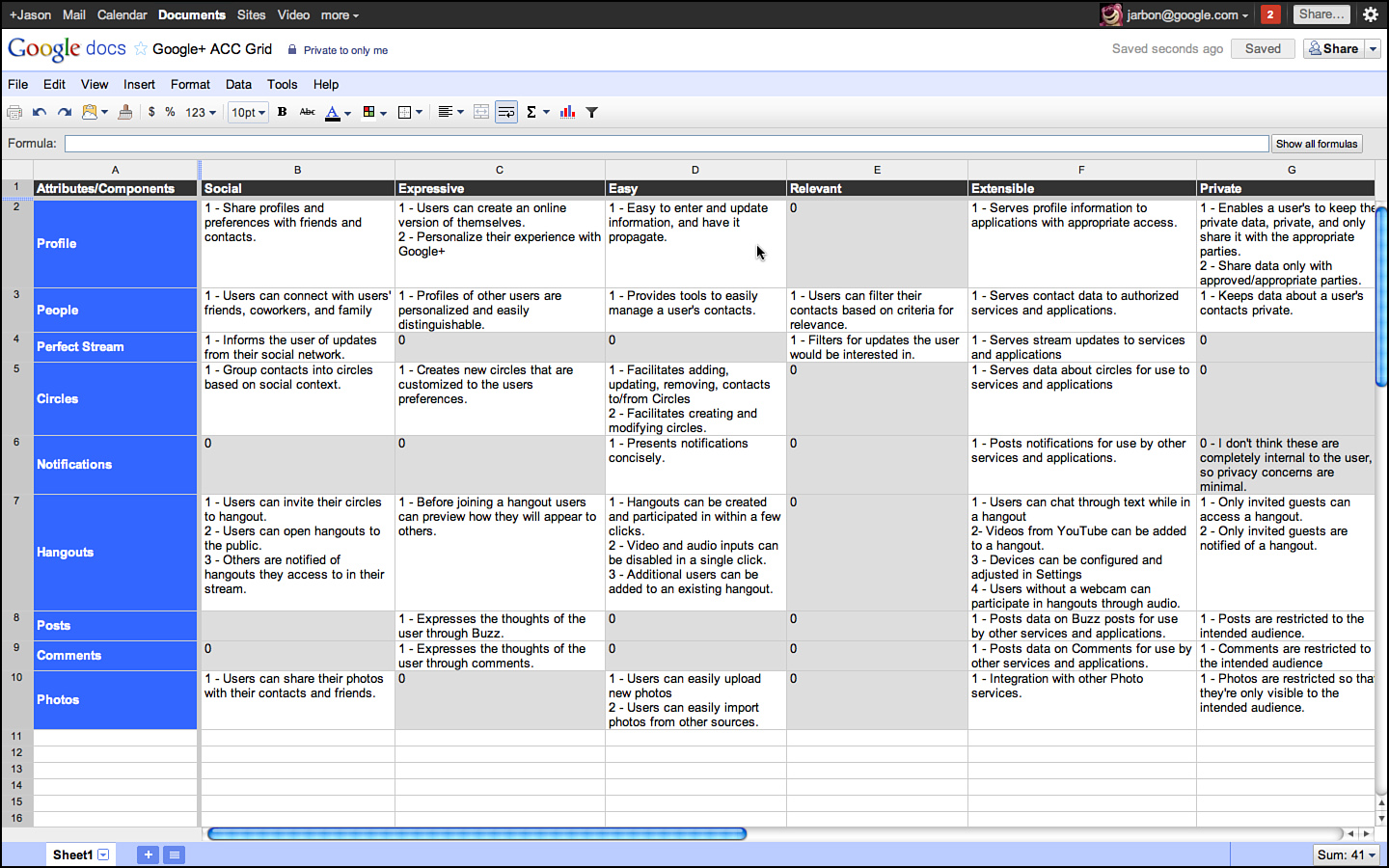

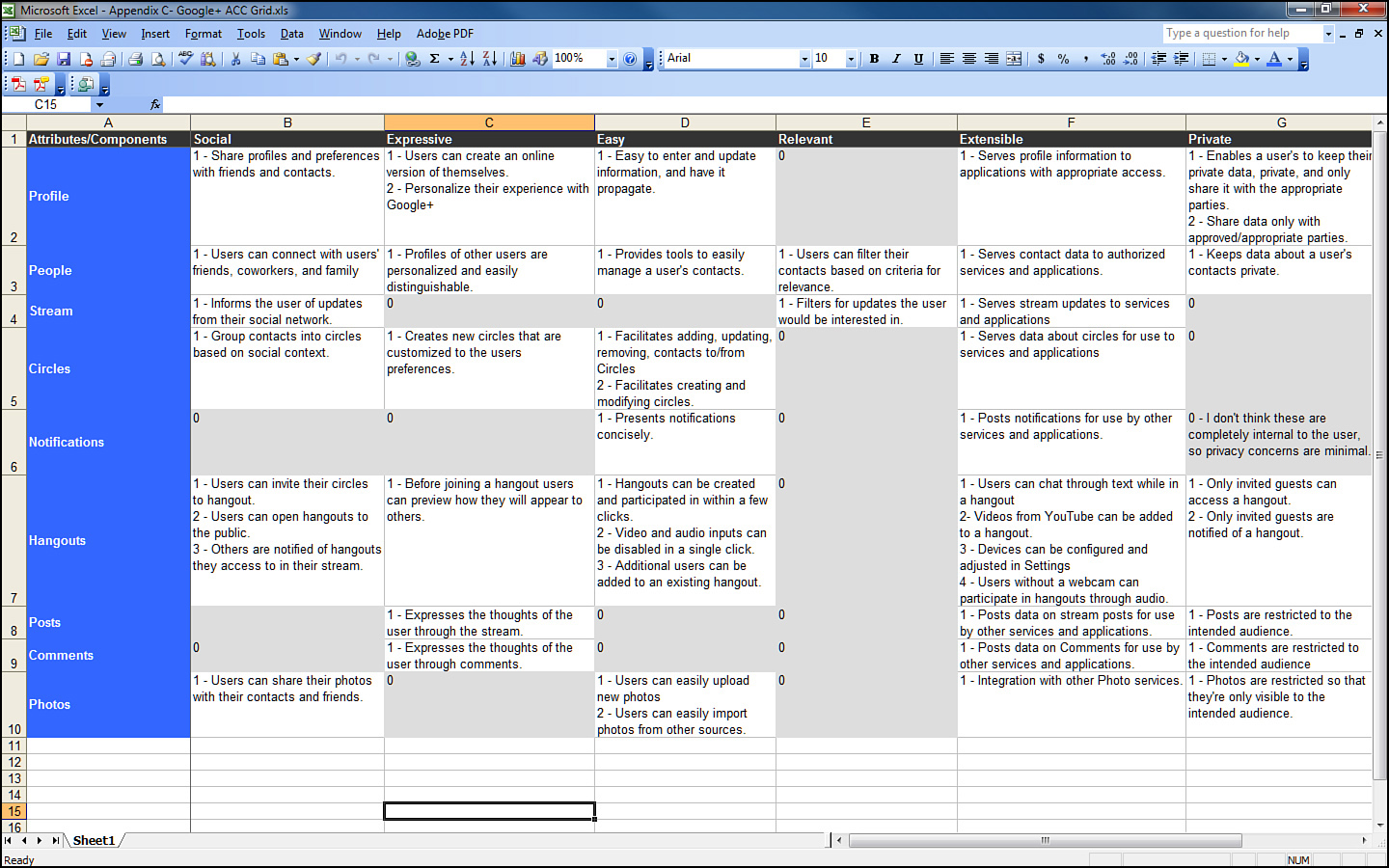

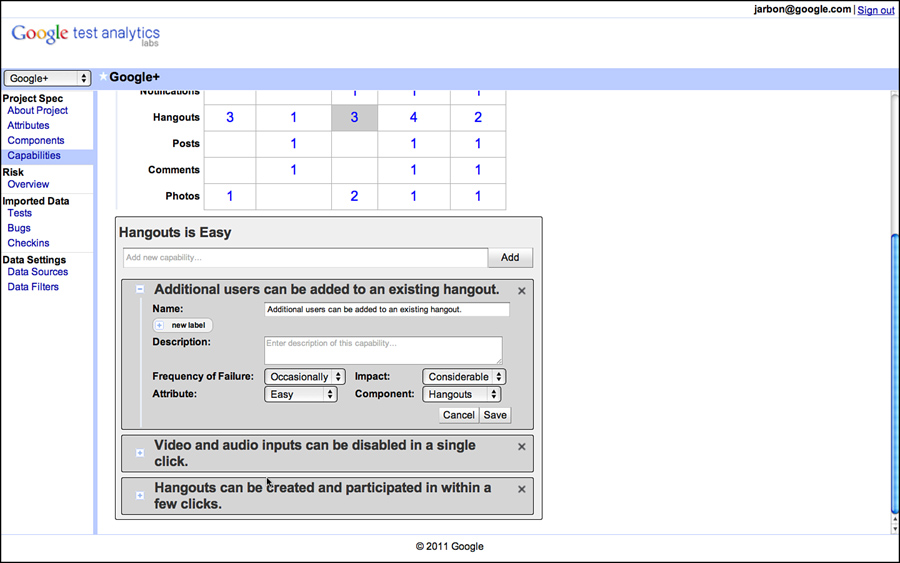

Enter ACC (Attribute Component Capability) analysis, a process pulled together from the best practices of a number of Google test teams and pioneered by the authors and several colleagues in various product areas. ACC has passed its early-adopter phase and is being exported to other companies and enjoying the attention of tool developers who automate it under the “Google Test Analytics” label.

ACC has the following guiding principles:

• Avoid prose and favor bulleted lists. Not all testers wish to be novelists or possess the skill set to adequately capture in prose a product’s purpose in life or its testing needs. Prose can be hard to read and is easily misinterpreted. Just the facts please!

• Don’t bother selling. A test plan is not a marketing document or a place to talk about how important a market niche the product satisfies or how cool it is. Test plans aren’t for customers or analysts; they are for engineers.

• No fluff. There is no length expectation for a test plan. Test plans are not high school term projects where length matters. Bigger is not better. The size of the plan is related to the size of the testing problem, not the propensity of the author to write.

• If it isn’t important and actionable, don’t put it in the plan. Not a single word in the plan should garner a “don’t care” reaction from a potential stakeholder.

• Make it flow. Each section of the test plan should be an expansion of earlier sections so that one can stop reading at any time and have a picture of the product’s functionality in his head. If the reader wants more detail, he can continue reading.

• Guide a planner’s thinking. A good planning process helps a planner think through functionality and test needs and logically leads from higher-level concepts into lower-level details that can be directly implemented.

• The outcome should be test cases. By the time the plan is completed, it should not only clearly describe what testing needs to be done but also make the writing of test cases obvious. A plan that doesn’t lead directly to tests is a waste of time.

This last point is crucial: If the test plan does not describe in enough detail what test cases need to be written, then it hasn’t served its primary purpose of helping us test the application we are building. The planning of tests should put us in a position to know what tests need to be written. You are finished planning when you are at exactly that spot: You know what tests you need to write.

ACC accomplishes this by guiding the planner through three views of a product corresponding to 1) adjectives and adverbs that describe the product’s purpose and goals, 2) nouns that identify the various parts and features of the product, and 3) verbs that indicate what the product actually does. It follows that testing verifies that those capabilities work and that the components as written satisfy the application’s purpose and goals.

A is for “Attribute”

When starting test planning or ACC, it is important to first identify why the product is important to users and to the business. Why are we building this thing? What core value does it deliver? Why is it interesting to customers? Remember, we’re not looking to either justify or explain these things, only to label them. Presumably the PMs and product planners, or developers, have done their job of coming up with a product that matters in the marketplace. From a testing perspective, we just need to capture and label these things so we can ensure they are accounted for when we test it.

We document the core values in a three-step process called Attribute, Component, Capability analysis and we do so in that order, with attributes as the first target.

Attributes are the adjectives of the system. They are the qualities and characteristics that promote the product and distinguish it from the competition. In a way, they are the reasons people would choose to use the product over a competitor. Chrome, for example, is held to be fast, secure, stable, and elegant, and these are the attributes we try to document in the ACC. Looking ahead, we want to get to a point where we can attach test cases to these labels so that we know how much testing we have done to show that Chrome is fast, secure, and so on.

Typically, a product manager has a hand in narrowing down the list of attributes for the system. Testers often get this list by reading the product requirements document, the vision and mission statement of the team, or even by simply listening to a sales guy describe the system to a prospective customer. Indeed, we find at Google that salespeople and product evangelists are an excellent source of attributes. Just imagine back-of-the-box advertising or think about how the product would be pitched on QVC, and you can get the right mindset to list the attributes.

Some tips on coming up with attributes for your own projects:

• Keep it simple. If it takes more than an hour or two, you are spending too long on this step.

• Keep it accurate. Make sure it comes from a document or marketing information that your team already accepts as truth.

• Keep it moving. Don’t worry if you missed something—if it’s not obvious later, it probably wasn’t that important anyway.

• Keep it short. No more than a dozen is a good target. We boiled an operating system down to 12 key attributes (see Figure 3.1), and in retrospect, we should have shortened that list to 8 or 9.

Figure 3.1 Original Chrome risk analysis.

Note

Some figures in this chapter are representational and are not intended to be read in detail.

Attributes are used to figure out what the product does to support the core reasons for the product’s existence and to surface these reasons to testers so they can be aware of how the testing they do impacts the application’s ultimate reason for existence.

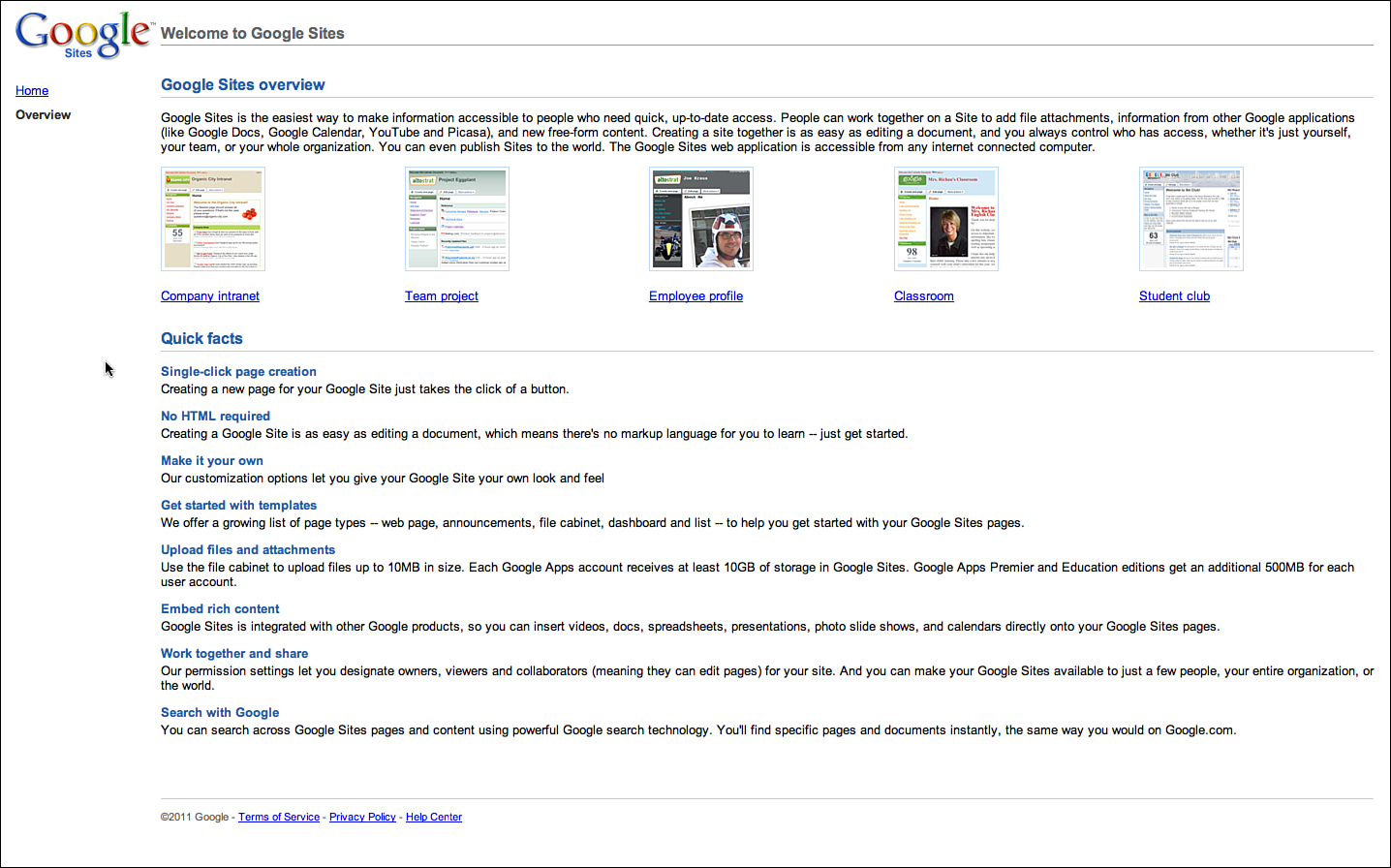

As an example, consider the attributes for a product called Google Sites, which is a freely available application for building a shared website for some open or closed community. Sites, as you’ll find with many end-user applications, is kind enough to provide most of its attributes for you in its own documentation, as shown in Figure 3.2.

Figure 3.2 Welcome to Google Sites.

Indeed, most applications that have some sort of Getting Started page or sales-oriented literature often do the work of identifying attributes for you. If they do not, then simply talking to a salesperson, or better yet, simply watching a sales call or demo, gets you the information you need.

Attributes are there for the taking. If you have trouble enumerating them in anything more than a few minutes, then you do not understand your product well enough to be an effective tester. Learn your product and listing its attributes becomes a matter of a few minutes of work.

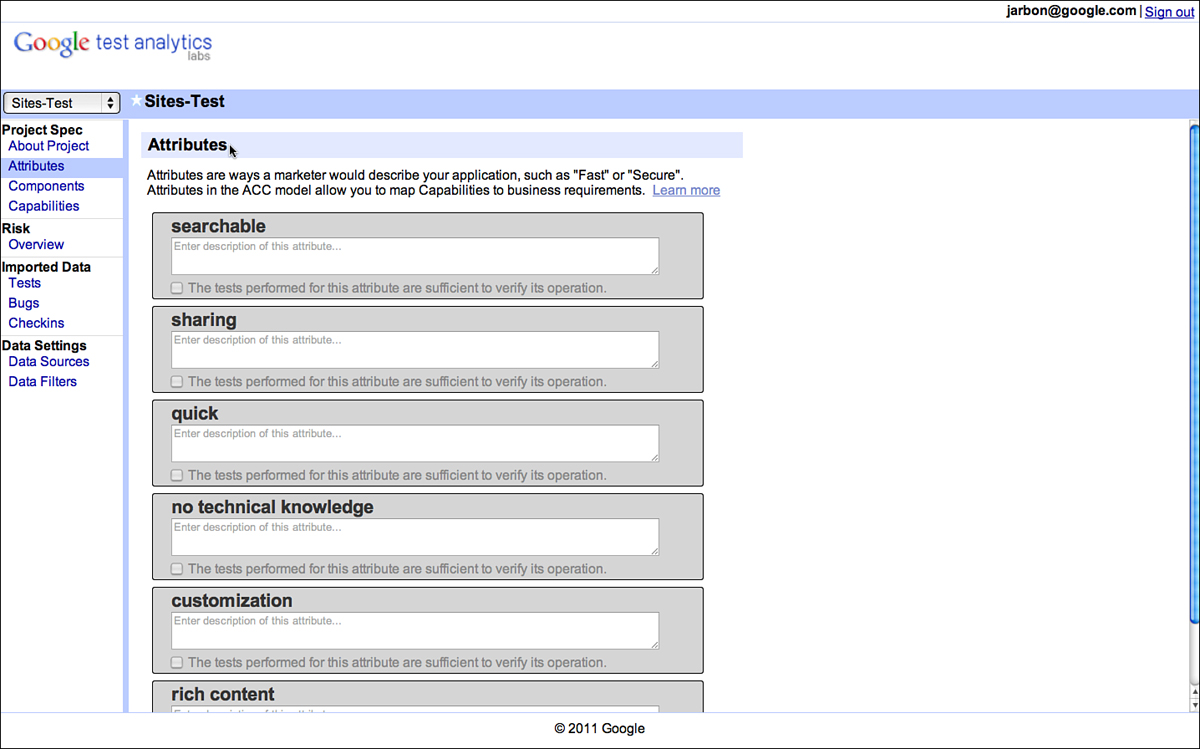

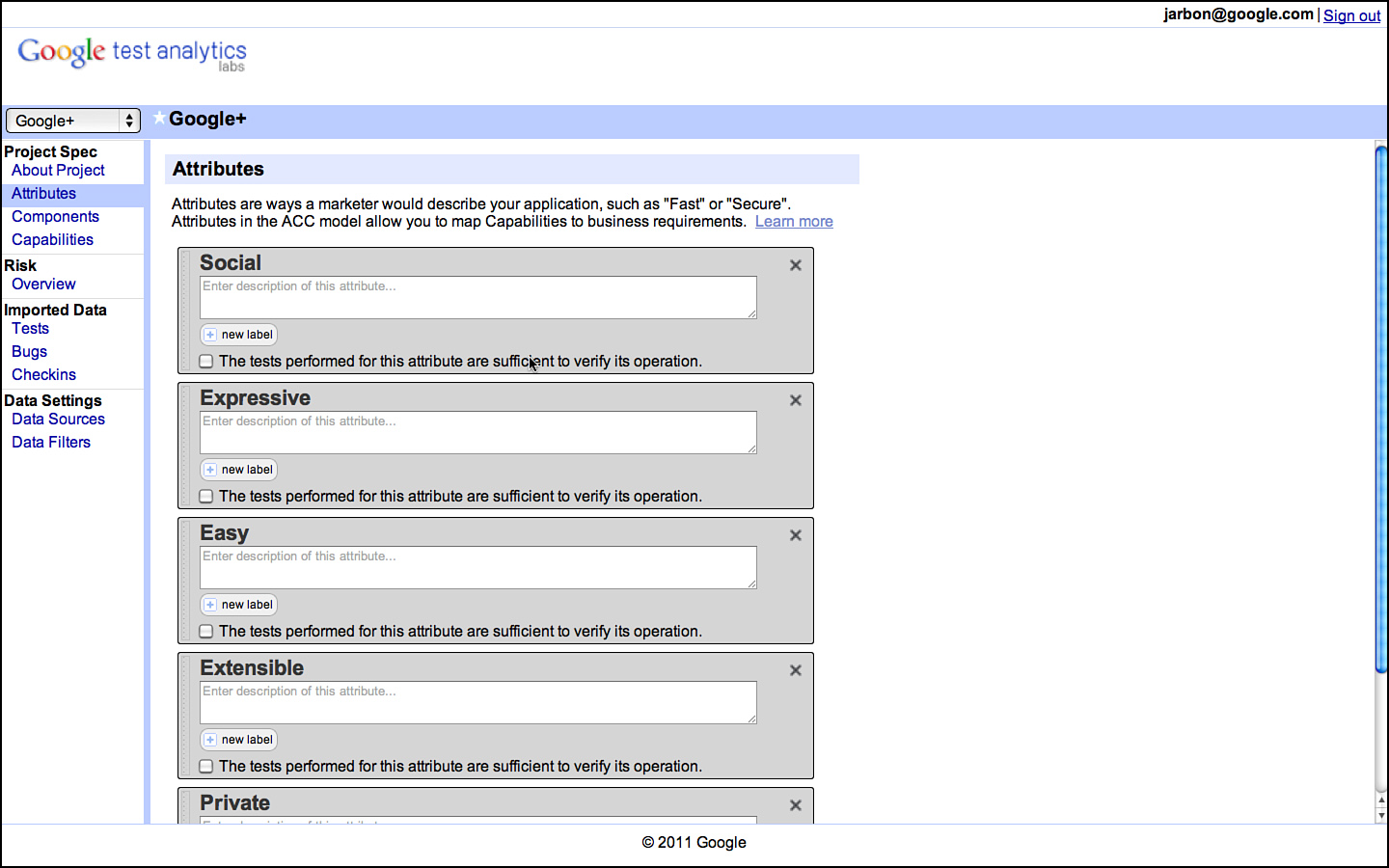

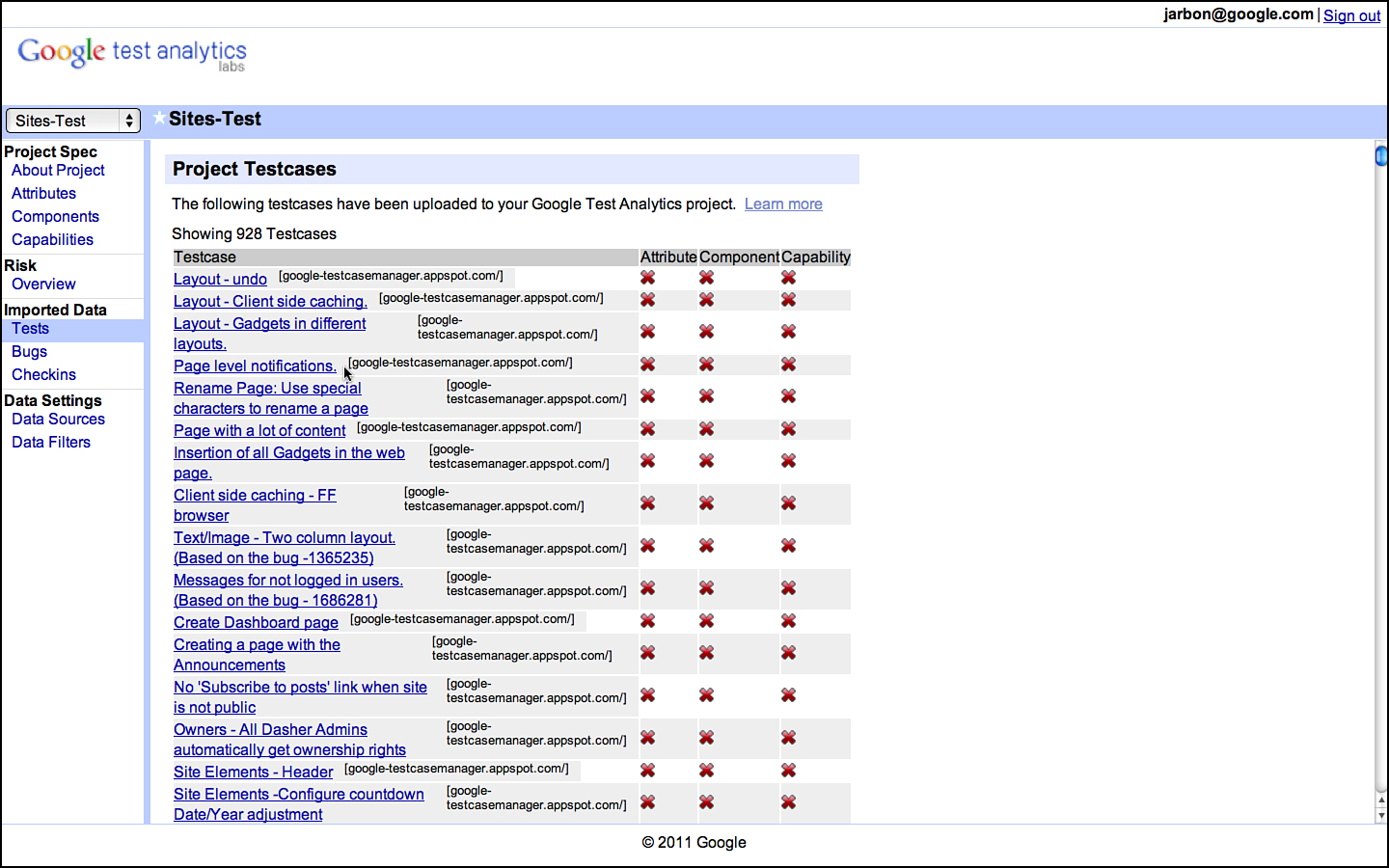

At Google, we use any number of tools for documenting risk, from documents to spreadsheets to a custom tool built by some enterprising engineers called Google Test Analytics (GTA). It doesn’t really matter what you use, just that you get the attributes all written down (see Figure 3.3).

Figure 3.3 Attributes for Google Sites as documented in GTA.

C is for “Component”

Components are the nouns of the system and the next target of enumeration after the attributes are complete. Components are the building blocks that together constitute the system in question. They are the shopping cart and the checkout feature for an online store. They are the formatting and printing features of a word processor. They are the core chunks of code that make the software what it is. Indeed, they are the very things that testers are tasked with testing!

Components are generally easy to identify and often already cast in a design document somewhere. For large systems, they are the big boxes in an architectural diagram and often appear in labels in bug databases or are called out explicitly in project pages and documentation. For smaller projects, they are the classes and objects in the code. In every case, just go and ask each developer: “What component are you working on?” and you will get the list without having to do much else.

As with attributes, the level of detail in identifying components of the product is critical. Too much detail and it becomes overwhelming and provides diminishing returns. Too little detail, and there’s simply no reason to bother in the first place. Keep the list small; 10 is good and 20 is too many unless the system is very large. It’s okay to leave minor things out. If they are minor, then they are part of another component or they don’t really matter enough to the end user for us to focus on them.

Indeed, for both the attributes and the components, spending minutes tallying them should suffice. If you are struggling to come up with components, then you seriously lack familiarity with your product and you should spend some time using it to get to the level of a power user quickly. Any actual power user should be able to list attributes immediately and any project insider with access to the source code and its documentation should be able to list the components quickly as well. Clearly we believe it is important for testers to be both power users and, obviously, project insiders.

Finally, don’t worry about completeness. The whole ACC process is based on doing something quick and then iterating as you go. If you miss an attribute, you might discover it as you are listing the components. Once you get to the capability portion, which is described next, you should shake out any attributes or components you missed earlier.

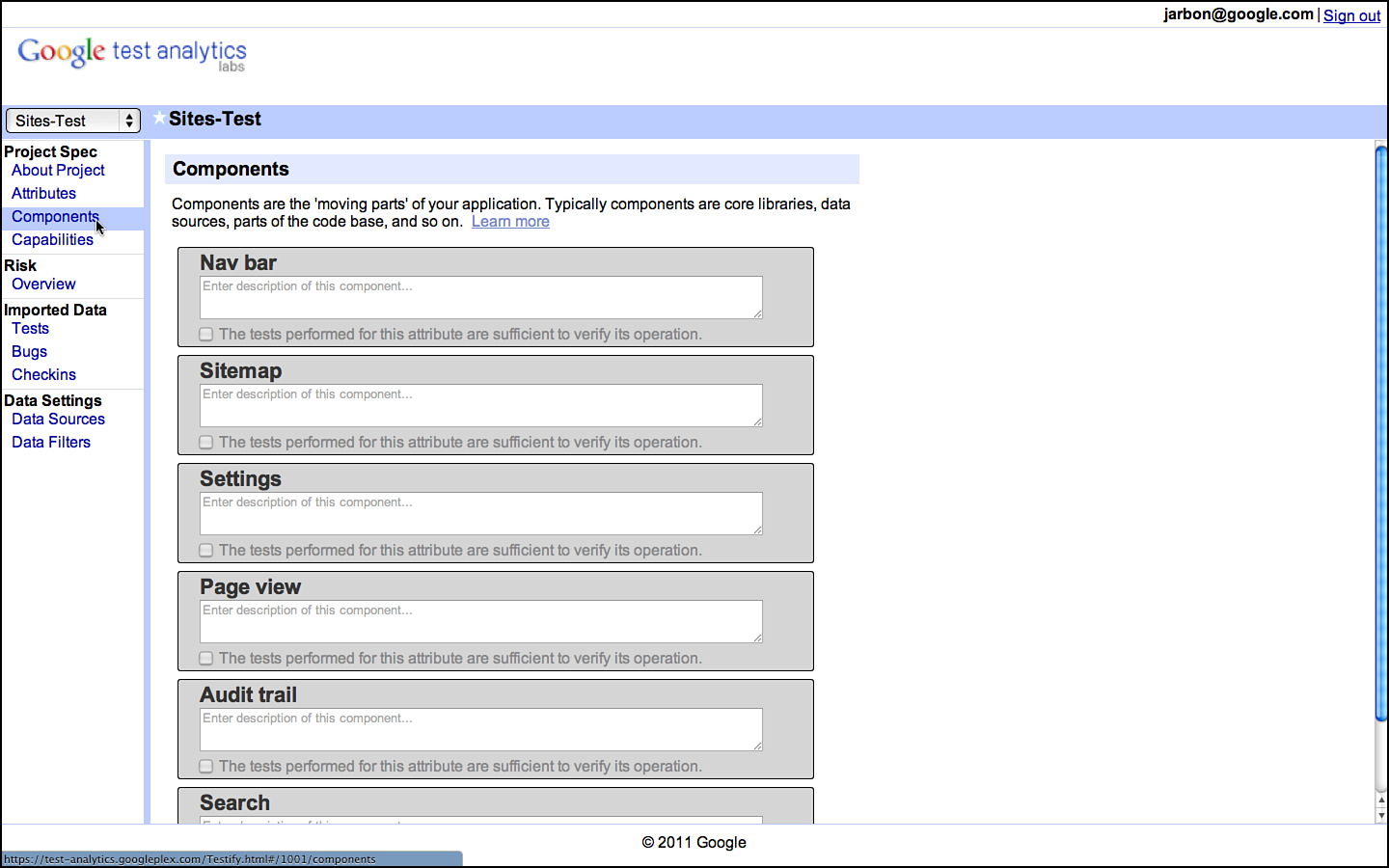

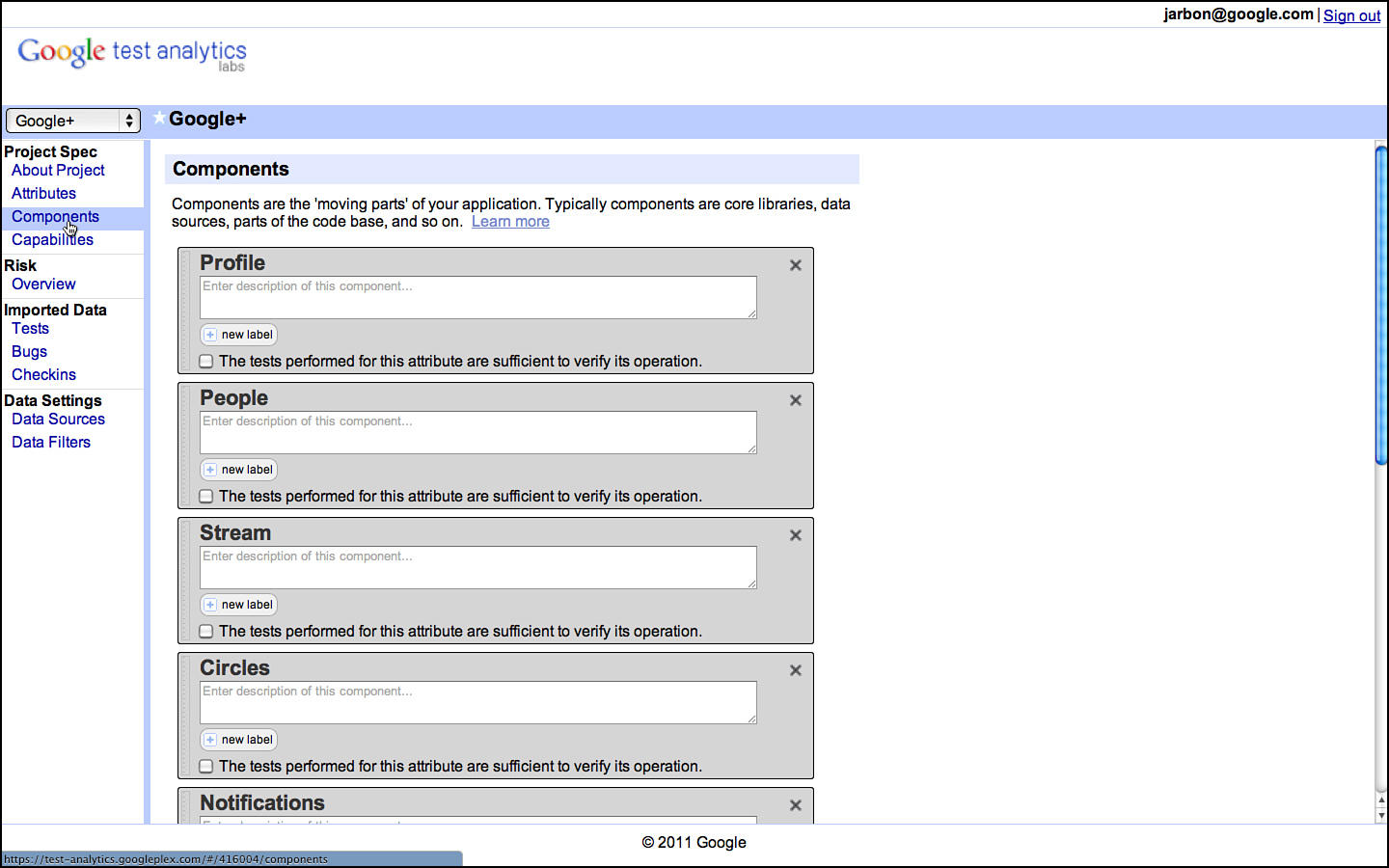

The components for Google Sites appear in Figure 3.4.

Figure 3.4 Components for Google Sites as documented in GTA.

C is for “Capability”

Capabilities are the verbs of the system; they represent the actions the system performs at the command of the user. They are responses to input, answers to queries, and activities accomplished on behalf of the user. Indeed, they are the very reason users choose to use your software in the first place: They want some functionality and your software provides it.

Chrome, for example, has the capabilities of rendering a web page and playing a Flash file. Chrome can synchronize between clients and download a document. All these are capabilities and these and many more represent the full set of capabilities of the Chrome web browser. A shopping app, on the other hand, has the capability of performing a product search and completing a sale. If an application can perform a task, then this task is labeled as one of its capabilities.

Capabilities lie at the intersection of attributes and components. Components perform some function to satisfy an attribute of the product and the result of this activity is providing a capability to a user. Chrome renders a web page fast. Chrome plays a Flash file securely. If your product does something that isn’t covered by the intersection of an attribute and a component, it probably doesn’t matter and warrants raising the question as to why we are bothering to have it in the product. A capability that doesn’t serve a core value to the product sounds very much like fat that can be trimmed and represents potential points of failure with little to be gained. Either that or there is an explanation for the capability’s existence and you don’t understand it. Not understanding your product is unacceptable in the testing profession. If any single engineer on a project understands his product’s value proposition to its users, that someone is a tester!

Here are some examples of capabilities for an online shopping site:

• Add/remove items from the shopping cart. This is a capability of the Cart component when trying to meet the Intuitive UI attribute.

• Collect credit card and verification data. This is a capability of the Cart component when trying to meet the Convenient attribute and the Integrated (for instance, integrated with the payment system) attribute.

• Process monetary transactions using HTTPS. This is a capability of the Cart component when trying to meet the Secure attribute.

• Provide suggestions to shoppers based on the products they are viewing. This is a capability of the Search component when trying to meet the Convenient attribute.

• Calculate shipping cost. This is a capability of the UPS Integration component when trying to meet the Fast and Secure attributes.

• Display available inventory. This is a capability of the Search component when trying to meet the Convenient and Accurate attributes.

• Defer a purchase for a later date. This is a capability of the Cart component when trying to meet the Convenient attribute.

• Search for items by keyword, SKU, and category. This is a capability of the Search component when trying to satisfy the Convenient and Accurate attributes. In general, we prefer to treat each search category as a separate capability.
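The list above can be captured as plain data. The following is a minimal sketch, assuming a hypothetical `Capability` record and a hypothetical `unanchored` helper (neither is part of GTA or any Google tool); it records each capability against its component and attributes and flags any capability that does not sit at a known intersection.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Capability:
    """Hypothetical record for one ACC capability."""
    description: str             # written as an action, in active voice
    component: str               # the noun that provides the capability
    attributes: Tuple[str, ...]  # the adjectives the capability helps satisfy


# Attribute and component names as they appear in the shopping example.
ATTRIBUTES = {"Intuitive UI", "Convenient", "Integrated", "Secure", "Fast", "Accurate"}
COMPONENTS = {"Cart", "Search", "UPS Integration"}

CAPABILITIES = [
    Capability("Add/remove items from the shopping cart", "Cart", ("Intuitive UI",)),
    Capability("Collect credit card and verification data", "Cart", ("Convenient", "Integrated")),
    Capability("Process monetary transactions using HTTPS", "Cart", ("Secure",)),
    Capability("Provide suggestions to shoppers based on the products they are viewing",
               "Search", ("Convenient",)),
    Capability("Calculate shipping cost", "UPS Integration", ("Fast", "Secure")),
    Capability("Display available inventory", "Search", ("Convenient", "Accurate")),
    Capability("Defer a purchase for a later date", "Cart", ("Convenient",)),
    Capability("Search for items by keyword, SKU, and category", "Search",
               ("Convenient", "Accurate")),
]


def unanchored(capabilities, attributes, components):
    """Return capabilities with an unknown component, no attribute, or an unknown attribute."""
    return [
        c for c in capabilities
        if c.component not in components
        or not c.attributes
        or any(a not in attributes for a in c.attributes)
    ]
```

Running `unanchored(CAPABILITIES, ATTRIBUTES, COMPONENTS)` on a real list is one way to surface capabilities that may be trimmable fat, or attributes and components that were missed in the earlier steps.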

Obviously there can be a large number of capabilities and if it feels like you are listing everything you could test, then you are getting the hang of ACC! The entire idea is to list, quickly and succinctly, the most important capabilities of the system that need to be verified to be in working order.

Capabilities are generally user-oriented and written to convey a user’s view of what the system does. They are also far more numerous than either attributes or components. Whereas brevity rules in the first two stages of ACC, capabilities should in sum total describe everything the system is capable of doing and therefore be greater in number based on the feature richness and complexity of the application in question.

For systems we have worked on at Google, capabilities tend to be in the hundreds for larger, more complicated applications (Chrome OS has more than 300, for example) and in the dozens for smaller applications. Surely there is a case to be made for products with only a few capabilities to not require testing beyond what the developers and a few early users could accomplish. So if you are testing something with fewer than 20 capabilities, you might want to question your presence on the project!

The most important aspect of capabilities is that they are testable. This is the primary reason we write them in an active voice. They are verbs because they require action on our part, specifically that we will write test cases to determine whether each capability is implemented correctly and that the user will find the experience useful. We discuss more about translating capabilities into test cases later.

The right level of abstraction for capabilities is a point that gets argued about a great deal among Google TEs. Capabilities, by definition, are not meant to be atomic actions. A single capability can describe any number of actual use cases. In the previous shopping example, the capabilities don’t specify which items are in the cart or the outcome of a specific search. They describe general activities a user might be involved in. This is intentional as such details are too voluminous to document unless they are actually going to be tested. We cannot test all possible searches and shopping cart configurations, so we only bother translating these capabilities into test cases for the ones that actually get tested.

Capabilities are not meant to be test cases in the sense that they contain all the information necessary to run them as actual tests. We don’t put exact values and specific data into a capability. The capability is that a user can shop. The test case specifies what they shop for. Capabilities are general concepts of actions the software can take or a user can request. They are meant to be general. They imply tests and values but are not tests themselves.
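The distinction between a capability and a test case can be sketched in code. In the example below, the capability is a single general statement, while each test case supplies the concrete values. The `CaseSpec` record, the in-memory `CATALOG`, and the `search` function are all hypothetical stand-ins for a real system under test, invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseSpec:
    """Hypothetical test case: a capability plus concrete values."""
    capability: str  # the general action being exercised
    query: str       # concrete input chosen by the tester
    expected: tuple  # concrete expected result


# Hypothetical stand-in for the system under test.
CATALOG = {"red mug": "SKU-1", "blue mug": "SKU-2", "teapot": "SKU-3"}


def search(keyword):
    """Return SKUs of items whose name contains the keyword, ordered by item name."""
    return tuple(sku for name, sku in sorted(CATALOG.items()) if keyword in name)


# The capability is general; it names no particular item or search term.
CAPABILITY = "Search for items by keyword"

# The test cases supply the specifics the capability deliberately leaves out.
TEST_CASES = [
    CaseSpec(CAPABILITY, "mug", ("SKU-2", "SKU-1")),
    CaseSpec(CAPABILITY, "teapot", ("SKU-3",)),
    CaseSpec(CAPABILITY, "kettle", ()),
]


def failures(cases):
    """Run each case against the stand-in system and return the ones that fail."""
    return [case for case in cases if search(case.query) != case.expected]
```

One capability fans out into as many cases as the team chooses to run, which is why the specifics live with the test cases and not in the plan.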

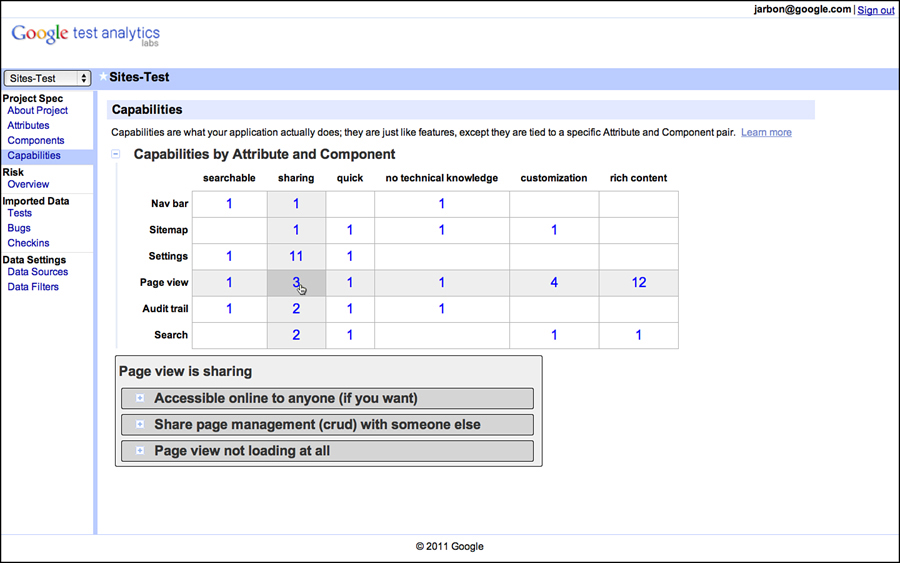

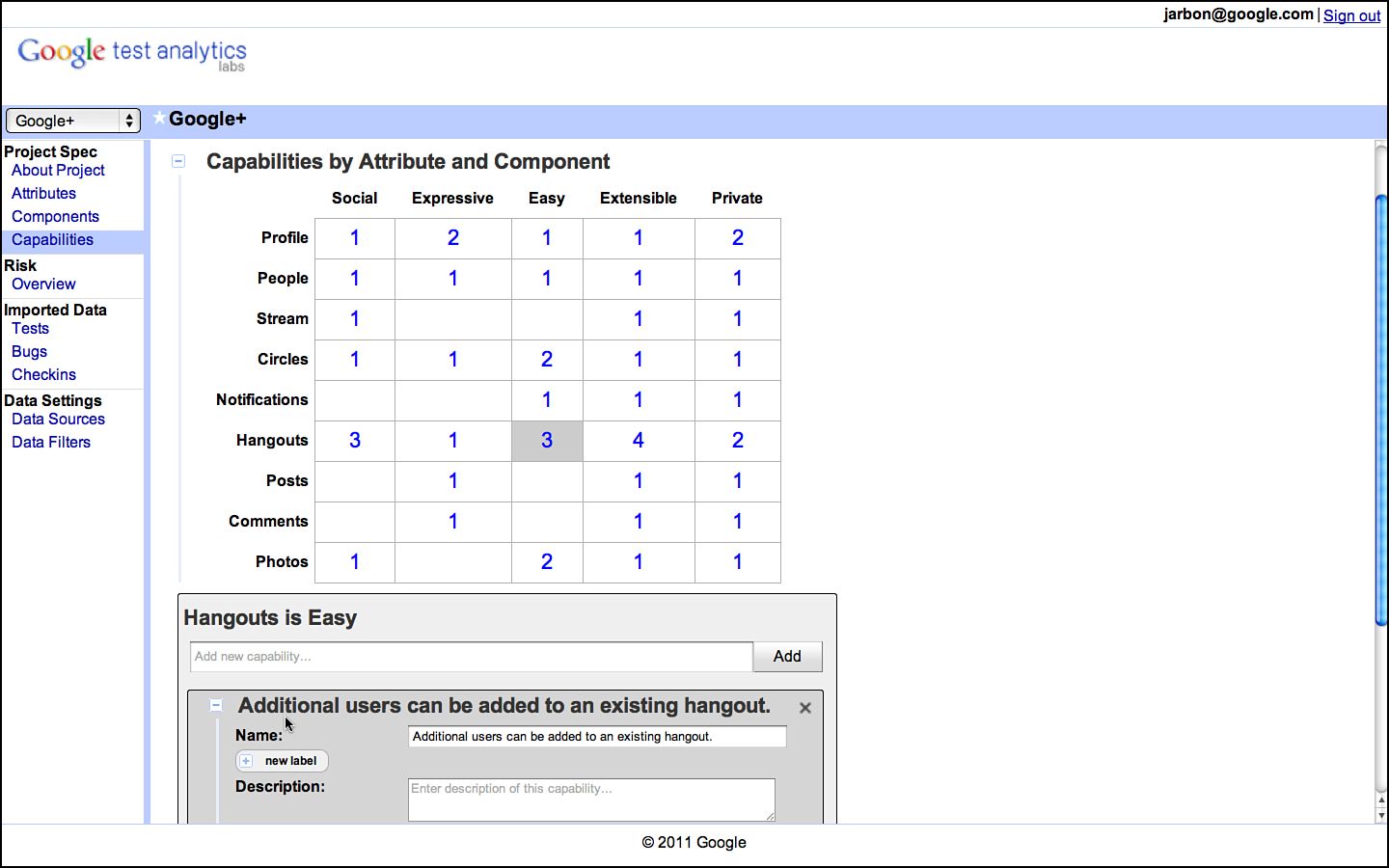

Continuing with the Google Sites example, we show in Figure 3.5 a grid showing attributes across the x axis and components across the y axis. This is the way we link capabilities back to attributes and components. The first thing to note is the large number of empty squares. This is typical as not every component has an impact on every attribute. For Chrome, only some of the components are responsible for making it fast or secure; the others will have blanks representing no impact. These blank entries mean that we don’t have to test this particular attribute-component pair.

Figure 3.5 Capabilities are tied to attributes and components in GTA.

Each row or column of the table of capabilities represents a slice of functionality that is related in some fashion. A single row or column is a good way to break the application’s functionality into testable sessions. A test manager might take each row and assign it to separate test teams or have a bug bash to really hit a row or column hard. They also make excellent targets for exploratory testing and when each exploratory tester takes a different row and column, you can manage overlap and get better coverage.

For entries that contain a numeric value, this is the number of capabilities provided by that component to satisfy that attribute. The higher the number, the more test points for that particular intersection. For example, the Page View components impact the Sharing attributes with the following three capabilities:

• Make the document accessible to collaborators.

• Share page management duties with a collaborator.

• View collaborator position within a page.

These capabilities conveniently specify things that need to be tested for the Page View/Sharing pair. We can either write test cases for them directly or test a combination of capabilities by combining them into a larger use case or test scenario.
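One way to picture the grid is as a mapping from (component, attribute) pairs to lists of capabilities, where the number shown in a cell is simply the length of the list. The following is a minimal sketch under that assumption, not GTA's actual data model; the names `grid`, `cell_count`, and `row` are hypothetical.

```python
from collections import defaultdict

# Hypothetical in-memory model of an ACC grid (not GTA's real schema).
# Keys are (component, attribute) pairs; values are capability descriptions.
grid = defaultdict(list)

grid[("Page View", "Sharing")] += [
    "Make the document accessible to collaborators.",
    "Share page management duties with a collaborator.",
    "View collaborator position within a page.",
]


def cell_count(component, attribute):
    """Return the number shown in a grid cell; 0 means a blank cell."""
    return len(grid.get((component, attribute), []))


def row(component):
    """Collect every capability for one component, across all attributes."""
    return [
        cap
        for (comp, _attr), caps in grid.items()
        if comp == component
        for cap in caps
    ]
```

Handing `row("Page View")` to one tester or one bug bash corresponds to the row-by-row assignment described above.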

Writing good capabilities requires some discipline. Here are some properties we’ve found to be useful guidance for writing capabilities:

1. A capability should be written as an action and convey the sense of the user accomplishing some activity using the application under test.

2. A capability should provide enough guidance for a tester to understand what variables are involved in writing test cases for the behavior it describes. For example, process monetary transactions using https requires that the tester understands what types of monetary transactions the system is capable of performing and a mechanism to validate whether the transaction occurs over https. Obviously, there is a great deal of work to be done here. If we believe there are monetary transactions that might be missed by, say, a new tester on the team, then by all means, replicate this capability to expose the various transaction types. If not, then the level of abstraction is good enough. Likewise, if https is something well understood by the team, then the capability is fine as it is worded. Don’t fall into the trap of trying to document everything as capabilities; they are supposed to be abstract. Leave it to the test cases or exploratory testers themselves to provide that level of detail.3

3. A capability should be composable with other capabilities. In fact, a user story or use case (or whatever terminology you may prefer) should be describable with a series of capabilities. If a user story cannot be written with only the existing capabilities, then there are missing capabilities or the capabilities are at too high a level of abstraction.

Transforming a set of capabilities into user stories is an optional interim step that can add a great deal of flexibility to testing. In fact, there are several groups in Google that prefer more general user stories over more specific test cases when engaging with external contractors or when organizing a crowd-sourced exploratory testing effort. Test cases can be specific and cause boredom as they are executed over and over by a contractor, whereas a user story provides enough leeway in deciding specific behaviors that it makes testing more fun and less prone to mistakes from boring, rote execution.

Whether it is user stories, test cases, or both that are the ultimate goal, here is the general guidance for translating capabilities to test cases. Keep in mind these are goals, not absolutes.

• Every capability should be linked to at least one test case. If the capability is important enough to document, it is important enough to test.

• Many capabilities require more than one test case. Whenever there is variation in the inputs, input sequences, system variables, data used, and so on, multiple test cases are required. The attacks in How to Break Software and the tours in Exploratory Software Testing provide good guidance in test case selection and ways to think through data and inputs that are more likely to turn a capability into a test case that finds a bug.

• Not all capabilities are equal and some are more important than others. The next step in the process (described in the next section) addresses how risk is associated with the capabilities to distinguish their importance.
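The first guideline is easy to check mechanically once capabilities and test cases are linked. The sketch below assumes a plain dictionary from capability to test case IDs; the capability wording and the IDs are illustrative, not taken from a real project.

```python
# Hypothetical links from each capability to its test case IDs.
links = {
    "Add items to the shopping cart": ["TC-1", "TC-2"],
    "Process monetary transactions using https": ["TC-3"],
    "Search for items in the catalog": [],
}


def untested(capability_links):
    """Return capabilities that have no linked test case, sorted by name."""
    return sorted(cap for cap, tests in capability_links.items() if not tests)


# Any capability listed here is documented but has nothing that tests it.
gaps = untested(links)
```

A nonempty `gaps` list is a prompt either to write a test or to question whether the capability belongs in the ACC at all.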

Once the ACC is complete, it specifies everything we could test if budget and time were not a problem. Given that both of these are pretty major problems, it helps to prioritize. At Google, we call such prioritization risk analysis and that’s the subject matter we turn to next.

Risk

Risk is everywhere. At home, on the roads, and at our workplace, everything we do has an element of risk, and shipping software is no different. We buy safer cars and practice defensive driving to mitigate the risk of driving. At work, we watch what we say in meetings and try to find projects suitable to our skill set to mitigate the risk of losing our job. How do we mitigate the risk of shipping software? How can we navigate the overwhelming odds that the software will fail (after all, no software is perfect) and cause untold damage to our company’s reputation?

Certainly, not shipping the software isn’t an option, even though it would completely negate the risk of failure. The enterprise benefits from well-calculated risks.

Note that we did not say “well quantified” risks. Risk, at least for our purposes, isn’t something that requires mathematical precision. We walk on sidewalks and not the street not because of a formula that shows a 59 percent decrease in risk but a general knowledge that roads are not a safe place for pedestrians. We buy cars with air bags not because we know the math behind increasing our survival chances in case of a wreck but because they represent an obvious mitigation to the risk of breaking our faces on the steering wheel. Risk mitigations can be incredibly powerful without a great deal of precision and the process of determining risk is called risk analysis.

Risk Analysis

For software testing, we follow a common sense process for understanding risk. The following factors have been helpful to us when understanding risk:

• What events are we concerned about?

• How likely are these events?

• How bad would they be to the enterprise?

• How bad would they be for customers?

• What mitigations does the product implement?

• How likely is it that these mitigations would fail?

• What would be the cost of dealing with the failure?

• How difficult is the recovery process?

• Is the event likely to recur or be a one-time problem?

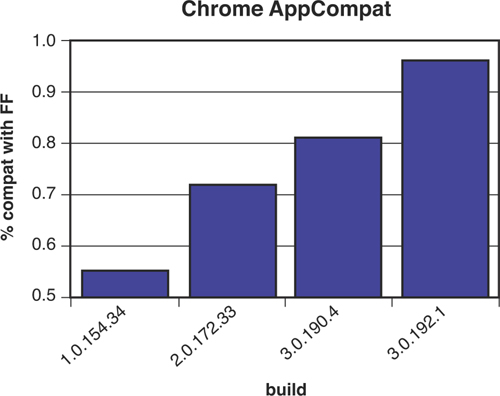

There are many variables in determining risk, which makes quantifying it more trouble than mitigating it. At Google, we boil risk down to two primary factors: frequency of failure and impact. Testers assign simple values for each of these factors to each capability. We’ve found that risk is more useful as a relative, qualitative measure than as an absolute number. It isn’t about assigning an accurate value; it’s about determining whether one capability is more or less risky than another capability. This is enough to determine which capabilities to test and which order to test them in. GTA presents the options, as shown in Figure 3.8.

Figure 3.8 Estimating risk in terms of frequency and impact in GTA for Google+.

GTA uses four predefined values for frequency of occurrence:

• Rarely: It’s hard to imagine a case where failure would occur and recovery would not be trivial.

• Example: Download page for Chrome.4 The content is largely static, with parameterization only for auto-detection of the client OS. Even if there were a break in the core HTML or script on the page, it would be detected quickly by monitoring code.

• Seldom: There are cases where failure can occur, but low complexity or low usage would make such occurrences rare.

• Example: The Forward button in Chrome. This button is used, but far less frequently than the Back button. Historically, it doesn’t fail often, and even if it did regress, we would expect our early adopters on the early release channels to catch this issue quickly as it would be fairly obvious.

• Occasionally: Failure circumstances are readily imaginable, somewhat complex, and the capability is one we expect to be popular.

• Example: Chrome Sync capabilities. Chrome synchronizes the bookmarks, themes, form-fill, history, and other user profile data across clients. There are different data types and multiple OS platforms, and merging changes is a somewhat complex computer science problem in its own right. Users are also likely to notice if this data isn’t synchronized. Synchronization happens only when data to be synchronized changes, like when a new bookmark has been added.

• Often: The capability is part of a high-use, high-complexity feature that experiences failure on a regular basis.

• Example: Rendering web pages. This is the primary use case of a browser. Rendering HTML, CSS, and JavaScript code of whatever origin and quality is the principal task a browser performs. If any of this code fails, it’s the browser that the user blames. Risk increases when you consider such a failure on a high-traffic site. Rendering issues aren’t always caught by users either; they often result in elements that are slightly misaligned but still functional, or in elements that go missing without the user knowing they should be there.

Testers choose one of these values for each capability. We used an even number of values on purpose to keep testers from simply picking the middle value. It’s important to think about it a bit more carefully.

Estimating impact takes a similarly simplistic approach and is also based on choosing from an even number of possibilities (more examples from the Chrome browser):

• Minimal: A failure the user might not even notice.

• Example: Chrome Labs. This is optional functionality. Failure to load the chrome://labs page would affect few users. This page contains optional Chrome experimental features. Most users don’t even know they are there; the features themselves are labeled “use at your own risk” and don’t pose a threat to the core browser.

• Some: A failure that might annoy the user. If noticed, retry or recovery mechanisms are straightforward.

• Example: Refresh button. If this fails to refresh the current page, the user can retype the URL in the same tab, simply open a new tab to the URL, or even restart the browser in the extreme case. The cost of the failure is mostly annoyance.

• Considerable: A failure would block usage scenarios.

• Example: Chrome extensions. If users installed Chrome extensions to add functionality to their browser and those extensions failed to load in a new version of Chrome, the functionality of that extension is lost.

• Maximal: A failure would permanently damage the reputation of the product and cause users to stop using it.

• Example: Chrome’s autoupdate mechanism. Should this feature break, it would deny critical security updates or perhaps even lead the browser to stop working.

Sometimes the impact to the enterprise and the user are at odds. A banner ad that fails to load is a problem for Google but might not even be noticed by a user. It is good practice to note whether risk to the enterprise or risk to the user is being considered when assigning a score.
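The two four-value scales lend themselves to a small ordering exercise. The sketch below is illustrative only: mapping the labels to 1 through 4 and multiplying frequency by impact are assumptions made here, since the text says only that risk boils down to these two factors, and capabilities "A", "B", and "C" are placeholders.

```python
from enum import IntEnum


class Frequency(IntEnum):
    RARELY = 1
    SELDOM = 2
    OCCASIONALLY = 3
    OFTEN = 4


class Impact(IntEnum):
    MINIMAL = 1
    SOME = 2
    CONSIDERABLE = 3
    MAXIMAL = 4


def risk(frequency, impact):
    """Relative risk score. The product is an assumed formula, not GTA's."""
    return int(frequency) * int(impact)


# Placeholder capabilities with ratings a tester might assign.
ratings = {
    "A": (Frequency.RARELY, Impact.MAXIMAL),
    "B": (Frequency.OFTEN, Impact.CONSIDERABLE),
    "C": (Frequency.SELDOM, Impact.MINIMAL),
}

# Only the ordering matters: riskiest capability first.
ranked = sorted(ratings, key=lambda cap: risk(*ratings[cap]), reverse=True)
```

The absolute scores carry no meaning on their own; the point is that `ranked` gives a defensible order in which to test.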

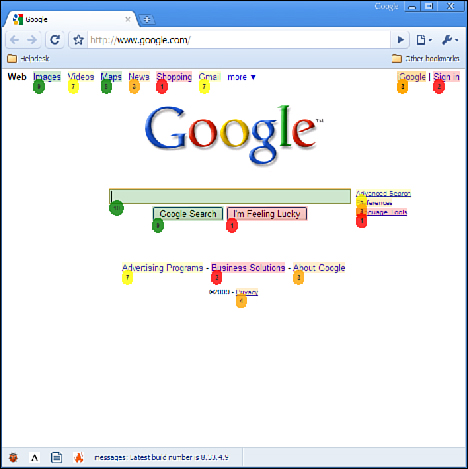

We can generate a heat map of risk areas of Google Sites based on the values entered by the tester and the Attribute-Component grid shown earlier. This appears in Figure 3.9.

Figure 3.9 Heat map of risk for attribute-component grid for an early version of Google+.

The entries in the grid light up as red, yellow, or green depending on the risk level of the components assigned to those intersections. It is a simple calculation of risk for each of the values you’ve entered—we simply take the average of each capability’s risk. GTA generates this map, but a spreadsheet can also be used.
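For teams using a spreadsheet or script instead of GTA, the averaging step might look like the following sketch. The per-capability scores, the component and attribute names other than Page View and Sharing, and the color thresholds are all assumptions for illustration; the text does not state where GTA draws the lines between red, yellow, and green.

```python
from statistics import mean

# Hypothetical risk scores per capability, grouped by (component, attribute).
cell_risks = {
    ("Page View", "Sharing"): [12, 9, 16],
    ("Page View", "Secure"): [4, 6],
    ("Editor", "Fast"): [1, 2],
}

# Assumed thresholds on the average score; tune these per project.
RED_AT = 9
YELLOW_AT = 4


def color(scores):
    """Map the average capability risk in a cell to a heat map color."""
    avg = mean(scores)
    if avg >= RED_AT:
        return "red"
    if avg >= YELLOW_AT:
        return "yellow"
    return "green"


heat_map = {cell: color(scores) for cell, scores in cell_risks.items()}

# Cells ordered from riskiest to least risky by average score.
test_order = sorted(cell_risks, key=lambda c: mean(cell_risks[c]), reverse=True)
```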

This diagram represents the testable capabilities of the product and their risk as you assign the values. It’s difficult to keep bias from these numbers, and testers do represent a specific point of view. We’re careful to solicit feedback from other stakeholders as well. Following is a list of stakeholders and some suggestions about getting them involved in assigning these risk values:

• Developers: Most developers, when consulted, will assign the most risk to the features they own. If they wrote the code, they want tests for it! It’s been our experience that developers overrate the features they own.

• Program Managers: PMs are also humans and introduce their own biases. They favor the capabilities they see as most important. In general, they favor the features that make the software stand out from its competition and make it “pop.”

• Salespeople: Sales are the ones who get paid for attracting users. They are biased toward features that sell the product and look good in demos.

• Directors and VPs: Executives often highlight the features that set the software apart from its major competitors.

Obviously, all stakeholders have significant biases, so our approach has been to solicit all their opinions and have them each separately score the capabilities using the previous two scales. It isn’t always easy to get their participation, but we’ve hit upon a strategy that has been successful. Instead of explaining the process and convincing them to help, we simply do it ourselves and present them with our heat map. Once they see our bias, they are quick to supply their own. Developers participate en masse if they know we are using the map to prioritize testing; the same goes for PMs and salespeople. They all have a stake in quality.

There is power in this approach. In determining risk ourselves, we have undoubtedly come to a conclusion they will argue with. Indeed, in presenting our risk analysis as the basis for all forthcoming tests, we have given them something to argue about! And that is the point. Instead of asking their opinions about a concept they consider nebulous, we have shown them a specific conclusion they can argue against. People are often ready to tell you what the answer is not. We also avoid having everyone wade through all data for which they have little interest or context. With this little ruse in our toolkit, we generally get a lot of focused input that we can factor into the risk calculations.
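How the separate stakeholder scores get factored in is left open above. One simple possibility, shown here purely as an assumption, is to average each capability's score across stakeholders and to flag the capabilities where opinions diverge most, since those are worth a conversation. The stakeholder names, capabilities, and scores are hypothetical.

```python
from statistics import mean

# Hypothetical risk scores that each stakeholder assigned independently.
scores = {
    "tester": {"Sync bookmarks": 9, "Refresh page": 4},
    "developer": {"Sync bookmarks": 12, "Refresh page": 2},
    "pm": {"Sync bookmarks": 6, "Refresh page": 6},
}


def combined(scores_by_stakeholder):
    """Average each capability's score across stakeholders (an assumption)."""
    capabilities = {cap for s in scores_by_stakeholder.values() for cap in s}
    return {
        cap: mean([s[cap] for s in scores_by_stakeholder.values() if cap in s])
        for cap in capabilities
    }


def disagreement(scores_by_stakeholder, cap):
    """Spread between the highest and lowest score for one capability."""
    values = [s[cap] for s in scores_by_stakeholder.values() if cap in s]
    return max(values) - min(values)
```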

Once risk is generally agreed upon, it’s time to start mitigating it.

Risk Mitigation

Risk can rarely be eliminated. Driving is risky, yet we have places to go. Travel is risky, yet we do it anyway, and more often than not, the risk never manifests as any real injury. Why is this the case? Because we mitigate risks based on our actions. There are certain times of the day we choose not to drive and certain destinations to which we choose not to travel. This is mitigation.

For software, the ultimate mitigation is to simply eliminate the riskiest components: the less software we ship, the less risk we assume. But besides outright elimination of risk, there are many things we can do to mitigate risk:

• We can write user stories around risky capabilities to define paths of less risk and feed this back to development so that more constraints can be added to the app.

• We can write regression test cases to ensure that failures cannot be reintroduced without being detected.

• We can write and run tests to show the need for recovery and fallback features.

• We can add instrumentation and watchdog code to detect failures early.

• We can add instrumentation that notices behavioral changes across versions of the software that indicate a bug has regressed.

The exact mitigation needed depends greatly on the application and what users expect of its safety and security. For testers, we might be involved in the actual mitigation, but we are most certainly involved in exposing the risk. We proceed by prioritizing the capabilities marked in red over those in yellow or green. We want to test in the order of risk. This is important: If you can’t test everything, test the most important stuff first, and the most important stuff is the riskiest stuff.

Depending on the type of project, it is up to you the tester to advise about readiness to ship. A tester should be able to glance at the risk heat map and make a recommendation to other engineers whether the product is ready for release. If it is a Google Labs Experiment, it is probably fine to ship with some red areas as long as they don’t deal with privacy or security. If it is a major new release of Gmail, any yellows will likely be a blocking concern. The colors are also simple enough that even test directors can understand them.
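The two examples in the preceding paragraph can be read as different policies applied to the same heat map. The sketch below encodes them as a rough illustration; the project type names, the `sensitive_cells` parameter, and the sample cells are hypothetical, and a real recommendation involves far more judgment than a color check.

```python
def ready_to_ship(heat_map, project_type, sensitive_cells=frozenset()):
    """Rough ship recommendation from a mapping of grid cell to color.

    The policies mirror the two examples in the text; the details are
    assumptions for illustration.
    """
    if project_type == "major_release":
        # Any yellow or red cell blocks a major release.
        return all(color == "green" for color in heat_map.values())
    if project_type == "experiment":
        # Red is tolerated unless the cell deals with privacy or security.
        return not any(
            color == "red" and cell in sensitive_cells
            for cell, color in heat_map.items()
        )
    raise ValueError(f"unknown project type: {project_type}")


# Hypothetical heat map with one red cell and one green cell.
example = {("Login", "Secure"): "red", ("Editor", "Fast"): "green"}
```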

Concerns about risk fade over time and a significant amount of successful testing is a good indication that risk is at acceptable levels. This makes it crucial that we tie test cases back to individual capabilities and back to attributes and components in the risk grid. Indeed, ACC fits this requirement perfectly and it’s why we designed it the way we did.

Last Words on Risk

Google Test Analytics supports risk analysis based on values of the categories (rarely, seldom, occasionally, often) we presented earlier. We specifically do not want to turn risk analysis into a complicated effort or it won’t get done. We also aren’t concerned about the actual numbers and math because a single number in isolation means little anyway. Simply knowing something is more risky than something else is often enough. Risk is about choice, choosing one test over another because it tests a more risky area of the application. It is enough to know that A is riskier than B without paying too much attention to the exact risk value of either. Simply knowing that one feature is riskier than another allows an individual test manager to distribute testers to features more optimally and on an organizational level, it allows someone like Patrick Copeland to decide how many testers each product team is allocated. Understanding risk provides benefit at every level of the organization.

Risk analysis is a field of its own and taken very seriously in many different industries. We use a lightweight version here, but remain interested in additional research that can improve the way we test. If you want to read more about risk analysis, we suggest searching for Risk Management Methodology on Wikipedia. It will get you to a good starting point for a more in-depth treatment of this important topic.

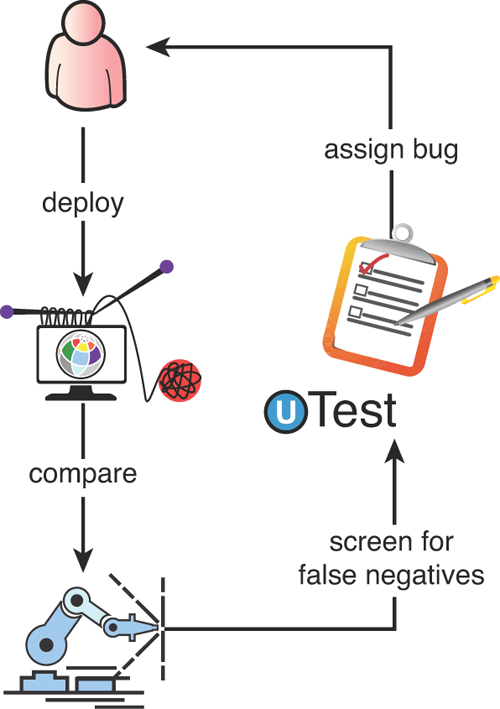

GTA helps us identify risk, and testing is what helps us mitigate it. The Google TE is a facilitator of this mitigation. A TE might decide to perform tests in house on some of the riskier areas and might request regression tests to be added by SWEs and SETs. Other tools at the TE’s disposal include manual and exploratory testing and the use of dogfood users, beta users, and crowd sourcing.

The TE is responsible for understanding all the risk areas and creates mitigations using any and every tool he or she can get his or her hands on.

Here is a set of guidelines we’ve found helpful.

1. For every set of high-risk capabilities and attribute-component pairs that appear red in the GTA matrix, write a set of user stories, use cases, or test guidance that address them. Google TEs take personal responsibility for the highest risk areas, and even though they might coordinate with other TEs or use tools, the ultimate responsibility is their own.

2. Take a hard look at all the previous SET- and SWE-oriented testing that has occurred and assess its impact on the risk levels exposed in GTA. Was this testing well placed to manage the risk? Are there additional tests that need to be added? A TE might need to write these tests or negotiate with the attending SET or SWE to get them written. The important thing is not who does the coding, but that it gets done.

3. Analyze the bugs that have been reported against every high-risk attribute-capability pair and ensure that regression tests are written. Bugs have a tendency to recur as code is modified. Every bug in every high-risk component should have a regression test.

4. Think carefully about the high-risk areas and inquire about fallback and recovery mechanisms. Surface any concerns about user impact by coming up with worst-case scenarios and discussing them with other engineers. Try and determine how realistic the scenarios are. TEs who cry wolf too often won’t be listened to again. It’s important to reduce the shrill warnings unless they cover high-risk scenarios that are also very realistic and unmitigated by existing tests.

5. Involve as many concerned stakeholders as possible. Dogfood users are often internal and if left on their own will just use the system without providing a lot of input. Actively engage dogfood users. Specifically ask them to run certain experiments and scenarios. Ask them questions such as, “How does this work on your machine?” and “How would you use a feature like this one?” Dogfood users are numerous at Google, and TEs should be very active in pushing them for more than just casual use.

6. If none of the previous is working and a high-risk component continues to be under-tested and fails often, lobby hard for its removal. This is a chance to explain the concept of risk analysis to project owners and underscore the value a TE provides.

For less risky capabilities, we can be somewhat less insistent in our execution. We may decide that writing specific test cases for lower risk areas is too much investment for too little return. Instead, we might choose to perform exploratory testing or use crowd sourcing to handle these areas. The concept of “tours” as high-level guidance for exploratory testing5 is often used as direction for crowd source testers. “Run the Fed Ex tour on this set of capabilities” will often get far better results from the crowd than simply tossing them an app and hoping for the best. In one instruction, we have identified the features we want tested and told the testers how to go about testing them.

The real power of the ACC is that it presents a set of capabilities that can be rank-ordered by risk and also parceled out in sections to various quality collaborators. The set of actual project TEs can be given different sets of capabilities to validate. Dogfood users, 20-percent contributors, contract testers, crowd testers, SWEs, SETs, and so on can all take a subset of capabilities and the TE can feel good about getting coverage of important areas with less overlap than simply throwing out the application to a free for all of usage.

The range of a TE, unlike that of an SET, does not stop when our software is released.

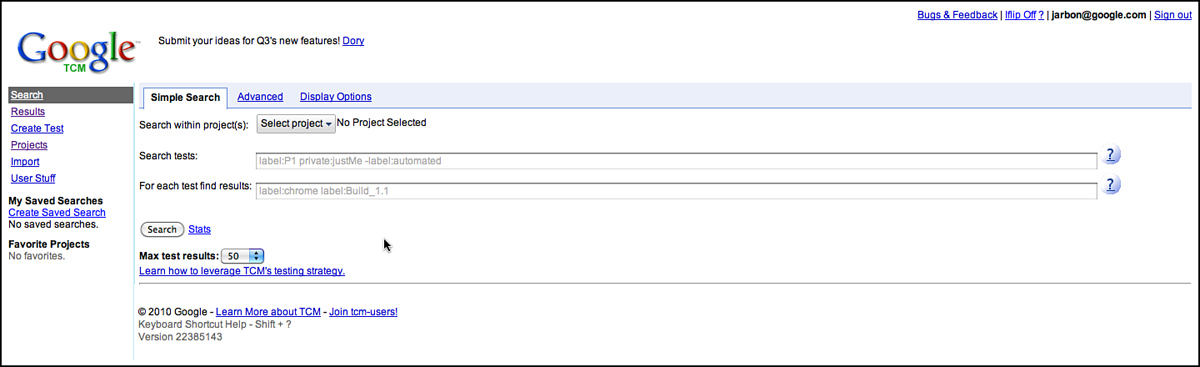

Life of a Test Case

Whether they come from user stories or from direct translation of capabilities, Google TEs create a large number of test cases that prescribe, in terms ranging from general to precise, inputs and data to test an application. Unlike code and automated test artifacts that are managed by common infrastructure, test case management is still a group-by-group thing, but a new tool is beginning to change all that.

Spreadsheets and documents have often been the tool of choice for storing test cases. Teams with rapid feature development and fast ship cycles aren’t too concerned with keeping test cases around for long. As new features arrive, they often invalidate the scripts, requiring all the tests to be rewritten. In such cases, documents that can be shared and then trashed are as suitable as any other format. Documents are also a suitable mechanism to describe the context of a test session as opposed to specific test case actions. Such tests are less prescriptive and more general suggestions about which feature areas to explore.

Of course, some teams have rather elaborate spreadsheets for storing test procedures and data and some teams even document ACC tables in spreadsheets because they are more flexible than Google Test Analytics (GTA). But this takes discipline and some continuity in the TE team as one TE’s procedure will be another TE’s shackles. Large team turnover requires a more structured approach, a structure that can outlast any individual team member.

Spreadsheets are preferred over documents because they provide convenient columns for procedure, data, and pass or fail tags, and they are easy to customize. Google Sites and other types of online wikis are often used to display the test information to other stakeholders. They can be easily shared and edited by anyone on the team.

As Google grew, many teams had a growing set of prescriptive test cases and regression tests that needed better management. Indeed, the test cases documented grew so large, they became burdensome to search and to share. Another solution was needed and some enterprising testers built a system called Test Scribe that was loosely based on any number of commercial tools and other homegrown test case management systems our testers were familiar with from previous employers.

Test Scribe stored test cases in a rigid schema and had the capability to include or exclude test cases from a specific test pass. It was a basic implementation and enthusiasm over using it and maintaining it was waning; however, many teams had taken dependencies on it and after nursing it along for a few quarters, it was trashed and a new tool was written in 2010 by a Senior SET named Jordanna Chord. Google Test Case Manager (GTCM) was born.

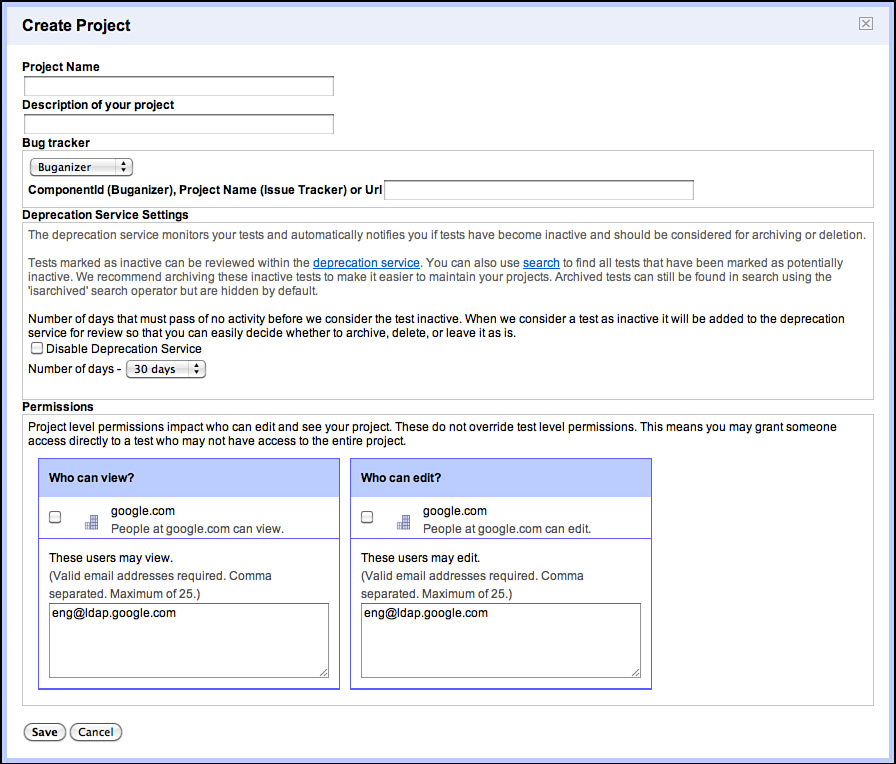

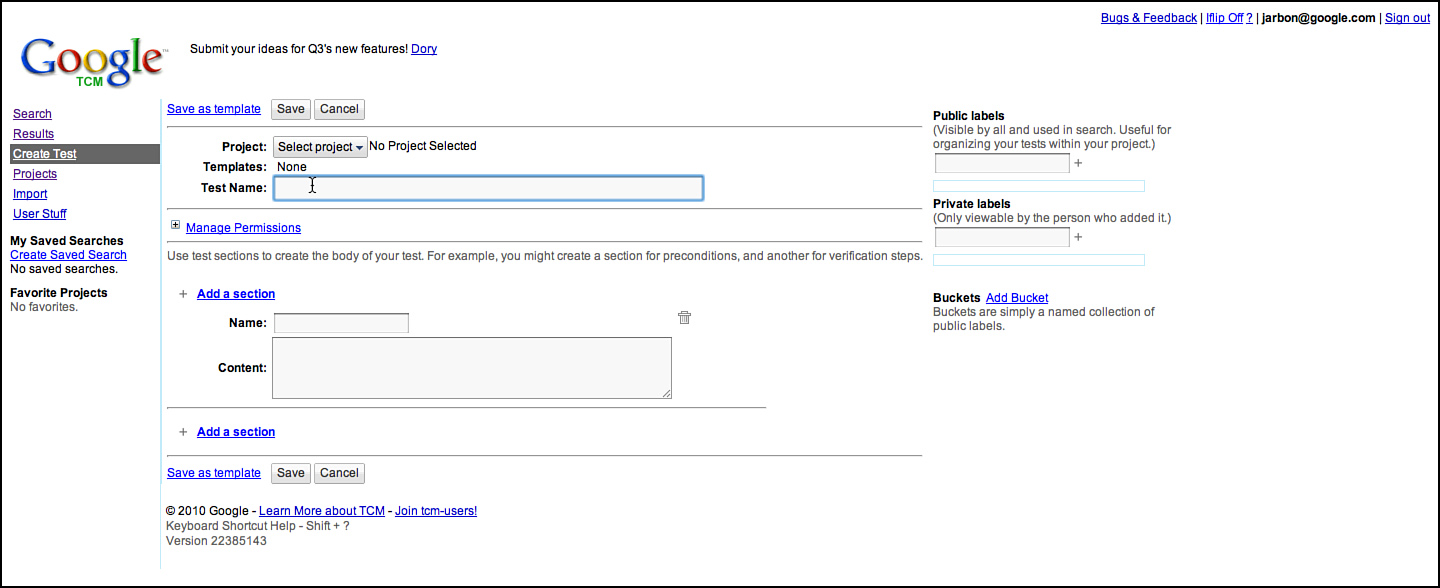

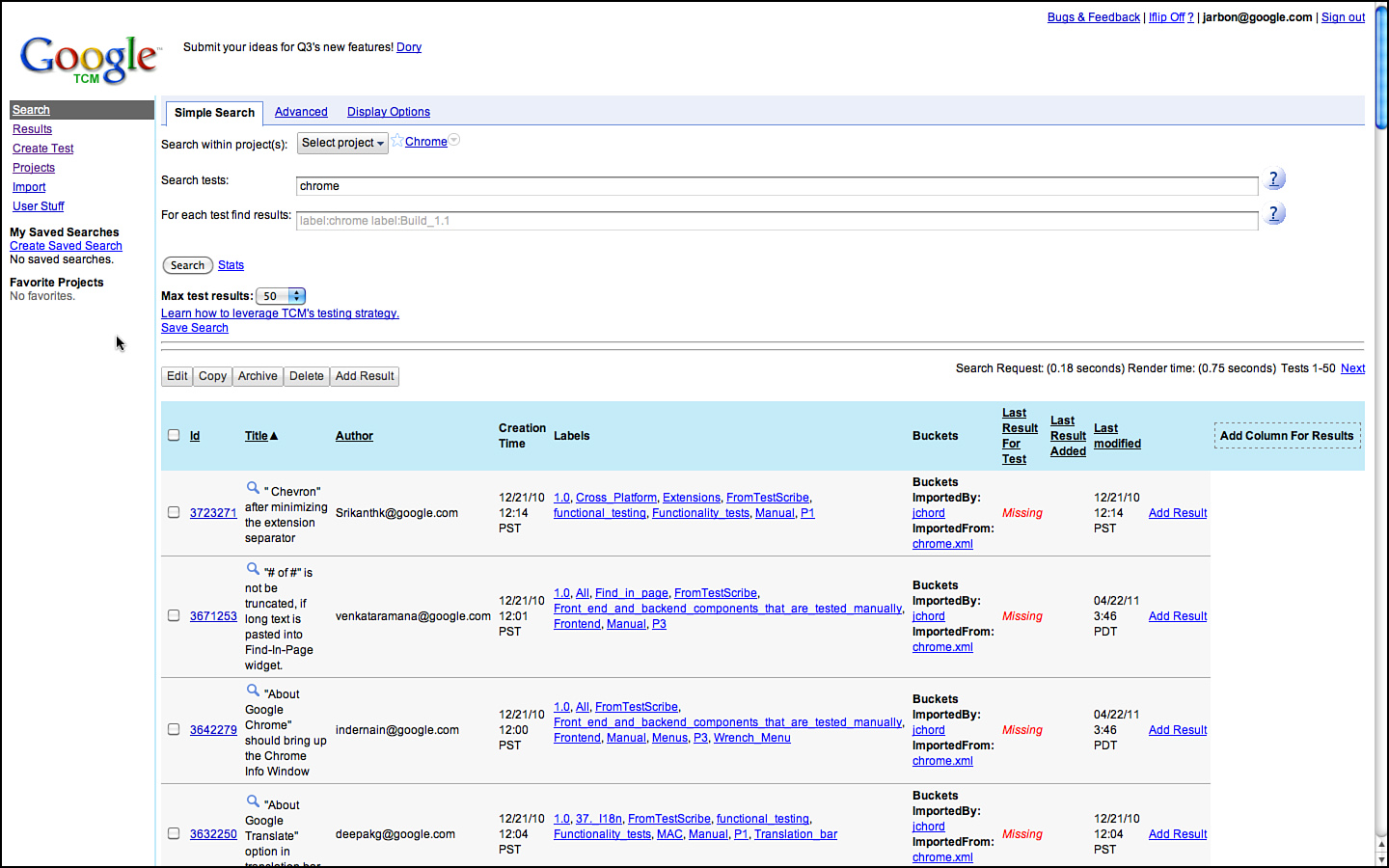

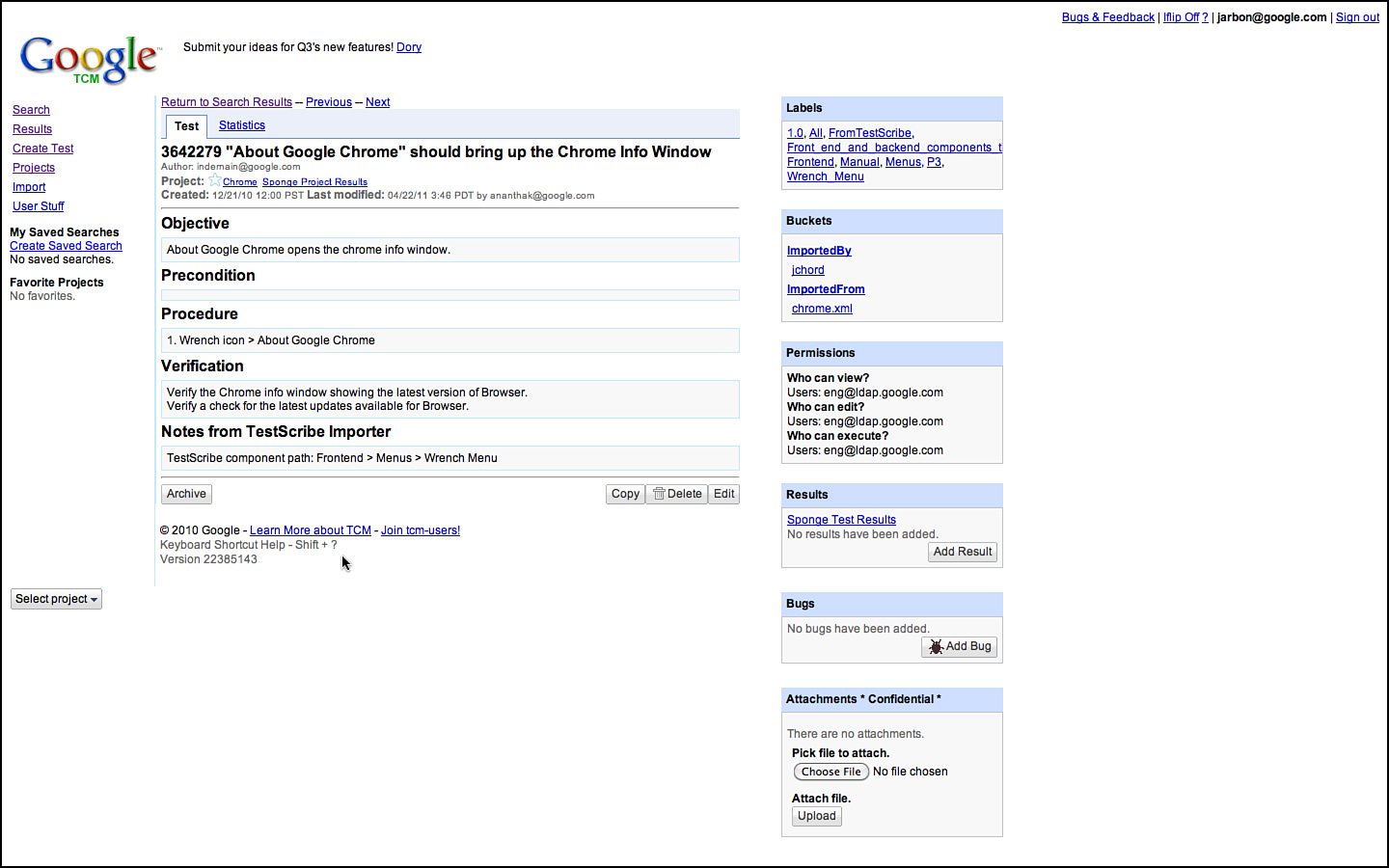

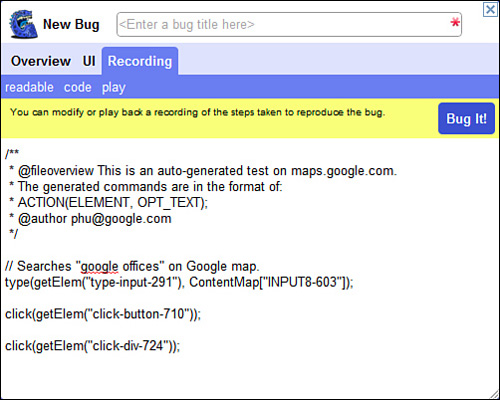

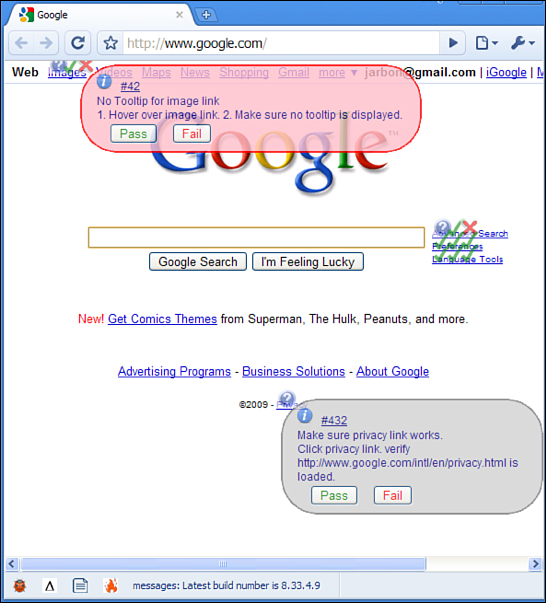

The idea behind GTCM was to make it simple to write tests, provide a flexible tagging format that can be tailored to any project, make the tests easily searchable and reusable, and, most importantly, integrate GTCM with the rest of Google’s infrastructure so that test results could become a first-class citizen. Figures 3.10 through 3.14 show various screenshots of GTCM. Figure 3.12 shows the page for creating test cases. Test cases can have arbitrary sections or labels. This allows GTCM to support everything from classic test and validation steps, to exploratory tours, cukes,7 and user story descriptions, and some test teams even store code or data snippets in the GTCM test case itself. GTCM had to support a wide variety of test teams and their varied test case representations.

Figure 3.10 The GTCM home page is focused on the search experience.

Figure 3.11 Creating a project in GTCM.

Figure 3.12 Creating a test in GTCM.

Figure 3.13 View of test cases when searching for Chrome in GTCM.

Figure 3.14 Simple test case for Chrome’s About dialog.
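To make the idea of arbitrary sections and labels concrete, here is a minimal sketch of a test case record with free-form labels and named sections, plus a search that filters on both. It is a hypothetical illustration of the concept, not GTCM's schema or API; `CaseRecord`, `search`, and the sample data are invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class CaseRecord:
    """Hypothetical test case with free-form labels and named sections."""
    title: str
    labels: set = field(default_factory=set)
    # Arbitrary named sections, for example "steps", "tour", or "data".
    sections: dict = field(default_factory=dict)


def search(cases, text="", labels=()):
    """Return cases whose title contains text and that carry all labels."""
    wanted = set(labels)
    return [
        c for c in cases
        if text.lower() in c.title.lower() and wanted <= c.labels
    ]


cases = [
    CaseRecord(
        "Chrome About dialog shows version",
        labels={"chrome", "manual"},
        sections={"steps": "Open the About dialog and check the version."},
    ),
    CaseRecord(
        "Chrome sync exploratory tour",
        labels={"chrome", "exploratory"},
        sections={"tour": "Explore bookmark sync across two clients."},
    ),
]
```

Selecting the tests for a given test pass then amounts to a label query such as `search(cases, labels=["manual"])`.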

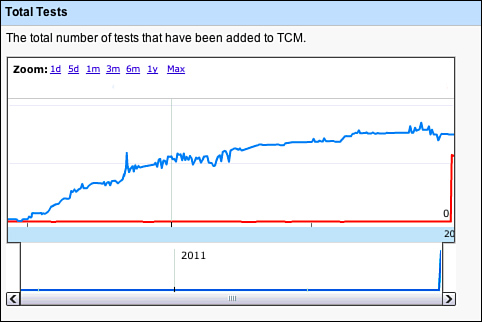

The metrics around GTCM give a feel for what testers are doing with test cases in aggregate. The total number of tests and the test result trends are shown in Figures 3.15 and 3.16. The total number of tests is reaching an asymptote. A basic analysis suggests two reasons: Google is deprecating older, more manual-regression-focused projects along with their tests, and GTCM largely holds manual tests, which many teams are replacing with automation, external crowd sourcing, and exploratory testing. This puts downward pressure on total test case counts in our internal TCM, even while coverage is going up. The number of test results logged is still increasing because these numbers are dominated by several large and necessarily manually focused teams such as Android.

Figure 3.15 Test case counts over time in GTCM.

The total number of manual test results logged is increasing, as one would generally expect (see Figure 3.16).

Figure 3.16 Test result counts over time in GTCM.

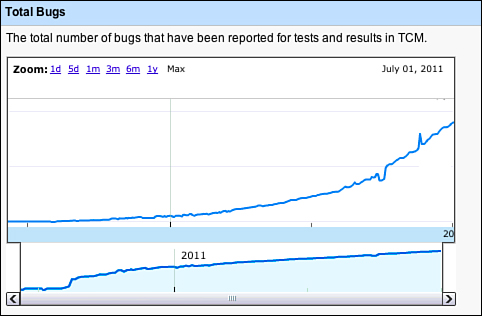

The plot of the number of bugs associated with GTCM in Figure 3.17 is interesting, but it doesn’t tell the whole story. Google is bottom-up, so some teams are particular about tracking which bugs came from which test cases; others are much looser with their data, as they don’t find that information too valuable for the project. Also, some of these logged bugs are filed via automation; not all of these are from manual test execution.

Figure 3.17 Total number of bugs logged during GTCM test execution over time.

A primary requirement of GTCM from day one was to have a clean and simple API. Test Scribe technically had an API, but it was SOAP-like, and the authentication scheme was so painful that few people used it. As security tightened internally, the original authentication mode became too awkward to use at all. To resolve these issues, GTCM now has a RESTful JSON API.

The team intends to open GTCM up for general external usage soon. We also hope to open source this test case database for the world to collectively maintain. GTCM was also designed with external reuse in mind. It is built on Google App Engine for scalability and also to allow other testers outside of Google to host their own instance if they prefer. GTCM’s internals are also designed to abstract much of the logic and UI from Google App Engine, so it can be ported to other stacks if people prefer. Watch the Google Testing Blog for any updates on these efforts.

Life of a Bug

Bugs and bug reports are the one artifact every tester understands. Finding bugs, triaging bugs, fixing bugs, and regressing bugs are the heartbeat and workflow for software quality. This is the part of testing that is the most conventional at Google, but there are still a few interesting deviations from the norm. For this section, we ignore the bugs that are filed to track work items and use the term to identify actual broken code. As such, bugs often represent the hour-to-hour and day-to-day workflow for engineering teams.

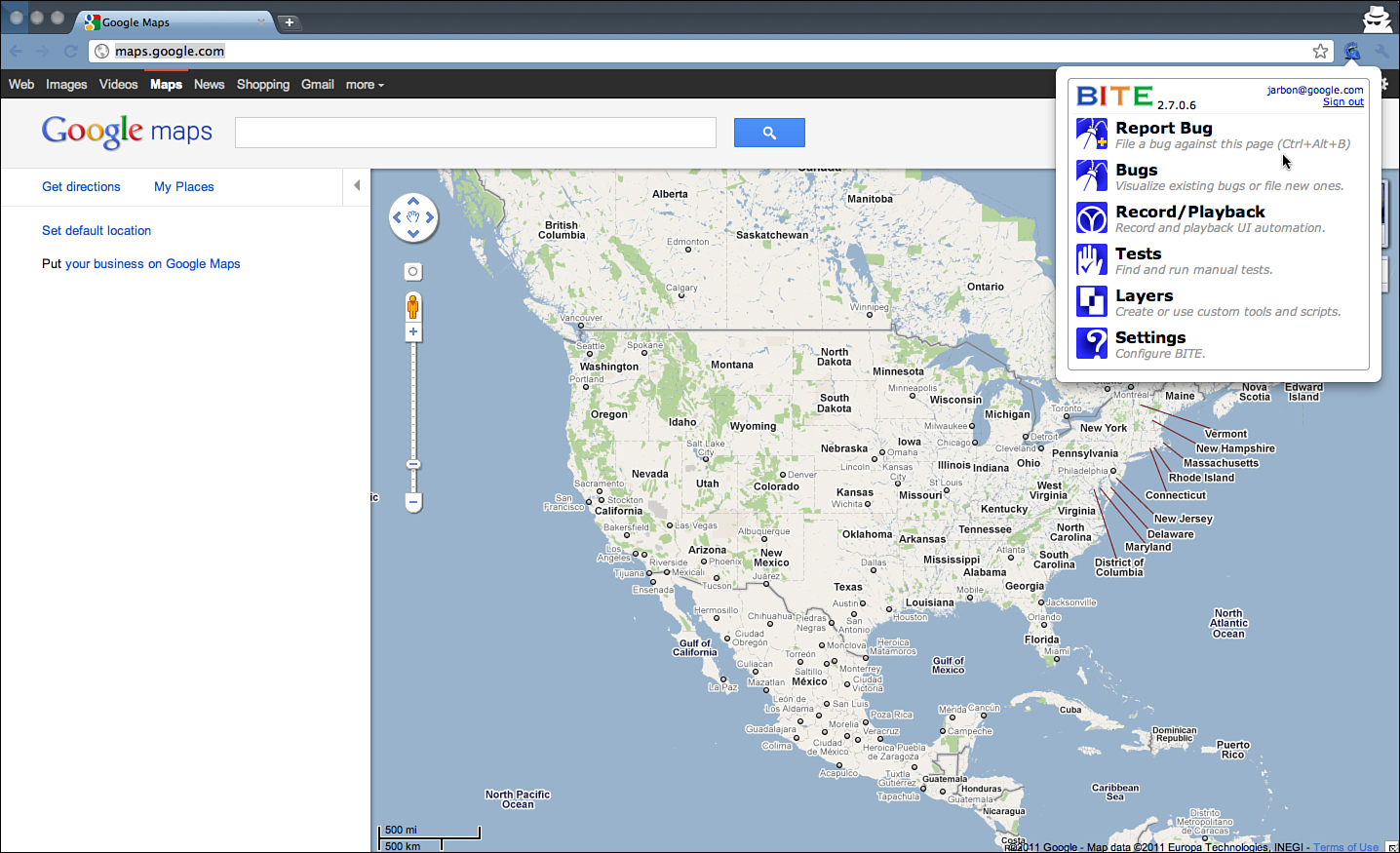

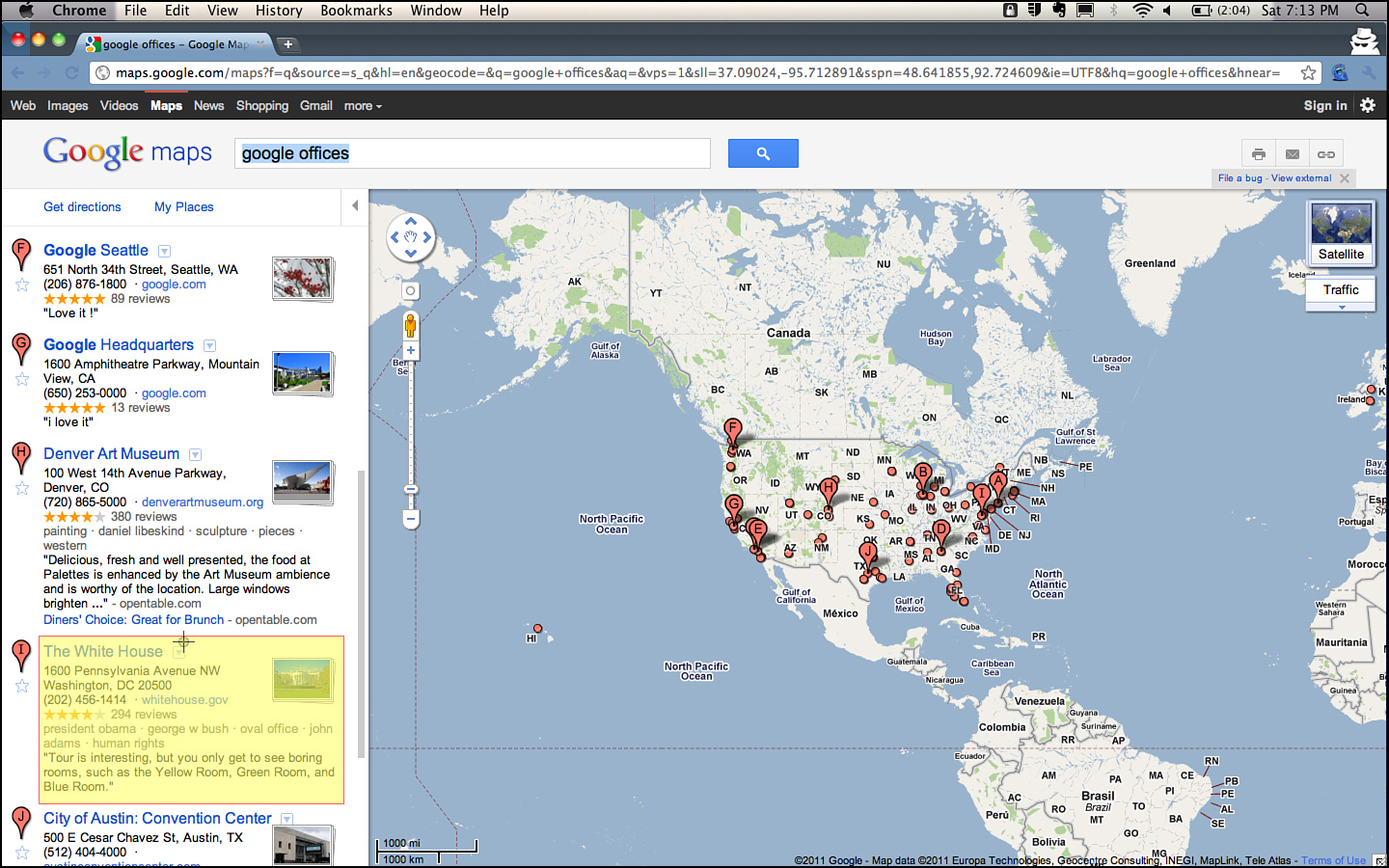

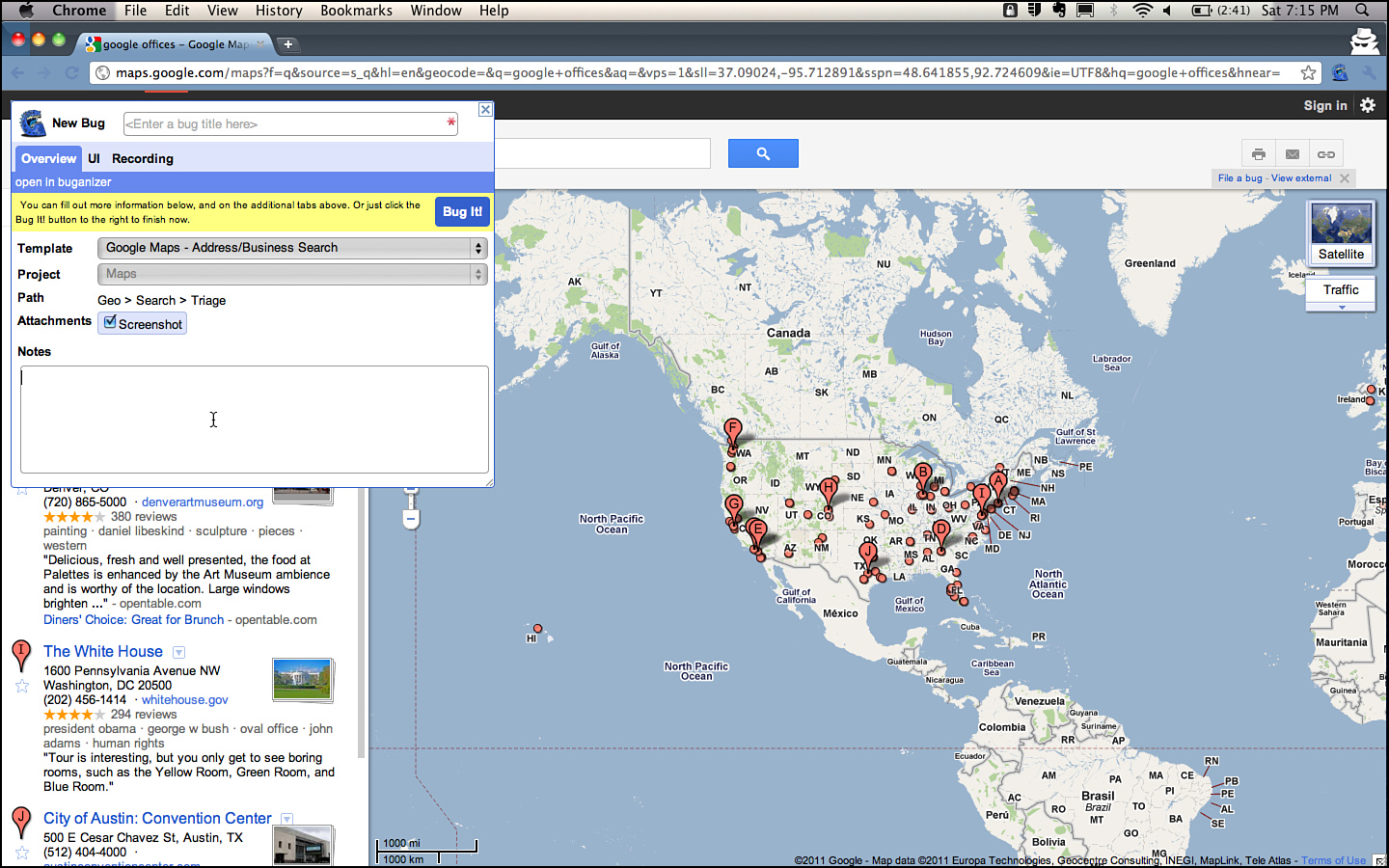

A bug is born. Bugs are found and filed by everyone at Google. Product Managers file bugs when they catch issues in the early builds that differ from their specifications or thoughts. Developers file bugs when they realize they accidentally checked in an issue, or find an issue somewhere else in the codebase, or while dogfooding Google products. Bugs also come in from the field, from crowd-sourced testers, and from external vendor testing, and they are filed by Community Managers monitoring the product-specific Google Groups. Many internal versions of apps, such as Google Maps, also have quick one-click ways to file bugs. And, sometimes, software programs create bugs via an API.

Because the tracking and workflow around a bug is such a large part of what engineers do, a great deal of effort has been expended to automate this process. Google’s first bug database was called BugsDB. It was nothing more than a few database tables to store information and a set of queries to retrieve bugs and compute statistics about them. BugsDB was used until 2005 when a couple of enterprising engineers, Ted Mao8 and Ravi Gampala, created “Buganizer.”

The key motivations for Buganizer were:

• More flexible n-level component hierarchy to replace the simple Project > Component > Version hierarchy used in BugsDB (and all the other commercial bug databases at that time)

• Better accountability for bug tracking and a new workflow around triage and maintenance

• Easier tracking of a group of bugs with the capability to create and manage hotlists

• Improved security with login authentication

• The capability to create summary charts and reports

• Full text search and change history

• Default settings for bugs

• Improved usability and a more intuitive UI
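Two of these motivations, the n-level component hierarchy and hotlists, can be illustrated with a small sketch. It models a component as a path of any depth and a hotlist as a named set of bug IDs that cuts across components. This is an assumption-laden illustration, not Buganizer's data model; every name and value in it is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bug:
    bug_id: int
    component: tuple  # path of any depth, e.g. ("Chrome", "UI", "Tabs")
    priority: int     # 0 is the most urgent


bugs = [
    Bug(101, ("Chrome", "UI", "Tabs"), 1),
    Bug(102, ("Chrome", "Sync"), 2),
    Bug(103, ("Sites", "Page View"), 0),
]


def under(all_bugs, prefix):
    """Bugs filed at or below a node in the component tree."""
    prefix = tuple(prefix)
    return [b for b in all_bugs if b.component[:len(prefix)] == prefix]


# A hotlist groups bugs regardless of where they sit in the hierarchy.
hotlists = {"release-blockers": {101, 103}}


def on_hotlist(all_bugs, name):
    """Bugs whose IDs appear on the named hotlist."""
    return [b for b in all_bugs if b.bug_id in hotlists[name]]
```

A fixed Project > Component > Version scheme cannot express a path like `("Chrome", "UI", "Tabs")` without flattening it, which is the limitation the n-level hierarchy removes.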

Some Buganizer Trivia and Metrics

The oldest bug filed and still in existence: May 18, 2001, 15:33. The title is Test Bug. The body of the documentation is “First Bug!” Interestingly, this bug is often used accidentally when developers are asked to provide a bug that their CL fixes.

The oldest active bug was filed in March of 1999. This bug suggests a performance investigation to reduce latency for serving ads based on geographic region. The last activity was in 2009. The last edit said that this could be investigated, but that it would require architectural work, and the latency metrics are just fine.

Here are some bug charts of overall bug activity at Google. Some of these bugs are filed automatically and some manually; the charts show aggregate information. Automation dominates some of these bug trends, and we don’t highlight any single team here, but it is interesting nonetheless.

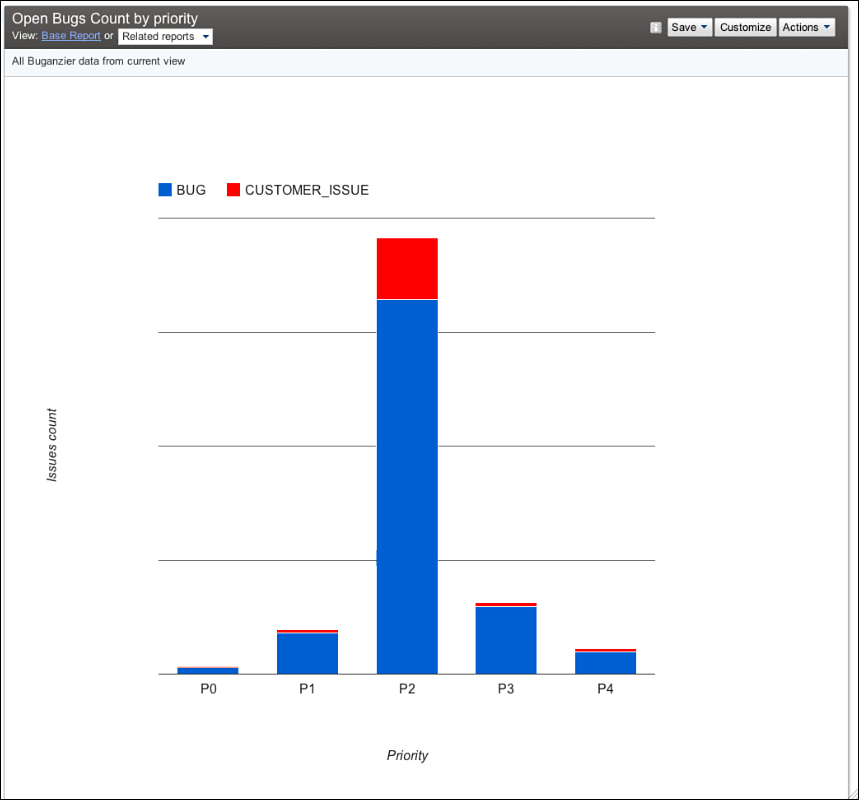

As you can see in Figure 3.18, there are many P2⁹ bugs, far fewer P1, and even fewer P0 bugs. Correlation is not causation, but it can be a sign that the engineering methodology described in this book works. It might also be that no one bothers to file P1 issues, but that isn’t what we see anecdotally. P3 and P4 bugs are often not filed as they are rarely looked at.

Figure 3.18 Number of bugs in Buganizer by priority.

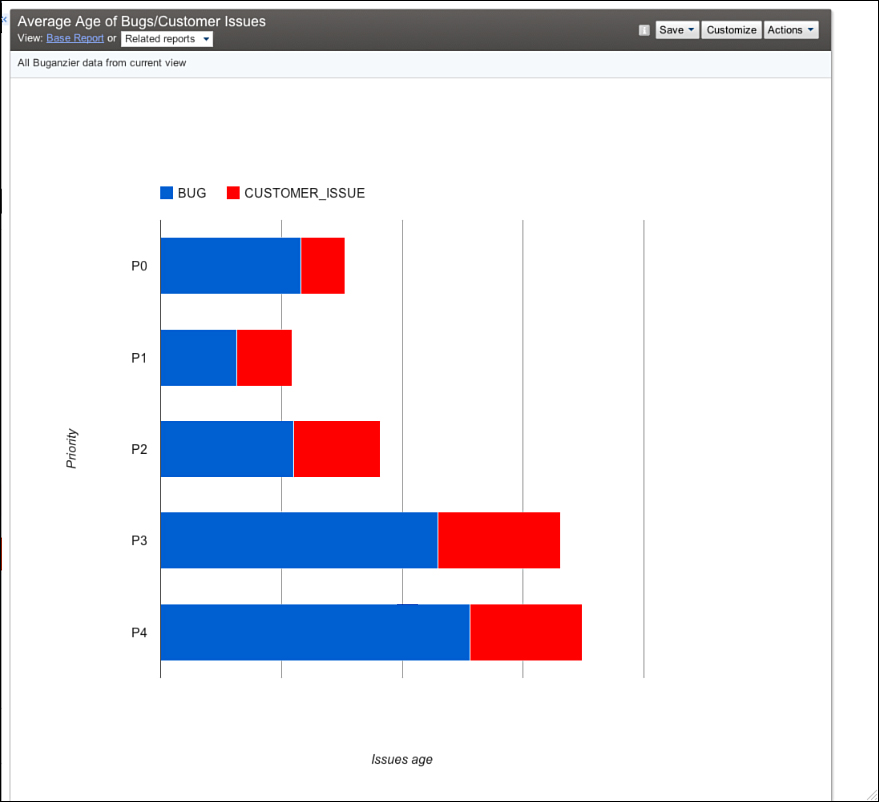

The average age of bugs is also generally what would be expected (see Figure 3.19). The anomaly seems to be with P0 bugs. In practice, though, P0 bugs are often more difficult to fix as they represent severe design or deployment issues, which are often complex to debug and resolve. The remaining bugs take longer to fix, on average, as their priority number increases, because they are less important.

Figure 3.19 Average age of bugs in Buganizer.

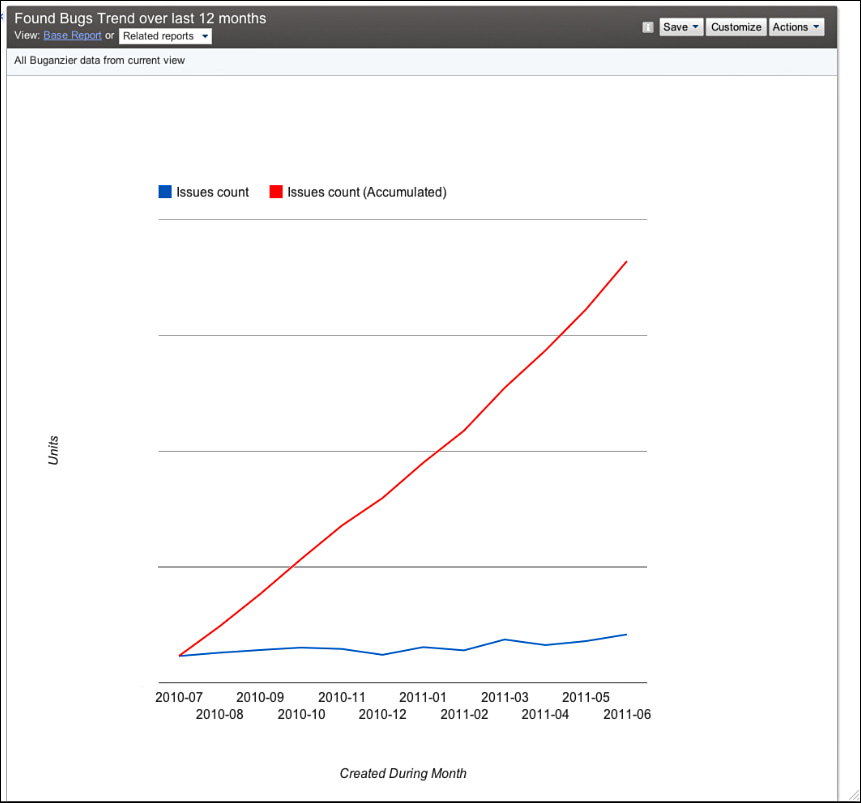

The chart for the number of bugs found over time in Figure 3.20 shows a slight uptick in bugs found each month. We don’t systematically understand why this is increasing. The obvious explanation is that we have more code and more coders over time, but the bug rate increases at a rate lower than the increase in number of testers and developers. Perhaps our code is getting better thanks to quality controls, or perhaps we aren’t finding as many of the bugs.

Figure 3.20 Trend of bugs found.

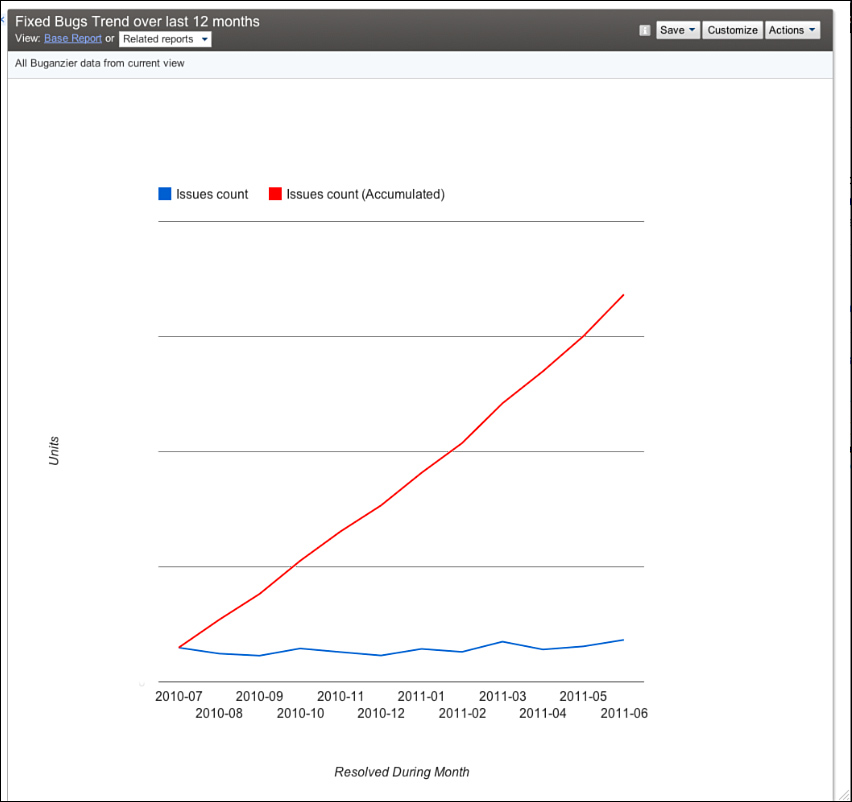

Our fix rate in Figure 3.21 shows that teams generally have a handle on the bug rate. Many teams simply stop adding features when the incoming bug rate exceeds the team’s ability to fix them. This practice is highly recommended over focusing on feature or code-complete milestones. Focusing on small bits of tested code, testing incrementally, and dogfooding helps keep bug rates in check.

Figure 3.21 Bugs fixed over time. It’s a good thing this looks similar to the trend of bugs found!
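The rule of thumb of pausing feature work when incoming bugs outpace fixes can be sketched in a few lines. This is only an illustration: the function name, the weekly counts, and the four-week window are assumptions, not something Buganizer provides or a threshold any Google team is known to use.

```python
def should_pause_features(filed_per_week, fixed_per_week, window=4):
    """Return True when more bugs were filed than fixed over the last `window` weeks.

    Both arguments are lists of weekly counts, oldest first (hypothetical data).
    """
    recent_filed = sum(filed_per_week[-window:])
    recent_fixed = sum(fixed_per_week[-window:])
    return recent_filed > recent_fixed


# Example: 57 bugs filed versus 47 fixed over four weeks, so the team pauses.
if should_pause_features([10, 12, 15, 20], [11, 11, 12, 13]):
    print("Incoming bugs exceed fixes: hold new features and burn down the backlog.")
```

A team would pick its own window and might weight the counts by priority; the point is only that the decision follows the bug trend rather than a milestone date.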

As Google’s products, such as Chrome and Chrome OS, have moved more into the open, it’s no longer possible to maintain a single bug database. These projects also deal with externally visible bug databases, such as Mozilla’s Bugzilla for WebKit issues and Issue Tracker for chromium.org issues. Googlers are encouraged to file issues against any product in which they find them, including competitors’ products. The primary goal is making the Web as a whole better.

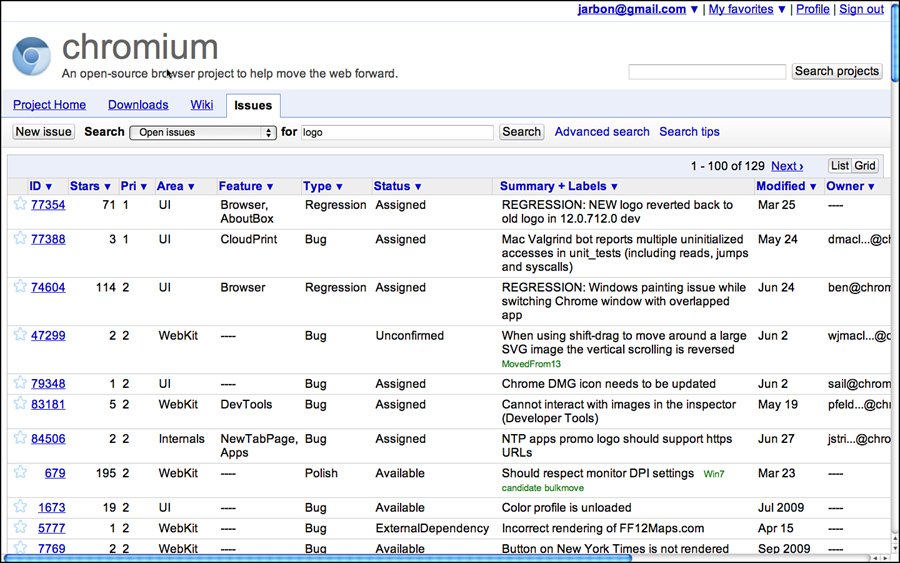

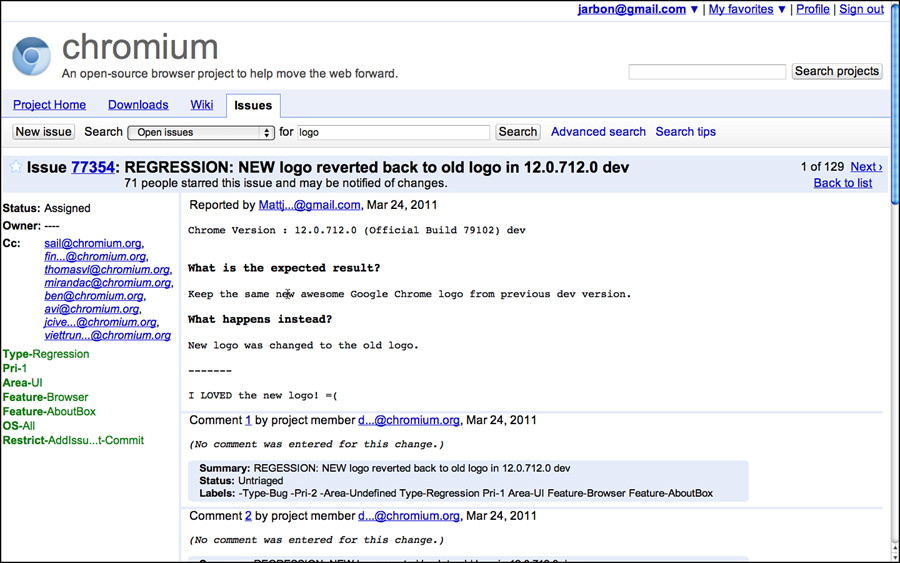

Issue Tracker is the core repository for all Chrome and Chrome OS bugs. Its bug database is public. This means anyone can look at the bug activity, even the press. Security bugs are sometimes hidden between the time they are found and fixed to avoid tipping off hackers, but other than that, it is a public bug database. External users are free to file bugs, and they are a valuable source of bug information. Figures 3.22 and 3.23 show searching and finding a bug related to the Chrome logo in the About box.

Figure 3.22 Issue tracker search.

Figure 3.23 Open issue in Chromium Issue Tracker.

However, the longest-lived and most widely used piece of testing infrastructure at Google is Buganizer and it deserves further discussion. For the most part, it is a typical bug database, but it also supports the core quality cycle of tracking problems in our software, from discovery to resolution, and in building regression test cases. Buganizer is also built on top of Google’s latest core storage technology for scale and speed.

Bugs are filed with a subset of the following fields. Very few of the fields are required, and their definitions are deliberately loose so that individual teams can decide how to manage the information to fit their own workflow.

• Assigned to (Assignee)

[Optional] LDAP name of one person who should take the next step in handling this issue. This person is automatically emailed when the issue is created and whenever anyone changes the value of any field in the issue. Buganizer administrators specify a default assignee for every component.

• CC

[Optional] Zero or more LDAP names of people to email when an issue is created or modified. Names are LDAP or mailing list names only, no @google, so only Google mailing lists or employees are valid entries. This is a comma-delimited list. Do not include the “Assigned to” name when filing an issue, because this person is emailed by default.

• Attachments

[Optional] Zero or more attachments to the bug. Any file type is accepted. There is no limit to the number of attachments for an issue, but the maximum total size of each attachment is 100MB.

• Blocking

[Optional] The IDs of bugs that this bug prevents from being resolved. This is a comma-delimited list. Updating this list will automatically update the Depends On field in the listed bugs.

• Depends On

[Optional] The IDs of bugs that must be fixed before this bug can be fixed. Updating this list will automatically update the Blocking field of all listed bugs. This is a comma-delimited list.

• Changed

[Read-only] The date and time when any value in the issue was last changed.

• Changelists

[Optional] Zero or more change list (CL) numbers for CLs dealing with this issue in some way. Only specify CLs that have been submitted; do not specify pending CLs.

• Component

[Required] The thing that has the bug or feature request, if it is known. When filing an issue, this should be a complete path to the component. A path of any length is now supported. When filing an issue, you do not need to assign the issue to a leaf component (that is, one without children).

Additional components can be created only by project and engineering managers.

• Created

[Read-only] The date when the bug was created.

• Found In

[Optional] Use for versioning by entering the number of the software version where you found the issue, such as 1.1.

• Last modified

[Read-only] The date that any field in the issue was last modified.

• Notes

[Optional] Detailed description of the problem and running comments as the issue is handled over time. When filing the issue, describe the steps required to reproduce a bug or how to get to a screen involving a feature request. The more information you put here, the less likely future issue handlers will need to contact you. You cannot edit previous entries in the Notes field, not even if you added them; you can add only new values to the Notes field.

• Priority

[Required] The importance of a bug, where P0 is the highest priority. This indicates how soon it should be fixed and how many resources should be allocated to fix it. For example, misspelling “Google” in the search page logo would be low severity (the page function would not be affected), but high priority (it would be a Very Bad Thing). Setting both fields lets the bug fix team allocate its time more wisely. See also the Severity description.

• Reported by (Reporter)

[Read-only] The Google login of the person who originally reported the bug. The default value is assigned to the person creating the bug, but this can be modified to give credit where credit is due.

• Resolution

[Optional, entered by Buganizer] The final action chosen by the verifier. Values include Not feasible, Works as intended, Not repeatable, Obsolete, Duplicate, and Fixed.

• Severity

[Required] How much the bug affects the use of the product, where S0 is the most severe. Setting both priority and severity can help prioritize the importance of this bug to bug fixers. For example, misspelling “Google” in the search page logo would be low severity (the page function would not be affected), but high priority (it would be a Very Bad Thing). Setting both fields lets the bug fix team allocate its time more wisely. Severity values have the following text equivalents:

— s0 = System unusable

— s1 = High

— s2 = Medium

— s3 = Low

— s4 = No effect on system

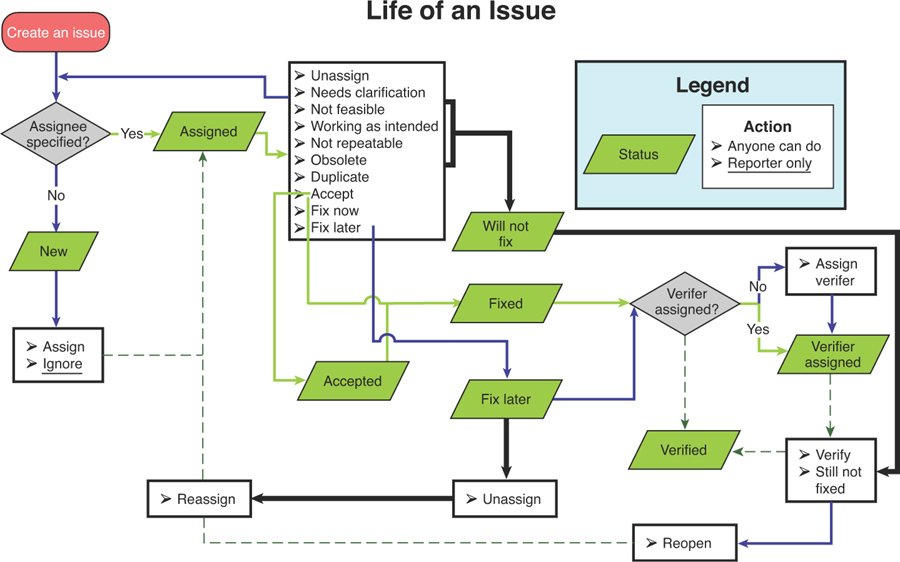

• Status

[Required] The current state of the bug. See Figure 3.24 (the life of an issue) for details on how these values are set. Available statuses include the following:

— New: The issue has just been created and not assigned yet.

— Assigned: An assignee has been specified.

— Accepted: The assignee has accepted the issue.

— Fix later: The assignee has decided that the issue will be fixed in the future.

— Will not fix: The assignee has decided that the issue will not be fixed for some reason.

— Fixed: The issue has been fixed, but the fix has not been verified.

— Verifier assigned: A verifier has been assigned to the issue.

— Verified: The fix has been verified by the verifier.

• Summary

[Required] A descriptive summary of this issue. Be sure to make this as descriptive as possible; when scrolling through a list of issues in a search result, this is what helps the user decide whether or not to examine the issue further.

• Targeted To

[Optional] Use for versioning by entering the number of the software version in which the issue should be fixed, such as 1.2.

• Type

[Required] What type of issue:

— Bug: Something that causes the program not to work as expected

— Feature request: Something you would like to see added to the program

— Customer issue: A training issue or general discussion

— Internal cleanup: Something requiring maintenance

— Process: Something tracked automatically via the API

• Verified In

[Optional] Use for versioning by entering the number of the software version where the issue fix was verified, such as 1.2.

• Verifier

[Required before issue can be resolved] Each issue is assigned one person who has the right to mark the issue as resolved. This person need not be assigned until the issue is ready to be resolved, but the verifier is the only one who can change the status to “Verified” (the issue is closed). The verifier can be the same person as the assignee.
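The Blocking and Depends On fields described above mirror each other: editing one side updates the other. A minimal sketch of that bookkeeping follows. The `Issue` and `Tracker` classes and their method names are hypothetical and pared down for illustration; they are not Buganizer’s actual schema or API.

```python
from dataclasses import dataclass, field


@dataclass
class Issue:
    """Hypothetical, pared-down issue record (not Buganizer's real schema)."""
    issue_id: int
    summary: str
    blocking: set[int] = field(default_factory=set)    # issues this one holds up
    depends_on: set[int] = field(default_factory=set)  # issues that must be fixed first


class Tracker:
    """Toy in-memory tracker that keeps Blocking and Depends On mirrored."""

    def __init__(self) -> None:
        self.issues: dict[int, Issue] = {}

    def file(self, issue_id: int, summary: str) -> Issue:
        issue = Issue(issue_id, summary)
        self.issues[issue_id] = issue
        return issue

    def set_depends_on(self, issue_id: int, new_deps: set[int]) -> None:
        """Replace an issue's Depends On list and update Blocking on the other side."""
        issue = self.issues[issue_id]
        for removed in issue.depends_on - new_deps:
            self.issues[removed].blocking.discard(issue_id)
        for added in new_deps - issue.depends_on:
            self.issues[added].blocking.add(issue_id)
        issue.depends_on = set(new_deps)

    def set_blocking(self, issue_id: int, new_blocked: set[int]) -> None:
        """Replace an issue's Blocking list and update Depends On on the other side."""
        issue = self.issues[issue_id]
        for removed in issue.blocking - new_blocked:
            self.issues[removed].depends_on.discard(issue_id)
        for added in new_blocked - issue.blocking:
            self.issues[added].depends_on.add(issue_id)
        issue.blocking = set(new_blocked)


tracker = Tracker()
tracker.file(1, "Crash on startup")
tracker.file(2, "Release 1.2 checklist")
tracker.set_depends_on(2, {1})
print(tracker.issues[1].blocking)  # {2}
```

Storing the relationship on both records keeps lookups cheap in either direction, at the cost of having to update two places on every edit, which is why the update is automated rather than left to the person filing the bug.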

Figure 3.24 The basic workflow for bugs in a Buganizer world.

Figure 3.24 summarizes the life of an issue.
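The statuses listed earlier can be read as a small state machine. The sketch below uses the status names from the text, but the transition table is an assumption made for illustration; the authoritative workflow is the one in Figure 3.24. The one rule taken directly from the field descriptions is that only the verifier may move an issue to Verified. For brevity the verifier is set when the issue is created, although the text notes it need not be assigned until the issue is ready to be resolved.

```python
from enum import Enum


class Status(Enum):
    NEW = "New"
    ASSIGNED = "Assigned"
    ACCEPTED = "Accepted"
    FIX_LATER = "Fix later"
    WILL_NOT_FIX = "Will not fix"
    FIXED = "Fixed"
    VERIFIER_ASSIGNED = "Verifier assigned"
    VERIFIED = "Verified"


# ASSUMPTION: one plausible set of transitions, not the actual Buganizer rules.
ALLOWED_MOVES = {
    Status.NEW: {Status.ASSIGNED},
    Status.ASSIGNED: {Status.ACCEPTED},
    Status.ACCEPTED: {Status.FIXED, Status.FIX_LATER, Status.WILL_NOT_FIX},
    Status.FIX_LATER: {Status.ACCEPTED},
    Status.WILL_NOT_FIX: {Status.VERIFIER_ASSIGNED},
    Status.FIXED: {Status.VERIFIER_ASSIGNED},
    Status.VERIFIER_ASSIGNED: {Status.VERIFIED, Status.ASSIGNED},
    Status.VERIFIED: set(),
}


class Issue:
    """Hypothetical issue that tracks only its status and its verifier."""

    def __init__(self, verifier: str) -> None:
        self.status = Status.NEW
        self.verifier = verifier

    def move_to(self, new_status: Status, actor: str) -> None:
        if new_status not in ALLOWED_MOVES[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} is not allowed")
        # From the field description: only the verifier can mark an issue Verified.
        if new_status is Status.VERIFIED and actor != self.verifier:
            raise PermissionError("only the verifier may mark an issue Verified")
        self.status = new_status


issue = Issue(verifier="tester")
for step in (Status.ASSIGNED, Status.ACCEPTED, Status.FIXED, Status.VERIFIER_ASSIGNED):
    issue.move_to(step, actor="dev")
issue.move_to(Status.VERIFIED, actor="tester")
print(issue.status.value)  # Verified
```

Because teams are free to interpret the fields their own way, a real deployment would likely let each team adjust a table like `ALLOWED_MOVES` rather than hard-code it.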

A few key differences between bugs at Google and elsewhere:

• For the most part, the bug database is completely open. Any Googler can see any bug in any project.

• Everyone files bugs, including engineering directors and Senior Vice Presidents (SVPs). Googlers file bugs against products they use even if they are not part of that product team. Groups of testers often “dog pile” on a product just to be helpful.

• There are no formal top-down bug triage processes. How bugs are triaged10 varies widely from team to team. Sometimes it’s an individual task or a TE and SWE do it together informally at one or the other’s desk. Sometimes triage is part of a weekly or daily standup meeting. The team figures out who should be there and what works best. There are no formal methods or dashboards for triaging or big brothers checking in on a team. Google leaves this up to the individual teams to decide.

Google projects are often in one of two states: new, rapid development swamped with a continual stream of issues, or established infrastructure that is released incrementally with so much upfront unit testing and fault tolerance that bugs are uncommon and kept to a small, manageable number.

The dream of a unified dashboard has haunted Googlers, much as it has people at many other companies. Every year or so, a new effort tries to take root to build a centralized bug or project dashboard for all projects at Google. It makes sense, even if confined only to Buganizer data. But because each group has different ideas of which metrics matter for shipping and for the day-to-day health of its projects, these efforts have consistently failed to get far off the ground. The idea may be useful at more uniform companies, but at Google, well-intentioned folks keep ending up frustrated by the variety of projects and engineering practices.

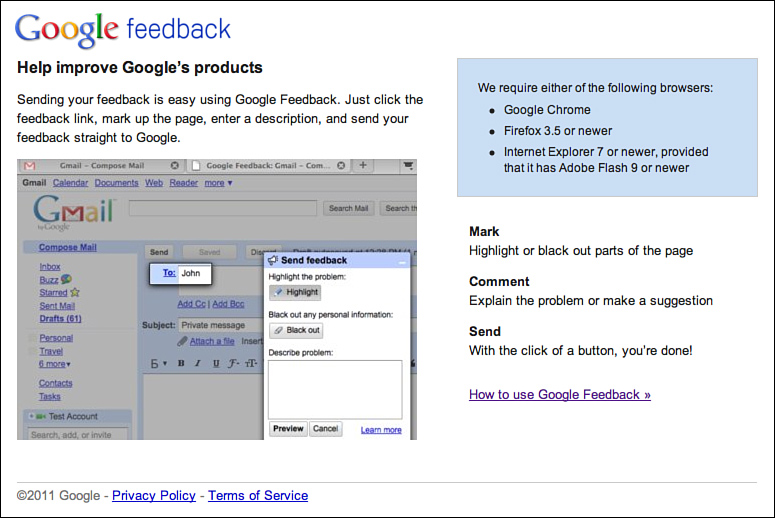

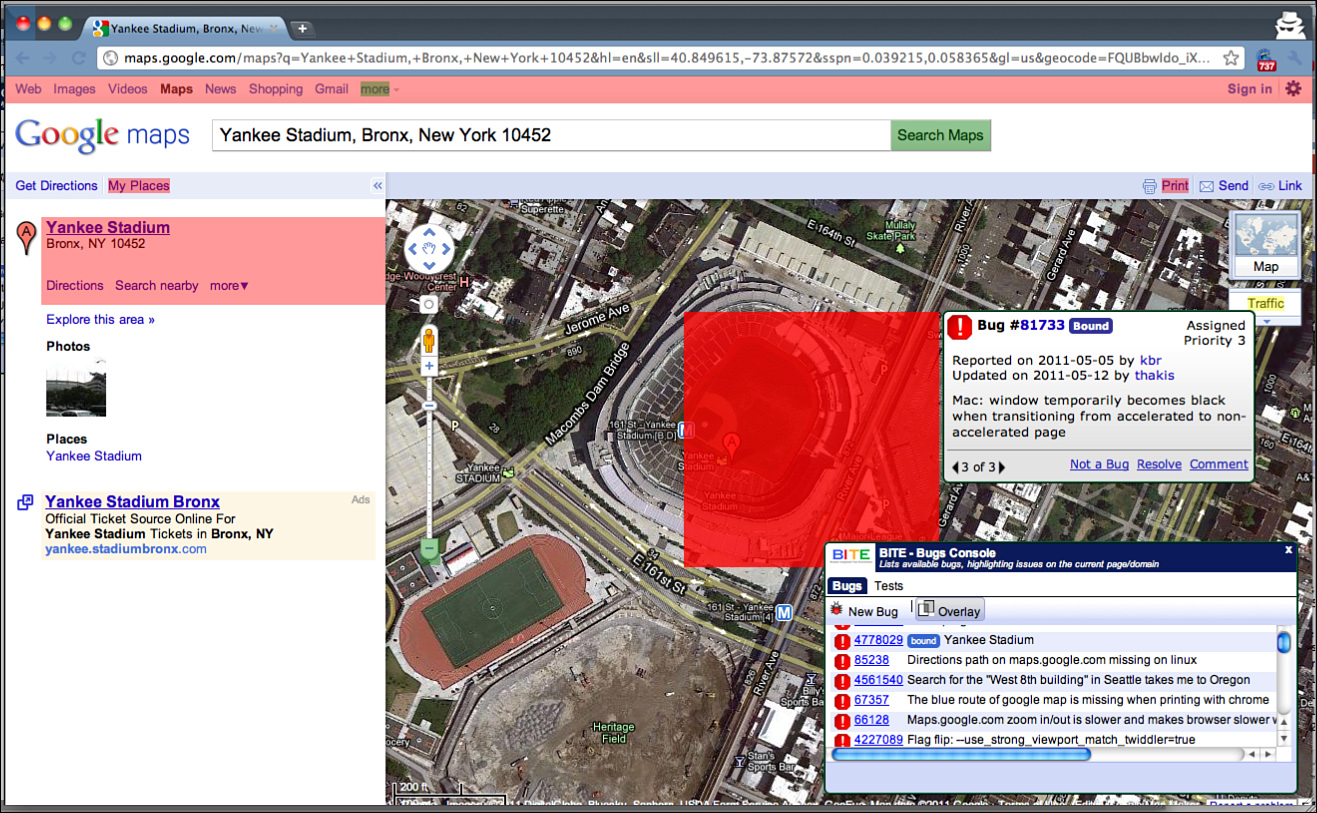

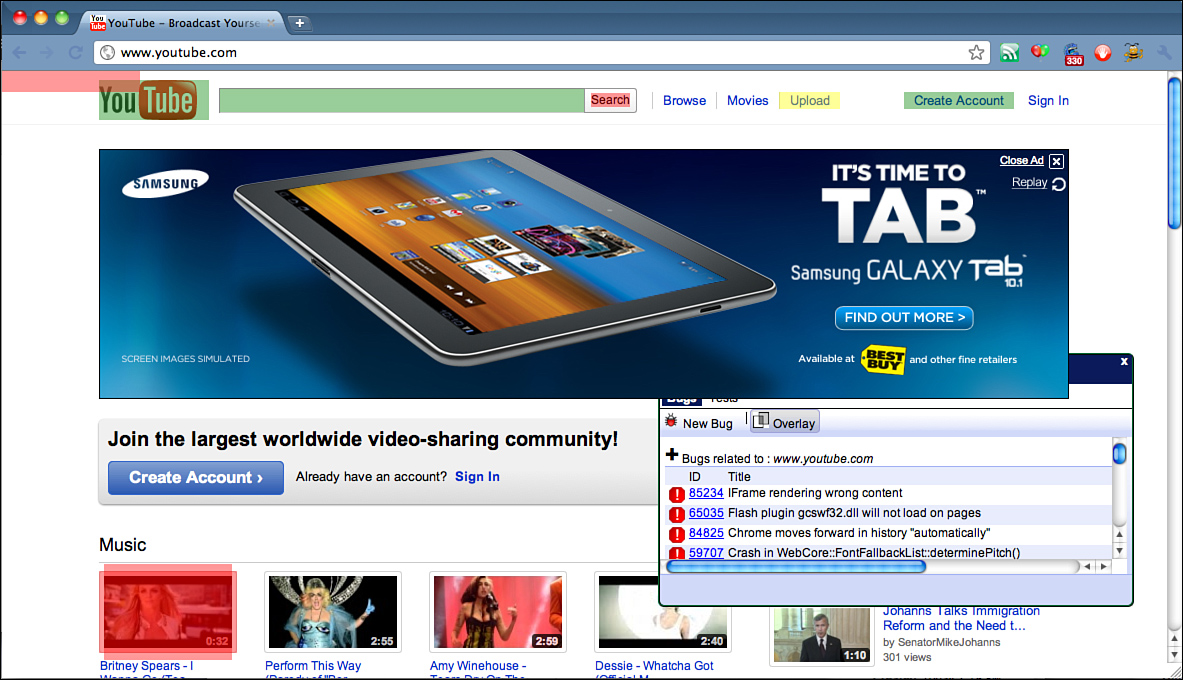

A Google-scale approach to filing bugs was started by the Google Feedback team11 (http://www.google.com/tools/feedback/intl/en/index.html). Google Feedback enables end users to file bugs against select Google services. The general idea is that external users don’t have full context on what bugs have already been filed, or even already fixed, and we want to make it quick and easy to get feedback from users. So, Google testers and software engineers have made it point-and-click simple for users to file a bug. The engineers on this team have gone to great lengths to ensure that users can intuitively omit parts of the page that might contain private data when filing bugs back to Google (see Figure 3.25).

Figure 3.25 Google Feedback shown with privacy filtering.

The Google Feedback team has done some very cool work to avoid blindly dropping these bugs from the wild into our bug databases, as duplicate issues can overwhelm the bug triage process. They use clustering algorithms to automatically de-dupe and identify the top issues coming in. Google Feedback has a helpful dashboard showing this processed data of user-reported issues to the product team for analysis. It is not unusual for many tens of thousands of pieces of feedback to pour in the week after a major application launch and then boil down to just 10 or so major and common issues. This saves a lot of engineering time and makes it possible for Google to actually listen to end users without having to process each individual piece of feedback, which would be prohibitive. The team is beta testing this with a small set of Google properties, but aims to provide this for all Google properties in the future.
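To give a feel for how clustering can collapse a flood of reports into a handful of issues, here is a deliberately simple sketch. It is not the Google Feedback team’s algorithm, which the text does not describe; it swaps in a greedy, single-pass grouping based on Jaccard similarity of word sets, and the function names and the 0.5 threshold are assumptions chosen for illustration.

```python
import re


def tokens(text: str) -> frozenset[str]:
    """Lowercase a report and reduce it to its set of alphanumeric words."""
    return frozenset(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Size of the intersection divided by size of the union (1.0 if both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_feedback(reports: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedily group reports whose words overlap with a cluster's first report."""
    clusters: list[tuple[frozenset[str], list[str]]] = []
    for report in reports:
        words = tokens(report)
        for representative, members in clusters:
            if jaccard(words, representative) >= threshold:
                members.append(report)
                break
        else:
            # No existing cluster is similar enough, so start a new one.
            clusters.append((words, [report]))
    # Largest clusters first, so the most common issues surface at the top.
    return sorted((members for _, members in clusters), key=len, reverse=True)


reports = [
    "Video will not play on Chrome",
    "video will not play in chrome",
    "Cannot upload profile photo",
    "Video will not play on Chrome!!",
]
for group in cluster_feedback(reports):
    print(len(group), group[0])
```

A production system would need something far more robust (stemming, multiple languages, screenshots, scale), but even this toy version shows why a dashboard of clusters is easier to triage than a raw stream of reports.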

Recruiting TEs

Google takes hiring engineers seriously. In general, our engineers have computer science or related degrees from accredited universities. However, few schools systematically teach software testing. This makes hiring good testers a challenge for any company, because the right mix of coding and testing skills is truly rare.

The TE role is particularly difficult to hire for because the best TEs aren’t raw algorithm, theorem-proving, function-implementing people. Google TEs break the mold of the SWE/SET that we have traditionally built our recruiting and interviewing process around. To be honest, we haven’t always gotten it right. Indeed, we owe apologies to all the testers caught up in our early attempts to perfect our TE interview process. TEs are rare individuals. They are technical, care about the user, and understand the product from a system and end-to-end perspective. They are relentless, great negotiators, and most importantly, they are creative and able to deal with ambiguity. It’s small wonder that Google, or any company for that matter, struggles to hire them.