Appendix C. Blog Posts on Tools and Code

This appendix presents some of the blog posts from the Google Testing Blog.

Take a BITE out of Bugs and Redundant Labor

Wednesday, October 12, 2011 9:21 AM

http://googletesting.blogspot.com/2011/10/take-bite-out-of-bugs-and-redundant.html

By Joe Allan Muharsky

In a time when more and more of the Web is becoming streamlined, the process of filing bugs for websites remains tedious and manual. Find an issue. Switch to your bug system window. Fill out boilerplate descriptions of the problem. Switch back to the browser, take a screenshot, attach it to the issue. Type some more descriptions. The whole process is one of context switching; from the tools used to file the bug, to gather information about it, to highlight problematic areas, most of your focus as the tester is pulled away from the very application you’re trying to test.

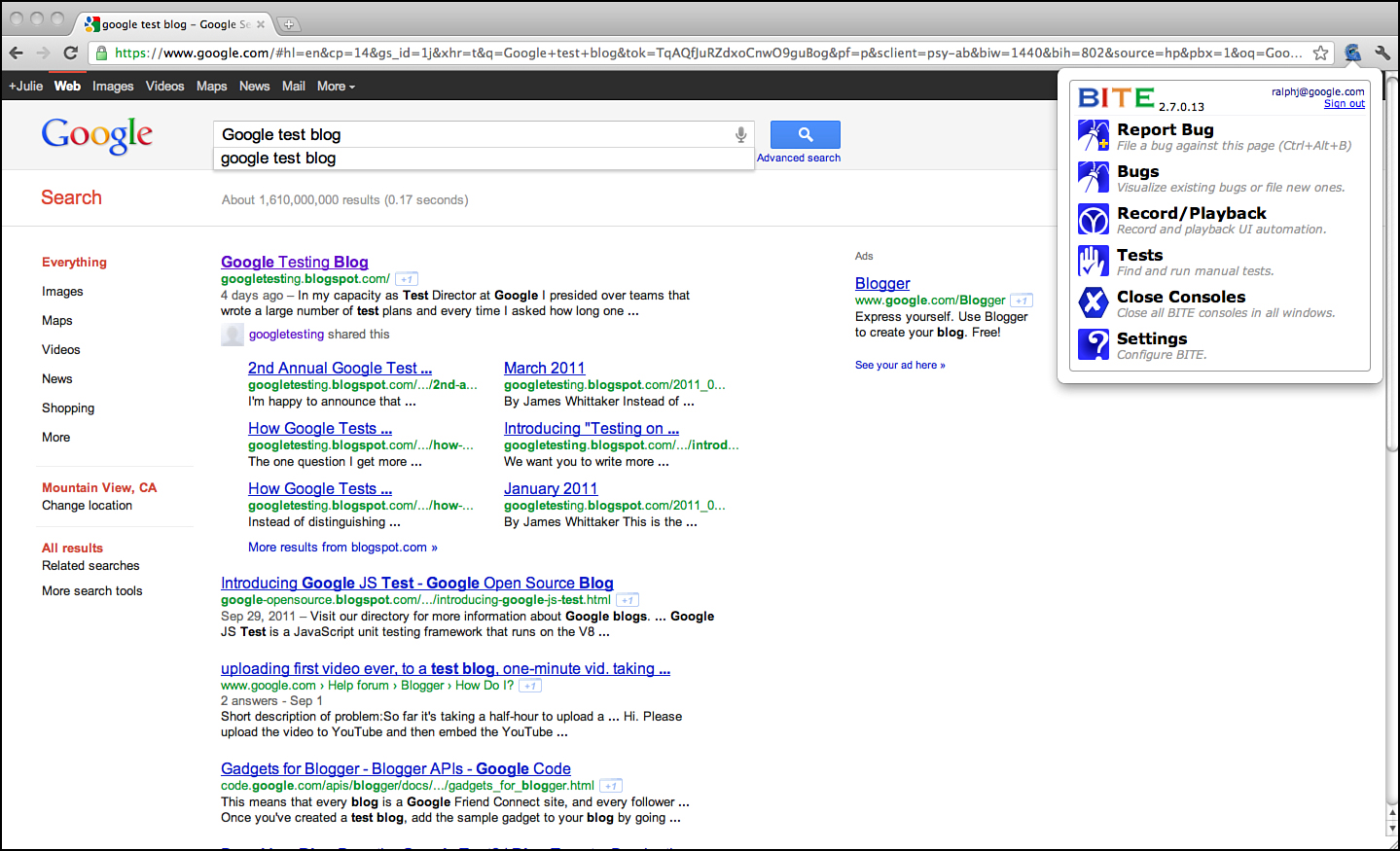

The Browser Integrated Testing Environment, or BITE, is an open source Chrome extension (http://code.google.com/chrome/extensions/index.html) that aims to fix the manual web-testing experience (see Figure C.1). To use the extension, it must be linked to a server providing information about bugs and tests in your system. BITE then provides the capability to file bugs from the context of a website, using relevant templates.

Figure C.1 BITE extension menu added to the Chrome Browser.

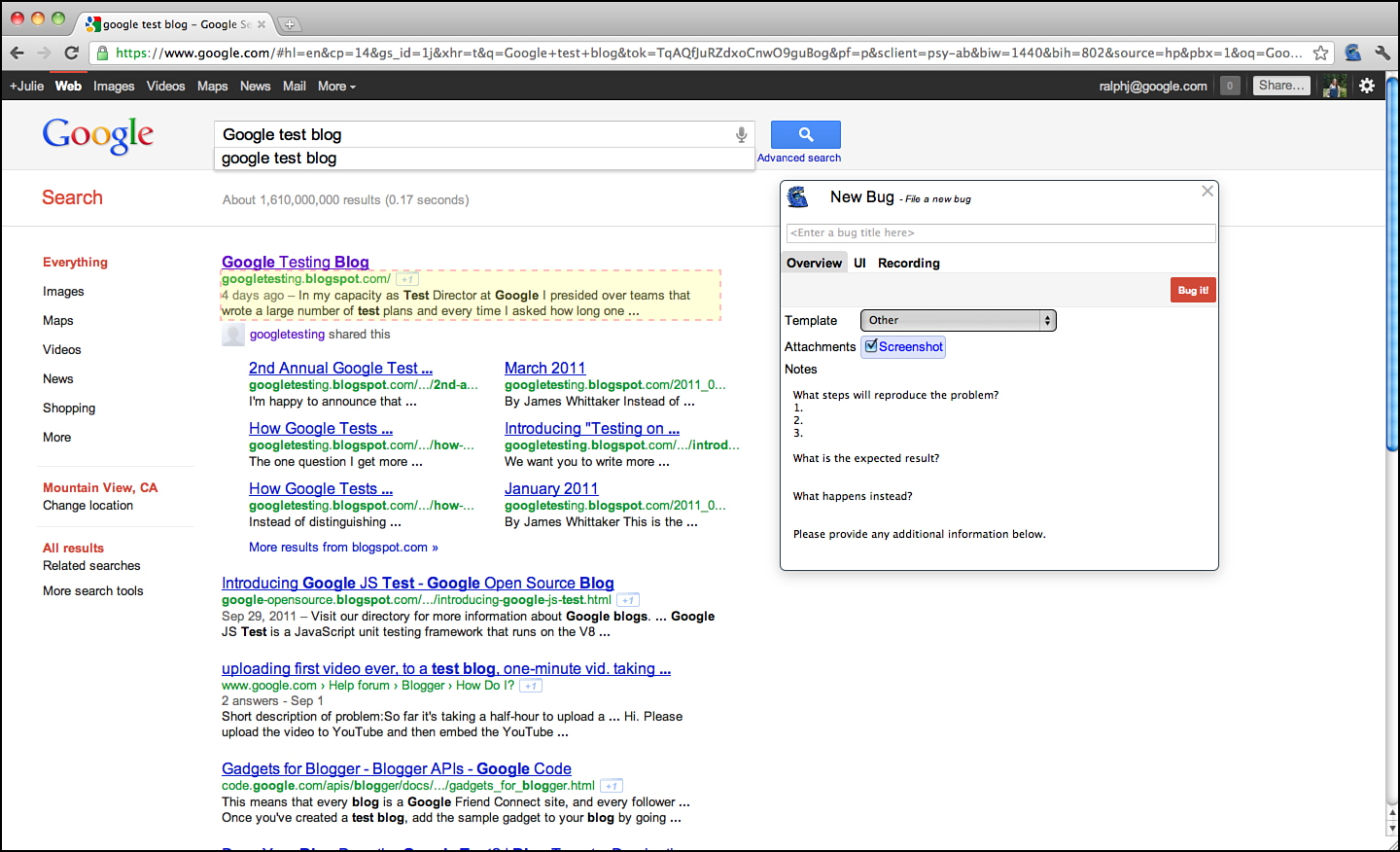

When filing a bug, BITE automatically grabs screenshots, links, and problematic UI elements, and it attaches them to the bug (see Figure C.2). This gives developers charged with investigating and/or fixing the bug a wealth of information to help them determine root causes and factors in the behavior.

Figure C.2 Bug Filing interface for BITE extension.

When it comes to reproducing a bug, testers will often labor to remember and accurately record the exact steps taken. With BITE, however, every action the tester takes on the page is recorded in JavaScript and can be played back later. This enables engineers to quickly determine whether the steps of a bug reproduce in a specific environment or whether a code change has resolved the issue.

Also included in BITE is a Record/Playback Framework (RPF) console to automate user actions in a manual test. Like the BITE recording experience, the RPF console will automatically author JavaScript that can be used to replay your actions at a later date. And BITE’s record and playback mechanism is fault-tolerant; UI automation tests will fail from time to time, and when they do, it tends to be for test issues rather than product issues. To that end, when a BITE playback fails, the tester can fix the recording in real time just by repeating the action on the page. There’s no need to touch code or report a failing test; if your script can’t find a button to click on, just click on it again, and the script will be fixed! For those times when you do have to touch the code, we’ve used Ace (http://ace.ajax.org/) as an inline editor, so you can make changes to your JavaScript in real time.
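The repair-on-failure idea can be sketched in a few lines. This is a minimal illustration, not BITE's actual code: the `Step` record, the dictionary standing in for the page, and the `ask_tester` callback are all hypothetical names, and Python is used only for brevity (BITE itself generates JavaScript).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    action: str    # e.g. "click"
    selector: str  # how the recorded element is located (hypothetical format)


# Stand-in for the live page: maps a selector to a description of the element.
Page = Dict[str, str]


def play_back(steps: List[Step], page: Page,
              ask_tester: Callable[[Step], Optional[str]]) -> List[str]:
    """Replay steps; if a target is missing, let the tester point at it again
    and update the recorded step in place instead of failing the test."""
    log = []
    for step in steps:
        if step.selector not in page:
            new_selector = ask_tester(step)  # tester repeats the action
            if new_selector is None or new_selector not in page:
                log.append(f"FAILED {step.action} {step.selector}")
                break
            log.append(f"REPAIRED {step.selector} -> {new_selector}")
            step.selector = new_selector     # the script is fixed for next time
        log.append(f"OK {step.action} {step.selector}")
    return log


# The buy button was renamed after the script was recorded.
script = [Step("click", "#login"), Step("click", "#buy-button")]
page = {"#login": "Login link", "#purchase": "Buy button"}
log = play_back(script, page, ask_tester=lambda step: "#purchase")
for line in log:
    print(line)
```

After the run, the second step of `script` points at the new selector, so the next playback passes without anyone editing code by hand.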

Check out the BITE project page at http://code.google.com/p/bite-project. Feedback is welcome at [email protected]. Posted by Joe Allan Muharsky from the Web Testing Technologies Team (Jason Stredwick, Julie Ralph, Po Hu, and Richard Bustamante are the members of the team who delivered the product).

Unleash the QualityBots

Thursday, October 06, 2011 1:52 PM

http://googletesting.blogspot.com/2011/10/unleash-qualitybots.html

By Richard Bustamante

Are you a website developer who wants to know if Chrome updates will break your website before they reach the stable release channel? Have you ever wished there was an easy way to compare how your website appears in all channels of Chrome? Now you can!

QualityBots (http://code.google.com/p/qualitybots/) is a new open source tool for web developers created by the Web Testing team at Google. It’s a comparison tool that examines web pages across different Chrome channels using pixel-based DOM analysis. As new versions of Chrome are pushed, QualityBots serves as an early warning system for breakages. Additionally, it helps developers quickly and easily understand how their pages appear across Chrome channels.
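The post does not spell out what "pixel-based DOM analysis" involves. One plausible reading, taken here purely as an assumption, is that each pixel is labeled with the DOM element visible at that position, and two renderings are compared label by label. A minimal sketch under that assumption (the `Layout` type and `layout_difference` function are illustrative names, not part of QualityBots):

```python
from typing import List

# Each grid cell holds an identifier for the DOM element visible at that pixel.
Layout = List[List[str]]


def layout_difference(a: Layout, b: Layout) -> float:
    """Return the percentage of pixels whose visible element differs."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("layouts must have the same dimensions")
    total = sum(len(row) for row in a)
    if total == 0:
        return 0.0
    differing = sum(
        1
        for row_a, row_b in zip(a, b)
        for cell_a, cell_b in zip(row_a, row_b)
        if cell_a != cell_b
    )
    return 100.0 * differing / total


# A tiny 2x2 "page" rendered in two Chrome channels.
stable = [["header", "header"], ["body", "body"]]
beta = [["header", "header"], ["body", "ad"]]
print(layout_difference(stable, beta))
```

A score of zero would mark a page that renders identically across channels and can be skipped; a high score flags a page that needs attention.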

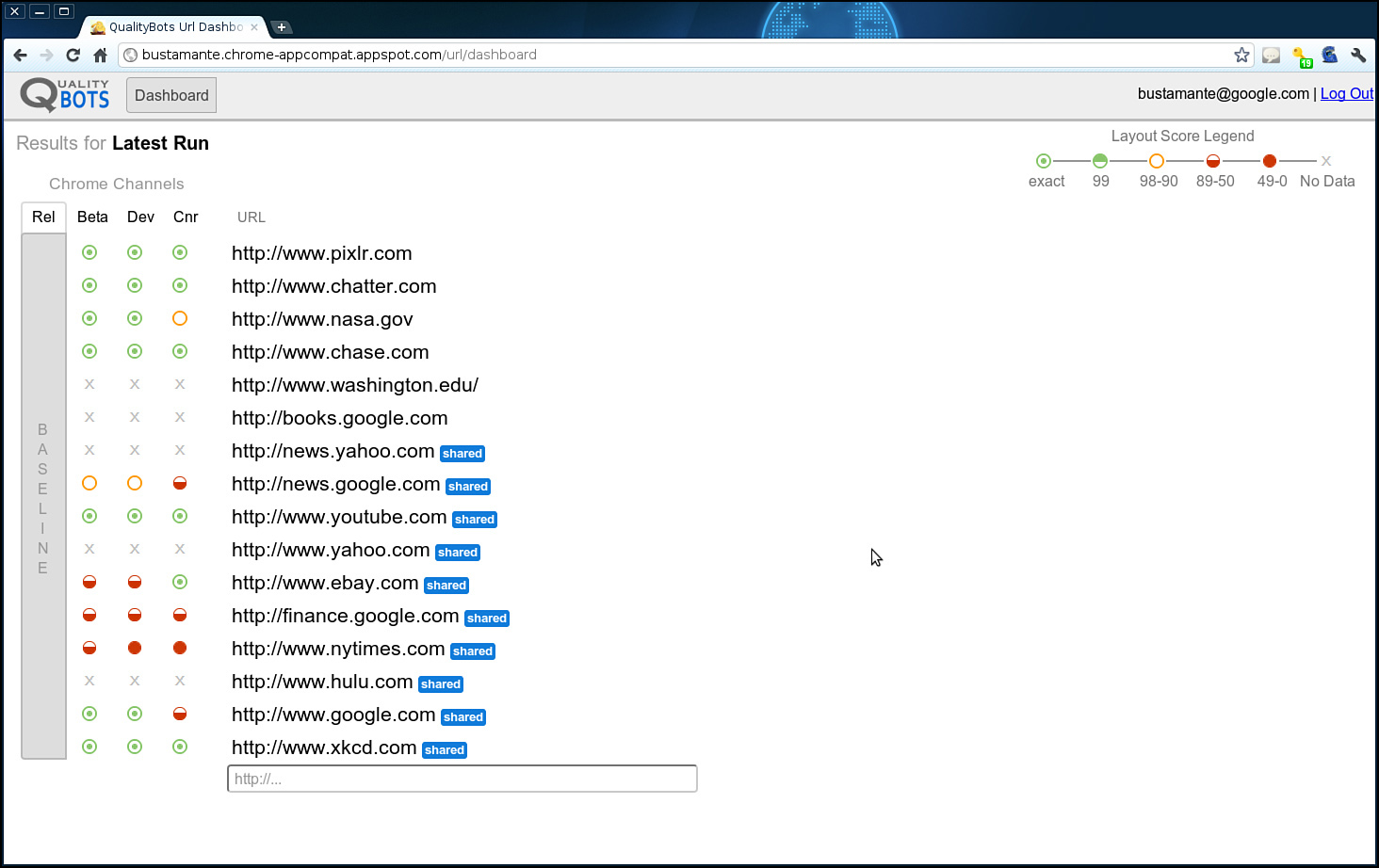

QualityBots is built on top of Google AppEngine (http://code.google.com/appengine/) for the frontend and Amazon EC2 for the backend workers that crawl the web pages. Using QualityBots requires an Amazon EC2 account to run the virtual machines that will crawl public web pages with different versions of Chrome. The tool provides a web frontend where users can log on and request URLs they want to crawl, see the results from the latest run on a dashboard (see Figure C.3), and drill down to get detailed information about what elements on the page cause the trouble.

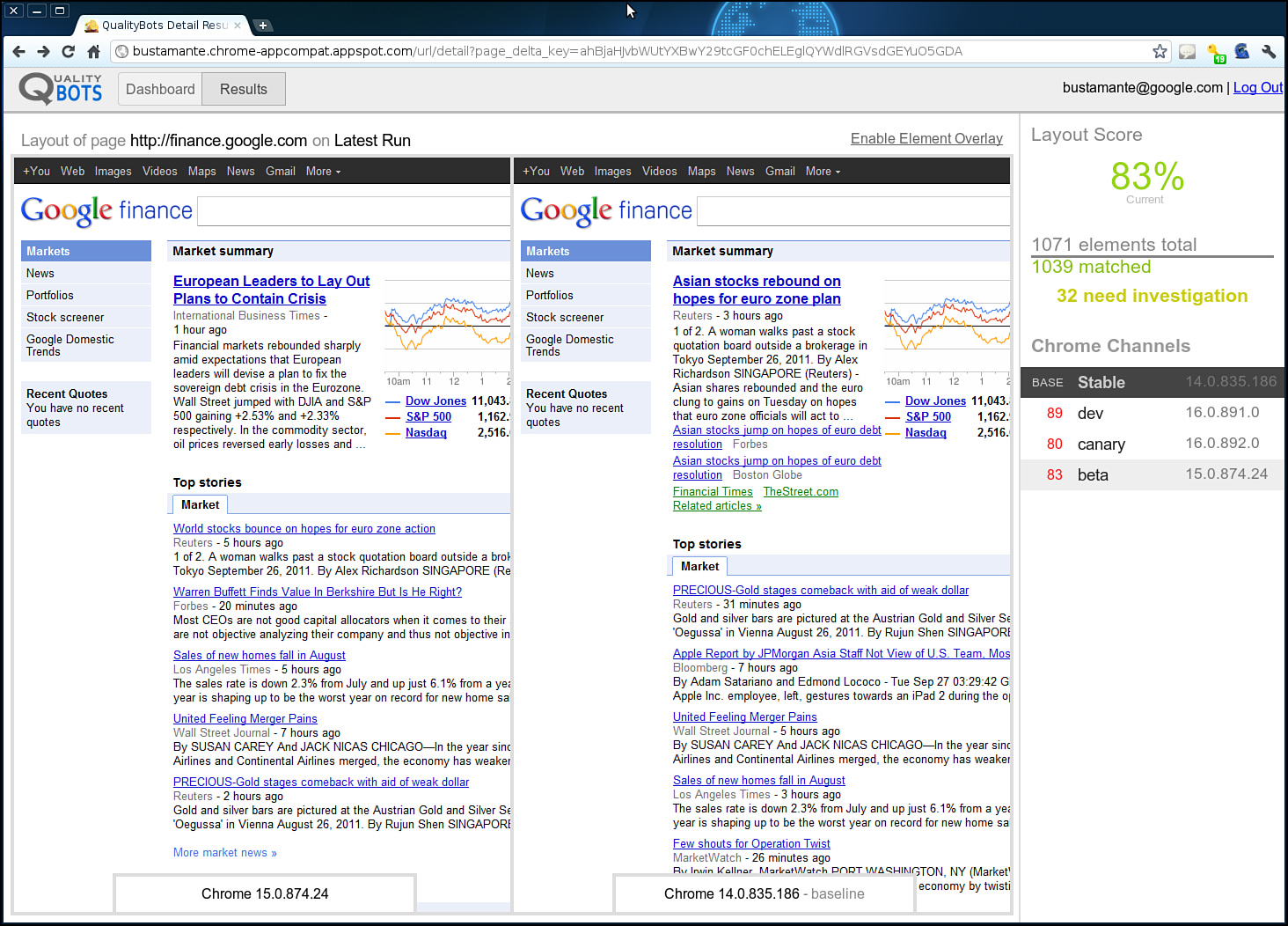

Figure C.3 Example results of a QualityBot Test run. The differences in this website between versions are displayed.

Developers and testers can use these results to identify sites that need attention due to a high amount of change and to highlight the pages that can be safely ignored when they render identically across Chrome channels (see Figure C.4). This saves time and the need for tedious compatibility testing of sites when nothing has changed.

Figure C.4 QualityBot dashboard for Chrome across a range of websites tested across different versions of Chrome.

We hope that interested website developers will take a deeper look and even join the project at the QualityBots project page (http://code.google.com/p/qualitybots/). Feedback is more than welcome at [email protected].

Posted by Ibrahim El Far, Web Testing Technologies Team (Eriel Thomas, Jason Stredwick, Richard Bustamante, and Tejas Shah are the members of the team who delivered this product).

RPF: Google’s Record Playback Framework

Thursday, November 17, 2011 5:26 AM

http://googletesting.blogspot.com/2011/11/rpf-googles-record-playback-framework.html

By Jason Arbon

At GTAC (http://www.gtac.biz/), folks asked how well the Record/Playback Framework (RPF) works in the Browser Integrated Test Environment (BITE) (http://googletesting.blogspot.com/2011/10/take-bite-out-of-bugs-and-redundant.html). We were originally skeptical ourselves, but figured somebody should try. Here is some anecdotal data and some background on how we started measuring the quality of RPF.

The idea is to just let users use the application in the browser, record their actions, and save them as JavaScript to play back as a regression test or repro later. Like most test tools, especially code-generating ones, it works most of the time but it’s not perfect. Po Hu had an early version working and decided to test this out on a real-world product. Po, the developer of RPF, worked with the Chrome web store team to see how an early version would work for them. Why the Chrome web store (https://chrome.google.com/webstore/)? It is a website with lots of data-driven UX, authentication, and file upload, and the site was changing all the time and breaking existing Selenium (http://seleniumhq.org/) scripts: a pretty hard web testing problem.

Before sharing with the Chrome web store test developer Wensi Liu, we invested a bit of time in doing something we thought was clever: fuzzy matching and inline updating of the test scripts. Selenium rocks, but after an initial regression suite is created, many teams end up spending a lot of time simply maintaining their Selenium tests as the products constantly change. Rather than simply fail like the existing Selenium automation would do when a certain element isn’t found, and rather than require some manual DOM inspection—updating the Java code and re-deploying, re-running, and re-reviewing the test code—what if the test script just kept running and updates to the code could be as simple as point and click? We would keep track of all the attributes in the element recorded, and when executing, we would calculate the percent match between the recorded attributes and values and those found while running. If the match isn’t exact, but within tolerances (say only its parent node or class attribute had changed), we would log a warning and keep executing the test case. If the next test steps appeared to be working as well, the tests would keep executing during test passes and log only warnings, or if in debug mode, they would pause and allow for a quick update of the matching rule with point and click via the BITE UI. We figured this might reduce the number of false-positive test failures and make updating them much quicker.
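The matching rule described above can be sketched as follows. This is an illustration only: the attribute names, the 60 percent tolerance, and the function names are assumptions, not RPF's actual values or code.

```python
from typing import Dict


def match_percent(recorded: Dict[str, str], found: Dict[str, str]) -> float:
    """Percentage of recorded attributes whose values are unchanged at run time."""
    if not recorded:
        return 100.0
    same = sum(1 for name, value in recorded.items() if found.get(name) == value)
    return 100.0 * same / len(recorded)


def classify(recorded: Dict[str, str], found: Dict[str, str],
             tolerance: float = 60.0) -> str:
    """Exact match passes, a near match logs a warning, anything else fails."""
    score = match_percent(recorded, found)
    if score == 100.0:
        return "match"
    if score >= tolerance:
        return "warn"  # keep executing, but log the drift
    return "fail"


recorded = {"tag": "button", "id": "buy", "class": "primary", "parent": "div#cart"}
restyled = {"tag": "button", "id": "buy", "class": "primary large", "parent": "div#cart"}
unrelated = {"tag": "a", "id": "help"}

print(match_percent(recorded, restyled), classify(recorded, restyled))
print(match_percent(recorded, unrelated), classify(recorded, unrelated))
```

Only the class attribute changed on the restyled button, so three of four recorded attributes still match and the step continues with a warning rather than failing outright.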

We were wrong, but in a good way!

We talked to the tester after a few days of leaving him alone with RPF. He’d already re-created most of his Selenium suite of tests in RPF, and the tests were already breaking because of product changes (it’s a tough life for a tester at Google to keep up with the developers’ rate of change). He seemed happy, so we asked him how this new fuzzy matching fanciness was working, or not. Wensi was like, “Oh yeah, that? Don’t know. Didn’t really use it...” We started to think how our update UX could have been confusing or not discoverable, or broken. Instead, Wensi said that when a test broke, it was just far easier to re-record the script. He had to re-test the product anyway, so why not turn recording on when he manually verified things were still working, remove the old test, and save this newly recorded script for replay later?

During that first week of trying out RPF, Wensi found:

• 77 percent of the features in Webstore were testable by RPF.

• Generating regression test scripts via this early version of RPF was about eight times faster than building them via Selenium/WebDriver.

• The RPF scripts caught six functional regressions and many more intermittent server failures.

• Common setup routines such as login should be saved as modules for reuse (a crude version of this was working soon after).

• RPF worked on Chrome OS, where Selenium, by definition, could never run because it required client-side binaries. RPF worked because it was a pure cloud solution, running entirely in the browser and communicating with a backend on the Web.

• Bugs filed via BITE provided a simple link, which would install BITE on the developer’s machine and re-execute the repros on their side. There was no need for manually crafted repro steps. This was cool.

• Wensi wished RPF was cross-browser. It worked only in Chrome, but people did occasionally visit the site with a non-Chrome browser.

So, we knew we were onto something interesting and continued development. In the near term, though, Chrome web store testing went back to using Selenium because that final 23 percent of features required some local Java code to handle file upload and secure checkout scenarios. In hindsight, a little testability work on the server could have solved this with some AJAX calls from the client.

We performed a check of how RPF fared on some of the top sites of the web. The results are shared on the BITE project wiki (https://docs.google.com/spreadsheet/ccc?key=0AsbIZrIYVyF0dEJGQV91WW9McW1fMjItRmhzcWkyanc#gid=6). The data is now a little out of date, with many fixes made since, but it gives you a feel for what doesn’t work. Consider it Alpha quality at this point. It works for most scenarios, but there are still some serious corner cases.

Joe Allan Muharsky drove a lot of the UX (user experience) design for BITE to turn our original and clunky developer and functional-centric UX into something intuitive. Joe’s key focus was to keep the UX out of the way until it is needed and to make things as self-discoverable and findable as possible. We haven’t done formal usability studies yet, but we have done several experiments with external crowd testers using these tools and with minimal instructions, as well as internal dogfooders filing bugs against Google Maps with little confusion. Some of the fancier parts of RPF have some hidden Easter eggs of awkwardness, but the basic record and playback scenarios seem to be obvious to folks.

RPF has graduated from the experimental centralized test team to a formal part of the Chrome team, and it is used regularly for regression test passes. The team also has an eye on enabling noncoding, crowd-sourced testers to generate regression scripts via BITE / RPF.

Please join us in maintaining BITE / RPF (http://code.google.com/p/bite-project/), and be nice to Po Hu and Joel Hynoski who are driving this work forward at Google.

Google Test Analytics—Now in Open Source

Wednesday, October 19, 2011 1:03 PM

http://googletesting.blogspot.com/2011/10/google-test-analytics-now-in-open.html

By Jim Reardon

The test plan is dead!

Well, hopefully. At a STAR West session this past week, James Whittaker asked a group of test professionals about test plans. His first question: “How many people here write test plans?” About 80 hands shot up instantly, a vast majority of the room. “How many of you get value or refer to them again after a week?” Exactly three people raised their hands.

That’s a lot of time spent writing documents that are often long-winded, full of paragraphs of detail that everyone on the project already knows, and abandoned almost as soon as they are finished.

A group of us at Google set about creating a methodology that can replace a test plan; it needed to be comprehensive, quick, actionable, and have sustained value to a project. In the past few weeks, James has posted a few blog entries about this methodology, which we’ve called ACC. It’s a tool to break down a software product into its constituent parts, and the method by which we created “10 Minute Test Plans” (that take only 30 minutes!).

Comprehensive

The ACC methodology creates a matrix that describes your project completely; several projects that have used it internally at Google have found coverage areas that were missing in their conventional test plans.

Quick

The ACC methodology is fast; we’ve created ACC breakdowns for complex projects in under half an hour. That is far faster than writing a conventional test plan.

Actionable

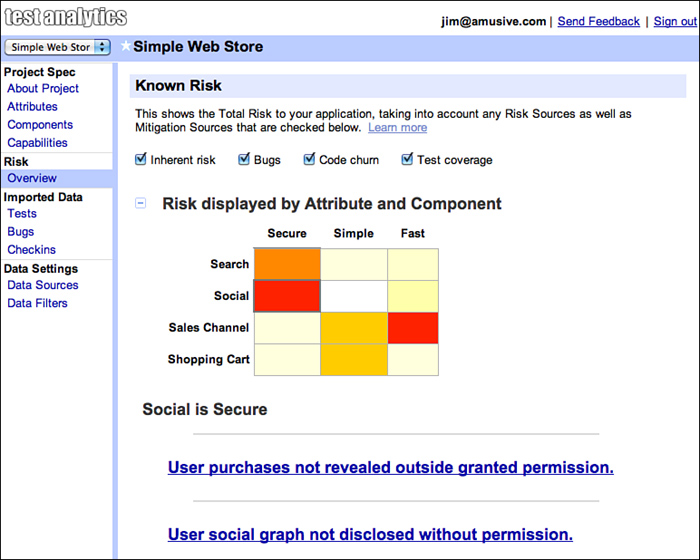

As part of your ACC breakdown, risk is assigned to the capabilities of your application. Using these values, you get a heat map of your project, showing the areas with the highest risk, which are great places to spend some quality time testing.
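To make the heat map concrete, here is a minimal sketch of how per-capability risk values could roll up into cells of the attribute-by-component matrix. The 1-to-4 risk scale, the choice of averaging, and all names are assumptions made for illustration; they are not taken from Test Analytics.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Capability(NamedTuple):
    attribute: str
    component: str
    description: str
    risk: int  # hypothetical scale: 1 (low) to 4 (high)


Cell = Tuple[str, str]  # (attribute, component)


def heat_map(capabilities: List[Capability]) -> Dict[Cell, float]:
    """Average the risk of all capabilities that fall in the same matrix cell."""
    cells: Dict[Cell, List[int]] = defaultdict(list)
    for cap in capabilities:
        cells[(cap.attribute, cap.component)].append(cap.risk)
    return {cell: sum(risks) / len(risks) for cell, risks in cells.items()}


def hottest(cells: Dict[Cell, float], count: int = 1) -> List[Cell]:
    """Return the cells with the highest risk, hottest first."""
    return sorted(cells, key=lambda cell: cells[cell], reverse=True)[:count]


capabilities = [
    Capability("Secure", "Checkout", "Card data is encrypted", 4),
    Capability("Secure", "Checkout", "Session expires after inactivity", 2),
    Capability("Fast", "Search", "Results return in under a second", 1),
]
cells = heat_map(capabilities)
print(cells)
print(hottest(cells))
```

Here the Secure/Checkout cell comes out hottest, so that is where testing time would go first.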

Sustained Value

We’ve built in some experimental features that bring your ACC test plan to life by importing data signals such as bugs and test coverage that quantify the risk across your project.

Today, I’m happy to announce that we’re open sourcing Test Analytics (http://code.google.com/p/test-analytics/), a tool built at Google to make generating an ACC simple.

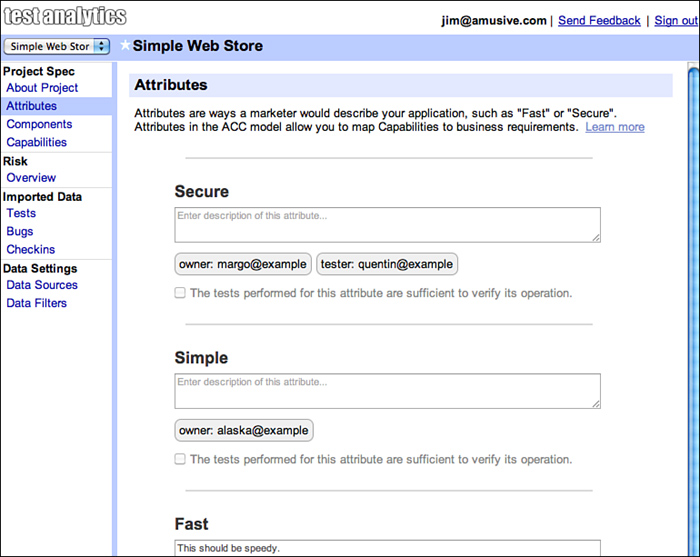

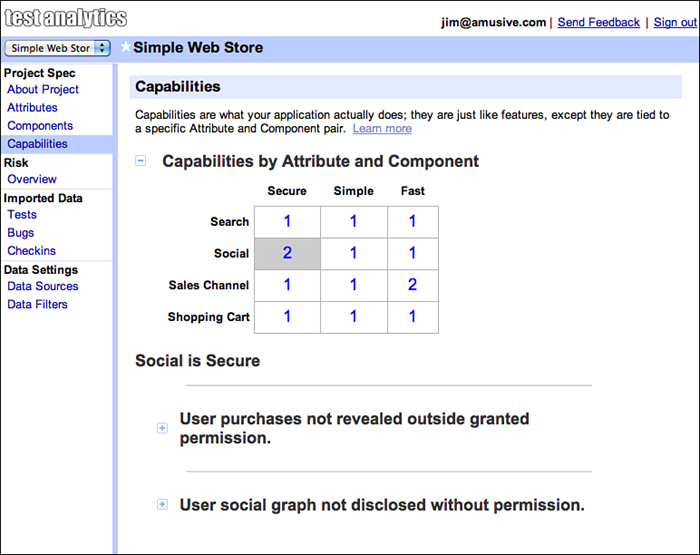

Test Analytics has two main parts; first and foremost, it’s a step-by-step tool to create an ACC matrix (see Figure C.5) that’s faster and much simpler than the Google Spreadsheets we used before the tool existed (see Figure C.6). It also provides visualizations of the matrix and risks associated with your ACC Capabilities that were difficult or impossible to do in a simple spreadsheet (see Figure C.7).

Figure C.5 Defining a project’s attributes in Test Analytics.

Figure C.6 Display of project capabilities in Test Analytics.

Figure C.7 Displaying the risk across the matrix of attributes and components in Test Analytics.

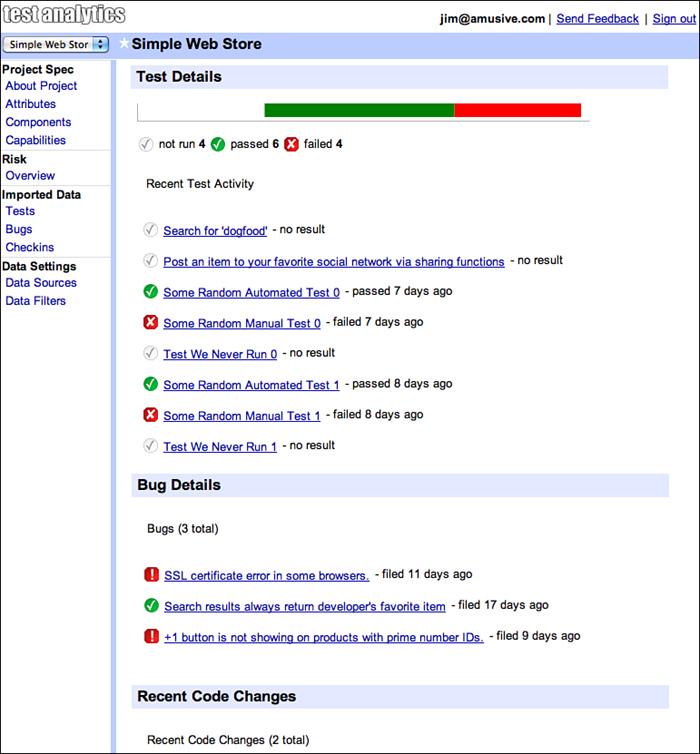

The second part is taking the ACC plan and making it a living, automatic-updating risk matrix. Test Analytics does this by importing quality signals from your project: Bugs, Test Cases, Test Results, and Code Changes. By importing this data, Test Analytics lets you visualize risk that isn’t just estimated or guessed, but based on quantitative values. If a Component or Capability in your project has had a lot of code change or many bugs are still open or not verified as working, the risk in that area is higher. Test Results can provide a mitigation to those risks; if you run tests and import passing results, the risk in an area gets lower as you test.
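The direction of each signal can be sketched as a simple score: open bugs and code changes push risk up, and passing test results pull it back down. The formula, the weights, and the names below are hypothetical; the post describes the real calculation only as experimental.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    open_bugs: int      # bugs still open or not verified as fixed
    code_changes: int   # recent changes touching this area
    passing_tests: int  # imported passing test results


def risk_score(base_risk: float, signals: Signals,
               bug_weight: float = 1.0,
               change_weight: float = 0.5,
               test_weight: float = 0.25) -> float:
    """Raise risk for bugs and churn, lower it for passing tests (never below 0)."""
    raised = (base_risk
              + bug_weight * signals.open_bugs
              + change_weight * signals.code_changes)
    mitigated = raised - test_weight * signals.passing_tests
    return max(mitigated, 0.0)


untested = risk_score(2.0, Signals(open_bugs=3, code_changes=4, passing_tests=0))
tested = risk_score(2.0, Signals(open_bugs=3, code_changes=4, passing_tests=8))
print(untested, tested)
```

With the same bugs and churn, importing eight passing results lowers the score, which mirrors the mitigation effect described above.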

This part is still experimental; we’re playing around with how best to calculate risk from these signals (see Figure C.8). However, we wanted to release this functionality early so we can get feedback from the testing community about how well it works for teams, and then iterate to make the tool even more useful. It’d also be great to import even more quality signals; code complexity, static code analysis, code coverage, external user feedback, and more are all ideas we’ve had that could add an even higher level of dynamic data to your test plan.

Figure C.8 Test Analytics binding to bug and test case data.

You can check out a live hosted version (http://goo.gl/Cv2QB), browse, or check out the code (http://code.google.com/p/test-analytics/) along with documentation (http://code.google.com/p/test-analytics/wiki/AccExplained), and of course, if you have any feedback, let us know. There’s a Google Group set up for discussion (http://groups.google.com/group/test-analytics-discuss) where we’ll be active in responding to questions and sharing our experiences with Test Analytics so far.

Long live the test plan!