Artificial Opponents

Single-player strategy games often present the fiction that the player is opposed by another player like himself, who makes moves just the way the player does. In war games, it’s fun to think of pitting one’s wits against an enemy general. The old Electronic Arts war game Patton vs. Rommel explicitly encourages this fantasy; the player takes the role of one general and the computer takes the other. It is only a fantasy, of course; the opponent it provides is artificial, implemented by AI techniques. Here, you’ll learn about some of the AI used to create artificial opponents in strategy games and the strengths and weaknesses of several approaches.

Game Tree Search

In turn-based games such as checkers, chess, or Go, each player has a number of choices—possible moves that he can make—at each turn. You choose your move, then your opponent makes his move from the choices available to him, and then it’s back to you with a new set of possible moves, and so on. If you draw a graph of this, showing how the options branch out at every turn, it looks like a tree, so the set of all possible future moves is called the game tree.

With simple games, artificial opponents search through the game tree, looking for the moves that produce the most advantageous result. The fewer choices there are, the easier this is. With tic-tac-toe, the problem is trivial; with checkers, it is comparatively easy; with chess, it is quite difficult; and with Go, no one has yet managed to build a computer game that can play as well as a master human player. In Go, the board is large and the player can play nearly anywhere, so the number of possible future moves is simply astronomical. In addition, unlike in chess, it is very difficult to evaluate whether a potential move is good or bad; it depends too much on other moves.

As a designer, do not expect to use game-tree search in any but the simplest of games. The length of time it will take for the computer to compute a good move increases exponentially with the number of possible moves available.

Neural Nets

A neural net is a program that simulates, in a simplified form, the behavior of brain cells, or neurons. The details are too complex to discuss here, but put simply, a neural net mimics the brain’s ability to recognize and correctly identify patterns of data. This is how we learn to tell an apple from an orange, for example: Our brains learn the visual patterns that apples and oranges fall into. Like the brain, a neural network has to be taught to recognize the pattern; after that, and with repeated exposure, the brain or the neural network identifies the pattern correctly.

Some efforts have been made to teach neural nets to learn to play strategy games by recognizing patterns of play that lead to success. Although this technique is worthy of further research, you shouldn’t count on it. Neural nets take a long time to learn, and the process doesn’t work well for complex patterns of information, such as the dozens of possible choices available to a player in a modern war game. Furthermore, because of the way neural networks store data, there is no way to tell what the data actually mean. You can’t teach the network a new pattern simply by tweaking the numbers stored inside it; you have to start over again from the beginning.

Hierarchical Finite State Machines

In the absence of orders, go find something and kill it.

—Erwin Rommel

Hierarchical finite state machines have proven to be the most successful mechanism for creating artificially intelligent opponents in war games because they can handle large numbers of units and produce seemingly intelligent, coordinated behavior.

What Is a Finite State Machine?

A finite state machine (FSM) is a conceptual machine rather than a real piece of mechanical engineering. Its rules establish a simple behavioral system for an individual automated character, such as a unit in a war game. The unit can inhabit a limited number of states (such as scouting, guarding, pursuing, and retreating), and it’s only ever in one state at a time. As long as the unit is in a given state, it performs a particular activity. Certain events cause it to make a transition to a new state, after which it starts performing a new activity. To use an FSM to define the behavior of a unit, list all the states that you can think of that it might be in. Remember that these states describe a single activity that the unit will perform until something causes it to transition to another. For each state that you define, you must indicate how the unit behaves in that state and list all the things that will cause it to transition to a new state.

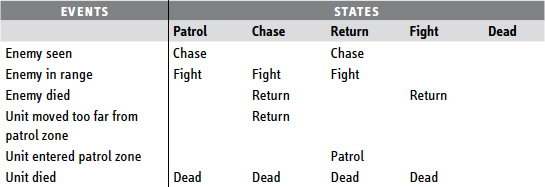

The simplest way to do this is with a table. Figure 5 illustrates a small finite state machine for a unit that has been set to patrol a certain region and not move too far from it. The unit has five possible states: patrol, chase, return, fight, and dead. It can detect six types of events: an enemy has been seen outside its current fighting range, an enemy is within its fighting range, the enemy has died, the unit has moved too far from its assigned patrol zone, the unit has returned to its patrol zone, and the unit has died.

Each state is shown in the table as a column, and all the possible events are along the left. The table shows what a unit will do in a given state. For example, if the unit is in the patrol state and it sees an enemy, it will switch to the chase state and leave its patrol zone to chase the enemy. If it is in the chase state and it moves too far from its patrol zone, it will enter the return state and go back to its patrol zone.

If a place in the table is blank, that means there is no change; the unit ignores the event. For example, if it is in the fight state and has moved too far from its patrol zone, it remains in the fight state. As long as the unit is fighting, it doesn’t care how far it is from its patrol zone.

Notice that there is one state from which there are no transitions: dead. When the unit is in the dead state, it no longer responds to anything else. In FSM terms, this is an end state and the FSM has halted.

FSMs have a weakness in that they can’t walk and chew gum at the same time—that is, they can be in only one state at a time, so a single individual can’t work on two things at once, unless that is defined as a state, or the single unit has two state machines to govern the two activities. However, the units in war games seldom need to do this anyway. For more information about designing FSMs, read the chapter “Fundamental AI Technologies” in Core Techniques and Algorithms in Game Programming, by Daniel Sánchez-Crespo Dalmau (Dalmau, 2004). Although it is a book on programming, the text is very accessible to nonprogrammers.

Hierarchical Finite State Machines in Games

A hierarchical finite state machine (hFSM) actually consists of several different FSMs, and the ones higher up in the hierarchy give orders to the ones lower down—that is, the controlling hFSMs send signals to those lower down in the hierarchy, ordering them to change states. An artificially intelligent opponent in such a system chooses a top-level goal, such as “take and hold this hill,” and delegates the tasks required to achieve the overall goal to subordinate FSMs that further delegate down to the individual unit level.

This is the way commands move down through a real army. The captain decides that he needs to take a hill and so delegates different activities to different platoons under him: providing covering fire, creating a diversion, and so on. If done properly, each platoon has a different goal and won’t get in each others’ way. The sergeant in each platoon then commands his individual men to achieve the platoon’s goal in the same way that the captain commanded the sergeants. Each of the men then tries to achieve his own goal by executing the command he has been given. In a video game, each man has his own FSM that determines his behavior. The FSM reacts to changing conditions on the battlefield (for example, “The unit I was told to attack is dead, so I will look for another one to attack”) and also to orders received from the superior FSM, that is, the sergeant’s FSM that governs the platoon as a whole (for example, “Your mission is accomplished, so cease fire and guard your position”).

The nice thing about hFSMs is that they produce emergent behavior—that is, they may cause units to behave in ways that are not programmed explicitly into the rules. hFSMs also allow you to design the AI from the top down, creating large-scale strategies that are made up of individual smaller-scale strategies. hFSMs are not restricted to combat, either: You can also use them to achieve economic goals, directing worker units to produce different resources as needed and telling them to stop when they’ve stockpiled enough.

A Final Note on Artificial Opponents

Don’t expect to be able to create an artificial opponent that can routinely beat a human fair and square unless your game is a simple one—so simple that it can use game-tree search. hFSMs are very useful, but they’re seldom good enough to beat a skilled player in an equal contest. Most strategy games use two additional features to provide a challenge to their players: hidden information that the player must find by exploring, and unfair advantages for the computer’s side, such as stronger units or more efficient production. But don’t concentrate too hard on making an unbeatable AI anyway. You do want the player to win the game eventually. The function of game AI is to put up a good fight but lose in the end.