2. Java Concurrency

Whenever more than one thread accesses a given state variable, and one of them might write to it, they all must coordinate their access to it using synchronization.

Brian Goetz

Java is one of the earliest languages in which concurrency is native. Built into the language at a low level are the constructs necessary for creating concurrent programs. In addition, as Java was conceived to be portable across multiple hardware architectures, it was also one of the first to define a memory model and to specify the exact behavior of its concurrent constructs.

Because Android’s concurrency constructs are built on Java’s, this chapter is a review of basic Java concurrency mechanisms. Of course, a complete description of concurrency in Java can, and does, fill entire books. The intention here is simply to establish vocabulary and refresh memory.

Note

Probably the best resource for concurrent programming in Java is the book from which this chapter’s introductory quote is taken: Java Concurrency in Practice, by Goetz, Peierls, et al. Anyone who plans to do any substantial amount of concurrent programming in Java (and this includes all Android developers) should read this book.

Java Threads

In Java, the class Thread implements a thread. Every running application has one implicit thread, usually called the “default” or “main” thread. Spawning a new thread consists of creating a new instance of the Thread class and then calling its start method.

Note

In Android, the “main” thread is also frequently called the “UI” thread because it powers most of the UI components. This name can be confusing. There are actually multiple threads that run the UI and the main thread powers even applications that have no UI components.

The Thread Class

The two Thread methods start and run are very special. The start method takes no parameters and returns no value. From the point of view of the calling code, it doesn’t do anything at all: it just returns and passes control to the next statement in the program.

The magic, though, is that the call actually returns twice, once to the next statement in the program, but also on a new thread of execution spawned by the call, in the Thread object’s run method. The new thread executes the statements in the run method, in order, until the method returns. When the run method returns, the new thread is terminated.
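A quick way to see the distinction is to call an overridden run method both ways. The sketch below (StartVersusRun and threadThatRan are illustrative names, not from the original) records which thread executed run: a direct call to run is just an ordinary method call on the caller's thread, whereas start spawns a new thread that then calls run.

```java
import java.util.concurrent.atomic.AtomicReference;

class StartVersusRun {
    // Returns the thread that executed run(), either via start() or via a direct call.
    static Thread threadThatRan(boolean useStart) {
        AtomicReference<Thread> executor = new AtomicReference<>();
        Thread t = new Thread() {
            @Override
            public void run() {
                executor.set(Thread.currentThread());
            }
        };
        if (useStart) {
            t.start();  // spawns a new thread, which calls run()
            try {
                t.join();  // wait for the new thread to terminate
            } catch (InterruptedException e) {
                throw new AssertionError("Interrupts not supported");
            }
        } else {
            t.run();  // an ordinary method call: no new thread is created
        }
        return executor.get();
    }

    public static void main(String... args) {
        Thread self = Thread.currentThread();
        System.out.println("direct run() on caller? " + (threadThatRan(false) == self));  // true
        System.out.println("start() on caller? " + (threadThatRan(true) == self));        // false
    }
}
```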

Listing 2.1 is a simple example of the use of a Java Thread.

    public class ThreadedExample {
        public static void main(String... args) {
            System.out.println("starting: " + Thread.currentThread());

            new Thread() {
                @Override
                public void run() {
                    System.out.println("running: " + Thread.currentThread());
                }
            }.start();

            System.out.println("finishing: " + Thread.currentThread());
        }
    }

This program, when run, will print the three messages and then terminate. It defines an anonymous subclass of Thread that overrides its run method. The overridden method is executed on a new thread, unordered with respect to the thread that called the start method.

An example of the output from one run of the program demonstrates this:

    starting: Thread[main,5,main]
    finishing: Thread[main,5,main]
    running: Thread[Thread-0,5,main]

Notice that the message printed from within the run method verifies that the current thread within the new thread’s run method is not the thread that executes the other two print statements.

In what order will the three messages appear? The “starting” message will always appear first. Java guarantees a happens-before edge between every statement that precedes the call to a new thread’s start method in program order and every statement in the new thread. In other words, everything happens in program order until the new thread starts, and the new thread sees the world at least as it was at the moment it was started.
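The start rule has a counterpart: every action of a thread happens-before another thread returns from a call to join on it. Together, the two rules let plain fields carry data into and out of a worker thread with no synchronized blocks and no volatile. A minimal sketch, with illustrative class and field names:

```java
class StartJoinVisibility {
    // Plain fields: neither volatile nor guarded by a lock.
    static int input;
    static int output;

    static int doubleOnWorker(int value) {
        input = value;  // written before start()...
        Thread worker = new Thread(new Runnable() {
            @Override
            public void run() {
                output = input * 2;  // ...so guaranteed visible here
            }
        });
        worker.start();
        try {
            worker.join();  // the worker's writes happen-before join() returns...
        } catch (InterruptedException e) {
            throw new AssertionError("Interrupts not supported");
        }
        return output;  // ...so this read sees them
    }

    public static void main(String... args) {
        System.out.println(doubleOnWorker(21));  // 42
    }
}
```

The guarantee covers only what is written before start and what is read after join returns; reading output while the worker might still be running would be a data race.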

There is no guarantee about the ordering of the remaining two messages. Both will be printed, but Java does not define which will appear first. On a particular Java implementation running on particular hardware, the two messages might appear in the same order nearly every time. It would be quite incorrect, though, to take the fact that the messages happen to appear in a particular order each time the program is run as evidence that they will always appear in that order. The two messages are unordered with respect to one another.

Furthermore, were the print methods not thread-safe, it would even be possible that the two messages could be interspersed: that the output produced by the running program would be an unreadable combination of the two messages.

Runnables

Although the previous example, a subclass of Thread, is the simplest way to create a new thread of execution, it is not necessarily the best. Given Java’s restriction to single implementation inheritance, it would be a great shame to require that anything that needs to run on a new thread must also be a subclass of Thread. Indeed, that is not a requirement.

When a Runnable is passed to the constructor of a new Thread object, the Thread’s default run method calls that Runnable’s run method. Listing 2.2 shows a different implementation that behaves exactly like the previous example. Although it might not be immediately apparent in Listing 2.2, this version is quite a bit more flexible, because the Runnable could inherit behavior from an arbitrary superclass.

Listing 2.2 Thread with Runnable

    public class RunnableExample {
        public static void main(String... args) {
            System.out.println("starting: " + Thread.currentThread());

            new Thread(new Runnable() {
                @Override
                public void run() {
                    System.out.println("running: " + Thread.currentThread());
                }
            }).start();

            System.out.println("finishing: " + Thread.currentThread());
        }
    }
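To make the flexibility point concrete, here is a sketch in which the task extends a class of its own (Greeter is a hypothetical stand-in for any useful superclass) while still running on a new thread. The last statement in main shows the equivalent lambda form, assuming Java 8 or later language support.

```java
class GreetingJob extends Greeter implements Runnable {
    String result;  // read only after join(), which makes the write visible

    @Override
    public void run() {
        result = greet(Thread.currentThread().getName());
    }

    // Runs a new GreetingJob on a thread with the given name and returns its result.
    static String runOn(String threadName) {
        GreetingJob job = new GreetingJob();
        Thread t = new Thread(job, threadName);
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            throw new AssertionError("Interrupts not supported");
        }
        return job.result;
    }

    public static void main(String... args) {
        System.out.println(runOn("worker-1"));  // hello from worker-1

        // Runnable has a single abstract method, so a lambda also works (Java 8+).
        new Thread(() -> System.out.println("running: " + Thread.currentThread())).start();
    }
}

// Hypothetical base class: because GreetingJob implements Runnable instead of
// extending Thread, it is free to inherit from this class.
class Greeter {
    String greet(String who) {
        return "hello from " + who;
    }
}
```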

Synchronization

As mentioned in the previous chapter, synchronization is the mechanism through which a group of statements is made atomic: only a single thread can execute the statements in the block controlled by the synchronization lock (the critical section) at any given time. In Java, synchronization is accomplished using the synchronized keyword and an object used as a mutex. The language guarantees that, at most, one thread can execute the statements in a given synchronized block at any time.

The three words mutex, monitor, and lock are used nearly interchangeably here. Mutex is perhaps the most clearly defined: it is a lock that provides mutual exclusion, so only one thread may hold it at a time. The Java Language Specification uses the word monitor to describe an instance whose associated mutex is used to control access to a synchronized block. Lock is a looser term, with a more intuitive meaning.

Mutexes

In Java, any object can be used as the mutex for a synchronized block, not only objects created specifically for that purpose: every Java Object has a monitor.

Listing 2.3 demonstrates a simple use of the synchronized keyword.

Listing 2.3 Synchronizing on an Object

    public class SynchronizedExample {
        static final Object lock = new Object();

        public static void main(String... args) {
            System.out.println("starting: " + Thread.currentThread());

            new Thread(new Runnable() {
                @Override
                public void run() {
                    synchronized (lock) {
                        System.out.println("running: " + Thread.currentThread());
                    }
                }
            }).start();

            synchronized (lock) {
                System.out.println("finishing: " + Thread.currentThread());
            }
        }
    }

The critical sections in Listing 2.3 are the two print statements inside the two synchronized blocks. The object used as a monitor, lock, is nothing special. It is just an instance of the simplest of all objects, Object, created early in the code.

It can take some analysis to understand how the use of synchronization affects the example. There is, after all, only one thread that ever has the opportunity to execute the run method of the anonymous Runnable: the new thread spawned by the call to start. Similarly, there is only one thread that can print the “finishing” message: the main thread. If only one thread can enter either critical section, perhaps the synchronization is pointless?

In the unsynchronized version of this program, the new thread might print the “running” message at exactly the same time that the main thread prints the “finishing” message. If the print methods were not synchronized internally, this might result in an unreadable garble of the two messages. Because both synchronized blocks in the code in Listing 2.3 use the same mutex object, however, this version of the program cannot intermingle the two messages. Even though there are two separate synchronized blocks, only one thread can hold the mutex on a given object at any time.

One of the two threads, T1, will seize the mutex for the monitor object, enter one of the critical sections and print a message. Because it is holding the mutex, the other thread, T2, will not be able to enter its critical section. T2 will wait on the lock, at the beginning of its critical section, until T1 releases it. When T1 releases the lock, T2 will be notified that it can proceed. It will then enter its critical section, print its message, and continue.

Notice that this synchronized version of the application still does not define the order in which the two messages will appear. There is no way to tell which of the two program threads, the main thread or the new thread, will enter its critical section first. This example, like the one before it, is said to contain a race condition.
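The atomicity that synchronization provides is easiest to see with a read-modify-write operation such as count++. In this sketch (names are illustrative), two threads each increment a shared counter inside a block synchronized on the same mutex; because every increment is a critical section guarded by lock, no update is lost and the total is always exactly twice the per-thread count. Removing the synchronized block would make a smaller total possible.

```java
class SharedCounter {
    private static final Object lock = new Object();
    private static int count;  // guarded by lock

    // Two threads each add perThread to count; returns the final total.
    static int incrementConcurrently(int perThread) {
        synchronized (lock) {
            count = 0;
        }
        Runnable job = () -> {
            for (int i = 0; i < perThread; i++) {
                synchronized (lock) {  // makes the read-modify-write atomic
                    count++;
                }
            }
        };
        Thread t1 = new Thread(job);
        Thread t2 = new Thread(job);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            throw new AssertionError("Interrupts not supported");
        }
        synchronized (lock) {
            return count;
        }
    }

    public static void main(String... args) {
        System.out.println(incrementConcurrently(100_000));  // 200000
    }
}
```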

Synchronizing on this

Because every object has a monitor, a convenient object on which to synchronize within a method is this. It makes sense that an object that must synchronize access to its state would do so by synchronizing on itself.

Listing 2.4 is an example of this technique. The Runnable is still an instance of an anonymous class. In this version of the program, however, a reference to the instance is stored in the variable job. The synchronization on this, from within the anonymous runnable’s run method, and the synchronization on job, in the main method, are synchronizing on the same object. This version behaves identically to the version in Listing 2.3.

Listing 2.4 Synchronizing on This

    public class SynchronizedThisExample {
        private static final Runnable job = new Runnable() {
            @Override
            public void run() {
                synchronized (this) {
                    System.out.println("running: " + Thread.currentThread());
                }
            }
        };

        public static void main(String... args) {
            System.out.println("starting: " + Thread.currentThread());

            new Thread(job).start();

            synchronized (job) {
                System.out.println("finishing: " + Thread.currentThread());
            }
        }
    }

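Experts will note that, though correct, Listing 2.4 is not an improvement on Listing 2.3. Going further and synchronizing an entire method, as in Listing 2.5, is a step backward in granularity: the lock is held for the whole method body, serializing work that might otherwise proceed concurrently and undermining much of the purpose of multithreading.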

Synchronized Methods

Java has a shorthand for synchronizing on this. Using the synchronized keyword in the signature of an instance method is exactly the same as wrapping the method’s contents in a block synchronized on this. For instance, the two methods sync1 and sync2 shown in Listing 2.5 are equivalent.

Listing 2.5 Synchronized Methods

    public class SynchronizedMethodExample {
        private int executionCount;

        public synchronized void sync1() {
            executionCount++;
            someTask();
        }

        public void sync2() {
            synchronized (this) {
                executionCount++;
                someTask();
            }
        }

        private void someTask() {
            // ...
        }
    }

A static method qualified with the synchronized keyword is synchronized on the Class object of the class that declares it. The two methods in Listing 2.6 are also equivalent to one another.

Listing 2.6 Synchronized Static Methods

    public class SynchronizedStaticExample {
        private static int executionCount;

        public static synchronized void sync1() {
            executionCount++;
            someTask();
        }

        public static void sync2() {
            synchronized (SynchronizedStaticExample.class) {
                executionCount++;
                someTask();
            }
        }

        private static void someTask() {
            // ...
        }
    }
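The equivalences in Listings 2.5 and 2.6 can be checked at runtime with Thread.holdsLock, a static method that reports whether the current thread holds a given object's monitor. A small sketch, under the illustrative name WhichMonitor:

```java
class WhichMonitor {
    // A synchronized instance method holds the monitor of this.
    synchronized boolean instanceMethodHoldsThis() {
        return Thread.holdsLock(this);
    }

    // A synchronized static method holds the monitor of the Class object.
    static synchronized boolean staticMethodHoldsClass() {
        return Thread.holdsLock(WhichMonitor.class);
    }

    // Without the keyword, no monitor is held.
    boolean unsynchronizedHoldsThis() {
        return Thread.holdsLock(this);
    }

    public static void main(String... args) {
        WhichMonitor m = new WhichMonitor();
        System.out.println(m.instanceMethodHoldsThis());  // true
        System.out.println(staticMethodHoldsClass());     // true
        System.out.println(m.unsynchronizedHoldsThis());  // false
    }
}
```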

Reentrant Monitors

Object monitors are said to be reentrant. Although only one thread can hold a mutex at any given time, once the thread holds it, successive attempts by the same thread to seize it again are, essentially, no-ops. Listing 2.7 shows a program that synchronizes twice on the same monitor, this. The program will complete successfully. It is correct, if not useful.

Listing 2.7 Reentrant Locking

    public class ReentrantLockingExample {
        public static void main(String... args) {
            new ReentrantLockingExample().run1();
        }

        private synchronized void run1() { run2(); }

        private synchronized void run2() {
            System.out.println("reentrant locks!");
        }
    }
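Two short demonstrations of reentrancy, under illustrative names. The first is a synchronized method that calls itself recursively, which would hang on its first recursive call if monitors were not reentrant. The second uses ReentrantLock from java.util.concurrent.locks, which makes the per-thread hold count visible through getHoldCount.

```java
import java.util.concurrent.locks.ReentrantLock;

class ReentrancyExample {
    // Each recursive call re-enters the monitor of this, which the thread already holds.
    synchronized int countDown(int n) {
        return (n == 0) ? 0 : 1 + countDown(n - 1);
    }

    private final ReentrantLock lock = new ReentrantLock();

    // Acquires the same lock twice on one thread and reports the hold count.
    int nestedHoldCount() {
        lock.lock();
        try {
            lock.lock();  // same thread: succeeds immediately, hold count becomes 2
            try {
                return lock.getHoldCount();
            } finally {
                lock.unlock();
            }
        } finally {
            lock.unlock();  // hold count returns to 0; the lock is free again
        }
    }

    boolean isFree() {
        return !lock.isLocked();
    }

    public static void main(String... args) {
        ReentrancyExample ex = new ReentrancyExample();
        System.out.println(ex.countDown(5));       // 5
        System.out.println(ex.nestedHoldCount());  // 2
        System.out.println(ex.isFree());           // true
    }
}
```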

Common Synchronization Errors

Incorrect synchronization is the most common error in concurrent programs. Unfortunately, there’s a lot more to it than just getting the right things into synchronized blocks.

Using a Single Mutex

Access to shared state is atomic only if every critical section that touches that state synchronizes on the same mutex. It is the mutex, not the block, that protects the code.

Although locking on this is convenient, experienced developers may recognize that synchronization on a single object, this, might serialize operations that could otherwise be concurrent. An object, for instance, that has state components that are updated by requests to independent network endpoints might be significantly optimized if the requests do not block each other. To keep the separate operations from interfering with each other, the object might use separate locks to control access to separate components. Unfortunately, that might lead to code like that shown in Listing 2.8. See if you can identify the problem.

    public class LeakingLockExample {
        private String title = "";

        public void setTitle(String title) {
            if (null == title) {
                throw new NullPointerException("title may not be null");
            }
            synchronized (this.title) { this.title = title; }
        }
    }

Of course, the problem is that the assignment to this.title is not correctly synchronized.

In the example, two different threads can execute the assignment to this.title while holding different mutexes. Thread T1 seizes the mutex on the String currently referenced by this.title. As soon as T1 assigns a new value to this.title, though, thread T2 can enter the setTitle method and seize the mutex on the new String, a different object. Because the two threads have not synchronized on a single mutex, there is no happens-before edge between them, and the code could behave in any of a number of ways.
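One way to repair Listing 2.8 is to guard title with a dedicated lock object whose identity never changes, and to read the field under that same lock. This is a sketch, not the author's fix; titleLock and getTitle are illustrative additions. Locking on a String is risky for a second reason too: string literals such as "" are interned, so unrelated code that also locks on "" would share the same monitor.

```java
class GuardedTitleExample {
    // A dedicated lock whose identity never changes, unlike the title String.
    private final Object titleLock = new Object();

    private String title = "";  // guarded by titleLock

    public void setTitle(String title) {
        if (null == title) {
            throw new NullPointerException("title may not be null");
        }
        synchronized (titleLock) {
            this.title = title;
        }
    }

    // Reads use the same lock, so they see the most recent completed write.
    public String getTitle() {
        synchronized (titleLock) {
            return title;
        }
    }

    public static void main(String... args) {
        GuardedTitleExample ex = new GuardedTitleExample();
        ex.setTitle("Java Concurrency");
        System.out.println(ex.getTitle());  // Java Concurrency
    }
}
```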

Deadlock

The second most common category of errors in concurrent programs is the deadlock. The previous discussion of Reentrant Monitors pointed out that attempting to seize the same mutex twice does not hang the thread. With two or more mutexes, though, it is quite possible to create code through which, under the right circumstances, no thread can make forward progress.

A deadlock occurs when two or more threads each hold a lock that another needs. This can happen, for instance, if threads T1 and T2 both need access to resources R1 and R2, which are protected by locks L1 and L2, respectively. If T1 seizes L1 to get access to R1 at the same time that T2 seizes L2 to get access to R2, neither T1 nor T2 will ever make forward progress. T1 needs L2, but cannot get it because it is held by T2. T2 needs L1, but cannot get it because it is held by T1. Listing 2.9 is an example of a program that might deadlock.

    public class DeadlockingExample {
        static final Object lock1 = new Object();
        static final Object lock2 = new Object();

        public static void main(String... args) {
            System.out.println("starting: " + Thread.currentThread());

            new Thread(new Runnable() {
                @Override
                public void run() {
                    synchronized (lock1) {
                        synchronized (lock2) {
                            System.out.println("running: " + Thread.currentThread());
                        }
                    }
                }
            }).start();

            synchronized (lock2) {
                synchronized (lock1) {
                    System.out.println("finishing: " + Thread.currentThread());
                }
            }
        }
    }
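A common remedy for this kind of deadlock, not part of the original listing, is a global lock ordering: if every thread that needs both locks always seizes lock1 before lock2, no thread can hold lock2 while waiting for lock1, and the cycle of waits that defines a deadlock cannot form. A sketch, with illustrative method names:

```java
class LockOrderingExample {
    static final Object lock1 = new Object();
    static final Object lock2 = new Object();

    private static int completed;  // written holding both locks; read holding lock1

    // Every code path acquires lock1 before lock2, so no cycle of waits can form.
    static void doWork() {
        synchronized (lock1) {
            synchronized (lock2) {
                completed++;
            }
        }
    }

    // Runs doWork on a new thread and on the caller. Returns how many times
    // doWork completed, or -1 if the new thread did not finish in time.
    static int runBoth(long timeoutMillis) {
        int before;
        synchronized (lock1) {
            before = completed;
        }
        Thread t = new Thread(LockOrderingExample::doWork);
        t.start();
        doWork();
        try {
            t.join(timeoutMillis);
        } catch (InterruptedException e) {
            throw new AssertionError("Interrupts not supported");
        }
        if (t.isAlive()) {
            return -1;
        }
        synchronized (lock1) {
            return completed - before;
        }
    }

    public static void main(String... args) {
        System.out.println(runBoth(5000));  // 2
    }
}
```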

Volatile

Most developers understand the need for atomicity in a concurrent program—and how to use synchronization to assure it—fairly easily. As discussed in Chapter 1, a much more common misunderstanding has to do with visibility. That misunderstanding frequently manifests itself in the belief that it is necessary to synchronize writing mutable state but not reading it. Listing 2.10 is an example of this kind of error.

Listing 2.10 Incorrect Synchronization

    public class IncorrectSynchronizationExample {
        static boolean stop;

        private static final Runnable job = new Runnable() {
            @Override
            public void run() {
                while (!stop) { // !!! Incorrect!
                    System.out.println("running: " + Thread.currentThread());
                }
            }
        };

        public static void main(String... args) {
            System.out.println("starting: " + Thread.currentThread());

            new Thread(job).start();

            synchronized (job) { // !!! Incorrect!
                System.out.println("finishing: " + Thread.currentThread());
                stop = true;
            }
        }
    }

This code is incorrect. Synchronizing in only one of the two threads accomplishes nothing.

The reasoning behind this kind of code is usually something along the lines of: “There’s nothing critical about the number of times the loop in the run method is executed. If it runs 2 or 7 extra times before it notices the stop flag, nobody cares ... and without synchronization, it will run much more efficiently.”

Remember, though, the short description of Java’s contract that heads this chapter: it is the mere potential for write access from any thread that creates the need for synchronization. There is no happens-before relationship of any kind between the assignment to stop on the main thread and the read of it on the spawned thread. A cause in one need not ever result in an effect in the other.

As an example of a way in which this program might fail, consider a compiled version of the code in which the variable stop is kept in a register. Now suppose that the two threads run on two separate cores of a CPU. There might well be two completely different stop flags, each a register in a different core. A change in the value of one is visible in the other only if the generated code forces the synchronization of the two values. That synchronization is expensive and, without an indication that it is necessary, might be optimized away. The spawned thread might then never see the change, and run until it is externally terminated.

To reiterate, correct concurrency is about a contract. Although this example discusses hardware and compilers to demonstrate an error, attempting to demonstrate the absence of an error by citing known hardware and compiler architecture is fallacious reasoning. The memory model is the law. Programs that do not abide by it are incorrect.

The visibility problem in Listing 2.10 can be fixed with the addition of a single keyword, volatile. A variable marked as volatile behaves, for visibility purposes, as if every read and every write of it were wrapped in a synchronized block. Listing 2.11 shows the corrected program.

    public class VolatileExample {
        static volatile boolean stop;

        private static final Runnable job = new Runnable() {
            @Override
            public void run() {
                while (!stop) {
                    System.out.println("running: " + Thread.currentThread());
                }
            }
        };

        public static void main(String... args) {
            System.out.println("starting: " + Thread.currentThread());

            new Thread(job).start();

            System.out.println("finishing: " + Thread.currentThread());
            stop = true;
        }
    }

This program, like the ones in Listings 2.1 and 2.2, could garble the messages if message printing were not synchronized. If the main thread changes the state of the stop flag, though, the spawned thread is guaranteed to see the change.

Note that making the stop flag volatile does not guarantee that the program will terminate! There is nothing, even in this version, that guarantees that the stop flag will ever be set to true. Because there is no ordering specified between the two threads, one entirely legal scenario is that the second thread is started and the first suspended and never resumed. In this scenario, the second thread is said to starve the first.

When there are not enough processors to power all the threads in a program, some kind of scheduler must assign those processors to threads. Hopefully it does that in a way that gives each thread a “fair share.” In practice, with the scheduler for the Android platform, Java’s lax scheduling rules are very rarely a concern. Even on Android, though, the exact meaning of “fair share” can, occasionally, be surprising.

Also note that although it does provide visibility, the volatile keyword does not provide atomicity. The program in Listing 2.12 might not print exactly eleven messages (the “starting” message plus ten loop messages). As described in the previous chapter, the ++ operator is not atomic, and two threads performing its read-modify-write concurrently can store an incorrect value.

Listing 2.12 Incorrect Volatile

    public class IncorrectVolatileExample {
        static volatile int iterations;

        private static final Runnable job = new Runnable() {
            @Override
            public void run() {
                while (iterations++ < 10) { // !!! Incorrect: ++ is not atomic
                    System.out.println("running: " + Thread.currentThread());
                }
            }
        };

        public static void main(String... args) {
            System.out.println("starting: " + Thread.currentThread());

            new Thread(job).start();

            while (iterations++ < 10) { // !!! Incorrect: ++ is not atomic
                System.out.println("finishing: " + Thread.currentThread());
            }
        }
    }
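One way to make the shared iteration counter correct is to replace the volatile int with java.util.concurrent.atomic.AtomicInteger, whose getAndIncrement performs the read-modify-write atomically. In this sketch (class and method names are illustrative), each of the values 0 through 9 is handed out exactly once, so exactly ten loop messages are printed no matter how the two threads interleave.

```java
import java.util.concurrent.atomic.AtomicInteger;

class AtomicIterationsExample {
    static final AtomicInteger iterations = new AtomicInteger();
    static final AtomicInteger printed = new AtomicInteger();

    private static void loop(String label) {
        // getAndIncrement is an atomic read-modify-write: no value is handed out twice.
        while (iterations.getAndIncrement() < 10) {
            printed.incrementAndGet();
            System.out.println(label + ": " + Thread.currentThread());
        }
    }

    // Runs the loop on a new thread and on the caller; returns the number of loop messages.
    static int runBoth() {
        iterations.set(0);
        printed.set(0);
        Thread t = new Thread(() -> loop("running"));
        t.start();
        loop("finishing");
        try {
            t.join();
        } catch (InterruptedException e) {
            throw new AssertionError("Interrupts not supported");
        }
        return printed.get();
    }

    public static void main(String... args) {
        System.out.println("loop messages: " + runBoth());  // last line: loop messages: 10
    }
}
```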

Wait and Notify

Java contains two other concurrency primitives, the methods wait and notifyAll. The notifyAll method also has a less-frequently-used variant, notify.

Wait

The wait method enables a thread that has entered a synchronized block by seizing its mutex to pause and release the mutex while still within the block. This does not violate atomicity because, although the thread is inside the synchronized block, it is suspended and not executing.

The wait method can be called only when the current thread holds the mutex for the object on which wait is called. Listing 2.13 will throw a java.lang.IllegalMonitorStateException because wait is called when the executing thread does not hold the mutex of the monitor object, lock.

Listing 2.13 IllegalMonitorStateException

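    public class WaitExceptionExample {
        // static, so that it can be referenced from the static main method
        private static final Object lock = new Object();

        public static void main(String... args) {
            // Throws IllegalMonitorStateException: this thread does not hold lock's monitor.
            try { lock.wait(); }
            catch (InterruptedException e) {
                throw new AssertionError("Interrupts not supported");
            }
        }
    }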

When a thread is executing inside a synchronized block, and it calls the wait method on the block’s monitor object, the thread is suspended and releases the monitor. A thread that is suspended in a call to wait can be rescheduled by a subsequent call to notify or notifyAll. Of course, that call will have to be made from another thread; the suspended thread cannot do anything until it is rescheduled.
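The usual shape of a correct wait is a guarded block: hold the monitor, test a condition in a loop, and wait only while the condition is false. The sketch below is a one-shot gate (Gate, await, open, and waiterReleased are illustrative names). Because the waiter checks the open flag before waiting, it works even if open is called before the waiting thread arrives.

```java
class Gate {
    private final Object lock = new Object();
    private boolean open;  // guarded by lock

    // Blocks until open() has been called.
    void await() {
        synchronized (lock) {
            while (!open) {  // always re-check the condition after waking
                try {
                    lock.wait();  // releases lock while suspended
                } catch (InterruptedException e) {
                    throw new AssertionError("Interrupts not supported");
                }
            }
        }
    }

    void open() {
        synchronized (lock) {  // wait and notifyAll require holding the monitor
            open = true;
            lock.notifyAll();  // reschedule every thread waiting on lock
        }
    }

    // Starts a waiting thread, opens the gate, and reports whether the waiter finished.
    static boolean waiterReleased(long timeoutMillis) {
        Gate gate = new Gate();
        Thread waiter = new Thread(gate::await);
        waiter.start();
        gate.open();
        try {
            waiter.join(timeoutMillis);
        } catch (InterruptedException e) {
            throw new AssertionError("Interrupts not supported");
        }
        return !waiter.isAlive();
    }

    public static void main(String... args) {
        System.out.println(waiterReleased(5000));  // true
    }
}
```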

The code shown in Listing 2.14 contains two synchronized blocks that together control the number of threads executing the call to req.run.

When a new thread—this time let’s call it Edsger—enters the first critical section, the code checks to see whether there are already too many requests running. If there are not, Edsger increments the running count, leaves the critical section, and runs its request. When it completes the request, it attempts to enter the second critical section.

If any other thread is executing either of the two critical sections, Edsger will have to wait for that other thread either to leave, or to call wait. Once that happens Edsger enters the second critical section, decrements the count of running requests, and exits the method.

Consider, though, what would happen if three other threads (perhaps named Tony, Niklaus, and Per Brinch) were already running requests when Edsger entered the first synchronized block. Because the running count is already at its maximum, Edsger enters a loop that it can leave only when the running count goes below the maximum. Note that at this point Edsger is not yet counted as running. The running count can go below the maximum in only one way: some other thread that is currently running a request must enter the second synchronized block and decrement the count. Because Edsger releases the monitor when it calls wait, other threads are able to do this.

Note

Among the things that are out of scope for this chapter is a complete discussion of the complex subject of thread interrupt handling. In the examples here, interruptions are specifically disallowed. Brian Goetz has a good article on how to handle them correctly here: http://www.ibm.com/developerworks/library/j-jtp05236/.

Notify

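Another thread decrementing the running count, however, won’t restart Edsger. Once a thread waits on a particular monitor, it will not, in general, be scheduled again, even if it could make progress, until some running thread calls either notifyAll or notify on the same monitor. Listing 2.14 does this in the second critical section. (Java does permit so-called spurious wakeups, which is one more reason that a call to wait always belongs inside a loop that re-checks its condition.)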

It is only when the running count is at its maximum that it is possible that threads are waiting in the first critical section; therefore notifyAll, in the second critical section, is called only then.

The distinction between the two methods, notify and notifyAll, is very important. A call to notify reschedules exactly one thread that is waiting on the monitor; notifyAll reschedules all of them. This is significant when different threads are waiting for different reasons.

Listing 2.14 Using Wait and Notify

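    public class RateLimiterExample {
        // NetworkRequest is defined elsewhere; this hypothetical stand-in
        // lets the listing compile on its own.
        public interface NetworkRequest { void run(); }

        public static final int RUN_MAX = 3;

        private final Object lock = new Object();

        private int running;  // guarded by lock

        public void rateLimiter(NetworkRequest req) {
            synchronized (lock) {
                // Wait while RUN_MAX requests are already running.
                while (running >= RUN_MAX) {
                    try { lock.wait(); }
                    catch (InterruptedException e) {
                        throw new AssertionError("Interrupts not supported");
                    }
                }
                running++;
            }

            try { req.run(); }
            finally {
                synchronized (lock) {
                    // Threads can be waiting only if the count was at its maximum.
                    // notifyAll wakes every waiter; each re-checks the count, so a
                    // freed slot is never left unclaimed while a thread still waits.
                    if (running-- == RUN_MAX) { lock.notifyAll(); }
                }
            }
        }
    }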

Consider what would happen if the code in Listing 2.14 were modified to enable two different running maximums, one for threads that were performing read requests and another for those doing write requests. Suppose that the running count for readers went below its maximum, forcing a call to notify. It is entirely possible that only a thread waiting to write would be rescheduled, only to discover that although the read running count was below its maximum, the running count for writing was still at its maximum. The write thread would wait once again without notifying any other thread. In this case, no reading thread (no thread that can make forward progress) is ever rescheduled.

In circumstances like this, when different threads are waiting for different things, it is essential to call notifyAll, not notify. The former, as its name implies, reschedules all the threads waiting for the monitor. This is somewhat more expensive: each thread wakes up, examines the environment, and either proceeds or calls wait again if it cannot get the resources it needs. Only when threads are indistinguishable, as they are in Listing 2.14, is it safe to use notify. Even then, though, it is advisable to use notifyAll as a means of future-proofing.

The Concurrency Package

Although it is useful to understand Java’s low-level concurrency constructs, most code should never use any of them. Most code is concerned with accomplishing much higher-level goals. For most applications, designing with wait and notify is like planning a trip from San Francisco to Boston by considering carburetor design.

Java 5 introduced the java.util.concurrent package, a framework of higher-level concurrency abstractions. Like the Collections framework before it, the Concurrency framework is safe, powerful, and sufficient for most programming tasks. Code that contains calls to low-level constructs like wait and notify is probably doing it wrong.
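As an illustration of the higher-level alternative, the rate limiter of Listing 2.14 can be expressed with java.util.concurrent.Semaphore, which encapsulates the count, the waiting, and the wakeups. This is a sketch under stated assumptions: Runnable stands in for the listing's NetworkRequest, and maxObservedConcurrency is a hypothetical test helper that simulates slow requests with Thread.sleep.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

class SemaphoreRateLimiter {
    static final int RUN_MAX = 3;
    private final Semaphore permits = new Semaphore(RUN_MAX);

    // Same contract as Listing 2.14, with Runnable standing in for NetworkRequest.
    void rateLimiter(Runnable req) {
        permits.acquireUninterruptibly();  // blocks while RUN_MAX requests are running
        try {
            req.run();
        } finally {
            permits.release();  // frees a slot and wakes a waiting thread, if any
        }
    }

    // Runs `threads` simulated requests through one limiter and returns the
    // largest number that were ever running at the same time.
    static int maxObservedConcurrency(int threads) {
        SemaphoreRateLimiter limiter = new SemaphoreRateLimiter();
        AtomicInteger active = new AtomicInteger();
        AtomicInteger max = new AtomicInteger();
        Runnable req = () -> {
            max.accumulateAndGet(active.incrementAndGet(), Math::max);
            try {
                Thread.sleep(20);  // simulate a slow network request
            } catch (InterruptedException e) {
                throw new AssertionError("Interrupts not supported");
            } finally {
                active.decrementAndGet();
            }
        };
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread t = new Thread(() -> limiter.rateLimiter(req));
            workers.add(t);
            t.start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new AssertionError("Interrupts not supported");
            }
        }
        return max.get();
    }

    public static void main(String... args) {
        // Never more than RUN_MAX.
        System.out.println("max concurrent: " + maxObservedConcurrency(10));
    }
}
```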

Safe Publication

A key problem in concurrent programming is sharing data between threads. Transferring data correctly from one thread to another is called safe publication. A careful examination of the rule that heads this chapter reveals a couple of strategies for safe publication.

The simplest strategy is immutable data. If it is impossible for any thread to change the state of the data, then the antecedent of the concurrency rule does not apply, and sharing the data is safe. As a rule of thumb, in Java, for state to be immutable it should be declared final. “Java Concurrency in Practice” (Goetz, et al. 2006) introduced the concept of “effective immutability” to discuss the situation in which everyone agrees not to change a variable’s value. That certainly works. It is much safer, however, to use the language to guarantee that a variable’s value cannot be changed, than to rely on assurances that it won’t be.
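As a sketch of immutability enforced by the language (Track is a hypothetical value class), every field is final, and the mutable list passed to the constructor is copied and wrapped so that no caller can change it later. Final fields also carry a special guarantee in the Java Memory Model: once the constructor finishes, any thread that obtains a reference to the object sees the initialized values of its final fields, and of objects reachable through them, provided the this reference does not escape during construction.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical immutable value class: final class, final fields, no setters.
final class Track {
    private final String title;
    private final List<String> artists;

    Track(String title, List<String> artists) {
        this.title = title;
        // Copy first, then wrap: later changes to the caller's list cannot leak in,
        // and callers cannot modify the list returned by getArtists.
        this.artists = Collections.unmodifiableList(new ArrayList<>(artists));
    }

    String getTitle() {
        return title;
    }

    List<String> getArtists() {
        return artists;
    }

    public static void main(String... args) {
        List<String> names = new ArrayList<>(Collections.singletonList("Ada"));
        Track track = new Track("Sketch", names);
        names.add("Grace");                      // does not affect track
        System.out.println(track.getArtists());  // [Ada]
    }
}
```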

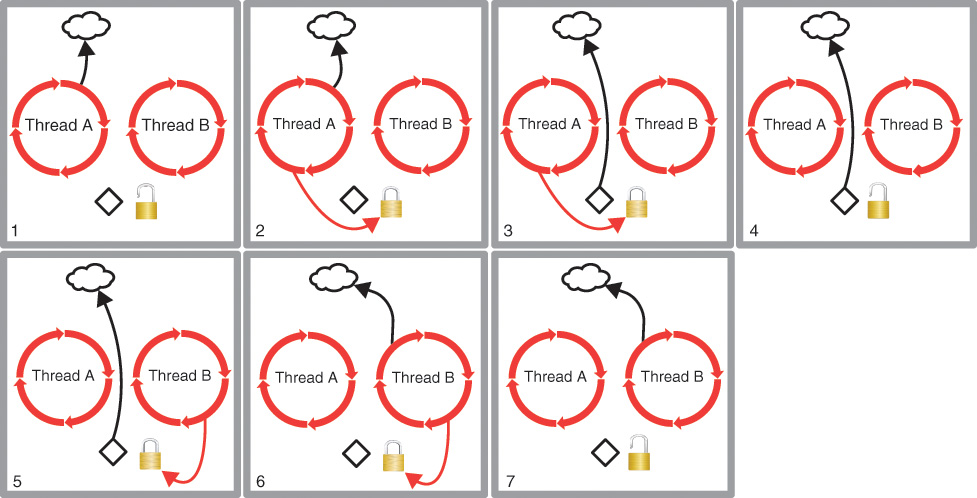

Transferring mutable state between threads is ticklish business. Fortunately, there is a standard idiom for doing it. Figure 2.1 illustrates that idiom.

When Thread A wants to pass an object to Thread B, it makes use of a mutex and a drop box. Thread A seizes the mutex and then puts a reference to the object into the drop box. It must make certain that it no longer holds any references to the transferred object. Thread A then releases the mutex. Thread B seizes the mutex and recovers the object reference from the drop box. Thread B must make certain that the drop box no longer holds a reference to the object before it releases the mutex.

Listing 2.15 is a partial implementation of the safe publication algorithm, in Java.

    public class DropBox<T> {
        private final Object lock = new Object();

        private T dropBox;  // guarded by lock

        /**
         * Safely publish an object to another thread.
         *
         * @param obj The caller must hold <b>no other references</b> to this object.
         */
        public void publish(T obj) {
            synchronized (lock) {
                if (null != dropBox) {
                    throw new IllegalStateException("The drop box is full!");
                }
                dropBox = obj;
            }
        }

        /**
         * Receive a published object.
         *
         * @return the received object, or null if the drop box is empty
         */
        public T receive() {
            synchronized (lock) {
                T obj = dropBox;
                dropBox = null;
                return obj;
            }
        }
    }

During this transfer, there is only one piece of mutable state that is shared by two threads: the drop box. The object being passed is at no time accessible from more than one thread. A single mutex controls access to the drop box and the transfer is, therefore, safe and correct.

In common use, this idiom is implemented using a queue, instead of a simple drop box. Threads exchange data by enqueuing an object and leaving it to be dequeued by another thread.
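A minimal sketch of the queue form of the idiom, using java.util.concurrent.BlockingQueue (QueueHandoff and transfer are illustrative names). The BlockingQueue contract guarantees that actions in a thread before it places an object on the queue happen-before actions after another thread removes that object, so the queue plays the role of both the drop box and its mutex. The items here are immutable Strings; with mutable objects, the producer would also have to drop its own references, as the idiom requires.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class QueueHandoff {
    // The caller (producer) enqueues each item; a consumer thread dequeues them.
    static List<String> transfer(List<String> items) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        List<String> received = new ArrayList<>();  // touched only by the consumer until join()
        int expected = items.size();

        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < expected; i++) {
                    received.add(queue.take());  // blocks until an item is available
                }
            } catch (InterruptedException e) {
                throw new AssertionError("Interrupts not supported");
            }
        });
        consumer.start();

        for (String item : items) {
            queue.add(item);  // hand-off: happens-before the matching take()
        }

        try {
            consumer.join();
        } catch (InterruptedException e) {
            throw new AssertionError("Interrupts not supported");
        }
        return received;
    }

    public static void main(String... args) {
        System.out.println(transfer(java.util.Arrays.asList("a", "b", "c")));  // [a, b, c]
    }
}
```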

Executors

It is easy to understand how an application can benefit from spawning an additional thread. Whenever there are independent tasks that can be executed concurrently, spawning more threads to execute them can make the application more efficient. On the other hand, simply spawning more threads does not mean that more tasks can be executed concurrently. On a processor with four cores, spawning ten threads for compute-intensive tasks does not make the application run ten times as fast.

Creating too many threads can, actually, make an application run more slowly. Threads are fairly heavy-weight objects. There is overhead incurred in switching between them. They also impose significant overhead during object allocation and garbage collection. Simply creating new threads haphazardly is not a great strategy.

For a given application running in a particular hardware environment there is an optimum range for the number of threads. Obviously, that number is related to the number of concurrent processes supported by the hardware. It need not, however, be exactly the same.

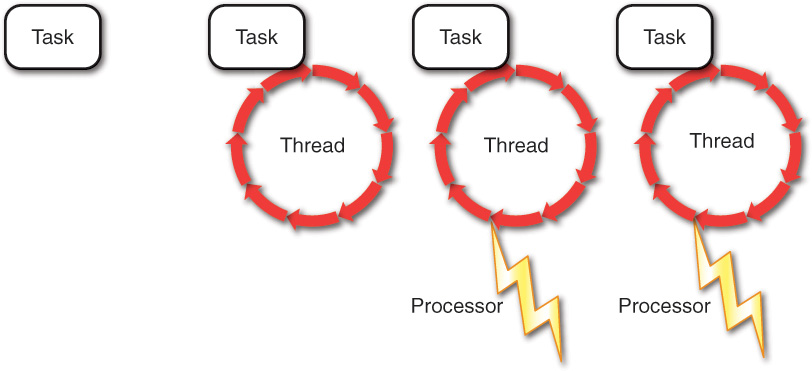

Applications need a policy for the number of threads they support. As is often the case, the necessary tool is one more layer of abstraction: separating the concept of a task from the thread that happens to execute it. Having already made the mental leap from physical processes (actual concurrency) to threads (unordered execution), this distinction seems nearly obvious. A thread is a virtual process powered by a physical CPU core, and a task is a unit of work. As illustrated in Figure 2.2, tasks are scheduled on threads and threads are scheduled on processors.

The Java concurrency framework, introduced in Java 5, defines a service called an Executor. The Executor combines the capability to define a threading policy with the safe publication idiom described in Figure 2.1. An executor is simply a pool of one or more threads servicing a queue of tasks. Tasks are closures, enqueued onto the executor queue in exchange for a Future, the promise of a value. Eventually, the task is removed from the queue by one of the executor threads and executed. The result of the execution becomes the value of the future.
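In the java.util.concurrent API, the Executor interface's execute method returns nothing. The Future comes from the submit method of its subinterface, ExecutorService. The following is a minimal sketch of the exchange described above: each Callable closure is enqueued, a Future is returned at once, and the value appears in the Future after a pool thread has run the task. The class name and the sum-of-squares workload are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Sketch: submitting closures to an executor in exchange for futures.
// SubmitForFuture and sumOfSquares are hypothetical names.
class SubmitForFuture {
    static long sumOfSquares(int n) throws InterruptedException, ExecutionException {
        ExecutorService executor = Executors.newFixedThreadPool(2);
        try {
            List<Future<Long>> futures = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final long x = i;
                Callable<Long> task = () -> x * x;   // the closure captures x
                futures.add(executor.submit(task));  // enqueued; a Future comes back immediately
            }
            long total = 0;
            for (Future<Long> f : futures) {
                total += f.get(); // blocks until a pool thread has published this result
            }
            return total;
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(sumOfSquares(10)); // 385
    }
}
```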

Executors are a valuable step up in abstraction from the threads, synchronized blocks, and notifications of the previous section. By using an executor, an application can establish a single, application-wide policy for its optimum number of threads. Most application code simply creates new tasks (small, relatively lightweight objects) and submits them to the executor for asynchronous execution, with minimal concern for overhead and thread safety. An application can certainly use more than one Executor. It should be clear, though, that an application with tens of them does not have a threading policy.
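One way to keep a single threading policy is to give the whole application one shared executor. The following is a minimal sketch under these assumptions: the holder class AppExecutor is hypothetical, and so is the policy of one worker per processor reported by Runtime.availableProcessors(). Making the workers daemon threads is a design choice of this sketch, so that idle workers do not keep the JVM alive.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical holder for one application-wide executor and its threading policy.
final class AppExecutor {
    // Assumed policy: one worker thread per processor the runtime reports.
    static final int POOL_SIZE = Runtime.getRuntime().availableProcessors();

    private static final AtomicInteger THREAD_COUNT = new AtomicInteger();

    // Created once during class initialization, which the JVM makes thread-safe.
    private static final ExecutorService EXECUTOR =
            Executors.newFixedThreadPool(POOL_SIZE, task -> {
                Thread t = new Thread(task, "app-worker-" + THREAD_COUNT.incrementAndGet());
                t.setDaemon(true); // idle workers do not prevent the JVM from exiting
                return t;
            });

    private AppExecutor() {}

    // Every component submits its tasks here instead of creating its own threads.
    static ExecutorService get() {
        return EXECUTOR;
    }

    public static void main(String[] args) throws Exception {
        Future<String> result =
                AppExecutor.get().submit(() -> "ran on " + Thread.currentThread().getName());
        System.out.println(result.get());
    }
}
```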

Whereas an Executor is an abstraction that separates threading policy from the tasks being executed, an executor service (in Java, the ExecutorService interface, which extends Executor) is a more concrete, real-world version of that abstraction. It adds the messy details of lifecycle management: how the executor shuts down, and how to determine whether it is still running or has stopped.
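The lifecycle methods that ExecutorService adds to Executor can be exercised in a short sketch: shutdown, isShutdown, awaitTermination, and isTerminated. The class name and the boolean-array return value are illustrative choices. The rejection shown after shutdown() follows from the default policy of the pool that Executors.newSingleThreadExecutor creates.

```java
import java.util.Arrays;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.TimeUnit;

// Sketch of the ExecutorService lifecycle. ExecutorLifecycle is a hypothetical name.
class ExecutorLifecycle {
    // Returns four observations, each expected to be true:
    // [0] not shut down after creation, [1] shut down after shutdown(),
    // [2] new work rejected after shutdown(), [3] terminated once queued work finishes.
    static boolean[] runAndStop() throws InterruptedException {
        ExecutorService service = Executors.newSingleThreadExecutor();
        boolean runningAtStart = !service.isShutdown();

        service.execute(() -> System.out.println("working on " + Thread.currentThread().getName()));

        service.shutdown(); // stop accepting new tasks; already-queued tasks still run
        boolean shutDown = service.isShutdown();

        boolean rejected;
        try {
            service.execute(() -> { });
            rejected = false;
        } catch (RejectedExecutionException e) {
            rejected = true; // the default rejection policy throws after shutdown()
        }

        // Wait (up to a timeout) for queued tasks to complete.
        boolean terminated = service.awaitTermination(5, TimeUnit.SECONDS) && service.isTerminated();
        return new boolean[] {runningAtStart, shutDown, rejected, terminated};
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(Arrays.toString(runAndStop()));
    }
}
```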

Futures

When one thread, A, requests that another thread, B, asynchronously perform some task on its behalf, there are only a few ways that B can return a result from the task to A. The simplest, of course, is that A can wait for B to complete. That kind of misses the point of concurrency.

Another possibility, popular in web applications, is the callback. Any function

f(x1, x2, x3, ..., xn) = y

can be transformed into a call

f'(x1, x2, x3, ..., xn, g(y))

That is, instead of returning a value, as the function f does, f' takes one additional argument, g, which is a function to be called with the return value. Because the requesting thread, A, has no idea when its method, g, will be called, g commonly enqueues a task to handle the return value on some kind of local queue.
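The transformation from f to f' can be sketched in Java with a java.util.function.Consumer playing the role of g. Everything here is hypothetical and illustrative: the addition workload, the names, and the choice to run f' on a fresh thread. As the text describes, g does not handle the value on the worker thread. Instead, it enqueues a task on the requesting thread's local queue, and the requesting thread runs that task itself.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

// Sketch of direct style versus callback style. All names are hypothetical.
class CallbackStyle {
    // Direct style: f(x1, x2) = y
    static int f(int x1, int x2) {
        return x1 + x2;
    }

    // Callback style: f'(x1, x2, g) returns nothing and later calls g with y,
    // here on a different thread.
    static void fPrime(int x1, int x2, Consumer<Integer> g) {
        new Thread(() -> g.accept(x1 + x2)).start();
    }

    static int demo() throws InterruptedException {
        // The requesting thread's local queue of tasks.
        BlockingQueue<Runnable> localTasks = new LinkedBlockingQueue<>();
        int[] handled = new int[1]; // written and read only by the requesting thread

        // g runs on the worker thread, so it only enqueues a handler task.
        fPrime(2, 3, y -> localTasks.add(() -> handled[0] = y));

        // The requesting thread dequeues the handler and runs it itself.
        localTasks.take().run();
        return handled[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(f(2, 3)); // 5
        System.out.println(demo());  // 5
    }
}
```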

Although this idiom is effective, particularly when the return value is being shipped across a network, it can lead to the infamous “callback hell” in which JavaScript developers all too often find themselves. The method g can be called at multiple and inconvenient times.

Executors use a third strategy: a future. A future is the promise of a value. A client handing a task to an executor receives a future in return. The future is not the value, but the client can treat it as if it were, until it actually needs the value that the future represents. When the client thread cannot make forward progress without the actual value, it is, by definition, prepared to wait for that value. It calls Future.get, which suspends the thread until the service thread completes the task and publishes the result to the client thread. This approach drastically reduces the need for callbacks and callback-driven asynchronous code.
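The following sketch shows a client that treats a Future as a stand-in for the value, continues with its own work, and calls Future.get only when it can go no further without the result. It is illustrative, not definitive. The slowCount method is a hypothetical stand-in for real work, and its sleep simulates latency.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Sketch of a client using a Future. FutureClient and slowCount are hypothetical.
class FutureClient {
    // Stand-in for a slow computation: counts the letters in a string.
    static long slowCount(String text) throws InterruptedException {
        Thread.sleep(100); // simulated latency
        return text.chars().filter(Character::isLetter).count();
    }

    static String run() throws InterruptedException, ExecutionException {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            // The client receives a Future immediately and does not block here.
            Future<Long> letters = executor.submit(() -> slowCount("Hello, world"));

            // ...the client continues with work that does not need the value...
            String label = "letters: ";

            // Only now, when it needs the value, does the client wait for it.
            return label + letters.get();
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(run()); // letters: 10
    }
}
```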

Summary

This chapter reviewed the essentials of concurrent programming in Java as they will be applied to Android. It established several key features: Thread objects, Java's representation of an asynchronous task; synchronization and monitors, Java's atomicity and visibility constructs; and volatiles, Java's visibility shortcut.

It also introduced a common idiom for safely publishing data from one thread to another and demonstrated its use in the Java Concurrency framework's Executor type. Executors reduce the complexity of concurrent programming by handling many aspects of safe publication and by enabling an application to establish a parallelization policy: a number of threads sufficient to provide optimal parallel execution without incurring excessive scheduling or memory-management overhead.