Chapter 10. Advanced Thread Synchronization

The preceding chapter described Windows performance issues and how to deal with them in realistic situations. Chapter 8 described several simple problems that require synchronization. This chapter solves additional practical but more complex synchronization problems, relying on the ideas introduced in Chapters 8 and 9.

The first step is to combine two or more synchronization objects and data to create compound objects. The most useful combination is the “condition variable model” involving a mutex and one or more events. The condition variable model is essential in numerous practical situations and prevents many serious program race condition defects that occur when programmers do not use Windows synchronization objects, especially events, properly. Events are complex, and their behavior varies depending on the choices illustrated in Table 8-1, so they should be used according to well-understood models. In fact, even the condition variable model described here has limitations that we’ll describe.

NT6 (Windows Vista, Windows Server 2008, and Windows 7) added condition variables to the Windows API, which is a significant advance that will be easy to understand after we cover the condition variable model. Programmers, however, will not be able to use condition variables if they need to support NT5 (Windows XP and Server 2003), which will probably be a requirement for many years after publication. NT6 condition variables are essential for a totally correct implementation that overcomes all the event limitations.

Subsequent sections show how to use asynchronous procedure calls (APCs) so that individual, cooperating threads can be controlled and canceled in an orderly manner.

Additional performance issues are discussed as appropriate.

The Condition Variable Model and Safety Properties

Threaded programs are much easier to develop, understand, and maintain if we use well-understood and familiar techniques and models. Chapter 7 discussed this and introduced the boss/worker and work crew models to establish a useful framework for understanding many threaded programs. The critical code region concept is essential when using mutexes, and it’s also useful to describe the invariants of your data structure. Finally, even defects have models, as we saw with the deadlock example. Note: Microsoft has its own distinct set of models, such as the apartment model and free threading. These terms are most often used with COM.

Using Events and Mutexes Together

The next step is to describe how to use mutexes and events together, generalizing Program 8-2, where we had the following situation, which will occur over and over again. Note: This discussion applies to CRITICAL_SECTIONs and SRW locks as well as to mutexes.

• The mutex and event are both associated with the message block or other data structure.

• The mutex defines the critical code section for accessing the data structure.

• The event signals that there is a new message or some other significant change to the data structure.

• Generalizing, the mutex ensures the object’s invariants (or safety properties), and the event signals that the object has changed state (e.g., a message has been added or removed from a message buffer), possibly being put into a known state (e.g., there is at least one message in the message buffer).

• One thread (the producer in Program 8-2) locks the data structure, changes the object’s state by creating a new message, and signals the event associated with the fact that there is a new message.

• At least one other thread (the consumer in this example) waits on the event for the object to reach the desired state. The wait must occur outside the critical code region so that the producer can access the object.

• A consumer thread can also lock the mutex, test the object’s state (e.g., is there a new message in the buffer?), and avoid the event wait if the object is already in the desired state.

This general situation, where one thread changes a state variable and other threads wait for the change, occurs in numerous situations. The example here involves producers, consumers, and message passing; Programs 10-1 and 10-2 provide a different example.

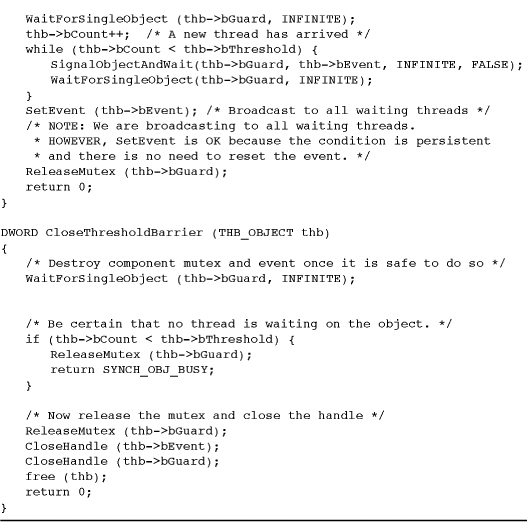

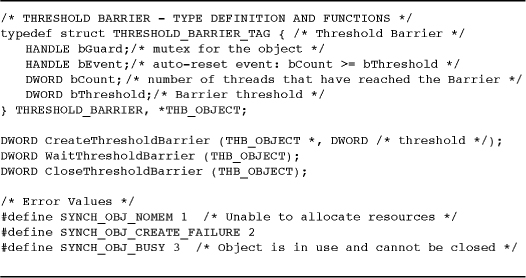

Program 10-1 SynchObj.h: Part 1—Threshold Barrier Definitions

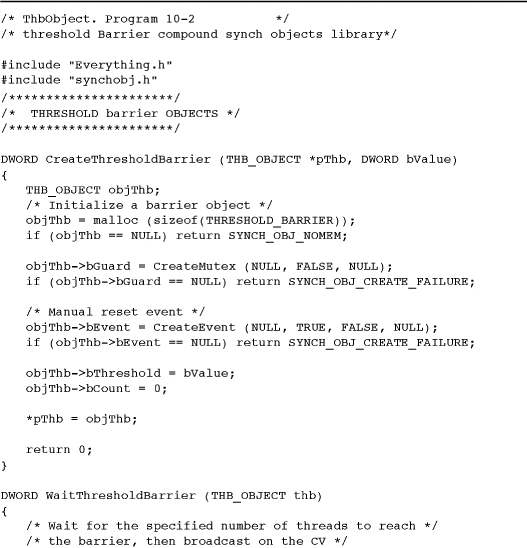

Program 10-2 ThbObject: Implementing the Threshold Barrier

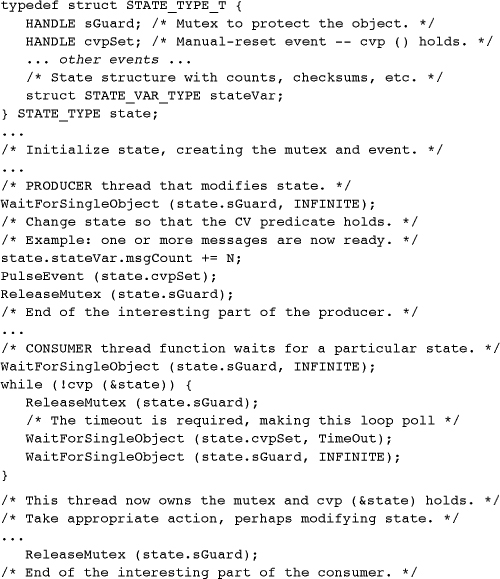

The Condition Variable Model

Now let’s combine all of this into a single code fragment that represents what we will call the condition variable model (CV model) with two variations, the signal and broadcast CV models. The first examples use the broadcast variation. The result is a program model that will occur frequently and can solve a wide variety of synchronization problems. For convenience, the example is stated in terms of a producer and a consumer.

The discussion may seem a bit abstract, but once the techniques are understood, we will be able to solve synchronization problems that would be very difficult without a good model.

The code fragment has several key elements.

• A data structure of type STATE_TYPE that contains all the data or state variables such as the messages, checksums, and counters used in Program 8-2.

• A mutex (alternatively, an SRW or CRITICAL_SECTION) and one or more events associated with, and usually a part of, the data structure.

• One or more Boolean functions to evaluate the condition variable predicates, which are the conditions (states) on which a thread might wait. Examples include “a new message is ready,” “there is available space in the buffer,” and “the queue is not empty.” A distinct event may be associated with each condition variable predicate, or one event may be used to represent simply a change of state or a combination (logical “or”) of several predicates. In the latter case, test individual predicate functions with the mutex locked to determine the actual state. If the predicate (logical expression) is simple, there is no need for a separate function.

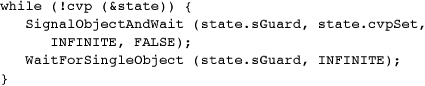

The following code segment shows a producer and consumer using these principles, with a single event and condition variable predicate (implemented with a function, cvp, that is assumed but not shown). When the producer signals that a desired state has been reached, the signal should be broadcast to all waiting consumers. For instance, the producer may have created several messages, and the state is changed by increasing the message count. In many situations, you want to release only a single thread, as discussed after the code fragment.

This code segment is designed to operate under all NT kernel versions and even Windows 9x. SignalObjectAndWait will then simplify the solution. We show this full solution, appropriate for obsolete Windows versions, because:

• The usage pattern is still common in existing programs.

• Understanding the segment will make it easier to see the usefulness of SignalObjectAndWait and NT6 condition variables.

• The usage pattern is still useful when using a CRITICAL_SECTION in place of a mutex.

NT6 condition variables will further simplify and improve the solution.

Note and caution: This example deliberately uses PulseEvent, even though many writers and some of the Microsoft documentation warn against its use (see the remarks section in the MSDN entry). The ensuing discussion and examples will justify this choice, but with an additional cautionary note in the SignalObjectAndWait section. Also, there is a WaitForSingleObject call with a finite time-out, making the loop a form of polling loop; we show later how to eliminate the time-out.

Comments on the Condition Variable Model

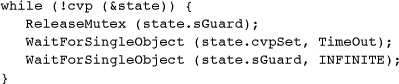

The essential feature in the code segment is the loop in the consumer code. The loop body consists of three steps: (1) unlock the mutex that was locked prior to entering the loop; (2) wait, with a finite time-out, on the event; and (3) lock the mutex again. The event wait time-out is significant, as explained later.

Pthreads, as implemented in many UNIX and other systems, combine these three steps into a single function, pthread_cond_wait, combining a mutex and a condition variable (which is similar but not identical to the Windows event). Windows NT6 condition variables do the same thing. This is the reason for the term “condition variable model.” There is also a timed version, which allows a time-out on the event wait.

Importantly, the single Pthreads function implements the first two steps (the mutex release and event wait) as an atomic operation so that no other thread can run before the calling thread waits on the event (or condition variable).

The Pthreads designers and the NT6 designers made a wise choice; the two functions (with and without a time-out) are the only ways to wait on a condition variable in Pthreads, so a condition variable must always be used with a mutex. Windows (before NT6) forces you to use two or three separate function calls, and you need to do it in just the right way to avoid problems.

Another motivation for learning the CV model, besides simplifying programs, is that it is essential if you ever need to use Pthreads or convert a Pthreads program to Windows.

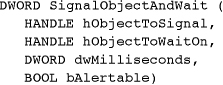

Note: Windows NT Version 4.0 introduced a new function, SignalObjectAndWait (SOAW), that performs the first two steps atomically. The later examples assume that this function is available, in keeping with the policy established in Chapter 1. Nonetheless, the CV model introduction does not use SOAW in order to motivate its later usage, and a few examples have alternative implementations on the book’s Examples file that use a CS in place of a mutex. (SOAW cannot be used with a CS.) Appendix C (Table C-6) shows that SignalObjectAndWait provides significant performance advantages.

Using the Condition Variable Model

The CV model, when implemented properly, works as follows in the producer/consumer context.

• The producer locks the mutex, changes state, pulses the event when appropriate, and unlocks the mutex. For example, the producer pulses the event when one or more messages are ready.

• The PulseEvent call should be with the mutex locked so that no other thread can modify the object, perhaps invalidating the condition variable predicate.

• The consumer tests the condition variable predicate with the mutex locked. If the predicate holds, there is no need to wait.

• If the predicate does not hold, the consumer must unlock the mutex before waiting on the event. Otherwise, no thread could ever modify the state and set the event.

• The event wait must have a time-out just in case the producer pulses the event in the interval between the mutex release (step 1) and the event wait (step 2). That is, without the finite time-out, there could be a “lost signal,” which is another example of a race condition. APCs, described later in this chapter, can also cause lost signals. The time-out value used in the producer/consumer segment is a tunable parameter. (See Appendix C for comments on optimal values.)

• The consumer always retests the predicate after the event wait. Among other things, this is necessary in case the event wait has timed out. Also, the state may have changed. For example, the producer may have produced two messages and then released three waiting consumers, so one of the consumers will test the state, find no more messages, and wait again. Finally, the retest protects against spurious wakeups that might result from a thread setting or pulsing the event without the mutex locked. There is no way to avoid this time-out and polling loop until we get to SignalObjectAndWait and the Windows NT6 condition variables.

• The consumer always owns the mutex when it leaves the loop, regardless of whether the loop body was executed.

Condition Variable Model Variations

Notice, first, that the preceding code fragment uses a manual-reset event and calls PulseEvent rather than SetEvent. Is this the correct choice, and could the event be used differently? The answer is yes to both questions.

Referring back to Table 8-1, we see that the example has the property that multiple threads will be released. This is correct in this example, where several messages are produced and there are multiple consuming threads, and we need to broadcast the change. However, if the producer creates just one message and there are multiple consuming threads, the event should be auto-reset and the producer should call SetEvent to ensure that exactly one thread is released. This variation is the “signal CV” model rather than the “broadcast CV” model. It is still essential for the released consumer thread, which will then own the mutex and can remove a message.

Of the four combinations in Table 8-1, two are useful in the CV model. Considering the other two combinations, auto-reset/PulseEvent would have the same effect as auto-reset/SetEvent (the signal CV model) because of the time-out, but the dependence on the time-out would reduce responsiveness. The manual-reset/SetEvent combination causes spurious signals (the condition variable predicate test offers protection, however), because some thread must reset the event, and there will be a race among the threads before the event is reset.

In summary:

• Auto-reset/SetEvent is the signal CV model, which releases a single waiting thread.

• Manual-reset/PulseEvent is the broadcast CV model, which releases all waiting threads.

• Pthreads and NT6 condition variables make the same distinction but do not require the finite time-out in the event wait for the broadcast model, whereas the time-out is essential in Windows because the mutex release and event wait are not performed atomically.

• This will change, however, when we introduce SignalObjectAndWait.

An Example Condition Variable Predicate

Consider the condition variable predicate:

state.stateVar.count >= K;

In this case, a consumer thread will wait until the count is sufficiently large. This shows, for example, how to implement a multiple-wait semaphore; recall that normal semaphores do not have an atomic wait for multiple units. The consumer thread would then decrement the count by K after leaving the loop but before releasing the mutex.

Notice that the broadcast CV model is appropriate in this case because a single producer may increase the count so as to satisfy several but not all of the waiting consumers.

Semaphores and the Condition Variable Model

In some cases, a semaphore would be appropriate rather than an event, and semaphores have the advantage of specifying the exact number of threads to be released. For example, if each consumer were known to consume exactly one message, the producer could call ReleaseSemaphore with the exact number of messages produced. In the more general case, however, the producer does not know how the individual consumers will modify the state variable structure, so the CV model can solve a wider class of problems.

The CV model is powerful enough to implement semaphores. As described earlier, the basic technique is to define a predicate stating that “the semaphore count is nonzero” and create a state structure containing the count and maximum value. Exercise 10–10 shows a complete solution that allows for an atomic wait for multiple units.

Using SignalObjectAndWait

The consumer loop in the preceding code segment is critical to the CV model because it waits for a state change and then tests to see if the desired state holds. The state may not hold if the event is too coarse, indicating, for example, that there was simply some state change, not necessarily the required change. Furthermore, a different consumer thread might have made some other state change, such as emptying the message buffer. The loop required two waits and a mutex release, as follows:

The time-out on the first wait (the event wait) is necessary in order to avoid missed signals and other potential problems. This code will work if you replace the mutexes with CSs.

SOAW is an important enhancement that eliminates the need for the time-out and combines the first two loop statements; that is, the mutex release and the event wait. In addition to the program simplicity benefit, performance generally improves because a system call is eliminated and there is no need to tune the wait time-out period.

This function simplifies the consumer loop, where the two handles are the mutex and event handles, respectively. There is no event wait time-out because the calling thread waits on the second handle immediately after the first handle is signaled (which, in this case, means that the mutex is released). The signal and wait are atomic so that no other thread can possibly signal the event between the time that the calling thread releases the mutex and the thread waits on the second handle. The simplified consumer loop, then, is as follows.

The final argument, bAlertable, is FALSE here but will be set to TRUE in the later sections on APCs.

In general, the two handles can be for any appropriate synchronization objects. You cannot, however, use a CRITICAL_SECTION as the signaled object; kernel objects are necessary.

Many program examples, both in the book and in the Examples file, use SignalObjectAndWait, although some alternative solutions are also included and are mentioned in the text. If you want to use a CRITICAL_SECTION instead of a mutex, use the signal/wait pair in the original code segment and be certain to have a finite time-out period on the event wait.

The section on APCs shows a different technique to signal waiting threads with the additional advantage of signaling a specific waiting thread, whereas, when using events, there is no easy way to control which thread is signaled.

PulseEvent: One More Caution

SignalObjectAndWait, used with PulseEvent, appears to implement the broadcast CV model properly, and it nearly does. The remaining problem is that the Windows executive can, under some circumstances, preempt a waiting thread (such as one waiting on SignalObjectAndWait) just as another thread calls PulseEvent, resulting in a missed signal and possibly a permanently blocked thread. This is PulseEvent’s fatal flaw and the principal reason that MSDN warns against it.

Unfortunately, it’s frequently necessary to use the CV model (e.g., you need to port a Pthreads program or you need the underlying functionality). Fortunately, there are several defenses against this flaw:

• Use a finite time-out with SOAW, treating a time-out as a spurious signal detected when the loop retests the predicate. You could also gain performance using a CS or an SRW lock rather than a mutex and then wait on the event with a finite time-out.

• Use the much faster Windows condition variables if you do not need to support NT5 (Windows XP, etc.).

• Assure that your program never uses the functions that would cause the executive to preempt a waiting thread. GetThreadContext is one such function and is very rare. However, if you are writing a library, there is no direct way to assure that the calling program respects such limitations.

• Pulse the event multiple times so that the waiting thread will eventually receive the signal. This is the approach used in Programs 10-3 and 10-4 where new messages are generated continuously.

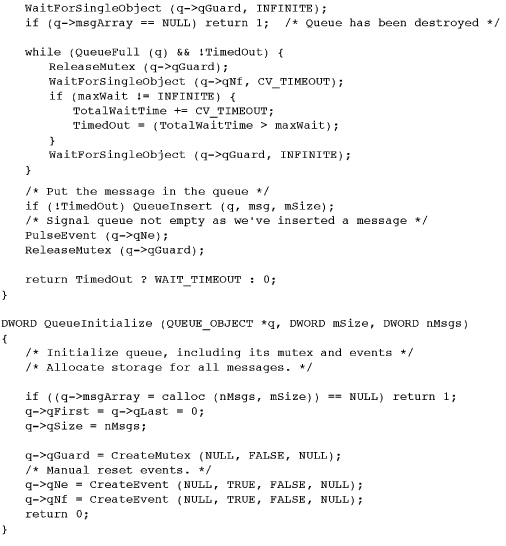

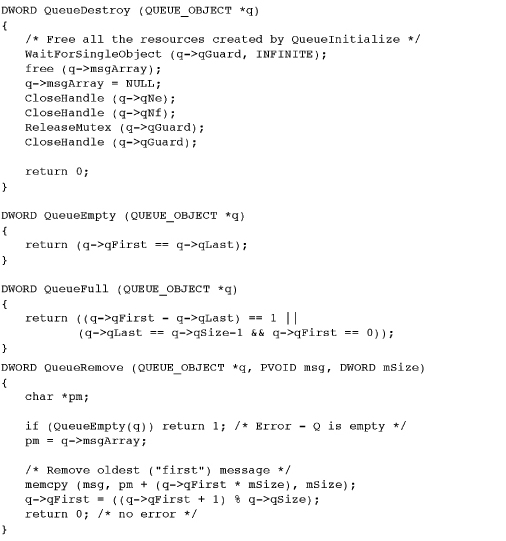

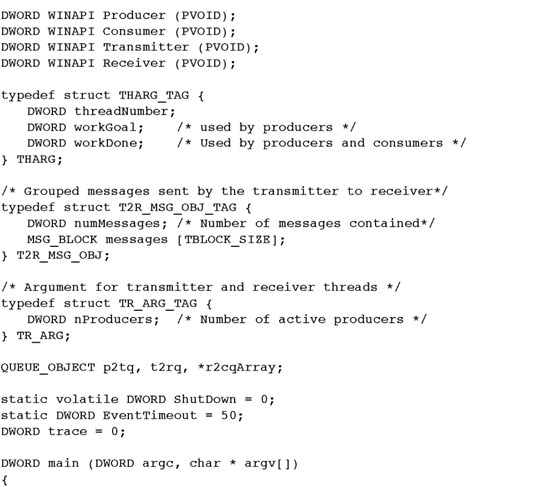

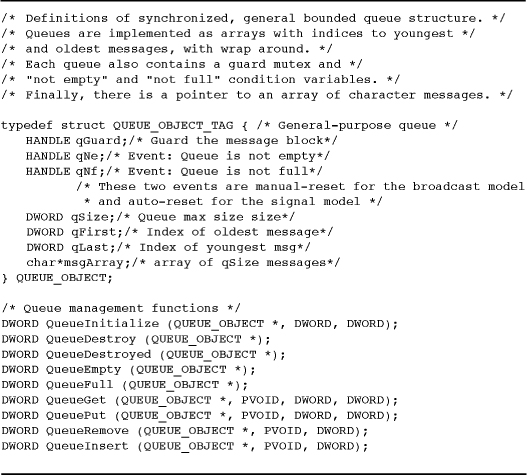

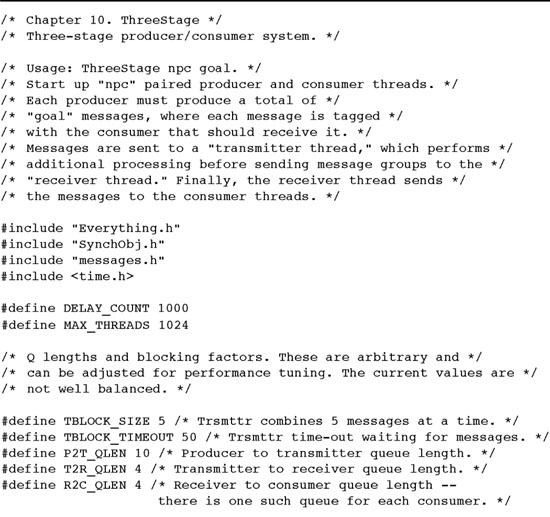

Program 10-3 SynchObj.h: Part 2—Queue Definitions

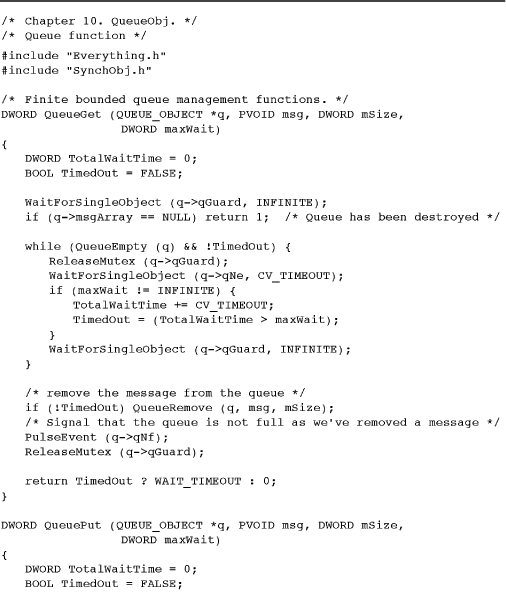

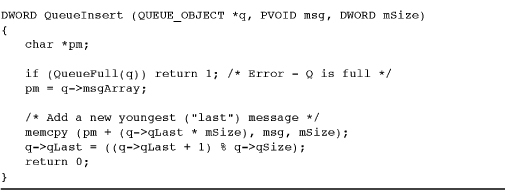

Program 10-4 QueueObj: The Queue Management Functions

• Avoid the broadcast model, which we can do in Programs 10-4 and 10-5. The signal model is sufficient if you need to signal only a single thread.

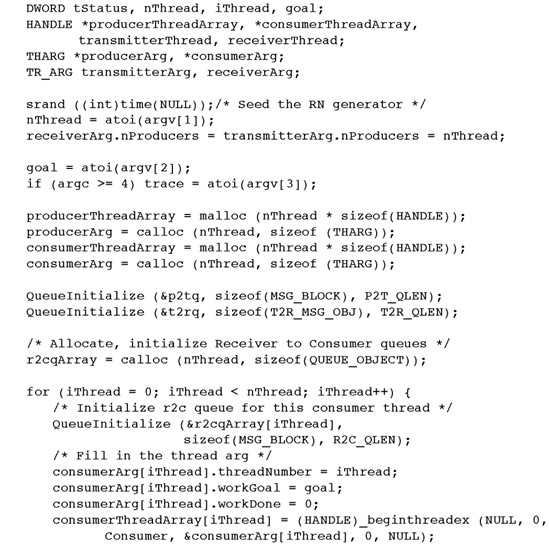

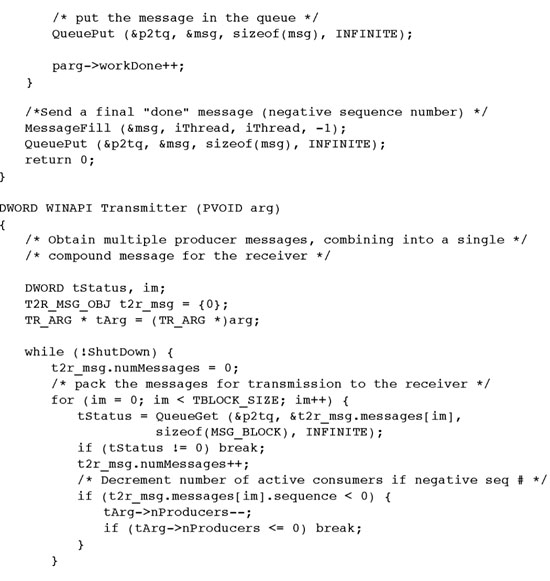

Program 10-5 ThreeStage: A Multistage Pipeline

• Program 10-4 uses PulseEvent, but comments after the program describe variations that do not require it.

Example: A Threshold Barrier Object

Suppose that you wish to have the worker threads wait until there are enough workers to form a work crew to work in parallel on a task, as in Program 7-1 (sortMT). Or, you may want to wait until all threads have finished the first phase of a parallel computation before proceeding to the next phase. Once the threshold is reached, all the workers start operation, and if any other workers arrive later, they do not wait. This problem is solvable with a threshold barrier compound object.

Programs 10-1 and 10-2 show the implementation of the three functions that support the threshold barrier compound object. Two of the functions, CreateThresholdBarrier and CloseThresholdBarrier, manage a THB_OBJECT. The threshold number of threads is a parameter to CreateThresholdBarrier.

Program 10-1 shows the appropriate part of the header file, SynchObj.h, while Program 10-2 shows the implementation of the three functions. Notice that the barrier object has a mutex, an event, a counter, and a threshold. The condition variable predicate is documented in the header file—that is, the event is to be set exactly when the count is greater than or equal to the threshold.

Program 10-2 now shows the implementation of the three functions. A test program, testTHB.c, is in the Examples file. Notice how the WaitThresholdBarrier function contains the familiar condition variable loop. Also notice that the wait function not only waits on the event but also signals the event. The previous producer/consumer example waited and signaled in separate functions.

Finally, the condition variable predicate is, in this case, persistent. Once it becomes true, it will never change, unlike the situation in other examples. This allows a further simplification in WaitThresholdBarrier. SetEvent is okay because there is no need to reset the event, although PulseEvent would also work and would adhere to the CV model. Later examples do use the CV model.

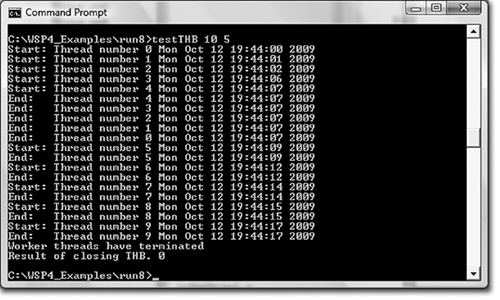

Run 10-2 shows the test program, testTHB, with command line parameters to start 10 short-lived threads and a barrier of 5. Each thread prints its start and stop time, and testTHB starts new threads at random intervals averaging one thread every 1.5 seconds (approximately). The first five threads end at about the same time immediately after the fifth thread arrives. Later threads end shortly after they start.

Run 10-2 testTHB: Testing the Threshold Barrier Functions

Comments on the Threshold Barrier Implementation

The threshold barrier object implemented here is limited for simplicity. In general, we would want to emulate Windows objects more closely by:

• Allowing the object to have security attributes (Chapter 15)

• Allowing the object to be named

• Permitting multiple objects on the object and not destroying it until the reference count is 0

• Allowing the object to be shared between processes

The Examples file contains a full implementation of one such object, a multiple wait semaphore, and the techniques used there can then be used for any of the objects in this chapter.

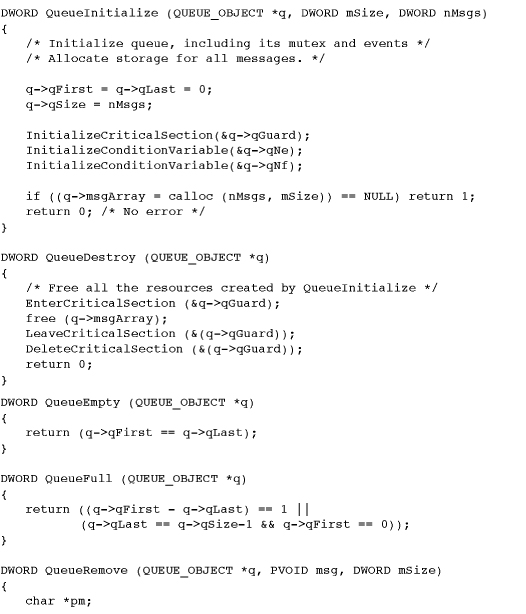

A Queue Object

So far, we have associated a single event with each mutex, but in general there might be more than one condition variable predicate. For example, in implementing a first in, first out (FIFO) queue, a thread that removes an element from the queue needs to wait on an event signifying that the queue is not empty, while a thread placing an element in the queue must wait until the queue is not full. The solution is to provide two events, one for each condition. Notice, however, that there is a single mutex.

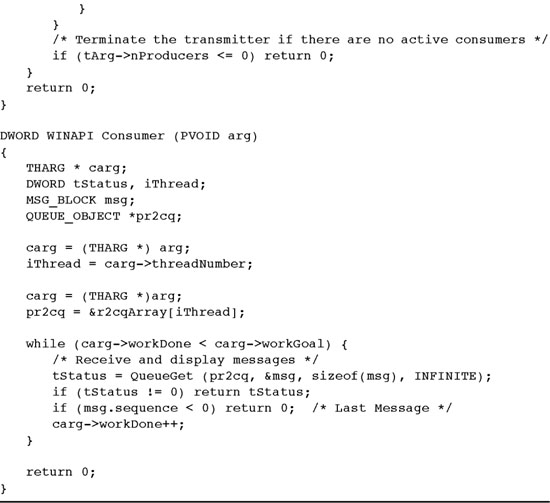

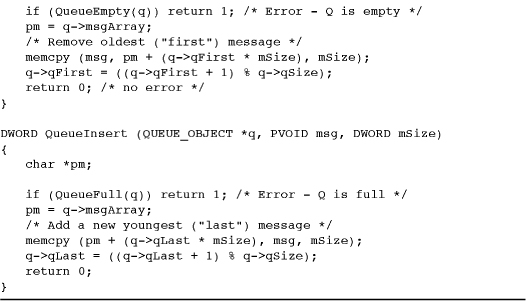

Program 10-3 shows the definitions of a queue object and its functions. Programs 10-4 and 10-5 show the queue functions and a program that uses them.

Program 10-4 shows the functions, such as QueueInitialize and QueueGet, that are defined at the end of Program 10-3. Notice that QueueGet and QueuePut provide synchronized access, while QueueRemove and QueueInsert, which the first two functions call, are not themselves synchronized and could be used in a single-threaded program. The first two functions provide for a time-out, so the normal condition variable model is extended slightly. The time-out parameter is used when the mutex guard is replaced with a CRITICAL_SECTION.

QueueEmpty and QueueFull are two other essential functions used to implement condition variable predicates.

This implementation uses PulseEvent and manual-reset events (the broadcast model) so that multiple threads are notified when the queue is not empty or not full.

A nice feature of the implementation is the symmetry of the QueueGet and QueuePut functions. Note, for instance, how they use the empty and full predicates and how they use the events. This simplicity is not only pleasing in its own right, but it also has the very practical benefit of making the code easier to write, understand, and maintain. The condition variable model enables this simplicity and its benefits.

Finally, C++ programmers will notice that a synchronized queue class could be constructed from this code; Exercise 10–7 suggests doing this.

Example: Using Queues in a Multistage Pipeline

The boss/worker model, along with its variations, is one popular multithreaded programming model, and Program 8-2 is a simple producer/consumer model, a special case of the more general pipeline model.

Another important special case consists of a single boss thread that produces work items for a limited number of worker threads, placing the work items in a queue. This message-passing technique can be helpful when creating a scalable server that has a large number (perhaps thousands) of clients and it is not feasible to have a worker thread for each client. Chapter 14 discusses the scalable server problem in the context of I/O completion ports.

In the pipeline model, each thread, or group of threads, does some work on work items, such as messages, and passes the work items on to other threads for additional processing. A manufacturing assembly line is analogous to a thread pipeline. Queues are an ideal mechanism for pipeline implementations.

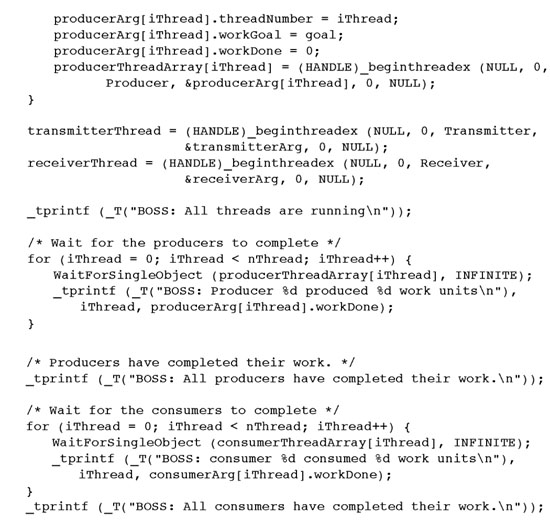

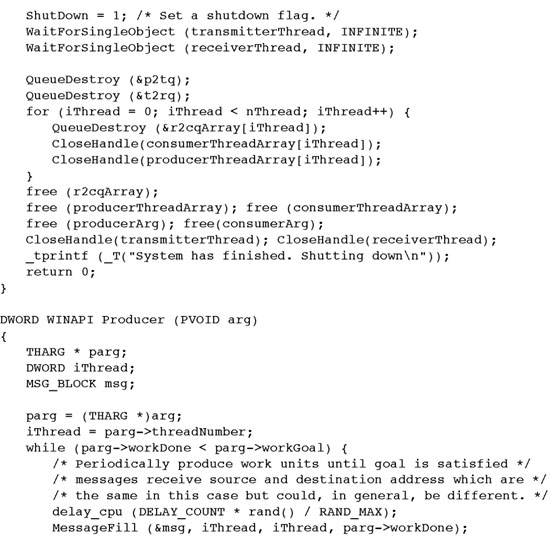

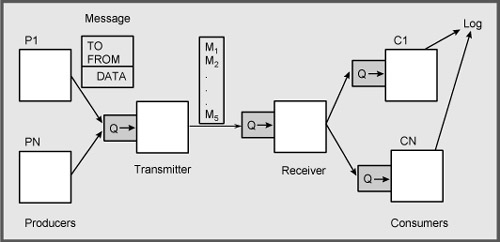

Program 10-5, ThreeStage, creates multiple production and consumption stages, and each stage maintains a queue of work to perform. Each queue has a bounded, finite length. There are three pipeline stages in total connecting the four work stages. The program structure is as follows.

• Producers create checksummed unit messages periodically, using the same message creation function as in Program 8-2, except that each message has a destination field indicating which consumer thread is to receive the message; each producer communicates with a single consumer. The number of producer/consumer pairs is a command line parameter. The producer then sends the unit message to the transmitter thread by placing the message in the transmission queue. If the queue is full, the producer waits until the queue state changes.

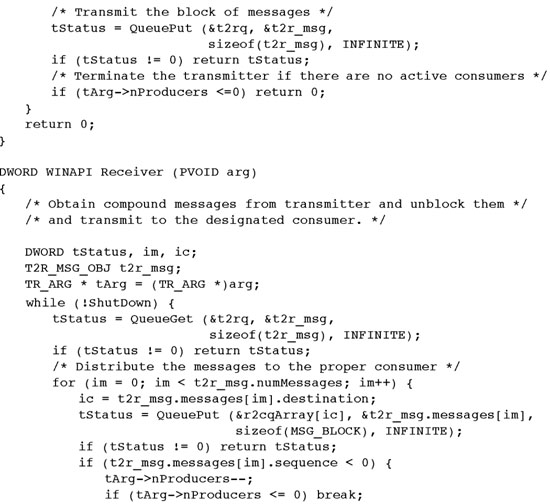

• The transmitter thread gathers all the available unit messages (but, arbitrarily, no more than five at a time) and creates a transmission message that contains a header block with the number of unit messages. The transmitter then puts each transmission message in the receiver queue, blocking if the queue is full. The transmitter and receiver might, in general, communicate over a network connection. The 5:1 blocking factor is easy to adjust.

• The receiver thread processes the unit messages in each transmission message, putting each unit message in the appropriate consumer queue if the queue is not full.

• Each consumer thread receives unit messages as they are available and puts the message in a log file.

Figure 10-1 shows the system. Notice how it models networking communication where messages between several sender/receiver pairs are combined and transmitted over a shared facility.

Figure 10-1 Multistage Pipeline

Program 10-5 shows the implementation, which uses the queue functions in Program 10-4. The message generation and display functions are not shown; they were first seen in Program 8-1. The message blocks are augmented, however, to contain source and destination fields along with the checksum and data.

One of the complexities in Program 10-5 is forcing all the threads to shut down in an orderly way without resorting to the very undesirable TerminateThread function, as was done in Edition 3. The solution is:

• Producer threads have an argument with the work goal, and the threads terminate after producing the required number of messages followed by a final “end message” with a negative sequence number.

• The consumer threads also have work goals, and they also look for messages with a negative sequence number in case the consumer goal does not match the producer goal.

• The transmitter and receiver threads know the number of consumers and can decrement the number of active consumers upon processing a message with a negative sequence. The threads terminate when the count reaches 0.

• The transmitter and receiver also test a global ShutDown flag. However, it is impossible to test this flag while waiting for a message. A later solution, ThreeStageCancel, will use the flag.

Queue Management Function Comments and Performance

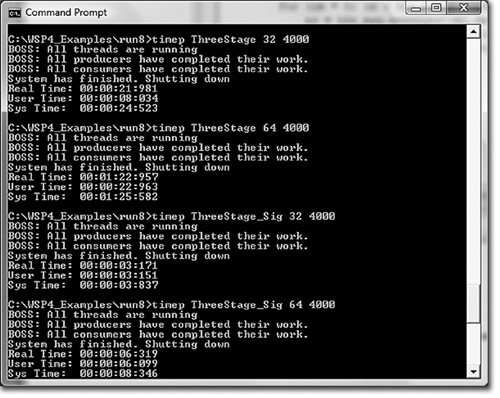

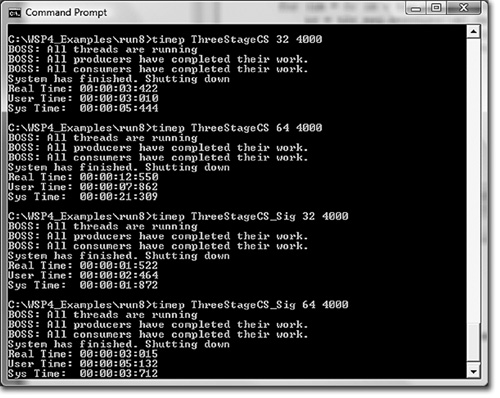

Program 10-5 and the queue management functions can be implemented in several different ways, and the version shown here is actually the slowest and scales poorly as the thread count increases. The following comments that refer to performance are based on that data. The Examples file contains several variations, and subsequent run screen shots will show the operation and performance.

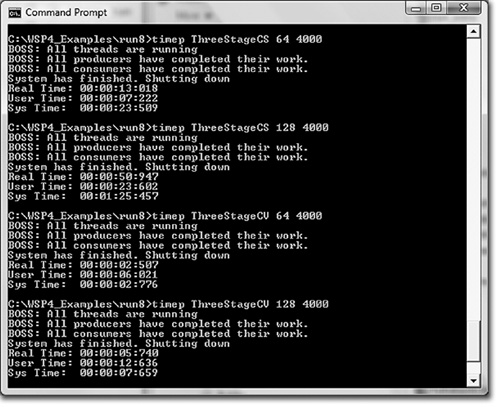

• ThreeStage, Program 10-5, uses the broadcast model (manual-reset/PulseEvent) to allow for the general case in which multiple messages may be requested or created by a single thread. This is the only version subject to the risk of a missed PulseEvent signal.

• ThreeStageCS uses a CRITICAL_SECTION, rather than a mutex, to protect the queue object. However, you must use an EnterCriticalSection followed by an event wait rather than SignalObjectAndWait with a finite time-out. Two files provided with the Examples, QueueObjCS.c and QueueObjCS_Sig.c, implement the queue management functions.

• ThreeStage_noSOAW does not use SignalObjectAndWait; instead it uses successive mutex and event waits with a time-out. This corresponds to the code fragment at the beginning of the chapter.

• ThreeStage_Sig uses the signal model (auto-reset/SetEvent) with SignalObjectAndWait and will work if only one message is produced at a time, as is the case in this example. There are significant performance advantages because only a single thread is released to test the predicate.

• ThreeStageCS_Sig is like ThreeStage_Sig, except that it uses a CS in place of a mutex. It combines the features of ThreeStageCS and ThreeStage_Sig.

• ThreeStageSig_noSOAW combines the ThreeStage_noSOAW and ThreeStage_Sig features.

• ThreeStageCV uses Windows NT6 condition variables, which are described later in the chapter.

Appendix C also shows the comparative performance of these implementations; the run screen shots here show some initial results.

Run 10-5a compares ThreeStage and ThreeStage_Sig with 32 and 64 producer/consumer pairs. Both use a mutex, but ThreeStage, which broadcasts, performs poorly and does not scale as we go from 32 to 64 threads.

Run 10-5a ThreeStage[_Sig]: Mutex Broadcast and Signaling

Run 10-5b makes the same comparison, but with a CRITICAL_SECTION and a time-out in the consumer loop. CS performance is much better, as expected, but, again, the broadcast model does not scale well with the number of producer/consumer pairs.

Run 10-5b ThreeStageCS[_Sig]: CS Broadcast and Signaling

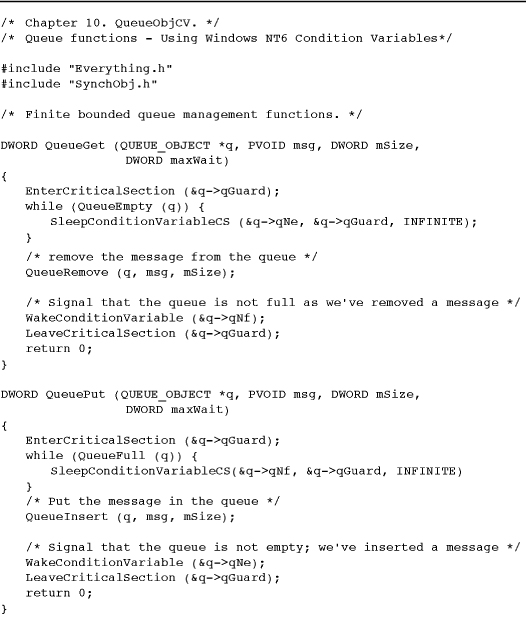

Windows NT6 Condition Variables

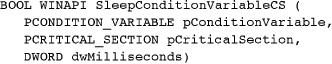

Windows Vista and 2008 Server support condition variable objects whose behavior is similar to Pthreads condition variables and the CV model we’ve used in this chapter. Furthermore, Windows condition variables (WCV, a nonstandard but convenient abbreviation) use CRITICAL_SECTION and SRW lock objects (Chapter 8) rather than mutexes, and the WCV objects are also user, not kernel, objects, providing additional performance benefits. The only significant limitations are:

• Condition variables cannot be shared between processes the way you can share named mutexes and events.

• There is nothing comparable to SignalObjectAndWait’s alertable state (see the upcoming “Asynchronous Procedure Calls” section), so you cannot cancel threads waiting on condition variables.

First, the type for a WCV object is CONDITION_VARIABLE. Initialize WCVs just as you would a CRITICAL_SECTION with the InitializeConditionVariable function. There is no DeleteConditionVariable function analogous to DeleteCriticalSection for the same reason that there is no delete function for SRW locks.

Use SleepConditionVariableCS with a CRITICAL_SECTION to wait for a signal to a WCV. Be sure to initialize both the CS and the WCV before their first use. There is a time-out, and the function looks similar to SignalObjectAndWait, except there is no alertable flag.

SleepConditionVariableSRW is an alternative, using SRW locks. The parameters are the same as for SleepConditionVariableCS, except there is an additional parameter to indicate whether the SRW lock is in shared or exclusive mode.

Signal, or “wake up,” a condition variable with WakeConditionVariable (corresponding to the “signal” model) and WakeAllConditionVariable (corresponding to the “broadcast” model).

Revising QueueObject (Program 10-4) is simple. First, modify SynchObj.h (Program 10-3) by replacing the three HANDLE items with CRITICAL_SECTION (for qGuard) and CONDITION_VARIABLE (for qNf and qNe). Then, QueueObjectCV is simpler, as there is no need for the additional wait after the SOAW call, as Program 10-6 shows. Note that there is no need to modify utility functions such as QueueEmpty and QueueRemove.

Program 10-6 QueueObjCV: The Queue Management Functions

Program 10-6 implements the signal, rather than the broadcast, version. It also uses a CS, but the Examples file version uses an SRW lock, so there are illustrations of both techniques. MSDN’s example code (search for “Using Condition Variables”) is very similar, also using queues with “not empty” and “not full” predicates.

The modified solution, in the Examples file, is ThreeStageCV, and it does provide the anticipated performance improvements relative to ThreeStageCS (the CRITICAL_SECTION solution), as shown in Run 10-6.

Run 10-6 ThreeStageCV: Condition Variable and CS Performance

Asynchronous Procedure Calls

A complexity in ThreeStage (Program 10-5), as it is currently written, is the way that the transmitter and receiver threads test the message sequence numbers and track the number of active consumers. This solution assumes that the transmitter and receiver threads know the number of consumers and understand the message structure, which may not always be the case. In general, it would be convenient if the boss thread were able to cancel the transmitter and receiver threads directly.

Another open problem is that there is no general method (other than TerminateThread) to signal, or cause an action in, a specific thread. Events signal one thread waiting on an auto-reset event or all the threads waiting on a manual-reset event, but there is no way to assure that the signal goes to a particular thread. The solution used so far is simply to wake up all the waiting threads so they can individually determine whether it is time to proceed. An alternative solution, occasionally used, is to assign events to specific threads so that the signaling thread can determine which event to pulse or set.

APCs provide a solution to both of these problems. The sequence of actions is as follows, where the boss thread needs to control a cooperating worker or target thread.

• The boss thread specifies an APC callback routine to be executed by the target thread by queuing the APC to the target. More than one APC can be queued to a specific thread.

• The target thread enters an alertable wait state indicating that the thread can safely execute the APC. The order of these first two steps is irrelevant, so there is no concern here with race conditions.

• A thread in an alertable wait state will execute all queued APCs, one at a time.

• An APC can carry out any appropriate action, such as freeing resources or raising an exception. In this way, the boss thread can cause an exception to occur in the target, although the exception will not occur until the target has entered an alertable state.

APC execution is asynchronous in the sense that a boss thread can queue an APC to a target at any time, but the execution is synchronous in the sense that it can occur only when the target thread allows it to occur by entering an alertable wait state. Also notice that APCs give a limited sort of thread pool (see Chapter 9); the target thread is the “pool,” and the queued functions are the callback functions.

Alertable wait states appear once more in Chapter 14, which covers asynchronous I/O.

The following sections describe the required functions and illustrate their use with another variation of the ThreeStage program. In the Examples file, the source file is ThreeStageCancel.c, and the project to build this version is ThreeStageCancel.

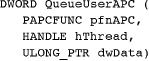

Queuing Asynchronous Procedure Calls

One thread (the boss) queues an APC to a target thread using QueueUserAPC.

pfnAPC is a pointer to the actual function that the target thread will execute. hThread is the handle of the target thread. dwData is a pointer-sized argument value that will be passed to the APC function when it is executed.

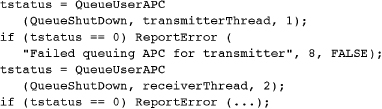

ThreeStageCancel.c, in the main function (compare to Program 10-5), uses QueueUserAPC calls to cancel the transmitter and receiver threads after the consumer and producer threads terminate, as follows:

The QueueUserAPC return value is nonzero for success or zero for failure. GetLastError(), however, does not return a useful value, so the ReportError call does not request an error message (the last argument is FALSE).

QueueShutDown is an additional queue function, where the argument specifies shutting down the get queue (value 1) or the put queue (value 2). The function also sets flags that QueueGet and QueuePut test, so an APC queued by some other thread will not inadvertently shut down the queue.

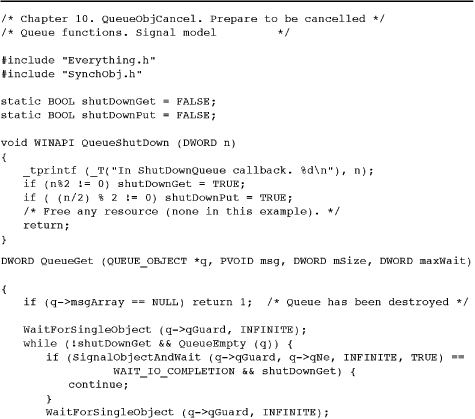

Program 10-7 shows QueueShutDown working with modified versions of QueueGet and QueuePut (Program 10-4). As a result, the queue functions return non-zero values, causing the transmitter and receiver threads to unblock and exit.

Program 10-7 QueueObjCancel: Queue Functions Modified for Cancellation

Alertable Wait States

The last SignalObjectAndWait parameter, bAlertable, has been FALSE in previous examples. By using TRUE instead, we indicate that the wait is a so-called alertable wait, and the thread enters an alertable wait state. The behavior is as follows.

• If one or more APCs are queued to the thread (as a QueueUserAPC target thread) before either hObjectToWaitOn (normally an event) is signaled or the time-out expires, then the APCs are executed (there is no guaranteed order) and SignalObjectAndWait returns with a return value of WAIT_IO_COMPLETION.

• If an APC is never queued, then SignalObjectAndWait behaves in the normal way; that is, it waits for the object to be signaled or the time-out period to expire.

Alterable wait states will be used again with asynchronous I/O (Chapter 14); the name WAIT_IO_COMPLETION comes from this usage. A thread can also enter an alertable wait state with other alertable wait functions such as WaitForSingleObjectEx, WaitForMultipleObjectsEx, and SleepEx, and these functions will also be useful when performing asynchronous I/O.

We can now modify QueueGet and QueuePut (see Program 10-4) to perform an orderly shutdown after an APC is performed, even though the APC function, QueueShutDown, does not do anything other than print a message and return. All that is required is to enter an alertable wait state and to test the SignalObjectAndWait return value, as shown by the following modified queue functions (see QueueObjCancel.c in the Examples file).

This version uses the signal CV model with an auto-reset event and SetEvent; there is no need to be concerned with the PulseEvent missed signal issues.

The APC routine could be either ShutDownReceiver or ShutDownTransmitter, as the receiver and transmitter threads use both QueueGet and QueuePut. If it were necessary for the shutdown functions to know which thread they are executed from, use different APC argument values for the third QueueUserAPC arguments in the code segment preceding Program 10-7.

The thread exit code will be WAIT_TIMEOUT to maintain consistency with previous versions. A DllMain function can perform additional cleanup in a DllMain function if appropriate.

An alternative to testing the return value for WAIT_IO_COMPLETION would be for the shutdown functions to raise an exception, place the QueuePut body in a try block, and add an exception handler.

APCs and Missed Signals

A kernel mode APC (used in asynchronous I/O) can momentarily move a waiting thread out of its wait state, potentially causing a missed PulseEvent signal. Some documentation warns against PulseEvent for this reason, as discussed earlier in the “PulseEvent: Another Caution” section. Should there be a situation where a missed signal could occur, include a finite time-out period on the appropriate wait calls, or use Windows NT6 condition variables. Better yet, avoid PulseEvent.

Safe Thread Cancellation

The preceding example and discussion show how we can safely cancel a target thread that uses alertable wait states. Such cancellation is sometimes called synchronous cancellation, despite the use of APCs, because the cancellation, which is caused by the boss’s QueueUserAPC call, can only take effect when the target thread permits cancellation by entering a safe alertable wait state.

Synchronous cancellation requires the target thread to cooperate and allow itself to be canceled from time to time. Event waits are a natural place to enter an alertable wait state because, as a system shuts down, the event may never be signaled again. Mutex waits could also be alertable to allow thread waiting on a resource that may not become available again. For example, a boss thread could break deadlocks with this technique.

Asynchronous thread cancellation might appear useful to signal a compute-bound thread that seldom, if ever, waits for I/O or events. Windows does not allow asynchronous cancellation, and it would be a risky operation. You do not know the state of the thread to be canceled and whether it owns locks or other resources. There are techniques, using processor-specific code, to interrupt a specified thread, but the techniques not only are risky but are nonportable.

Pthreads for Application Portability

Pthreads have been mentioned several times as the alternative threading and synchronization model available with UNIX, Linux, and other non-Windows systems. There is an open source Windows Pthreads library, and with this library, you can write portable threaded applications that can run on a wide variety of systems. The Examples file discusses this subject in more detail. The ThreeStagePthreads project uses the open source library and points to the download site.

Thread Stacks and the Number of Threads

Two more cautions, which are related, are in order. First, give some thought to the thread stack size, where 1MB is the default. This should be sufficient in most cases, but if there is any doubt, determine the maximum amount of stack space each thread will require, including the requirements of any library functions or recursive functions that the thread calls. A stack overflow will corrupt other memory or cause an exception.

Second, a large number of threads with large stacks will require large amounts of virtual memory for the process and could affect paging behavior and the paging file. For example, using 1,000 threads would not be unreasonable in some of the examples in this and later chapters. Allowing 1MB per thread stack results in 1GB of virtual address space. Preventive measures include careful stack sizing, thread pools, and multiplexing operations within a single thread. Furthermore, parallelism frameworks (previous chapter) generally assure that there are bounds on the total stack size and task-switching times.

Hints for Designing, Debugging, and Testing

At the risk of presenting advice that is contrary to that given in many other books and technical articles, which stress testing and little else, my personal advice is to balance your efforts so that you pay attention to design, implementation, and use of familiar programming models. The best debugging technique is not to create the bugs in the first place; this advice, of course, is easier to give than to follow. Nonetheless, when defects do occur, as they will, code inspection, balanced with debugging, often is most effective in finding and fixing the defects’ root causes.

Overdependence on testing is not advisable because many serious defects will elude the most extensive and expensive testing. Testing can only reveal defects; it cannot prove that they do not exist, and testing shows only defect symptoms, not root causes. As a personal example, I ran a version of a multiple semaphore wait function that used the CV model without the finite time-out on the event variable wait. The defect, which could cause a thread to block indefinitely, did not show up in over a year of use; eventually, however, something would have failed. Simple code inspection and knowledge of the condition variable model revealed the error.

Debugging is also problematic because debuggers change timing behavior, masking the very race conditions that you wish to expose. For example, debugging is unlikely to find a problem with an incorrect choice of event type (auto-reset or manual-reset) and SetEvent/PulseEvent. You have to think carefully about what you wish to achieve.

Having said all that, testing on a wide variety of platforms, which must include multiprocessor systems, is an essential part of any multithreaded software development project.

Avoiding Incorrect Code

Every bug you don’t put in your code in the first place is one more bug you won’t find in testing or production. Here are some hints, most of which are taken, although rephrased, from Butenhof’s Programming with POSIX Threads (PWPT).

• Avoid relying on thread inertia. Threads are asynchronous, but we often assume, for example, that a parent thread will continue running after creating one or more child threads. The assumption is that the parent’s “inertia” will keep it running before the children run. This assumption is especially dangerous on a multiprocessor system, but it can also lead to problems on single-processor systems.

• Never bet on a thread race. Nearly anything can happen in terms of thread scheduling. Your program has to assume that any ready thread can start running at any time and that any running thread can be preempted at any time. “No ordering exists between threads unless you cause ordering” (PWPT, p. 294).

• Scheduling is not the same as synchronization. Scheduling policy and priorities cannot ensure proper synchronization. Use synchronization objects instead.

• Sequence races can occur even when you use locks to protect shared data. Just because data is protected, there is no assurance as to the order in which different threads will access the shared data. For example, if one thread adds money to a bank account and another makes a withdrawal, there is no assurance, using a lock alone, that the deposit will be made before the withdrawal. Exercise 10–14 shows how to control thread execution order.

• Cooperate to avoid deadlocks. You need a well-understood lock hierarchy, used by all threads, to ensure that deadlocks will not occur.

• Never share events between predicates. Each event used in a condition variable implementation should be associated with a distinct predicate. Furthermore, an event should always be used with the same mutex.

• Beware of sharing stacks and related memory corrupters. Always remember that when you return from a function or when a thread terminates, memory that is local to the function or thread is no longer valid. Memory on a thread’s stack can be used by other threads, but you have to be sure that the first thread continues to exist. This behavior is not unique to thread functions, of course.

• Be sure to use the volatile storage modifier. Whenever a shared variable can be changed in one thread and accessed in another, the variable should be volatile to ensure that each thread stores and fetches the variable to and from memory, rather than assuming that the variable is held in a register that is specific to the thread. However, do not overuse volatile; any function call or return will assure that registers are stored; furthermore, every synchronization call will erect a memory barrier.

• Use memory barriers so that processors have coherent memory views (see Chapter 8 and Figure 8-2). volatile is not sufficient. Memory barriers assure that memory accesses issued by the processors are visible in a particular order.

Here are some additional guidelines and rules of thumb that can be helpful.

• Use the condition variable model properly, being certain not to use two distinct locks with the same event. Understand the condition variable model on which you depend. Be certain that the invariant holds before waiting on a condition variable.

• Understand your invariants and condition variable predicates, even if they are stated only informally. Be certain that the invariant always holds outside the critical code section.

• Keep it simple. Multithreaded programming is complex enough without the burden of additional complex, poorly understood thread models and logic. If a program becomes overly complex, assess whether the complexity is really necessary or is the result of poor design. Careful use of standard threading models can simplify your program and make it easier to understand, and lack of a good model may be a symptom of a poorly designed program.

• Test on both single-processor and multiprocessor systems and on systems with different clock rates, cache architectures, and other characteristics. Some defects will never, or rarely, show up on a single-processor system but will occur immediately on a multiprocessor system, and conversely. Likewise, a variety of system characteristics helps ensure that a defective program has more opportunity to fail.

• Testing is necessary but not sufficient to ensure correct behavior. There have been a number of examples of programs, known to be defective, that seldom fail in routine or even extensive tests.

• Be humble. After all these precautions, bugs will still occur. This is true even with single-threaded programs; threads simply give us more, different, and very interesting ways to cause problems. This adage has been proved many times in preparing this book, where several reviewers and I found bugs (not always subtle bugs, either) in the example programs.

Beyond the Windows API

We have intentionally limited coverage to the Windows API. Microsoft does, however, provide additional access to kernel objects, such as threads. For example, the .NET ThreadPool class, accessible through C++, C#, and other languages, allows you to create a pool of threads and to queue work items to the threads (the ThreadPool method is QueueUserWorkItem).

Microsoft also implements the Microsoft Message Queuing (MSMQ) service, which provides messaging services between networked systems. The examples in this chapter should help show the value of a general-purpose message queuing system. MSMQ is documented in MSDN.

Summary

Multithreaded program development is much simpler if you use well-understood and familiar programming models and techniques. This chapter has shown the utility of the condition variable model and has solved several relatively complex but important programming problems. APCs allow one thread to signal and cause actions in another thread, which allows thread cancellation so that all threads in a system can shut down properly.

Synchronization and thread management are complex because there are multiple ways to solve a given problem, and the different techniques involve complexity and performance trade-offs. The three-stage pipeline example was implemented several different ways in order to illustrate the options.

Use of careful program design and implementation is the best way to improve program quality. Overdependence on testing and debugging, without attention to detail, can lead to serious problems that may be very difficult to detect and fix.

Looking Ahead

Chapter 11 introduces interprocess communication using Windows proprietary anonymous and named pipes. The named pipe example programs show a multithreaded server that can process requests from multiple networked clients. Chapter 12 then converts the example to sockets, which are an industry standard and allow interoperability with Linux, UNIX, and other systems.

Additional Reading

David Butenhof’s Programming with POSIX Threads was the source of much of the information and programming guidelines at the end of the chapter. The threshold barrier solution, Programs 10-1 and 10-2, was adapted from Butenhof as well.

“Strategies for Implementing POSIX Condition Variables in Win32,” by Douglas Schmidt and Irfan Pyarali (posted at http://www.cs.wustl.edu/~schmidt/win32-cv-1.html), discusses Windows event limitations along with condition variables emulation, thoroughly analyzing and evaluating several approaches. Reading this paper will increase your appreciation of the new functions. Another paper by the same authors (http://www.cs.wustl.edu/~schmidt/win32-cv-2.html) builds object-oriented wrappers around Windows synchronization objects to achieve a platform-independent synchronization interface. The open source Pthreads implementation, which is based on the Schmidt and Pyarali work, is available at http://sources.redhat.com/pthreads-win32/.

Exercises

10–1. Revise Program 10-1 so that it does not use the SignalObjectAndWait function.

10–2. Modify eventPC (Program 8-2) so that there can be multiple consumers and so that it uses the condition variable model. Which event type is appropriate?

10–3. Change the logic in Program 10-2 so that the event is signaled only once.

10–4. Replace the mutex in the queue object used in Program 10-2 with a CS. What are the effects on performance and throughput? The solution is in the Examples file, and Appendix C contains experimental data.

10–5. Program 10-4 uses the broadcast CV model to indicate when the queue is either not empty or not full. Would the signal CV model work? Would the signal model even be preferable in any way? Appendix C contains experimental data.

10–6. Experiment with the queue lengths and the transmitter-receiver blocking factor in Program 10-5 to determine the effects on performance, throughput, and CPU load.

10–7. For C++ programmers: The code in Programs 10-3 and 10-4 could be used to create a synchronized queue class in C++; create this class and modify Program 10-5 to test it. Which of the functions should be public and which should be private?

10–8. Study the performance behavior of Program 10-5 if CRITICAL_SECTIONs are used instead of mutexes.

10–9. Improve Program 10-5 so that it is not necessary to terminate the transmitter and receiver threads. The threads should shut themselves down.

10–10. The Examples file contains MultiSem.c, which implements a multiple-wait semaphore modeled after the Windows objects (they can be named, secured, and process shared, and there are two wait models), and TestMultiSem.c is a test program. Build and test this program. How does it use the CV model? Is performance improved by using a CRITICAL_SECTION or Windows condition variable? What are the invariants and condition variable predicates?

10–11. Illustrate the various guidelines at the end of this chapter in terms of bugs you have encountered or in the defective versions of the programs provided in the Examples file.

10–12. Read “Strategies for Implementing POSIX Condition Variables in Win32” by Schmidt and Pyarali (see the Additional Reading section). Apply their fairness, correctness, serialization, and other analyses to the CV models (called “idioms” in their paper) in this chapter. Notice that this chapter does not directly emulate condition variables; rather, it tackles the easier problem of emulating normal condition variable usage, whereas Schmidt and Pyarali emulate condition variables used in an arbitrary context.

10–13. Convert one of Chapter 9’s statsXX programs to create a thread pool using APCs.

10–14. Two projects in the Examples file, batons and batonsMultipleEvents, show alternative solutions to the problem of serializing thread execution. The code comments give background and acknowledgments. The second solution associates a unique event with each thread so that specific threads can be signaled. The implementation uses C++ in order to take advantage of the C++ Standard Template Library (STL). Compare and contrast these two solutions, and use the second as a means to become familiar with the STL.

10–15. Perform tests to compare NT6 condition variable performance with the other ThreeStage implementations.

10–16. Modify QueueObjectCV.c (which implements the message queue management functions with condition variables) so that it uses SRW (slim reader/writer) locks. Test with ThreeStage.c and compare performance with the original implementation. Further modify the implementation to use thread pooling.