Operation

This chapter provides information about how to operate and configure the IBM TS7700 Virtualization Engine by using the Management Interface (MI). The following topics are covered:

•IBM TS7700 Virtualization Engine MI

•Basic operations

•Tape cartridge management

•Managing logical volumes

•Recovery scenarios

This chapter also includes information about this topic:

•TS3500 Tape Library Specialist

For general guidance about how to operate the IBM TS3500 tape library, see the following IBM Redbooks publication:

IBM TS3500 Tape Library with System z Attachment A Practical Guide to Enterprise Tape Drives and TS3500 Tape Automation, SG24-6789

8.1 User interfaces

To successfully operate your TS7700 Virtualization Engine, you need to understand its concepts and components. This chapter combines the components and functions of the TS7700 Virtualization Engine into two groups:

•The logical view

•The physical view

Each component and each function belongs to only one view.

The logical view is named the host view. From the host allocation point of view, there is only one library, called the composite library. Before R3.2 of the Licensed Internal Code (LIC), a composite library could have up to 1536 virtual addresses for tape mounts in a six-cluster grid (256 devices, or 16 logical control units, per cluster).

R3.2 introduces support for 496 devices per cluster (available with feature code 5275), for a total of 2976 virtual tape devices in a grid of six fully configured clusters (z/OS also needs an authorized program analysis report (APAR) to grow from the previous limit of 2048 to 4096 devices per grid or composite library). Read more about this in Chapter 2, “Architecture, components, and functional characteristics” on page 13. The logical view includes virtual volumes and virtual tape drives.
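The device counts above follow from simple multiplication. The following Python sketch checks them; it assumes, from the "256 devices or 16 logical control units" figure, that each logical control unit (LCU) holds 16 devices. The function names are illustrative only.

```python
# Devices are grouped in logical control units (LCUs) of 16 devices each,
# as implied by "256 devices or 16 logical control units per cluster".
DEVICES_PER_LCU = 16
CLUSTERS_IN_GRID = 6  # fully configured six-cluster grid, as in the text


def lcus_per_cluster(devices_per_cluster: int) -> int:
    """Number of 16-device LCUs needed for a cluster's virtual devices."""
    if devices_per_cluster % DEVICES_PER_LCU:
        raise ValueError("device count must be a multiple of 16")
    return devices_per_cluster // DEVICES_PER_LCU


def grid_devices(devices_per_cluster: int, clusters: int = CLUSTERS_IN_GRID) -> int:
    """Total virtual tape devices the host sees for the composite library."""
    return devices_per_cluster * clusters


for label, per_cluster in (("before R3.2", 256), ("R3.2 with FC 5275", 496)):
    total = grid_devices(per_cluster)
    print(f"{label}: {lcus_per_cluster(per_cluster)} LCUs/cluster, {total} devices, "
          f"exceeds old z/OS limit of 2048: {total > 2048}")
```

With 496 devices per cluster (31 LCUs), six clusters give 2976 devices. This exceeds the previous z/OS limit of 2048 but stays under the new limit of 4096, which is why the APAR is needed.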

The host is only aware of the existence of the underlying physical libraries because they are defined through Interactive Storage Management Facility (ISMF) in a z/OS environment. The term distributed library is used to denote the physical libraries and TS7700 Virtualization Engine components that are part of one cluster of the multicluster grid configuration.

The physical view is the hardware view that deals with the hardware components of a stand-alone cluster or a multicluster grid configuration. In a TS7740 or TS7720T Virtualization Engine, it includes the TS3500 Tape Libraries and 3592 J1A, TS1120, TS1130, or TS1140 tape drives.

The following operator interfaces for providing information about the TS7700 Virtualization Engine are available:

•Object access method (OAM) commands are available at the host operator console. These commands provide information about the TS7700 Virtualization Engine in stand-alone and grid environments. This information represents the host view of the components within the TS7700 Virtualization Engine. Other z/OS commands can be used against the virtual addresses. This interface is described in Chapter 9, “Host Console Operations” on page 567.

•Web-based management functions are available through web-based user interfaces (UIs). You can access the web interfaces with the following browsers:

– Mozilla Firefox 13.0, Firefox 17.0, Firefox 17.x ESR, Firefox 19.x, Firefox 24.0, Firefox 24.x ESR

– Microsoft Internet Explorer Version 9.x or 10.x

To use the Management Interface, enable cookies and disable the browser’s pop-up blocker.

Attention: Only supported web browsers should be used for management. Unsupported web browser versions might cause some MI panes to malfunction.

•Considering the overall TS7700 implementation, two different web-based functions are available:

– The TS3500 Tape Library Specialist, which enables management, configuration, and monitoring of the IBM TS3500 Tape Library. The TS3500 Tape Library is used in TS7700 implementations with tape-attached models.

– The TS7700 Virtualization Engine Management Interface (MI) is used to run all TS7700 Virtualization Engine configuration, setup, and monitoring actions.

Call Home Interface: This interface is activated on the TS3000 System Console (TSSC) and enables Electronic Customer Care (ECC) by IBM System Support. Alerts can be sent out to IBM RETAIN systems and the IBM service support representative (SSR) can connect through the TSSC to the TS7700 Virtualization Engine and the TS3500 Tape Library.

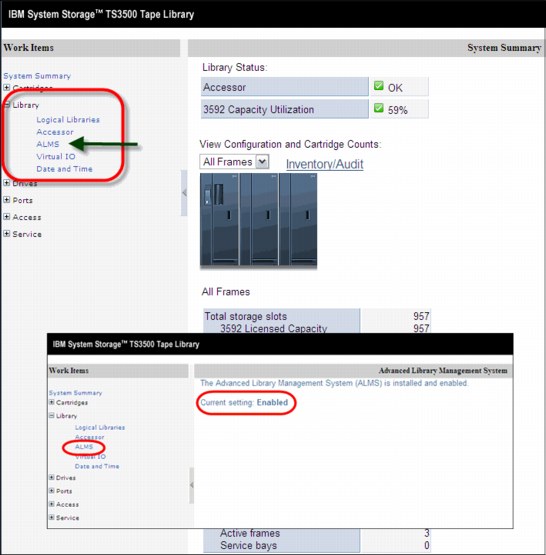

8.1.1 TS3500 Tape Library Specialist

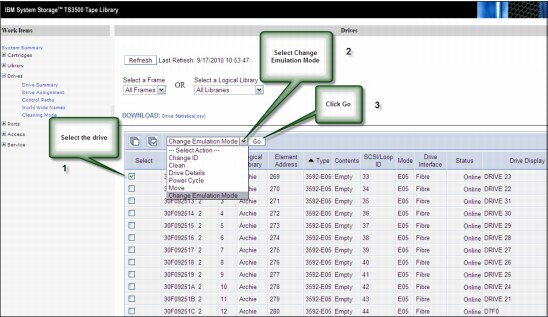

The IBM System Storage TS3500 Tape Library Specialist web interface, which can be called from the TS7700 Virtualization Engine interface, enables you to monitor and configure most of the library functions from the web.

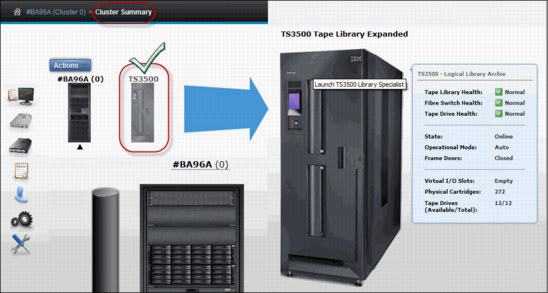

Figure 8-1 shows the TS3500 Tape Library Specialist welcome window with the System Summary.

Figure 8-1 TS3500 Tape Library Specialist welcome window

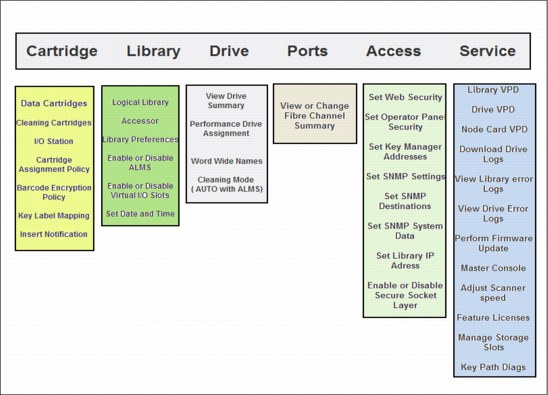

Figure 8-2 shows a flowchart of the functions that are available, depending on the configuration of your TS3500 Tape Library.

Figure 8-2 TS3500 Tape Library Specialist functions

The TS3500 windows are mainly used during the hardware installation phase of the TS7740 and TS7720T Virtualization Engine. The activities involved in installation are described in 8.3.1, “TS3500 Tape Library with a TS7740 and TS7720T Virtualization Engine” on page 481.

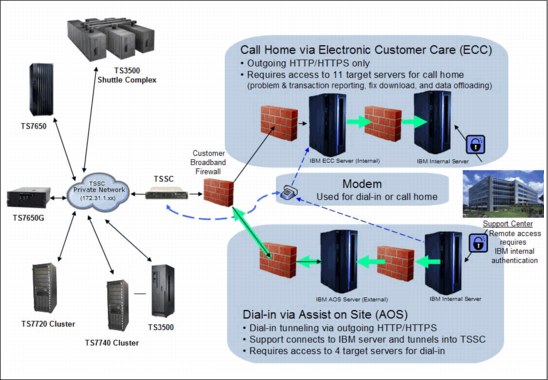

8.1.2 Call Home and Electronic Customer Care

The tape subsystem components include several external interfaces that are not directly associated with data paths. Instead, these interfaces are associated with system control, service, and status information. They support customer interaction and feedback, and attachment to IBM remote support infrastructure for product service and support.

These interfaces and facilities are part of the IBM System Storage Data Protection and Retention (DP&R) storage system. The main objective of this mechanism is to provide safe and efficient System Call Home (outbound) and Remote Support (inbound) connectivity.

See the document IBM Data Protection & Retention System Connectivity and Security, WP100704, for a complete description of the connectivity mechanism and related security aspects:

The Call Home function generates a service alert automatically when a problem occurs with one of the following components:

•TS3500 Tape Library

•3592 tape controllers models J70, C06, and C07

•TS7700 Virtualization Engine

Error information is transmitted to the IBM System Storage TS3000 System Console for service, and then to the IBM Support Center for problem evaluation. The IBM Support Center can dispatch an IBM SSR to the client installation. Call Home can send the service alert to a pager service to notify multiple people, including the operator. The SSR can deactivate the function through service menus, if required.

See Figure 8-3 for a high-level view of call home and remote support capabilities.

Figure 8-3 Call home and remote support functions

Along with Release 3.2 of the Licensed Internal Code, a new IBM System Storage TS3000 System Console (TSSC) model is introduced. The TSSC can be ordered as a rack mount feature of several products. Feature code 2725 provides the new enhanced TS3000 System Console. Physically, the TS3000 TSSC is a standard 1U rack-mountable server that is installed within the 3592 F05 frame.

Feature code 2748 provides an optical drive, which is needed for Licensed Internal Code changes and log retrieval. With the new TS3000 System Console provided by FC2725, remote data link or call home by using an analog telephone line and modem is no longer supported. The dial-in function through Assist On Site (AOS) and the Call Home with Electronic Customer Care functions are both available over an HTTP/HTTPS broadband connection.

Electronic Customer Care

Electronic Customer Care (ECC) provides a method to connect IBM storage systems with IBM remote support. The package provides support for dial-out communication for broadband Call Home and modem connection. All information sent back to IBM is Secure Sockets Layer (SSL) encrypted. Modem connectivity protocols follow standards similar to those for directly connected modems. Broadband connectivity uses both HTTP and HTTPS protocols.

Note: The modem is no longer supported in the new TS3000 System Console model.

ECC is a family of services featuring problem reporting by opening a problem management record (PMR), sending data files, and downloading fixes. The ECC client provides a coordinated end-to-end electronic service between IBM business operations, its IBM Business Partners, and its clients. The ECC client runs electronic serviceability activities, such as problem reporting, inventory reporting, and fix automation. This becomes increasingly important because customers are running heterogeneous, disparate environments, and are seeking a means to simplify the complexities of those environments.

The TSSC can use either a proxy server or a direct connection. Direct Connection implies that there is no HTTP proxy between the configured TS3000 and the outside network to IBM. Selecting this method requires no further setup. ECC also supports a customer-provided HTTP proxy, and a customer might require all traffic to go through a proxy server. In this case, the TSSC connects directly to the proxy server, which initiates all communications to the Internet.


Note: All inbound connections are subject to the security policies and standards defined by the client. When a Storage Authentication Service, Direct Lightweight Directory Access Protocol (LDAP), or RACF policy is enabled for a cluster, service personnel (local or remote) are required to use the LDAP-defined service login.

Important: Be sure that local and remote authentication has been allowed, or that an account has been created to be used by service personnel, before enabling storage authentication, LDAP, or RACF policies.


The outbound communication associated with ECC call home can be through an Ethernet connection, a modem, or both, in the form of a failover setup. However, the modem is not supported in the new TS3000 System Console. The local subnet LAN connection between the TSSC and the attached subsystems remains the same: it is still isolated without any outside access. ECC adds another Ethernet connection to the TSSC, bringing the total to three. These connections are labeled:

•The External Ethernet Connection, which is the ECC Interface

•The Grid Ethernet Connection, which is used for the TS7700 Virtualization Engine Autonomic Ownership Takeover Manager (AOTM)

•The Internal Ethernet Connection, which is used for the subnet of the locally attached subsystems

All of these connections are set up using the Console Configuration Utility User Interface that is on the TSSC.

Note: The AOTM and ECC interfaces should be in different TCP/IP subnets, so that the two types of traffic do not use the same network connection.
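Before the addresses are entered in the Console Configuration Utility, the subnet separation in the note can be checked with Python's standard `ipaddress` module. This is a minimal sketch; the addresses are hypothetical, and it checks for overlapping networks rather than identical ones so that mismatched prefix lengths are also caught.

```python
import ipaddress


def subnets_overlap(a: str, b: str) -> bool:
    """True if the networks of two CIDR interface addresses overlap."""
    net_a = ipaddress.ip_interface(a).network
    net_b = ipaddress.ip_interface(b).network
    return net_a.overlaps(net_b)


def check_tssc_interfaces(ecc_if: str, aotm_if: str) -> None:
    """Raise if the ECC (external) and AOTM (grid) interfaces share address space."""
    if subnets_overlap(ecc_if, aotm_if):
        raise ValueError(f"ECC {ecc_if} and AOTM {aotm_if} are on overlapping subnets")


# Hypothetical addresses for illustration only.
check_tssc_interfaces("203.0.113.25/24", "10.20.30.40/24")  # passes silently
```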

The TS7700 Virtualization Engine shows the events that originated a Call Home in the Events pane, under the Monitor icon.

Tivoli Assist On-site

Enhanced support capabilities include the introduction of Tivoli Assist On-site (AOS) to expand maintenance capabilities. This is a service function that is managed by an IBM SSR or by the client through the AOS customer interface. AOS is a tool that enables an authenticated session for remote TSSC desktop connections over an external broadband Ethernet adapter. An AOS session enables IBM remote support center representatives to troubleshoot issues with the system.

AOS uses the same network as broadband call home, and works over either HTTP or HTTPS. The AOS function is disabled by default. When enabled, AOS can be configured to run in either attended or unattended mode:

•Attended mode requires that the AOS session be initiated at the TSSC associated with the target TS7700 Virtualization Engine. This requires physical access by the IBM SSR to the TSSC or the client through the customer interface.

•Unattended mode, also called Lights Out mode, enables a remote support session to be established without manual intervention at the TSSC associated with the target TS7700 Virtualization Engine.

All AOS connections are outbound. In unattended mode, the session is established by periodically connecting to regional AOS relay servers to determine whether remote access is needed. If access has been requested, AOS authenticates and establishes the connection, enabling remote desktop access to the TSSC.
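The outbound-only polling pattern of unattended mode can be modeled as follows. This is a conceptual sketch, not the AOS implementation: `relay_has_request` and `open_session` are hypothetical callables that stand in for the relay check and for authentication plus session setup, and the 60-second default interval is an arbitrary assumption.

```python
import time
from typing import Callable


def unattended_poll(relay_has_request: Callable[[], bool],
                    open_session: Callable[[], None],
                    interval_s: float = 60.0,
                    max_polls: int = 10) -> int:
    """Conceptual model of AOS unattended (Lights Out) mode.

    The console only makes outbound checks against a relay and opens a remote
    session only when the relay reports a pending request. Returns the poll
    number on which a session was opened, or 0 if none was requested.
    """
    for poll in range(1, max_polls + 1):
        if relay_has_request():   # outbound check; nothing listens for inbound traffic
            open_session()        # authentication would happen at this point
            return poll
        if poll < max_polls:
            time.sleep(interval_s)
    return 0


# Simulated relay: no request on the first two polls, a request on the third.
answers = iter([False, False, True])
print(unattended_poll(lambda: next(answers), lambda: None, interval_s=0))
```

Because the console always initiates the connection, no inbound firewall opening is needed for this model to work. This matches the statement that all AOS connections are outbound.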


Note: Remember that all authentications are subject to the Authentication policy in effect. See the information under 8.2.9, “The Access icon” on page 413.


8.2 TS7700 Virtualization Engine Management Interface

The TS7700 Virtualization Engine Management Interface (MI) is the primary interface to monitor and administer the TS7700 Virtualization Engine.

Tip: Starting with R3.0, a new graphical user interface (GUI) has been implemented. It gives the TS7700 MI an appearance and operation similar to the management interfaces of other IBM storage products.

8.2.1 Connecting to the Management Interface

To connect to the TS7700 Virtualization Engine MI, complete the following steps:

1. Ensure that the TS7700 Virtualization Engine is installed, configured, and online.

2. In the address field of a supported web browser, enter http://x.x.x.x (where x.x.x.x is the virtual IP address that was assigned during installation). Press Enter or click Go in your web browser.

Tip: The following web browsers are currently supported:

•Firefox 13.0, Firefox 17.0, Firefox 17.x ESR, Firefox 19.x, Firefox 24.0, and Firefox 24.x ESR

•Internet Explorer 9 and 10

3. The virtual IP is one of three IP addresses that are provided during installation. If you want to access a specific cluster, the cluster must be specified when the IP address is entered as shown in Example 8-1, where Cluster 0 is accessed directly.

Example 8-1 IP address to connect to Cluster 0 in a grid

http://x.x.x.x/0/Console

4. If you are using your own name server, where you can associate a name with the virtual IP address, you can use the name rather than the hardcoded address for reaching the MI.

5. The login page for the MI displays as shown in Figure 8-4. Enter the default login name as admin and the default password as admin.

Figure 8-4 TS7700 Virtualization Engine MI login

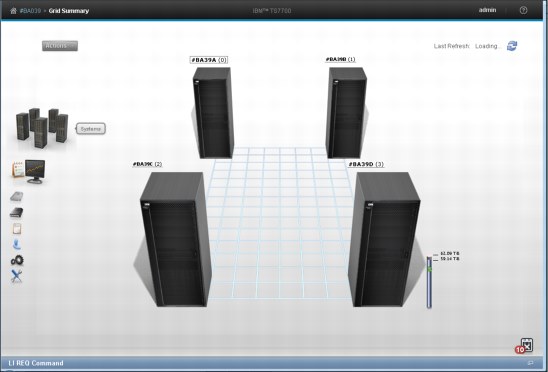

After entering your password, you see the first web page presented by the MI, the Virtualization Engine Grid Summary, as shown in Figure 8-5 on page 297.
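The addressing rules in the steps above can be summarized in a small helper. This is a sketch under stated assumptions: `mi_url` is a hypothetical function name, and the host values are documentation-range examples. Without a cluster ID it returns the plain virtual IP address (or name server alias) from step 2. With a cluster ID it appends the `/<cluster>/Console` path shown in Example 8-1.

```python
from typing import Optional


def mi_url(host: str, cluster_id: Optional[int] = None) -> str:
    """Return the MI address for a virtual IP address or DNS name.

    Without a cluster ID this is the plain address from step 2; with one, the
    /<cluster>/Console path from Example 8-1 selects that cluster directly.
    """
    if cluster_id is None:
        return f"http://{host}"
    if cluster_id < 0:
        raise ValueError("cluster ID must be non-negative")
    return f"http://{host}/{cluster_id}/Console"


print(mi_url("192.0.2.10"))             # http://192.0.2.10
print(mi_url("192.0.2.10", 0))          # http://192.0.2.10/0/Console
print(mi_url("ts7700.example.com", 1))  # http://ts7700.example.com/1/Console
```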

After security policies are implemented locally at the TS7700 Virtualization Engine cluster or by using centralized role-based access control (RBAC), a unique user identifier and password can be assigned by the administrator. The user profile can be modified to provide only the functions applicable to the role of the user, so not all users have access to the same functions or views through the MI.

For more information, see 8.2.9, “The Access icon” on page 413.

Figure 8-5 shows a visual summary of the TS7700 Virtualization Engine Grid. It shows a four-cluster grid, the components, and health status. The composite library is depicted as a data center, with all members of the grid on the raised floor.

Figure 8-5 MI Virtualization Engine Grid Summary

Each cluster is represented by an image of the TS7700 Virtualization Engine, displaying the cluster’s nickname and ID, and the composite library name and Library ID.

The health of the system is checked and updated automatically at times determined by the TS7700 Virtualization Engine. Data displayed in the Grid Summary page is not updated in real time. The Last Refresh field, in the upper-right corner, reports the date and time that the displayed data was retrieved from the TS7700 Virtualization Engine. To populate the summary with an updated health status, click the Refresh icon near the Last Refresh field in the upper-right corner of Figure 8-5.

The health status of each cluster is indicated by a status sign next to its icon. The legend explains the meaning of each status sign. To obtain additional information about a specific cluster, click that component’s icon.

Library control with TS7700 Virtualization Engine Management Interface

The TS7700 MI can also link to the TS3500 Tape Library Specialist, which interacts with the physical tape library. In environments where the tape library is separated from the LAN-attached hosts or web clients by a firewall, the ports shown in Table 8-1 should be open for proper functionality.

Table 8-1 Network interface firewall

| Function | Port | Direction (from library) | Protocol |
|---|---|---|---|
| TS3500 Tape Library Specialist | 80 | Inbound | TCP/IP |
| Simple Network Management Protocol (SNMP) traps | 161/162 | Bidirectional | User Datagram Protocol (UDP)/IP |
| Encryption Key Manager | 1443 | Outbound | Secure Sockets Layer (SSL) |
| Encryption Key Manager | 3801 | Outbound | TCP/IP |
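When you review a firewall change, Table 8-1 can be expressed as data and compared with a proposed allow-list. The sketch below is illustrative only. It assumes that the SSL traffic on port 1443 is carried over TCP and that the allow-list is a set of (transport, port) pairs. It does not check direction or addresses, which a real rule set also needs.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    function: str
    port: int
    direction: str   # relative to the library, as in Table 8-1
    transport: str   # "tcp" or "udp"


# Table 8-1 as data. SSL (port 1443) is assumed to run over TCP.
REQUIRED = [
    Rule("TS3500 Tape Library Specialist", 80, "inbound", "tcp"),
    Rule("SNMP traps", 161, "bidirectional", "udp"),
    Rule("SNMP traps", 162, "bidirectional", "udp"),
    Rule("Encryption Key Manager", 1443, "outbound", "tcp"),
    Rule("Encryption Key Manager", 3801, "outbound", "tcp"),
]


def missing_rules(allowed: set[tuple[str, int]]) -> list[Rule]:
    """Return Table 8-1 entries whose (transport, port) pair is not allowed."""
    return [r for r in REQUIRED if (r.transport, r.port) not in allowed]


# Hypothetical allow-list that omits the second Encryption Key Manager port.
allowed = {("tcp", 80), ("udp", 161), ("udp", 162), ("tcp", 1443)}
for rule in missing_rules(allowed):
    print(f"open {rule.transport}/{rule.port} ({rule.direction}) for {rule.function}")
```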

See the topic Planning → Infrastructure Requirements in the TS7700 3.2 IBM Knowledge Center for additional information:

8.2.2 Using the TS7700 Management Interface

This topic describes how to use the IBM TS7700 Virtualization Engine MI and its common page and table components.


Tip: To support Japanese input, a Japanese front-end processor needs to be installed on the computer where a web browser is running the MI.


Login

Each cluster in a grid uses its own login page. This is the first page displayed when you enter the cluster URL in your browser address field. The login page displays the name and number of the cluster to be accessed. After you log in to a cluster, you can access other clusters in the same grid from the same web browser window.

Navigating between pages

You can move between MI pages using the navigation icons, by clicking active links on a page or on the banner, or by selecting a menu option.


Restriction: You cannot use the Back or Forward buttons or the Go Back or Go Forward options in your browser to navigate between MI pages.


Banner

The banner is common to all pages of the MI. You can use banner elements to navigate to other clusters in the grid, run some user tasks, and locate additional information about the MI.

See Figure 8-6 for an example of the banner elements and available tasks.

Figure 8-6 Management Interface Banner

Status and event indicators

Status and alert indicators appear at the bottom of each MI page. These indicators provide a quick status check for important cluster and grid properties. Grid indicators provide information for the entire grid. They are displayed in the left and right corners of the page footer, and include tasks and events.

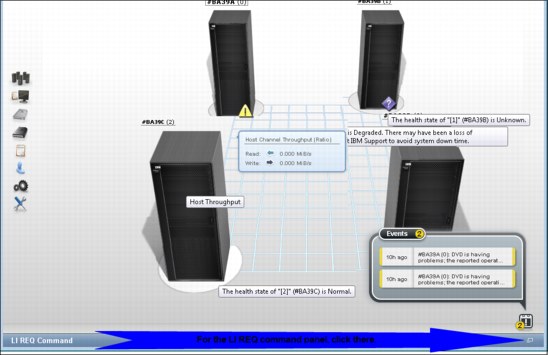

Figure 8-7 shows some examples of status and events that can be displayed from the Grid Summary panel. Also, notice the new function in the Management Interface introduced by R3.2 Licensed Internal Code (LIC), the Library Request command pane.

Figure 8-7 Status and Events indicators in the Grid Summary pane.

All cluster indicators provide information only for the accessing cluster, and are displayed only on MI pages that have a cluster scope. These three indicators appear in the middle of the page footer and include the following information:

•Physical Cache

•Copy Queues

•Health Status

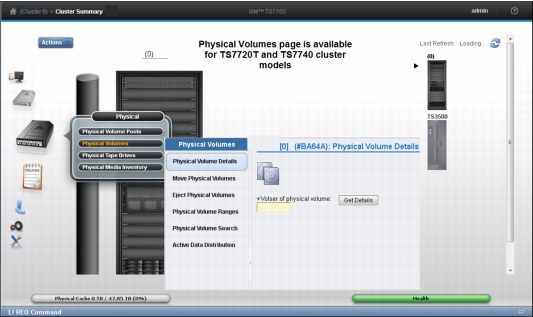

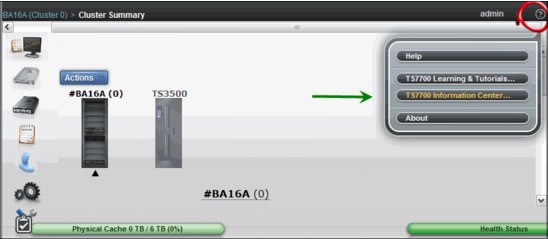

Figure 8-8 shows a Cluster Summary pane, and some examples of status, events, and messages that can be seen in this page.

Figure 8-8 Cluster Summary pane and some information examples.

The Management Interface also provides ways to filter, sort, and change the presentation of its tables. For example, you can hide or display a specific column, modify its size, sort the table results, or download the table row data in a comma-separated values (CSV) file to a local directory.
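After a table is downloaded as CSV, its rows can be filtered offline with Python's standard `csv` module. This is a minimal sketch. The column names in the sample are hypothetical because the real headers depend on which MI table is exported, and the code assumes that the first line of the file holds the headers.

```python
import csv
import io


def rows_where(csv_text: str, column: str, value: str) -> list[dict[str, str]]:
    """Return the rows of an exported MI table whose `column` equals `value`.

    Assumes the first CSV line holds the column headers. For a downloaded file,
    pass open(path, newline="").read() instead of an in-memory string.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if row.get(column) == value]


# Hypothetical export; real column names depend on the MI table.
sample = "Task,Status\nLibrary Request,Completed\nCopy Export,Failed\n"
print(rows_where(sample, "Status", "Failed"))
```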

For a complete description of tasks, the behavior of health and status icons, and a description of how to optimize the table presentations, see the Using the Management Interface topic in the IBM Knowledge Center TS7700 3.2:

Library Request Command window

The LI REQ command pane in the Management Interface is a new capability introduced by R3.2 of the Licensed Internal Code, expanding the interaction of the System Administrator with the TS7700 subsystem. By using the LI REQ pane, a standard LI REQ command can be issued by the Storage Administrator directly from the Management Interface to a Grid (also known as Composite Library), or to a specific Cluster (also known as Distributed Library), with no need to be logged in to the z/OS host system.
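For comparison, a z/OS operator issues the same request at the host console as LIBRARY REQUEST (abbreviated LI REQ), followed by the library name and keywords. The sketch below composes that string. The limits it enforces (a 1 - 8 character library name and one to four keywords of 1 - 8 characters each) are assumptions to verify against WP101091, and GRIDLIB is a hypothetical library name.

```python
def li_req(library: str, *keywords: str) -> str:
    """Compose a LIBRARY REQUEST command in its abbreviated LI REQ form.

    Assumed limits (verify in WP101091): a library name of 1-8 characters
    and one to four keywords of 1-8 characters each.
    """
    if not 1 <= len(library) <= 8:
        raise ValueError("library name must be 1-8 characters")
    if not 1 <= len(keywords) <= 4:
        raise ValueError("expected one to four keywords")
    for kw in keywords:
        if not 1 <= len(kw) <= 8 or "," in kw:
            raise ValueError(f"invalid keyword: {kw!r}")
    return ",".join(["LI REQ", library.upper(), *(kw.upper() for kw in keywords)])


print(li_req("gridlib", "status", "grid"))  # LI REQ,GRIDLIB,STATUS,GRID
```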

The LI REQ pane is minimized and docked at the bottom of the MI page. To open the LI REQ command pane, click it at the lower-right end of the page. Figure 8-9 shows the new LI REQ command pane and operation.

Figure 8-9 LI REQ Command window and operation.

By default, the only user role allowed to issue LI REQ commands is the Administrator. LI REQ commands are logged in the Tasks list.

Remember: The LI REQ option is shown at the bottom of the Management Interface pages only for users with the Administrator role.

Figure 8-10 shows an example of a library request command reported in the Tasks list, and shows how to get more information about the command by selecting Properties and See details in the MI page.

Figure 8-10 LI REQ command log and information


Important: LI REQ commands issued from this window are not presented in the host console logs.


For a complete list of available LI REQ commands, their usage, and respective responses, see the current IBM TS7700 Series z/OS Host Command Line Request User's Guide (WP101091), available in Techdocs:

Standard navigation elements

This section of the TS7700 Virtualization Engine MI provides you with functions to manage and monitor the health of the TS7700 Virtualization Engine. The expandable interface pages displayed on the left side of the MI Summary page are listed next. The exception is the Systems interface page, which is displayed only when the cluster is part of a grid.

More items might also be shown, depending on the actual cluster configuration:

Systems icon This page shows the cluster members of the grid and grid-related functions.

Monitor icon This page gathers the events, tasks, and performance information about one cluster.

Light cartridge icon Information related to virtual volumes is available here.

Dark cartridge icon Information related to physical cartridges and the associated tape library are under this page.

Notepad icon In this page, you find the construct settings.

Blue man icon Under the Access icon, you find all security-related settings.

Gear icon Cluster general settings, feature licenses, overrides, SNMP, write protect mode, and backup and restore settings are under the Gear icon.

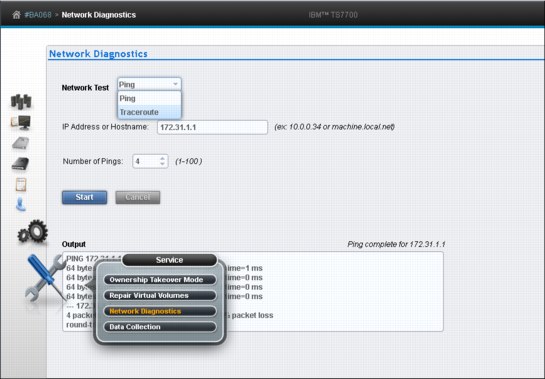

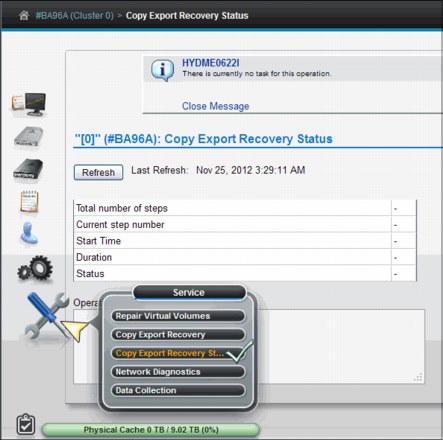

Tool icon Ownership takeover mode, network diagnostics, data collection, and other repair/recovery-related activities are under this icon.

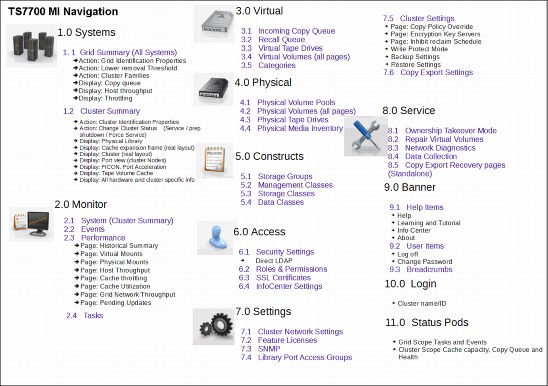

MI Navigation

Use this window (Figure 8-11) for a visual summary of the IBM TS7700 Virtualization Engine MI Navigation.

Figure 8-11 TS7700 Virtualization Engine MI Navigation

8.2.3 The Systems icon

The TS7700 Virtualization Engine MI windows gathered under the Systems icon can help to quickly identify cluster or grid properties, and assess the cluster or grid “health” at a glance.


Tip: The Systems icon is only visible when the accessed TS7700 Cluster is part of a grid.


Grid Summary page

The Grid Summary window is the first page displayed on the web interface when the IBM TS7700 Virtualization Engine is online. You can use this window to quickly assess the health of all clusters in the grid and as a starting point to investigate cluster or network issues.


Note: If the accessing cluster is a stand-alone cluster, the Cluster Summary window is shown upon login.


This window shows a summary view of the health of all clusters in the grid, including family associations, host throughput, and any incoming copy queue. Figure 8-12 shows an example of a Grid Summary window, including the pop-up windows.

Figure 8-12 Grid Summary and pop-up windows

Actions menu

Use this menu to change the appearance of clusters on the Grid Summary window or grid identification details. When the grid includes a TS7720 Virtualization Engine, you can also use this menu to change TS7720 Virtualization Engine removal threshold settings. See Figure 8-13 on page 305 for the Actions menu window. The following tasks are on this menu:

•Order by Cluster ID

Select this option to group clusters according to their cluster ID number. Ordered clusters are shown first from left to right, then front to back. Only one ordering option can be selected at a time.


Note: The number shown in parentheses in breadcrumb navigation and cluster labels is always the cluster ID.


•Order by Families

Select this option to group clusters according to their family association.

•Show Families

Select this option to show the defined families on the grid summary page. Cluster families are used to group clusters in the grid according to a common purpose.

•Cluster Families

Select this option to add, modify, or delete cluster families used in the grid.

Figure 8-13 Grid Summary page and Actions

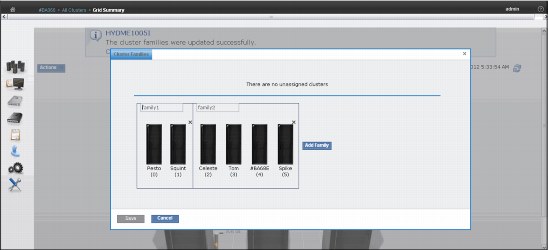

Cluster Families window

Use the window shown in Figure 8-14 to view information and run actions related to TS7700 Virtualization Engine cluster families.

Figure 8-14 MI Add Cluster Families window

Data transfer speeds between TS7700 Virtualization Engine clusters sometimes vary. The cluster family configuration groups clusters so that microcode can optimize grid connection performance between the grouped clusters.

To view or modify cluster family settings, first verify that these permissions are granted to your assigned user role. If your user role includes cluster family permissions, select Modify to run the following actions:

•Add a family: Click Add to create a new cluster family. A new cluster family placeholder is created to the right of any existing cluster families. Enter the name of the new cluster family in the active Name text box. Cluster family names must be 1 - 8 characters in length and composed of Unicode characters. Each family name must be unique. To add clusters to the new cluster family, relocate them from the Unassigned Clusters area as described in the Move a cluster item that follows.

•Move a cluster: You can move one or more clusters, by dragging, between existing cluster families, to a new cluster family from the Unassigned Clusters area, or to the Unassigned Clusters area from an existing cluster family:

– Select a cluster: A selected cluster is identified by its highlighted border. Select a cluster from its resident cluster family or the Unassigned Clusters area by using one of these methods:

• Clicking the cluster with your mouse.

• Using the Spacebar key on your keyboard.

• Pressing and holding the Shift key while selecting clusters to select multiple clusters at one time.

• Pressing the Tab key on your keyboard to switch between clusters before selecting one.

– Move the selected cluster or clusters:

• Clicking and holding the mouse button on the cluster and dragging the selected cluster to the destination cluster family or the Unassigned Clusters area.

• Using the arrow keys on your keyboard to move the selected cluster or clusters right or left.


Restriction: An existing cluster family cannot be moved within the Cluster Families window.


•Delete a family: You can delete an existing cluster family. Click the X in the upper-right corner of the cluster family you want to delete. If the cluster family that you attempt to delete contains any clusters, a warning message is displayed. Click OK to delete the cluster family and return its clusters to the Unassigned Clusters area. Click Cancel to abandon the delete action and retain the selected cluster family.

•Save changes: Click Save to save any changes made to the Cluster Families window and return it to read-only mode.


Remember: Each cluster family must contain at least one cluster. If you attempt to save changes and a cluster family does not contain any clusters, an error message displays and the Cluster Families window remains in edit mode.

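The save-time rules for cluster families described above can be checked offline before you edit the window. This is a minimal sketch. Families are given as hypothetical (name, cluster IDs) pairs. The rules come from the text: names are 1 - 8 characters, names are unique, and every family contains at least one cluster. Single family membership per cluster is an assumption inferred from the move behavior.

```python
from collections import Counter


def validate_families(families: list[tuple[str, list[int]]]) -> list[str]:
    """Check a proposed cluster-family layout before saving it.

    Rules from the text: names are 1-8 characters, names are unique, and every
    family contains at least one cluster. Assumption: a cluster belongs to at
    most one family. Returns a list of error messages (empty if valid).
    """
    errors: list[str] = []
    for name, count in Counter(name for name, _ in families).items():
        if count > 1:
            errors.append(f"duplicate family name {name!r}")
    owner: dict[int, str] = {}
    for name, clusters in families:
        if not 1 <= len(name) <= 8:
            errors.append(f"family name {name!r} must be 1-8 characters")
        if not clusters:
            errors.append(f"family {name!r} contains no clusters")
        for cluster_id in clusters:
            if cluster_id in owner:
                errors.append(f"cluster {cluster_id} is in {owner[cluster_id]!r} and {name!r}")
            owner[cluster_id] = name
    return errors


print(validate_families([("SITEA", [0, 1]), ("SITEB", [2, 3])]))
print(validate_families([("SITEA", [0, 1]), ("DRSITE", [])]))
```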

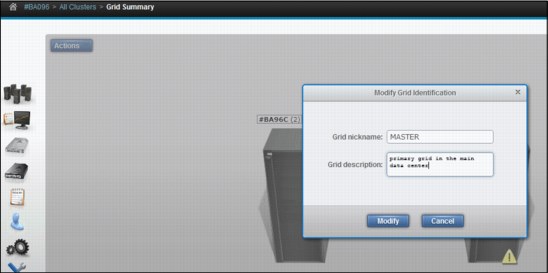

Grid Identification properties window

Use the window shown in Figure 8-15 to view and alter identification properties for the TS7700 Virtualization Engine grid. In a multigrid environment, use this window to clearly identify a particular composite library, making it easier to distinguish, operate, and manage this TS7700 grid (avoiding operational mistakes due to ambiguous identification).

Figure 8-15 MI Grid Identification properties window

The following information, related to grid identification, is displayed. To change the grid identification properties, edit the available fields and click Modify. The following fields are available:

•Grid nickname: The grid nickname must be 1 - 8 characters in length and composed of alphanumeric characters with no spaces. The characters at (@), period (.), dash (-), and plus sign (+) are also allowed.

•Grid description: A short description of the grid. You can use up to 63 characters.
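The field rules above translate directly into a validation check. This is a sketch that assumes "alphanumeric" means ASCII letters and digits and that an empty description is allowed. The function names are hypothetical.

```python
import re

# ASCII letters and digits (assumed) plus @ . + -, 1-8 characters, no spaces.
_NICKNAME = re.compile(r"[A-Za-z0-9@.+-]{1,8}")


def valid_grid_nickname(nickname: str) -> bool:
    return _NICKNAME.fullmatch(nickname) is not None


def valid_grid_description(description: str) -> bool:
    # Up to 63 characters; an empty description is assumed to be allowed.
    return len(description) <= 63


for candidate in ("PROD-GRD", "DR@SITE2", "MY GRID", "TOOLONG99"):
    print(candidate, valid_grid_nickname(candidate))
```

Using `fullmatch` rather than `match` makes the whole string satisfy the pattern, so a nickname with a trailing space or a ninth character is rejected.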

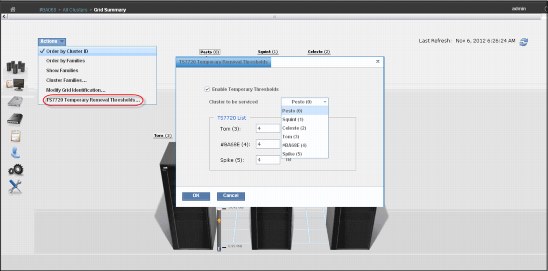

Lower removal threshold

Select TS7720 Temporary Removal Threshold from the Actions menu in the Grid summary view to lower the removal threshold for any TS7720 cluster in the grid. For more information about removal policies, see 4.2.5, “TS7720 cache thresholds and removal policies” on page 142.

Figure 8-16 shows the TS7720 Temporary Removal Threshold window.

Figure 8-16 TS7720 Temporary Removal Threshold

Grid health and details

In the Grid Summary view, a cluster is in a normal (healthy) state when no warning or degradation icon is displayed at the lower-right side of the cluster’s representation in the Management Interface. Hovering the mouse pointer over the lower-right corner of the cluster’s picture in the Grid Summary page shows the message “The health state of the [cluster number] [cluster name] is Normal”, confirming that this cluster is in a normal state.

Exceptions in the cluster state are represented in the Grid Summary page by a small icon at the lower-right side of the cluster’s picture. Use the main view of the Grid Summary window to compare the details and health status of all clusters in the grid. You can view additional information about the status by hovering over the icon with a mouse pointer.

Figure 8-17 on page 309 shows the appearance of the degraded icon and the possible reasons for degradation. The complete list of icons and their meanings can be found in the TS7700 IBM Knowledge Center, which can be accessed directly from the Management Interface window by hovering over the question mark symbol at the right side of the banner and clicking IBM Knowledge Center. Alternatively, the TS7700 3.2 IBM Knowledge Center is available at:

Figure 8-17 Warning or Degraded Icon meanings.

The following list includes the other possible statuses for a cluster that can be found in the Management Interface:

•Failed

•Service or Service Prep

•Unknown

•Offline

•Write Protect Mode

Figure 8-18 shows the icons.

Figure 8-18 Other cluster status icons

Additional information can be obtained by hovering over the status icon with the mouse pointer.

See the IBM TS7700 3.2 IBM Knowledge Center, available locally at the Management Interface page by clicking the question mark icon at the right of the banner. For the complete list of icons and meanings, see the following website:

In the Grid Summary pane, an indicator shows that throttling activity is occurring on a particular cluster within the grid. See Figure 8-19 for a visual reference of the throttling indicator. See Chapter 10, “Performance and monitoring” on page 597 for what throttling means, practical considerations, and what can be done to avoid it.

Figure 8-19 Clusters throttling in a two-cluster grid

Also see the IBM Virtualization Engine TS7700 Series Best Practices - Understanding, Monitoring and Tuning the TS7700 Performance white paper for an in-depth explanation of the throttling mechanism, where it is applied in the TS7700, and how it affects subsystem performance:

Cluster Summary window

By clicking the icon of an individual cluster in the grid, or by selecting a specific cluster in the cluster navigation element in the banner, you can access the Cluster Summary window. When you are in a stand-alone configuration, this is the first icon available in the MI.

Figure 8-20 shows an example of the Cluster Summary window.

Figure 8-20 Cluster Summary window

In the Cluster Summary window, you can access the following options using the Actions menu:

•Modify Cluster Information

•Change Cluster State → Force Shut Down

•Change Cluster State → Service Prep

You also can display the Cluster Information by hovering the mouse over the components, as shown in Figure 8-20. In the resulting box, the following information is available:

•Cluster components health status

•Cluster Name

•Family to which this cluster is assigned

•Cluster model

•Licensed Internal Code (LIC) (Microcode) level for this cluster

•Description for this cluster

•Disk encryption status

•Cache size and occupancy (Cache Tube)

Cluster Actions menu

By using the options under this menu, you can change the state or settings of a cluster. Also, when the selected cluster is a TS7740 or a TS7720T (a TS3500 Tape Library is present), this menu can be used to change the Copy Export settings.

From the Actions menu, you can change the cluster state to perform a specific task, such as preparing for a maintenance window, performing a disaster recovery drill, or moving machines to a different IT center. Different options are displayed depending on the current cluster state.

Table 8-2 describes options available to change the state of a cluster.

Table 8-2 Options to change cluster state

| If the current state is | You can select | Restrictions and notes |
|---|---|---|
| Online | Service Prep | All of the following conditions must first be met: the cluster is online; no other clusters in the grid are in service prep mode; at least one other cluster must remain online. Caution: If only one other cluster remains online, a single point of failure exists when this cluster enters service prep mode. Select Service Prep to confirm this change. |
| Online | Force Shutdown | Select Force Shutdown to confirm this change. Important: After a shutdown operation is initiated, it cannot be canceled. |
| Service Pending | Force Service | You can select this option if you think that an operation has stalled and is preventing the cluster from entering service mode. Select Force Service to confirm this change. Note: You can place all but one cluster in a grid into service mode, but it is advised that only one cluster be in service mode at a time. If more than one cluster is in service mode, and you cancel service mode on one of them, that cluster does not return to normal operation until service mode is canceled on all clusters in the grid. |
| Service Pending | Return to Normal | You can select this option to cancel a previous service prep change and return the cluster to the normal online state. Select Return to Normal to confirm this change. |
| Service Pending | Force Shutdown | Select Force Shutdown to confirm this change. Important: After a shutdown operation is initiated, it cannot be canceled. |
| Shutdown (offline) | User interface not available | After an offline cluster is powered on, it attempts to return to normal. If no other clusters in the grid are available, you can skip hot token reconciliation. |
| Online-Pending or Shutdown Pending | Menu disabled | No options to change state are available when a cluster is in a pending state. |
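Table 8-2 can be read as a small lookup from the current state to the options offered, plus a precondition check for Service Prep. The following Python sketch encodes it that way. The cluster names and the state labels used in the precondition check ("Online", "Service Prep", "Service", "Offline") are assumptions for illustration; this is not an MI or TS7700 interface.

```python
from typing import Dict, List, Tuple

# Illustrative encoding of Table 8-2: current state -> Actions menu options.
# The strings come from the table; the mapping itself is hypothetical.
STATE_CHANGE_OPTIONS: Dict[str, List[str]] = {
    "Online": ["Service Prep", "Force Shutdown"],
    "Service Pending": ["Force Service", "Return to Normal", "Force Shutdown"],
    "Shutdown (offline)": [],  # user interface not available
    "Online-Pending": [],      # menu disabled while pending
    "Shutdown Pending": [],    # menu disabled while pending
}


def options_for(state: str) -> List[str]:
    """Return the state-change options that Table 8-2 lists for a state."""
    return STATE_CHANGE_OPTIONS.get(state, [])


def check_service_prep(target: str, states: Dict[str, str]) -> Tuple[bool, bool]:
    """Evaluate the Service Prep preconditions from Table 8-2.

    states maps hypothetical cluster names to assumed state labels.
    Returns (allowed, single_point_of_failure_warning).
    """
    if states.get(target) != "Online":
        return False, False  # the cluster itself must be online
    peers = [s for name, s in states.items() if name != target]
    if "Service Prep" in peers:
        return False, False  # only one cluster in service prep at a time
    online_peers = peers.count("Online")
    if online_peers == 0:
        return False, False  # at least one other cluster must remain online
    # Caution from Table 8-2: exactly one online peer is a single point of failure.
    return True, online_peers == 1
```

For example, in a two-cluster grid with both clusters online, `check_service_prep("CL0", {"CL0": "Online", "CL1": "Online"})` allows the change but raises the single-point-of-failure warning.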

Going offline and coming online considerations

Whenever a member cluster of a grid goes offline or comes back online, it needs to exchange information with its peers about the current status of the logical volumes controlled by the grid. Each logical volume is represented by a so-called token, which contains all of the pertinent information about that volume, such as its creation date, which cluster owns it, which clusters are supposed to have a copy of it, what kind of copy it should be, and so on.

Each cluster in the grid keeps its own copy of the collection of tokens that represents all the logical volumes in the grid, and the grid keeps those copies synchronized. When coming back online, a cluster needs to reconcile its own collection of tokens with those of the peer members of the grid so that it reflects the current status of the grid inventory. This reconcile operation is also referred to as token merge.
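To make the token merge idea concrete, the following Python sketch models each token as a small record and keeps, for each volume, the newest version found across the local and peer collections. The `Token` fields and the `level` update counter are hypothetical simplifications. The actual token contents and the TS7700 reconciliation algorithm are not described in this chapter and are likely more involved.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Token:
    volser: str   # logical volume serial number
    owner: str    # cluster that currently owns the volume
    level: int    # hypothetical update counter: a higher value is newer


def merge_tokens(local: Dict[str, Token],
                 peers: Iterable[Dict[str, Token]]) -> Dict[str, Token]:
    """Reconcile a local token collection with those of its peers.

    For every volume, keep the token with the highest (hypothetical) level.
    The local collection is not modified; a new dictionary is returned.
    """
    merged = dict(local)
    for peer in peers:
        for volser, token in peer.items():
            current = merged.get(volser)
            if current is None or token.level > current.level:
                merged[volser] = token
    return merged
```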

•Pending token merge

A cluster in a grid configuration attempts to merge its token information with all of the other clusters in the grid as it goes online. When no other clusters are available for this merge operation, the cluster attempting to go online remains in the going online, or blocked, state indefinitely as it waits for the other clusters to become available for the merge operation. If a pending merge operation is preventing the cluster from coming online, you are given the option to skip the merge step.

Click Skip Step to skip the merge operation. This button is only available if the cluster is in a blocked state waiting to share pending updates with one or more unavailable clusters. If you click Skip Step, pending updates against the local cluster might remain undetected until the unavailable clusters become available.

•Ownership takeover

If ownership takeover was enabled on any of the peers, old data might surface to the host if the cluster is forced online. Therefore, before attempting to force this cluster online, it is important to know whether any peer clusters have ever enabled ownership takeover mode against this cluster while it was unavailable. In addition, if this cluster is in service, automatic ownership takeover from unavailable peers is also likely and must be considered before attempting to force this cluster online.

If multiple clusters have been offline and must be forced back online, force them back online in the reverse of the order in which they went down (the last cluster down is the first cluster up). This process ensures that the most current cluster is available first to update the rest of the clusters that are forced online.

•Autonomic Ownership Takeover Manager (AOTM)

If AOTM is installed and configured, it attempts to determine whether all unavailable peer clusters are actually in a failed state. If an unavailable peer is not actually in a failed state, a cluster that is forced online might take ownership of volumes that it must not own. Therefore, if AOTM determines that an unavailable cluster is not in a failed state, it blocks the attempt to force this cluster online. If AOTM determines that all unavailable peers have failed and that network issues are not to blame, this cluster is forced into an online state.

After it is online, AOTM can further enable ownership takeover against the unavailable clusters if the AOTM option is enabled. Additionally, manual ownership takeover can be enabled, if necessary.

•Shutdown restrictions

You can shut down only the cluster to which you are logged in. To shut down another cluster, you must log out of the current cluster and log in to the cluster that you want to shut down. See “Cluster Shutdown window” on page 316 for more details about this topic.
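The last-down-first-up rule and the AOTM decision described in the list above can be summarized in a short Python sketch. The cluster names and boolean inputs are hypothetical; AOTM's actual failure detection is not modeled, only the decision rule that the text states.

```python
from typing import Dict, List


def force_online_order(shutdown_order: List[str]) -> List[str]:
    """Order for forcing clusters back online: last cluster down, first up.

    shutdown_order lists hypothetical cluster names from the first cluster
    to go down to the last one.
    """
    return list(reversed(shutdown_order))


def aotm_allows_force_online(peer_failed: Dict[str, bool],
                             network_issue: bool) -> bool:
    """Simplified AOTM rule: force online only if every unavailable peer is
    confirmed failed and network issues are not to blame.

    peer_failed maps each unavailable peer to whether it is in a failed state.
    """
    return all(peer_failed.values()) and not network_issue
```

For example, if CL0, CL1, and CL2 went down in that order, `force_online_order(["CL0", "CL1", "CL2"])` returns `["CL2", "CL1", "CL0"]`.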

|

Note: After a shutdown or force shutdown action, the targeted cluster (and associated cache) is powered off. Manual intervention is required at the site where the cluster is physically located to power it up again.

|

A cluster shutdown operation started from the TS7700 Virtualization Engine MI also shuts down the cache. The cache must be restarted before any attempt is made to restart the TS7700 Virtualization Engine.

Service mode window

Use the window shown in Figure 8-21 to put a TS7700 Virtualization Engine Cluster into service mode, whenever required by a service action or any disruptive activity on a cluster that is a member of a grid. See Chapter 2, “Architecture, components, and functional characteristics” on page 13 for more information.

|

Remember: Service mode is only possible for clusters that are members of a grid.

|

Figure 8-21 TS7700 MI for service preparation

Service mode enables the subject cluster to leave the grid gracefully, surrendering ownership of its logical volumes as required by the peer clusters in the grid to continue the tasks being performed by the client. Operations continue automatically on the other members of the grid because consistent copies of the volumes in this cluster exist elsewhere in the grid. Before changing a cluster state to Service, you must vary offline all logical drives associated with this cluster. No host access is available to a cluster that is in service mode.

|

Important: Forcing service mode causes jobs that are currently mounted on, or using resources provided by, the targeted cluster to fail.

|

Whenever a cluster state is changed to Service, the cluster first enters service preparation mode and then, when the preparation stage finishes, automatically goes into service mode.

During the service preparation stage, the cluster monitors the status of current host mounts and of sync copy mounts targeting the local TVC, monitors and finishes the copies that are currently running, and makes sure that no remote mounts are targeting the local TVC. When all running tasks have ended and no more pending activities are detected, the cluster finishes the service preparation stage and enters service mode.

In a TS7700 Virtualization Engine Grid, service prep can occur on only one cluster at any one time. If service prep is attempted on a second cluster at the same time, the attempt fails. After service prep has completed for one cluster and that cluster is in service mode, another cluster can be placed in service prep. A cluster in service prep automatically cancels service prep if its peer in the grid experiences an unexpected outage while the service prep process is still active.

|

Consideration: Although you can place all clusters except one in service mode, the best approach is to have only one cluster in service mode at a time. If more than one cluster is in service mode, and you cancel service mode on one of them, that cluster does not return to online state until service mode is canceled on all the clusters.

|

For a TS7720 Virtualization Engine cluster in a grid, you can click Lower Threshold to lower the required threshold at which logical volumes are removed from cache in advance. See “Temporary Removal Threshold” on page 145 for more information about the Temporary Removal Threshold. The following items are available when viewing the current operational mode of a cluster.

Cluster State can be any of the following states:

•Normal: The cluster is in a normal operation state. Service prep can be initiated on this cluster.

•Service Prep: The cluster is preparing to go into service mode. The cluster is completing operations (that is, copies owed to other clusters, ownership transfers, and lengthy tasks, such as inserts and token reconciliation) that require all clusters to be synchronized.

•Service: The cluster is in service mode. The cluster is normally taken offline in this mode for service actions or to activate new code levels.

Depending on the mode that the cluster is in, a different action is presented by the button under the Cluster State display. You can use this button to place the TS7700 Virtualization Engine into service mode or back into normal mode:

•Prepare for Service Mode: This option puts the cluster into service prep mode and enables the cluster to finish all current operations. If allowed to finish service prep, the cluster enters service mode. This option is only available when the cluster is in normal mode. To cancel service prep mode, click Return to Normal Mode.

•Return to Normal Mode: Returns the cluster to normal mode. This option is available when the cluster is in service prep mode or service mode.

You are prompted to confirm your decision to change the Cluster State. Click Service Prep or Normal Mode to change to the new Cluster State, or click Cancel to abandon the change operation.

Cluster Shutdown window

Use the window shown in Figure 8-22 to remotely shut down a TS7700 Virtualization Engine Cluster for a planned power outage or in an emergency.

Figure 8-22 MI Cluster shutdown window

This window is available from the TS7700 Virtualization Engine MI whether the TS7700 Virtualization Engine is online or in service. If the cluster is offline, the MI is not available, and the error message HYDME0504E The cluster you selected is unavailable is displayed.

|

Note: After a shutdown or force shutdown action, the targeted cluster (and associated cache) is powered off. Manual intervention is required at the site where the cluster is physically located to power it up again.

|

You can shut down only the cluster to which you are logged in. To shut down another cluster, you must log out of the current cluster and log in to the cluster that you want to shut down.

Before you shut down the TS7700 Virtualization Engine, you must decide whether your circumstances provide adequate time to perform a clean shutdown. A clean shutdown is not mandatory, but it is suggested for members of a TS7700 grid configuration. A clean shutdown requires you to first put the cluster in service mode to ensure that no jobs or copies are targeting or being sourced from this cluster during shutdown.

Jobs that use this cluster are affected, and copies are canceled. Eligible data that has not yet been copied to the remaining clusters cannot be processed during service and downtime. If you cannot place the cluster in service mode, you can use the force shutdown option.

|

Attention: A forced shutdown can result in lost access to data and job failure.

|

A cluster shutdown operation that is started from the TS7700 Virtualization Engine MI also shuts down the cache. The cache must be restarted before any attempt is made to restart the TS7700 Virtualization Engine.

If you select Shutdown from the Actions menu for a cluster that is still online, as shown at the top of Figure 8-22 on page 316, a message advises you to put the cluster in service mode before shutting it down, as shown in Figure 8-23.

Figure 8-23 Warning message and Cluster Status

In Figure 8-23, the Online State and Service State fields in the message show the operational status of the TS7700 Virtualization Engine and appear over the button that is used to force its shutdown. The lower-right corner of the picture shows the cluster status reported by the message. You have the following options:

•Cluster State. The following values are possible:

– Normal. The cluster is in an online, operational state and is part of a TS7700 Virtualization Engine Grid.

– Service. The cluster is in service mode or is a stand-alone system.

– Offline. The cluster is offline. It might be shutting down in preparation for service mode.

•Shutdown. This button initiates a shutdown operation:

– Clicking Shutdown in Normal mode. If you click Shutdown while in normal mode, you receive a warning message suggesting that you place the cluster in service mode before proceeding, as shown in Figure 8-23 on page 317. To place the cluster in service mode, select Modify Service Mode. To continue with the force shutdown operation, provide your password and click Force Shutdown. To abandon the shutdown operation, click Cancel.

– Clicking Shutdown in Service mode. If you select Shutdown while in service mode, you are asked to confirm your decision. Click Shutdown to continue, or click Cancel to abandon the shutdown operation.

|

Important: After a shutdown operation is initiated, it cannot be canceled.

|

When a shutdown operation is in progress, the Shutdown button is disabled and the status of the operation is displayed in an information message. The following list shows the shutdown sequence:

1. Going offline

2. Shutting down

3. Powering off

4. Shutdown completes

Verify that power to the TS7700 Virtualization Engine and to the cache is shut down before attempting to restart the system.

A cluster shutdown operation started from the TS7700 Virtualization Engine MI also shuts down the cache. The cache must be restarted first and allowed to achieve an operational state before any attempt is made to restart the TS7700 Virtualization Engine.

Cluster Identification Properties window

Use the window shown in Figure 8-24 to view and alter cluster identification properties for the TS7700 Virtualization Engine. You can use these properties to distinguish this distributed library.

Figure 8-24 MI Cluster Identification properties window

The following information related to cluster identification is displayed. To change the cluster identification properties, edit the available fields and click Modify. The following fields are available:

•Cluster nickname: The cluster nickname must be 1 - 8 characters in length and composed of alphanumeric characters. Blank spaces and the characters at (@), period (.), dash (-), and plus sign (+) are also allowed. Blank spaces cannot be used in the first or last character position.

•Cluster description: A short description of the cluster. You can use up to 63 characters.
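The nickname and description rules above can be expressed as a simple validation sketch in Python. It assumes that "alphanumeric" means ASCII letters and digits; the function names are hypothetical and are not part of the MI, which performs its own validation when you click Modify.

```python
import re

# Assumed reading of the rules: ASCII letters and digits plus blank, @ . - +,
# 1 - 8 characters in total, and no blank in the first or last position.
_EDGE = r"[A-Za-z0-9@.+-]"      # allowed first/last character (no blank)
_INNER = r"[A-Za-z0-9 @.+-]"    # allowed inner character (blank permitted)
_NICKNAME = re.compile(rf"{_EDGE}(?:{_INNER}{{0,6}}{_EDGE})?")

MAX_DESCRIPTION = 63  # cluster description limit


def is_valid_nickname(name: str) -> bool:
    """Check a cluster nickname against the rules described above."""
    return _NICKNAME.fullmatch(name) is not None


def is_valid_description(text: str) -> bool:
    """A cluster description can use up to 63 characters."""
    return len(text) <= MAX_DESCRIPTION
```

The pattern allows one edge character, optionally followed by up to six inner characters and a closing edge character, which gives the 1 - 8 character range while keeping blanks out of the first and last positions.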

Cluster health and detail

The TS7700 Virtualization Engine checks and updates the health of the system automatically at intervals. The status shown on this page is not real time; it reflects the most recent health check. To repopulate the summary window with the updated health status, click the Refresh icon. This operation takes several minutes to complete. If this cluster is operating in Write Protect Mode, a lock icon is shown in the middle right part of the cluster image.

See Figure 8-25 for reference. In the cluster front view, you see a general description about the cluster, such as model, name, family, microcode level, cluster description, and cache encryption capabilities right in the cluster badge (top of the box picture).

Hovering the cursor over the locations within the picture of the frame shows you the health status of different components, such as the network gear (at the top), Tape Volume Cache (TVC) controller and expansion enclosures (bottom and halfway up), and the engine server along with the internal 3957-Vxx disks (the middle). The summary of cluster health shows at the lower-right status bar, and also at the badge health status (over the frame).

Figure 8-25 Front view of Cluster Summary with health details

R3.2 of the Licensed Internal Code improves the element health information that the Management Interface provides. Note the information now available for a healthy cluster in the example shown in Figure 8-20 on page 311. Figure 8-26 shows another example of the enhanced information in the R3.2 MI, detailing a failed DDM in a 3956-CC7 cache controller.

Figure 8-26 Sample of a degraded 3956-CC7 with a failed DDM in R3.2

Figure 8-27 shows an example of the cache tube display in a multi-partitioned TS7720T (Tape Attach), introduced in R3.2 of the Licensed Internal Code.

Figure 8-27 Display of the Cache tube in a multi-partitioned TS7720T

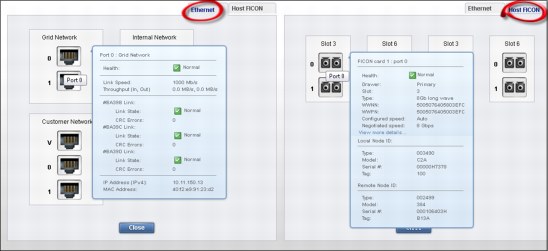

Figure 8-28 on page 322 shows the back view of the cluster summary window and health details. The components depicted in the back view are the Ethernet ports and host Fibre Channel connection (FICON) adapters for this cluster. Under the Ethernet tab, you can see the ports dedicated to the internal network (the TSSC network) and those dedicated to the external (client) network.

You can see the assigned IP addresses, whether IPv4 or IPv6 is being used, and the health of each port. For the grid Ethernet ports, information about links to the other clusters, data rates, and cyclic redundancy check (CRC) errors is displayed for each port, in addition to the assigned IP address and Media Access Control (MAC) address.

The host FICON adapter information is displayed under the Fibre tab for a selected cluster, as shown in Figure 8-28. The available information includes the adapter position and general health for each port.

Figure 8-28 Back view of the cluster summary with health details

To display the different area health details, hover the cursor over the component in the picture.

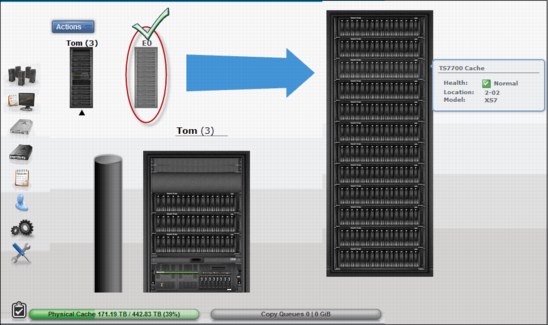

Cache expansion frame

The expansion frame view displays details and health for a cache expansion frame attached to the TS7720 Cluster. To open the expansion frame view, click the small image corresponding to a specific expansion frame, beneath the Actions button.

|

Tip: The expansion frame icon is only displayed if the accessed cluster has an expansion frame.

|

See Figure 8-29 for a visual reference of the Cache Expansion frame details and health view through the Management Interface.

Figure 8-29 Cache expansion frame details and health

Physical library and tape drive health

Clicking the Physical Library icon, which is visible in the Cluster Summary window of a TS7740 or TS7720T cluster, enables you to check the health of the tape library and tape drives. See Figure 8-30.

Also, clicking the TS3500 Tape Library Expanded picture opens the TS3500 Library Specialist web interface.

Figure 8-30 TS3500 Tape Library Expanded from Cluster Summary page

|

Restriction: If the cluster is not a TS7740 or a TS7720T, the Tape Library icon does not display on the TS7700 Virtualization Engine MI.

|

The library details and health are displayed as explained in Table 8-3.

Table 8-3 Library health details

| Detail | Definition |
|---|---|
| Physical library type - virtual library name | The type of physical library (always TS3500), accompanied by the name of the virtual library established on the physical library. |
| Tape Library Health, Fibre Switch Health, Tape Drive Health | The health states of the library and its main components. The following values are possible: Normal, Degraded, Failed, Unknown. |
| State | Whether the library is online or offline to the TS7700 Virtualization Engine. |
| Operational Mode | The library operational mode. The following values are possible: Auto, Paused. |
| Frame Door | Whether a frame door is open or closed. |
| Virtual I/O Slots | Status of the I/O station used to move cartridges into and out of the library. The following values are possible: Occupied, Full, Empty. |
| Physical Cartridges | The number of physical cartridges assigned to the identified virtual library. |
| Tape Drives | The number of physical tape drives available, as a fraction of the total. Click this detail to open the Physical Tape Drives window. |

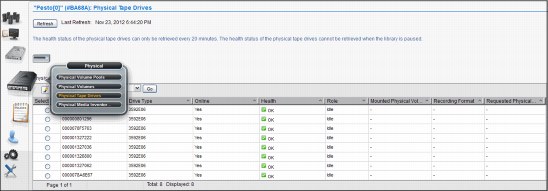

From the TS3500 Tape Library Expanded page, you can open the Physical Tape Drives window. Click the Tape Drives item in the health report, as shown in Figure 8-31.

Figure 8-31 Opening the Physical Tape Drives window

The Physical Tape Drives window looks similar to the example in Figure 8-32.

Figure 8-32 Physical Tape Drives window

On the Physical Tape Drives window, you see specific details about each physical tape drive, such as its serial number, drive type, whether a cartridge is mounted on it, and what it is mounted for. To see more information about a drive, such as drive encryption and tape library location, select the drive and choose Details from the Select Action menu. The detailed drive information window is shown in Figure 8-33.

Figure 8-33 Physical Tape Drive Details and navigation

8.2.4 The Monitor icon

The collection of pages under the Monitor icon in the MI enables you to monitor events in the TS7700 Virtualization Engine.

Events encompass every significant occurrence within a TS7700 Virtualization Engine grid or cluster, such as a malfunction alert, an operator intervention, a parameter change, a warning message, or a user-initiated action. Figure 8-34 shows the Monitor icon in a grid and in a stand-alone cluster.

Figure 8-34 Monitor icon in a grid or stand-alone configuration

|

Tip: Notice in Figure 8-34 that the Systems icon only shows up in a grid configuration, and the Cluster Summary item only shows up under Monitor in a stand-alone configuration.

|

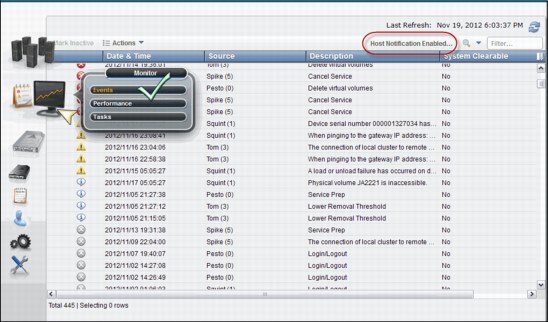

Events

Use this window, shown in Figure 8-35, to view all meaningful events that occurred within the grid or a stand-alone TS7700 Virtualization Engine Cluster.

You can send future events to the host operating system by enabling host notification. Although events are grid-wide, enabling or disabling host notification affects only the currently accessed cluster in a grid configuration. Also, task events are not sent to the host.

Information is displayed on the Events table for 30 days after the operation stops or the event becomes inactive.

Figure 8-35 TS7700 Management Interface Events window

|

Note: The Date & Time column shows event times converted to the local time of the computer on which the Management Interface was opened. If the date and time of the TS7700 were changed from Coordinated Universal Time during installation, the event times in the Events display on the Management Interface are offset by the same difference. We suggest using Coordinated Universal Time in all TS7700 clusters when possible.

|

Figure 8-36 shows the alerts, tasks, and event values and associated severity icons in the Events window in the MI.

Figure 8-36 Alerts, tasks, and event values and associated severity icons

Table 8-4 describes the columns shown in the Events window (see Figure 8-35 on page 327).

Table 8-4 Field name and description for the Events window

| Column name | Description |
|---|---|
| Date & Time | Date and time the event occurred. |
| Source | Cluster where the event occurred. |
| Location | Specific location on the cluster where the event occurred. |
| Description | Description of the event. |
| ID | The unique number that identifies the instance of the event. This number consists of a locally generated ID (for example, 923) and the type of event: E (event) or T (task). An event ID based on these examples appears as 923E. |
| Status | The status of an alert or task. If the event is an alert, this value is a fix procedure to be performed or the status of a call home operation. If the event is a task, this value is its progress or one of these final status categories: Canceled; Canceling; Completed; Completed, with information; Completed, with warning; Failed. |
| System Clearable | Whether the event can be cleared automatically by the system. The following values are possible: Yes: The event is cleared automatically by the system when the condition causing the event has been resolved. No: The event requires user intervention to clear. You must clear or deactivate the event manually after resolving the condition causing the event. |
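The ID format in Table 8-4 (a locally generated number followed by E or T) is simple enough to parse mechanically, which can help when scripting around an exported events list. The following Python sketch splits an ID such as 923E and reflects the rule that tasks are not sent to the host. The function names are hypothetical and are not part of any TS7700 interface.

```python
import re
from typing import NamedTuple

# Number followed by the type letter: E (event) or T (task), as in Table 8-4.
_EVENT_ID = re.compile(r"(\d+)([ET])")


class EventId(NamedTuple):
    number: int   # locally generated ID, for example 923
    kind: str     # "event" for E, "task" for T


def parse_event_id(text: str) -> EventId:
    """Split an ID such as '923E' into its number and its type."""
    match = _EVENT_ID.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"not an event or task ID: {text!r}")
    kind = "event" if match.group(2) == "E" else "task"
    return EventId(int(match.group(1)), kind)


def may_notify_host(event_id: EventId) -> bool:
    """Return False for tasks, which are never sent to the host."""
    return event_id.kind == "event"
```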

Table 8-5 lists actions that can be run on the Events table.

Table 8-5 Actions that can be run on the Events table

| To run this task | Action |
|---|---|
| Deactivate or clear one or more alerts | 1. Select at least one but no more than 10 events. 2. Click Mark Inactive. If a selected event is normally cleared by the system, you must confirm your selection. Other selected events are cleared immediately. Note: You can clear a running task, but if the task later fails, it is displayed again as an active event. |
| Enable or disable host notification for alerts | Select Actions → [Enable/Disable] Host Notification. This change affects only the accessing cluster. Note: Tasks are not sent to the host. |
| View a fix procedure for an alert | Select Actions → View Fix Procedure. Note: A fix procedure can be shown for only one alert at a time. No fix procedures are shown for tasks. |
| Download a comma-separated value (CSV) file of the events list | Select Actions → Download all Events. |
| View more details for a selected event | 1. Select an event. 2. Select Actions → Properties. |
| Hide or show columns on the table | 1. Right-click the table header. 2. Click the check box next to a column heading to hide or show that column in the table. Column headings that are checked display on the table. |
| Filter the table data | To filter by using a string of text: 1. Click in the Filter field. 2. Enter a search string. 3. Press Enter. To filter by column heading: 1. Click the down arrow next to the Filter field. 2. Select the column heading to filter by. 3. Refine the selection. |
| Reset the table to its default view | 1. Right-click the table header. 2. Click Reset Table Preferences. |
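Because Mark Inactive accepts at most 10 events at a time, clearing many non-system-clearable events from a downloaded events list means working in groups. The following Python sketch reads such a CSV and splits the IDs of events whose System Clearable value is No into groups of at most 10. It assumes, hypothetically, that the file has a header row with ID and System Clearable columns named as in Table 8-4; the actual layout of the Download all Events file is not documented here and might differ.

```python
import csv
import io
from typing import List

# Table 8-5: Mark Inactive accepts at least one and at most 10 selected events.
MAX_PER_SELECTION = 10


def manual_clear_ids(csv_text: str) -> List[str]:
    """Return IDs of events whose System Clearable value is No.

    Assumes (hypothetically) a header row with 'ID' and 'System Clearable'
    columns, named as in Table 8-4.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["ID"] for row in reader
            if (row.get("System Clearable") or "").strip().lower() == "no"]


def selections(ids: List[str], size: int = MAX_PER_SELECTION) -> List[List[str]]:
    """Split IDs into groups that each fit in a single Mark Inactive action."""
    return [ids[i:i + size] for i in range(0, len(ids), size)]
```

Each group corresponds to one selection in the Events table followed by one click of Mark Inactive, after the underlying condition has been resolved.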

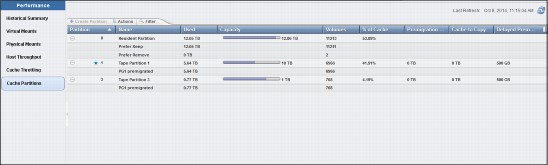

8.2.5 Performance

This section introduces the performance and statistics windows available in the TS7700 Virtualization Engine MI.

All graphical views, except the Historical Summary, show the last 15 minutes of activity. The Historical Summary presents a customized graphical view of different aspects of cluster operation in a 24-hour time frame. This 24-hour window can be slid back up to 90 days, covering three months of operations.
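The Historical Summary window arithmetic can be sketched in Python as follows. The sketch assumes that "slid back up to 90 days" means the chosen start time can be at most 90 days before the current time; the function name is hypothetical, and the MI enforces its own limits.

```python
from datetime import datetime, timedelta
from typing import Tuple

WINDOW = timedelta(hours=24)       # length of one Historical Summary view
MAX_LOOKBACK = timedelta(days=90)  # assumed limit on how far back it can slide


def summary_window(start: datetime, now: datetime) -> Tuple[datetime, datetime]:
    """Return the (start, end) of a 24-hour Historical Summary window.

    Raises ValueError when start lies in the future or more than 90 days
    before now.
    """
    if start > now:
        raise ValueError("start time is in the future")
    if now - start > MAX_LOOKBACK:
        raise ValueError("start time is more than 90 days back")
    return start, start + WINDOW
```

For example, a start of 1 June 2015 at midnight yields a window that ends at midnight on 2 June 2015.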

Historical Summary

Figure 8-37 shows the Throughput view of the Historical Summary (Monitor → Performance) in the Management Interface for a TS7720T cluster.

Figure 8-37 Performance window operation, Throughput view

Release R3.2 enhances the Performance window in the Management Interface to accommodate the new functions introduced by the new code. Figure 8-38 shows the Performance Historical Summary and related chart selections available for this item.

Figure 8-38 Performance options and chart selections

Figure 8-39 shows another Historical Summary sample from the same cluster, selecting the Throttling view. The performance data came from a TS7720T (tape attach) cluster.

Notice that the chart shows in orange the host throttling applied to the resident partition (CP0), whereas the brown line represents the host write throttling values applied to the tape-attached partitions (CP1 - CP7). Because all tape-attached partitions feed into the same premigration queue and share the same physical tape drive resources, they are all equally affected by the same host throttling value.

Figure 8-39 Historical Summary showing the Throttling view for a stand-alone TS7720T

Clicking the Download Spreadsheet icon shown on the left of Figure 8-39 saves the raw data for the graph in a comma-separated value (CSV) file. Downloadable data is also limited to a 24-hour period from the start date and time defined in the window.

By using the Select Metrics icon, you can select up to 10 data sets of different statistics to populate the chart, depending on which aspect of the cluster’s performance is under scrutiny. Figure 8-40 shows the Select Metrics window (not all options are shown).

|

Note: Up to ten different statistics data sets can be selected for the same graph view.

|

Figure 8-40 Select Metrics window

See Chapter 10, “Performance and monitoring” on page 597 for an explanation of the values and what to expect in the resulting graphs. Also, see the TS7700 3.2 IBM Knowledge Center for a complete description of the page and available settings. The TS7700 3.2 IBM Knowledge Center is available both locally on the TS7700 MI (by clicking the question mark icon at the upper right corner of the page) and on the following website:

Also, see IBM Virtualization Engine TS7700 Series Best Practices - Understanding, Monitoring, and Tuning the TS7700 Performance, WP101465, which is available on the IBM Techdocs Library website:

The WP101465 paper is an in-depth study of the inner workings of the TS7700 and of the factors that can affect the overall performance of a stand-alone cluster or a TS7700 grid. It also explains the throttling mechanisms and the tuning options available to help the subsystem achieve peak performance.

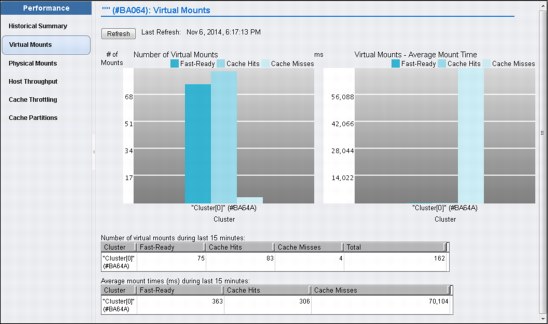

Virtual Mounts

The Virtual Mounts statistics for the last 15 minutes of activity are displayed per cluster in bar graph and table formats. Figure 8-41 shows an example of a Virtual Mounts graph for a TS7720T cluster.

Figure 8-41 Virtual Mounts performance window.

In a grid configuration, the Virtual Mounts chart displays the activity for all members in the grid. See the TS7700 Customer Information for more details.

Physical Mounts

The Physical Mounts statistics for the last 15 minutes of activity are displayed per cluster in bar graph and table formats. This page is available and active only when the selected TS7700 is attached to a physical tape library (TS7740 or TS7720T). When a grid has a physical library but the selected cluster does not, the MI displays the following message:

The cluster is not attached to a physical tape library.

This page is not visible on the TS7700 Management Interface if the grid does not have a physical library (that is, no member of the grid is tape-attached).
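The visibility rules for the Physical Mounts page can be summarized as a small decision function. This Python sketch encodes only the three cases described above and treats the TS7740 and TS7720T as the tape-attached models; the function name `physical_mounts_page` and its return values are hypothetical.

```python
TAPE_ATTACHED_MODELS = {"TS7740", "TS7720T"}
NO_LIBRARY_MESSAGE = "The cluster is not attached to a physical tape library."


def physical_mounts_page(grid_models, selected_model):
    """Describe what the MI shows for Physical Mounts (hypothetical helper).

    grid_models: models of all clusters in the grid, including the selected one.
    Returns None when the page is hidden, the message text when the selected
    cluster has no tape library, or "statistics" when the charts are shown.
    """
    if not any(model in TAPE_ATTACHED_MODELS for model in grid_models):
        return None  # no tape-attached member: the page is not visible
    if selected_model not in TAPE_ATTACHED_MODELS:
        return NO_LIBRARY_MESSAGE
    return "statistics"
```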

Figure 8-42 shows an example of a physical mounts page for a four-cluster grid.

Figure 8-42 Physical mounts statistic display

Host Throughput

The host throughput for data transfer activity is shown for the last 15 minutes in a bar graph and tables. The throughput is shown for all clusters in a grid. Figure 8-43 shows the Host Throughput page in the MI.

Notice the hyperlink, which enables the user to single out the throughput numbers of a specific host adapter in a specific cluster for a deeper look.

Figure 8-43 Host Throughput page

See the TS7700 3.2 IBM Knowledge Center for a complete description of the page.

The TS7700 3.2 IBM Knowledge Center is available both locally on the TS7700 MI (by clicking the question mark icon at the upper right corner of the page) and on the following website:

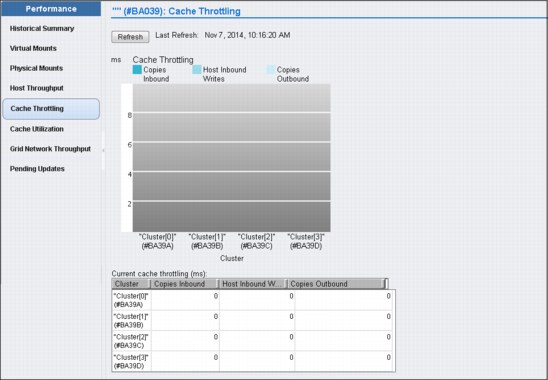

Cache Throttling

This page shows the throttling values applied to host write operations and RUN copy operations throughout the grid.

Figure 8-44 is an example of the Cache Throttling page.

Figure 8-44 Cache Throttling page

See the TS7700 3.2 IBM Knowledge Center for a complete description of the page, either locally on the TS7700 MI (by clicking the question mark icon at the upper right corner of the page) or on the following website:

Cache Utilization

Cache utilization statistics are presented both for clusters with a single resident-only or tape-only partition and for clusters with partitioned cache. The TS7720 and TS7740 models have only one partition (resident-only and tape-only, respectively), which accounts for the entire cache. The cache partitioning concept and the TS7720T cluster model were introduced in Release 3.2 of Licensed Internal Code.

Figure 8-45 on page 337 shows an example of Cache Utilization (single partition), as displayed in a TS7720 disk-only or TS7740 cluster.

Figure 8-45 TS7720 or TS7740 Cache Utilization page.

Cache Partition

The Cache Partition page presents the cache use statistics for the TS7720T model, in which the cache is made up of multiple partitions. Figure 8-46 shows a sample of the Cache Partition (multiple partitions) page. This page can be reached through either the Monitor icon (as described here) or the Virtual icon; both lead to the same page. On this page, the user can display the existing cache partitions, and can also create a new partition, reconfigure an existing one, or delete a partition as needed.

|

Tip: Consider restricting which Management Interface user roles are allowed to change partition configurations through this page.

|

Figure 8-46 Cache Partitions page

See the TS7700 IBM Knowledge Center for a complete description of the page, either locally on the TS7700 MI (by clicking the question mark icon) or on the following website:

Grid Network Throughput

This page is available only if the TS7700 cluster is a member of a grid. The Grid Network Throughput page shows the last 15 minutes of cross-cluster data transfer rate statistics, in megabytes per second (MBps). Each cluster of the grid is represented both in the bar graph chart and in the tables. Figure 8-47 shows an example of the Grid Network Throughput page.

Figure 8-47 Grid Network Throughput page

See the TS7700 3.2 IBM Knowledge Center for more details about this page. Chapter 10, “Performance and monitoring” on page 597 describes the data flow within the grid and how these numbers vary during operation.

Pending Updates

The Pending Updates window is available only if the TS7700 cluster is a member of a grid. It can be used to monitor the status of outstanding updates per cluster throughout the grid. Pending updates can accumulate when one cluster is offline, in service preparation, or in service mode while the other grid peers continue to process the client’s normal production work.

A faulty grid link can also cause a RUN or SYNC copy to become deferred. The Pending Updates window can be used to follow the progress of those copies. Figure 8-48 on page 339 shows a sample of the Pending Updates window.

The Download button at the top of the page saves a comma-separated values (.CSV) file that lists all volumes or grid global locks targeted during an ownership takeover. The volume or global pending updates are listed, along with hot tokens and stolen volumes.

Tokens are internal data structures that are used to track changes to the ownership, data, or properties of each logical volume in the grid. Hot tokens occur when a cluster attempts to merge its own token information with the other clusters, but those clusters are not available for the merge operation.

A stolen volume is a volume whose ownership was taken over while the owning cluster was in service mode or offline, or, after an unexpected cluster outage, whose ownership was taken over under an operator’s direction or by the Autonomic Ownership Takeover Manager (AOTM).

Figure 8-48 Pending Updates page.

See Chapter 2, “Architecture, components, and functional characteristics” on page 13 for more information about copy modes and the other concepts referred to in this section. Also, see the TS7700 3.2 IBM Knowledge Center for other information about this MI function on the following website:

Tasks window

This page is used to monitor the status of tasks submitted to the TS7700 Virtualization Engine. You can find information for an entire grid if the accessing cluster is part of a grid, or only for this individual cluster if it is a stand-alone configuration. You can format the table by using filters, or you can reset the table format to its default by using reset table preferences. Information is available in the task table for 30 days after the operation stops or the event or action becomes inactive.

Tasks are listed by start date and time. Tasks that are still running are shown at the top of the table, and completed tasks are listed at the bottom. Figure 8-49 shows an example of the Tasks window.
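The ordering and retention rules of the task table can be modeled in a few lines of Python. This is a minimal sketch: the `Task` record and `task_table` function are hypothetical, and the newest-first order within each group is an assumption, because the text states only that tasks are listed by start date and time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

RETENTION = timedelta(days=30)  # completed tasks stay visible for 30 days


@dataclass
class Task:
    name: str
    start: datetime
    end: Optional[datetime] = None  # None while the task is still running


def task_table(tasks, now):
    """Running tasks first, then completed tasks, each group by start time.

    Completed tasks that ended more than 30 days ago are dropped.
    Newest-first order within each group is an ASSUMPTION.
    """
    visible = [t for t in tasks if t.end is None or now - t.end <= RETENTION]
    running = sorted((t for t in visible if t.end is None),
                     key=lambda t: t.start, reverse=True)
    done = sorted((t for t in visible if t.end is not None),
                  key=lambda t: t.start, reverse=True)
    return running + done
```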

Figure 8-49 Tasks window

|

Note: The Start Time column shows the time at which a task started, converted to the local time of the computer on which the Management Interface is displayed. If the date and time of the TS7700 were changed from Coordinated Universal Time (UTC) during installation, the task start times in the Tasks display on the MI are offset by the same difference. Use UTC on all TS7700 clusters unless you have a good reason not to.

|
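The effect described in the note can be reproduced with Python’s `datetime` module. This is an illustrative model, not TS7700 code: it assumes that the cluster stamps each task with its own clock, that the MI treats that stamp as UTC, and that the MI converts it to the viewer’s time zone. The function name `displayed_start` is hypothetical.

```python
from datetime import datetime, timedelta, timezone


def displayed_start(true_start_utc, cluster_clock_offset, viewer_tz):
    """Start time as shown to the viewer under the model described above.

    true_start_utc: timezone-aware UTC time at which the task really started.
    cluster_clock_offset: how far the cluster clock deviates from UTC
    (timedelta(0) when the cluster runs on UTC, as recommended).
    """
    stamp = true_start_utc + cluster_clock_offset  # what the cluster records
    return stamp.astimezone(viewer_tz)             # what the browser shows
```

With a cluster clock set 5 hours behind UTC and a viewer in a UTC-5 time zone, a task that really started at 07:00 local time is shown as 02:00, which is exactly the 5-hour offset the note warns about. With `cluster_clock_offset=timedelta(0)`, the displayed time is correct.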

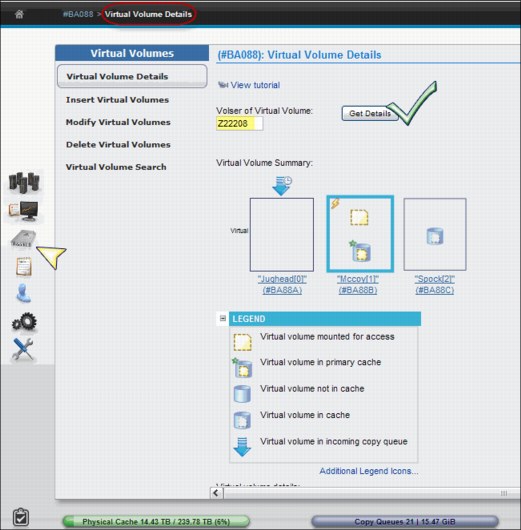

8.2.6 The Virtual icon

TS7700 Virtualization Engine MI pages collected under the Virtual icon can help you view or change settings related to virtual volumes and their queues, virtual drives, and scratch (Fast Ready) categories. Release 3.2 of Licensed Internal Code introduces the TS7720T (Tape Attach). For the TS7720T, a new item, Cache Partitions, has been added under the Virtual icon; it enables the user to create, modify, or delete cache partitions.

Figure 8-50 shows the Virtual icon and the options available both for TS7720T and the traditional models.

The Cache Partitions item is available only for the TS7720T models, and the Incoming Copy Queue item is shown only in grid configurations.

Figure 8-50 The Virtual icon and options

The available items under the Virtual icon are described in the following topics.

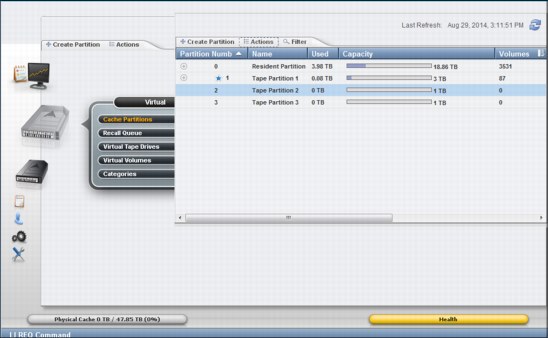

Cache Partitions

The Cache Utilization (multiple partitions) page is where the Management Interface enables the user to create a new cache partition, or to reconfigure or delete an existing one. Cache partitioning was introduced with R3.2 of Licensed Internal Code for the new TS7720T models. The same page also enables the user to monitor the occupancy and usage of the cache and its partitions. Figure 8-51 shows a screen capture of the Cache Partitions page.

Figure 8-51 Cache Partitions page in Management Interface

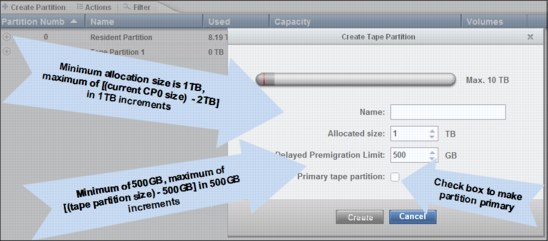

Figure 8-52 on page 343 shows a sequence for creating a new partition. There can be up to eight partitions, from the resident partition (partition 0, CP0) to Tape Partition 7. The allocated size of a new tape partition is subtracted from the resident partition capacity, provided that CP0 has more than 2 TB of free space. See the TS7700 3.2 IBM Knowledge Center for the complete set of rules and allowed values in effect for this page. Chapter 2, “Architecture, components, and functional characteristics” on page 13 describes the TS7720T, cache partitions, and their usage.

|

Restrictions: No new partition can be created if the resident-only partition (CP0) has 2 TB or less of free space. Creation of new partitions is also blocked while a FlashCopy for DR operation is in progress, or while any existing partition is in an overcommitted state.

|
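The creation rules and restrictions above can be collected into one validation function. This Python sketch models only the rules stated in this section: at most eight partitions (CP0 plus CP1 - CP7), more than 2 TB free in CP0, no FlashCopy for DR in progress, and no overcommitted partition. The check that the requested size must fit within the free space of CP0 is an assumption based on the failure case shown in Figure 8-53. All class and function names are hypothetical. The IBM Knowledge Center lists the complete rule set.

```python
from dataclasses import dataclass, field
from typing import List

MAX_PARTITIONS = 8     # CP0 (resident) plus tape partitions CP1 - CP7
MIN_CP0_FREE_TB = 2.0  # CP0 must have MORE than 2 TB free


@dataclass
class TapePartition:
    number: int        # 1..7
    size_tb: float
    overcommitted: bool = False


@dataclass
class CacheState:
    cp0_free_tb: float
    tape_partitions: List[TapePartition] = field(default_factory=list)
    flashcopy_for_dr_active: bool = False


def check_new_partition(state, size_tb):
    """Return "" if creation is allowed, otherwise the blocking reason."""
    if state.flashcopy_for_dr_active:
        return "FlashCopy for DR in progress"
    if any(p.overcommitted for p in state.tape_partitions):
        return "an existing partition is overcommitted"
    if len(state.tape_partitions) >= MAX_PARTITIONS - 1:
        return "tape partitions CP1-CP7 already defined"
    if state.cp0_free_tb <= MIN_CP0_FREE_TB:
        return "CP0 has 2 TB or less of free space"
    if size_tb > state.cp0_free_tb:  # ASSUMPTION: request must fit in CP0 free space
        return "requested size exceeds CP0 free space"
    return ""


def create_tape_partition(state, size_tb):
    """Create the lowest-numbered free tape partition and return its number."""
    reason = check_new_partition(state, size_tb)
    if reason:
        raise ValueError(reason)
    used = {p.number for p in state.tape_partitions}
    number = min(n for n in range(1, MAX_PARTITIONS) if n not in used)
    state.tape_partitions.append(TapePartition(number, size_tb))
    state.cp0_free_tb -= size_tb  # the new partition's size comes out of CP0
    return number
```

For example, starting with 20 TB free in CP0, creating a 12 TB partition yields CP1 and leaves 8 TB free, so a subsequent 10 TB request is rejected, much like the failure case in Figure 8-53.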

Figure 8-52 illustrates creating a new partition.

Figure 8-52 Creating a new Tape Partition

Figure 8-53 shows an example of a successful creation in the upper half. The lower half shows an example in which the user did not take into account the amount of free space available in CP0.

Figure 8-53 Example of success and a failure to create a new partition.

Notice that redefining the size of existing partitions in an operational TS7720T might create an unexpected load peak in the overall premigration queue, causing host write throttling to be applied to the tape partitions.

For instance, consider the following example, in which a tape-attached partition is downsized and instantly becomes overcommitted. The TS7720T premigration queue is flooded by volumes that were dislodged when this cache partition became smaller. The partition adapts to its new size by migrating the excess volumes to physical tape.

Figure 8-54 shows the initial state: a TS7720T with Tape Partition 1 operating with 12 TB of cache.

Figure 8-54 Tape partition 1 operating with 12TB Cache.

Figure 8-55 shows Tape Partition 1 being downsized to 8 TB. Note the initial warning, and the overcommit statement that appears when the new size leaves the tape partition with an overcommitted cache size.

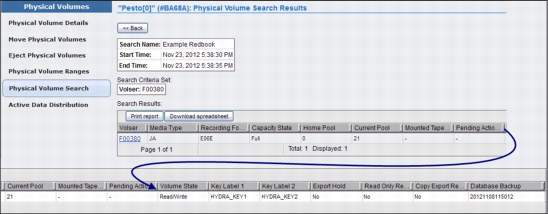

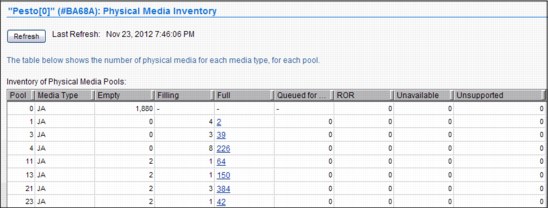

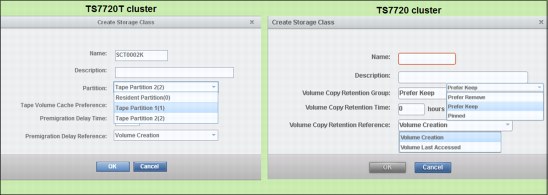

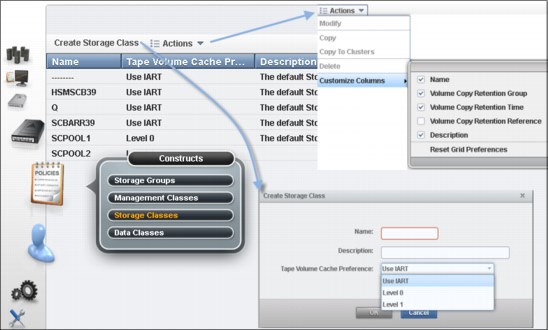

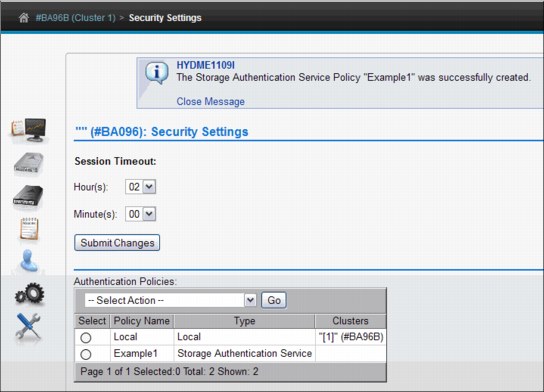

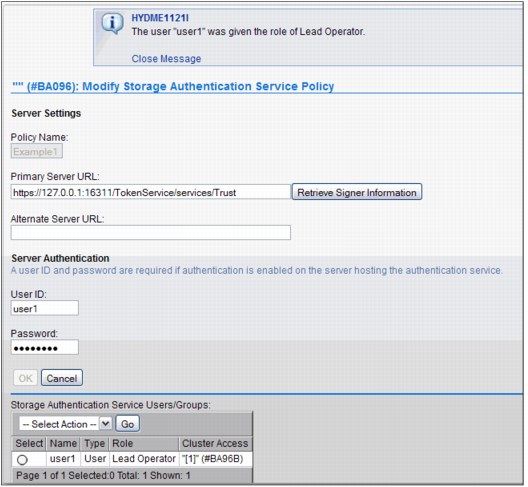

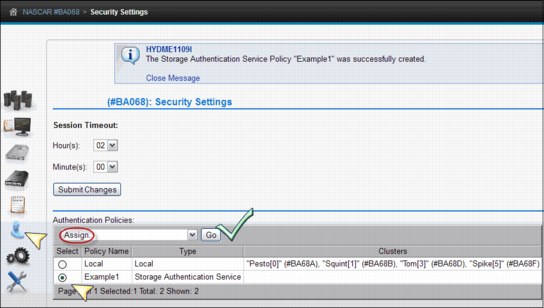

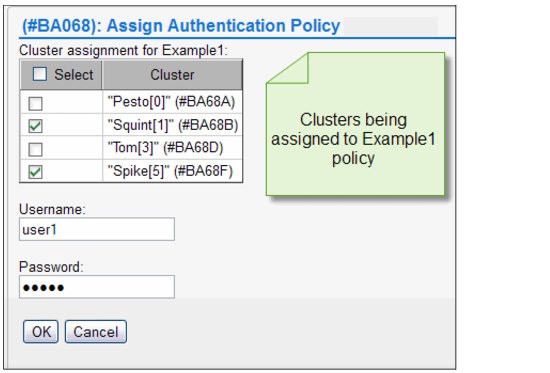

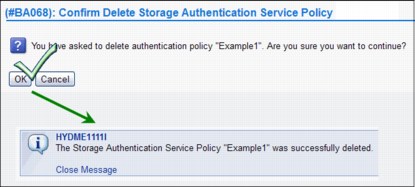

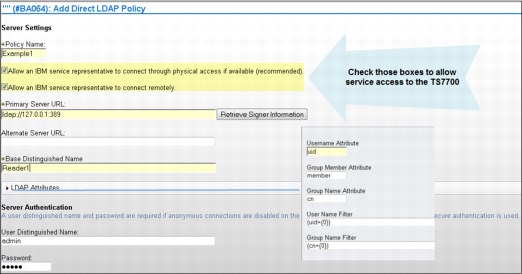

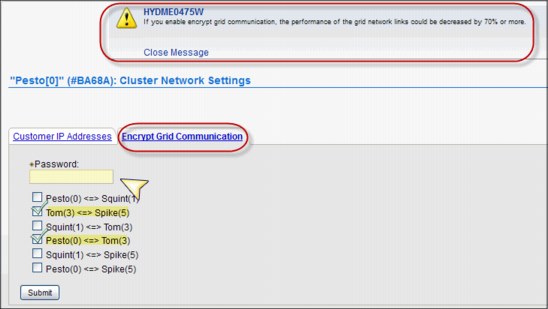

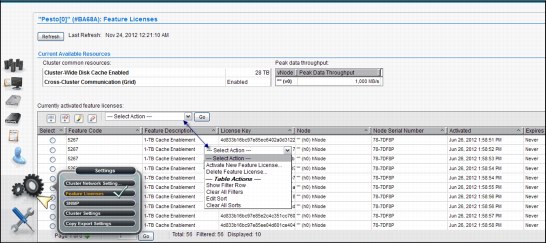

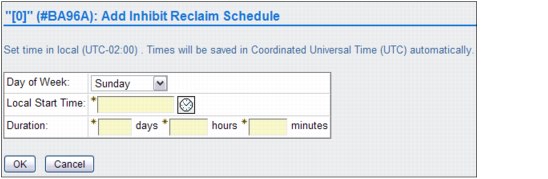

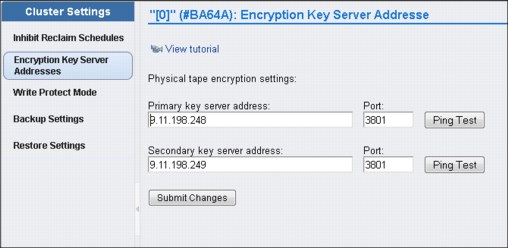

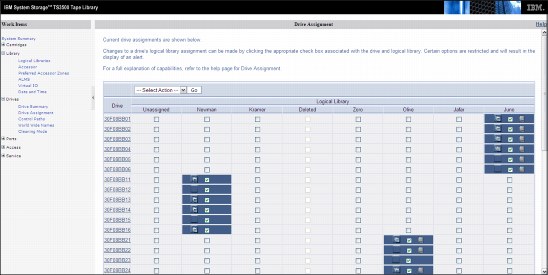

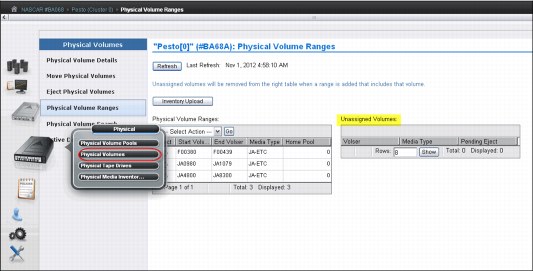

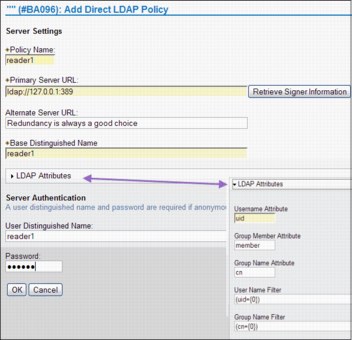

Figure 8-55 Downsizing Tape Partition 1, and the overcommit warning.
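The arithmetic behind the overcommit in this example can be sketched in Python. The sketch assumes that the amount to be moved out is simply the data currently resident in the partition minus its new size. The 11 TB occupancy used below is a hypothetical figure, because the example gives only the old (12 TB) and new (8 TB) partition sizes. The function name `downsize_partition` is also hypothetical.

```python
def downsize_partition(resident_tb, new_size_tb):
    """Return (overcommitted, excess_tb) after shrinking a tape partition.

    resident_tb: data currently held in the partition's cache.
    excess_tb: data that must leave the partition's cache by being migrated
    to physical tape; any part of it that is not yet premigrated first joins
    the shared premigration queue, which can trigger host write throttling.
    """
    excess_tb = max(0.0, resident_tb - new_size_tb)
    return excess_tb > 0.0, excess_tb
```

If Tape Partition 1 held 11 TB when it was resized from 12 TB to 8 TB, `downsize_partition(11.0, 8.0)` reports an overcommit of 3 TB that must be migrated to tape before the partition fits its new size.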