Host attachment

This chapter describes the following topics and considerations for attaching host systems to the DS8000 series, with a focus on availability and performance:

•DS8000 host attachment types.

•Attaching Open Systems hosts.

•Attaching IBM Z hosts.

Detailed performance tuning considerations for specific operating systems are provided in later chapters of this book.

8.1 DS8000 host attachment

The DS8000 enterprise storage solution provides various host attachments that allow exceptional performance and superior data throughput. At a minimum, have two connections to any host, and the connections must be on different host adapters (HAs) in different I/O enclosures. You can consolidate storage capacity and workloads for Open Systems hosts and z Systems hosts by using the following adapter types and protocols:

•Fibre Channel Protocol (FCP)-attached Open Systems hosts.

•FCP/Fibre Connection (FICON)-attached z Systems hosts.

•zHyperLink Connection (zHyperLink)-attached z Systems hosts.

The DS8900F supports up to 32 Fibre Channel (FC) host adapters, with four FC ports for each adapter. Each port can be independently configured to support Fibre Channel connection (IBM FICON) or Fibre Channel Protocol (FCP).

Each adapter type is available in both longwave (LW) and shortwave (SW) versions. The DS8900F I/O bays support up to four host adapters for each bay, allowing up to 128 ports for each storage system. This configuration results in a theoretical aggregate host I/O bandwidth of approximately 128 x 32 Gbps (4,096 Gbps). Each port provides industry-leading throughput and I/O rates for FICON and FCP.
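
The port count and the theoretical bandwidth follow from simple arithmetic. The following Python sketch reproduces it from the figures in this section. It uses nominal line rates only and ignores protocol overhead, so treat the result as an upper bound rather than a throughput estimate. The number of I/O bays is derived from the same figures, not stated separately.

```python
# Nominal DS8900F front-end figures quoted in this section.
MAX_HOST_ADAPTERS = 32    # FC host adapters per storage system
PORTS_PER_ADAPTER = 4     # FC ports per host adapter
ADAPTERS_PER_IO_BAY = 4   # host adapters per I/O bay
PORT_SPEED_GBPS = 32      # 32 GFC line rate

max_ports = MAX_HOST_ADAPTERS * PORTS_PER_ADAPTER
io_bays_needed = MAX_HOST_ADAPTERS // ADAPTERS_PER_IO_BAY  # derived, not quoted
aggregate_gbps = max_ports * PORT_SPEED_GBPS               # line rate, no overhead

print(f"Ports: {max_ports}")                                   # Ports: 128
print(f"I/O bays for a full configuration: {io_bays_needed}")  # I/O bays for a full configuration: 8
print(f"Theoretical aggregate: {aggregate_gbps} Gbps")         # Theoretical aggregate: 4096 Gbps
```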

The host adapters that are available in the DS8900F have the following characteristics:

•32 Gbps FC HBAs (32 Gigabit Fibre Channel - GFC):

– Four FC ports

– FC Gen7 technology

– New IBM Custom ASIC with Gen3 PCIe interface

– Quad-core PowerPC processor

– Negotiation to 32, 16, or 8 Gbps; 4 Gbps or less is not possible

•16 Gbps FC HBAs (16 GFC):

– Four FC ports

– Gen2 PCIe interface

– Quad-core PowerPC processor

– Negotiation to 16, 8, or 4 Gbps; 2 Gbps or less is not possible

The DS8900F supports a mixture of 32 Gbps and 16 Gbps FC adapters. Hosts with slower FC speeds like 4 Gbps are still supported if their HBAs are connected through a switch.
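
To illustrate the negotiation rules above, the following Python sketch models a direct host-to-DS8000 link as picking the highest speed that both ends support. It is a simplified model, not the actual FC link initialization procedure, and the host HBA speed sets in the usage lines are hypothetical.

```python
from typing import Optional

# Link speeds (Gbps) that each DS8900F FC host adapter can negotiate,
# as listed above.
ADAPTER_SPEEDS = {
    "32GFC": {32, 16, 8},
    "16GFC": {16, 8, 4},
}


def negotiated_speed(adapter: str, hba_speeds: set) -> Optional[int]:
    """Highest speed supported at both ends of a direct link, or None."""
    common = ADAPTER_SPEEDS[adapter] & hba_speeds
    return max(common) if common else None


print(negotiated_speed("32GFC", {16, 8, 4}))  # 16
print(negotiated_speed("32GFC", {4, 2, 1}))   # None: attach through a switch
print(negotiated_speed("16GFC", {4, 2, 1}))   # 4
```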

The 32 Gbps FC adapter is encryption-capable. Encrypting the host bus adapter (HBA) traffic usually does not cause any measurable performance degradation.

With FC adapters that are configured for FICON, the DS8900F series provides the following configuration capabilities:

•Fabric or point-to-point topologies.

•A maximum of 128 host adapter ports, depending on the DS8900F system memory and processor features.

•A maximum of 509 logins for each FC port.

•A maximum of 8192 logins for each storage unit.

•A maximum of 1280 logical paths on each FC port.

•Access to all 255 control-unit images (65,280 CKD devices) over each FICON port.

•A maximum of 512 logical paths for each control unit image.

An IBM Z server supports 32,768 devices per FICON host channel. To fully access 65,280 devices, it is necessary to connect multiple FICON host channels to the storage system. You can access the devices through an FC switch or FICON director to a single storage system FICON port.
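
The device numbers above can be checked with a little arithmetic. The following Python sketch derives the number of devices per control-unit image (65,280 / 255 = 256) and the minimum number of FICON host channels needed just to address all devices. This is an addressing floor only; for availability and performance, you typically configure more channels.

```python
import math

CU_IMAGES = 255               # control-unit images reachable over each FICON port
DEVICES_PER_CU_IMAGE = 256    # 65,280 / 255
DEVICES_PER_CHANNEL = 32_768  # devices per FICON host channel on IBM Z

total_devices = CU_IMAGES * DEVICES_PER_CU_IMAGE
# Smallest number of host channels whose combined device limit covers all devices.
min_channels = math.ceil(total_devices / DEVICES_PER_CHANNEL)

print(total_devices, min_channels)  # 65280 2
```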

The 32 GFC host adapter doubles the data throughput of 16 GFC links. Compared with the 16 GFC adapters, the 32 GFC adapters also deliver more I/Os per second (IOPS) per adapter and lower latency.

The DS8000 storage system can support host and remote mirroring links by using Peer-to-Peer Remote Copy (PPRC) on the same I/O port. However, it is preferable to use dedicated I/O ports for remote mirroring links.

zHyperLink reduces I/O latency by providing a point-to-point communication path between the Central Processor Complex (CPC) of an IBM z14®, z14 ZR1, or z15™ and the I/O bay of a DS8000 family storage system through optical fiber cables and zHyperLink adapters. zHyperLink is intended to complement FICON and to speed up the I/O requests that are typical of transaction processing. To use it, zHyperLink adapters are installed in both the z/OS host and the DS8900 storage system and connected with zHyperLink cables to perform synchronous I/O operations. zHyperLink connections are limited to a distance of 150 meters (492 feet). All DS8900 models have zHyperLink capability: up to 12 zHyperLink adapters are supported on DS8950F and DS8980F models, and up to 4 on the DS8910F.

Planning and sizing the HAs for performance is not an easy task, so use modeling tools such as IBM Storage Modeller (see Chapter 6.1, “IBM Storage Modeller” on page 126).

The factors that might affect the performance at the HA level are typically the aggregate throughput and the workload mix that the adapter can handle. All connections on a HA share bandwidth in a balanced manner. Therefore, host attachments that require maximum I/O port performance may be connected to HAs that are not fully populated. You must allocate host connections across I/O ports, HAs, and I/O enclosures in a balanced manner (workload spreading).
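
One way to apply workload spreading is a round-robin selection that alternates I/O enclosures first and HAs second. The following Python sketch is a hypothetical helper, not an IBM tool; the (enclosure, adapter, port) tuples are illustrative and do not follow DS8000 port ID syntax.

```python
from collections import defaultdict
from itertools import zip_longest


def interleave(groups):
    """Round-robin over several lists: a0, b0, a1, b1, ... (skips gaps)."""
    return [item for batch in zip_longest(*groups) for item in batch if item is not None]


def spread_ports(ports, count):
    """Choose `count` host connections, alternating I/O enclosures first
    and host adapters (HAs) within each enclosure second.

    `ports` is a list of (enclosure, adapter, port) tuples (illustrative IDs).
    """
    tree = defaultdict(lambda: defaultdict(list))
    for enclosure, adapter, port in sorted(ports):
        tree[enclosure][adapter].append((enclosure, adapter, port))

    # Inside each enclosure, alternate between its HAs.
    per_enclosure = [
        interleave([tree[enc][ha] for ha in sorted(tree[enc])])
        for enc in sorted(tree)
    ]
    # Across enclosures, alternate again so consecutive picks change enclosure.
    return interleave(per_enclosure)[:count]


# Two enclosures, two HAs each, four ports per HA.
ports = [(enc, ha, p) for enc in range(2) for ha in range(2) for p in range(4)]
print(spread_ports(ports, 4))
# [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0)]
```

With two connections, this heuristic already satisfies the minimum stated at the beginning of this section: the two ports are on different HAs in different I/O enclosures.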

Find further fine-tuning guidelines in Chapter 4.10.2, “Further optimization” on page 103.

8.2 Attaching Open Systems hosts

This section describes the host system requirements and attachment considerations for Open Systems hosts running AIX, Linux, Hewlett-Packard UNIX (HP-UX), Oracle Solaris, VMware, and Microsoft Windows to the DS8000 series with Fibre Channel (FC) adapters.

No Ethernet/iSCSI: There is no direct Ethernet/iSCSI, or Small Computer System Interface (SCSI) attachment support for the DS8000 storage system.

8.2.1 Fibre Channel

FC is a full-duplex, serial communications technology to interconnect I/O devices and host systems that might be separated by tens of kilometers. The DS8900 storage system supports 32, 16, 8, and 4 Gbps connections and it negotiates the link speed automatically.

Supported Fibre Channel-attached hosts

For specific considerations that apply to each server platform, and for the current information about supported servers (the list is updated periodically), refer to:

Fibre Channel topologies

The DS8900F architecture supports two FC interconnection topologies:

•Direct connect

•Switched fabric

Note: With DS8900F, Fibre Channel Arbitrated Loop (FC-AL) is no longer supported.

For maximum flexibility and performance, use a switched fabric topology.

The next section describes best practices for implementing a switched fabric.

8.2.2 Storage area network implementations

This section describes a basic storage area network (SAN) and how to implement it for maximum performance and availability. It shows examples of a correctly connected SAN that maximizes disk I/O throughput.

Description and characteristics of a storage area network

With a SAN, you can connect heterogeneous Open Systems servers to a high-speed network and share storage devices, such as disk storage and tape libraries. Instead of each server having its own locally attached storage and tape drives, a SAN shares centralized storage components, and you can efficiently allocate storage to hosts.

Storage area network cabling for availability and performance

For availability and performance, you must connect to different adapters in different I/O enclosures whenever possible. You must use multiple FC switches or directors to avoid a potential single point of failure. You can use inter-switch links (ISLs) for connectivity.

Importance of establishing zones

For FC attachments in a SAN, it is important to establish zones to prevent interaction between HAs. Every time an HA joins the fabric, a Registered State Change Notification (RSCN) is issued. An RSCN does not cross zone boundaries, but it affects every device or HA in the same zone.

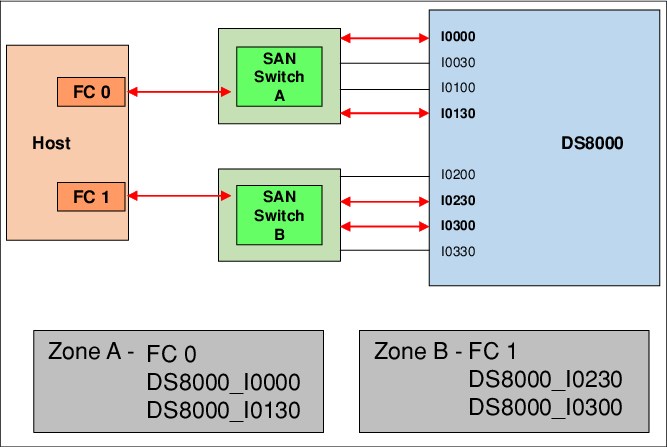

If a HA fails and starts logging in and out of the switched fabric, or a server must be restarted several times, you do not want it to disturb the I/O to other hosts. Figure 8-1 shows zones that include only a single HA and multiple DS8900F ports (single initiator zone). This approach is the preferred way to create zones to prevent interaction between server HAs.

Tip: Each zone contains a single host system adapter with the wanted number of ports attached to the DS8000 storage system.

By establishing zones, you reduce the possibility of interactions between system adapters in switched configurations. You can establish the zones by using either of two zoning methods:

•Port number

•Worldwide port name (WWPN)

You can configure switch ports that are attached to the DS8000 storage system in more than one zone, which enables multiple host system adapters to share access to the DS8000 HA ports. Shared access to a DS8000 HA port might be from host platforms that support a combination of bus adapter types and operating systems.
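
The single-initiator rule can be expressed as a small generator of WWPN-based zone definitions. The following Python sketch is illustrative only: the zone naming convention and host alias are hypothetical, the WWPNs are borrowed from the AIX example in 8.2.4 (the ports of one of the two DS8000 systems shown there), and the resulting member lists still must be entered with your switch vendor's zoning tools.

```python
def single_initiator_zones(host, assignments):
    """Build WWPN-based single-initiator zones.

    `assignments` maps each host HBA WWPN to the DS8000 port WWPNs that the
    HBA should reach. Every zone gets exactly one initiator.
    """
    zones = {}
    for index, (initiator, targets) in enumerate(assignments.items()):
        zone_name = f"z_{host}_fc{index}"  # hypothetical naming convention
        zones[zone_name] = [initiator, *targets]
    return zones


# WWPNs taken from the AIX example in 8.2.4 (one DS8000 port per HBA).
zones = single_initiator_zones(
    "p8host",  # hypothetical host alias
    {
        "c05076082d8201ba": ["500507630a1013e7"],  # fscsi0
        "c05076082d8201bc": ["500507630a1b93e7"],  # fscsi1
    },
)
for name, members in zones.items():
    print(name, members)
```

Because the DS8000 target WWPNs can appear in the zones of many hosts, this layout also matches the shared-port case described above.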

Important: A DS8000 HA port configured to run with the FICON topology cannot be shared in a zone with non-z/OS hosts. Ports with non-FICON topology cannot be shared in a zone with z/OS hosts.

LUN masking

In FC attachment, LUN affinity is based on the WWPN of the adapter on the host, which is independent of the DS8000 HA port to which the host is attached. This LUN masking function on the DS8000 storage system is provided through the definition of DS8000 volume groups. A volume group is defined by using the DS Storage Manager or DS8000 command-line interface (DSCLI), and host WWPNs are connected to the volume group. The LUNs to be accessed by the hosts that are connected to the volume group are defined to be in that volume group.

Although it is possible to limit through which DS8000 HA ports a certain WWPN connects to volume groups, it is preferable to define the WWPNs to have access to all available DS8000 HA ports. Then, by using the preferred process of creating FC zones, as described in “Importance of establishing zones” on page 190, you can limit the wanted HA ports through the FC zones. In a switched fabric with multiple connections to the DS8000 storage system, this concept of LUN affinity enables the host to see the same LUNs on different paths.
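
The following Python sketch models the LUN masking concept described above: a volume group ties host WWPNs to volumes, and the set of volumes that a host port can see depends only on its WWPN. The class and function names are hypothetical, the volume ID and WWPNs are illustrative, and the model ignores the actual DSCLI object model.

```python
from dataclasses import dataclass, field


@dataclass
class VolumeGroup:
    """Simplified model of a DS8000 volume group (LUN masking)."""
    name: str
    volumes: set[str] = field(default_factory=set)     # DS8000 volume IDs
    host_wwpns: set[str] = field(default_factory=set)  # host HBA WWPNs


def visible_volumes(host_wwpn: str, volume_groups: list[VolumeGroup]) -> set[str]:
    """Return the volumes a host port may access.

    The DS8000 port the host logs in through is deliberately not a
    parameter: LUN affinity depends only on the host WWPN. Which DS8000
    ports are actually used is controlled separately by SAN zoning.
    """
    return {
        volume
        for group in volume_groups
        if host_wwpn in group.host_wwpns
        for volume in group.volumes
    }


v1 = VolumeGroup("V1", volumes={"8099"},
                 host_wwpns={"c05076082d8201ba", "c05076082d8201bc"})
print(visible_volumes("c05076082d8201ba", [v1]))  # {'8099'}
print(visible_volumes("10000000c9000000", [v1]))  # set() (hypothetical unknown WWPN)
```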

Configuring logical disks in a storage area network

In a SAN, carefully plan the configuration to prevent many disk device images from being presented to the attached hosts. Presenting many disk devices to a host can cause longer failover times in cluster environments. Also, boot times can take longer because the device discovery steps take longer.

The number of times that a DS8000 logical disk is presented as a disk device to an open host depends on the number of paths from each HA to the DS8000 storage system. The number of paths from an open server to the DS8000 storage system is determined by these factors:

•The number of HAs installed in the server

•The number of connections between the SAN switches and the DS8000 storage system

•The zone definitions created by the SAN switch software

Physical paths: Each physical path to a logical disk on the DS8000 storage system is presented to the host operating system as a disk device.

Consider a SAN configuration, as shown in Figure 8-1:

•The host has two connections to the SAN switches, and each SAN switch in turn has four connections to the DS8000 storage system.

•Zone A includes one FC card (FC0) and two paths from SAN switch A to the DS8000 storage system.

•Zone B includes one FC card (FC1) and two paths from SAN switch B to the DS8000 storage system.

•This host uses only four of the eight possible paths to the DS8000 storage system in this zoning configuration.

By cabling the SAN components and creating zones, as shown in Figure 8-1 on page 192, each logical disk on the DS8000 storage system is presented to the host server four times because there are four unique physical paths from the host to the DS8000 storage system. As you can see in Figure 8-1 on page 192, Zone A shows that FC0 has access through DS8000 host ports I0000 and I0130. Zone B shows that FC1 has access through DS8000 host ports I0230 and I0300. So, in combination, this configuration provides four paths to each logical disk presented by the DS8000 storage system. If Zone A and Zone B are modified to include four paths each to the DS8000 storage system, the host has a total of eight paths to the DS8000 storage system. In that case, each logical disk that is assigned to the host is presented as eight physical disks to the host operating system. Additional DS8000 paths are shown as connected to Switch A and Switch B, but they are not in use for this example.

Figure 8-1 Zoning in a SAN environment

You can see how the number of logical devices that are presented to a host can increase rapidly in a SAN environment if you are not careful about selecting the size of logical disks and the number of paths from the host to the DS8000 storage system.

Typically, it is preferable to cable the switches and create zones in the SAN switch software for dual-attached hosts so that each server HA has 2 - 4 paths from the switch to the DS8000 storage system. With hosts configured this way, you can allow the multipathing module to balance the load across the four HAs in the DS8000 storage system.

In total, two to four paths for each LUN are usually a good compromise for small and mid-size servers. Consider eight paths if a high data rate is required. Zoning more paths to a LUN, such as more than eight connections from the host to the DS8000 storage system, does not improve SAN performance and can cause too many devices to be presented to the operating system.
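
The path arithmetic of Figure 8-1 and the rules of thumb above can be combined in a short Python sketch. It counts the paths per LUN from the zoning, multiplies by a hypothetical number of LUNs to get the disk devices that the operating system sees, and classifies the path count. The thresholds are the guidance from this section, not hard limits.

```python
def count_paths(zoning):
    """Paths per LUN = DS8000 ports zoned with each host HBA, summed over HBAs."""
    return sum(len(ds8000_ports) for ds8000_ports in zoning.values())


def assess_paths(paths):
    """Classify a per-LUN path count with the rules of thumb in this section."""
    if paths < 2:
        return "single path: no redundancy"
    if paths <= 4:
        return "recommended for small and mid-size servers"
    if paths <= 8:
        return "reasonable when a high data rate is required"
    return "too many: more devices, no performance gain"


# Zoning from Figure 8-1: each HBA reaches two DS8000 host ports.
figure_8_1 = {"FC0": ["I0000", "I0130"], "FC1": ["I0230", "I0300"]}
luns = 10  # hypothetical number of LUNs assigned to the host

paths = count_paths(figure_8_1)
print(paths, paths * luns, assess_paths(paths))
# 4 40 recommended for small and mid-size servers
```

Extending both zones to four DS8000 ports each doubles the result to 8 paths and 80 disk devices for the same 10 LUNs.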

8.2.3 Multipathing

Multipathing describes a technique to attach one host to an external storage device through more than one path. Multipathing improves fault tolerance because the failure of a single component in the environment can be tolerated without an impact to the host. It can also increase the overall system bandwidth, which positively influences the performance of the system.

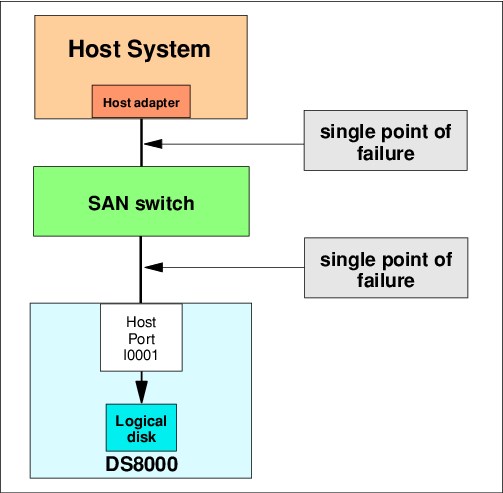

As illustrated in Figure 8-2 on page 193, attaching a host system by using a single-path connection creates a solution with several single points of failure. In this example, a failure of the link between the host system and the switch, of the link between the switch and the storage system, of the HA in the host system or in the DS8000 storage system, or of the switch itself leads to a loss of access for the host system. Additionally, the performance of the whole path is limited by its slowest component.

Figure 8-2 SAN single-path connection

Adding paths requires multipathing software (Figure 8-3). Otherwise, the operating system handles the same LUN behind each path as a separate disk, and failover is not possible.

Multipathing provides the DS8000 attached Open Systems hosts that run Windows, AIX, HP-UX, Oracle Solaris, VMware, KVM, or Linux with these capabilities:

•Support for several paths per LUN.

•Load balancing between multiple paths when there is more than one path from a host server to the DS8000 storage system. This approach might eliminate I/O bottlenecks that occur when many I/O operations are directed to common devices through the same I/O path, thus improving the I/O performance.

•Automatic path management, failover protection, and enhanced data availability for users that have more than one path from a host server to the DS8000 storage system. It eliminates a potential single point of failure by automatically rerouting I/O operations from a failed data path to the remaining active paths.

•Dynamic reconfiguration after changing the environment, including zoning, LUN masking, and adding or removing physical paths.

Figure 8-3 DS8000 multipathing implementation that uses two paths
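
The following Python sketch illustrates the failover and load-balancing capabilities listed above in their simplest form. It is a generic model, not the algorithm of any particular multipathing driver. Failed paths are skipped, and the healthy path with the fewest outstanding I/Os is chosen, which is similar in spirit to the AIX shortest_queue algorithm shown in 8.2.4. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Path:
    name: str
    healthy: bool = True
    outstanding_io: int = 0  # I/Os queued on this path, not yet completed


def select_path(paths):
    """Failover plus load balancing: skip failed paths, then prefer the
    healthy path with the fewest outstanding I/Os."""
    candidates = [p for p in paths if p.healthy]
    if not candidates:
        raise OSError("all paths failed: the host has lost access to the LUN")
    return min(candidates, key=lambda p: p.outstanding_io)


paths = [Path("fscsi0", outstanding_io=3), Path("fscsi1", outstanding_io=1)]
print(select_path(paths).name)  # fscsi1 (shorter queue)
paths[1].healthy = False        # simulate a failed link or HA
print(select_path(paths).name)  # fscsi0 (I/O rerouted to the surviving path)
```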

The DS8000 storage system supports several multipathing implementations. Depending on the environment, host type, and operating system, only a subset of the multipathing implementations is available. This section introduces the multipathing concepts and provides general information about implementation, usage, and specific benefits.

Important: Do not intermix several multipathing solutions within one host system. Usually, the multipathing software solutions cannot coexist.

Multipath I/O

Multipath I/O (MPIO) refers to the native multipathing technologies that are available in several operating systems, such as AIX, Linux, and Windows. Although the implementation differs for each operating system, the basic concept is almost the same:

•The multipathing module is delivered with the operating system.

•The multipathing module supports failover and load balancing for standard SCSI devices, such as simple SCSI disks or SCSI arrays.

•To add device-specific support and functions for a specific storage device, each storage vendor might provide a device-specific module that implements advanced functions for managing the specific storage device.

8.2.4 Example: AIX

AIX includes its own MPIO implementation, and its commands give a detailed view of the multipathing state of the system.

Example 8-1 shows a small AIX LPAR with eight volumes that belong to two different DS8000 storage systems. The lsmpio -q command shows the volume sizes and the DS8000 volume names. The lsmpio -a command shows the WWPNs of the host adapters, which were already defined on the DS8000 so that the volumes are made available to this host. The lsmpio -ar command additionally shows the WWPNs of the DS8000 host ports in use, and how many SAN paths exist between each DS8000 port and the IBM Power server port.

Example 8-1 Working with AIX MPIO

```
[p8-e870-01v20:root:/home/root:] lsmpio -q
Device     Vendor Id  Product Id       Size    Volume Name
---------------------------------------------------------------------------------
hdisk0     IBM        2107900      50.00GiB    8050
hdisk1     IBM        2107900      50.00GiB    8150
hdisk2     IBM        2107900      80.00GiB    8000
hdisk3     IBM        2107900      80.00GiB    8001
hdisk4     IBM        2107900      80.00GiB    8100
hdisk5     IBM        2107900      80.00GiB    8101
hdisk6     IBM        2107900      10.00TiB    8099
hdisk7     IBM        2107900       4.00TiB    81B0
[p8-e870-01v20:root:/home/root:] lsmpio -ar
Adapter Driver: fscsi0 -> AIX PCM
    Adapter WWPN:  c05076082d8201ba
    Link State:    Up
                          Paths     Paths     Paths     Paths
    Remote Ports        Enabled  Disabled    Failed   Missing       ID
    500507630311513e          2         0         0         0  0xc1d00
    500507630a1013e7          4         0         0         0  0xc1000

Adapter Driver: fscsi1 -> AIX PCM
    Adapter WWPN:  c05076082d8201bc
    Link State:    Up
                          Paths     Paths     Paths     Paths
    Remote Ports        Enabled  Disabled    Failed   Missing       ID
    500507630313113e          2         0         0         0  0xd1500
    500507630a1b93e7          4         0         0         0  0xd1000
```
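
When many LPARs must be checked, the lsmpio -ar output can be parsed by a script. The following Python sketch assumes the column layout shown in Example 8-1; the exact spacing of real output may differ, so the regular expressions rely only on whitespace-separated fields. It totals the enabled paths per host adapter and flags remote ports that report disabled, failed, or missing paths. The function name is hypothetical.

```python
import re

SAMPLE = """\
Adapter Driver: fscsi0 -> AIX PCM
    Adapter WWPN:  c05076082d8201ba
    Link State:    Up
                          Paths     Paths     Paths     Paths
    Remote Ports        Enabled  Disabled    Failed   Missing       ID
    500507630311513e          2         0         0         0  0xc1d00
    500507630a1013e7          4         0         0         0  0xc1000

Adapter Driver: fscsi1 -> AIX PCM
    Adapter WWPN:  c05076082d8201bc
    Link State:    Up
                          Paths     Paths     Paths     Paths
    Remote Ports        Enabled  Disabled    Failed   Missing       ID
    500507630313113e          2         0         0         0  0xd1500
    500507630a1b93e7          4         0         0         0  0xd1000
"""

ADAPTER = re.compile(r"Adapter Driver:\s+(\S+)")
# Remote port WWPN, the four path counters, and the port ID.
REMOTE = re.compile(
    r"^\s*([0-9a-f]{16})\s+(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s+0x[0-9a-f]+\s*$",
    re.IGNORECASE,
)


def parse_lsmpio_ar(text):
    """Return {adapter: {remote_wwpn: (enabled, disabled, failed, missing)}}."""
    result, adapter = {}, None
    for line in text.splitlines():
        if match := ADAPTER.search(line):
            adapter = match.group(1)
            result[adapter] = {}
        elif adapter and (match := REMOTE.match(line)):
            result[adapter][match.group(1)] = tuple(int(n) for n in match.groups()[1:])
    return result


for adapter, remotes in parse_lsmpio_ar(SAMPLE).items():
    enabled = sum(counts[0] for counts in remotes.values())
    unhealthy = [wwpn for wwpn, counts in remotes.items() if any(counts[1:])]
    print(adapter, "enabled paths:", enabled, "ports with problems:", unhealthy)
```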

On the DS8000, you can use the DSCLI command lshostconnect -login to verify that the server is logged in with its adapter WWPNs, and through which DS8000 ports.

The lsmpio -q -l hdiskX command provides additional information about one specific volume. Example 8-2 on page 196 shows the capacity and which DS8000 storage system hosts the LUN: it is a 2107-998 model (DS8980F), and the worldwide node name (WWNN) of the DS8000 is also encoded in the Volume Serial.

Example 8-2 Getting more information on one specific volume

```
[p8-e870-01v20:root:/home/root:] lsmpio -q -l hdisk6
Device:         hdisk6
Vendor Id:      IBM
Product Id:     2107900
Revision:       .431
Capacity:       10.00TiB
Machine Type:   2107
Model Number:   998
Host Group:     V1
Volume Name:    8099
Volume Serial:  600507630AFFD3E70000000000008099 (Page 83 NAA)
```
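
The relationship between the Volume Serial and the DS8000 becomes visible when you compare it with the node_name attribute that lsattr reports for the same hdisk in Example 8-3. The following Python sketch splits the serial accordingly. The field boundaries are inferred from this one sample output and are an assumption, not a published format definition; the function name is hypothetical.

```python
def decode_volume_serial(serial):
    """Split a DS8000 'Volume Serial' as printed by lsmpio -q -l.

    The field boundaries below are inferred from Example 8-2, not taken
    from a published format specification.
    """
    serial = serial.lower()
    return {
        "naa": serial[0],                  # NAA format digit ('6')
        "wwnn_fragment": serial[1:16],     # equals node_name without its leading '5'
        "volume_id": serial[-4:].upper(),  # DS8000 volume ID
    }


info = decode_volume_serial("600507630AFFD3E70000000000008099")
node_name = "500507630affd3e7"  # node_name of hdisk6 as reported by lsattr -El

print(info["volume_id"])                       # 8099
print(info["wwnn_fragment"] == node_name[1:])  # True
```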

As Example 8-3 shows, the lsmpio -l hdiskX command reports that initially only one of the paths is selected by the operating system for this LUN. The lsattr -El command shows that the algorithm for that LUN is fail_over (it also shows the serial number of the DS8000 in use).

Example 8-3 hdisk before algorithm change

```
[p8-e870-01v20:root:/home/root:] lsmpio -l hdisk6
name    path_id  status   path_status  parent  connection
hdisk6  0        Enabled  Sel          fscsi0  500507630a1013e7,4080409900000000
hdisk6  1        Enabled               fscsi1  500507630a1b93e7,4080409900000000
[p8-e870-01v20:root:/home/root:] lsattr -El hdisk6
DIF_prot_type   none                              T10 protection type            False
DIF_protection  no                                T10 protection support         True
FC3_REC         false                             Use FC Class 3 Error Recovery  True
PCM             PCM/friend/aixmpiods8k            Path Control Module            False
PR_key_value    none                              Persistant Reserve Key Value   True+
algorithm       fail_over                         Algorithm                      True+
...
max_retry_delay 60                                Maximum Quiesce Time           True
max_transfer    0x80000                           Maximum TRANSFER Size          True
node_name       0x500507630affd3e7                FC Node Name                   False
...
queue_depth     20                                Queue DEPTH                    True+
reassign_to     120                               REASSIGN time out value        True
reserve_policy  single_path                       Reserve Policy                 True+
...
timeout_policy  fail_path                         Timeout Policy                 True+
unique_id       200B75HAL91809907210790003IBMfcp  Unique device identifier       False
ww_name         0x500507630a1013e7                FC World Wide Name             False
```

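You can change the path algorithm with the chdev -l hdiskX -a attribute=value command, as shown in Example 8-4. For the change to work, the hdisk must be taken offline. Example 8-4 also changes reserve_policy from single_path to no_reserve, because the single_path reserve policy cannot be combined with a load-balancing algorithm such as shortest_queue.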

Example 8-4 Changing the path algorithm to shortest_queue

```
[p8-e870-01v20:root:/home/root:] chdev -l hdisk6 -a algorithm=shortest_queue -a reserve_policy=no_reserve
hdisk6 changed
```

As Example 8-5 shows, after the hdisk is brought back online, both Power server adapters and both paths are selected, and the algorithm is shortest_queue, so I/O to this individual LUN is balanced across the paths.

Example 8-5 hdisk after algorithm change

```
[p8-e870-01v20:root:/home/root:] lsmpio -l hdisk6
name    path_id  status   path_status  parent  connection
hdisk6  0        Enabled  Sel          fscsi0  500507630a1013e7,4080409900000000
hdisk6  1        Enabled  Sel          fscsi1  500507630a1b93e7,4080409900000000
[p8-e870-01v20:root:/home/root:] lsattr -El hdisk6
...
PCM             PCM/friend/aixmpiods8k            Path Control Module            False
PR_key_value    none                              Persistant Reserve Key Value   True+
algorithm       shortest_queue                    Algorithm                      True+
...
reserve_policy  no_reserve                        Reserve Policy                 True+
...
```
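
To verify the result of the algorithm change in a script, you can count the paths that lsmpio -l flags as selected. The following Python sketch assumes the row layout shown in Example 8-3 and Example 8-5, where a selected path carries Sel in the path_status column; the function name is hypothetical.

```python
def selected_paths(lsmpio_output):
    """Return the parent adapters of the paths flagged 'Sel' by lsmpio -l."""
    adapters = []
    for line in lsmpio_output.splitlines():
        fields = line.split()
        # Data rows look like: hdisk6 <path_id> <status> [Sel] <parent> <connection>
        if len(fields) >= 5 and fields[1].isdigit() and "Sel" in fields:
            adapters.append(fields[fields.index("Sel") + 1])
    return adapters


before = """\
hdisk6 0 Enabled Sel fscsi0 500507630a1013e7,4080409900000000
hdisk6 1 Enabled     fscsi1 500507630a1b93e7,4080409900000000
"""
after = """\
hdisk6 0 Enabled Sel fscsi0 500507630a1013e7,4080409900000000
hdisk6 1 Enabled Sel fscsi1 500507630a1b93e7,4080409900000000
"""

print(selected_paths(before))  # ['fscsi0']
print(selected_paths(after))   # ['fscsi0', 'fscsi1']
```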

Find more hints on setting up AIX multipathing at:

8.3 Attaching z Systems hosts

This section describes the host system requirements and attachment considerations for attaching the z Systems hosts (z/OS, IBM z/VM, IBM z/VSE®, Linux on z Systems, KVM, and Transaction Processing Facility (TPF)) to the DS8000 series. The following sections describe attachment through FICON adapters only.

FCP: z/VM, z/VSE, and Linux for z Systems can also be attached to the DS8000 storage system with FCP. Then, the same considerations as for Open Systems hosts apply.

8.3.1 FICON

FICON is a Fibre Connection used with z Systems servers. Each storage unit HA has either four or eight ports, and each port has a unique WWPN. You can configure the port to operate with the FICON upper-layer protocol. When configured for FICON, the storage unit provides the following configurations:

•Either fabric or point-to-point topology.

•A maximum of 128 host ports for DS8950F and DS8980F models and a maximum of 64 host ports for the DS8910F model.

•A maximum of 1280 logical paths per DS8000 HA port.

•Access to all 255 control unit images (65,280 count key data (CKD) devices) over each FICON port. FICON HAs support 4, 8, 16, or 32 Gbps link speeds in DS8000 storage systems.

Improvement of FICON generations

IBM introduced FICON channels in the IBM 9672 G5 and G6 servers with the capability to run at 1 Gbps. Since that time, IBM has introduced several generations of FICON channels. The FICON Express16S channels make up the current generation. They support 16 Gbps link speeds and can auto-negotiate to 4 or 8 Gbps. The speed also depends on the capability of the director or control unit port at the other end of the link.

Operating at 16 Gbps speeds, FICON Express16S channels achieve up to 2600 MBps for a mix of large sequential read and write I/O operations, as shown in the following charts. Figure 8-4 shows a comparison of the overall throughput capabilities of various generations of channel technology.

Because of new Application-Specific Integrated Circuits (ASICs) and a new internal design, the FICON Express16S+ and the FICON Express16SA on IBM z15 represent a significant improvement in maximum bandwidth capability compared to FICON Express16S channels and previous FICON offerings. The response time improvements are expected to be noticeable for large data transfers. The FICON Express16SA channel operates at the same 16 Gbps line rate as the previous generation FICON Express16S+ channel, so it provides similar throughput performance.

The FICON Express16SA (FEx16SA) channel improves both response times and maximum throughput, in IO/sec and MB/sec, for workloads that use the zHPF protocol.

With Fibre Channel Endpoint Security encryption enabled, the FEx16SA channel has the added benefit of Encryption of Data In Flight for FCP (EDIF) operations with less than 4% impact on maximum channel throughput.

High Performance FICON for IBM Z (zHPF) optimizes throughput and latency by reducing the number of information units (IUs) that are processed. Enhancements to the z/Architecture and the FICON protocol provide optimizations for online transaction processing (OLTP) workloads.

Most OLTP workload I/O operations are 4 KB in size. In laboratory measurements, a FICON Express16SA channel that used the zHPF protocol and small data transfer I/O operations achieved a maximum of 310 KIO/sec. With FC-ES encryption enabled, the same configuration (FICON Express16SA in a z15, zHPF protocol, small data transfers) achieved a maximum of 304 KIO/sec.

Figure 8-4 on page 199 shows the maximum single channel throughput capacities of each generation of FICON Express channels supported on z15. For each of the generations of FICON Express channels, it displays the maximum capability using the zHPF protocol exclusively.

Figure 8-4 FICON Express Channels Maximum IOPS

Figure 8-5 displays the maximum READ/WRITE (mix) MB/sec for each channel. Using FEx16SA in a z15 with the zHPF protocol and a mix of large sequential read and update write data transfer I/O operations, laboratory measurements achieved a maximum throughput of 3,200 READ/WRITE (mix) MB/sec. Also, using FEx16SA in a z15 with the zHPF protocol with FC-ES Encryption and a mix of large sequential read and update write data transfer I/O operations achieved a maximum throughput of 3,200 READ/WRITE (mix) MB/sec.

Figure 8-5 FICON Express Channels Maximum MB/sec over latest generations
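
The laboratory figures above translate into a few derived numbers. The following Python sketch computes the relative throughput impact of FC-ES encryption and the data rate implied by the small-transfer maximum. It assumes that 1 KIO equals 1000 I/Os and that a 4 KB I/O is 4096 bytes. The implied rate (about 1,270 MB/sec) is well below the 3,200 MB/sec large-transfer maximum, which suggests that small-block workloads are limited by I/O rate rather than by bandwidth.

```python
IO_SIZE_BYTES = 4 * 1024  # 4 KB OLTP I/O (assumes KB = 1024 bytes)


def impact_pct(baseline, with_encryption):
    """Relative throughput loss, in percent."""
    return (baseline - with_encryption) / baseline * 100


# FEx16SA on z15 with zHPF, figures quoted in this section.
kio_clear, kio_encrypted = 310, 304       # small transfers, KIO/sec
mbps_clear, mbps_encrypted = 3200, 3200   # large sequential mix, MB/sec

print(f"IOPS impact:   {impact_pct(kio_clear, kio_encrypted):.1f}%")   # 1.9%
print(f"MB/sec impact: {impact_pct(mbps_clear, mbps_encrypted):.1f}%")  # 0.0%

# Data rate implied by the small-transfer maximum (assumes 1 KIO = 1000 I/Os).
implied_mb = kio_clear * 1000 * IO_SIZE_BYTES / 1_000_000
print(f"{implied_mb:.0f} MB/sec")  # 1270 MB/sec
```

Both results are consistent with the statement that FC-ES encryption costs less than 4% of maximum channel throughput.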

The z14 and z15 CPCs offer FICON Express16S and FICON Express16S+ SX and LX features with two independent channels. Each feature occupies a single I/O slot and uses one CHPID per channel. Each channel supports 4 Gbps, 8 Gbps, and 16 Gbps link data rates with auto-negotiation to support existing switches, directors, and storage devices.

FICON Support:

•The FICON Express16S is supported on the z13®, z14 and z15 CPCs.

•The FICON Express16S+ is available on the z14 and z15 CPCs.

•The FICON Express16SA is available only on the z15 CPC.

For any generation of FICON channels, you can attach directly to a DS8000 storage system, or you can attach through a FICON capable FC switch.

8.3.2 FICON configuration and sizing considerations

When you use an FC/FICON HA to attach to FICON channels, either directly or through a switch, the port is dedicated to FICON attachment and cannot be simultaneously attached to FCP hosts. When you attach a DS8000 storage system to FICON channels through one or more switches, the maximum number of FICON logical paths is 1280 per DS8000 HA port. The FICON directors (switches) provide high availability with redundant components and no single points of failure. A single director between servers and a DS8000 storage system is not preferable because it can be a single point of failure. This section describes FICON connectivity between z Systems and a DS8000 storage system and provides recommendations and considerations.

FICON topologies

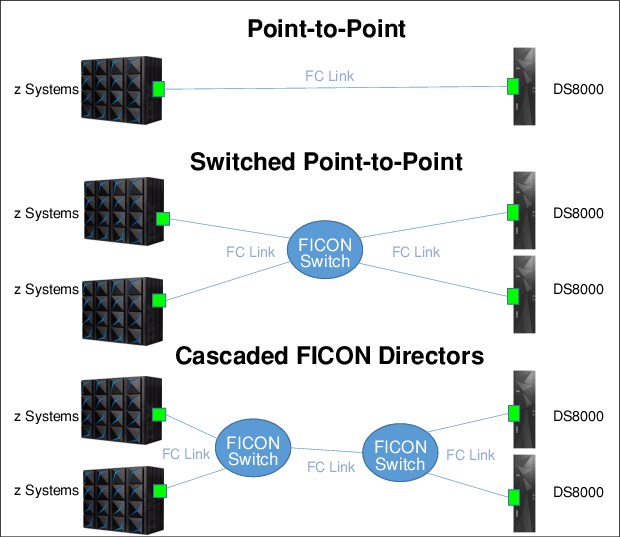

As shown in Figure 8-6 on page 201, FICON channels in FICON native mode, which means CHPID type FC in Input/Output Configuration Program (IOCP), can access the DS8000 storage system through the following topologies:

•Point-to-Point (direct connection)

•Switched Point-to-Point (through a single FC switch)

•Cascaded FICON Directors (through two FC switches)

Figure 8-6 FICON topologies between z Systems and a DS8000 storage system

FICON connectivity

Usually, in IBM Z environments, a one-to-one connection between FICON channels and storage HAs is preferred because the FICON channels are shared among multiple logical partitions (LPARs) and heavily used. Carefully plan the oversubscription of HA ports to avoid any bottlenecks.

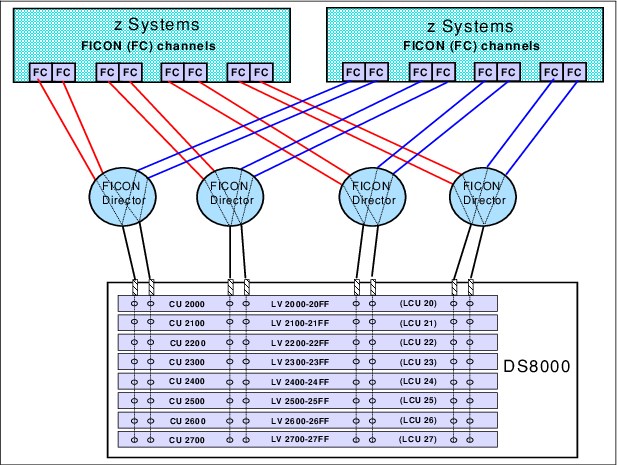

Figure 8-7 on page 202 shows an example of FICON attachment that connects a z Systems server through FICON switches. This example uses 16 FICON channel paths to eight HA ports on the DS8000 storage system and addresses eight logical control units (LCUs). This channel consolidation might be possible when your aggregate host workload does not exceed the performance capabilities of the DS8000 HA.

Figure 8-7 Many-to-one FICON attachment

A one-to-many configuration is also possible, as shown in Figure 8-8 on page 202, but careful planning is needed to avoid performance issues.

Figure 8-8 One-to-many FICON attachment

Sizing FICON connectivity is not an easy task. You must consider many factors. As a preferred practice, create a detailed analysis of the specific environment. Use these guidelines before you begin sizing the attachment environment:

•For FICON Express CHPID utilization, the preferred maximum utilization level is 50%.

•For the FICON Bus busy utilization, the preferred maximum utilization level is 40%.

•For the FICON Express Link utilization with an estimated link throughput of 4 Gbps, 8 Gbps, or 16 Gbps, the preferred maximum utilization threshold level is 70%.
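
The following Python sketch applies the three utilization guidelines to a set of measured values. The measured values are hypothetical, and the link utilization helper assumes roughly 100 MB/sec of usable bandwidth per Gbps of nominal line rate; replace it with the figures that apply to your links. The function names are hypothetical.

```python
# Preferred maximum utilization levels from the guidelines above, in percent.
THRESHOLDS = {"chpid": 50, "bus_busy": 40, "link": 70}


def link_utilization(mb_per_sec, link_gbps):
    """Approximate link utilization in percent.

    Assumes roughly 100 MB/sec of usable bandwidth per Gbps of nominal
    line rate (for example, about 1600 MB/sec for a 16 Gbps link).
    """
    return mb_per_sec / (link_gbps * 100) * 100


def over_threshold(measured):
    """Return only the metrics that exceed their preferred maximum."""
    return {name: value for name, value in measured.items() if value > THRESHOLDS[name]}


# Hypothetical measurements, for example taken from RMF reports.
measured = {"chpid": 55.0, "bus_busy": 30.0, "link": link_utilization(1000, 16)}
print(measured["link"])          # 62.5
print(over_threshold(measured))  # {'chpid': 55.0}
```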

For more information about DS8000 FICON support, see IBM System Storage DS8000 Host Systems Attachment Guide, SC26-7917, and FICON Native Implementation and Reference Guide, SG24-6266.

You can monitor the FICON channel utilization for each CHPID in the RMF Channel Path Activity report. For more information about the Channel Path Activity report, see Chapter 10, “Performance considerations for IBM z Systems servers” on page 225.

The following statements are some considerations and best practices for paths in z/OS systems to optimize performance and redundancy:

•Do not mix paths to one LCU with different link speeds in a path group on one z/OS.

It does not matter in the following cases, even if those paths are on the same CPC:

– The paths with different speeds, from one z/OS to multiple different LCUs

– The paths with different speeds, from each z/OS to one LCU

•Place each path in a path group on different I/O bays.

•Do not have two paths from the same path group sharing a card.
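
The path group guidelines above can be checked mechanically when path definitions are available in a structured form. The following Python sketch uses a hypothetical ChannelPath record with illustrative identifiers; how you extract speed, I/O bay, and card information from your own configuration data is outside its scope.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelPath:
    chpid: str       # hypothetical identifiers, for illustration only
    speed_gbps: int  # negotiated link speed
    io_bay: str      # DS8000 I/O bay of the host adapter port
    card: str        # adapter card that the path uses


def check_path_group(paths):
    """Check one path group (one z/OS image to one LCU) against the guidelines above."""
    problems = []
    if len({p.speed_gbps for p in paths}) > 1:
        problems.append("mixed link speeds")
    if any(n > 1 for n in Counter(p.io_bay for p in paths).values()):
        problems.append("paths share an I/O bay")
    if any(n > 1 for n in Counter(p.card for p in paths).values()):
        problems.append("paths share a card")
    return problems


good = [ChannelPath("40", 16, "bay0", "HA00"), ChannelPath("41", 16, "bay1", "HA10")]
bad = [ChannelPath("40", 16, "bay0", "HA00"), ChannelPath("41", 8, "bay0", "HA00")]
print(check_path_group(good))  # []
print(check_path_group(bad))   # ['mixed link speeds', 'paths share an I/O bay', 'paths share a card']
```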

8.3.3 z/VM, z/VSE, KVM, and Linux on z Systems attachment

z Systems FICON features in FCP mode provide full fabric and point-to-point attachments of Fixed Block (FB) devices to the operating system images. With this attachment, z/VM, z/VSE, KVM, and Linux on z Systems can access industry-standard FCP storage controllers and devices. This capability can facilitate the consolidation of UNIX server farms onto IBM Z servers and help protect investments in SCSI-based storage.

The FICON features support FC devices to z/VM, z/VSE, KVM, and Linux on z Systems, which means that these features can access industry-standard SCSI devices. These FCP storage devices use FB 512-byte sectors rather than Extended Count Key Data (IBM ECKD) format for disk applications. All available FICON features can be defined in FCP mode.

Linux FCP connectivity

You can use either direct or switched attachment to attach a storage unit to an IBM Z or LinuxONE host system that runs SUSE Linux Enterprise Server (SLES), or Red Hat Enterprise Linux (RHEL), or Ubuntu Linux, with current maintenance updates for FICON. For more information, refer to:
