Chapter 22

Understanding Basic Linux Security

Understanding security is a crucial part of Linux system administration. No longer are a username and simple password sufficient for protecting your server. The number and variety of computer attacks escalate every day, and the need to improve computer security continues to grow with them.

Some of the security problems you may face as an administrator include denial-of-service (DoS) attacks, rootkits, worms, viruses, logic bombs, man-in-the-middle ploys, Trojan horses, and so on. The attacks don't come just from outside the organization; they can also come from the inside. Protecting your organization's valuable information assets can be overwhelming.

Your first step is to gather knowledge of basic security procedures and principles. With this information, you can begin the process of locking down and securing your Linux servers. You can also learn how to stay informed of new threats as they emerge and of new ways to continue protecting your organization's valuable information assets.

Introducing the Security Process Lifecycle

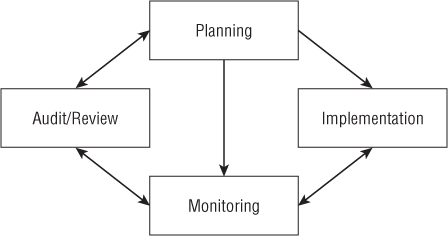

Like software development, securing a computer system has a process life cycle. This Security Process Lifecycle has four main phases, as shown in Figure 22.1. Each phase has important activities that help to create a system hardened against security attacks.

Figure 22.1 The four key phases of the Security Process Lifecycle.

Notice that there is no identifiable starting point in the Security Process Lifecycle. Under ideal circumstances, you would begin the process at the Planning phase, progress through Implementation, Monitoring, and Audit/Review, and then return to the Planning phase. However, most systems end up starting somewhere in the middle. Even though the actual life cycle may not progress in an orderly fashion, it's still important to understand the life cycle and its phases. Your system's security will improve if you learn the life cycle and understand what activities need to take place in each phase.

The Security Process Lifecycle comes with several of its own terms and acronyms. Some of these terms and acronyms conflict with already well-established computer terminology. For example, when working with computer networking, a MAC address is a Media Access Control address. In computer security, a MAC is a form of access control called Mandatory Access Control. You can see how quickly confusion can occur.

Here are some basic security definitions to help you in the world of security and through this chapter:

- Subject — A user or a process carrying out work on behalf of a user.

- Object — A particular resource on a computer server, such as a database or a hardware device.

- Authentication — Determining a subject is who he says he is. This is sometimes called “identification and authentication.”

- Access Control — Controlling a subject's ability to use a particular object.

- Access Control List (ACL) — A list of subjects who may access a particular object.

On a computer system, subjects access objects. But before they can, they must be authenticated. Subjects are then given only the levels of access to objects that the pre-established access controls allow. Both authentication procedures and access controls for subjects and objects should be determined during the Security Process Lifecycle's Planning phase.

Examining the Planning Phase

Even though every Security Process Lifecycle phase has important activities, the Planning phase can be the most critical. In the Planning phase, an organization determines such items as:

- What subjects need access to what objects

- What objects need protection from which subjects

- Each object's security or confidentiality classification level

- Each subject's security or confidentiality clearance level

- Policies and procedures for granting subject access to objects

- Policies and procedures for revoking subject access to objects

- Who should approve and allow access to objects

Choosing an access control model

Answers to the previously listed questions form the basis of the security access controls. The access controls can be used in various access control models to implement and maintain a secure computer environment. It would be wise to determine which access control model to use in your organization early in the Planning phase.

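There are three primary access control models:

- Discretionary Access Control (DAC)

- Mandatory Access Control (MAC)

- Role Based Access Control (RBAC)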

All three models are used in Linux systems today. The one you choose to use depends upon your organization's security needs. The following covers each model in detail and will assist you in choosing the right one for your organization.

Discretionary Access Control

Discretionary Access Control (DAC) is the traditional Linux security access control. Access to an object is based upon a subject's identity and group memberships. In Chapter 4, you read about file permissions, such as read, write, and execute. Also, you read about a file's owner and group. This is basic DAC for a Linux system. Access to a particular object or file is based upon the permissions set for that object's owner, group, and others.

In the example that follows, the file DAC_File has read and write permissions given to the file owner, christine. Read permission is given to the group, writer, as well as to all other system users.

$ ls -l DAC_File

-rw-r--r-- 1 christine writer 41094 2012-02-02 13:24 DAC_File

The primary weakness of DAC is that the owner of an object has control of security settings of that object. Instead of using a policy established by the organization, the object's owner may make arbitrary changes to security controls. Using the file and its owner from the preceding example, subject christine can modify the object DAC_File's permissions:

$ chmod 777 DAC_File

$ ls -l DAC_File

-rwxrwxrwx 1 christine writer 41094 2012-02-02 13:24 DAC_File
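The owner's freedom to change permissions can be tried safely on a throwaway file. The following is a minimal sketch, assuming GNU coreutils (the stat -c option) and a temporary directory created just for the demonstration; the variable names are hypothetical.

```shell
#!/bin/sh
# Hypothetical demo: the file lives in a throwaway temporary directory.
demo_dir=$(mktemp -d)
demo_file="$demo_dir/DAC_File"
touch "$demo_file"

# Start from the permissions shown in the text: rw-r--r-- (644).
chmod 644 "$demo_file"
before=$(stat -c '%a' "$demo_file")

# As the owner, open the file to everyone. Nothing in DAC prevents this.
chmod 777 "$demo_file"
after=$(stat -c '%a' "$demo_file")

echo "before: $before  after: $after"
rm -rf "$demo_dir"
```

No organizational policy is consulted at any point; the only check is that the user running chmod owns the file.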

Another major weakness of DAC is in differentiating between subjects. DAC can only transact with object owners and other users. It cannot set access rights for system applications. In other words, DAC does not know who is a human and who is a program. Thus, when a subject runs a program, that program inherits the human's access rights to the object.

Mandatory Access Control

Mandatory Access Control (MAC) overcomes the weaknesses of DAC and is a popular access control model. With MAC, you have a much more refined model:

- Objects are classified by their need for data integrity and privacy.

- Subjects are classified by their data or security clearance level.

- A subject's access to an object is based upon the subject's clearance level.

The owner of an object no longer can change the security settings of that object, as in the DAC model. All security settings and modifications are handled by a designated person or team from the security planning process. Also, MAC does not allow a program to inherit a user's access right to an object when the program is run by that user. This overcomes the second weakness in the DAC model.

Overall, objects are provided protection from malicious threats by confining subjects to their clearance level. There is a further refinement to MAC that provides even higher levels of security, the Role Based Access Control (RBAC) model.

Role Based Access Control

Role Based Access Control (RBAC) was developed at the National Institute of Standards and Technology (NIST). It is a slight, but important, refinement to the MAC model. Access to an object is based upon a subject's identity and its associated role. Each role, not each subject, is classified by its data or security clearance level. Thus, access to objects is based upon a role's clearance level and not an individual subject's clearance level.

The RBAC model has several advantages:

- Strengths of the MAC model are maintained.

- Role access rights are easier to classify into data or security clearance levels than an individual subject's access rights.

- Role access rights rarely change.

- Moving individual subjects in and out of various roles eliminates the need for reclassification of each subject's access rights.

Thus, with RBAC, you get a simpler and easier-to-manage access model that is just as strong as the MAC model. This marriage of simplicity and strength has made Role Based Access Control popular with security products, including Security Enhanced Linux (SELinux), which is covered in Chapter 24.

Using security checklists

Checklists are a necessary part of the Planning phase. These lists also will be helpful during the Implementation phase of your security plan.

Access Control Matrix

One helpful checklist is an Access Control Matrix. This matrix is simply a table you create yourself. It lists out subjects or roles against objects and their classification levels.

Table 22.1 is a simplified Access Control Matrix for an organization using a MAC access model. In this example, the subjects (users and programs) are listed in the left column and objects (programs and data) are listed across the top.

Table 22.1 MAC Access Control Matrix

| Subject / Object | ABC Program (Low) | XYZ Data (High) |
| --- | --- | --- |
| Bresnahan, Christine | Yes | Yes |
| Jones, Tim | Yes | No |
| ABC Program | N/A | No |

In Table 22.1, you can see that Tim Jones does have access to the ABC program, but not the XYZ data. Also, the ABC program does not have access to the XYZ data. These matrices allow a security planning team to easily review access rights and a security implementation team to accurately set the correct access rights.
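An Access Control Matrix can also be kept in a form that scripts can query. The following is a minimal sketch that encodes Table 22.1 as a lookup function; the function name can_access and the "Unknown" answer for pairs missing from the matrix are assumptions made for this illustration, not part of any standard tool.

```shell
#!/bin/sh
# can_access SUBJECT OBJECT
# Prints Yes, No, or N/A as recorded in Table 22.1. Prints "Unknown" for
# any pair that is not in the matrix (a choice made for this sketch).
can_access() {
    case "$1|$2" in
        "Bresnahan, Christine|ABC Program") echo "Yes" ;;
        "Bresnahan, Christine|XYZ Data")    echo "Yes" ;;
        "Jones, Tim|ABC Program")           echo "Yes" ;;
        "Jones, Tim|XYZ Data")              echo "No" ;;
        "ABC Program|ABC Program")          echo "N/A" ;;
        "ABC Program|XYZ Data")             echo "No" ;;
        *)                                  echo "Unknown" ;;
    esac
}

can_access "Jones, Tim" "XYZ Data"
```

Treating unlisted pairs as "Unknown" rather than "Yes" keeps the sketch from granting access that nobody approved.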

Industry security checklists

Security vulnerabilities are constantly changing. Every time you secure against one potential intrusion or piece of malware, another comes to life. Because of this, security checklists are continually being updated by government and industry organizations. These checklists provide a great help in planning how to protect your Linux system. Here are some good sites to check for new and improved checklists. For a very basic Linux security checklist, look at www.sans.org/score/linuxchecklist.php. If you need a more detailed security checklist, review the information at these sites:

- National Checklist Program's (NCP) National Vulnerability Database: http://web.nvd.nist.gov/view/ncp/repository

- The International Organization for Standardization's (ISO) management standard for running a secure computer system: http://iso27001security.com

- The International Organization for Standardization's general advice on how to secure computer systems via controls: http://iso27001security.com/html/27002.html

Now, with your subject and object classifications, Access Control Matrix, and security checklists, you can continue to the next security phase. The Implementation phase is where you will begin to actually secure the Linux computer system.

Entering the Implementation Phase

It is unrealistic to think you would start the Security Process Lifecycle Implementation phase only after the Planning phase was complete. The reality is that often the phases of the Security Process Lifecycle overlap. Often, computer systems have to be in place and in the process of being secured before a Planning phase has even been started.

Because of these realities, it's important for you to understand some basic security implementations. These basics include physical security, disaster recovery, user account management, account passwords, and other related topics.

Implementing physical security

A lock on the computer server room door is a first line of defense. Although a very simple concept, it is often ignored. Access to the physical server means access to all the data it contains. No security software can protect your systems if someone with malicious intent has physical access to the Linux server.

Basic server room physical security includes items such as:

- A lock or security alarm on the server room door

- Access controls that allow only authorized access and identify who accessed the room and when the access occurred, such as a card key entry system

- A sign stating “no unauthorized access allowed” on the door

- Policies on who can access the room and when access may occur, for groups such as the cleaning crew, server administrators, and others

Physical security includes environmental controls. Appropriate fire suppression systems and proper ventilation for your server room must be implemented.

In addition to basic physical security of a server room, attention should be given to what is physically at each worker's desk. Desktops and laptops may need to be locked. Fingerprints are often left on computer tablets, which can reveal PINs and passwords. Therefore, a tablet screen wiping policy may need to be implemented.

A Clean Desk Policy (CDP) mandates either that only the papers currently being worked on are on a desk or that all papers are locked away at the end of the day. A CDP protects classified information from being gleaned by nosy and unauthorized personnel. And, finally, a “no written passwords” policy is mandatory.

Implementing disaster recovery

Disasters do happen, and they can expose your organization's data to insecure situations. Therefore, part of computer security includes preparing for a disaster.

Similar to the entire Security Process Lifecycle, disaster recovery includes creating disaster recovery plans, testing the plans, and conducting plan reviews. Plans must be tested and updated to maintain their reliability in true disaster situations.

Disaster recovery plans should include:

- What data is to be included in backups

- Where backups are to be stored

- How long backups are maintained

- How backup media is rotated through storage

Backup data, media, and software should be included in your Access Control Matrix checklist.

Backup utilities on a Linux system include:

- amanda (Advanced Maryland Automatic Network Disk Archiver)

- cpio

- dump/restore

- tar

The cpio, dump/restore, and tar utilities are typically pre-installed on a Linux distribution. Only amanda is not installed by default. However, amanda is extremely popular because it comes with a great deal of flexibility and can even back up a Windows system. If you need more information on the amanda backup utility, see www.amanda.org. The utility you ultimately pick should meet your organization's particular security needs for backup.
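As an illustration of the simplest of these utilities, the following sketch uses tar to write a dated, compressed archive and then lists the archive to confirm it is readable. The function name backup_dir, the archive naming scheme, and the temporary directories are assumptions made for this example; a real plan would take its paths and retention rules from your disaster recovery documents.

```shell
#!/bin/sh
# backup_dir SOURCE_DIR DEST_DIR
# Writes DEST_DIR/<name>-YYYY-MM-DD.tar.gz and prints the archive path.
backup_dir() {
    src=$1
    dest=$2
    archive="$dest/$(basename "$src")-$(date +%Y-%m-%d).tar.gz"
    # -C changes into the parent directory so the archive stores relative paths.
    tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
    echo "$archive"
}

# Demonstration on throwaway directories.
work=$(mktemp -d)
mkdir "$work/data" "$work/backups"
echo "sample" > "$work/data/report.txt"

archive=$(backup_dir "$work/data" "$work/backups")

# List the archive contents to confirm the backup can be read back.
listing=$(tar -tzf "$archive")
echo "$listing"
rm -rf "$work"
```

Listing the archive right after creating it is a small version of the plan testing described earlier: a backup that cannot be read is not a backup.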

With luck, disasters occur only rarely. However, every day, users log in to your Linux system. User accounts and passwords have basic security settings that should be reviewed and implemented as needed.

Securing user accounts

User accounts are part of the authentication process allowing users into the Linux system. Proper user account management enhances a system's security. Setting up user accounts was covered in Chapter 11. However, a few additional rules are necessary to increase security through user account management. They include:

- One user per user account.

- No logins to the root account.

- Set expiration dates on temporary accounts.

- Remove unused user accounts.

One user per user account

Accounts should enforce accountability. Thus, multiple people should not be logging in to one account. When multiple people share an account, there is no way to prove a particular individual completed a particular action. Their actions are deniable, which is called repudiation in the security world. Accounts should be set up for non-repudiation. In other words, there should be one person per user account, so actions cannot be denied.

No logins to the root account

If multiple people can log in to the root account, you have another repudiation situation. You cannot track individual use of the root account. To allow tracking of root account use by individuals, a policy for using sudo (see Chapter 8) instead of logging into root should be instituted.

Using sudo provides the following security benefits:

- The root password does not have to be given out.

- You can fine-tune command access.

- All sudo use (who, what, when) is recorded in /var/log/secure.

- All failed sudo access attempts are logged.

Although sudo use can be enforced, one thing you need to know is that users with access to sudo can issue the command sudo su - to log in to the root account. In the code that follows, the user named joe, who had his sudo privileges set up in Chapter 8, is now using the sudo command to log in to the root account.

[joe]$ sudo su -

We trust you have received the usual lecture from the local System Administrator. It usually boils down to these two things:

#1) Respect the privacy of others.

#2) Think before you type.

Password: *********

[joe]# whoami

root

This essentially gets around the sudo security benefits. For your secure Linux system, you will need to disable this access.

To disable sudo su - for designated sudo users, you must go back and edit the /etc/sudoers configuration file. Again, using the example from Chapter 8, the user named joe currently has the following record in the /etc/sudoers file:

joe ALL=(ALL) NOPASSWD: ALL

To disable joe's ability to use sudo su -, you will need to change his record to look like the following:

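joe ALL=(ALL) NOPASSWD: /bin/, /sbin/, /usr/bin/, /usr/sbin/, !/bin/su

Joe will still be able to execute the other commands in those directories as root, but not sudo su -. The exclamation point (!) in front of /bin/su in joe's record tells sudo not to allow joe to execute that particular command. Because sudo uses the last matching entry in the list, the !/bin/su entry must come after the directory entries; otherwise, /bin/ would match /bin/su and allow it again. Also, the commands that joe is allowed to execute must now be listed in his record. The /bin/, /sbin/, /usr/bin/, and /usr/sbin/ entries tell sudo to allow joe to execute all the commands located in those directories. Keep in mind that excluding a single command is a deterrent rather than a guarantee, because a user who can run other programs as root (a shell such as /bin/bash, for example) can still obtain a root shell.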

If joe does attempt to use sudo su -, he will get the following message:

[joe]$ sudo su -

Sorry, user joe is not allowed to execute '/bin/su -' as root on host.domain

Now, the use of sudo on your Linux system will be tracked properly and access to sudo will have the desired non-repudiation feature. Your organization's security needs determine the amount of detail you must add to the sudo configuration file.

Setting expiration dates on temporary accounts

If you have consultants, interns, or temporary employees who need access to your Linux systems, it will be important to set up their user accounts with expiration dates. The expiration date is a safeguard, in case you forget to remove their accounts when they no longer need access to your organization's systems.

To set a user account with an expiration date, use the usermod command. The format is usermod -e yyyy-mm-dd user_name. In the following code, the account Tim has been set to expire on January 1, 2015.

# usermod -e 2015-01-01 Tim

To verify that the account has been properly set to expire, double-check yourself by using the chage command. The chage command is primarily used to view and change a user account's password aging information. However, it also contains account expiration information. The -l option will allow you to list out the various information chage has access to. To keep it simple, pipe the output from the chage command into grep and search for the word “Account.” This will produce only the user account's expiration date.

# chage -l Tim | grep Account

Account expires : Jan 01, 2015

As you can see, the account expiration date was successfully changed for Tim to January 1, 2015.

Set account expiration dates for all transitory employees. In addition, consider reviewing all user account expiration dates as part of your security monitoring activities. These activities will help to eliminate any potential backdoors to your Linux system.

Removing unused user accounts

Keeping old expired accounts around is asking for trouble. Once a user has left an organization, it is best to perform a series of steps to remove his or her account along with data:

Problems occur when Step 5 is forgotten, and expired or disabled accounts are still on the system. A malicious user gaining access to your system could renew the account and then masquerade as a legitimate user.

To find these accounts, search through the /etc/shadow file. The account's expiration date is in the eighth field of each record. It would be convenient if a date format were used. Instead, this field shows the account's expiration date as the number of days since January 1, 1970.
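Converting one of these day counts back into a calendar date takes only a little arithmetic. The following is a minimal sketch, assuming GNU date (for the --date "@seconds" form); the function name days_to_date is hypothetical.

```shell
#!/bin/sh
# days_to_date DAYS
# Converts a "days since January 1, 1970" value to YYYY-MM-DD (UTC).
days_to_date() {
    # Multiply by the number of seconds in a day, then let date format it.
    date --utc --date "@$(( $1 * 86400 ))" +%Y-%m-%d
}

days_to_date 13819
```

Working in UTC avoids an off-by-one day when the local time zone is behind or ahead of the stored value.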

You can use a two-step process to find expired accounts in the /etc/shadow file automatically. First, set up a shell variable (see Chapter 7) with today's date in “days since January 1, 1970” format. Then, using the gawk command, you can obtain and format the information needed from the /etc/shadow file.

Setting up a shell variable with the current date converted to the number of days since January 1, 1970 is not particularly difficult. The date command can produce the number of seconds since January 1, 1970. To get what you need, divide the result from the date command by the number of seconds in a day: 86,400. The following demonstrates how to set up the shell variable TODAY.

# TODAY=$(( $(date --utc +%s) / 86400 ))

# echo $TODAY

15373

Next, the accounts and their expiration dates will be pulled from the /etc/shadow file using gawk. The gawk command is the GNU version of the awk program used in UNIX. The command's output is shown in the code that follows. As you would expect, many of the accounts do not have an expiration date. However, two accounts, Consultant and Intern, are showing an expiration date in the “days since January 1, 1970” format. Note that you can skip this step. It is just for demonstration purposes.

# gawk -F: '{print $1,$8}' /etc/shadow

...

chrony

tcpdump

johndoe

Consultant 13819

Intern 13911

The $1 and $8 in the gawk command represent the username and expiration date fields in the /etc/shadow file records. To check those accounts' expiration dates and see if they are expired, a more refined version of the gawk command is needed. Because the gawk program is enclosed in single quotes, the shell does not expand $TODAY inside it, so the value is passed in with the -v option.

# gawk -F: -v TODAY="$TODAY" '{if (($8 > 0) && (TODAY > $8)) print $1}' /etc/shadow

Consultant

Intern

Only accounts with an expiration date are collected by the ($8 > 0) portion of the gawk command. To make sure these expiration dates are past the current date, the TODAY variable is compared with the expiration date field, $8. If TODAY is greater than the account's expiration date, the account is listed. As you can see in the preceding example, there are two expired accounts that still exist on the system and need to be removed.
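The same check can be rehearsed without root privileges by running it against a sample file in /etc/shadow format. The following is a minimal sketch; the function name list_expired, the sample records, and the use of plain awk instead of gawk are assumptions made so the example runs on a test system.

```shell
#!/bin/sh
# list_expired SHADOW_FILE TODAY
# Prints the names of accounts whose expiration date (field 8) is set
# and earlier than TODAY. Both values are days since January 1, 1970.
list_expired() {
    # Adding 0 forces a numeric comparison, so an empty field counts as 0.
    awk -F: -v today="$2" '($8 + 0 > 0) && (today + 0 > $8 + 0) { print $1 }' "$1"
}

# Hypothetical records: two expired accounts, one with no expiration
# date, and one that expires in the future.
sample=$(mktemp)
cat > "$sample" <<'EOF'
johndoe:!!:15000:0:99999:7:::
Consultant:!!:13800:0:99999:7::13819:
Intern:!!:13900:0:99999:7::13911:
Tim:!!:15370:0:99999:7::16436:
EOF

expired=$(list_expired "$sample" 15373)
echo "$expired"
rm -f "$sample"
```

Passing the file name as a parameter makes it easy to point the same function at /etc/shadow once the logic has been checked.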

That is all you need to do. Set up your TODAY variable and then execute the gawk command. All the expired accounts in the /etc/shadow file will be listed for you. To remove these accounts, use the userdel command.

User accounts are only a portion of the authentication process, allowing users into the Linux system. User account passwords also play an important role in the process.

Securing passwords

Passwords are the most basic security tool of any modern operating system and, consequently, the most commonly attacked security feature. It is natural for users to want to choose a password that is easy to remember, but often this means they choose a password that is also easy to guess.

Brute force methods are commonly employed to gain access to a computer system. Trying the popular passwords often yields results. Some of the most common passwords are:

- 123456

- Password

- princess

- rockyou

- abc123

Just use your favorite Internet search engine and look for “common passwords.” If you can find these lists, then malicious attackers can, too. Obviously, choosing good passwords is critical to having a secure system.

Choosing good passwords

In general, a password must not be easy to guess, be common or popular, or be linked to you in any way. Here are some rules to follow when choosing a password:

- Do not use any variation of your login name or your full name.

- Do not use a dictionary word.

- Do not use proper names of any kind.

- Do not use your phone number, address, family, or pet names.

- Do not use website names.

- Do not use any contiguous line of letters or numbers on the keyboard (such as “qwerty” or “asdfg”).

- Do not use any of the above with added numbers or punctuation to the front or end, or typed backwards.

So now you know what not to do. Take a look at the two primary items that make a strong password: a length of between 15 and 25 characters, and a mix of all of the following character types:

- Lowercase letters

- Uppercase letters

- Numbers

- Special characters, such as ! $ % * ( ) - + = , < > : ; " '

Twenty-five characters is a long password. However, the longer the password, the more secure it is. What your organization chooses as the minimum password length is dependent upon its security needs.
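These character rules are simple enough to check in a script. The following is a minimal sketch, not a replacement for a real password quality checker; the function name check_password is hypothetical, and the default minimum of 15 characters is an assumption taken from the lower bound mentioned in this chapter.

```shell
#!/bin/sh
# check_password PASSWORD [MIN_LENGTH]
# Returns 0 when the password meets the minimum length and contains at
# least one lowercase letter, uppercase letter, digit, and punctuation mark.
check_password() {
    pw=$1
    min=${2:-15}
    [ "${#pw}" -ge "$min" ] || return 1
    case $pw in *[[:lower:]]*) ;; *) return 1 ;; esac
    case $pw in *[[:upper:]]*) ;; *) return 1 ;; esac
    case $pw in *[[:digit:]]*) ;; *) return 1 ;; esac
    case $pw in *[[:punct:]]*) ;; *) return 1 ;; esac
    return 0
}

if check_password 'Sample-Phrase-With-9!'; then
    echo "meets the character rules"
else
    echo "too weak"
fi
```

A check like this covers only length and character variety. It does not catch dictionary words, names, or keyboard patterns, which the rules listed earlier also rule out.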

Choosing a good password can be difficult. It has to be hard enough not to be guessed and easy enough for you to remember. A good way to choose a strong password is to take the first letter from each word of an easily remembered sentence. Be sure to add numbers, special characters, and varied case. The sentence you choose should have meaning only to you, and should not be publicly available. Table 22.2 lists examples of strong passwords and the tricks used to remember them.

Table 22.2 Ideas for Good Passwords

| Password | How to Remember It |
| --- | --- |
| Mrci7yo! | My rusty car is 7 years old! |
| 2emBp1ib | 2 elephants make BAD pets, 1 is better |
| ItMc?Gib | Is that MY coat? Give it back |

The passwords look like nonsense but are actually rather easy to remember. Of course, be sure not to use the passwords listed here. Now that they are public, they will be added to malicious attackers' dictionaries.

Setting and changing passwords

You set your own password using the passwd command. Type the passwd command, and it will enable you to change your password. First, it prompts you to enter your old password. To protect against someone shoulder surfing and learning your password, the password will not be displayed as you type.

Assuming you type your old password correctly, the passwd command will prompt you for the new password. When you type in your new password, it is checked using a utility called cracklib to determine whether it is a good or bad password. Non-root users will be required to try a different password if the one they have chosen is not a good password.

The root user is the only user who is permitted to assign bad passwords. After the password has been accepted by cracklib, the passwd command asks you to enter the new password a second time to make sure there are no typos (which are hard to detect when you can't see what you are typing).

When running as root, changing a user's password is possible by supplying that user's login name as a parameter to the passwd command. For example:

# passwd joe

Changing password for user joe.

New UNIX password: ********

Retype new UNIX password: ********

passwd: all authentication tokens updated successfully.

Here, the passwd command prompts you twice to enter a new password for joe. It does not prompt for his old password in this case.

Enforcing best password practices

Now, you know what a good password looks like and how to change a password, but how do you enforce it on your Linux system? To get a good start on enforcing best password practices, educate system users. Educated users are better users. Some ideas for education include:

- Add an article on best password practices to your organization's monthly newsletter.

- Post tip sheets in the break rooms, such as “The Top Ten Worst Passwords.”

- Send out regular employee computer security e-mails containing password tips.

- Provide new employees training on passwords.

Employees who understand password security will often strive to create good passwords at work as well as at home. One of the “hooks” to gain user attention is to let employees know that these practices also work well when creating personal passwords, such as for their online banking accounts.

Still, you always have a few users who refuse to implement good password practices. Plus, company security policies often require that a password be changed every so many days. It can become tiresome to come up with new, strong passwords every 30 days! That is why some enforcing techniques are often necessary.

Default values in the /etc/login.defs file for new accounts were covered in Chapter 11. Within the login.defs file are some settings affecting password aging and length:

PASS_MAX_DAYS 30

PASS_MIN_DAYS 5

PASS_MIN_LEN 16

PASS_WARN_AGE 7

In this example, the maximum number of days, PASS_MAX_DAYS, until the password must be changed is 30. The number you set here is dependent upon your particular account setup. For organizations that practice one person to one account, this number can be much larger than 30. If you do have shared accounts or multiple people know the root password, it is imperative that you change the password often. This practice effectively refreshes the list of those who know the password.

To keep users from changing their password to a new password and then immediately changing it right back, you will need to set the PASS_MIN_DAYS to a number larger than 0. In the preceding example, the soonest a user could change his password again is 5 days after the previous change.

The PASS_WARN_AGE setting is the number of days a user will be warned before being forced to change his password. People tend to need lots of warnings and prodding, so the preceding example sets the warning time to 7 days.

Earlier in the chapter, I mentioned that a strong password is between 15 and 25 characters long. With the PASS_MIN_LEN setting, you can force users to use a certain minimum number of characters in their passwords. The setting you choose should be based upon your organization's security life cycle plans.
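When reviewing these defaults across several servers, it can help to pull individual settings out of the file with a script. The following is a minimal sketch; the function name get_login_def is hypothetical, and the sample file simply repeats the values from the preceding example so the code can run without touching the real /etc/login.defs.

```shell
#!/bin/sh
# get_login_def KEY [FILE]
# Prints the value of KEY from a login.defs-style file.
# FILE defaults to /etc/login.defs.
get_login_def() {
    # Comment lines start with "#", so their first field never equals KEY.
    awk -v key="$1" '$1 == key { print $2; exit }' "${2:-/etc/login.defs}"
}

# Sample file holding the values from the text.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# Password aging controls (sample values)
PASS_MAX_DAYS   30
PASS_MIN_DAYS   5
PASS_MIN_LEN    16
PASS_WARN_AGE   7
EOF

max_days=$(get_login_def PASS_MAX_DAYS "$sample")
min_len=$(get_login_def PASS_MIN_LEN "$sample")
echo "max days: $max_days, min length: $min_len"
rm -f "$sample"
```

Called with only a setting name, the function reads the system's own /etc/login.defs, which is readable by ordinary users on most distributions.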

For accounts that have already been created, you will need to control password aging via the chage command. The options needed to control password aging with chage are listed in Table 22.3. Notice that there is not a password length setting in the chage utility.

Table 22.3 chage Options

| Option | Description |
| --- | --- |
| -M | Sets the maximum number of days before a password needs to be changed. Equivalent to PASS_MAX_DAYS in /etc/login.defs |
| -m | Sets the minimum number of days before a password can be changed again. Equivalent to PASS_MIN_DAYS in /etc/login.defs |
| -W | Sets the number of days a user will be warned before being forced to change the account password. Equivalent to PASS_WARN_AGE in /etc/login.defs |

The example that follows uses the chage command to set password aging parameters for the Tim account. All three options are used at once.

# chage -l Tim | grep days

Minimum number of days between password change : 0

Maximum number of days between password change : 99999

Number of days of warning before password expires : 7

# chage -M 30 -m 5 -W 7 Tim

# chage -l Tim | grep days

Minimum number of days between password change : 5

Maximum number of days between password change : 30

Number of days of warning before password expires : 7

You can also use the chage command as another method of account expiration, which is based upon the account's password expiring. Earlier, the usermod utility was used for account expiration. Use the chage command with the -M and the -I options to lock the account. In the code that follows, the Tim account is viewed using chage -l. Only the information for Tim's account passwords is extracted.

# chage -l Tim | grep Password

Password expires : never

Password inactive : never

You can see that there are no settings for password expiration (Password expires) or password inactivity (Password inactive). In the following code, the account is set to be locked 5 days after Tim's password expires, by using only the -I option.

# chage -I 5 Tim

# chage -l Tim | grep Password

Password expires : never

Password inactive : never

Notice that no settings changed! Without a password expiration set, the -I option has no effect. Thus, the -M option is added to set the maximum number of days before the password expires, which allows the password inactivity setting to take hold.

# chage -M 30 -I 5 Tim

# chage -l Tim | grep Password

Password expires : Mar 03, 2014

Password inactive : Mar 08, 2014

Now, Tim's account will be locked 5 days after his password expires. This is helpful in situations where an employee has left the company, but his user account has not yet been removed. Depending upon your organization's security needs, consider setting all accounts to lock a certain number of days after passwords have expired.

Understanding the password files and password hashes

Early Linux systems stored their passwords in the /etc/passwd file. The passwords were hashed. A hashed password is created using a one-way mathematical process. Once you create the hash, you cannot re-create the original characters from the hash. Here's how it works. When a user enters the account password, the Linux system rehashes the password and then compares the hash result to the original hash in /etc/passwd. If they match, the user is authenticated and allowed into the system.

The problem with storing these password hashes in the /etc/passwd file has to do with the filesystem security settings (see Chapter 4). The filesystem security settings for the /etc/passwd file are listed here:

# ls -l /etc/passwd

-rw-r--r--. 1 root root 1644 Feb 2 02:30 /etc/passwd

As you can see, everyone can read the password file. You might think that this is not a problem because the passwords are all hashed. However, individuals with malicious intent have created files called rainbow tables. A rainbow table is simply a dictionary of potential passwords that have been hashed. For instance, the rainbow table would contain the hash for the popular password “Password,” which is:

$6$dhN5ZMUj$CNghjYIteau5xl8yX.f6PTOpendJwTOcXjlTDQUQZhhyV8hKzQ6Hxx6Egj8P3VsHJ8Qrkv.VSR5dxcK3QhyMc.

Because of the ease of access to the password hashes in the /etc/passwd file, it is only a matter of time before a hashed password is matched in a rainbow table and the plaintext password uncovered.

Thus, the hashed passwords were moved to a new configuration file, /etc/shadow. This file has the following security settings:

# ls -l /etc/shadow

-r--------. 1 root root 1049 Feb 2 09:45 /etc/shadow

Only root can view this file. Thus, the hashed passwords are protected. Here is the tail end of a /etc/shadow file. You can see that there are long, nonsensical words in each user's record. Those are the hashed passwords.

# tail -2 /etc/shadow

johndoe:$6$jJjdRN9/qELmb8xWM1LgOYGhEIxc/:15364:0:99999:7:::

Tim:$6$z760AJ42$QXdhFyndpbVPVM5oVtNHs4B/:15372:5:30:7:16436::

The following are also stored in the /etc/shadow file, in addition to the account name and hashed password:

- Date the password was last changed, expressed as the number of days since January 1, 1970

- Number of days before password can be changed

- Number of days before a password must be changed

- Number of days to warn a user before a password must be changed

- Number of days after password expires that account is disabled

- Date the account is disabled, expressed as the number of days since January 1, 1970

This should sound familiar as they are the settings for password aging covered earlier in the chapter. Remember that the chage command will not work if you do not have a /etc/shadow file set up, nor will the /etc/login.defs file be available.
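To make the field layout concrete, here is a minimal sketch that reads shadow-format records with awk. The function names list_nonexpiring and days_to_date and the sample records are illustrative assumptions, not part of any standard tool. The sketch also assumes a date command that accepts -d @SECONDS, as GNU date does.

```shell
#!/bin/sh
# list_nonexpiring: illustrative helper (not a standard tool). Reads
# shadow-format records, name:hash:lastchange:min:max:warn:inactive:expire:,
# and prints accounts whose maximum password age (field 5) is empty or 99999.
list_nonexpiring() {
    awk -F: '$5 == "" || $5 == 99999 { print $1 }' "$@"
}

# days_to_date: convert a days-since-January-1-1970 value to a calendar date.
# Assumes a date command that accepts -d @SECONDS, as GNU date does.
days_to_date() {
    date -u -d "@$(( $1 * 86400 ))" +%Y-%m-%d
}

# Demo on made-up records. On a real system, run as root:
#   list_nonexpiring /etc/shadow
printf '%s\n' \
    'johndoe:$6$placeholder:15364:0:99999:7:::' \
    'Tim:$6$placeholder:15372:5:30:7:5::' |
    list_nonexpiring            # prints: johndoe
days_to_date 15372              # prints: 2012-02-02
```

Accounts that this kind of report lists are candidates for the chage -M setting described earlier, depending on your organization's password policy.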

Obviously, filesystem security settings are very important for keeping your Linux system secure. This is especially true of a Linux system's configuration files.

Securing the filesystem

Another important part of the Implementation phase is setting proper filesystem security. The basics for security settings were covered in Chapter 4 and Access Control Lists (ACL) in Chapter 11. However, there are a few additional points that need to be added to your knowledge base.

The Access Control Matrix created in the Planning phase will be a tremendous help here. Setting a file or directory's owners and groups as well as their permissions should be directed using the matrix. It will be a long and tedious process, but well worth the time and effort. In addition to following your organization's Access Control Matrix, there are a few additional file permissions and configuration files you need to pay attention to.

Managing dangerous filesystem permissions

If you gave full rwxrwxrwx (777) access to every file on the Linux system, you can imagine the chaos that would follow. In many ways, similar chaos can occur by not closely managing the SetUID (SUID) and the SetGID (SGID) permissions (see Chapters 4 and 11).

Files with the SUID permission in the Owner category and execute permission in the Other category will allow anyone to temporarily become the file's owner while the file is being executed in memory. The worst case would be if the file's owner were root.

Similarly, files with the SGID permission in the Group category and execute permission in the Other category will allow anyone to temporarily become a group member of the file's group while the file is being executed in memory. SGID can also be set on directories. This sets the group ID of any files created in the directory to the group ID of the directory.

Executable files with SUID or SGID are favorites of malicious users. Thus, it is best to use them sparingly. However, some files do need to keep these settings. Two examples are the passwd and the sudo commands, which follow. Each of these files should maintain their SUID permissions.

$ ls -l /usr/bin/passwd

-rwsr-xr-x. 1 root root 28804 Feb 8 2011 /usr/bin/passwd

$ ls -l /usr/bin/sudo

---s--x--x. 2 root root 77364 Nov 10 04:50 /usr/bin/sudo

However, some Linux systems come with the ping command set with SUID permission. This could enable a user to start a denial-of-service (DoS) attack called a ping storm. To remove the permission, use the chmod command, as follows:

# chmod u-s /bin/ping

# ls -l /bin/ping

-rwxr-xr-x. 1 root root 39344 Nov 10 04:32 /bin/ping

Removing and granting the SUID and SGID permissions to objects should be in your Access Control Matrix and determined during the Security Process Lifecycle Planning phase.
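One way to keep SUID and SGID usage in line with the matrix is to compare what is on disk with a list of approved files. The following is only a sketch. The function name find_unapproved_special and the allowlist file are hypothetical, and the allowlist is assumed to hold one absolute path per line.

```shell
#!/bin/sh
# find_unapproved_special: hypothetical helper. Lists regular files under
# DIR that carry the SUID (4000) or SGID (2000) bit and are not named in
# ALLOWLIST, a text file with one approved absolute path per line.
# Usage: find_unapproved_special DIR ALLOWLIST
find_unapproved_special() {
    dir=$1
    allowlist=$2
    # -xdev keeps the scan on a single filesystem; errors from unreadable
    # directories are discarded.
    find "$dir" -xdev -type f \( -perm -4000 -o -perm -2000 \) 2>/dev/null |
        grep -vxF -f "$allowlist"
}

# Example (run as root so every directory can be read):
#   printf '%s\n' /usr/bin/passwd /usr/bin/sudo > /root/special.allow
#   find_unapproved_special /usr /root/special.allow
```

Because the last command in the pipeline is grep, the function returns a nonzero status when nothing unapproved is found. Any path it prints deserves a look before you decide whether to add it to the list or remove the permission with chmod.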

Securing the password files

The /etc/passwd file is the file the Linux system uses to check user account information and was covered earlier in the chapter. The /etc/passwd file should have the following permission settings:

- Owner: root

- Group: root

- Permissions: (644) Owner: rw- Group: r-- Other: r--

The example that follows shows that the /etc/passwd file has the appropriate settings.

# ls -l /etc/passwd

-rw-r--r--. 1 root root 1644 Feb 2 02:30 /etc/passwd

These settings are needed so users can log in to the system and see usernames associated with user ID and group ID numbers. However, users should not be able to modify the /etc/passwd file directly. For example, a malicious user could add a new account to the file if write access were granted to Other.

The next file is the /etc/shadow file. Of course, it is closely related to the /etc/passwd file because it is also used during the login authentication process. This /etc/shadow file should have the following permissions settings:

- Owner: root

- Group: root

- Permissions: (400) Owner: r-- Group: --- Other: ---

The code that follows shows that the /etc/shadow file has the appropriate settings.

# ls -l /etc/shadow

-r--------. 1 root root 1049 Feb 2 09:45 /etc/shadow

The /etc/passwd file has read access for the owner, group, and other. Notice how much more the /etc/shadow file is restricted than the /etc/passwd file. For the /etc/shadow file, only the owner is given read access. This is so that only root can view this file, as mentioned earlier. So if only root can view this file, how can a user change her password when it is stored in /etc/shadow? The passwd utility, /usr/bin/passwd, uses the special permission SUID. This permission setting is shown here:

# ls -l /usr/bin/passwd

-rwsr-xr-x. 1 root root 28804 Feb 8 2013 /usr/bin/passwd

Thus, the user running the passwd command temporarily becomes root while the command is executing in memory and can then write to the /etc/shadow file.

The /etc/group file (see Chapter 11) contains all the groups on the Linux system. Its file permissions should be set exactly as the /etc/passwd file:

- Owner: root

- Group: root

- Permissions: (644) Owner: rw- Group: r-- Other: r--

Also, the group password file, /etc/gshadow, needs to be properly secured. As you would expect, the file permission should be set exactly as the /etc/shadow file:

- Owner: root

- Group: root

- Permissions: (400) Owner: r-- Group: --- Other: ---
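The settings listed for these four files can be checked in one pass. The sketch below is one possible approach, not a standard utility. The check_perm function is a made-up name, and it relies on the -c format option of GNU stat. Some distributions ship slightly different defaults for the shadow files, so treat a FAIL line as a prompt to investigate rather than proof of a problem.

```shell
#!/bin/sh
# check_perm: hypothetical helper that compares a file's owner, group, and
# octal mode with the expected values. Relies on GNU stat's -c option.
# Usage: check_perm FILE OWNER GROUP MODE
check_perm() {
    file=$1 want_owner=$2 want_group=$3 want_mode=$4
    actual=$(stat -c '%U %G %a' "$file") || return 2
    if [ "$actual" = "$want_owner $want_group $want_mode" ]; then
        echo "OK   $file ($actual)"
    else
        echo "FAIL $file: found '$actual', expected '$want_owner $want_group $want_mode'"
        return 1
    fi
}

# Expected values come from the lists in this section.
status=0
check_perm /etc/passwd  root root 644 || status=1
check_perm /etc/group   root root 644 || status=1
check_perm /etc/shadow  root root 400 || status=1
check_perm /etc/gshadow root root 400 || status=1
echo "overall status: $status"
```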

Locking down the filesystem

The filesystem table (see Chapter 12), /etc/fstab, needs some special attention, too. The /etc/fstab file is used at boot time to mount devices. It is also used by the mount command, the dump command, and the fsck command. The /etc/fstab file should have the following permission settings:

- Owner: root

- Group: root

- Permissions: (644) Owner: rw- Group: r-- Other: r--

Because the /etc/fstab is readable by everyone on the system, no comment fields describing the mounted devices should be included. There is no need to give away any additional filesystem information that can be used by a malicious user.

Within the filesystem table, there are some important security settings that need to be reviewed. Besides your root, boot, and swap partitions, consider the following:

- Put the /home subdirectory, where user directories are located, on its own partition and:

  - Set the nosuid option to prevent SUID and SGID permission-enabled executable programs running from there. Programs that need SUID and SGID permissions should not be stored in /home and are most likely malicious.

  - Set the nodev option so no device file located there will be recognized. Device files should be stored in /dev and not in /home.

  - Set the noexec option so no executable programs, which are stored in /home, can be run. Executable programs should not be stored in /home and are most likely malicious.

- Put the /tmp subdirectory, where temporary files are located, on its own partition and use the same options settings as for /home:

  - nosuid

  - nodev

  - noexec

- Put the /usr subdirectory, where user programs and data are located, on its own partition and set the nodev option so no device file located there will be recognized.

- Put the /var subdirectory, where important security log files are located, on its own partition and use the same options settings as for /home:

  - nosuid

  - nodev

  - noexec

Putting the above into your /etc/fstab will look similar to the following:

/dev/sdb1 /home ext4 nodev,noexec,nosuid 1 2

/dev/sdc1 /tmp ext4 nodev,noexec,nosuid 1 1

/dev/sdb2 /usr ext4 nodev 1 2

/dev/sdb3 /var ext4 nodev,noexec,nosuid 1 2

These mount options will help to further lock down your filesystem and add another layer of protection from those with malicious intent.
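To confirm that a filesystem table actually carries the options you planned, you could check each mount point's fourth field. This is a minimal sketch, assuming the standard six-field fstab layout. The function name missing_mount_opts and the scratch file are illustrative only.

```shell
#!/bin/sh
# missing_mount_opts: hypothetical helper. Prints each requested option
# that is absent from the fstab entry for MOUNTPOINT.
# Usage: missing_mount_opts FSTAB MOUNTPOINT OPTION...
missing_mount_opts() {
    fstab=$1 mountpoint=$2
    shift 2
    # Field 2 is the mount point; field 4 is the comma-separated option list.
    opts=$(awk -v mp="$mountpoint" '$1 !~ /^#/ && $2 == mp { print $4; exit }' "$fstab")
    if [ -z "$opts" ]; then
        echo "$mountpoint: no entry in $fstab"
        return 1
    fi
    for want in "$@"; do
        case ",$opts," in
            *",$want,"*) ;;                         # option is present
            *) echo "$mountpoint: missing $want" ;;
        esac
    done
}

# Demo on a scratch copy of two entries like those shown above.
sample=$(mktemp)
cat > "$sample" <<'EOF'
/dev/sdb1 /home ext4 nodev,noexec,nosuid 1 2
/dev/sdb2 /usr  ext4 nodev               1 2
EOF
missing_mount_opts "$sample" /home nosuid nodev noexec   # prints nothing
missing_mount_opts "$sample" /usr  nosuid nodev          # prints: /usr: missing nosuid
rm -f "$sample"
```

On a live system, pass /etc/fstab as the first argument. Remember that the file describes what will be mounted at boot. The mount command shows what is in effect right now.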

Once again, managing the various file permissions and fstab options should be in your Access Control Matrix and determined during the Security Process Lifecycle Planning phase. The items you choose to implement must be determined by your organization's security needs.

Managing software and services

Often, the administrator's focus is on making sure the needed software and services are on a Linux system. From a security standpoint, you need to take the opposite viewpoint and make sure the unneeded software and services are not on a Linux system.

Removing unused software and services

During the Security Process Lifecycle Planning phase, one of your outcomes should be a “Needed Software and Services Checklist.” This will be your starting point in determining what to remove from the system. With the checklist in hand, you can begin the process of reviewing and removing unnecessary software and services.

Every service (see Chapter 15) and software package (see Chapter 10) removal should be conducted first in a test environment of some sort. This is especially true if you are not positive what effect this may have on a production system.

Updating software packages

In addition to removing unnecessary services and software, keeping current software up-to-date is critical for security. The latest bug fixes and security patches are obtained via software updates. Software package updates were covered in Chapters 9 and 10.

Software updates need to be done on a regular basis. How often and when you do it, of course, depends upon your organization's security needs.

You can easily automate software updates, but like removing services and software, it would be wise to test the updates in a test environment first. Once updated software shows no problems, you can then update the software on your production Linux systems.

Advanced implementation

There are several other important topics in the Security Process Lifecycle's Implementation phase. They include cryptography, Pluggable Authentication Modules (PAM), and SELinux. These advanced and detailed topics have been put into separate chapters, Chapter 23 and Chapter 24.

Working in the Monitoring Phase

If you do a good job of planning and implementing your system's security, most malicious attacks will be stopped. However, if an attack should occur, you need to be able to recognize it. Monitoring is an activity that needs to be going on continuously, no matter where your organization currently is in the Security Process Lifecycle.

In this phase, you watch over log files, user accounts, and the filesystem itself. In addition, you need some tools to help you detect intrusions and other types of malware.

Monitoring log files

Understanding the log files in which Linux records important events is critical to the Monitoring phase. These logs need to be reviewed on a regular basis.

The log files for your Linux system are primarily located in the /var/log directory. Most of the files in the /var/log directory are maintained by the rsyslogd service (see Chapter 13). Table 22.4 contains a list of /var/log files and a brief description of each.

Table 22.4 Log Files in the /var/log Directory

| System Log Name | Filename | Description |

| Apache Access Log | /var/log/httpd/access_log | Logs requests for information from your Apache Web server. |

| Apache Error Log | /var/log/httpd/error_log | Logs errors encountered from clients trying to access data on your Apache Web server. |

| Bad Logins Log | btmp | Logs bad login attempts. |

| Boot Log | boot.log | Contains messages indicating which system services have started and shut down successfully and which (if any) have failed to start or stop. The most recent bootup messages are listed near the end of the file. |

| Boot Log | dmesg | Records messages printed by the kernel when the system boots. |

| Cron Log | cron | Contains status messages from the crond daemon. |

| dpkg Log | dpkg.log | Contains information concerning installed Debian Packages. |

| FTP Log | vsftpd.log | Contains messages relating to transfers made using the vsFTPd daemon (FTP server). |

| FTP Transfer Log | xferlog | Contains information about files transferred using the FTP service. |

| GNOME Display Manager Log | /var/log/gdm/:0.log | Holds messages related to the login screen (GNOME display manager). Yes, there really is a colon in the filename. |

| LastLog | lastlog | Records the last time an account logs in to the system. |

| Login/out Log | wtmp | Contains a history of logins and logouts on the system. |

| Mail Log | maillog | Contains information about addresses to which and from which e-mail was sent. Useful for detecting spamming. |

| MySQL Server Log | mysqld.log | Includes information related to activities of the MySQL database server (mysqld). |

| News Log | spooler | Provides a directory containing logs of messages from the Usenet News server if you are running one. |

| RPM Packages | rpmpkgs | Contains a listing of RPM packages that are installed on your system. No longer available by default. You can install the package, or just use rpm -qa to view a listing of installed RPM packages. |

| Samba Log | /var/log/samba/log.smbd | Shows messages from the Samba SMB file service daemon. |

| Security Log | secure | Records the date, time, and duration of login attempts and sessions. |

| Sendmail Log | sendmail | Shows error messages recorded by the sendmail daemon. |

| Squid Log | /var/log/squid/access.log | Contains messages related to the squid proxy/caching server. |

| System Log | messages | Provides a general-purpose log file where many programs record messages. |

| UUCP Log | uucp | Shows status messages from the UNIX to UNIX Copy Protocol daemon. |

| YUM Log | yum.log | Shows messages from the Yellowdog Updater, Modified (yum). |

| X.Org X11 Log | Xorg.0.log | Includes messages output by the X.Org X server. |

The log files that are in your system's /var/log directory will depend upon what services you are running. Also, some log files are distribution-dependent. For example, if you use Fedora Linux, you would not have the dpkg log file.

Most of the log files are displayed using the commands cat, head, tail, more, or less. However, a few of them have special commands for viewing (see Table 22.5).

Table 22.5 Viewing Log Files That Need Special Commands

| Filename | View Command |

| btmp | dump-utmp btmp |

| dmesg | dmesg |

| lastlog | lastlog |

| wtmp | dump-utmp wtmp |

For a practical example of how you might use the log files for monitoring, the following is the tail end of the /var/log/messages file. Notice that the yum command has been used to install the sysstat package.

# tail -5 /var/log/messages

Feb 3 11:51:10 localhost dbus-daemon[720]: ** Message: D-Bus service launched with name: net.reactivated.Fprint

Feb 3 11:51:10 localhost dbus-daemon[720]: ** Message: entering main loop

Feb 3 11:51:40 localhost dbus-daemon[720]: ** Message: No devices in use, exit

Feb 3 12:08:25 localhost yum[5698]: Installed: lm_sensors-libs-3.3.1-1.fc16.i686

Feb 3 12:08:25 localhost systemd[1]: Reloading.

Feb 3 12:08:25 localhost yum[5698]: Installed: sysstat-10.0.2-2.fc16.i686

To verify this installation, you can view the /var/log/yum.log, as shown in the example that follows. You can see that the yum.log verifies the installation occurred. If, for some reason, you or anyone on the administrator team did not install this package, further investigation should be pursued.

# tail -3 /var/log/yum.log

Feb 03 11:08:51 Installed: 2:nmap-5.51-1.fc16.i686

Feb 03 12:08:25 Installed: lm_sensors-libs-3.3.1-1.fc16.i686

Feb 03 12:08:25 Installed: sysstat-10.0.2-2.fc16.i686

You should keep in mind the following important security issues concerning managing your log files:

- Review the rsyslog daemon's configuration file, /etc/rsyslog.conf, to ensure the desired information is being tracked (see Chapter 13).

- Track any modifications to /etc/rsyslog.conf.

- Review the /etc/rsyslog.conf file to ensure that login attempts are sent to /var/log/secure by the setting authpriv.* /var/log/secure. For Ubuntu, login attempts are sent to /var/log/auth.log by the setting auth,authpriv.* /var/log/auth.log.

- Create and follow log review schedules for “human eyes.” For instance, it may be important to view on a daily basis who is using the sudo command, which is logged in the /var/log/secure file.

- Create log alerts. For example, a sudden increase in a log file size, such as /var/log/btmp, is an indicator of a potentially malicious problem.

- Maintain log file integrity.

- Review log file permissions.

- Review the log rotation schedule in the logrotate configuration file, /etc/logrotate.conf.

- Include log files in backup and data retention plans.

- Implement best practices, such as clearing log files appropriately so as not to lose file permission settings. To clear a log file without losing its permission settings, use the redirection symbol as follows: > logfilename.
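The last item in the list can be demonstrated on a scratch file. In this sketch, the clear_log function name is illustrative and the -c option of GNU stat is assumed. Because the file is truncated in place rather than deleted and re-created, its permission settings and inode number are unchanged afterward.

```shell
#!/bin/sh
# clear_log: empty a log file in place. The file is truncated, not removed,
# so its owner, group, mode, and inode number stay the same.
# The function name is illustrative.
clear_log() {
    : > "$1"
}

# Demonstration on a scratch file (GNU stat assumed for -c).
log=$(mktemp)
chmod 640 "$log"
echo "old entry" > "$log"
before=$(stat -c '%a %i' "$log")
clear_log "$log"
after=$(stat -c '%a %i' "$log")
echo "mode and inode before: $before"
echo "mode and inode after:  $after"
echo "size after: $(stat -c %s "$log") bytes"
rm -f "$log"
```

Deleting the file and creating a new one would instead give the new file the default permissions of whoever created it, which may be looser than the original settings.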

Log files are obviously important in your Security Process Lifecycle Monitoring phase. Another important item to monitor is user accounts.

Monitoring user accounts

User accounts are often used in malicious attacks on a system by gaining unauthorized access to a current account, creating new bogus accounts, or by leaving an account behind to access later. To avoid such security issues, watching over user accounts needs to be part of the Monitoring phase.

Detecting counterfeit new accounts and privileges

Accounts created without going through the appropriate authorization should be considered counterfeit. Also, modifying an account in any way that gives it a different unauthorized User Identification (UID) number or adds unauthorized group memberships is a form of rights escalation. Keeping an eye on the /etc/passwd and /etc/group files will monitor these potential breaches.

To help you monitor the /etc/passwd and /etc/group files, you can use the audit daemon. The audit daemon is an extremely powerful auditing tool, which allows you to select system events to track and record them, and provides reporting capabilities.

To begin auditing the /etc/passwd and /etc/group files, you need to use the auditctl command. Two options at a minimum are required to start this process:

- -w filename: Place a watch on filename. The audit daemon will track the file by its inode number. An inode is a data structure that contains information concerning a file, including its location, and the inode number identifies that structure.

- -p trigger(s): If one of these access types occurs (r=read, w=write, x=execute, a=attribute change) to filename, then trigger an audit record.

In the following code, a watch has been placed on the /etc/passwd file using the auditctl command. The audit daemon will monitor access, which consists of any reads, writes, or file attribute changes:

# auditctl -w /etc/passwd -p rwa

To review the audit logs, use the audit daemon's ausearch command. The only option needed here is the -f option, which specifies which records you want to view from the audit log. The following is an example of the /etc/passwd audit information:

# ausearch -f /etc/passwd

time->Fri Feb 3 04:27:01 2014

type=PATH msg=audit(1328261221.365:572): item=0 name="/etc/passwd" inode=170549 dev=fd:01 mode=0100644 ouid=0 ogid=0 rdev=00:00 obj=system_u:object_r:etc_t:s0

type=CWD msg=audit(1328261221.365:572): cwd="/"

...

time->Fri Feb 3 04:27:14 2014

type=PATH msg=audit(1328261234.558:574): item=0 name="/etc/passwd" inode=170549 dev=fd:01 mode=0100644 ouid=0 ogid=0 rdev=00:00 obj=system_u:object_r:etc_t:s0

type=CWD msg=audit(1328261234.558:574): cwd="/home/johndoe"

type=SYSCALL msg=audit(1328261234.558:574): arch=40000003 syscall=5 success=yes exit=3 a0=3b22d9 a1=80000 a2=1b6 a3=0 items=1 ppid=3891 pid=21696 auid=1000 uid=1000 gid=1000 euid=1000 suid=1000 fsuid=1000 egid=1000 sgid=1000 fsgid=1000 tty=pts1 ses=2 comm="vi" exe="/bin/vi" subj=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023

----

This is a lot of information to review. A few items will help you see what audit event happened to trigger the bottom record.

- time: The time stamp of the activity

- name: The filename, /etc/passwd, being watched

- inode: The /etc/passwd's inode number on this filesystem

- uid: The user ID, 1000, of the user running the program

- exe: The program, /bin/vi, used on the /etc/passwd file

To determine what user account is assigned the UID of 1000, look at the /etc/passwd file. In this case, the UID of 1000 belongs to the user johndoe. Thus, from the audit event record displayed above, you can determine that account johndoe has attempted to use the vi editor on the /etc/passwd file. It is doubtful that this was an innocent action and it requires more investigation.
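Picking the uid and exe values out of long records by eye gets tedious. As a rough sketch, the hypothetical summarize_audit function below reads ausearch output on standard input, keeps only the SYSCALL records, and looks up each uid in a passwd-format file. It assumes each record sits on its own line, as ausearch normally prints them.

```shell
#!/bin/sh
# summarize_audit: hypothetical helper. For each SYSCALL record read from
# stdin, prints the uid, the matching account name from a passwd-format
# file, and the program that was run against the watched file.
# Usage: ausearch -f /etc/passwd | summarize_audit /etc/passwd
summarize_audit() {
    passwd_file=$1
    awk '
        /^type=SYSCALL/ {
            uid = ""; exe = ""
            for (i = 1; i <= NF; i++) {
                # Match at the start of the field so that auid=, euid=,
                # suid=, and fsuid= are not mistaken for uid=.
                if ($i ~ /^uid=/) uid = substr($i, 5)
                if ($i ~ /^exe=/) exe = substr($i, 5)
            }
            gsub(/"/, "", exe)      # drop the quotes around the path
            print uid, exe
        }
    ' | while read -r uid exe; do
        # In a passwd record, field 3 is the UID and field 1 is the name.
        name=$(awk -F: -v u="$uid" '$3 == u { print $1; exit }' "$passwd_file")
        echo "uid=$uid user=${name:-unknown} exe=$exe"
    done
}
```

For the bottom record in the preceding output, a summary of this kind would name the johndoe account and the /bin/vi program on a single line.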

The audit daemon and its associated tools are extremely rich. To learn more about it, look at the man pages for the following audit daemon utilities and configuration files:

- auditd: The audit daemon

- auditd.conf: Audit daemon configuration file

- auditctl: Controls the auditing system

- audit.rules: Configuration rules loaded at boot

- ausearch: Searches the audit logs for specified items

- aureport: Report creator for the audit logs

- audispd: Sends audit information to other programs

The audit daemon is one way to keep an eye on important files. You should also review your account and group files regularly with a “human eye” to see if anything looks irregular.

Important files, such as /etc/passwd, do need to be monitored for unauthorized account creation. However, just as bad as a new unauthorized user account is an authorized user account with a bad password.

Detecting bad account passwords

Even with all your good efforts, bad passwords will slip in. Therefore, you do need to monitor user account passwords to ensure they are strong enough to withstand an attack.

One password strength monitoring tool you can use is the same one malicious users use to crack accounts, John the Ripper. John the Ripper is a free and open source tool that you can use at the Linux command line. It's not installed by default. For a Fedora distribution, you will need to issue the command yum install john to install it.

In order to use John the Ripper to test user passwords, you must first extract account names and passwords using the unshadow command. This information needs to be redirected into a file for use by john, as shown here:

# unshadow /etc/passwd /etc/shadow > password.file

Now, edit the password.file using your favorite text editor to remove any accounts without passwords. Because it is wise to limit John the Ripper to testing a few accounts at a time, remove any account names you do not wish to test presently.
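Instead of editing the file by hand, you could filter the unshadow output as it is produced. The following is a sketch under stated assumptions. The keep_crackable function is a made-up name, and it treats an empty hash field, or one that begins with ! or *, as an account without a usable password.

```shell
#!/bin/sh
# keep_crackable: hypothetical filter for unshadow-format records
# (name:hash:uid:gid:...). Drops accounts whose hash field is empty or
# begins with ! or *, which mark accounts with no usable password.
# Optional arguments restrict the output to the named accounts.
keep_crackable() {
    awk -F: -v names=" $* " '
        $2 == "" || $2 ~ /^[!*]/ { next }
        names == "  " || index(names, " " $1 " ") { print }
    '
}

# Usage (as root):
#   unshadow /etc/passwd /etc/shadow | keep_crackable Samantha > password.file
```

With no arguments, every account that has a real hash is kept. Naming one or two accounts keeps each john run short.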

Now, use the john command to attempt password cracks. To run john against the created password file, issue the command john filename. In the following code snippet, you can see the output from running john against the sample password.file. For demonstration purposes, only one account was left in the sample file. And the account, Samantha, was given the bad password of password. You can see how little time it took for John the Ripper to crack the password.

# john password.file

Loaded 1 password hash (generic crypt(3) [?/32])

password (Samantha)

guesses: 1 time: 0:00:00:44 100% (2) c/s: 20.87 trying: 12345 - missy

Use the "--show" option to display all of the cracked passwords reliably

To demonstrate how strong passwords are vital, consider what happens when the Samantha account's password is changed from password to Password1234. Even though Password1234 is still a weak password, it takes longer than 7 hours of CPU time to crack it. In the code that follows, john was finally aborted to end the cracking attempt.

# passwd Samantha

Changing password for user Samantha.

...

# john password.file

Loaded 1 password hash (generic crypt(3) [?/32])

...

time: 0:07:21:55 (3) c/s: 119 trying: tth675 - tth787

Session aborted

As soon as password-cracking attempts have been completed, the password.file should be removed from the system. To learn more about John the Ripper, visit www.openwall.com/john.

Monitoring the filesystem

Malicious programs often modify files. They also can try to cover their tracks by posing as ordinary files and programs. However, there are ways to uncover them through various monitoring tactics covered in this section.

Verifying software packages

Typically, if you install a software package from a standard repository or download a reputable site's package, you won't have any problems. But it is always good to double-check your installed software packages to see if they have been compromised. The command to accomplish this is rpm -V package_name.

When you verify the software, information from the installed package files is compared against the package metadata (see Chapter 10) in the rpm database. If no problems are found, the rpm -V command will return nothing. However, if there are discrepancies, you will get a coded listing. Table 22.6 shows the codes used and a description of the discrepancy.

Table 22.6 Package Verification Discrepancies

| Code | Discrepancy |

| S | File size |

| M | File permissions and type |

| 5 | MD5 checksum |

| D | Device file's major and minor numbers |

| L | Symbolic links |

| U | User ownership |

| G | Group ownership |

| T | File modified times (mtime) |

| P | File capabilities |

In the partial list that follows, all the installed packages are given a verification check. You can see that the codes 5, S, and T were returned, indicating some potential problems.

# rpm -qaV

5S.T..... c /etc/hba.conf

...

...T..... /lib/modules/3.2.1-3.fc16.i686/modules.devname

...T..... /lib/modules/3.2.1-3.fc16.i686/modules.softdep

You do not have to verify all your packages at once. You can verify just one package at a time. For example, if you want to verify your nmap package, you simply enter rpm -V nmap.
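If you would rather not memorize the codes, a small lookup can expand them. This is a minimal sketch. The decode_verify function is an illustrative name, and the descriptions are shortened from Table 22.6. It handles one attribute string at a time, such as the first column of a line of rpm -V output.

```shell
#!/bin/sh
# decode_verify: hypothetical helper that expands the attribute string at
# the start of an rpm -V output line into short descriptions.
# Usage: decode_verify ATTRIBUTE_STRING
decode_verify() {
    # fold -w1 puts each character on its own line for the loop to read.
    printf '%s\n' "$1" | fold -w1 | while read -r code; do
        case $code in
            S) echo "file size" ;;
            M) echo "file permissions and type" ;;
            5) echo "MD5 checksum" ;;
            D) echo "device major and minor numbers" ;;
            L) echo "symbolic link" ;;
            U) echo "user ownership" ;;
            G) echo "group ownership" ;;
            T) echo "file modification time" ;;
            P) echo "capabilities" ;;
            '?') echo "test could not be performed" ;;
            *) ;;                   # a dot means the test passed
        esac
    done
}

decode_verify "5S.T....."
# prints:
#   MD5 checksum
#   file size
#   file modification time
```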

Scanning the filesystem

Unless you have recently updated your system, binary files should not have been modified for any reason. To check for binary file modification, you can use the files' modify time, or mtime. The file mtime is the time when the contents of a file were last modified. Also, you can monitor the file's change time, or ctime, which is updated whenever the file's contents or its attributes, such as permissions or ownership, change.

If you suspect malicious activity, you can quickly scan your filesystem to see if any binaries were modified or changed today (or yesterday, depending upon when you think the intrusion took place). To do this scan, use the find command.

In the example that follows, a scan is made of the /sbin directory. To see if any binary files were modified less than 24 hours ago, the command find /sbin -mtime -1 is used. In the example, several files display, showing they were modified recently. Unless you updated the system in that time, this could indicate that malicious activity is taking place on the system. To investigate further, review each individual file's times, using the stat filename command, as shown here:

# find /sbin -mtime -1

/sbin

/sbin/init

/sbin/reboot

/sbin/halt

# stat /sbin/init

File: '/sbin/init' -> '../bin/systemd'

Size: 14 Blocks: 0 IO Block: 4096 symbolic link

Device: fd01h/64769d Inode: 9551 Links: 1

Access: (0777/lrwxrwxrwx) Uid: ( 0/ root) Gid: ( 0/ root)

Context: system_u:object_r:bin_t:s0

Access: 2014-02-03 03:34:57.276589176 -0500

Modify: 2014-02-02 23:40:39.139872288 -0500

Change: 2014-02-02 23:40:39.140872415 -0500

Birth: -

You could create a database of all the binaries' original mtimes and ctimes and then run a script to find current mtimes and ctimes, compare them against the database, and note any discrepancies. However, this type of program has already been created and works well. It's called an Intrusion Detection System and is covered later in this chapter.
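To show what the do-it-yourself approach would involve, here is a bare-bones sketch. The function names and baseline path are made up for illustration, GNU stat is assumed, and the code is no substitute for a real Intrusion Detection System. An attacker with root access can reset file times or alter the baseline itself.

```shell
#!/bin/sh
# snapshot_times: print "path mtime ctime" (seconds since the epoch) for
# every regular file under a directory, sorted by path. GNU stat assumed.
snapshot_times() {
    find "$1" -xdev -type f -exec stat -c '%n %Y %Z' {} + | sort
}

# compare_times: show the lines that differ between a saved baseline and
# the current state. No output means no recorded time has changed.
# Usage: compare_times DIR BASELINE_FILE
compare_times() {
    snapshot_times "$1" | diff "$2" -
}

# Usage (paths are examples only):
#   snapshot_times /sbin > /root/sbin.baseline    # right after a trusted install
#   compare_times /sbin /root/sbin.baseline       # during later checks
```

Keep the baseline file outside the directory being scanned and, ideally, on read-only media or another system.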

There are several other filesystem scans you need to perform on a regular basis. Favorite files or file settings of malicious attackers are listed in Table 22.7. The table also lists the commands to perform the scans and why the file or file setting is potentially problematic.

Table 22.7 Additional Filesystem Scans

| File or Setting | Scan Command | Problem with File or Setting |

| SetUID permission | find / -perm -4000 | Allows anyone to temporarily become the file's owner while the file is being executed in memory. |

| SetGID permission | find / -perm -2000 | Allows anyone to temporarily become a group member of the file's group while the file is being executed in memory. |

| .rhosts files | find /home -name .rhosts | Allows a system to fully trust another system. Should not be in /home directories. |

| Ownerless files | find / -nouser | Indicates a compromised file. |

| Groupless files | find / -nogroup | Indicates a compromised file. |

These filesystem scans will help monitor what is going on in your system and help detect malicious attacks. However, there are additional types of attacks that can occur to your files, including viruses and rootkits.

Detecting viruses and rootkits

Two popular malicious attack tools are viruses and rootkits because they stay hidden while performing their malicious activities. Linux systems need to be monitored for both such tools.

Monitoring for viruses

A computer virus is malicious software that can attach itself to already installed system software and has the ability to spread through media or networks. It is a misconception that there are no Linux viruses. The malicious creators of viruses do often focus on the more popular desktop operating systems, such as Windows. However, that does not mean viruses are not created for Linux systems.

Even more important, Linux systems are often used to handle services, such as mail servers, for Windows desktop systems. Therefore, Linux systems used for such purposes need to be scanned for Windows viruses as well.

Antivirus software scans files using virus signatures. A virus signature is a hash created from a virus's binary code. The hash will positively identify that virus. Antivirus programs have a virus signature database that is used to compare against files to see if there is a signature match. Depending upon the number of new threats, a virus signature database can be updated often to provide protection from these new threats.
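The hash-matching idea can be illustrated with a short sketch. This is a simplification under stated assumptions: it uses sha256sum from GNU coreutils, the function name match_signatures is hypothetical, and the signature file format (one hash followed by one name per line) is invented for this example. Real antivirus products use their own, richer signature formats.

```shell
#!/usr/bin/env bash
# Sketch only: compare file hashes against a list of known-bad hashes.
# The signature file format ("<sha256> <name>" per line) is an assumption
# made for this example, not the format of any real antivirus product.

# match_signatures SIGFILE FILE...
# Prints "FILE: NAME FOUND" for each file whose hash is listed in SIGFILE.
# Returns 0 if at least one file matched, 1 if none did.
match_signatures() {
    local sigfile="$1" file hash name found=1
    shift
    for file in "$@"; do
        hash=$(sha256sum -- "$file" | awk '{print $1}')
        name=$(awk -v h="$hash" '$1 == h {print $2; exit}' "$sigfile")
        if [ -n "$name" ]; then
            echo "$file: $name FOUND"
            found=0
        fi
    done
    return "$found"
}
```

Because a hash changes completely when even one byte of the file changes, a match is strong evidence of a known threat, but a slightly altered variant would not match. This is why signature databases need frequent updates.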

A good antivirus package for your Linux system, which is open source and free, is ClamAV. To install ClamAV on a Fedora or RHEL system, type the command yum install clamav. You can find out more about ClamAV at www.clamav.net, where there is documentation on how to set up and run the antivirus software.
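Once ClamAV is installed, a scan might look like the following sketch. It assumes that the clamscan and freshclam commands are on your PATH (on some distributions freshclam is packaged separately from the clamav package), and the function names are hypothetical. The exit status meanings follow the clamscan manual page: 0 for no virus found, 1 for a virus found, and 2 for an error. Check the documentation for your version before relying on them.

```shell
#!/usr/bin/env bash
# Sketch only: update signatures, scan a directory tree, and explain the result.
# Assumes ClamAV's freshclam and clamscan are installed; function names are hypothetical.

# describe_clamscan_status CODE
# Translate a clamscan exit status into a short message.
describe_clamscan_status() {
    case "$1" in
        0) echo "no virus found" ;;
        1) echo "virus found" ;;
        *) echo "scan error" ;;
    esac
}

# scan_with_clamav DIR
# Refresh the signature database, then scan DIR recursively.
scan_with_clamav() {
    local target="$1" rc=0
    freshclam --quiet || echo "warning: signature update failed" >&2
    # -r recurses into subdirectories; -i prints only infected files
    clamscan -r -i "$target" || rc=$?
    echo "Result for $target: $(describe_clamscan_status "$rc")"
    return "$rc"
}
```

For example, scan_with_clamav /home would scan every user's home directory, which is a reasonable place to start on a server that stores files for Windows desktop systems.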

Monitoring for rootkits

A rootkit is a little more insidious than a virus. A rootkit is a malicious program that:

- Hides itself, often by replacing system commands or programs

- Maintains high-level access to a system

- Is able to circumvent software created to locate it

The purpose of a rootkit is to get and maintain root-level access to a system. The term was created by putting together “root,” which means that it has to have administrator access, and “kit,” which means it is usually several programs that operate in concert.

A rootkit detector that can be used on a Linux system is chkrootkit. To install chkrootkit on a Fedora or RHEL system, issue the command yum install chkrootkit. To install chkrootkit on an Ubuntu system, use the command sudo apt-get install chkrootkit.

Finding a rootkit with chkrootkit is simple. After installing the package or booting up the Live CD, type chkrootkit at the command line. It searches the entire file structure and flags any infected files.

The code that follows shows a run of chkrootkit on an infected system. The grep command was used to search for the keyword INFECTED. Notice that many of the files listed as “infected” are common system commands that you run from the shell. This is typical of a rootkit, which often hides itself by replacing such commands.

# chkrootkit | grep INFECTED
Checking 'du'... INFECTED
Checking 'find'... INFECTED
Checking 'ls'... INFECTED
Checking 'lsof'... INFECTED
Checking 'pstree'... INFECTED
Searching for Suckit rootkit... Warning: /sbin/init INFECTED

The last line of the preceding chkrootkit output indicates that the system has been infected with the Suckit rootkit. It actually is not infected with this rootkit. When running utilities such as antivirus and rootkit-detecting software, you will often get a number of false positives. A false positive is an indication of a virus, rootkit, or other malicious activity that does not really exist. In this particular case, the false positive is caused by a known bug.

The chkrootkit utility should have regularly scheduled runs and, of course, should be run whenever a rootkit infection is suspected. To find more information on chkrootkit, go to http://chkrootkit.org.
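For scheduled runs, it can help to filter out false positives you have already investigated so that new findings stand out. The following is a minimal sketch of that idea. The function name report_findings and the allowlist file are hypothetical, and the allowlist is simply a file of fixed strings, one per line, that identify lines you have decided to ignore.

```shell
#!/usr/bin/env bash
# Sketch only: keep the INFECTED lines from chkrootkit output, minus known false positives.
# The function name and the allowlist file are assumptions made for this example.

# report_findings ALLOWLIST
# Reads chkrootkit output on standard input. Prints each INFECTED line that does
# not contain any of the fixed strings listed in ALLOWLIST (one string per line).
# Returns 0 if any findings remain. A blank line in ALLOWLIST would match every
# line and hide all findings, so keep the file free of blank lines.
report_findings() {
    local allowlist="$1"
    grep 'INFECTED' | grep -v -F -f "$allowlist"
}
```

A scheduled job could then run something like chkrootkit | report_findings /etc/chkrootkit.allow and mail any remaining output to the administrator. The allowlist path is only an example. Review the allowlist periodically, because an entry that hides a false positive today would also hide a real infection of the same file later.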

Detecting an intrusion

During the Monitoring phase of the Security Process Lifecycle, there are a lot of things to monitor. Intrusion Detection System (IDS) software — a software package that monitors a system's activities (or its network) for potential malicious activities and reports these activities — can help you with this work. Closely related to Intrusion Detection System software is a software package that will prevent an intrusion, called Intrusion Prevention software. Some of these packages are bundled together to provide Intrusion Detection and Prevention.

Several Intrusion Detection System software packages are available for a Linux system. A few of the more popular utilities are listed in Table 22.8. You should know that tripwire is no longer open source. However, the original tripwire code is still available. See the tripwire website listed in Table 22.8 for more details.

Table 22.8 Popular Linux Intrusion Detection Systems

| IDS Name | Installation | Website |
| --- | --- | --- |
| aide | yum install aide or apt-get install aide | http://aide.sourceforge.net |
| Snort | rpm or tarball packages from website | http://snort.org |
| tripwire | yum install tripwire or apt-get install tripwire | http://tripwire.org |

The Advanced Intrusion Detection Environment (aide) IDS uses a method of comparison to detect intrusions. When you were a child, you may have played the game of comparing two pictures and finding what was different between them. The aide utility uses a similar method. A “first picture” database is created. At some later time, a “second picture” is taken, and aide compares the two and reports what is different.

To begin, you will need to take that “first picture.” The best time to create this picture is when the system has been freshly installed. The command to create the initial database is aide -i and will take a long time to run. Some of its output follows. Notice that aide tells you where it is creating its initial “first picture” database.

# aide -i
AIDE, version 0.15.1
### AIDE database at /var/lib/aide/aide.db.new.gz initialized.

The next step is to copy the initial “first picture” database to the filename that aide reads from, which is set by the database line in /etc/aide.conf. This protects the original database from being overwritten the next time a database is written. Also, the comparison will not work until the database exists under that name. The command to copy the database and give it its new name is as follows:

# cp /var/lib/aide/aide.db.new.gz /var/lib/aide/aide.db.gz

Now, you need to take the “second picture” and compare it to the original database, the “first picture.” The check option on the aide command, -C, scans the current state of the system and runs a comparison against the original database. The output shown next illustrates this comparison being done and the aide command reporting on some problems.

# aide -C
...
---------------------------------------------------
Detailed information about changes:
---------------------------------------------------
File: /bin/find
Size : 189736 , 4620
Ctime : 2012-02-10 13:00:44 , 2012-02-11 03:05:52
MD5 : <NONE> , rUJj8NtNa1v4nmV5zfoOjg==
RMD160 : <NONE> , 0CwkiYhqNnfwPUPM12HdKuUSFUE=
SHA256 : <NONE> , jg60Soawj4S/UZXm5h4aEGJ+xZgGwCmN
File: /bin/ls
Size : 112704 , 6122
Ctime : 2012-02-10 13:04:57 , 2012-02-11 03:05:52
MD5 : POeOop46MvRx9qfEoYTXOQ== , IShMBpbSOY8axhw1Kj8Wdw==
RMD160 : N3V3Joe5Vo+cOSSnedf9PCDXYkI= , e0ZneB7CrWHV42hAEgT2lwrVfP4=
SHA256 : vuOFe6FUgoAyNgIxYghOo6+SxR/zxS1s , Z6nEMMBQyYm8486yFSIbKBuMUi/+jrUi
...
File: /bin/ps
Size : 76684 , 4828
Ctime : 2012-02-10 13:05:45 , 2012-02-11 03:05:52
MD5 : 1pCVAWbpeXINiBQWSUEJfQ== , 4ElJhyWkyMtm24vNLya6CA==
RMD160 : xwICWNtQH242jHsH2E8rV5kgSkU= , AZlI2QNlKrWH45i3/V54H+1QQZk=
SHA256 : ffUDesbfxx3YsLDhD0bLTW0c6nykc3m0 , w1qXvGWPFzFir5yxN+n6t3eOWw1TtNC/
...
File: /usr/bin/du
Size : 104224 , 4619
Ctime : 2012-02-10 13:04:58 , 2012-02-11 03:05:53
MD5 : 5DUMKWj6LodWj4C0xfPBIw== , nzn7vrwfBawAeL8nkayICg==
RMD160 : Zlbm0f/bUWRLgi1B5nVjhanuX9Q= , 2e5S00lBWqLq4Tnac4b6QIXRCwY=
SHA256 : P/jVAKr/SO0epBBxvGP900nLXrRY9tnw , HhTqWgDyIkUDxA1X232ijmQ/OMA/kRgl
File: /usr/bin/pstree
Size : 20296 , 7030
Ctime : 2012-02-10 13:02:18 , 2012-02-11 03:05:53
MD5 : <NONE> , ry/MUZ7XvU4L2QfWJ4GXxg==
RMD160 : <NONE> , tFZer6As9EoOi58K7/LgmeiExjU=
SHA256 : <NONE> , iAsMkqNShagD4qe7dL/EwcgKTRzvKRSe
...

The files listed by the aide check in this example are infected. However, aide can also display many false positives.
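When aide checks are run from a script, the exit status of the command can be used instead of reading the full report. The sketch below assumes the exit status scheme described in the aide manual page, where the status of a check is the sum of 1 (new files detected), 2 (removed files detected), and 4 (changed files detected), and larger values signal errors. Confirm this with man aide for your installed version. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: explain the exit status of "aide -C".
# Assumes the status is a sum of 1 (new), 2 (removed), and 4 (changed), as described
# in the aide manual page; verify this for your version. Function names are hypothetical.

# describe_aide_status CODE
describe_aide_status() {
    local code="$1" summary=""
    if [ "$code" -eq 0 ]; then
        echo "no differences found"
        return 0
    fi
    if [ "$code" -gt 7 ]; then
        echo "aide reported an error (status $code)"
        return 0
    fi
    if [ $((code & 1)) -ne 0 ]; then summary="${summary}new files, "; fi
    if [ $((code & 2)) -ne 0 ]; then summary="${summary}removed files, "; fi
    if [ $((code & 4)) -ne 0 ]; then summary="${summary}changed files, "; fi
    echo "${summary%, } detected"
}

# run_aide_check
# Run the check and print a one-line summary after the normal aide report.
run_aide_check() {
    local rc=0
    aide -C || rc=$?
    describe_aide_status "$rc"
    return "$rc"
}
```

A one-line summary such as this is convenient for a daily e-mail subject line, while the detailed report is kept for investigation.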

Where aide databases are created, what comparisons are made, and several other configuration settings are handled in the /etc/aide.conf file. The following is a partial display of the file. You can see the names of the database file and the log file directories set here:

# cat /etc/aide.conf

# Example configuration file for AIDE.

@@define DBDIR /var/lib/aide

@@define LOGDIR /var/log/aide

# The location of the database to be read.

database=file:@@{DBDIR}/aide.db.gz

# The location of the database to be written.

#database_out=sql:host:port:database:login_name:passwd:table

#database_out=file:aide.db.new

database_out=file:@@{DBDIR}/aide.db.new.gz

An Intrusion Detection System can be a big help in monitoring the system. At least one of the IDS tools should be a part of your Security Process Lifecycle Monitoring phase.

Working in the Audit/Review Phase

The final phase in the Security Process Lifecycle is the Audit/Review phase. It is important not only that the security being implemented follows policies and procedures, but also that the policies and procedures in place are correct.

There are two important terms to know for this phase. A compliance review is an audit of the overall computer system environment to ensure policies and procedures determined in the Security Process Lifecycle Planning phase are being carried out correctly. Audit results from this review would feed back into the Implementation phase. A security review is an audit of current policies and procedures to ensure they follow accepted best security practices. Audit results from this review would feed back into the Planning phase.

Conducting compliance reviews

Similar to audits in other fields, such as accounting, these reviews can be conducted internally or by external personnel. They can be as simple as someone sitting down and comparing implemented security to the Access Control Matrix and stated policies. However, a more popular approach is to conduct audits using penetration testing.

Penetration testing is an evaluation method used to test a computer system's security by simulating malicious attacks. It is also called pen testing and ethical hacking. No longer do you have to gather tools and the local neighborhood hacker to help you conduct these tests. The following are Linux distributions you can use to conduct very thorough penetration tests:

- BackTrack (www.backtrack-linux.org)

  - Linux distribution created specifically for penetration testing

  - Can be used from a live DVD or a flash drive

  - Training on use of BackTrack is offered by www.offensive-security.com

- Fedora Security Spin (http://spins.fedoraproject.org/security)

  - Also called “Fedora Security Lab”

  - Spin of the Fedora Linux distribution

  - Provides a test environment to work on security auditing

  - Can be used from a flash drive

While penetration testing is a lot of fun, for a thorough compliance review, a little more is needed. You should also use checklists from industry security sites and the Access Control Matrix from the Security Process Lifecycle Planning phase for auditing your servers.
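Part of a checklist-style review can be automated. The following sketch compares the actual owner and permissions of files against an expected list. The policy file format (path, owner, and octal mode on each line) is a hypothetical stand-in for entries you might derive from your own Access Control Matrix, and the function name check_permissions is likewise an assumption. It relies on GNU stat and does not handle paths that contain spaces.

```shell
#!/usr/bin/env bash
# Sketch only: compare actual file ownership and permissions to an expected list.
# The policy format ("path owner mode" per line) is an assumption made for this
# example. Requires GNU stat. Paths containing spaces are not supported.

# check_permissions POLICY
# Prints a line for each missing or mismatched file.
# Returns 0 if everything matches the policy, 1 otherwise.
check_permissions() {
    local policy="$1" path owner mode actual failures=0
    while read -r path owner mode; do
        # skip blank lines and comments
        case "$path" in ''|'#'*) continue ;; esac
        if [ ! -e "$path" ]; then
            echo "MISSING $path"
            failures=$((failures + 1))
            continue
        fi
        actual=$(stat -c '%U %a' -- "$path")
        if [ "$actual" != "$owner $mode" ]; then
            echo "MISMATCH $path expected '$owner $mode' found '$actual'"
            failures=$((failures + 1))
        fi
    done < "$policy"
    [ "$failures" -eq 0 ]
}
```

A policy line such as /etc/passwd root 644 would state that the file should be owned by root with mode 644. The expected values shown here are only an example, so take the real ones from your own policies.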

Conducting security reviews

Conducting a security review requires that you know current best security practices. There are several ways to stay informed about best security practices. The following is a brief list of organizations that can help you.

- United States Computer Emergency Readiness Team (US-CERT)

  - URL: www.us-cert.gov

  - Offers the National Cyber Alert System

  - Offers RSS feeds on the latest security threats

- The SANS Institute

  - URL: www.sans.org/security-resources

  - Offers Computer Security Research newsletters

  - Offers RSS feeds on the latest security threats

- Gibson Research Corporation

  - URL: www.grc.com

  - Offers the Security Now! security netcast

Information from these sites will assist you in creating stronger policies and procedures. Given how fast the best security practices change, it would be wise to conduct security reviews often, depending upon your organization's security needs.

Now you understand a lot more about basic Linux security. The hard part is actually putting all these concepts into practice.

Summary

Understanding the Security Process Lifecycle will greatly assist you in protecting your organization's valuable information assets. Each life cycle phase (Planning, Implementation, Monitoring, and Audit/Review) has important information and activities. Even though the life cycle may not progress in an orderly fashion at your organization, it's still important to implement the Security Process Lifecycle and its phases.

In the Planning phase, the Access Control Model is chosen. The three to choose from are DAC, MAC, and RBAC. DAC is the default model on all Linux distributions. The model chosen here greatly influences the rest of the life cycle phases. The Access Control Matrix is created in this phase. This matrix is used throughout the process as a security checklist.

In the Implementation phase, you are actually implementing the chosen Access Control Model using the various Linux tools available. This is the phase in which most organizations typically begin the security life cycle process. Activities such as managing user accounts, securing passwords, and managing software and services are included in this phase.