Chapter 7

Examples of the Use of EROM Results for Informing Risk Acceptance Decisions

7.1 Overview

The purpose of Chapter 7 is to demonstrate how EROM can help inform risk acceptance decisions at key decision points for programs and projects that serve multiple strategic objectives. Such objectives (and the associated performance requirements) may span multiple mission execution domains (e.g., safety, technical performance, cost, and schedule) as well as multiple government or other stakeholder priorities (e.g., tech transfer, equal opportunity, legal indemnity, and good public relations). Since the risks of not meeting the top-level program/project objectives may imply risks of not meeting the enterprise's strategic objectives, risk acceptance decisions at the program/project level have to include consideration of enterprise-wide risk and opportunity management.

Two demonstration examples are pursued for this topic. The first is based on the Department of Defense's Ground-Based Midcourse Defense (GMD) program as it existed in an earlier time frame (about 14 years ago). In the time that has passed since then, a significant body of information about the GMD program has become available to the public through published reviews performed by the Government Accountability Office (GAO) and the DoD Inspector General (IG). The second is based on NASA's efforts to develop a commercial crew transportation system (CCTS) capability intended to transport astronauts to and from the International Space Station (ISS) and other low-earth-orbit destinations.

All information used for these examples was obtained from unclassified and publicly available reports, including government reviews and media reporting. Because of sensitivities concerning proprietary information, both examples are pursued only to the point where information is available to the public.

The GMD and CCTS examples are interesting when considered together because their objectives are analogous but the plans to achieve them differ. Apart from the obvious differences in the mission objectives (one being defense against missiles, the other being space exploration), they share the following competing goals:

- Develop an operational capability quickly

- Make the system safe and reliable

- Keep costs within budget

- Develop partnerships with commercial companies

- Maintain public support

However, in one case (GMD), the plan for achievement of the top program objective emphasizes the first goal (rapid deployment), whereas in the other case (CCTS), the plan emphasizes the second goal (safety and reliability). Taken together, they represent an interesting study of the importance of EROM in helping the decision maker to reach a decision that reflects his or her preference for one goal without neglecting the other goals.

7.2 Example 1: DoD Ground-Based Midcourse Missile Defense in the 2002 Time Frame

7.2.1 Background

The GMD program was initiated in the early 1980s by the Reagan administration under a different name, and is now managed by the Missile Defense Agency (MDA) under DoD. The GMD is a system-of-systems designed to intercept and destroy enemy ballistic missiles during ballistic flight in the exoatmosphere after powered ascent and prior to reentry. The individual systems within the system-of-systems include ground and sea-based radars, battle management command, control, and communication (BMC3) systems, ground-based interceptor (GBI) boost vehicles, and exoatmospheric kill vehicles (EKVs). The main providers of these systems are Raytheon, Northrop Grumman, and Orbital Sciences, and the prime contractor is Boeing Defense, Space & Security. The program is now projected to cost $40 billion by 2017 (Wikipedia 2016), a sharp escalation from its initial cost estimate of $16 billion to $19 billion (Mosher 2000).

In 2002, in an effort to achieve the rapid deployment goals of the George W. Bush administration, the secretary of defense exempted MDA from following the Pentagon's normal rules for acquiring a weapons system (Coyle 2014). The upshot, according to the DoD Office of the Inspector General (IG) (2014a), was that the EKV did not go through the milestone decision review process and product development phase. These activities are normally mandated “to carefully assess a program's readiness to proceed to the next acquisition phase and to make a sound investment decision committing the DoD's financial resources.” For the product development phase, the program is assessed “to ensure that the product design is stable, manufacturing processes are controlled, and the product can perform in the intended operational environment.” As a result of waiving these processes for the GMD system, according to the IG, “the EKV prototype was forced into operational capability” before it was ready. Furthermore, according to the IG, “a combination of cost constraints and failure-driven program restructures has kept the program in a state of change. Schedule and cost priorities drove a culture of ‘use-as-is’ leaving the EKV as a manufacturing challenge.”

Complicating the decision to suspend standard review and verification practices, the program was already subject to a variety of quality management deficiencies. Before and after that decision was announced, concerns had been expressed by the Government Accountability Office (GAO) (2015) about quality management within a number of DoD programs, including the GMD program. These concerns included nonconformances, insufficient systems engineering discipline, insufficient oversight of the prime contractor activities, and relying on subtier suppliers to self-report without effective oversight. These deficiencies, among others cited by GAO, led to the installation of defective parts, ultimately resulting in substantial increases in both schedule and cost.

The decision to proceed to deployment with an unproven EKV was made because of the primacy of Objective 1 at the time the decision was made. The operating assumption was that the system could be deployed in a prototype form and later retrofitted as needed to achieve reliability goals.

7.2.2 Top-Level Objectives, Risk Tolerances, and Risk Parity

For this example, the principal objective of the program is to rapidly achieve a robust, reliable, and cost-effective operating GMD system. That top objective (denoted as Objective 1) may be subdivided into the following three contributing objectives:

- Objective 1.1: Rapidly deploy a robust operational GMD system. In this context, the term robust implies a system that is able to withstand any credible environment to which it may be exposed prior to launch, during launch, and during intercept.

- Objective 1.2: Rapidly achieve a reliable operating GMD system. The term reliable refers to the ability of the system to identify, intercept, and destroy its targets with a high probability of success.

- Objective 1.3: Achieve a cost-effective operating GMD system. The term cost effective refers to the ability to deploy and maintain a robust operational system and achieve consistently high reliability within the established funding limits for the program.

Restating one of the main themes of this book, the framework for EROM calls for factoring risk tolerance into the analysis processes that accompany the development of requirements. To avoid an imbalance among the competing goals that will later be regretted, there must be a process for eliciting the decision maker's risk tolerances in an objective, rational manner and for incorporating these tolerances into the evaluation of the plan. As discussed in Sections 3.1.1, 3.3.2, and 3.5.1, risk tolerance may be accounted for through the development of the following EROM-generated items:

- Risk parity statements

- Risk watch and response boundaries

- Leading indicator watch and response triggers

Risk parity statements are elicited from the decision maker. Each risk parity statement reflects a common level of pain from the decision maker's perspective. Thus, each reflects the decision maker's view of an even trade-off between objectives.

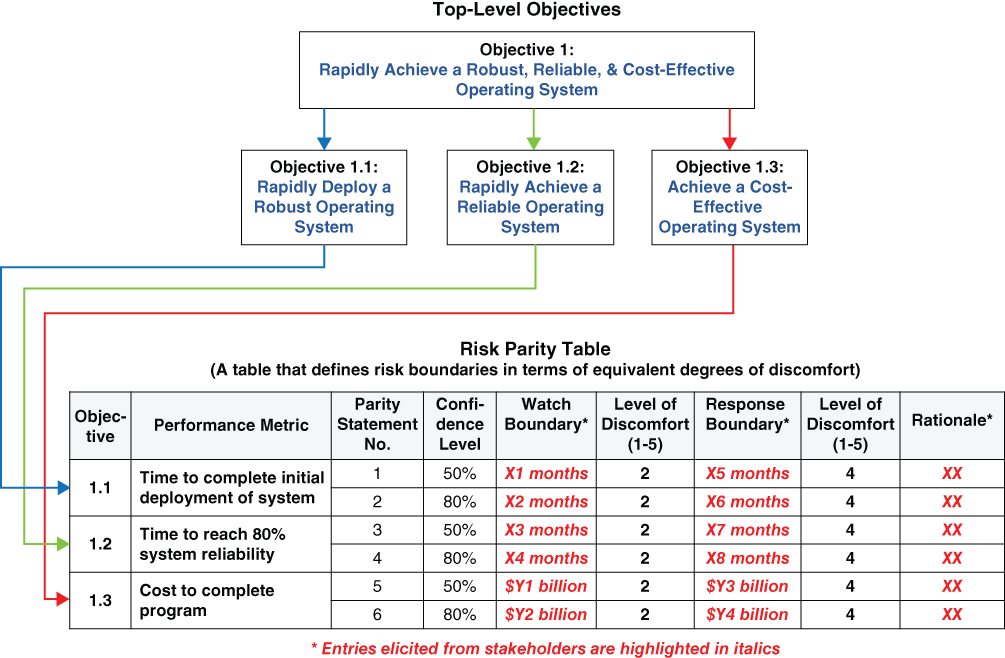

The objectives for this example and a suggested format for a cumulative risk parity table are illustrated in Figure 7.1. The following statements of cumulative risk, taken from the table in Figure 7.1, are parity statements because each of them corresponds to the same level of discomfort (i.e., rank 2):

Figure 7.1 Objectives and hypothetical cumulative risk parity table for GMD example

- Risk Parity Statement 1 (Discomfort Level 2): We are 50 percent confident that it will take no more than X1 months to complete initial deployment of the system.

- Risk Parity Statement 2 (Discomfort Level 2): We are 80 percent confident that it will take no more than X2 months to complete initial deployment of the system.

- Risk Parity Statement 3 (Discomfort Level 2): We are 50 percent confident that it will take no more than X3 months before the system is 80 percent reliable.

- Risk Parity Statement 4 (Discomfort Level 2): We are 80 percent confident that it will take no more than X4 months before the system is 80 percent reliable.

- Risk Parity Statement 5 (Discomfort Level 2): We are 50 percent confident that the total cost to deploy the system and achieve 80 percent reliability will be no more than $Y1 billion.

- Risk Parity Statement 6 (Discomfort Level 2): We are 80 percent confident that the total cost to deploy the system and achieve 80 percent reliability will be no more than $Y2 billion.

Similarly, the following parity statements also evolve from the table in Figure 7.1 because each statement corresponds to a rank 4 level of discomfort:

- Risk Parity Statement 1 (Discomfort Level 4): We are 50 percent confident that it will take no more than X5 months to complete initial deployment of the system.

- Etc.
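A statement of the form "we are 80 percent confident that it will take no more than X2 months" can be read as a bound on the 80th percentile of the outcome distribution. The following is a minimal sketch of that reading, not the book's method: the `ParityStatement` structure, the sample outcomes, and the numeric stand-ins for X1 and X2 are all hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ParityStatement:
    """One entry of a cumulative risk parity table (hypothetical structure)."""
    quantity: str            # what is being bounded, e.g. months to deploy
    confidence_percent: int  # 50 or 80 in the statements above
    limit: float             # stands in for X1, X2, ...; numeric values are assumed
    discomfort_rank: int     # 2 or 4 in the statements above


def empirical_percentile(samples, confidence_percent):
    """Return the smallest sample v such that at least confidence_percent
    of the samples are less than or equal to v."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    index = max(0, math.ceil(confidence_percent * len(ordered) / 100) - 1)
    return ordered[index]


def within_boundary(statement, samples):
    """True if the sampled outcomes satisfy the confidence statement."""
    percentile = empirical_percentile(samples, statement.confidence_percent)
    return percentile <= statement.limit


# Hypothetical simulated outcomes (months to complete initial deployment).
months_to_deploy = [30, 34, 36, 38, 40, 42, 45, 48, 54, 60]

# Hypothetical stand-ins for X1 and X2 at discomfort rank 2.
statement_1 = ParityStatement("months to initial deployment", 50, 36.0, 2)
statement_2 = ParityStatement("months to initial deployment", 80, 50.0, 2)

print(within_boundary(statement_1, months_to_deploy))  # 40 > 36, so False
print(within_boundary(statement_2, months_to_deploy))  # 48 <= 50, so True
```

In this sketch the 50 percent statement is violated while the 80 percent statement is satisfied, which illustrates why a parity table lists more than one confidence level for each objective.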

7.2.3 Risks and Leading Indicators

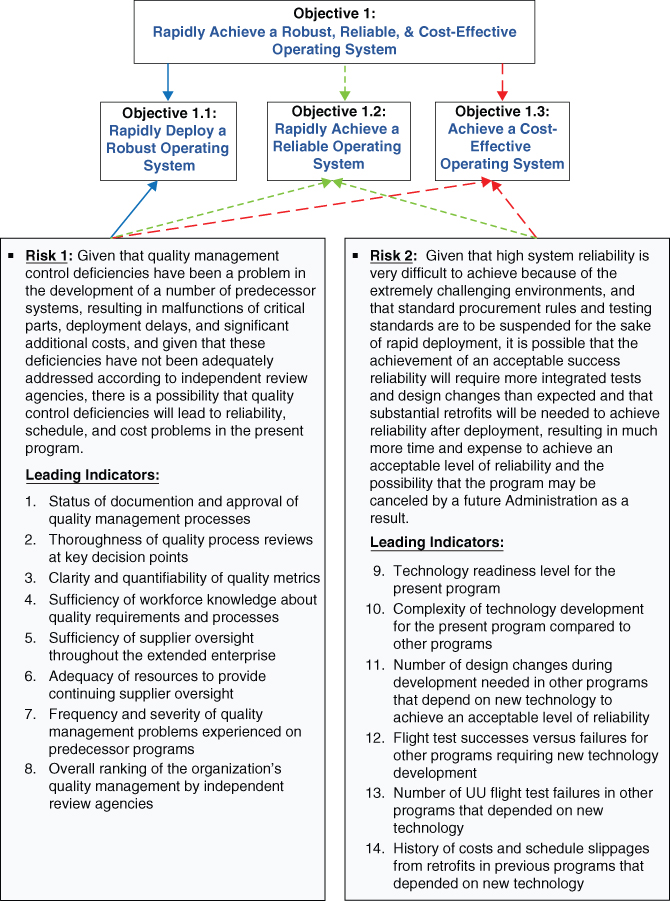

Based on the information provided in Section 7.2.1 for the GMD program, two risk scenarios suggest themselves. The first emanates from quality management control concerns in the 2002 time frame and affects all three objectives: rapid deployment, reliability attainment, and cost effectiveness. The second results from the suspension of standard controls, combined with the challenging nature of kinetic intercept at hypersonic speeds, and affects the latter two objectives.

Example risk scenario statements and corresponding example leading indicators are shown in Figure 7.2. The leading indicators for each risk are representative of the sources of concern cited by GAO and by the DoD IG, as summarized in Section 7.2.1. Those listed for the first risk scenario in Figure 7.2 are based on the following observation (IG 2014b):

Figure 7.2 Risks and leading indicators for GMD example (2002 time frame)

“Quality management system deficiencies identified by GAO and DoD OIG reports include:

- Inconsistent process review at key decision points across programs,

- Quality metrics not consolidated in a manner that helps decision makers identify and evaluate systemic quality problems,

- Insufficient workforce knowledge,

- Inadequate resources to provide sufficient oversight, and

- Ineffective supplier oversight.”

Those listed for the second risk scenario are based on technology readiness level (TRL) and experience from other programs and projects, which can serve as an indicator of potential future problems for the present program. These indicators pertain to the principal factors that have tended to produce reliability, schedule, or cost impacts and UU risks for complex programs.

7.2.4 Leading Indicator Trigger Values

Parity between cumulative risks can be stated in terms of parity between leading indicators. As discussed in Section 3.5, trigger values for leading indicators of risk are developed by the EROM analysts and are used to signal when a risk is reaching a risk tolerance boundary that was established by a decision maker. When there are many leading indicators, as is typically the case, various combinations of the leading indicators are formulated to act as surrogates for the cumulative risks. These combinations were referred to as composite leading indicators in Section 4.6.4.

To simplify this example, we combine the leading indicators in Figure 7.2 into three composite indicators, as follows:

- Composite indicator A, termed “Quality Management Ranking”: A composite of leading indicators 1 through 8 with a ranking scale of 1 to 5.

- Composite indicator B, termed “Technology Readiness Ranking”: A composite of leading indicators 9 through 11 with a ranking scale of 1 to 5.

- Composite indicator C, termed “Previous Success Ranking”: A composite of leading indicators 12 through 14 with a ranking scale of 1 to 5.

It is assumed that as a part of the EROM analysis, formulas have been derived for combining the 14 leading indicators in Figure 7.2 into these three composite indicators, but these formulas are left unstated for purposes of this example.
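Because the combining formulas are left unstated, the following is only a sketch of one plausible form, a weighted average of individual leading indicator rankings on the same 1-to-5 scale. The function name `composite_indicator`, the equal default weights, and the example rankings are assumptions made for illustration and are not taken from the EROM analysis.

```python
def composite_indicator(rankings, weights=None):
    """Combine 1-to-5 leading indicator rankings into one composite ranking.

    Assumed form: a weighted average. The source does not state its formulas.
    """
    if not rankings:
        raise ValueError("at least one ranking is required")
    if any(not 1 <= r <= 5 for r in rankings):
        raise ValueError("rankings must lie on the 1-to-5 scale")
    if weights is None:
        weights = [1.0] * len(rankings)  # equal weighting is an assumption
    if len(weights) != len(rankings) or sum(weights) <= 0:
        raise ValueError("weights must match rankings and sum to a positive value")
    return sum(r * w for r, w in zip(rankings, weights)) / sum(weights)


# Hypothetical rankings for leading indicators 1 through 8 (composite A).
quality_rankings = [2, 2, 3, 1, 2, 2, 2, 2]
print(composite_indicator(quality_rankings))  # 16 / 8 = 2.0
```

A weighted average keeps the composite on the same 1-to-5 scale as its inputs, which makes it directly comparable with trigger values expressed on that scale. Other forms, such as taking the minimum ranking, would be more conservative.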

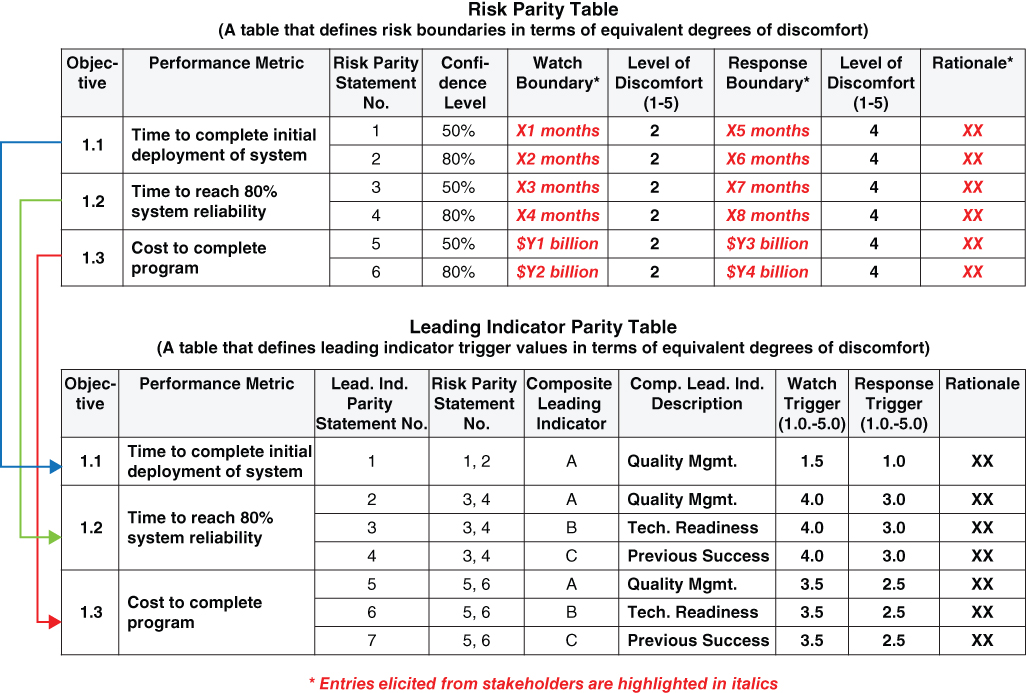

Figure 7.3 illustrates how parity statements for composite leading indicators may substitute for parity statements for cumulative risks. The lower table in the figure leads to the following leading indicator parity statements corresponding to watch triggers (level of discomfort rank 2):

Figure 7.3 Hypothetical composite leading indicator parity table for GMD example

- Leading Indicator Parity Statement 1 (Discomfort Level 2): The watch boundary for the time to complete initial deployment of the system is consistent with a value of 1.5 for the quality management composite indicator.

- Leading Indicator Parity Statement 2 (Discomfort Level 2): The watch boundary for the time to reach 80 percent system reliability is consistent with a value of 4.0 for the quality management composite indicator.

- Leading Indicator Parity Statement 3 (Discomfort Level 2): The watch boundary for the time to reach 80 percent system reliability is consistent with a value of 4.0 for the technology readiness composite indicator.

- Leading Indicator Parity Statement 4 (Discomfort Level 2): The watch boundary for the time to reach 80 percent system reliability is consistent with a value of 4.0 for the previous success composite indicator.

- Leading Indicator Parity Statement 5 (Discomfort Level 2): The watch boundary for the cost to complete the program is consistent with a value of 3.5 for the quality management composite indicator.

- Leading Indicator Parity Statement 6 (Discomfort Level 2): The watch boundary for the cost to complete the program is consistent with a value of 3.5 for the technology readiness composite indicator.

- Leading Indicator Parity Statement 7 (Discomfort Level 2): The watch boundary for the cost to complete the program is consistent with a value of 3.5 for the previous success composite indicator.

Similar leading indicator parity statements evolve for level of discomfort rank 4:

- Leading Indicator Parity Statement 1 (Discomfort Level 4): The response boundary for the time to complete initial deployment of the system is consistent with a value of 1.0 for the quality management composite indicator.

- Etc.

The trigger values in the leading indicator parity table indicate that quality management is less of a concern for the objective of rapidly deploying a robust operating system than for the objectives of rapidly achieving a reliable operating system and of completing the project in a cost-effective manner. Thus, a quality management watch trigger value of 1.5 is posited to be adequate for achieving rapid deployment, whereas watch trigger values of 4.0 for quality management, technology readiness, and previous success are needed to rapidly achieve reliability goals. Furthermore, only slightly lower values (3.5) are needed to keep operating costs down. These entries reflect the fact that the deployment plan calls for bypassing most obstacles that would normally impede early deployment (including quality management provisions such as milestone decision reviews), whereas quality management issues not addressed prior to deployment could create substantial risks after deployment. The above discussion, and any other pertinent observations, would normally be included as rationale in the last column of the table.
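The comparison of a composite indicator against its watch and response triggers can be sketched as a simple classification. The sketch below assumes that higher rankings are better, so that the watch trigger lies above the response trigger, and that a value exactly on a trigger falls in the more favorable band. The function name, the handling of boundary values, and the current value of 2.0 are assumptions for illustration; the three levels of concern follow the green, yellow, and red labels used in this chapter's tables.

```python
def level_of_concern(value, watch, response):
    """Classify a composite indicator value against its trigger values.

    Assumes a higher-is-better scale, so watch must exceed response.
    Returns (score, color, label).
    """
    if watch <= response:
        raise ValueError("watch trigger must be above response trigger")
    if value >= watch:
        return (-1, "Green", "Tolerable")
    if value >= response:
        return (-2, "Yellow", "Marginal")
    return (-3, "Red", "Intolerable")


# Quality management composite with a hypothetical current value of 2.0.
# Rapid deployment: watch trigger 1.5, response trigger 1.0.
print(level_of_concern(2.0, watch=1.5, response=1.0))
# Reliability: watch trigger 4.0, with an assumed response trigger of 3.0.
print(level_of_concern(2.0, watch=4.0, response=3.0))
```

The same indicator value yields different levels of concern for different objectives because the trigger values, and therefore the decision maker's tolerances, differ by objective.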

7.2.5 Example Template Entries and Results

Having developed risk scenario statements for each objective, the associated leading indicators, and the associated parity statements, it is now possible to develop templates similar to those in Sections 4.5 through 4.7 to complete the analysis. For example, Tables 7.1 and 7.2 show, respectively, how the Leading Indicator Evaluation Template and the High-Level Display Template might appear for the GMD example, based on the information provided in the preceding subsections. The results indicate that there was (in 2002) significant risk after deployment owing to the combination of quality management deficiencies, flight test failures for predecessor systems, the complexity of the EKV system, and the probable need for a substantial number of retrofits.

Table 7.1 Leading Indicator Evaluation Template for GMD Example (2002 Time Frame)

| Objective | Comp. Ind. No. | Comp. Ind. Descrip. | Risk, Opp., or Intr. Risk | Scen. No. | Comp. Ind. Watch Value | Rationale or Source | Comp. Ind. Resp. Value | Rationale or Source | Comp. Ind. Current Value | Rationale or Source | Comp. Ind. 1-Yr. Projected Value | Rationale or Source | Comp. Ind. Level of Concern |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1.1 Rapidly deploy a robust oper. system | A | Quality | Risk | 1 | 1.5 | XX | 1.0 | XX | 2.0 | XX | 2.0 | XX | −1 Green Tolerable |
| 1.2 Rapidly achieve a reliable operating system | A | Quality | Risk | 1 | 4.0 | XX | 3.0 | XX | 2.0 | XX | 2.0 | XX | −3 Red Intolerable |
| | B | Readiness | Risk | 2 | 4.0 | XX | 3.0 | XX | 1.5 | XX | 2.0 | XX | −3 Red Intolerable |
| | C | Prev. Succ. | Risk | 2 | 4.0 | XX | 3.0 | XX | 2.0 | XX | 2.0 to 2.5 | XX | −3 Red Intolerable |
| 1.3 Achieve a cost-effective operating system | A | Quality | Risk | 1 | 3.5 | XX | 2.5 | XX | 2.0 | XX | 2.0 | XX | −3 Red Intolerable |
| | B | Readiness | Risk | 2 | 3.5 | XX | 2.5 | XX | 1.5 | XX | 2.0 | XX | −3 Red Intolerable |
| | C | Prev. Succ. | Risk | 2 | 3.5 | XX | 2.5 | XX | 2.0 | XX | 2.0 to 2.5 | XX | −3 Red Intolerable |

Table 7.2 High-Level Display Template for GMD Example (2002 Time Frame)

| Objective Index | Objective Description | Risk to Objective | Drivers | Suggested Responses |
|---|---|---|---|---|
| 1.1 | Rapidly Deploy a Robust Operating System | −1 Green Tolerable | None | None |
| 1.2 | Rapidly Achieve a Reliable Operating System | −3 Red Intolerable | XX | XX |
| 1.3 | Achieve a Cost-Effective Operating System | −3 Red Intolerable | XX | XX |

While hypothetical, the example results are consistent with the decision maker's belief that in addition to early deployment, long-term reliability and cost effectiveness are important objectives. To put it another way, the decision maker's parity statements are the main determinant of the outcome.
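The step from the composite indicator levels of concern in Table 7.1 to the objective-level risks in Table 7.2 can be sketched as a roll-up. The rule used below, in which each objective takes the most severe level among its composite indicators, is an assumption made for illustration; the templates referenced in Sections 4.5 through 4.7 may combine indicators differently. The input scores are those shown in Table 7.1.

```python
LABELS = {-1: "Green Tolerable", -2: "Yellow Marginal", -3: "Red Intolerable"}


def roll_up(indicator_levels):
    """Map each objective to the most severe (lowest) score among its
    composite indicators. Worst-case roll-up is an assumed rule."""
    return {objective: min(levels) for objective, levels in indicator_levels.items()}


# Levels of concern per objective, as listed in Table 7.1.
table_7_1_levels = {
    "1.1": [-1],          # composite A
    "1.2": [-3, -3, -3],  # composites A, B, C
    "1.3": [-3, -3, -3],  # composites A, B, C
}

for objective, score in roll_up(table_7_1_levels).items():
    print(objective, score, LABELS[score])
```

Under this assumed rule, a single red composite indicator is enough to make the risk to an objective red, which is a deliberately conservative choice.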

7.2.6 Implications for Risk Acceptance Decision Making

Results such as those in Table 7.2, based on the decision maker's risk tolerances, would seem to indicate that the aggregate risks for two of the three objectives are intolerable and that the program should probably not proceed as currently formulated. The results also indicate the principal sources (drivers) of the risks and the corrective actions (suggested responses) that would tend to make the intolerable risks more tolerable. The principal risk driver in the case of the GMD example would be the decision to exempt the managing organization from following certain standards and rules, including the verification and validation processes normally followed prior to deployment of a system. Inadequate qualification testing for the integrated system and lack of milestone reviews are two of the principal issues to be addressed by corrective action.

The next step would be to assess the aggregate risks for all three objectives assuming the corrective actions were implemented. If such evaluation indicated that none of the aggregate risks remained red (as, for example, in Table 7.3), a logical decision might be to request an iteration from the prime contractor and reschedule the key decision point to a later date.

Table 7.3 High-Level Display Template for GMD Example after Adopting Corrective Actions That Balance the Risks to the Top-Level Objectives

| Objective Index | Objective Description | Risk to Objective | Drivers | Suggested Responses |
|---|---|---|---|---|
| 1.1 | Rapidly Deploy a Robust Operating System | −2 Yellow Marginal | XX | None |
| 1.2 | Rapidly Achieve a Reliable Operating System | −2 Yellow Marginal | XX | None |
| 1.3 | Achieve a Cost-Effective Operating System | −2 Yellow Marginal | XX | None |

To facilitate the identification of alternative decisions that might succeed better than the current one, the results in Table 7.2 include a list of risk drivers for each objective and a list of suggested responses to better control the drivers. As noted earlier in this section, the principal risk driver in this case would be the decision to exempt the managing organization from following certain standards and rules, and inadequate qualification testing for the integrated system and lack of milestone reviews are two of the principal issues that an alternative decision would need to address.

7.3 Example 2: NASA Commercial Crew Transportation System as of 2015

7.3.1 Background

The objective of the second example is to develop the capability to use a commercially provided space system to transport crew to low-earth orbit, including to the ISS. This objective has faced several challenges over the past two or three years. As stated by the NASA Administrator (Bolden 2013), “Because the funding for the President's plan has been significantly reduced, we now won't be able to support American launches until 2017. Even this delayed availability will be in question if Congress does not fully support the President's fiscal year 2014 request for our Commercial Crew Program, forcing us once again to extend our contract with the Russians.” Clearly, while safety and reliability have always been priorities at NASA, the Administrator was concerned about having to depend on the Russians for transport capability for longer than necessary, while facing budget cuts that could prolong the problem.

Conscious of these concerns, the NASA Commercial Crew Program (CCP) has devised an approach designed to ensure that safety and reliability receive high priority while the potential for schedule slippages and cost overruns is minimized. The approach in effect for this program is referred to as a “Risk-Based Assurance” process utilizing a “Shared Assurance” model (Canfield 2016, Kirkpatrick 2014).

Basically, the role of certifying that identified hazards are adequately controlled is shifted from NASA safety and mission assurance personnel to the cognizant commercial contractor(s). NASA personnel, however, audit and verify the results for hazards that are deemed to pose high or moderate risk. The risk ranking for each hazard is based on a set of criteria that includes design and process complexity, degree of maturation, past performance, and expert judgment.

Before a system can be developed and American launches can occur, it is necessary for there to be a stable set of certification requirements, including engineering standards, required tests, analyses, and protocols for verification and validation. The development and implementation of these requirements entails seven steps:

- Consultation between NASA and the providers leads to a virtual handshake on the requirements to be implemented.

- The set of requirements is reviewed by the NASA technical authorities and by independent review groups, such as the Aerospace Safety Advisory Panel (ASAP).

- The NASA approval authority approves the set of requirements.

- The providers implement the requirements.

- The providers make the case that the requirements have been implemented correctly and successfully.

- Technical authorities and independent review groups review the case.

- NASA approval authority approves the implementation.

It is noteworthy that at its quarterly meeting with NASA on July 23, 2015, ASAP was highly supportive of the efforts of the Commercial Crew Program (CCP) in executing its responsibilities, while also being highly cognizant of the challenges. “This Program has all the challenges inherent in any space program; it is technically hard. In addition, it has the challenge of working under a new and untried business model—engaging with two commercial partners with widely varying corporate and development cultures, each bringing unique advantages and opportunities and each presenting differing aspects to be wrestled with. This challenge is compounded by budget and schedule pressures, appropriation uncertainties, the desire to remove crew transportation to the ISS from dependency on Russian transportation as soon as possible, and the fixed-price contract environment. Given all of these challenges, the Panel sees considerable risk ahead for the CCP. Fortunately, competent and clear-headed professionals (in whom the Panel has great confidence) are dealing with these risks. However, the risks will only increase over time and test the skills at all management levels” (ASAP 2015).

7.3.2 Top-Level Objectives, Risk Tolerances, and Risk Parity

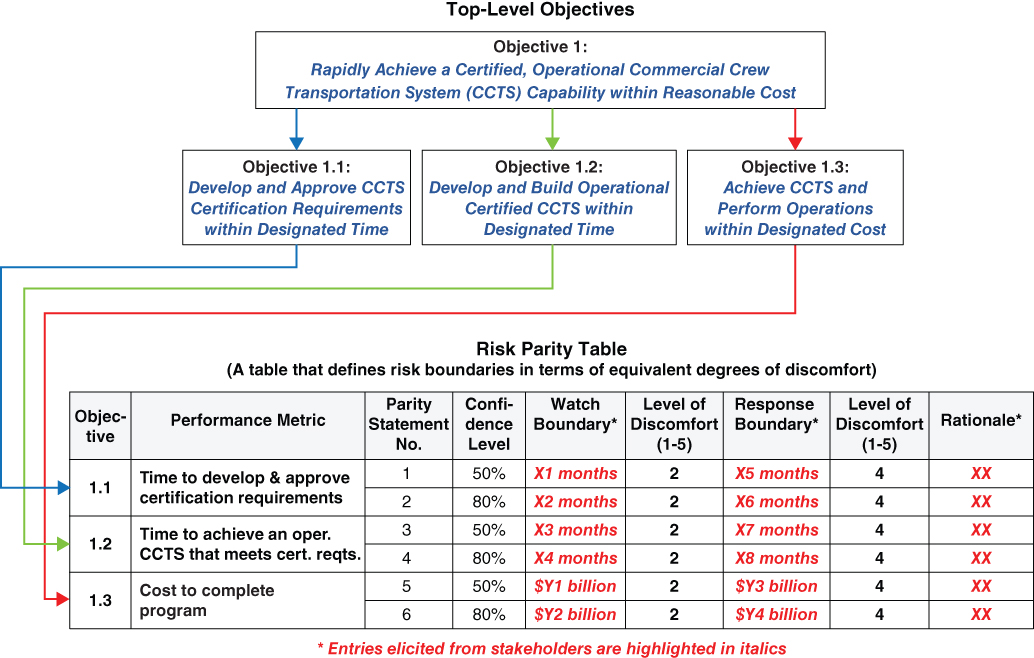

For this example, we consider the principal objective of the program to be the rapid achievement of a certified, operational CCTS capability within reasonable cost. The following three contributing objectives apply:

- Objective 1.1: Develop, review, and approve a set of CCTS certification requirements within a designated near-term time frame (e.g., by 2015 or 2016).

- Objective 1.2: Develop and build an operational certified CCTS within a designated near-term time frame (e.g., by 2017 or 2018).

- Objective 1.3: Achieve a CCTS and perform a designated number of flights within a designated cost (e.g., the amount of funding expected from Congress).

These objectives and a corresponding hypothetical cumulative risk parity table are illustrated in Figure 7.4. The following statements of cumulative risk, taken from the table in Figure 7.4, are parity statements that correspond to a rank 2 level of discomfort:

Figure 7.4 Objectives and hypothetical cumulative risk parity table for CCTS example

- Risk Parity Statement 1 (Discomfort Level 2): We are 50 percent confident that it will take no more than X1 months to develop, review, and approve the certification requirements.

- Risk Parity Statement 2 (Discomfort Level 2): We are 80 percent confident that it will take no more than X2 months to develop, review, and approve the certification requirements.

- Risk Parity Statement 3 (Discomfort Level 2): We are 50 percent confident that it will take no more than X3 months to develop and build an operational certified CCTS.

- Risk Parity Statement 4 (Discomfort Level 2): We are 80 percent confident that it will take no more than X4 months to develop and build an operational certified CCTS.

- Risk Parity Statement 5 (Discomfort Level 2): We are 50 percent confident that the total cost to achieve an operational CCTS and perform 50 crewed flights will be no more than $Y1 billion.

- Risk Parity Statement 6 (Discomfort Level 2): We are 80 percent confident that the total cost to achieve an operational CCTS and perform 50 crewed flights will be no more than $Y2 billion.

Similarly, the following parity statements also evolve from the table in Figure 7.4 because each statement corresponds to a rank 4 level of discomfort:

- Risk Parity Statement 1 (Discomfort Level 4): We are 50 percent confident that it will take no more than X5 months to develop, review, and approve the certification requirements.

- Etc.

The first set of parity statements at rank 2 discomfort constitute watch boundaries for the cumulative risk, and the second set at rank 4 constitute response boundaries.

7.3.3 Remainder of Example 2

As foretold in Section 7.1, Example 2 will not be formally pursued beyond this point because of the proprietary nature of the data and the changing landscape within the program. It may be inferred, however, that the tasks to be pursued to complete Example 2 will be similar to those described in Section 7.2 to complete Example 1.

As a general comment reflecting this author's opinion, while assessing the risks associated with the risk-based assurance process and shared assurance model used in the CCP, close attention will have to be paid to the quality and degree of rigor applied to the communication between those responsible for assuring individual parts of the system. Without open and effective communication among the contractors and between the contractors and NASA, there could be a substantial risk that the assurance process will miss accident scenarios that emanate from interactions between subsystems, or will miss solutions that require a collaborative mindset. Furthermore, independence between the providers and those assuring the product is a best practice that needs to be maintained.

For these reasons, and because the risk-based assurance process and shared assurance model is a new approach to assurance at NASA, the approach itself will need to have a set of implementation controls to ensure that it is effective and efficient.

7.4 Implication for TRIO Enterprises and Government Authorities

Achievement of a balance between the competing objectives of timeliness, safety/reliability, and cost requires an honest appraisal of the decision maker's tolerances in each area. As Tables 7.1 and 7.2 show for the GMD example circa 2002, it is easy for decisions to be made based on what is perceived to be the most pressing objective at the time without considering the longer-term objectives that will become more pressing in the future. The use of EROM guards against this tendency, thereby making today's decisions more inclusive of short-term, mid-term, and long-term needs.

References

- Aerospace Safety Advisory Panel (ASAP). 2015. “2015 Third Quarterly Meeting Report” (July 23). http://oiir.hq.nasa.gov/asap/documents/ASAP_Third_Quarterly_Meeting_2015.pdf

- Bolden, Charles. 2013. “Launching American Astronauts from U.S. Soil.” NASA (April 30). http://blogs.nasa.gov/bolden/2013/04/

- Canfield, Amy. 2016. The Evolution of the NASA Commercial Crew Program Mission Assurance Process. NASA Kennedy Space Center. https://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/20160006484.pdf

- Coyle, Philip. 2014. “Time to Change U.S. Missile Defense Culture,” Nukes of Hazard Blog, Center for Arms Control and Nonproliferation (September 11).

- Government Accountability Office (GAO). 2015. GAO-15-345, Missile Defense, Opportunities Exist to Reduce Acquisition Risk and Improve Reporting on System Capabilities. Washington, DC: Government Accountability Office (May).

- Inspector General (IG). 2014a. DODIG-2014-111, Exoatmospheric Kill Vehicle Quality Assurance and Reliability Assessment—Part A. Alexandria, VA: DoD Office of the Inspector General (September 8). http://www.dodig.mil/pubs/documents/DODIG-2014-111.pdf

- Inspector General (IG). 2014b. DODIG-2015-028, Evaluation of Government Quality Assurance Oversight for DoD Acquisition Programs. Alexandria, VA: DoD Office of the Inspector General (November 3). http://www.dodig.mil/pubs/documents/DODIG-2015-028.pdf

- Kirkpatrick, Paul. 2014. The NASA Commercial Crew Program (CCP) Shared Assurance Model for Safety. https://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/20140017447.pdf

- Mosher, David. 2000. “Understanding the Extraordinary Cost of Missile Defense,” Arms Control Today, December 2000. Also website http://www.rand.org/natsec_are/products/missiledefense.html

- Wikipedia. 2016. “Ground-Based Midcourse Defense.” Wikipedia (July 10).