Chapter 2

The Birth of the Service Availability Forum

This chapter examines the benefits of adopting open standards for service availability in carrier-grade systems in general, and the Service Availability Forum specifications in particular. It presents the rationale behind the development of the Service Availability Forum from both the technical and the business perspectives. It describes the scope of the Service Availability Forum middleware and the approach taken, together with its justification, and explains how it enables a service availability ecosystem for the telecommunications industry.

2.1 Introduction

On 4 December 2001, a group of leading communications and computing companies announced an industry-wide coalition to create and promote an open standard for service availability (SA) [22, 23]. The announcing companies included the traditional network equipment providers, IT companies, hardware suppliers, and software vendors. A series of questions ensued. What was going on? Why were these companies working together? Why did they need an open standard for SA? What were they going to standardize?

These were the typical questions one heard after the SA Forum launch events. To answer them, we have to step back and look at what was happening in the late 1990s and early 2000s. Rapid technological advances in the communications sector, the incorporation of mobility into traditional services, and the greatly increased use of the Internet had all contributed to the convergence of the information and communications industries. As a result, the overall business landscape had changed, and companies had to change the way they developed new products and services accordingly.

This chapter first examines the technology developments of that period in the information and communications technology industry. It then discusses the resulting changes from the business perspective. We then delve into the rationale behind the formation of the SA Forum and the benefits it intended to provide to the users of its standards. Finally, we describe the scope of the SA Forum middleware and the approach taken, together with its justification, and explain how it enables a SA ecosystem for the telecommunications industry.

2.2 Technology Environment

In the first chapter, we introduced the concept, principles, and fundamental steps for achieving SA. We also highlighted a few reported cases of service outage and their consequences. The described unavailability of mobile communications services perhaps had the greatest impact in terms of the number of people directly affected. It is therefore not surprising that a very high level of SA has long been a well-known characteristic expected of a communications service, be it a traditional landline or a more contemporary mobile communications system. Indeed, the term carrier-grade has long been used to refer to a class of systems used in public telecommunications networks that deliver up to five nines or six nines (99.999% or 99.9999%) availability. The term derives from the fact that a telecommunications company that provides communications transmission services to the public for hire is known as a carrier [24]. The equipment used to provide these highly available services has traditionally been dubbed ‘carrier-grade.’ This reinforces our association of communications services with a high level of availability.
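To make the five-nines and six-nines figures concrete, the downtime D permitted per year by an availability A is simply (1 − A) multiplied by the length of a year T. The worked arithmetic below assumes a year of 365.25 days and says nothing about how that downtime is split across individual outages.

$$
D = (1 - A)\,T, \qquad T = 365.25 \times 24 \times 60 = 525\,960 \text{ min}
$$

$$
A = 0.99999:\quad D = 10^{-5} \times 525\,960 \approx 5.26 \text{ min per year}
$$

$$
A = 0.999999:\quad D = 10^{-6} \times 525\,960 \approx 0.53 \text{ min} \approx 31.6 \text{ s per year}
$$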

The public telephone service was introduced in the late nineteenth century as a voice-grade service for homes and offices. For a long time afterwards, very little changed in terms of service features, and expectations of new telephony services were not very high either. During this period, development focused on the quality of the service, such as the intelligibility of transmitted voice, and on reliability. Decades later, digital electronics found their way into telephony services and systems, and from the 1960s onwards digital networks progressively replaced the analog ones. The benefits were lower costs, higher capacity, and greater flexibility in introducing new services.

Communications technology then branched along the path of mobility: the first commercial analog mobile phones and networks were brought out in 1983, and their digital counterparts appeared as commercial offerings in 1991. In 2001, the first 3G (3rd Generation) networks were launched in Japan. 3G was all about communicating via a combination of text, voice, images, and video while the user was on the move. In a mere two decades, we saw a tremendous pace of advancement in communications technologies as well as exponential growth in the telecommunications market.

In parallel with the development of 3G, another trend of the time was the greatly increased use of the Internet (6 August 1991 is considered to be the public debut of the World Wide Web [25]). This trend turned out to be one of the biggest technological drivers of the paradigm shift in the information and communications industry. If we look at the Internet today, it is clear that it has become the convergence layer for different technologies, regardless of whether the information is data or voice, passive or interactive, stored or real-time. During these changes, information technology had also found its way into the deeper parts of communications systems, such as the infrastructure. The influence of this trend on communications networks was evident in the emergence of all-Internet Protocol (IP) core networks for mobile communications and services at the time. This transition from implementing and offering communications services with closed, proprietary technologies to the new era had a substantial effect on the way these services had to be developed.

From a user's perspective, however, all these new and exciting technological developments translated primarily into new features. The expectation of a high level of SA remained unchanged, because it had been there from the beginning, certainly for the majority of users who had long enjoyed highly available communications services.

2.3 Business Environment

While the pace of technological development in the information and communications industries over a short period of two decades was remarkable, it also brought significant changes to the environment in which business was conducted. On the one hand, the technological advances had considerably broadened the scope of, and increased the business opportunities for, a company. On the other hand, a company had to change and adapt to this new environment. In the following subsections, we speculate about the reasons why these seemingly disparate companies decided to work together, and why they needed an open standard for SA.

2.3.1 Ecosystem

An important aspect of the changes in the industry was that transferring bits over communications lines was no longer very profitable. New services with clear customer value, based on the innovations of this era of convergence, were needed. Due to increased competition from previously separate industry segments, most communications companies felt an urgent need to roll out these new services as soon as possible. This was easier said than done, as the sheer scale and complexity of the expected products and services were daunting, to say the least. Many companies started to look for new ways of developing these products and services, with the clear objective of increasing productivity by reducing development effort and costs. These approaches included model-driven architecture with automatic code generation, and the reuse of software assets. The former aims to produce code as fast as possible at the push of a button, while the latter avoids duplicated effort by using the same code over and over again.

Assets for reuse can be either developed in-house or bought from outside. Such ready-made components were generally referred to as Commercial Off-The-Shelf (COTS) components. They were used as building blocks and incorporated into products or services. A COTS component may come in many different forms. The broad notion of a COTS component is that it is available to the general public and can be obtained in a variety of ways, including buying, leasing, or licensing. Under this definition, open-source software is also considered COTS. In some cases, open-source implementations have become so widely accepted that they are even considered de facto standards.

At about this time, the communications industry was going through a transition from building everything itself in a proprietary way to adopting solutions from the information technology world. Examples included the use of COTS components for hardware, such as processors and boards, and for system software, such as operating systems and protocol stacks. One key question was which items should be commoditized from a business perspective. Since SA was a function common to most communications products and services, supporting it in middleware was deemed appropriate in this new hybrid architecture.

As a side note, middleware was originally developed to help application developers deal with the problems of diverse hardware and the complexity of distribution in a distributed application [26]. It sits between an application and its underlying platform, hence the name middleware. One such example is the Object Management Group's Common Object Request Broker Architecture (CORBA) [27], a standard that enables software components written in different programming languages and running on different computers to interoperate.

The term platform is used here to refer collectively to the operating system, processor, and associated low-level services on which an application runs. By providing a set of common functions to an application whose instances reside on different platforms, middleware relieves application developers of the burden of handling the interactions among these instances across a network. Early use of middleware in database systems and transaction monitors had proved effective. It was generally believed that incorporating SA support functions into middleware in a similar fashion would yield comparable benefits.

Many communications companies had gone down this path and started to develop their own SA middleware for internal reuse. Some had even gone further by releasing them as products, for example, Ericsson's Telecom Server Platform [28]. Other IT and software vendors had also worked with their partners in the communications sector to develop similar products, for example, Sun's Netra Carrier-Grade Servers [29] and Go Ahead's Self Reliant [30]. There were many more at the time. Some of these companies – and, in some cases, products – are no longer on the market.

While these solutions improved development efficiency within each company, they did not offer a common interface to external application developers. The same applications therefore still could not be offered across platforms without laborious porting, adaptation, and integration work. At the same time, the number of applications implemented on these systems and offering the same functionality was growing steadily, yet still lagged behind the (anticipated) demand for new common services. This was an early indication of an ecosystem in which new cooperation among different companies could benefit all parties involved. It explains why such a diverse range of companies as network equipment providers, IT companies, hardware suppliers, and software vendors were interested in working together, as pointed out at the beginning of this chapter.

2.3.2 COTS and Open Systems

These trends illustrate the point argued in [13] that COTS and open systems are related but distinct concepts. An open system is characterized as follows: its interfaces are defined to satisfy stated needs; group consensus is used to develop and maintain the interface specifications; and the specifications are available to the public. The last two criteria essentially make the interface specifications open standards. An implementation of such a standard specification can therefore be made available as a COTS component, although an open system does not require this. While the different SA middleware solutions could be considered COTS components, the systems they were part of remained closed.

Opening up what had been closed, proprietary SA support solutions had the potential to create an environment in which new cooperation among different companies would benefit all parties involved. However, this had to be a collective effort in the tradition of telecommunications and data communications standardization.

The reasons why the companies joined forces were far from obvious, and there was much speculation. My co-editor has suggested that the IT bubble [31] and its burst may also have been a contributing factor. In her view, the World Wide Web brought about the IT bubble, during which the trends unfolded as we have described them in this chapter. Initially, when companies had money for development, each did it on its own, hiring many people to keep up with demand and competition. Being different was considered a benefit, as it locked in customers for the foreseeable future.

Then, when the bubble burst in 2000, profits fell. Existing systems built up during the bubble were still working fine and did not need to be replaced. The main opportunity to increase profit was to satisfy customers' still-growing appetite for new services and applications. Eventually, companies started to look inward and reorganize their processes to save money, while at the same time trying to meet demand so that they could keep or even grow their market share. Standardization was one way of accomplishing this goal.

Her conclusion was that the burst of the IT bubble was a contributing factor, if not the actual trigger, for the companies' collaboration.

Whatever the reasons that led the companies to join forces and work within a standardization body, adopting a standards-based approach is considered a sound risk management strategy. In addition, it ensures the compatibility of products delivered by different vendors. A key role of standards is to divide a large system into smaller pieces with well-defined boundaries. As a result, an ecosystem is created in which different suppliers contribute different parts. The standardization process ensures that stakeholders are involved in developing and agreeing on the outcome, so that conforming products are compatible and interoperable across interface boundaries. This is particularly important for businesses that involve many vendors: the only way to ensure that the system as a whole works is to have standardized interfaces.

Standard COTS components have the added advantage of a wider choice of vendors, so one can normally pick the best solution available. Standardizing the design of commonly used high availability (HA) components and techniques opens up a competitive environment for improving the features, lowering the costs, and increasing the performance of HA components. Application developers can concentrate on their core competence during application development, leaving SA support to the middleware. As a result, a wider variety of application components can be developed simultaneously in a shorter time, addressing exactly the trend in demand we were observing. Being a standard also enables the portability of highly available applications across hardware, operating systems, and middleware. This, to a certain extent, answers the question ‘Why did they need an open standard for SA?’

2.4 The Service Availability Forum Era

The predecessor of the SA Forum was an industry group called the HA Forum. Its goal was to standardize the interfaces and capabilities of the building blocks of HA systems. However, it only went as far as publishing a document [14] that described best-known methods and provided a guide to a common vocabulary for HA systems. The SA Forum subsequently took over this initiative and developed the standards for SA.

The focus of the SA Forum is to build the foundation for on-demand, uninterrupted landline and mobile network services. A further goal is to produce a solution that is independent of the underlying implementation technology. This has been achieved by first identifying a set of building blocks in the context of the application domain, then defining their interfaces, and finally obtaining a majority consensus among the member companies.

So, ‘What is in the SA Forum middleware?’ The short answer is the essential, common functions that must be placed in the middleware to support applications in providing highly available services. These were identified by extracting the common SA functions that were applicable not only to telecommunications systems but also to upcoming technologies and application areas. The basis of the standardization was drawn from the experience the member companies brought into the Forum. It is important to note that they contributed their technical know-how [28–30] to the specifications.

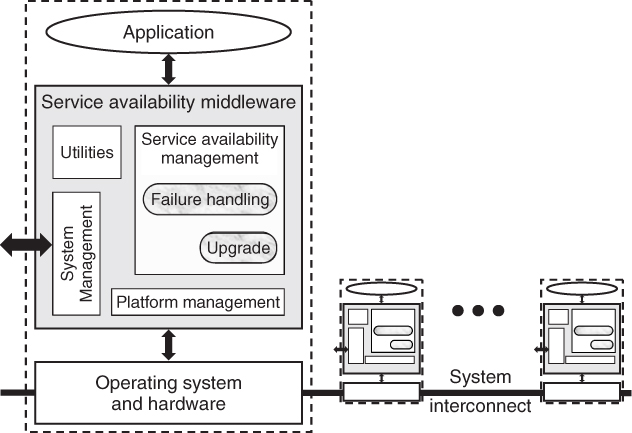

A high-level view of such a SA middleware is shown in Figure 2.1, illustrating an overall architecture and areas of functions. The diagram also shows the relationship between a SA middleware with its application, platform, and cooperating nodes over a system interconnect. Each of these nodes is connected, forming a group to deliver services in a distributed system environment. According to the functions required to support SA, the identified functions are put into four functional groups. They are platform management, SA management, common utilities for developing distributed applications, and system management.

Figure 2.1 High-level view of a service availability middleware.

Although the functions have been partitioned into different groups, ultimately all of them must work together to deliver SA support to applications. We now go through each functional group, describing what it does, why it is needed, and what kinds of functions are expected.

- Platform management

This group of functions manages the underlying platform, which consists of the operating system, hardware, and associated low-level services. If any of these underlying resources is not operating correctly, the problem must be detected and handled accordingly. Since we are in a distributed environment in which separate instances of the middleware must cooperate, the middleware needs to know, at any point in time, which nodes are healthy and operating and which are not. This group membership information is distributed and dynamic in nature, and must be maintained reliably. The expected functions in this group include the monitoring, control, configuration, and reconfiguration of platform resources, and a reliable facility for maintaining membership information for a group of nodes over a system interconnect.

- SA management

This group of functions is essentially the core of SA support for applications. As discussed in Chapter 1, both unplanned and planned events may impact the level of SA. The functions are therefore further split into two subgroups, dealing with failure handling and upgrades, respectively. The expected functions for failure handling include support for error detection, system recovery, and redundancy models. For upgrades, the expected functions include a flexible way to apply upgrades with minimal or no service outage, and the monitoring, control, and error recovery of upgrades. Although there are two subgroups in this area, their functions must collaborate closely because protective redundancy is used in both.

- Common utilities for developing distributed applications

One of the original goals of middleware was to hide the complexity caused by the required interactions among distributed instances of an application. As the name suggests, this group provides applications with distributed programming support that conceals the complexities of a distributed system. Most of the frequently used functions supporting distribution transparency are therefore expected in this group. Examples include naming services, which provide a system-wide registry and look-up of resources; communication and synchronization support, such as message passing, publish/subscribe, and checkpointing; and the coordination of distributed access to resources.

- System management

This group of functions primarily deals with the external systems or applications that manage a deployed system as a whole. Before any management operations can be performed, management information about the system must be available. There is also a need to keep track of configuration data for the initial set-up and of runtime information about resources while the system is operating, and to provide functions for manipulating this information. Other expected functions in this group include notification of significant events arising in the system, and logging of important events for later analysis.
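The membership facility listed under platform management can be illustrated with a minimal, hypothetical sketch. It assumes that each node periodically sends heartbeats and that a node counts as a member only while its most recent heartbeat is no older than a timeout. The names `MembershipView`, `heartbeat`, and `refresh` are invented for illustration and are not taken from any SA Forum specification. A real membership service must also make all nodes agree on the same view, which this single-process sketch does not attempt.

```python
from dataclasses import dataclass, field


@dataclass
class MembershipView:
    """Hypothetical heartbeat-based membership tracker (illustration only).

    A node counts as a member while its most recent heartbeat is no older
    than `timeout`. This is a purely local view: it does not reach agreement
    with other nodes, which a real membership service must do.
    """

    timeout: float  # seconds without a heartbeat before a node is dropped
    last_seen: dict = field(default_factory=dict)  # node name -> last heartbeat time
    members: frozenset = frozenset()  # current set of healthy nodes
    view_number: int = 0  # incremented each time the member set changes

    def heartbeat(self, node: str, now: float) -> None:
        """Record that `node` was heard from at time `now`."""
        self.last_seen[node] = now

    def refresh(self, now: float) -> frozenset:
        """Recompute the member set at time `now`, bumping the view on change."""
        alive = frozenset(
            node for node, seen in self.last_seen.items() if now - seen <= self.timeout
        )
        if alive != self.members:
            self.members = alive
            self.view_number += 1
        return self.members


if __name__ == "__main__":
    view = MembershipView(timeout=3.0)
    view.heartbeat("node-a", now=0.0)
    view.heartbeat("node-b", now=0.0)
    print(sorted(view.refresh(now=1.0)), view.view_number)  # ['node-a', 'node-b'] 1
    view.heartbeat("node-a", now=2.0)
    print(sorted(view.refresh(now=4.0)), view.view_number)  # ['node-a'] 2
```

Passing the current time in explicitly keeps the sketch deterministic. The view number gives consumers a cheap way to notice that membership has changed without comparing whole member sets.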

A thorough discussion of the architecture of such a SA middleware and its functions is given in Part Two.

2.5 Concluding Remarks

We have described the technology and business environments of the information and communications industries in the late 1990s and early 2000s, a period of tremendous advancement in communications technologies. Against this backdrop, we explained how the business environment had changed, and how the companies had reacted by joining forces and cooperating to build a viable ecosystem. We distinguished between COTS and open systems, and explained the benefits of having an open standard for SA middleware. We have also given a high-level view of a SA Forum system and described the intended functions of the middleware.

There you have it: the answers to the questions we raised about the announcement at the beginning of the chapter. These were the circumstances and motivations behind the founding of the SA Forum in 2001. The SA Forum was born!

In the years following 2001, the SA Forum, backed by a pool of experienced and talented representatives from its member companies and driven by the desire to realize the Forum's vision, worked diligently to develop the necessary specifications. There have also been separate open-source implementations of what was written in the specifications, so that the result is more than just a pile of papers describing interfaces.

In the next part of the book we will be looking at the results of these developments. We will run through the reasoning and design decisions behind the development of these specifications. We will also give hints on the best way to use the various SA Forum services and frameworks, and the pitfalls to avoid where appropriate.