CHAPTER 6

Voxelization

In this chapter, we’ll discuss voxelization and develop a people tracking system. We’ll cover the following:

- What a voxel is

- Why you would want to voxelize your data

- How to make, manipulate, and use voxels

- Why people are rectangles

What Is a Voxel?

A voxel is the three-dimensional equivalent of a pixel: a box in space that has a volume, rather than a point. Imagine taking an object and decomposing it into cubes, all of the same size. Or, if you’d prefer, imagine building the object out of LEGO bricks or in Minecraft, much like a cubist painting. See Figure 6-1 for an example of a voxelized scene.

Why Voxelize Data?

There are two main reasons to voxelize a dataset. First, it reduces the size of the dataset, often by half or more, depending on the voxel size and the density of the points. That is essential if you’re going to be doing a large amount of work on your data in real time, and because many of the algorithms involved scale worse than linearly with the number of points, the size reduction can produce an outsized gain in performance. Instead of simply destroying data, as decimation does, voxelization replaces the points in each voxel with their average, preserving as much information as possible while shrinking the state space. For example, a scene that’s 3 meters by 3 meters by 3 meters with 500,000 evenly distributed points holds 60 × 60 × 60 = 216,000 voxels at a 5-centimeter voxel size, so voxelizing it removes more than half of the points (216,000 vs. 500,000).
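The arithmetic in the 3-meter example can be checked with a few lines of C++. This is a minimal sketch that assumes a cubic scene and points dense enough that every voxel is occupied; the function names are hypothetical. Working in integer millimeters (the Kinect’s unit) avoids floating-point rounding in the division.

```cpp
#include <cassert>
#include <cstdint>

// Number of voxels needed to tile a cube of side extentMm with voxels of side
// leafMm (both in millimeters). A partial voxel at the edge counts as a whole one.
std::int64_t voxelsPerCube(std::int64_t extentMm, std::int64_t leafMm) {
    assert(extentMm > 0 && leafMm > 0);
    const std::int64_t perAxis = (extentMm + leafMm - 1) / leafMm;  // ceiling division
    return perAxis * perAxis * perAxis;
}

// Fraction of points removed when numPoints collapse into numVoxels occupied
// voxels, each voxel contributing a single centroid point.
double reductionFraction(std::int64_t numPoints, std::int64_t numVoxels) {
    assert(numPoints > 0);
    if (numVoxels >= numPoints) return 0.0;  // cannot have more outputs than inputs
    return 1.0 - static_cast<double>(numVoxels) / static_cast<double>(numPoints);
}
```

With these helpers, `voxelsPerCube(3000, 50)` gives the 216,000 voxels from the text, and `reductionFraction(500000, 216000)` gives about 0.57. If parts of the scene are empty, fewer voxels are occupied and the reduction is larger.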

Second, voxelizing datasets makes them very easy to combine. This may not seem like a large benefit, but consider the multisensor fusion problem. Instead of relying on complex algorithms to fuse multiple point clouds, you simply address each voxel of interest and calculate the probability that it is occupied, given the readings from all of the sensors.
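One common way to compute that probability, shown here as an illustration rather than as the method used in this chapter, is to accumulate log-odds evidence per voxel: every sensor that returns a point inside a voxel adds evidence for occupancy, and every sensor whose ray passes through the voxel adds evidence against it. The sketch assumes that all sensors’ points are already transformed into one shared world frame in millimeters, that the caller does the ray traversal needed to report misses, and that the sensor model is a simple hit/miss probability pair; `VoxelKey`, `toKey`, and `FusedGrid` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <map>
#include <tuple>

// Integer voxel coordinates; all points inside the same voxel share a key.
struct VoxelKey {
    long x, y, z;
    bool operator<(const VoxelKey& o) const {
        return std::tie(x, y, z) < std::tie(o.x, o.y, o.z);
    }
};

// Map a world-frame point (millimeters) to its voxel key for a given leaf size.
VoxelKey toKey(double x, double y, double z, double leafMm) {
    assert(leafMm > 0.0);
    return VoxelKey{static_cast<long>(std::floor(x / leafMm)),
                    static_cast<long>(std::floor(y / leafMm)),
                    static_cast<long>(std::floor(z / leafMm))};
}

// Accumulates occupancy evidence from any number of sensors in log-odds form.
class FusedGrid {
public:
    FusedGrid(double leafMm, double pHit, double pMiss)
        : leaf_(leafMm), hit_(logOdds(pHit)), miss_(logOdds(pMiss)) {}

    // A sensor returned a point inside the voxel containing (x, y, z).
    void addHit(double x, double y, double z) { evidence_[toKey(x, y, z, leaf_)] += hit_; }

    // A sensor's ray passed through the voxel containing (x, y, z) without a return.
    void addMiss(double x, double y, double z) { evidence_[toKey(x, y, z, leaf_)] += miss_; }

    // Probability that the voxel containing (x, y, z) is occupied; 0.5 if never observed.
    double occupancy(double x, double y, double z) const {
        auto it = evidence_.find(toKey(x, y, z, leaf_));
        const double l = (it == evidence_.end()) ? 0.0 : it->second;
        return 1.0 / (1.0 + std::exp(-l));
    }

private:
    static double logOdds(double p) { return std::log(p / (1.0 - p)); }
    double leaf_, hit_, miss_;
    std::map<VoxelKey, double> evidence_;
};
```

Because evidence is simply added per voxel, it does not matter which sensor reported it or in what order the clouds arrive; that independence from the sensors’ original geometry is what makes voxels convenient for fusion.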

Voxels make your datasets easier to work with, but what’s the next step? There are many possibilities for voxelized data; we’re going to cover background subtraction, people tracking, and collision detection. Background subtraction removes a prerecorded background from a scene, isolating the new data. People tracking uses that new data (the foreground) to track people and people-shaped groups of voxels.

Voxelizing Data

Voxelizing in PCL is simple: the voxelizer is a filter that takes a point cloud as its input and outputs a voxelized point cloud, in which each occupied voxel is represented by the centroid of the points inside it. See Listing 6-1 for an example.
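To make the centroid idea concrete, here is a minimal, dependency-free sketch of what a voxel-grid filter computes: it buckets points by integer voxel coordinates and replaces each bucket with the mean of its points. It illustrates the technique rather than reproducing PCL’s implementation; `Point3` and `voxelGridCentroids` are hypothetical names, and only XYZ (not color) is averaged.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <map>
#include <vector>

struct Point3 { double x, y, z; };

// Downsample cloud so that each occupied voxel of side leaf is represented
// by the centroid (mean) of the points that fall inside it.
std::vector<Point3> voxelGridCentroids(const std::vector<Point3>& cloud, double leaf) {
    assert(leaf > 0.0);
    struct Accum { double sx = 0, sy = 0, sz = 0; int n = 0; };
    std::map<std::array<long, 3>, Accum> voxels;  // keyed by integer voxel index

    for (const Point3& p : cloud) {
        std::array<long, 3> key = {static_cast<long>(std::floor(p.x / leaf)),
                                   static_cast<long>(std::floor(p.y / leaf)),
                                   static_cast<long>(std::floor(p.z / leaf))};
        Accum& a = voxels[key];
        a.sx += p.x; a.sy += p.y; a.sz += p.z; ++a.n;
    }

    std::vector<Point3> out;
    out.reserve(voxels.size());
    for (const auto& kv : voxels) {
        const Accum& a = kv.second;
        out.push_back({a.sx / a.n, a.sy / a.n, a.sz / a.n});
    }
    return out;  // one point per occupied voxel, in voxel-key order (unorganized)
}
```

Note that the output order follows the voxel keys, not the original pixel layout, which is exactly the loss of organization discussed after Listing 6-1.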

Listing 6-1. Voxelizing Data

#include <iostream>

#include <pcl/point_types.h>

#include <pcl/common/io.h>

#include <pcl/filters/voxel_grid.h>

//Voxelization (point_cloud_ptr holds the input cloud)

pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud_filtered (new pcl::PointCloud<pcl::PointXYZRGB>);

pcl::VoxelGrid<pcl::PointXYZRGB> vox;

vox.setInputCloud (point_cloud_ptr);

vox.setLeafSize (5.0f, 5.0f, 5.0f);

vox.filter (*cloud_filtered);

std::cerr << "PointCloud before filtering: " << point_cloud_ptr->width * point_cloud_ptr->height << " data points (" << pcl::getFieldsList (*point_cloud_ptr) << ")." << std::endl;

std::cerr << "PointCloud after filtering: " << cloud_filtered->width * cloud_filtered->height << " data points (" << pcl::getFieldsList (*cloud_filtered) << ")." << std::endl;

Let’s break down the preceding listing:

pcl::VoxelGrid<pcl::PointXYZRGB> vox;

vox.setInputCloud (point_cloud_ptr);

The preceding two commands declare the filter (VoxelGrid) and set the input to the filter to be point_cloud_ptr.

vox.setLeafSize (5.0f, 5.0f, 5.0f);

The preceding command is the key part of the declaration: it sets the voxel size, that is, the size of the window over which each centroid is calculated. Because our measurements from the Kinect are in millimeters, this corresponds to a voxel that is 5 mm on each side.

While this calculation seems quite easy, there’s a problem: voxelizing in this manner disorders a point cloud. Instead of an organized cloud that’s 640 points wide by 480 high, in which every point sits at a known pixel position and is easy to access and manipulate, you get an unorganized cloud: a single row of points with no link back to the image grid. This makes accessing a particular point, or even getting the proper set of indices for a group of points, difficult.
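In an organized 640 × 480 cloud, a point’s position in the vector encodes its pixel, so finding a point or its neighbors is a single multiplication away. The hypothetical helpers below show that row-major mapping, which matches how the bridge code in Listing 6-4 fills `cloud->points[i]` (rows outer, columns inner). Once a filter returns an unorganized cloud, this arithmetic no longer applies.

```cpp
#include <cassert>
#include <cstddef>

// Dimensions of the Kinect depth image used throughout this chapter.
constexpr std::size_t kWidth = 640;
constexpr std::size_t kHeight = 480;

// Row-major index of pixel (u, v) in an organized cloud made of rows of kWidth points.
std::size_t organizedIndex(std::size_t u, std::size_t v) {
    assert(u < kWidth && v < kHeight);
    return v * kWidth + u;
}

// Recover the pixel coordinates (u, v) from an organized-cloud index.
void pixelFromIndex(std::size_t i, std::size_t& u, std::size_t& v) {
    assert(i < kWidth * kHeight);
    u = i % kWidth;
    v = i / kWidth;
}
```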

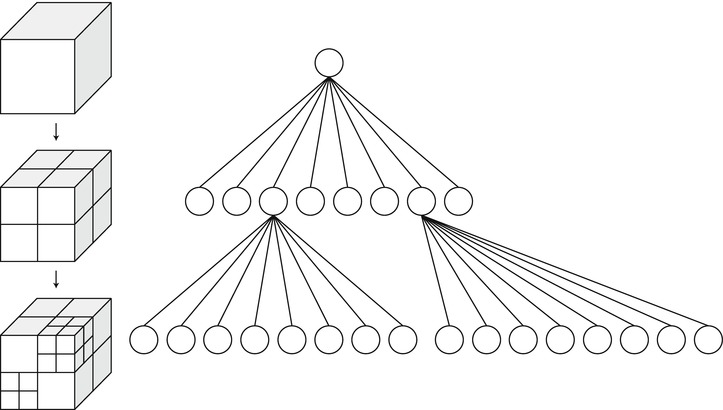

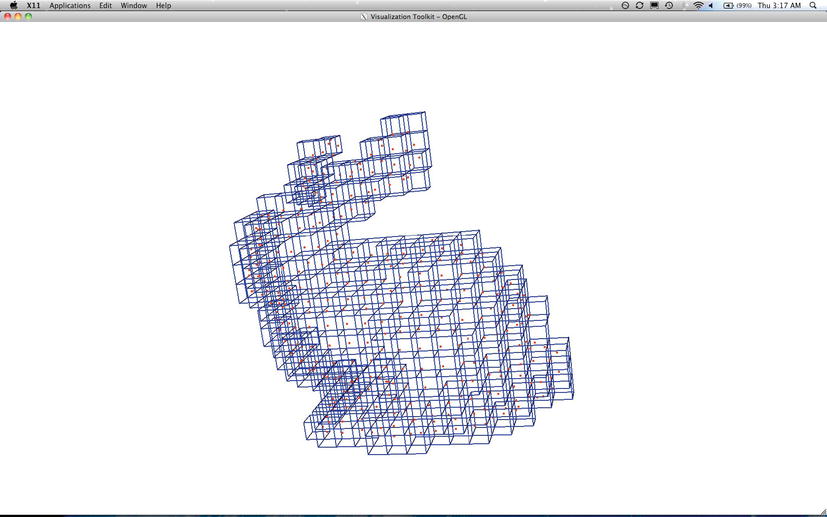

A better method is to use octrees. An octree recursively divides space into eight octants and organizes the result as a tree, as shown in Figure 6-2. Only the leaves hold data, and you can access them easily. There are also several very fast functions for calculating useful metrics over an octree; nearest neighbor searches, for example, are very fast. See Figures 6-3 and 6-4 for two examples of objects divided by an octree.
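The recursive subdivision is easy to see in code. The sketch below assumes a cubic root cell with a known minimum corner and side length (hypothetical parameters, not PCL’s API) and computes which of the eight children a point falls into at each level. The resulting sequence of child indices is the path from the root to the leaf voxel that stores the point.

```cpp
#include <cassert>
#include <vector>

// Returns the child index (0-7) chosen at each level when descending an octree
// whose root cube has its minimum corner at (minX, minY, minZ) and side rootSide.
// Bit 0 = upper half in x, bit 1 = upper half in y, bit 2 = upper half in z.
std::vector<int> octantPath(double x, double y, double z,
                            double minX, double minY, double minZ,
                            double rootSide, int depth) {
    assert(rootSide > 0.0 && depth >= 0);
    std::vector<int> path;
    double side = rootSide;
    for (int level = 0; level < depth; ++level) {
        side /= 2.0;  // each child cube has half the parent's side length
        int child = 0;
        if (x >= minX + side) { child |= 1; minX += side; }
        if (y >= minY + side) { child |= 2; minY += side; }
        if (z >= minZ + side) { child |= 4; minZ += side; }
        path.push_back(child);
    }
    return path;  // the leaf voxel has side rootSide / 2^depth
}
```

For example, an 800-mm root cube with depth 4 ends in 50-mm leaves, and every point in the same 50-mm leaf produces the same path, which is why looking up a leaf costs only one step per level.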

Again, PCL provides functions to make this work simple; the octree module handles everything. Listing 6-2 does the same kind of work as Listing 6-1: it voxelizes the incoming point cloud about the centroids of points, dividing the world neatly into cubes 50 mm on a side.

#include <pcl/octree/octree.h>

float resolution = 50.0;

pcl::octree::OctreePointCloudVoxelCentroid<pcl::PointXYZRGB> octree(resolution);

// Add points from cloud to octree

octree.setInputCloud (pointcloud_ptr);

octree.addPointsFromInputCloud ();

The preceding code is very straightforward. resolution defines the voxel size, in our case 50 mm. We create an OctreePointCloudVoxelCentroid octree that stores XYZRGB points, using PCL’s default octree and leaf types. We then set our cloud as the octree’s input and call addPointsFromInputCloud to push the points into the structure.

Figure 6-2. How octrees are organized

Manipulating Voxels

In the end, we’re really only interested in the leaf nodes of the octree that contain the data we’re looking for. Normally, to reach all of the leaves, we’d have to traverse the entire tree end to end. However, PCL handily provides an iterator for leaf nodes, LeafNodeIterator:

// leaf node iterator

pcl::octree::OctreePointCloud<pcl::PointXYZRGB>::LeafNodeIterator itLeafs (octree);

Let’s work through a simple example. We’re going to take an input cloud and display it voxelized with boxes drawn where there are filled voxels in space, as shown in Listing 6-3.

Listing 6-3. Drawing Voxel Boxes

viewer->removeAllShapes();

octreepc.setInputCloud(fgcloud);

octreepc.addPointsFromInputCloud();

//Get the voxel centers

double voxelSideLen;

std::vector<pcl::PointXYZRGB, Eigen::aligned_allocator<pcl::PointXYZRGB> > voxelCenters;

octreepc.getOccupiedVoxelCenters (voxelCenters);

std::cout << "Voxel Centers: " << voxelCenters.size() << std::endl;

voxelSideLen = sqrt (octreepc.getVoxelSquaredSideLen ());

//delete the octree

octreepc.deleteTree();

pcl::ModelCoefficients cube_coeff;

cube_coeff.values.resize(10);

cube_coeff.values[3] = 0; //Qx

cube_coeff.values[4] = 0; //Qy

cube_coeff.values[5] = 0; //Qz

cube_coeff.values[6] = 1; //Qw (identity rotation)

cube_coeff.values[7] = voxelSideLen; //Width

cube_coeff.values[8] = voxelSideLen; //Height

cube_coeff.values[9] = voxelSideLen; //Depth

//Iterate through each center, drawing a cube at each location.

for (size_t i = 0; i < voxelCenters.size(); i++) {

//Drawn from center

cube_coeff.values[0] = voxelCenters[i].x;

cube_coeff.values[1] = voxelCenters[i].y;

cube_coeff.values[2] = voxelCenters[i].z;

viewer->addCube(cube_coeff, boost::lexical_cast<std::string>(i));

}
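getOccupiedVoxelCenters returns the geometric center of each occupied voxel, not the centroid of the points inside it, which is why the cubes drawn by Listing 6-3 line up on a regular grid. The dependency-free sketch below shows that computation. It assumes a grid aligned to the world origin, whereas PCL’s octree positions its grid relative to its own bounding box, so absolute coordinates may differ; `Point3` and `occupiedVoxelCenters` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <set>
#include <vector>

struct Point3 { double x, y, z; };

// Center of every voxel (side res, grid aligned to the origin) that contains
// at least one point. Each occupied voxel is reported once.
std::vector<Point3> occupiedVoxelCenters(const std::vector<Point3>& cloud, double res) {
    assert(res > 0.0);
    std::set<std::array<long, 3>> keys;
    for (const Point3& p : cloud) {
        std::array<long, 3> key = {static_cast<long>(std::floor(p.x / res)),
                                   static_cast<long>(std::floor(p.y / res)),
                                   static_cast<long>(std::floor(p.z / res))};
        keys.insert(key);
    }
    std::vector<Point3> centers;
    for (const auto& k : keys) {
        // Offset by half a voxel to move from the voxel's minimum corner to its center.
        centers.push_back({(k[0] + 0.5) * res, (k[1] + 0.5) * res, (k[2] + 0.5) * res});
    }
    return centers;
}
```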

Given these capabilities, you may ask, “What’s the purpose of drawing voxel boxes?” While our ultimate goal is voxel-on-voxel collisions, our first goal is to subtract the background of our scene from our new data, as shown in Listing 6-4. First comes the complete code, and then we’ll break down each step.

Listing 6-4. Background Subtraction

/*

* This file is part of the OpenKinect Project. http://www.openkinect.org

*

* Copyright (c) 2010 individual OpenKinect contributors. See the CONTRIB file

* for details.

*

* This code is licensed to you under the terms of the Apache License, version

* 2.0, or, at your option, the terms of the GNU General Public License,

* version 2.0. See the APACHE20 and GPL2 files for the text of the licenses,

* or the following URLs:

* http://www.apache.org/licenses/LICENSE-2.0

* http://www.gnu.org/licenses/gpl-2.0.txt

*

* If you redistribute this file in source form, modified or unmodified, you

* may:

* 1) Leave this header intact and distribute it under the same terms,

* accompanying it with the APACHE20 and GPL20 files, or

* 2) Delete the Apache 2.0 clause and accompany it with the GPL2 file, or

* 3) Delete the GPL v2 clause and accompany it with the APACHE20 file

* In all cases you must keep the copyright notice intact and include a copy

* of the CONTRIB file.

*

* Binary distributions must follow the binary distribution requirements of

* either License.

*/

#include <iostream>

#include <libfreenect.hpp>

#include <pthread.h>

#include <stdio.h>

#include <string.h>

#include <cmath>

#include <vector>

#include <ctime>

#include <boost/thread/thread.hpp>

#include "pcl/common/common_headers.h"

#include "pcl/features/normal_3d.h"

#include "pcl/io/pcd_io.h"

#include "pcl/visualization/pcl_visualizer.h"

#include "pcl/console/parse.h"

#include "pcl/point_types.h"

#include <pcl/kdtree/kdtree_flann.h>

#include <pcl/surface/mls.h>

#include "boost/lexical_cast.hpp"

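#include "pcl/filters/voxel_grid.h"

#include "pcl/filters/radius_outlier_removal.h"

#include "pcl/filters/filter.h"

#include "pcl/octree/octree.h"

#include <libfreenect_registration.h> //declares freenect_camera_to_world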

///Mutex Class

class Mutex {

public:

Mutex() {

pthread_mutex_init( &m_mutex, NULL );

}

void lock() {

pthread_mutex_lock( &m_mutex );

}

void unlock() {

pthread_mutex_unlock( &m_mutex );

}

class ScopedLock

{

Mutex & _mutex;

public:

ScopedLock(Mutex & mutex)

: _mutex(mutex)

{

_mutex.lock();

}

~ScopedLock()

{

_mutex.unlock();

}

};

private:

pthread_mutex_t m_mutex;

};

///Kinect Hardware Connection Class

/* thanks to Yoda---- from IRC */

class MyFreenectDevice : public Freenect::FreenectDevice {

public:

MyFreenectDevice(freenect_context *_ctx, int _index)

: Freenect::FreenectDevice(_ctx, _index),

depth(freenect_find_depth_mode(FREENECT_RESOLUTION_MEDIUM, FREENECT_DEPTH_REGISTERED).bytes),

m_buffer_video(freenect_find_video_mode(FREENECT_RESOLUTION_MEDIUM,

FREENECT_VIDEO_RGB).bytes), m_new_rgb_frame(false), m_new_depth_frame(false)

{

}

//~MyFreenectDevice(){}

// Do not call directly even in child

void VideoCallback(void* _rgb, uint32_t timestamp) {

Mutex::ScopedLock lock(m_rgb_mutex);

uint8_t* rgb = static_cast<uint8_t*>(_rgb);

std::copy(rgb, rgb+getVideoBufferSize(), m_buffer_video.begin());

m_new_rgb_frame = true;

};

// Do not call directly even in child

void DepthCallback(void* _depth, uint32_t timestamp) {

Mutex::ScopedLock lock(m_depth_mutex);

depth.clear();

uint16_t* call_depth = static_cast<uint16_t*>(_depth);

for (size_t i = 0; i < 640*480 ; i++) {

depth.push_back(call_depth[i]);

}

m_new_depth_frame = true;

}

bool getRGB(std::vector<uint8_t> &buffer) {

Mutex::ScopedLock lock(m_rgb_mutex);

if (!m_new_rgb_frame)

return false;

buffer.swap(m_buffer_video);

m_new_rgb_frame = false;

return true;

}

bool getDepth(std::vector<uint16_t> &buffer) {

Mutex::ScopedLock lock(m_depth_mutex);

if (!m_new_depth_frame)

return false;

buffer.swap(depth);

m_new_depth_frame = false;

return true;

}

private:

std::vector<uint16_t> depth;

std::vector<uint8_t> m_buffer_video;

Mutex m_rgb_mutex;

Mutex m_depth_mutex;

bool m_new_rgb_frame;

bool m_new_depth_frame;

};

///Start the PCL/OK Bridging

//OK

Freenect::Freenect freenect;

MyFreenectDevice* device;

freenect_video_format requested_format(FREENECT_VIDEO_RGB);

double freenect_angle(0);

int got_frames(0),window(0);

int g_argc;

char **g_argv;

int user_data = 0;

//PCL

pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud (new pcl::PointCloud<pcl::PointXYZRGB>);

pcl::PointCloud<pcl::PointXYZRGB>::Ptr bgcloud (new pcl::PointCloud<pcl::PointXYZRGB>);

pcl::PointCloud<pcl::PointXYZRGB>::Ptr voxcloud (new pcl::PointCloud<pcl::PointXYZRGB>);

pcl::PointCloud<pcl::PointXYZRGB>::Ptr fgcloud (new pcl::PointCloud<pcl::PointXYZRGB>);

float resolution = 50.0; //5 CM voxels

// Instantiate octree-based point cloud change detection class

pcl::octree::OctreePointCloudChangeDetector<pcl::PointXYZRGB> octree (resolution);

//Instantiate octree point cloud

pcl::octree::OctreePointCloud<pcl::PointXYZRGB> octreepc(resolution);

bool BackgroundSub = false;

bool hasBackground = false;

bool grabBackground = false;

int bgFramesGrabbed = 0;

const int NUMBGFRAMES = 10;

bool Voxelize = false;

bool Oct = false;

bool Person = false;

unsigned int cloud_id = 0;

///Keyboard Event Tracking

void keyboardEventOccurred (const pcl::visualization::KeyboardEvent &event,

void* viewer_void)

{

boost::shared_ptr<pcl::visualization::PCLVisualizer> viewer =

*static_cast<boost::shared_ptr<pcl::visualization::PCLVisualizer> *> (viewer_void);

if (event.getKeySym () == "c" && event.keyDown ())

{

std::cout << "c was pressed => capturing a pointcloud" << std::endl;

std::string filename = "KinectCap";

filename.append(boost::lexical_cast<std::string>(cloud_id));

filename.append(".pcd");

pcl::io::savePCDFileASCII (filename, *cloud);

cloud_id++;

}

if (event.getKeySym () == "b" && event.keyDown ())

{

std::cout << "b was pressed" << std::endl;

if (BackgroundSub == false)

{

//Start background subtraction

if (hasBackground == false)

{

//Grabbing Background

std::cout << "Starting to grab backgrounds!" << std::endl;

grabBackground = true;

}

else

BackgroundSub = true;

}

else

{

//Stop Background Subtraction

BackgroundSub = false;

}

}

if (event.getKeySym () == "v" && event.keyDown ())

{

std::cout << "v was pressed" << std::endl;

Voxelize = !Voxelize;

}

if (event.getKeySym () == "o" && event.keyDown ())

{

std::cout << "o was pressed" << std::endl;

Oct = !Oct;

viewer->removeAllShapes();

}

if (event.getKeySym () == "p" && event.keyDown ())

{

std::cout << "p was pressed" << std::endl;

Person = !Person;

viewer->removeAllShapes();

}

}

// --------------

// -----Main-----

// --------------

int main (int argc, char** argv)

{

//More Kinect Setup

static std::vector<uint16_t> mdepth(640*480);

static std::vector<uint8_t> mrgb(640*480*4);

// Fill in the cloud data

cloud->width = 640;

cloud->height = 480;

cloud->is_dense = false;

cloud->points.resize (cloud->width * cloud->height);

// Create and setup the viewer

boost::shared_ptr<pcl::visualization::PCLVisualizer> viewer (new

pcl::visualization::PCLVisualizer ("3D Viewer"));

viewer->registerKeyboardCallback (keyboardEventOccurred, (void*)&viewer);

viewer->setBackgroundColor (0, 0, 0);

viewer->addPointCloud<pcl::PointXYZRGB> (cloud, "Kinect Cloud");

viewer->setPointCloudRenderingProperties

(pcl::visualization::PCL_VISUALIZER_POINT_SIZE, 1, "Kinect Cloud");

viewer->addCoordinateSystem (1.0);

viewer->initCameraParameters ();

//Voxelizer Setup

pcl::VoxelGrid<pcl::PointXYZRGB> vox;

vox.setLeafSize (resolution,resolution,resolution);

vox.setSaveLeafLayout(true);

vox.setDownsampleAllData(true);

pcl::VoxelGrid<pcl::PointXYZRGB> removeDup;

removeDup.setLeafSize (1.0f,1.0f,1.0f);

removeDup.setDownsampleAllData(true);

//Background Setup

pcl::RadiusOutlierRemoval<pcl::PointXYZRGB> ror;

ror.setRadiusSearch(resolution);

ror.setMinNeighborsInRadius(200);

device = &freenect.createDevice<MyFreenectDevice>(0);

device->startVideo();

device->startDepth();

boost::this_thread::sleep (boost::posix_time::seconds (1));

//Grab until clean returns

int DepthCount = 0;

while (DepthCount == 0) {

device->updateState();

device->getDepth(mdepth);

device->getRGB(mrgb);

for (size_t i = 0;i < 480*640;i++)

DepthCount+=mdepth[i];

}

//--------------------

// -----Main loop-----

//--------------------

double x = 0.0;

double y = 0.0;

int iRealDepth = 0;

while (!viewer->wasStopped ())

{

device->updateState();

device->getDepth(mdepth);

device->getRGB(mrgb);

size_t i = 0;

size_t cinput = 0;

for (size_t v=0 ; v<480 ; v++)

{

for ( size_t u=0 ; u<640 ; u++, i++)

{

iRealDepth = mdepth[i];

freenect_camera_to_world(device->getDevice(), u, v, iRealDepth, &x, &y);

cloud->points[i].x = x;//1000.0;

cloud->points[i].y = y;//1000.0;

cloud->points[i].z = iRealDepth;//1000.0;

cloud->points[i].r = 255;//mrgb[i*3];

cloud->points[i].g = 255;//mrgb[(i*3)+1];

cloud->points[i].b = 255;//mrgb[(i*3)+2];

}

}

std::vector<int> indexOut;

pcl::removeNaNFromPointCloud (*cloud, *cloud, indexOut);

if (grabBackground) {

if (bgFramesGrabbed == 0)

{

std::cout << "First Grab!" << std::endl;

*bgcloud = *cloud;

}

else

{

//concat the clouds

*bgcloud+=*cloud;

}

bgFramesGrabbed++;

if (bgFramesGrabbed == NUMBGFRAMES) {

grabBackground = false;

hasBackground = true;

std::cout << "Done grabbing Backgrounds - hit b again to subtract BG." <<

std::endl;

removeDup.setInputCloud (bgcloud);

removeDup.filter (*bgcloud);

}

else

std::cout << "Grabbed Background " << bgFramesGrabbed << std::endl;

viewer->updatePointCloud (bgcloud, "Kinect Cloud");

}

else if (BackgroundSub) {

octree.deleteCurrentBuffer();

fgcloud->clear();

// Add points from background to octree

octree.setInputCloud (bgcloud);

octree.addPointsFromInputCloud ();

// Switch octree buffers

octree.switchBuffers ();

// Add points from the mixed data to octree

octree.setInputCloud (cloud);

octree.addPointsFromInputCloud ();

std::vector<int> newPointIdxVector;

//Get vector of point indices from octree voxels

//which did not exist in previous buffer

octree.getPointIndicesFromNewVoxels (newPointIdxVector, 1);

for (size_t i = 0; i < newPointIdxVector.size(); ++i) {

fgcloud->push_back(cloud->points[newPointIdxVector[i]]);

}

//Filter the fgcloud down

ror.setInputCloud(fgcloud);

ror.filter(*fgcloud);

viewer->updatePointCloud (fgcloud, "Kinect Cloud");

}

else if (Voxelize) {

vox.setInputCloud (cloud);

vox.setLeafSize (5.0f, 5.0f, 5.0f);

vox.filter (*voxcloud);

viewer->updatePointCloud (voxcloud, "Kinect Cloud");

}

else

viewer->updatePointCloud (cloud, "Kinect Cloud");

viewer->spinOnce ();

}

device->stopVideo();

device->stopDepth();

return 0;

}

As you may notice, the first quarter of the code is just like the bridge code we built in Chapter 3. The background subtraction code is all new, and we’ll focus directly on its functional part, shown in Listing 6-5.

Listing 6-5. Background Subtraction Function

if (grabBackground) {

if (bgFramesGrabbed == 0)

{

std::cout << "First Grab!" << std::endl;

*bgcloud = *cloud;

}

else

{

//concat the clouds

*bgcloud+=*cloud;

}

bgFramesGrabbed++;

if (bgFramesGrabbed == NUMBGFRAMES) {

grabBackground = false;

hasBackground = true;

std::cout << "Done grabbing Backgrounds - hit b again to subtract BG." <<

std::endl;

removeDup.setInputCloud (bgcloud);

removeDup.filter (*bgcloud);

}

else

std::cout << "Grabbed Background " << bgFramesGrabbed << std::endl;

viewer->updatePointCloud (bgcloud, "Kinect Cloud");

}

else if (BackgroundSub) {

octree.deleteCurrentBuffer();

fgcloud->clear();

// Add points from background to octree

octree.setInputCloud (bgcloud);

octree.addPointsFromInputCloud ();

// Switch octree buffers

octree.switchBuffers ();

// Add points from the mixed data to octree

octree.setInputCloud (cloud);

octree.addPointsFromInputCloud ();

std::vector<int> newPointIdxVector;

//Get vector of point indices from octree voxels

//which did not exist in previous buffer

octree.getPointIndicesFromNewVoxels (newPointIdxVector, 1);

for (size_t i = 0; i < newPointIdxVector.size(); ++i) {

fgcloud->push_back(cloud->points[newPointIdxVector[i]]);

}

//Filter the fgcloud down

ror.setInputCloud(fgcloud);

ror.filter(*fgcloud);

viewer->updatePointCloud (fgcloud, "Kinect Cloud");

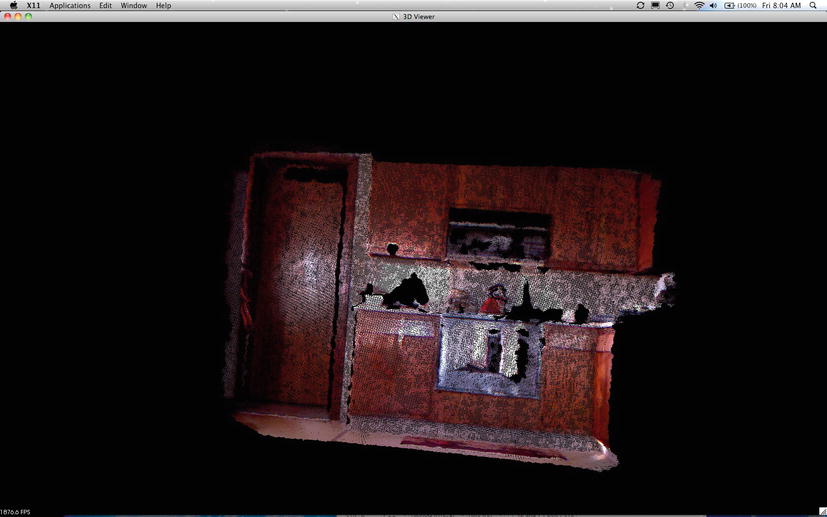

}First, we create a new foreground cloud (fgcloud). We also clear the octree’s buffer from the previous iteration. Next, we add the background cloud (bgcloud) to the octree – this is created by stacking successive point clouds (10 in this case), then removing duplicates. This is captured on the first set of iterations after turning on background subtraction. Then, we flip the buffer, keeping the new octree we just created in memory but freeing the input buffer. After that, we insert our new cloud data (cloud) into the octree. PCL has another handy working function called getPointIndicesFromNewVoxels, which provides a vector of indices within the second point cloud (cloud) that are not in the first (bgcloud). Next, we push back the points that are referred to by those indices into our new cloud (fgcloud) and display it. This results in the three output clouds shown in Figures 6-5 (background), 6-6 (full scene), and 6-7 (foreground).
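Stripped of the double-buffered octree, the idea behind getPointIndicesFromNewVoxels can be sketched with a set of occupied voxel keys: record which voxels the background occupies, then keep the indices of current points whose voxel is not in that set, ignoring new voxels that hold too few points. This is an illustration under simplifying assumptions (a fixed, origin-aligned grid and hypothetical function names), not PCL’s implementation.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <set>
#include <vector>

struct Point3 { double x, y, z; };
using Key = std::array<long, 3>;

// Integer voxel coordinates of p for voxels of side res.
Key voxelKey(const Point3& p, double res) {
    Key k = {static_cast<long>(std::floor(p.x / res)),
             static_cast<long>(std::floor(p.y / res)),
             static_cast<long>(std::floor(p.z / res))};
    return k;
}

// Indices of points in current that fall into voxels the background never
// occupied. New voxels holding fewer than minPointsPerVoxel points are ignored.
std::vector<int> foregroundIndices(const std::vector<Point3>& background,
                                   const std::vector<Point3>& current,
                                   double res, std::size_t minPointsPerVoxel) {
    assert(res > 0.0);
    std::set<Key> bgVoxels;
    for (const Point3& p : background) bgVoxels.insert(voxelKey(p, res));

    // Group the indices of points in new voxels by voxel.
    std::map<Key, std::vector<int>> newVoxels;
    for (std::size_t i = 0; i < current.size(); ++i) {
        Key k = voxelKey(current[i], res);
        if (bgVoxels.count(k) == 0) newVoxels[k].push_back(static_cast<int>(i));
    }

    std::vector<int> out;
    for (const auto& kv : newVoxels)
        if (kv.second.size() >= minPointsPerVoxel)
            out.insert(out.end(), kv.second.begin(), kv.second.end());
    return out;
}
```

Because the comparison is per voxel rather than per point, small sensor jitter inside an already occupied background voxel never shows up as foreground; the price is that a person standing inside a background voxel is invisible at that voxel.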

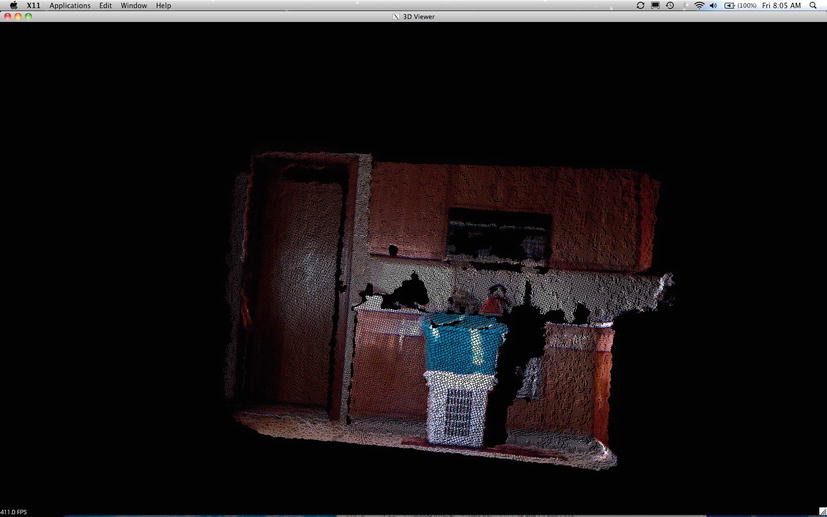

Figure 6-5. The background of the scene, precaptured

Figure 6-6. The full scene as a point cloud

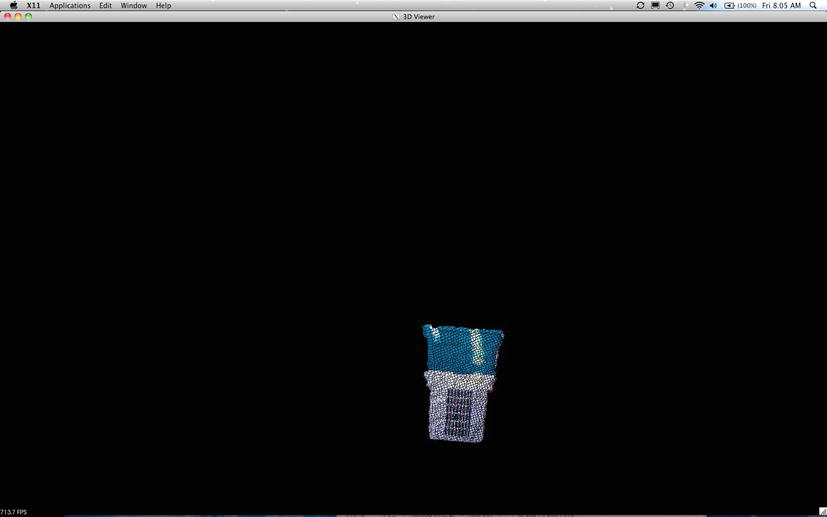

Figure 6-7. Foreground, after subtraction

Clustering Voxels

Now that we can subtract the background and keep only the foreground in these images, we need to identify the people in the scene. To do that, we need to cluster our voxels together intelligently, measure the results, and judge whether each result is a person.

There are many clustering algorithms, but we’re going to discuss one of the simplest yet most effective: Euclidean clustering. This method is similar to the 2-D flood fill technique. Here’s how it works:

- Create an octree, $O$, from point cloud $P$.

- Create a list of clusters, $C$, and a cluster, $L$.

- For every voxel $v_i$ in octree $O$ that has not already been added to a cluster, do the following:

  - Add $v_i$ to $L$.

  - For every voxel $v_j$ in cluster $L$, do the following:

    - Search $O$ for the set $O_j^k$ of neighbors of $v_j$ that lie within a sphere of radius $r$.

    - For every neighbor $v_j^k$ in $O_j^k$, see if the voxel has already been added to a cluster, and if not, add it to $L$.

  - When all of the voxels in $L$ have been processed, add $L$ to $C$, and reset $L$.

- Terminate when all voxels $v_i$ in octree $O$ have been processed into clusters in $C$.
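The steps above amount to a breadth-first flood fill over a neighbor graph. The sketch below implements them directly on points, with a brute-force radius search standing in for the octree or k-d tree lookup, so it runs in O(n²) time and suits only small clouds. `Point3`, `euclideanClusters`, and the minSize/maxSize filter are illustrative names, not PCL’s API.

```cpp
#include <cassert>
#include <cstddef>
#include <queue>
#include <vector>

struct Point3 { double x, y, z; };

// Groups points so that any two points within radius of each other end up in
// the same cluster. Clusters smaller than minSize or larger than maxSize are dropped.
std::vector<std::vector<int>> euclideanClusters(const std::vector<Point3>& pts,
                                                double radius,
                                                std::size_t minSize, std::size_t maxSize) {
    assert(radius > 0.0);
    const double r2 = radius * radius;
    std::vector<bool> assigned(pts.size(), false);
    std::vector<std::vector<int>> clusters;  // the list of clusters C

    for (std::size_t seed = 0; seed < pts.size(); ++seed) {
        if (assigned[seed]) continue;  // already part of some cluster
        std::vector<int> cluster;      // the cluster L
        std::queue<std::size_t> frontier;
        frontier.push(seed);
        assigned[seed] = true;
        while (!frontier.empty()) {
            const std::size_t i = frontier.front();
            frontier.pop();
            cluster.push_back(static_cast<int>(i));
            // Brute-force radius search; a spatial tree would answer this faster.
            for (std::size_t k = 0; k < pts.size(); ++k) {
                if (assigned[k]) continue;
                const double dx = pts[k].x - pts[i].x;
                const double dy = pts[k].y - pts[i].y;
                const double dz = pts[k].z - pts[i].z;
                if (dx * dx + dy * dy + dz * dz <= r2) {
                    assigned[k] = true;
                    frontier.push(k);
                }
            }
        }
        if (cluster.size() >= minSize && cluster.size() <= maxSize)
            clusters.push_back(cluster);  // add L to C
    }
    return clusters;
}
```

Marking a point as assigned when it is queued, not when it is processed, ensures that each point enters exactly one cluster even when several cluster members are its neighbors.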

Again, PCL provides a class called EuclideanClusterExtraction to save us the pain of having to implement this algorithm ourselves. It is powered by a different tree implementation, a k-d tree. A k-dimensional (k-d) tree is another space-partitioning tree, but instead of splitting each cell around a point into eight octants, as an octree does, it splits along one dimension at a time. K-d trees are therefore always binary, and because each split lets a search discard an entire half of the remaining space, searching for nearest neighbors (or for neighbors within a radius) is extremely fast.
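To show how that splitting pays off, here is a compact k-d tree sketch; it is not PCL’s FLANN-based implementation. Each level splits on one axis at the median, and a radius query skips any subtree whose splitting plane lies farther from the query point than the radius. `KdTree3` and `within` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Point3 { double x, y, z; };

inline double coord(const Point3& p, int axis) { return axis == 0 ? p.x : (axis == 1 ? p.y : p.z); }

// A static 3-D k-d tree stored implicitly in a permuted index array: in every
// range [lo, hi) the middle element is the node, splitting on axis depth % 3.
class KdTree3 {
public:
    explicit KdTree3(const std::vector<Point3>& pts) : pts_(pts), idx_(pts.size()) {
        for (std::size_t i = 0; i < idx_.size(); ++i) idx_[i] = static_cast<int>(i);
        build(0, idx_.size(), 0);
    }

    // Indices of all points within radius of q (boundary included).
    std::vector<int> within(const Point3& q, double radius) const {
        assert(radius >= 0.0);
        std::vector<int> out;
        search(0, idx_.size(), 0, q, radius, out);
        return out;
    }

private:
    void build(std::size_t lo, std::size_t hi, int depth) {
        if (hi - lo <= 1) return;
        const std::size_t mid = lo + (hi - lo) / 2;
        const int axis = depth % 3;
        // Median split: smaller coordinates land left of mid, larger ones right of it.
        std::nth_element(idx_.begin() + lo, idx_.begin() + mid, idx_.begin() + hi,
                         [&](int a, int b) { return coord(pts_[a], axis) < coord(pts_[b], axis); });
        build(lo, mid, depth + 1);
        build(mid + 1, hi, depth + 1);
    }

    void search(std::size_t lo, std::size_t hi, int depth, const Point3& q,
                double r, std::vector<int>& out) const {
        if (lo >= hi) return;
        const std::size_t mid = lo + (hi - lo) / 2;
        const Point3& p = pts_[idx_[mid]];
        const double dx = p.x - q.x, dy = p.y - q.y, dz = p.z - q.z;
        if (dx * dx + dy * dy + dz * dz <= r * r) out.push_back(idx_[mid]);
        const int axis = depth % 3;
        const double diff = coord(q, axis) - coord(p, axis);
        if (diff - r <= 0.0) search(lo, mid, depth + 1, q, r, out);      // sphere reaches the lower side
        if (diff + r >= 0.0) search(mid + 1, hi, depth + 1, q, r, out);  // sphere reaches the upper side
    }

    std::vector<Point3> pts_;
    std::vector<int> idx_;
};
```

Because the sphere usually lies entirely on one side of a splitting plane, most queries visit only a thin path through the tree instead of every point, which is the speedup the brute-force clustering sketch gives up.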

Clustering in PCL is easy to set up, as shown in Listing 6-6.

Listing 6-6. Clustering Voxels

viewer->removeAllShapes();

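pcl::PointCloud<pcl::PointXYZRGB>::Ptr clustercloud (new pcl::PointCloud<pcl::PointXYZRGB>);

//k-d tree used as the search method (requires pcl/search/kdtree.h)

pcl::search::KdTree<pcl::PointXYZRGB>::Ptr tree (new pcl::search::KdTree<pcl::PointXYZRGB>);

tree->setInputCloud (fgcloud);

std::vector<pcl::PointIndices> cluster_indices;

//Euclidean clustering (requires pcl/segmentation/extract_clusters.h)

pcl::EuclideanClusterExtraction<pcl::PointXYZRGB> ec;

ec.setClusterTolerance (resolution); //example value (assumption): tune for your scene

ec.setMinClusterSize (100); //example value (assumption)

ec.setMaxClusterSize (25000); //example value (assumption)

ec.setSearchMethod (tree);

ec.setInputCloud( fgcloud );

ec.extract (cluster_indices);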

pcl::PointXYZRGB cluster_point;

int numPeople = 0;

int clusterNum = 0;

std::cout << "Number of Clusters: " << cluster_indices.size() << std::endl;

for (std::vector<pcl::PointIndices>::const_iterator it = cluster_indices.begin (); it !=

cluster_indices.end (); ++it)

{

pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud_cluster (new

pcl::PointCloud<pcl::PointXYZRGB>);

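//Start the extents at the extremes so the first point always updates them (needs <limits>)

float minX = std::numeric_limits<float>::max(), minY = std::numeric_limits<float>::max(), minZ = std::numeric_limits<float>::max();

float maxX = -std::numeric_limits<float>::max(), maxY = -std::numeric_limits<float>::max(), maxZ = -std::numeric_limits<float>::max();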

std::cout << "Number of indices: " << it->indices.size() << std::endl;

for (std::vector<int>::const_iterator pit = it->indices.begin ();

pit != it->indices.end (); pit++)

{

cluster_point = fgcloud->points[*pit];

//Color each cluster: the first red, the second green, the rest blue

cluster_point.r = (clusterNum == 0) ? 255 : 0;

cluster_point.g = (clusterNum == 1) ? 255 : 0;

cluster_point.b = (clusterNum >= 2) ? 255 : 0;

cloud_cluster->points.push_back (cluster_point);

if (cluster_point.x < minX)

minX = cluster_point.x;

if (cluster_point.y < minY)

minY = cluster_point.y;

if (cluster_point.z < minZ)

minZ = cluster_point.z;

if (cluster_point.x > maxX)

maxX = cluster_point.x;

if (cluster_point.y > maxY)

maxY = cluster_point.y;

if (cluster_point.z > maxZ)

maxZ = cluster_point.z;

}

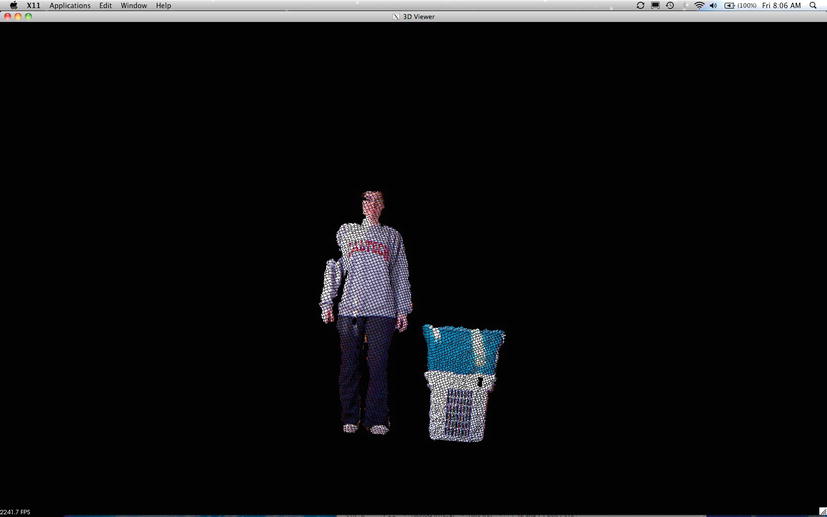

The KdTree line creates the k-d tree from the input data. A vector called cluster_indices is created to store the indices. setClusterTolerance sets the clustering reach, that is, how far we’ll look from each point (the radius $r$ in the algorithm described earlier). setMinClusterSize and setMaxClusterSize put a floor and a ceiling on the number of points in a single cluster; clusters outside that range are discarded. All three of these values need to be tuned: if the tolerance is too small, a single object breaks into several clusters, and if it is too large, nearby objects merge into one; likewise, too large a minimum size drops small objects, and too small a maximum size drops large ones. Our search method is the k-d tree. The extract function fills the vector of PointIndices, one entry per cluster. When we run this code over our foreground, we end up with the image in Figure 6-8.

Figure 6-8. Clustered voxels

Tracking People and Fitting a Rectangular Prism

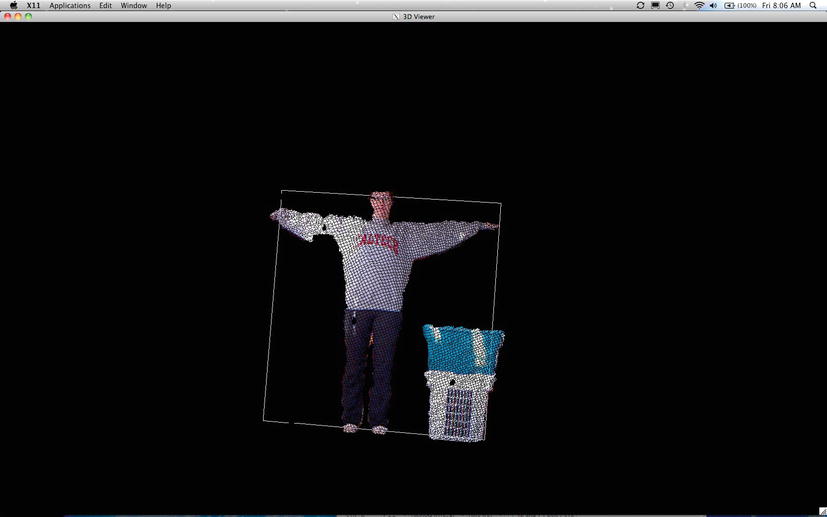

Now that we’ve clustered our data, we can proceed to tracking people and fitting a prism to the data. We’re going to use a very simple metric for identifying a person: adults are generally at least a certain height and width. We judge the height and width of each cluster from its axis-aligned bounding box, the minimum and maximum coordinates computed in Listing 6-6. (Fitting a convex hull to the points would give a tighter estimate of a cluster’s area, but Listing 6-7 relies on the bounding box alone.) If a cluster’s dimensions meet the thresholds, it is identified as a person, as shown in Listing 6-7.

Listing 6-7. Identifying People

//Use the data above to judge height and width of the cluster

if ((std::fabs(maxY-minY) >= 1219.2) && (std::fabs(maxX-minX) >= 101.6)) {

//Taller than 4 feet (1219.2 mm)? Wider than 4 inches (101.6 mm)?

//Draw a rectangle about the detected person

numPeople++;

pcl::ModelCoefficients coeffs;

coeffs.values.push_back ((minX+maxX)/2);//Tx

coeffs.values.push_back ((minY+maxY)/2);//Ty

coeffs.values.push_back (maxZ);//Tz

coeffs.values.push_back (0.0);//Qx

coeffs.values.push_back (0.0);//Qy

coeffs.values.push_back (0.0);//Qz

coeffs.values.push_back (1.0);//Qw

coeffs.values.push_back (maxX-minX);//width

coeffs.values.push_back (maxY-minY);//height

coeffs.values.push_back (1.0);//depth

viewer->addCube(coeffs, "PersonCube"+boost::lexical_cast<std::string>(numPeople));

}

std::cout << "Cluster spans: " << minX << "," << minY << "," << minZ << " to " << maxX << ","

<< maxY << "," << maxZ << ". Total size is: " << maxX-minX << "," << maxY-minY << "," <<

maxZ-minZ << std::endl;

clusterNum++;

} //end of the cluster loop begun in Listing 6-6

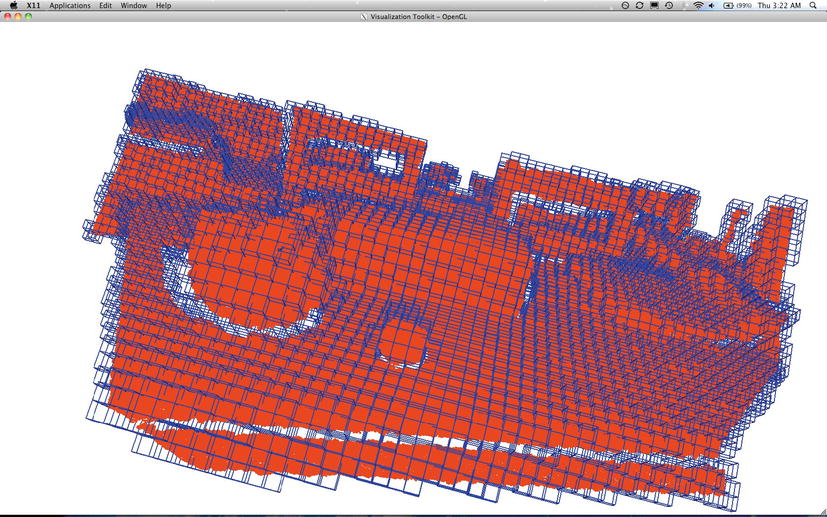

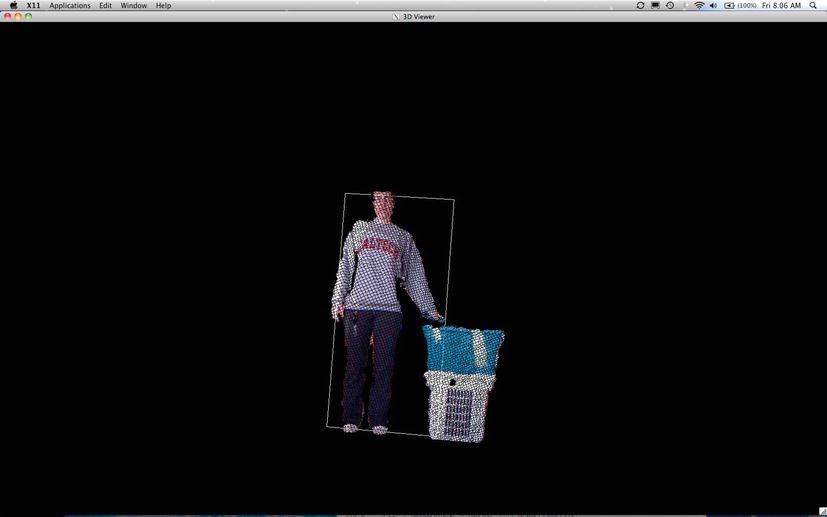

Given these thresholds (a person must be at least 4 feet tall and 4 inches wide in the Kinect’s view), we get output like that shown in Figures 6-9 and 6-10.
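The person test reduces to computing an axis-aligned bounding box and comparing two of its sides with fixed thresholds. This self-contained sketch uses hypothetical names and copies the thresholds from Listing 6-7 (in millimeters, with y as height and x as width). It seeds the extents with the first point rather than with zeros, so the box cannot silently stretch to include the origin.

```cpp
#include <cassert>
#include <vector>

struct Point3 { double x, y, z; };
struct Box { Point3 min, max; };

// Axis-aligned bounding box of a non-empty cluster.
Box boundingBox(const std::vector<Point3>& cluster) {
    assert(!cluster.empty());
    Box b{cluster.front(), cluster.front()};  // seed with a real point, not with zeros
    for (const Point3& p : cluster) {
        if (p.x < b.min.x) b.min.x = p.x;
        if (p.y < b.min.y) b.min.y = p.y;
        if (p.z < b.min.z) b.min.z = p.z;
        if (p.x > b.max.x) b.max.x = p.x;
        if (p.y > b.max.y) b.max.y = p.y;
        if (p.z > b.max.z) b.max.z = p.z;
    }
    return b;
}

// Same rule as Listing 6-7: at least 4 feet (1219.2 mm) tall in y and
// at least 4 inches (101.6 mm) wide in x.
bool looksLikePerson(const Box& b) {
    const double height = b.max.y - b.min.y;
    const double width = b.max.x - b.min.x;
    return height >= 1219.2 && width >= 101.6;
}
```

Only lower bounds are checked, so a large object such as a bookcase that enters the scene would also pass; adding upper bounds or a depth check would be a natural refinement.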

Summary

In this chapter, we delved deep into voxels—why and how to use them—and voxelization. We discussed octrees, background subtraction, clustering, and people tracking. You even had the opportunity to build your very own person tracker.