![]()

Index Maintenance

Like anything in life, indexes require maintenance. Over time, the performance benefits of indexes can wane or, through data modifications, their sizes and the underlying statistics can bloat. To prevent these issues, indexes must be maintained. If you do so, your database will remain a lean, mean querying machines.

When it comes to maintenance, there are six areas to consider:

- Index fragmentation

- Heap bloat and forwarding

- Columnstore fragmentation

- Statistics

- In-memory statistics

Each plays a key role in maintaining a properly indexed and well-performing database.

This chapter explains all these areas. You’ll learn about issues that arise from not maintaining indexes and review strategies for implementing a maintenance process. To illustrate how fragmentation occurs, there will be a number of simple demos. The statistics conversation will expand on the items discussed in Chapter 3 and lay out how to update statistics to keep them accurate.

The primary maintenance issue that can lead to a degradation of index performance is index fragmentation. Fragmentation happens when the pages in an index are no longer physically sequential. There are a few events in SQL Server that can lead to this.

As you can see, except for selecting data from the database, pretty much everything that you can do to an index can lead to fragmentation. Unless your database is read-only, you must pay attention to fragmentation and address it in an index before it becomes an issue.

Fragmentation Operations

The best way to understand fragmentation is to see it in action. In Chapter 3, you looked at the information returned by the dynamic management object (DMO) sys.dm_index_physical_stats. In this section, you’ll review a number of scripts that cause fragmentation and then use the DMO to investigate the amount of fragmentation that has occurred.

As mentioned, fragmentation occurs when physical pages within an index are not sequential. When an insert occurs and the new row is not placed at the beginning or ending of the pages for the index, the new row will be placed on a page that already has other rows on it. If there is not enough room on the page for the new row, then the page will split—leading to fragmentation of the index. Fragmentation is the physical result of page splits in the index.

The first operation that can lead to index fragmentation is an INSERT operation. This isn’t usually considered the most likely operation to result in fragmentation, but there are database design patterns that can lead to fragmentation. There are two areas in which INSERT operations can lead to fragmentation: clustered and nonclustered indexes.

The most common pattern for designing clustered indexes is to place the index on a single column with a value that is ever-increasing. This is often done using an int data type and the IDENTITY property. When this pattern is followed, the chances of fragmentation occurring during inserts are relatively rare. Unfortunately, this isn’t the only clustered index pattern that exists, and the others can often cause fragmentation. For example, using business keys or uniqueidentifier data type values often causes fragmentation.

Clustered indexes that use uniqueidentifier data type values often use the NEWID() function to generate a random, unique value to serve as the clustering key. This value is unique but not ever-increasing. The most recent value generated may or may not be after the previous value. Because of this, when a new row is inserted into the clustered index, it is most likely to be placed between a number of other rows already in the index. And, as mentioned, if there isn’t enough room in the index, fragmentation will occur.

To demonstrate fragmentation caused by the use of uniqueidentifier data type values, try the code in Listing 9-1. This code creates a table named dbo.UsingUniqueidentifier. It is populated with rows from sys.columns, and then a clustered index is added. At this point, all the pages in the indexes are physically sequential. Run the code in Listing 9-2 to view the results shown in Figure 9-1; these results show that the average fragmentation for the index is 0.00 percent.

Figure 9-1. Starting fragmentation results (results may vary)

With the table built with a clustered index based on uniqueidentifier data types, you are now ready to perform an INSERT into the table to see the effect that the insert has on the index. To demonstrate, insert all the rows in sys.objects into dbo.UsingUniqueidentifier using the code in Listing 9-3. After the insert, you can review the fragmentation of the index in the results, using Listing 9-2 again. Your results should be similar to those shown in Figure 9-2, which shows fragmentation in the clustered index at almost 70 percent at index level 0 after adding 645 rows to the table.

Figure 9-2. Post-INSERT fragmentation results (percentage results may vary)

As this code sample demonstrated, clustered indexes that are based on values that are not ever-increasing result in fragmentation. The best example of this type of behavior is through the use of uniqueidentifiers. This can also happen when the clustering key is a computed value or based on a business key. When looking at business keys, if a random purchase order is assigned to an order, then that value will likely behave similar to a uniqueidentifier data type value.

The other way in which INSERT operations can affect fragmentation is on the nonclustered indexes. While the clustered index values may be ever-increasing values, the values for the columns in the nonclustered index won’t necessarily have that same quality. A good example of this is when indexing the name of a product in a nonclustered index. The next record inserted into the table may start with the letter M and will need to be placed near the middle of the nonclustered index. If there isn’t room at that location, a page split will occur, and fragmentation will result.

To demonstrate this behavior, add a nonclustered index to the table dbo.UsingUniqueidentifier that you used in the previous demonstrations. Listing 9-4 shows the schema for the new index. Before inserting more records to see the effect of inserting into a nonclustered index, run Listing 9-2 again. Your results should be similar to those in Figure 9-3.

Figure 9-3. Nonclustered index fragmentation results

At this point, you need to insert more records into dbo.UsingUniqueidentifier. Use Listing 9-3 again to insert more records into the table and then use Listing 9-4 to view the state of fragmentation in the nonclustered index. With this complete, your nonclustered index has gone from no fragmentation to more than 40 percent fragmentation, as shown in Figure 9-4.

Figure 9-4. Nonclustered Post-INSERT fragmentation results

Whenever you perform INSERT operations, there will always be a way in which fragmentation can occur. This will happen on both clustered and nonclustered indexes.

Another operation that can lead to fragmentation is an UPDATE operation. There are two main ways in which an UPDATE operation will result in fragmentation. First, the data in the record no longer fits on the page in which it currently resides. Second, the key values for the index changes, and the index location for the new key value are not on the same page or don’t fit on the page where the record is destined. In both of these cases, the page splits, and fragmentation occurs.

To demonstrate how these situations lead to fragmentation, you’ll first look at how increasing the size of a record in an update can lead to fragmentation. For this, you’ll create a new table and insert a number of records into it. Then you’ll add a clustered index to the table. The code for this is in Listing 9-5. Using the script from Listing 9-6 again, you can see that there is no fragmentation on the clustered index, as the results in Figure 9-5 show. One thing to pay attention to with these fragmentation results is that the average page space used is almost 90 percent. Because of this, any significant growth in record size will likely fill the available space on the pages.

Figure 9-5. Initial UPDATE fragmentation results

Now increase the size of some of the rows in the index. To accomplish this, execute the code in Listing 9-7. This code will update the JunkValue column for every five rows from a 1,000-character value to a 2,000-character value. Using Listing 9-2 to view current index fragmentation, you can see that, through the results in Figure 9-6, the clustered index is now more than 99 percent fragmented, and the average page space used has dropped to about 50 percent. As this code demonstrates, when a row increases in size during an UPDATE operation, there can be significant amounts of fragmentation.

![]()

Figure 9-6. UPDATE fragmentation results after record length increase

As mentioned, the second way in which an index can incur fragmentation is by changing the key values for the index. When the key values for an index change, the record may need to change its position in the index. For instance, if an index is built on the name of the product, then changing the name from Acme Mop to XYZ Mop will change where the product name will be placed in the sorting for the index. Changing the location of the record in the index may place the record on a different page, and if there isn’t sufficient space on the new page, then a page split and fragmentation may occur.

To demonstrate this concept, execute Listing 9-8 and then use Listing 9-6 to obtain the results shown in Figure 9-7. You will see that for the new nonclustered index there is no fragmentation in the index.

![]() Note If key values for a clustered index change often, that may indicate that the key values selected for the clustered index are inappropriate.

Note If key values for a clustered index change often, that may indicate that the key values selected for the clustered index are inappropriate.

Figure 9-7. UPDATE fragmentation results after adding nonclustered index

At this point, you need to modify some key values. Using Listing 9-9, perform UPDATE activity on the table and update one of every nine rows. To simulate changing the key values, the UPDATE statement reverses the characters in the column. This small amount of activity is enough to cause a significant amount of fragmentation. As the results in Figure 9-8 illustrate, the nonclustered index went from no fragmentation to more than 30 percent fragmentation.

Figure 9-8. UPDATE fragmentation results after changing index key values

One thing to note is that the fragmentation on the clustered index did not change with these updates. Not all updates will result in fragmentation—only those that move data around because of space being unavailable on the pages where the record is currently stored.

The third type of operation that causes fragmentation is DELETE operations. Deletes are a bit different in the nature in which they create fragmentation within a database. Instead of relocating pages because of page splits, a delete can lead to pages being removed from an index. Gaps will then appear in the physical sequence of pages for the index. Since the pages are no longer physically sequential, they are considered fragmented—especially since once the pages are deallocated from the index, they can be reallocated to other indexes for a more traditional form of fragmentation.

To demonstrate this type of behavior, create a table, populate it with a number of records, and then add a clustered index. Listing 9-10 shows the script for these tasks. Run the script followed by the script from Listing 9-11 to get the current fragmentation for the clustered index. Your results should match those in Figure 9-9. As you can see from the average fragmentation in the percent column (avg_fragmentation_in_percent), there is no fragmentation currently in the index.

![]()

Figure 9-9. Fragmentation results before DELETE operation

Now, to demonstrate fragmentation caused by DELETE operations, you’ll delete every other 50 records in the table using the code in Listing 9-12. As before, you’ll use Listing 9-11 to view the state of fragmentation in the index. The results, shown in Figure 9-10, indicate that the DELETE operation resulted in about 13 percent fragmentation. With DELETE operations, the rate in which fragmentation usually occurs isn’t too fast. Also, since the fragmentation is not the result of page splits, the order of the pages does not become logically out of order. Instead, there are gaps in the contiguous pages.

Figure 9-10. Fragmentation results after DELETE

As a final note on DELETE operations, the fragmentation may not appear immediately after the DELETE operation. When records are deleted, they are first marked for deletion before the record itself is actually deleted. While it is marked for delete, the record is considered to be a ghost record. During this stage, the record is logically deleted but is physically still present in the index. At a future point, after the transaction has been committed and a CHECKPOINT has completed, the ghost cleanup process will physically remove the row. At this time, the fragmentation will show in the index.

The last type of operation that leads to fragmentation is when databases are shrunk. Databases can be shrunk using either DBCC SHRINKDATABASE or DBCC SHRINKFILE. These operations can be used to shrink the size of a database or its files. When they are used, the pages at the end of a data file are relocated toward the beginning of the data file. For their intended purpose, shrink operations can be effective tools.

Unfortunately, these shrink operations do not take into account the nature of the data pages that are being moved. To the shrink operation, a data page is a data page is a data page. The priority of the operation is that pages at the end of the data file find a place at the beginning of the data file. As discussed, when the pages of an index are not physically stored in order, the index is considered fragmented.

To demonstrate the fragmentation damage that a shrink operation can cause, you’ll create a database and perform a shrink on it; the code appears in Listing 9-14. In this example, there are two tables: FirstTable and SecondTable. Some records will be inserted into each table. The inserts will alternate back and forth with three inserts into FirstTable and two inserts into SecondTable. Through these inserts, there will be alternating bands of pages allocated to the two tables. Next, SecondTable will be dropped, which will result in unallocated data pages between each of the bands of pages for FirstTable. Using Listing 9-13 again will show that a small amount of fragmentation exists on FirstTable, shown in Figure 9-11.

Figure 9-11. Fragmentation of FirstTable after inserts

With the database prepared, the next step is to shrink the database, the purpose of which is to recover the space that SecondTable had been allocated and trim down the size of the database to only what is needed. To perform the shrink operation, use the code in Listing 9-15. When the SHRINKDATABASE operation completes, you can see in Figure 9-12 that running the code from Listing 9-13 causes the fragmentation for the index to increase from less than 1 percent fragmentation to more than 32 percent fragmentation. This is a significant change in fragmentation on a database with just a single table. Consider the effect of a shrink operation on a database with hundreds or thousands of indexes.

Figure 9-12. Fragmentation of FirstTable after shrink operation

This has been a simple example of the damage in terms of fragmentation that a shrink operation can have on an index. As was evident even with this example, the shrink operation led to a significant amount of fragmentation. Most SQL Server database administrators will agree that shrink operations should be an extremely rare operation on any database. Many DBAs are also of the opinion that this operation should never be used on any database for any reason. The guideline that is most often recommended is to be extremely cautious when shrink database operations are performed. Also, don’t get caught in a cycle of shrinking a database to recover space and causing fragmentation and then expanding the database through defragmenting the indexes. This is only a waste of time and resources that could be better spent on real performance and maintenance issues.

Fragmentation Variants

Traditionally, when people consider index maintenance, the primary focus is on fragmentation within the clustered or nonclustered index. This isn’t the only consideration to keep in mind when maintaining indexes. You also need to consider whether the table or index has bloat, forwarding, or segmentation. In this section, I’ll review two other areas in which fragmentation type maintenance can be required on tables.

Heap Bloat and Forwarding

The first area I’ll cover is heap bloat and forwarding. As discussed in Chapter 3, heaps are collections of unordered pages in which data for a table is stored. As new rows are added to the table, the heap grows, and new pages are allocated. Insert and update operations can cause changes to heaps that can require maintenance on the table.

To begin, you’ll look at bloating within a heap. For heaps, bloating occurs when records are deleted from the heap without being reused for new records. As discussed in Chapter 8, this isn’t a matter of records going to new pages but an overall decline in the number of records in the table. The pages will be reused, but when they aren’t, the pages remain allocated, and this can have an impact on performance.

To demonstrate this activity, let’s review the script in Listing 9-16. In the script, it starts with a heap table that has 400 records inserted into it, and then half the records are deleted, leaving 200 records in the table. As shown in Figure 9-13, the record count for the table reflects these changes, but in both cases the DMV results show that there are 100 pages associated with the table. This is because pages are not removed from a heap unless maintenance activities force this to occur. Through the ALTER TABLE statement on dbo.HeapTable with the REBUILD option, the table is rebuilt, and the excess pages are flushed.

Figure 9-13. Results from deleting from a heap

To help emphasize that the pages are still in the table, Figure 9-14 shows the pages that are read when counting all rows in the table, further demonstrating that there are 100 pages being accessed. When considering the impact on performance for heaps after a delete, if there is an excessive number of pages in a heap compared to the amount of data, this will increase the amount of effort required by SQL Server to execute the query. In the case of this demonstration, the COUNT(*) queries are processing twice the amount of data that is required.

Figure 9-14. I/O impact from deletes on a heap

The other area of consideration for the maintenance of heaps is the volume of forwarded records in the table. Forwarded records, discussed in Chapter 3, are records within a heap that no longer fit in the original location in which they were added to the heap. To accommodate the change in the size of the record, the record is stored on another page, and the previous record location includes a pointer to the new location.

The impact of this change is an increase in the number of pages in the heap, because new pages are added for existing records, and it takes an additional I/O operation to go from the first page to the forwarded page when looking up a record. While this may not appear to be a huge issue, in aggregate the accumulated impact of forwarded records increases the amount of I/O for a system and adds to latency in query execution.

To demonstrate the impact of forwarded records on queries, execute the code in Listing 9-17. This script creates a table with a heap, runs a number of queries, updates the records to cause forwarding of heap records to occur, and then completes by reexecuting the previous collection of queries.

There are three queries from Listing 9-17 that are included to demonstrate the impact of forwarded records on heaps.

- SELECT *: To demonstrate the impact of an index scan

- SELECT with equality predicate: To demonstrate the impact on a singleton lookup

- SELECT with inequality predicate: To demonstrate the impact on a range lookup

For the SELECT * query, before the forwarded records are in the heap, the query executes with 26 reads, shown in Figure 9-15. After the forward records are introduced, the reads increase to 144.This increase is because of the new pages added to the heap to accommodate the increases in the row sizes. With the second query, the singleton lookup grows from three reads to four reads, which represents the additional read required to go from the original location for the record to the forwarding location. In the last query, the range query with the lookup executes with 26 reads, but after the forwarded records are added to the table, the reads jump to 30 reads.

Figure 9-15. I/O statistics for forwarded record queries

The overall effect of the forwarded records is an increase in reads. While the increase may not be significant from a per-query basis, after time the impact adds up. Scans of heaps with forwarded records access more pages, and lookups require an extra I/O. Reducing the impact of forwarded records in heaps is an important part of maintaining indexes.

Columnstore Fragmentation

Columnstore indexes are one of SQL Server’s newer features. An interesting component to columnstore indexes is the read-only nature of the segments. As discussed in Chapter 2, when new columnstore indexes are added to a delta table, the delta table is eventually compressed into a columnstore format. Also, since the segments are read-only, deletes don’t immediately impact the segments, resulting in fragments of the read-only segments that contain data that is no longer part of the table.

To demonstrate both of these concepts, execute the code in Listing 9-18 to prepare a table with a clustered columnstore index. After the table is built, the two sets of rows are inserted. The first set contains 1,000 rows, and the index is reorganized to force the row group to compress to columnstore format. The second set contains 105,000 rows, which is more than the 104,000 threshold that automatically triggers use of the columnstore format. As shown in Figure 9-16, the inserted records are all compressed to columnstore format.

![]() Note Depending on your environment, the script in Listing 9-18 can take a while to run.

Note Depending on your environment, the script in Listing 9-18 can take a while to run.

Figure 9-16. Columnstore row group resultset

The piece that is interesting at this point is that the row groups created are much smaller than a typical row group. And since they are smaller, there may be an opportunity to optimize the number of pages that they use by increasing the number of records per row group. This can be done by maintaining the columnstore index and rebuilding it. To show the value in rebuilding columnstore indexes, execute the code in Listing 9-19. Through this, you can see that the reads before the rebuild are 4,024 and drop to 3,513 after the rebuild, shown in Figure 9-17. This is only a 12 percent drop in pages, but over time and with large fact tables under the columnstore indexes, these types of excessive allocation of pages will impact performance. Additionally, comparing Figures 9-16 to 9-18, the table also has far fewer row groups, with 24 versus 14 after the rebuild.

Figure 9-17. I/O statistics for forwarded record queries

Figure 9-18. Row group statistics after columnstore index rebuild

The next type of fragmentation that occurs with columnstore indexes is through delete operations. While this is called fragmentation, in actuality when deletes occur on columnstore indexes, the rows are not removed from the index; they are only marked as deleted. Because of this, pages allocated to a clustered columnstore index that have all their records deleted will still be active within the index.

To show how this impacts, you’ll use the script in Listing 9-20 to delete all the 2007 data from the table. Then another statement will rebuild the columnstore index. Between these operations, you’ll run an aggregate query to provide an operation to see the impact of deletes on the I/O of queries.

After running these queries and the index is rebuilt, the results are fairly interesting. If you start with the first query, there are 3,522 I/Os for the aggregate query, shown in Figure 9-19.Deleting a year’s worth of data results in the aggregate query requiring 3.525 I/Os, which is an increase from the original query with nearly 300 less rows returned. This is because of the pages required to manage the deleted rows. After rebuilding, the number of I/Os drops significantly to 2,675.

Figure 9-19. Statistics I/O results for delete operation demonstration

Through the addition of new rows and the deletion of existing rows, there are reasons to consider the maintenance requirements of columnstore indexes. The issues that affect these indexes are not the same as traditional clustered indexes, but they are significant nonetheless.

Fragmentation Issues

You’ve seen a number of ways in which indexes can become fragmented, but there hasn’t been a discussion about why this is important. There are a couple important reasons why fragmentation within indexes can be a problem.

- Index I/O

- Contiguous reads

As the fragmentation of an index increases, each of these two areas affects the index’s ability to perform well. In some worst-case scenarios, the level of fragmentation can be so severe that the query optimizer will stop using the index in query plans.

When it comes to I/O, this is an area of SQL Server where it is easy to have performance bottlenecks; likewise, there are a multitude of solutions to help mitigate the bottleneck. From the perspective of this chapter, you are concerned with the effect of fragmentation on I/O.

Since page splits are often the cause of fragmentation, they provide a good place to start investigating the effect of fragmentation on I/O. To review, when a page split occurs, half the contents on the page are moved off the page and onto another page. Generally speaking, if the original page was 100 percent full, then both pages would be about 50 percent full. In essence, it will take two I/Os to read from storage the same amount of information that required one I/O prior to the page split. This increase in I/Os will drive up reads and writes and thus can have a negative effect on performance.

To validate that effect of fragmentation on I/O, let’s walk through another fragmentation example. This time you’ll build a table, populate it with some data, and perform an update to generate page splits and fragmentation. The code in Listing 9-22 will perform these operations. The last portion of the script will query sys.dm_db_partition_stats to return the number of pages that have been reserved for the index. Execute the fragmentation script from Listing 9-21. You’ll see the index at this point is more than 99 percent fragmented, and the results from Listing 9-14 show the index is using 209 pages. See Figure 9-20 for the results.

Figure 9-20. Fragmentation of CLUS_IndexIO

But would removing the fragmentation from the index have a noticeable impact on the number of pages in the index? As the demo will demonstrate, reducing fragmentation does have an impact.

Continuing, the next thing to do is to remove the fragmentation from the index. To accomplish this, execute the ALTER INDEX statement in Listing 9-23 to remove the fragmentation. In the rest of the chapter, I’ll discuss the mechanics of removing fragmentation from an index, so for the time being, this statement won’t be explained. The effect of this command is that all the fragmentation has been removed from the index. Figure 9-21 shows the results from Listing 9-23. They show that the number of pages that the index is using dropped from 289 to 210. The effect of removing the fragmentation is an impressive reduction of almost 30 percent in pages in the index.

Figure 9-21. Page count resulting from rebuild operations

This proves that fragmentation can have an effect on the number of pages in an index. The more pages in an index, the more reads are required to get the data you need. Reducing the count of pages can help with allowing SQL Server databases to process more data in the same number of reads or to improve the speed in which it reads the same information across fewer pages.

The other negative effect that fragmentation can have on performance relates to contiguous reads. Within SQL Server, contiguous reads affect its ability to utilize read-ahead operations. Read-ahead allows SQL Server to request pages into memory that are going to be used in the immediate future. Rather than waiting for an I/O request to be generated for the page, SQL Server can read large blocks of pages into memory with the expectation that the data pages will be used by the query in the future.

Going back to indexes, I previously discussed how fragmentation within an index occurs as a result of breaks in the continuity of physical data pages in an index. Every time there is a break in the physical pages, I/O operations must change the place in which data is being read from SQL Server. This is how fragmentation creates a hindrance in contiguous reads.

SQL Server offers a number of ways in which fragmentation can be removed or mitigated within an index. Each of the methods has pros and cons associated with using it. In this section, you’ll look at the options and the reasons for using each one.

The first method for removing fragmentation from an index is to rebuild the index. Rebuilding an index builds a new contiguous copy of the index. When the new index is complete, the existing index is dropped. Index rebuild operations are accomplished through either a CREATE INDEX or ALTER INDEX statement. Typically, indexes with more than 30 percent fragmentation are considered good candidates for index rebuilds. Note that 30 percent and lower levels of fragmentation in most databases will not show as a large negative impact in performance. The usage of 30 percent is a good starting point, but each database and index usage should be reviewed and adjusted if performance shows more negative effects with less than 30 percent fragmentation of the index.

The chief benefit of performing an index rebuild is that the resulting new index has contiguous pages. When an index is highly fragmented, sometimes the best way to resolve the fragmentation is to simply start over with the index and rebuild. Another benefit of rebuilding an index is that the index options can be modified during the rebuild. Lastly, for most indexes, the index can remain online while it is being rebuilt.

![]() Note Since SQL Server 2012, clustered indexes with varchar(max), nvarchar(max), varbinary(max), and XML data types can be rebuilt online. Clustered indexes still cannot be rebuilt online when they contain the following data types: image, ntext, or text. Also, online rebuilds are limited to SQL Server Enterprise, Developer, and Evaluation editions. Additionally, online rebuilds require double the space for the index, which can be a problem with large tables.

Note Since SQL Server 2012, clustered indexes with varchar(max), nvarchar(max), varbinary(max), and XML data types can be rebuilt online. Clustered indexes still cannot be rebuilt online when they contain the following data types: image, ntext, or text. Also, online rebuilds are limited to SQL Server Enterprise, Developer, and Evaluation editions. Additionally, online rebuilds require double the space for the index, which can be a problem with large tables.

The first option for rebuilding an index is to use the CREATE INDEX statement, shown in Listing 9-24. This is accomplished through the use of the DROP_EXISTING index option. There are a few reasons to choose the CREATE INDEX option instead of ALTER INDEX.

- The index definition needs to be changed, such as when the columns need to be added or removed or their order needs to change.

- The index needs to be moved from one file group to another.

- The index partitioning needs to be modified.

The other option is the ALTER INDEX statement, shown in Listing 9-25. This option utilizes the REBUILD option in the syntax. Conceptually, this accomplishes that same thing as the CREATE INDEX statement but with the following benefits:

- More than one index can be rebuilt in a single statement.

- A single partition of an index can be rebuilt.

The primary downside to index rebuilds is the amount of space that is required for the index during the rebuild operation. At a minimum, there should be 120 percent of the size of the current index available within the database for the rebuilt index. The reason for this is that the current index will not be dropped until after the rebuild is completed. For a short time, the index will exist twice in the database.

There are two ways to mitigate some of the space required for an index during a rebuild. First, the SORT_IN_TEMPDB index option can be used to reduce the amount of space needed for intermediate results. You will still need room in the database for two copies of the index, but the 20 percent buffer won’t be necessary. The second way to mitigate space is to disable the index prior to the rebuild. Disabling an index drops the index and data pages from an index while retaining the index metadata. This will allow a rebuild of the index in the space that the index previously occupied. Be aware that the disabling option applies only to nonclustered indexes.

An alternative to an index rebuild is to reorganize an index. This type of defragmentation happens just as it sounds. Data pages in the index are reordered across the pages already allocated to the index. After the reorganization is complete, the physical order of pages in an index matches the logical order of pages. Indexes should be reorganized when they are not heavily fragmented. In general, indexes fragmented less than 30 percent are reorganization candidates.

To reorganize an index, the ALTER INDEX syntax is used (see Listing 9-26) with the REORGANIZE option. Along with that option, the reorganization allows for a single partition to be reorganized. The REBUILD option does not allow this.

There are a couple of benefits to using the REORGANIZE option. First, indexes are online or available for use by the optimizer in a new execution plan or in cached execution plans for the duration of the reorganization. Second, the process is designed around minimal resource usage, which significantly lowers the chance that locking and blocking issues will occur during the transaction.

The downside to index reorganizations is that the reorganization uses only the data pages already allocated to the index. With fragmentation, the extents allocated to one index can often be intertwined with the extents allocated to other indexes. Reordering the data pages won’t make the data pages any more contiguous than they currently are, but it will make certain that the pages allocated are sorted in the same order as the data itself.

The third way to defragment an index is to simply drop the index and re-create it. I include this option for completeness, but note that it is not widely practiced or advised. There are a few reasons that illustrate why dropping and creating can be a bad idea.

First, if the index is a clustered index, then all the other indexes will need to be rebuilt when the clustered index is dropped. Clustered indexes and heaps use different structures for identifying rows and storing data. The nonclustered indexes on the table will need information on where the record is and will need to be re-created to obtain this information.

Next, if the index is a primary key or unique, there are likely other dependents on the index. For instance, the index may be referenced in a foreign key. Also, the index could be tied to a business rule, such as uniqueness, that cannot be removed from the table, even in a maintenance window.

The third reason to avoid this method is that it requires knowledge of all properties on an index. With the other strategies, the index retains all the existing index properties. By having to re-create the index, there is a risk that a property or two may not be retained in the DDL for the index and important aspects of an index could be lost.

Lastly, after an index is dropped from that table, it cannot be used. This should be an obvious issue, but it’s often overlooked when considering this option. The purpose of an index is usually the performance improvements that it brings; removing it from the table takes those improvements with it.

So far I’ve discussed how fragmentation occurs, why it is an issue, and how it can be removed from indexes. It is important to apply this knowledge to the indexes in your databases. In this section, you will learn two ways in which the defragmentation of indexes can be automated.

Maintenance Plans

The first automation option available is defragmentation through maintenance plans, which offer the opportunity to quickly create and schedule maintenance for your indexes that will either reorganize or rebuild your indexes. For each of the types of index defragmentation, there is a task available in the maintenance plans.

There are a couple of ways in which maintenance plans can be created. For the purposes of brevity, I will assume you are familiar with maintenance plans in SQL Server and thus will focus on the specific tasks related to defragmenting indexes.

The first task available is the Reorganize Index Task. This task provides a wrapper for the ALTER INDEX REORGANIZE syntax from the previous section. Once configured, this task will reorganize all the indexes that match the criteria for the task.

There are a few properties that need to be configured when using the Reorganize Index Task (see Figure 9-22).

- Connection: The SQL Server instance the task will connect to when it executes.

- Database(s): The databases the task will connect to for reorganizing. The options for this property are

- All databases

- All system databases

- All user database

- These specific databases (a list of available databases is included and one must be selected)

- Object: Determines whether the reorganization will be against tables, views, or tables and views.

- Selection: Specifies the tables or indexes affected by this task. This is not available when Tables and Views is selected in the Object box.

- Compact large objects: Determines whether the reorganize uses the option ALTER INDEX LOB_COMPACTION = ON.

Figure 9-22. Properties window for Reorganize Index Task

The main issue with the Reorganize Index Task is that there isn’t any filtering on the level of fragmentation or the size of the index. While indexes do remain online during reorganizations, there could be a fair amount of unnecessary work done.

The other task available is the Rebuild Index Task. This task provides a wrapper for the ALTER INDEX REBUILD syntax. Once configured, this task rebuilds all the indexes that match the criteria for the task.

Similar to the Reorganize Index Task, the Rebuild Index Task has a number of properties that need to be configured before using it (see Figure 9-23).

- Connection: The SQL Server instance the task will connect to when it executes.

- Database(s): The databases the task will connect to for rebuilding. The options for this property are

- All databases

- All system databases

- All user database

- These specific databases (a list of available databases is included and one must be selected)

- Object: Determines whether the rebuild will be against tables, views, or tables and views.

- Selection: Specify the tables or indexes affected by this task. This is not available when Tables and Views is selected in the Object box.

- Default free space per page: Specifies whether the rebuild should use the current fill factor on the index.

- Change free space per page to: Allows the rebuild to specify a new fill factor when the index is rebuilt.

- Sort results in tempdb: Determines whether the rebuild uses the option ALTER INDEX SORT_IN_TEMPDB = ON.

- Keep index online while reindexing: Determines whether the rebuild uses the option ALTER INDEX ONLINE = ON. For indexes that cannot be rebuilt online, there is an additional option to determine whether to skip or rebuild the index offline.

Figure 9-23. Properties window for Rebuild Index Task

The downside to the Rebuild Index Task is similar to that of the other index task: there are no options for filtering indexes based on fragmentation or size. This issue can be a bit more significant with the rebuild task. An index rebuild creates a new copy of the index in order to do the rebuild. If this task is configured to rebuild every index in a database, the task must then rewrite every index in the database every time the task executes. For instance, if a database has 1TB of indexes in it, the Rebuild Index Task will rebuild and write 1TB of indexes into the database. Depending on the frequency of transaction log backups, this could result in some significant log file growths overnight.

Maintenance Plan Summary

Maintenance plans offer a way to get started with removing fragmentation from your indexes right away. The tasks can be configured and scheduled in a matter of minutes. The downside to these tasks is that they are extremely limited in their ability to choose the appropriate indexes to defragment. While they offer the ability to get started today, their brute-force nature requires some caution in determining when it is appropriate to use them.

An alternative approach to defragmenting databases is to use a T-SQL script to defragment the indexes intelligently. In this section, I’ll walk you through the steps necessary to defragment all the indexes in a single database. Instead of “doing everything,” as the maintenance plan tasks would, the script will pick the indexes that will best benefit from defragmentation and ignore those that would receive little or no benefit.

To accomplish the filtering, you’ll apply some defragmentation best practices that help determine whether to defragment the index and what method should be applied. The guidelines that you will use are

- Reorganize indexes with less than 30 percent fragmentation.

- Rebuild indexes with 30 percent or more fragmentation.

- Ignore indexes that have less than 1,000 pages.

- If you have Enterprise Edition, use online rebuilds when the data needs to be accessible during maintenance.

- If the clustered index is being rebuilt, rebuild all indexes in the table.

![]() Note Just because an index is fragmented doesn’t mean that it should be always be defragmented. When dealing with indexes for small tables, there isn’t always a lot of benefit in defragmenting the index. For instance, an index having fewer than eight pages will fit into one extent, and thus there is no benefit in terms of reduced I/O from defragmenting that index. Some Microsoft documentation and SQL Server experts recommend not defragmenting tables with fewer than 1,000 pages. Whether that value is appropriate for your database is dependent on your database, but it is a starting point for building index maintenance strategies.

Note Just because an index is fragmented doesn’t mean that it should be always be defragmented. When dealing with indexes for small tables, there isn’t always a lot of benefit in defragmenting the index. For instance, an index having fewer than eight pages will fit into one extent, and thus there is no benefit in terms of reduced I/O from defragmenting that index. Some Microsoft documentation and SQL Server experts recommend not defragmenting tables with fewer than 1,000 pages. Whether that value is appropriate for your database is dependent on your database, but it is a starting point for building index maintenance strategies.

There are a few steps that a defragmentation script will perform to intelligently defragment the indexes.

- Collect fragmentation data.

- Determine what indexes to defragment.

- Build the defragmentation statement.

Before starting on the fragmentation steps, you need a template for the index maintenance script. The template, shown in Listing 9-27, declares a number of variables and utilizes a CURSOR to loop through each of the indexes and perform the necessary index maintenance. The variables are set at the DECLARE statement with the thresholds defined at the start of this section. Also in the template is a table variable that is used to store intermediate results on the state of fragmentation in the database.

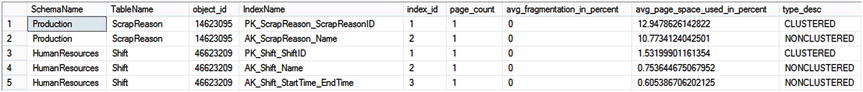

To get started, you need to collect fragmentation data on the indexes and populate them into the table variable. In the script in Listing 9-28, the DMF sys.dm_db_index_physical_stats is used with the SAMPLED option. This option is used to minimize the impact that executing the DMF will have on the database. Included in the results are the schema, table, and index names to identify the index that is being reporting on, along with the object_id and index_id. Statistical columns on the index fragmentation from the DMF are included in the columns page_count, avg_fragmentation_in_percent, and avg_page_space_used_in_percent. The last column in the results is has_LOB_column. This column is the result of a correlated subquery that determines whether any of the columns in the index are LOB types, which disallow online index rebuilds.

The results of the query in Listing 9-28 will vary for every reader. In general, the results should be similar to those in Figure 9-24, which include clustered, nonclustered, and XML indexes from the AdventureWorks2014 database.

Figure 9-24. Properties window for Rebuild Index Task

The next step in the defragmentation script is to build the list of indexes that need to be defragmented. The list of indexes, created through Listing 9-29, is used to populate the cursor. The cursor then loops through each of the indexes to perform the defragmentation. One point of interest in the script is that for clustered indexes, all the underlying indexes will be rebuilt. This isn’t a requirement when defragmenting indexes, but it is something that can be considered. When there are just a few indexes on a table, this may be a worthwhile way to manage them. As the count of indexes increases, this may become less appealing. The results from this query should look similar to those in Figure 9-25.

Figure 9-25. Indexes for rebuild/reorganize operations

The last part of the template is where the magic happens. In other words, the script in Listing 9-30 is used to construct the ALTER INDEX statement that is used to defragment the index. At this point, the level of fragmentation is checked to determine whether to issue a REBUILD or REORGANIZATION. For indexes that can support ONLINE index rebuilds, a CASE statement adds the appropriate syntax.

![]() Note One of the improvements to SQL Server 2014 Enterprise Edition is the ability to perform online index rebuilds when the index contains columns with large object (LOB) data types.

Note One of the improvements to SQL Server 2014 Enterprise Edition is the ability to perform online index rebuilds when the index contains columns with large object (LOB) data types.

Combining all these pieces into the template from the beginning of this section to create an index defragmentation script provides similar functionality to that of the maintenance plan tasks. With the ability to set the size and fragmentation levels in which the defragmentation occurs, this script removes the fragmentation from indexes that really need the work done on them versus just defragmenting every index in the database. Using Extended Events on AdventureWorks2014 to trace the output of the script reveals that the ALTER INDEX syntax for the results of the previous queries is similar to that in Listing 9-31.

As the code in this section demonstrated, using a T-SQL script can be much more complicated than just using the maintenance plan tasks. The upside to the complexity is that once the script is complete, it can be wrapped in a stored procedure and used on all your SQL Server instances. This script is meant as a first step in automating defragmentation with T-SQL scripts. It doesn’t account for partitioned tables and doesn’t check to see whether the index is being used before rebuilding or reorganizing the index. On the upside, rather than driving a truck through your databases and re-indexing everything, a scripted solution can intelligently decide how and when to defragment your indexes.

![]() Note For a complete index defragmentation solution, check out Ola Hallengren’s index maintenance scripts at http://ola.hallengren.com/Versions.html.

Note For a complete index defragmentation solution, check out Ola Hallengren’s index maintenance scripts at http://ola.hallengren.com/Versions.html.

Preventing Fragmentation

Fragmentation within an index is not always a foregone conclusion. There are some methods that can be utilized to mitigate the rate in which fragmentation occurs. When you have indexes that are often affected by fragmentation, it is advisable to investigate why the fragmentation is occurring. There a few strategies that can help mitigate fragmentation; these are fill factor, data typing, and default values.

Fill factor is an option that can be used when building or rebuilding indexes. This property is used to determine how much space per page should be left available in the index when it is first created or the next time it is rebuilt. For instance, with a fill factor of 75, about 25 percent of every data page is left empty.

If an index encounters a significant or frequent amount of fragmentation, it is worthwhile to adjust the fill factor to mitigate the fragmentation. By doing this, the activities that are causing fragmentation should be less impactful, which should reduce the frequency that the index needs to be defragmented.

By default, SQL Server creates all indexes with a default of 0. This is a recommended value for both the server and database levels. Not all indexes are created equal, and fill factor should be applied as it is needed, not as a blanket insurance policy. Also, a fill factor of 0 is the same as a fill factor of 100.

The one downside of fill factor is that leaving space available in data pages means that the index will require more data pages for all the records in the index. More pages means more I/O and possibly less utilization of the index if there are alternate indexes to select from.

A second way to avoid fragmentation is through appropriate data typing. This strategy applies to data types that can change length depending on the data that they contain. These are data types such as VARCHAR and NVARCHAR, which have lengths that can change over time.

In many cases, variable-length data types are a great fit for columns in a table. Issues arise when the volatility of the data is high and the length of the data is volatile as well. As the data changes length, there can be page splits, which lead to fragmentation. If the length volatility occurs across significant portions of the index, then there may also be significant numbers of page splits and thus fragmentation.

A good example of bad data typing comes from a previous experience with a data warehouse. The original design for one of the tables included a column with a data type of VARCHAR(10). The column was populated with dates in the format of yyyymmdd, with values similar to 20120401. As part of the import process, the date values were updated into a format of yyyy-mm-dd. When the import was moved to production and millions of rows were being processed at a time, the increase in the length of the column from eight to ten characters led to an astounding level of fragmentation because of page splits. Resolving the problem was a simple as changing the data type of the column from VARCHAR(10) to CHAR(10).

Such simple solutions can apply to many databases. It just requires a bit of investigation into why the fragmentation is occurring.

The proper application of default values may not seem to be something that can assist in preventing fragmentation, but there are some scenarios in which it can have a significant effect on fragmentation. The poster child for this type of mitigation is when databases utilize the uniqueidentifier data type.

In most cases, uniqueidentifier values are generated using the NEWID() function. This function creates a GUID that should be unique across the entire planet. This is useful for generating unique identifiers for rows but is likely scoped larger than that of your database. In many cases, the unique value probably needs to be unique for the server or just the table.

The main problem with the NEWID() function is that generating the GUID is not a sequential process. As demonstrated at the beginning of the chapter, using this function to generate values for the clustered index key can lead to severe levels of fragmentation.

An alternative to the NEWID() function is the NEWSEQUENTIALID() function. This function returns a GUID just like the other function but with a couple variations on how the values are generated. First, each GUID generated by the function on a server is sequential to the last value. The second variation is that the GUID value generated is unique only to the server that is generating it. If another SQL Server instance generates a GUID with this function for the same table, it is possible that duplicate values will be generated and the values will not be sequential since these are scoped to the server level.

With these restrictions in mind, if a table must use the uniqueidentifier data type, the NEWSEQUENTIALID() function is an excellent alternative to the NEWID() function. The values will be sequential, and the amount of fragmentation encountered will be much lower and less frequent.

In Chapter 3, I discussed the statistics collected on indexes. These statistics provide crucial information that the query optimizer uses to compile execution plans for queries. When this information is out of date or inaccurate, the database will provide suboptimal or inaccurate query plans.

For the most part, index statistics do not require much maintenance. In this section, you’ll look at the processes within SQL Server that can be used to create and update statistics. You’ll also look at how you can maintain statistics in situations where the automated processes cannot keep up with the rate of data change within an index.

Automatically Maintaining Statistics

The easiest way to build and maintain statistics in SQL Server is to just let SQL Server do it. There are three database properties that control whether SQL Server will automatically build and maintain statistics.

By default, the first two properties are enabled in databases. The last option is disabled by default. In most cases, all three of these properties should be enabled.

The first database property is AUTO_CREATE_STATISTICS. This database property directs SQL Server to automatically create single-column statistics that do not have statistics. From the perspective of indexes, this property does not have an impact. When indexes are created, a statistics object is created for the index.

The next two properties are AUTO_UPDATE_STATISTICS and AUTO_UPDATE_STATISTICS_ASYNC. At a high level, these two properties are quite similar. When an internal threshold is surpassed, SQL Server will initiate an update of the statistics object. The update occurs to keep the values within the statistics object current with the cardinality of values within the table.

The threshold for triggering a statistics update can change from table to table. The threshold is based on a couple of calculations relating to the number of rows that have changed. For an empty table, when more than 500 rows are added to the table, a statistics update will be triggered. If the table has more than 500 rows, then statistics will be updated when 500 rows plus 20 percent of the cardinality of rows have been modified. At this point, SQL Server will schedule an update to the statistics.

When the statistics update occurs, there are two modes in which it can be accomplished: synchronously and asynchronously. By default, statistics update synchronously. This means that when statistics are deemed out of date and require an update, the query optimizer will wait until after the statistics have been updated before it will compile an execution plan for the query. This is extremely useful for tables that have data that is volatile. For instance, the statistics for a table before and after a TRUNCATE TABLE would be quite different. Optionally, statistics can be built asynchronously through enabling the AUTO_UPDATE_STATISTICS_ASYNC property. This changes how the query optimizer reacts when an update statistics event is triggered. Instead of waiting for the statistics update to complete, the query optimizer will compile an execution plan based on the existing statistics and use the update statistics for future queries after the update completes. For databases with high volumes of queries and data being pushed through, this is often the preferred manner of updating statistics. Instead of occasional pauses in transactional throughput, the queries will flow through unencumbered, and plans will update as improved information is available.

If you are in an environment that disabled AUTO_UPDATE_STATISTICS in previous SQL Server versions, you should consider enabling it now with AUTO_UPDATE_STATISTICS_ASYNC. The most common reason to disable AUTO_UPDATE_STATISTICS in the past was the delay caused by the update of statistics. With the option to enable AUTO_UPDATE_STATISTICS_ASYNC, those performance concerns can likely be mitigated.

Depending on the index on a table, there will be times in which automatically updating the indexes will do more harm than good. For instance, an automatic statistics update on a large table may lead to performance issues while the statistics object is updated. In that situation, the existing statistics object may be good enough until the next maintenance window. There are a number of ways in which AUTO_UPDATE_STATISTICS can be disabled on an individual statistics object rather than across the entire database.

- Executing an sp_autostats system store procedure on the statistics object

- Using the NORECOMPUTE option on the UPDATE STATISTICS or CREATE STATISTICS statement

- Using STATISTICS_NORECOMPUTE on the CREATE INDEX statement

Each of these options can be used to disable or enable the AUTO_UPDATE_STATISTICS option on indexes. Before disabling this feature, always be sure to validate that the statistics update is truly necessary.

When considering statistics, one area where statistics are created and used a bit differently are with in-memory tables. It’s important to understand that statistics cannot be generated automatically on in-memory tables and that they always require a full scan. Add to this, natively compiled stored procedures on in-memory tables retrieve statistics only when the stored procedure is compiled or when SQL Server restarts. This means that when considering the impact of statistics on indexes, in-memory tables require additional care in timing the maintenance for the statistics.

Manually Maintaining Statistics

There will be times when the automated processes for maintaining statistics will not be good enough. This is often tied to situations where the data is changing but not enough has changed to trigger a statistics update. A good example of when this can happen is when update statements change the cardinality of the table without affecting a large number of rows. For instance, if 10 percent of a table was changed from a large number of values to a single value, then the plan for querying the data could end up being suboptimal. In situations like this, you need to be able to get in and manually update statistics. As with index fragmentation, there are two methods for manually maintaining statistics.

- Maintenance plans

- T-SQL scripts

In the next sections, you’ll look at each of these methods and walk through how they can be implemented.

Within maintenance plans, there is a task that allows statistics maintenance. This task is the Update Statistics Task, aptly named for exactly what it accomplishes. When using this task, there are a number of properties that can be configured to control its behavior (see Figure 9-26).

- Connection: The SQL Server instance the task will connect to when it executes.

- Database(s): The databases the task will connect to for rebuilding. The options for this property are

- All databases

- All system databases

- All user database

- These specific databases (a list of available databases is included and one must be selected)

- Object: Determines whether the rebuild will be against tables, views, or tables and views.

- Selection: Specify the tables or indexes affected by this task. This is not available when tables and views is selected in the Object box.

- Update: For each table, determines whether all existing statistics, column statistics only (using WITH COLUMN clause), or index statistics only (using WITH INDEX clause) are updated.

- Scan Type: A choice between a full scan of all leaf-level pages of the indexes or “Sample by,” which will scan a percentage or number of rows to build the statistics object.

Figure 9-26. Properties window for Update Statistics Task

As with the maintenance plan tasks discussed earlier in this chapter, the tasks don’t have deeper controls to help determine whether the statistics should be updated. A useful option would be to limit the statistics updates to a specified date range, which would reduce the number of statistics updated during each execution. For the most part, the lack of that option is not a deal-breaker. Statistics updates aren’t like indexes where each update requires enough space to rebuild the entire index.

Through T-SQL there are a couple alternative approaches for updating statistics: using stored procedure or using DDL statements. Each of these approaches has pros and cons. In the next sections, you’ll look at each one and why it may be a worthwhile approach.

Within the master database there is a system stored procedure named sp_updatestats that allows for updating all statistics within a database. Since it is a system stored procedure, it can be called from any database to update the statistics in the database in which it is called from.

When sp_updatestats is executed, it runs the UPDATE STATISTICS statement, described in the next section, using the ALL option. The stored procedure accepts a single parameter named resample, shown in Listing 9-32. The resample parameter accepts only the value resample. If this value is supplied, then the stored procedure uses the RESAMPLE option of UPDATE STATISTICS. Otherwise, the stored procedure uses the default sampling algorithm in SQL Server.

One benefit to using sp_updatestats is that it will update the statistics only for items in which there have been modifications to the data. The internal counter that is used to trigger automatic statistics updates is checked to make certain that only statistics that have been changed will be updated.

In situations where a statistics update is needed, the sp_updatestats is a great tool for updating statistics on just those that have the potential for being out of date since the last update. Where the Update Statistics Task is an oversized blanket that smothers the entire database, sp_updatestats is a comforter that covers in just the right places.

The other option for updating statistics is through the DDL command UPDATE STATISTICS, shown in Listing 9-33. The UPDATE STATISTICS statement allows for finely tuned statistics updates on a per-statistics basis with a number of options for how to collect and build statistics information.

The first parameter to set when using UPDATE STATISTICS is table_or_indexed_view_name. This parameter references the table in which the statistics will be updated. With the UPDATE STATISTICS command, only one table or view can have its statistics updated at a time.

The next parameter is index_or_statistics_name. This parameter is used to determine whether a single statistic, list of statistics, or all statistics on a table will be updated. To update just a single statistic, include the name of the statistic after the name of the table or view. For a list of statistics, the names of the statistics are included in a comma-separated list within parentheses. If no statistics are named, then all statistics will be considered for updating.

After the parameters are set, it is time to add applicable options to the UPDATE STATISTICS command. This is where the power and flexibility of the syntax really shines. These parameters allow the statistics to be finely tuned to the data available in them to get the right statistics for the right table and the right index.

- FULLSCAN: When the statistics object is built, all rows and pages in the table or view are scanned. For large tables, this may have an effect on performance while creating the statistics. Basically, this is the same as performing a SAMPLE 100 PERCENT operation.

- SAMPLE: The statistics object is created using either a count or a percentage sample of the rows in the table or view. When the sample rate is not selected, SQL Server will determine an appropriate sample rate based on the number of rows in the table.

- RESAMPLE: Update the statistics using the sample rate from the last time that the statistics were updated. For instance, if that last update used a FULLSCAN, then a RESAMPLE will result in a FULLSCAN as well.

- ALL | COLUMNS | INDEX: Determines whether column statistics, index statistics, or both should be updated.

- NORECOMPUTE: Disables the option for the query optimizer to request an automatic update to the statistics. This is useful for locking in statistics that shouldn’t change or are optimal with the current sample. Take caution when using this on tables that have frequent data modifications and make certain there are other mechanisms in place to update the statistics as needed.

The first three options in this list are mutually exclusive. You are able to select only one of the options. Selecting more than one of those options will generate an error.

Since UPDATE STATISTICS is a DDL command, it can easily be automated in a fashion similar to that used to defragment indexes. For brevity, a sample script is not included, but the template for the index fragmentation maintenance could be used as a starting point. As mentioned in the previous section, sp_updatestats uses UPDATE STATISTICS under the covers. This DDL command is a powerful way to update statistics as needed in your databases without doing more than is really necessary. To continue the analogy from the previous section, using UPDATE STATISTICS replaces the blanket and comforter with a handmade sweater.

Summary

In this chapter, you learned about a number of maintenance considerations that are part of indexing a table. These break down to managing the fragmentation of indexes and managing their statistics. With index fragmentation, you saw ways in which indexes can become fragmented, why it is an issue, and strategies to remove the fragmentation. These maintenance tasks are critical for making certain that SQL Server can use indexes to the best of its ability. Along with the maintenance activity, the statistics on the indexes must also be maintained. Out-of-date or inaccurate statistics can lead to execution plans that do not match the data in the table. Without proper execution plans, performance will suffer regardless of the indexes in place.