Deep learning is a field of machine learning that is a true enabler of cutting-edge achievements in the domain of artificial intelligence. The term deep implies a complex structure that is designed to handle massive datasets using intensive parallel computations (mostly by leveraging clusters of GPU-equipped machines). The term learning in this context means that feature engineering and customization of model parameters are left to the machine. In practice, the combination of these terms in the form of deep learning implies multilayered neural networks. Neural networks are heavily used for tasks like image classification, voice recognition/synthetization, time series analysis, and so forth. Neural networks tend to mimic how our brain cells work in tandem in decision-making activities. This chapter introduces you to neural networks and how to build them using PyTorch, which is an open-source Python framework (visit https://pytorch.org ) that has a familiar API to those accustomed to Numpy. Furthermore, as the last chapter in this book, it exemplifies many stages of the data science life cycle model (data preparation, feature engineering, data visualization, data analysis, and data product deployment). First, though, let’s consider the notion of intelligence as well as when, how, and why it matters.

Intelligent Machines

What makes a car intelligent? Is a self-driving car intelligent? Is a self-parking car also intelligent (albeit not that much as a fully self-driving one)? Is a car with a cruise control system intelligent at all? Where is the borderline?

Is a common fruit fly intelligent? Is an artificial, self-reproductive fruit fly the state-of-the-art of AI in 2019+?

Are there indirect signs of intelligence? (Take a look at Figure 12-1, discussed a bit later.)

You and your team on a mission to find a habitable planet

Imagine that you and your team are searching nearby solar systems for habitable planets. You approach a good candidate planet, but there is a problem. You have noticed a shovel on the beach. You are afraid to land, not because you are afraid of the shovel, but afraid of what it represents. It is a clear sign of the presence of intelligent beings, who might not welcome you. It doesn’t even matter whether the planet is colonized by intelligent living beings or by robots. What matters is the indirect sign of intelligence, which sparks some level of respect. This idea is nicely elaborated in reference [1] and we will further develop it in this chapter.

Turing test setup (source: https://commons.wikimedia.org/wiki/File:Test_de_Turing.jpg ). The interrogator (C) tries to reveal which player (A or B) is a human only by presenting questions and looking at the written answers.

The Turing test aims to establish the ultimate criteria regarding what constitutes an intelligent machine. Maybe one day we will manage to attain such level of sophistication. Until then we must focus our attention on more pragmatic objectives. Returning to our washing machine example, do we really care who or what cleaned an item of clothing? The real question is, “Can we distinguish whether clothing was washed manually by a human or washed by a washing machine?” If there is no difference, then the washing machine is a useful utensil that makes us more productive and relieve us of mundane tasks. This is the whole point of human–machine interaction. In this sense, intelligence is just a requisite score related to the complexity of tasks that we want to tackle with or without a machine. For more advanced jobs, we need to reach out to more progressed techniques, technologies, and tools. We don’t usually care whether the desired level of a machine’s intelligence is due to the stupid hardware + super-smart software, ultra-smart hardware + dumb software, or shrewd hardware and software. Sometimes, even an ordinary calculator can make us powerful enough to solve a problem on time with the required level of accuracy.

Note

In the spirit of the previous text, I only contemplate using deep neural networks when simpler, more interpretable solutions are unfeasible. Deep neural networks may seem mystical to external spectators, but internally they are roughly linear algebra and calculus. Nonetheless, the amount of research and human effort that preceded them is staggering (developing the algorithm to train deep neural networks required around 30 years of concerted hard work of many scientists). You may also want to consult references [2] and [3] for more information regarding intelligence and different ways of looking at things.

One of the fascinating and amusing achievements of neural networks is embodied in the 20Q game (for more details, read reference [4]). This product constantly learns from users as they play the game. You may also want to consult reference [5] for examples of when neural networks are a good fit as well as to learn about Keras (I will present PyTorch in this chapter).

Intelligence As Mastery of Symbols

I have pondered a lot about how to best exemplify what is happening inside a neural network. Jumping immediately to nodes, weights, layers, and activation functions seems inappropriate for me. Luckily, I managed to find a suitable problem statement on Topcoder, for a game called AToughGame , to demonstrate how abstractions interact in creating something that appears as intelligent (to follow this discussion, you first must read the specification; click the link for AToughGame at https://www.topcoder.com/blog/how-to-come-up-with-problem-ideas ). Intelligence may be treated as a mastery of producing symbols (abstractions) at multiple levels of granularity, as mentioned in reference [1]. Abstract hierarchical structures are accumulated and built upon each other until the final solution may be trivially described in terms of them.

Manual Feature Engineering

The overall state diagram of the problem

If the probability of passing some level is p, then the opposite outcome is q = 1 – p. The final state is the winning one. The goal is to calculate the expected amount of treasure collected after completing all levels. The diagram shows the initial pair of states that is going to be aggregated first.

Modeling the Aggregated State

What is the joint probability of the new state?

What is the joint value (I will use v to denote a value) of the new state?

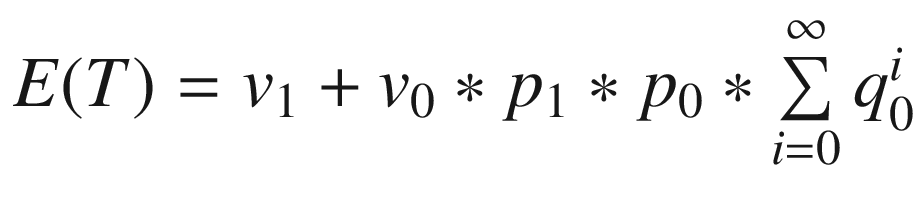

We have already answered the first question, as also shown in Figure 12-3. The new value is a sum of the last value and the expected Amount of treasure taking into account all possible ways to leave the two states. The most trivial scenario is to leave the states in succession without dying at either level. The next possibility is dying once at level 0 and afterward finishing both states in succession. The third scenario is to die twice in succession at level 0 and afterward finish both states in succession. This pattern continues indefinitely. We may describe all these scenarios by  , where E(T) is the expected amount of treasure.

, where E(T) is the expected amount of treasure.

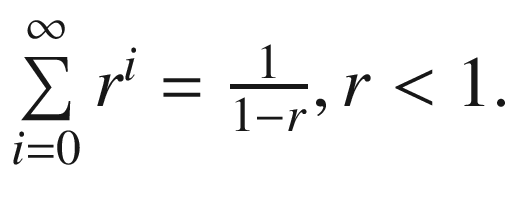

The last expression already encompasses two powerful abstractions. We should synthesize them now. Don’t forget that there are many more possibilities to finish these two levels, which means that manipulating raw probabilities will soon become unwieldy. The first abstraction comes from algebra and provides a closed from solution to the summation. This is called a geometric sum  In our case, the parameter r will always be a non-negative real number. It is important to name abstractions, and this sum will be denoted as series1(r). Our second abstraction is γ = p0 ∗ series1(q0), with a meaning of “the probability of leaving level 0.” Notice that we can leave this level without dying, or by dying once, twice, and so on.

In our case, the parameter r will always be a non-negative real number. It is important to name abstractions, and this sum will be denoted as series1(r). Our second abstraction is γ = p0 ∗ series1(q0), with a meaning of “the probability of leaving level 0.” Notice that we can leave this level without dying, or by dying once, twice, and so on.

Thanks to the previously introduced abstractions, we may formulate the joint probability for the preceding use case in a succinct fashion as series1(γ ∗ q1) ∗ q0 ∗ γ ∗ p1. Observe how γ is nested inside series1.

In this case, after each iteration, the amount of treasure left at level 1 is equal to v0 ∗ m, where m is the number of iterations. To describe this case effectively, we need a new abstraction, which is a derivative of the geometric sum:  . We will name this abstraction as series2(r). Therefore, the joint probability is given by series2(p0 ∗ q1) ∗ p0 ∗ p1.

. We will name this abstraction as series2(r). Therefore, the joint probability is given by series2(p0 ∗ q1) ∗ p0 ∗ p1.

One final remark is that the last use case can also be associated with the second one. In other words, it is possible to accumulate wealth and then, in the last round, lose everything by leaving level 0 after dying at least once.

Tying All Pieces Together

The whole solution is depicted in Listing 12-1 after refactoring the formulas for each use case. The combine function receives as inputs the raw probabilities and values for two consecutive levels and returns the aggregated probability and expected value of treasure. In between, it builds abstractions from raw data. This is very similar to how neural networks start from raw input nodes, create a hierarchy of abstractions via hidden layers, and finally output the result.

AToughGame.py Module That Implements the AToughGame Topcoder Problem

The class name and the sole method’s signature are part of the requirements specification (see Exercise 12-1). The internal details, despite all abstractions being manually created, would be unfathomable to someone who hasn’t read the preceding description. Therefore, you must take care to accompany your condensed code with a proper design document.

By looking at the preceding code, you might wonder where the intelligence is in these nine lines of code (including the boilerplate stuff and a blank line). Maybe you would marvel at its ingenuity by running it and seeing how it spits out the solution in a couple of nanoseconds. Can you imagine that it has all the necessary knowledge to take into account all possible ways the player could finish the game? This program nicely illustrates the state of affairs in AI before the 1990s. Software solutions were equipped beforehand will all the necessary heuristics and rules.

Now, imagine a software wizard that is capable of deciphering all the necessary abstractions to deal with a problem. This is exactly where neural networks shine. You simply define the architecture of the solution and leave to the network the hard work of producing higher-level symbols. Upper layers reuse abstractions from lower ones. More layers more sophisticated features.

Machine-Based Feature Engineering

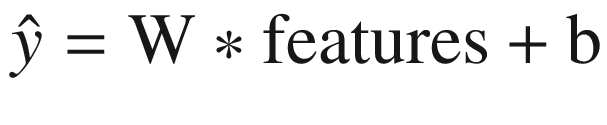

We will now build two versions of a neural network to demonstrate automatic feature engineering and how such a network works. The first version will be built from scratch and the second one will use PyTorch. You have already seen linear regression in Chapter 7 (about machine learning), where the output is estimated as  (W is the weight of the features and b is the bias term). The features may contain nonlinear components, though. It is possible to associate an activation function with this output; by doing this, you may turn linear regression into logistic regression for classification purposes. An activation function can even be a trivial step function that outputs 1 if

(W is the weight of the features and b is the bias term). The features may contain nonlinear components, though. It is possible to associate an activation function with this output; by doing this, you may turn linear regression into logistic regression for classification purposes. An activation function can even be a trivial step function that outputs 1 if  and 0 otherwise. As a matter of fact, any function that has some threshold to discern positive and negative cases can serve as an activation function.

and 0 otherwise. As a matter of fact, any function that has some threshold to discern positive and negative cases can serve as an activation function.

Figure 12-4 shows a general structure of a neural network with one input layer, one hidden layer, and one output layer. The input layer has as many nodes as there are different features (in this case two) plus an extra constant to represent the bias. The hidden layer is configurable from the viewpoint of number of nodes. The output layer has as many nodes as there are targets (in this case only one).

The shadowed nodes in the hidden layer are composed of an aggregator and an activation function. The node’s aggregator calculates the product of the matching input weights and input, while the activation function transforms the value from an aggregator. The final output node may also apply an activation function, when you are doing classification instead of regression. In Figure 12-4, the activation function is the sigmoid function, whose formula is also shown there (its derivative with respect to its input, which is very neat, as you can see).

The architecture of a simple neural network for regression task with one hidden layer

The error is the difference between the true label and the predicted value, represented as  . A set of partial derivatives of this error function with respect to weights gives us the desired gradients for updating weights; this is the crux of the gradient descent method. The amount to update the weight

. A set of partial derivatives of this error function with respect to weights gives us the desired gradients for updating weights; this is the crux of the gradient descent method. The amount to update the weight  is

is  , which is an application of the chain rule in calculating derivatives. The previous expression would have an additional term had the final output also applied an activation function. At any rate, these partial derivatives are only feasible if the activation function (or functions; you may use different functions in each layer) is continuous and differentiable. Furthermore, to avoid the vanishing gradient issue in deep neural networks (when the product of all partial derivates becomes tiny), the activation function should spread out the output over a larger range. In this respect, the sigmoid function isn’t quite good. This is why you will see hyperbolic tangent (tanh) or rectified linear unit (ReLU) in action. The latter is the default choice for hidden layers.

, which is an application of the chain rule in calculating derivatives. The previous expression would have an additional term had the final output also applied an activation function. At any rate, these partial derivatives are only feasible if the activation function (or functions; you may use different functions in each layer) is continuous and differentiable. Furthermore, to avoid the vanishing gradient issue in deep neural networks (when the product of all partial derivates becomes tiny), the activation function should spread out the output over a larger range. In this respect, the sigmoid function isn’t quite good. This is why you will see hyperbolic tangent (tanh) or rectified linear unit (ReLU) in action. The latter is the default choice for hidden layers.

To avoid changing the weights abruptly, there is a hyperparameter called learning rate. Every gradient is multiplied by this quantity, so that the process moves cautiously toward a minimum (most often a good local minima).

The power of neural networks comes from the nonlinearity of outputs from hidden nodes. In deep neural networks, as each layer reuses outputs from a previous one, complex features may be created out of raw input. The beauty is that you don’t need to worry about how the network will describe the problem in succinct fashion. Of course, the downside is that you will have a hard time interpreting the network’s decision-making procedure. There is also an amazing project for producing visual effects from intermediary features created by deep neural networks (visit https://github.com/google/deepdream ).

Implementation from Scratch

Simple Neural Network Implementation for Regression Task As Shown in Figure 12-4 (Without Biases)

There are two public methods, train and run. The private __update_weights method doesn’t compute the average of the delta weights but assumes that the provided learning rate includes this factor. This is a usual practice, as this parameter is anyhow an arbitrary number that must be tuned for every problem separately.

The weights are initialized to small random values using normal distribution with a standard deviation of  , where n is the number of input nodes into the matching layer. This is known as Xavier initialization, which you may read more about at

http://bit.ly/xavier-init

. This is another way to mitigate the vanishing gradient issue.

, where n is the number of input nodes into the matching layer. This is known as Xavier initialization, which you may read more about at

http://bit.ly/xavier-init

. This is another way to mitigate the vanishing gradient issue.

- 1.

Issue git clone https://github.com/udacity/deep-learning-v2-pytorch.git to clone the course repository.

- 2.

Go into the project-bikesharing folder and copy there the simple_network1.py file from this chapter’s source code.

- 3.

Delete the my_answers.py file and rename simple_network1.py to my_answers.py.

- 4.

Open Predicting_bike_sharing_data.ipynb in your Jupyter notebook instance and follow the narrative.

All unit tests should pass, and you should get a line plot for the test data as shown in Figure 12-5. The model predicts the data quite well, except for the last week of the year. You can see that in the period of 22 of December until the end of the year the prediction is higher than the actual data. The network had not been properly trained to recognize this period, when most people take vacation around Christmas. If you look carefully in the notebook, you will see that the test data is not covering a typical period, and this critical period was taken away during training. Furthermore, workingday as a feature was also removed from the data.

Implementation with PyTorch

The actual and predicted outputs for the test data

Version of Our Network with PyTorch Using GPUs, If Available The hyperparameters were also altered (look in the simple_network2.py module).

- 1.

Set gradients to zero (the same as we did in Listing 12-2).

- 2.

Make a forward pass through the network.

- 3.

Calculate the error.

- 4.

Backpropagate the error to find the proper deltas for updating the weights.

- 5.

Update the weights.

Updated Script Using PyTorch DataLoader Class

The efficiency of the training process. You should always monitor the validation loss curve. If it starts to rise, then your network is overfitting. If the training loss doesn’t drop, then you are underfitting.

Exercise 12-1. Custom Parallelization

In Chapter 11 we applied Dask to perform operations in parallel over an array. There are situations where this form of concurrency isn’t viable. Dask also provides an option to parallelize custom algorithms through the dask.delayed interface.

The expectedGain function returns the expected amount of treasure at the end of a game. Suppose that you want to simply return the total probability of passing all levels in succession. This is currently returned as the first element in the final tuple. Create a new method totalProbability that calculates just this quantity in parallel.

Take a look at the example code about tree summation (reduction) at https://examples.dask.org/delayed.html . Instead of using the add operation from the Dask tutorial, you would use multiplication to merge nodes. For this simple case of tree reduction, you don’t need a custom procedure, but the aim is to try out the dask.delayed interface and monitor in the Dask dashboard how computations are carried out.

Exercise 12-2. Deployment into Production

PyTorch (starting from version 1.0) offers the capability to convert your Python model into an intermediary format that could be utilized from a C++ environment. In this manner, you can develop your solution in Python and later deploy it as a C++ application. This approach addresses the performance requirements associated with production setup.

Consult the tutorial about loading your PyTorch model in C++ at https://pytorch.org/tutorials/advanced/cpp_export.html . In our case, because the forward implementation is unified (there is no conditional logic based on input), you can transform the PyTorch model to Torch Script via tracing.

Summary

Using PyTorch, or some other framework for neural networks, is essential to cope with the inherent complexities of deep neural networks. There are lots of additional options to optimize the training process, which are readily available in PyTorch: dropout, batch normalization, various advanced optimizers, different activation functions, and so on. PyTorch also allows you to persist your network into external storage for later use. You might want to save your model each time you manage to reduce the validation loss. At the end, you can select the best-performing variant.

PyTorch is bundled with two major extensions: pytorchvision, which is useful for image processing, and pytorchtext, which is useful for handling text (such as doing sentiment analysis). You can also reuse publicly available trained models to realize the concept of transfer learning. For example, there are models trained on images from ImageNet ( http://www.image-net.org ) with cool features for image classification.

There is an interesting initiative called PyTorch Hub ( https://pytorch.org/hub ) for efficiently sharing pretrained models, thus realizing the vision of transfer learning. Neural networks are also a very popular option at the IoT edge. For this you need an ultra-light prepacked engine. One example is Intel’s Neural Compute Stick ( http://bit.ly/neural-stick ). All in all, there are very innovative and interesting approaches for each use case and domain.

References

- 1.

Douglas R. Hofstadter, Gödel, Escher, Bach: An Eternal Golden Braid, Anniversary Edition, Basic Books, 1999.

- 2.

Charles Petzold, Code: The Hidden Language of Computer Hardware and Software, Microsoft Press, 2000.

- 3.

Garry Kasparov, “Don’t Fear Intelligent Machines. Work with Them,” TED2017, https://www.ted.com/talks/garry_kasparov_don_t_fear_intelligent_machines_work_with_them , April 2017.

- 4.

Karen Schrock, “Twenty Questions, Ten Million Synapses,” Scienceline, https://scienceline.org/2006/07/tech-schrock-20q , July 28, 2006.

- 5.

Jojo Moolayil, Learn Keras for Deep Neural Networks: A Fast-Track Approach to Modern Deep Learning with Python, Apress, 2018.