Chapter 3. The Future Has Been Here Before

Conversations about how the IoT will “change everything” span many application domains. Three sexy ones in particular are:

- Smart medicine: putting IT inside medical devices
- Smart grid: putting IT everywhere in the power grid
- Smart cars: putting IT everywhere inside cars, even replacing the driver

Discussions of the impact of the IoT (of making everything smart) usually go in one of two directions. In one, reminiscent of Pollyanna or Candide’s Pangloss, the vision is impossibly rosy: the IoT will make everything wonderful. The other, reminiscent of Cassandra from Greek mythology, foretells a dystopia (too often what listeners hear when security people speak). Despite their differences, both visions share a common thread: this future is new.

The IoT may be new, but interconnecting IT with physical reality (albeit on a less massive scale) is not. This is not the first time that information technology has taken a quantum jump in its integration with non-IT aspects of life, even in the domains the IoT is now penetrating. Nor is it the first time that Cassandras and Pollyannas have spoken.

This chapter revisits some older incidents, not widely known outside the software engineering community, where interconnection of complex IT with physical reality had interesting results. The discussion follows the sexy application domains just listed:

- For smart medicine, what happened in the 1980s with the simpler Therac-25, a computer-controlled radiation therapy device
- For the smart grid, what happened in the 2003 East Coast blackout with the simpler cyber infrastructure of the last decade’s power grid
- For smart cars, what happened 30 years ago when the Airbus A320 brought computer-based control to passenger aircraft

In each of these cases, it’s safe to say there were unexpected consequences from the IT–physical connection. Sometimes Cassandras spoke and were wrong; sometimes they didn’t but should have. The past is prologue; in each case, the history sheds some light on current IoT efforts in these spaces.

Bug Background

When talking about some consequences of layering IT on top of non-IT technology, it helps to discuss a few types of bugs that can surface in large software systems (as programs glued to these internetworked things tend to be). Experienced programmers will likely recognize these patterns.

Integer Overflow

Software design, in some sense, is a form of translation: expressing higher-level concepts of workflow and physical processes and what have you in terms of precise instructions carried out by a computing machine. As with any type of translation (or mathematical mapping from one set to another), the higher-level idea and its machine representation are not necessarily identical.1 As software often deals with basic manipulation of numbers, one manifestation of this translation error arises from questions such as “How big is an integer?” In the world of mathematics, an integer is an integer, and there are infinitely many of them. However, computing machines typically represent an integer as a binary number and reserve a fixed number of bits (binary digits) to represent it.

For example, suppose the variable x is intended to represent a nonnegative integer. In the programmer’s mental model of what he or she is doing, x can have an infinite number of values, and it’s always going to be true that x + 1 will be greater than x.

But if the computing machine represents x as an 8-bit value, then there are only $2^8 = 256$ possible values. An infinite number of things cannot fit into only 256 slots. In the representation machines naturally use, if x = 255 and the program adds 1, then x = 0, surprisingly (unless one is familiar with modular arithmetic). The rule that adding 1 gives a larger number no longer holds.
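Python's own integers never overflow, so the minimal sketch below models an 8-bit register explicitly by reducing every result modulo 2^8. The helper name `add_u8` is hypothetical. The effect matches storing the result back into an unsigned 8-bit variable in C:

```python
BITS = 8
MODULUS = 2 ** BITS  # 256 representable values: 0 through 255


def add_u8(x: int, y: int) -> int:
    """Add two values the way an unsigned 8-bit register would: modulo 256."""
    return (x + y) % MODULUS


x = 255
y = add_u8(x, 1)
print(y)      # 0: the value wrapped around
print(y > x)  # False: "x + 1 > x" no longer holds
```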

Race Conditions

Another family of bugs arises when software consists of two or more threads of execution running at the same time (“concurrently” or “in parallel,” depending on the mechanism). Actions by thread A may depend on actions by thread B in ways obvious or subtle, and if a particular run of the system fails to respect these dependencies, things can go dramatically wrong.

As an extreme noncomputing example, suppose the job of thread Alice is to drive a commuter train and the job of thread Bob is to take the train to work. Although Alice and Bob may be two separate actors, coordination is critical: if Bob steps off the station platform a few seconds too early—just as the train is arriving—the outcome will not be good. (The correctness of the execution thus depends on a “race” between the two threads.)

In computing, the deadly interaction typically involves inadvertent concurrent use of the same data structure, and is often much more subtle. What’s even more vexing is that if the programmer does not see and account for the dependency at design time, it can be very hard for testing to reveal it. Imagine that Alice’s thread of execution is a large red deck of numbered playing cards, and Bob’s is a large green deck. What actually happens in any particular execution of the system is a shuffling together of these two decks: the red and green cards each stay in order, but their interleaving is arbitrary. If the bad action happens only if Bob’s card #475 gets placed between Alice’s #242 and #243, then one might run the system many times and never see that happen—and when it does happen, one might rerun the system and see the buggy behavior disappear.
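The card-deck picture can be made concrete with a toy model. It assumes each of two threads performs an unsynchronized increment of a shared counter as two separate steps: read the value, then write back the value plus one. The helper names are hypothetical. The sketch enumerates every interleaving that keeps each thread's own steps in order:

```python
from itertools import combinations


def interleavings(a, b):
    """Yield every merge of a and b that preserves each sequence's own order."""
    n = len(a) + len(b)
    for a_positions in combinations(range(n), len(a)):
        a_iter, b_iter = iter(a), iter(b)
        yield [next(a_iter) if i in a_positions else next(b_iter) for i in range(n)]


def run(schedule):
    """Execute one schedule of two unsynchronized 'x = x + 1' operations."""
    shared = 0
    local = {}  # each thread's private copy (think: a CPU register)
    for thread, step in schedule:
        if step == "read":
            local[thread] = shared
        else:  # "write"
            shared = local[thread] + 1
    return shared


alice = [("Alice", "read"), ("Alice", "write")]
bob = [("Bob", "read"), ("Bob", "write")]
outcomes = [run(s) for s in interleavings(alice, bob)]
print(outcomes)  # [2, 1, 1, 1, 1, 2]
```

Only the two schedules in which one thread finishes before the other begins give the intended 2. The other four silently lose an update. For threads of m and n steps there are (m + n)! / (m! n!) such schedules, so with realistic step counts a test run samples only a vanishing fraction of them.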

Memory Corruption

Computers store data and code in numbered memory slots and manipulate an item by specifying the index number (address) of its slot. Memory corruption bugs occur when the program somehow gets the correspondence of address to slot wrong, and writes the wrong thing in the wrong place. For a noncomputing metaphor, think of the letter in Hamlet instructing that the bearer of the letter should be killed; this plan has unexpected consequences if the wrong person carries the letter.
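A minimal sketch of the idea follows. It assumes a toy memory modeled as a Python list, with a buffer laid out immediately before another variable; the layout and names are hypothetical. An off-by-one loop writes one slot past the buffer and silently changes a value the program believes is unrelated:

```python
# Toy flat memory (hypothetical layout): slots 0-3 are a 4-element buffer and
# slot 4 is an unrelated setting that happens to be stored right after it.
BUFFER_START = 0
BUFFER_LEN = 4
DOSE_ADDR = 4

memory = [0] * 5
memory[DOSE_ADDR] = 200  # the value the rest of the program relies on


def write_buffer(index: int, value: int) -> None:
    """Store into the buffer by address arithmetic, with no bounds check."""
    memory[BUFFER_START + index] = value


def write_buffer_checked(index: int, value: int) -> None:
    """The same store, but refusing any index outside the buffer."""
    if not 0 <= index < BUFFER_LEN:
        raise IndexError(f"buffer index {index} out of range")
    memory[BUFFER_START + index] = value


# Off-by-one: range(BUFFER_LEN + 1) touches one slot past the end of the buffer.
for i in range(BUFFER_LEN + 1):
    write_buffer(i, 0)

print(memory[DOSE_ADDR])  # 0: the setting was silently overwritten
```

C performs no such check at run time, so the unchecked version is the realistic one. The checked variant turns silent corruption into a loud error.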

Impossible Scenarios

Again, designing and reasoning about computer system behavior involves a semiotic mapping between the reality of what a system actually does and the mental model the programmer or analyst has of what it does. In many situations (including the bug scenarios just described), things can get lost in translation; behaviors can happen in the actual system that are impossible in the mental model. This phenomenon can make it very hard to find and fix the problems.

The future has been here before—and all of these bugs showed up.

Smart Health IT

If the IoT is defined as “layering IT on top of previously noncomputerized things,” one area receiving much attention is smart health. Healthcare requires measuring and sensing and probing and manipulating what is quite literally a physical system: the human body. Adding a cyber component to this creates some interesting potential. For a Panglossian voice, consider this quote from Miller’s textbook [12]:

It’s a given that the Internet of Things (IoT) is going to change healthcare as we know it. By connecting together all the various medical devices in use (or soon to be introduced), healthcare gets a lot smarter real fast…. It’s a medical dream come true.

Smart health may be an exciting new avenue in the 2010s, but this future has also been here before. As a cautionary tale, we will revisit the 1980s and the Therac-25, a computer-controlled radiation therapy machine.

The Therac-25

One of the treatments for cancer is radiation: bombarding the malignant cells with focused beams of high-energy particles. The Therac-25, from Atomic Energy of Canada Limited (AECL), contained a 25-million-electron-volt (25 MeV) accelerator and could generate either electron or X-ray photon beams. The latter required first generating a much more powerful electron beam and then converting it. These processes, particularly the conversion to X-rays, required that various components be physically positioned in the beam’s path; a primary element of this arrangement was a rotating turntable.

The Therac-25 was a successor to earlier devices and adapted both design elements and software from them. However, the Therac-25 improved on its predecessors by eliminating many hardware interlocks that could prevent the beams and objects and such from being put into a dangerous arrangement (such as one that would send too much radiation to the patient, or send it to the wrong part of the patient’s body).

Quite literally a “distributed system,” the Therac-25 filled a room and was operated from a console outside the room (see Figure 3-1).

Figure 3-1. Typical Therac-25 setup, from [8]. (Used with permission.)

The Sad Story

Unfortunately, the Therac-25 is remembered for a series of incidents in the mid-1980s, when things started going mysteriously wrong. The cases followed a similar pattern: the patient would complain of unusual or unpleasant physical experiences during treatment, such as tingling or burning. In many of the incidents, the operator needed to work with the keyboard to handle some kind of error message.

Outcomes were grim. In Georgia, the patient lost a breast; in Ontario, the patient then required a hip replacement; in Texas and Washington, three patients died. The device was occasionally giving patients massively incorrect radiation doses: Leveson and Turner [8] estimate them at two orders of magnitude higher than intended. (Subsequent investigation also suggested that many unreported incidents of underdosing may have taken place.)

Unfortunately, this behavior was impossible in the mental models of the machine operators, of the system designers, and even of some doctors treating the patients (who suspected radiation burns but could not figure out the causes). This apparent impossibility hampered efforts to establish that the machine was misbehaving at all, and to discover the series of bugs causing these dangerous malfunctions.

The primary bugs were instances of some of the patterns described in “Bug Background”:

- At least one race condition existed in the operator I/O and machine configuration code, with the result that if exactly the wrong sequence of events happened, the machine would enter a dangerous configuration.
- A critical variable used to indicate whether one of the key components of the beam positioning needed to be tested was implemented as an 8-bit integer and modified by incrementing. As a result, integer overflow could occur, and a nonzero value (256) was represented as zero, causing the machine to conclude it could skip the test. The Washington death apparently resulted from this overflow occurring at exactly the wrong time.
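The counter bug can be sketched under stated assumptions:

- a setup loop increments a flag on every pass;
- the flag is stored in 8 bits;
- the flag is read as "run the test" whenever it is nonzero.

The names and loop are hypothetical, not the Therac-25's actual code:

```python
def increment_u8(value: int) -> int:
    """Increment an unsigned 8-bit variable; 255 + 1 wraps around to 0."""
    return (value + 1) % 256


def needs_check(flag: int) -> bool:
    """Convention from the text: any nonzero value means 'run the test'."""
    return flag != 0


def skipped_passes(update, passes: int = 600) -> list:
    """Run the setup loop and list the passes on which the test was skipped."""
    flag = 0
    skipped = []
    for n in range(1, passes):
        flag = update(flag)
        if not needs_check(flag):
            skipped.append(n)
    return skipped


print(skipped_passes(increment_u8))     # [256, 512]: wrapped to zero, test skipped
print(skipped_passes(lambda _flag: 1))  # []: a fixed nonzero value never reads as zero
```

The second call models one straightforward remedy: setting the flag to a fixed nonzero value instead of incrementing it, so it can never wrap to zero.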

The fact that exactly the wrong sequence of things had to happen to trigger these errors further complicated detection and diagnosis—repeating the sequence of events reported in an accident would not necessarily trigger the error again.

Today

The physical consequences of cyber problems in the Therac-25 provide grist for some software engineering classes, stressing the importance of principled design and testing for such software systems. (The Leveson-Turner paper [8] provides the seminal and exhaustive treatment of the topic.) Unfortunately, even when the focus is reduced from all of smart health to just computerized radiation therapy, cyber issues have still caused problems.

In Evanston, Illinois, in 2009, a linear accelerator made by Varian was used to provide radiation treatment to patients. As with the Therac-25, physical alignment of components was critical to ensuring the beam hit the intended target rather than healthy tissue. However, the treatment system also required information flow across several computers. At Evanston, “medical personnel had to load patient information onto a USB flash drive and walk it from one computer to another” [3] and had to engage in additional cyber workarounds to get the machine to work with particular focusing components. One hypothesis is that information lost or changed in these translations caused some patients to have healthy brain tissue irradiated, leaving one 50-year-old woman comatose. Smartening healthcare with IT created more channels where things could go wrong.

At the Philadelphia Veterans Affairs Hospital, clinicians treated patients for prostate cancer with brachytherapy, implanting radioactive “seeds” in the cancerous prostates and using a computerized device to make sure the seeds ended up where they were supposed to. However, “for a year, starting in November 2006, the computer workstation with the software used to calculate the post-implant dosages was unplugged from the hospital’s network” [11].

As subsequent news coverage and lawsuits attest (e.g., [2]), this lack of closing the loop led to seeds being placed in the wrong places (and sometimes the wrong organs, such as bladders or rectums). Patient cancers received radiation doses too small to be effective, while healthy organs received damaging doses, with serious consequences. Again, more IT created more failure modes.

Past and Future

What happened when the smart health future was here before?

Layering IT on top of radiation therapy led to rather bad consequences. The way we built software led to bugs; the way we tied it to physical reality led to the potential of bad outcomes; the way we dispensed with hardware double-checks on the software behavior made that potential a reality. As this future arrives again, we should learn from this past.

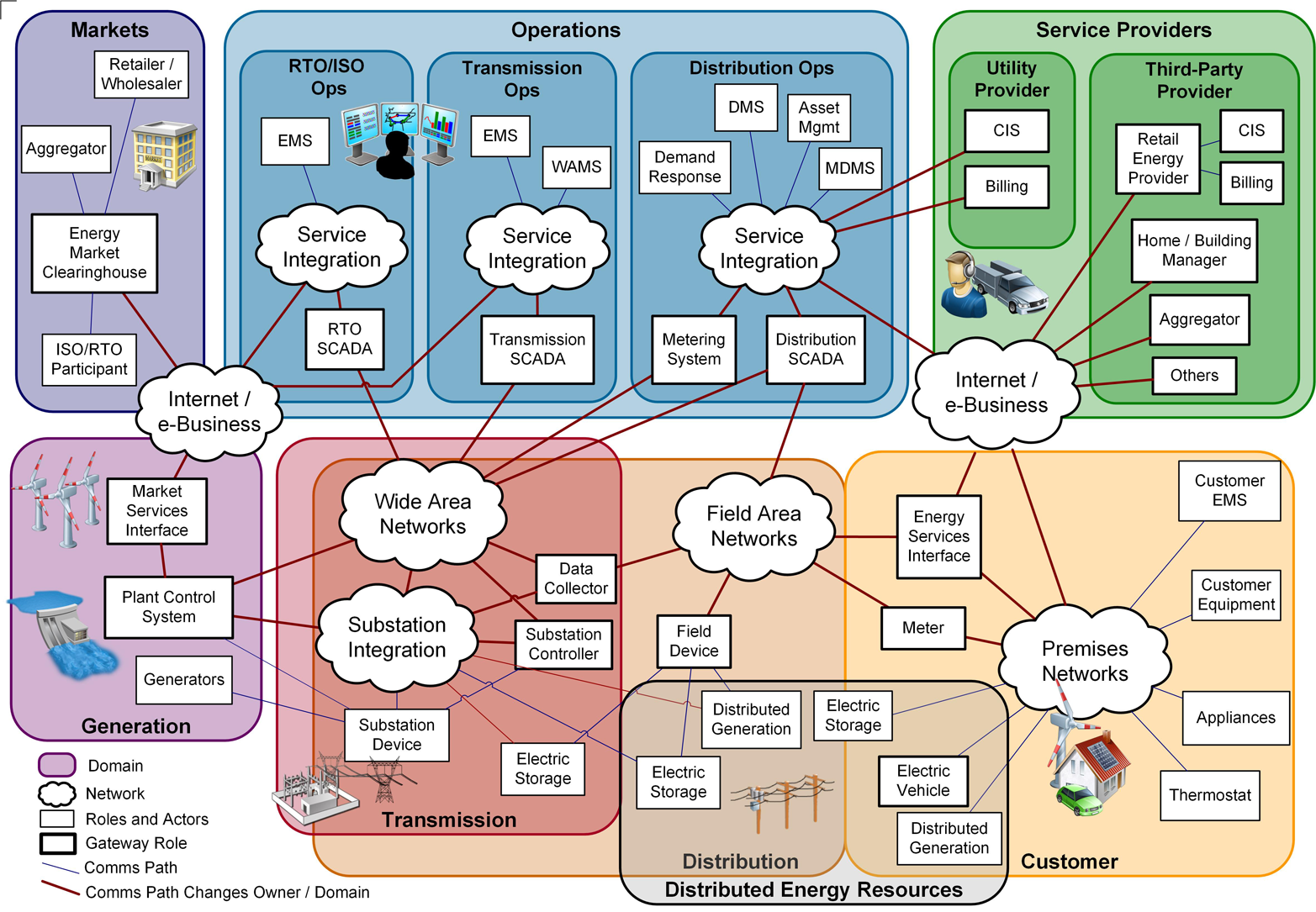

Smart Grid

Another IoT application domain receiving much attention is the smart grid, layering internetworked computing devices everywhere throughout the massive cyber-physical system that is the electric power grid (Figure 3-2). Some speakers limit the term to just the small portion of the power grid that lies within a consumer’s home, but colleagues of mine who are long-time veterans of power engineering actually object to either usage. To them, the electric grid has been “smart” for many decades; the relatively recent push to add even more computational devices is only making it “smarter.” Again, to some extent, this future has been here before.

Figure 3-2. The envisioned “smart grid” distributes computational devices everywhere throughout the electricity generation, transmission, and distribution processes. (Source: NIST Framework and Roadmap for Smart Grid Interoperability Standards, Release 3.0.)

The Balancing Act

Electric energy cannot be stored efficiently in large quantities. As a consequence, the giant distributed system of generation, consumption, and routing in between must be kept in near real-time balance—a fact which has long necessitated the use of a layer of cyber communication and control. Making this even more complicated is the subtle interaction between the elements themselves, and between the elements and the greater environment. For example, increased consumption of electricity for air conditioning on a hot day may cause the transmission line carrying this power to heat up and sag too close to trees, and thus require dynamic reconfiguration to send some of this power via an alternate route.

Finally, in the US and many other places, there is no longer a monolithic “Power Company.” As a consequence of various economic and government forces, the power grid in fact comprises many players responsible for:

- Generating electricity
- Transmitting electricity (i.e., “long-haul”)
- Distributing electricity to consumers
- Buying and selling futures
- Coordinating regional and national-level power

These players may compete in the same marketplaces while trying to cooperate to keep power flowing—a situation some term “coopetition.”

One might imagine the “power grid” as a giant network of pipes moving water around. Although perhaps natural, this metaphor can be misleading. One can and does store water in tanks and reservoirs. Furthermore, the movement of an incompressible fluid in a pipe is far more straightforward to mathematically analyze than electric power flow, which requires complex analysis.2 As a consequence, to determine the state of the grid, we cannot just measure flow in a few places; rather, we need to first measure various aspects of phase angle and such, and then use computers to solve rather hairy differential equations.
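Phase angle matters, and the complex numbers of footnote 2 come in, even in the standard textbook simplification: two buses joined by a single lossless line with reactance X. The sketch below assumes that model, with per-unit values chosen only for illustration; the function name is hypothetical. Even when the voltage magnitudes at both ends are equal, real power flows whenever the voltage phasors differ in angle, following P = |V1||V2| sin(δ1 − δ2) / X:

```python
import cmath
import math


def line_flow(v1: complex, v2: complex, reactance: float) -> complex:
    """Complex power S = P + jQ sent from bus 1 into a lossless line.

    Voltages are phasors (complex numbers) in per-unit. The line current is
    I = (V1 - V2) / (jX), and the complex power is S = V1 * conj(I).
    """
    current = (v1 - v2) / (1j * reactance)
    return v1 * current.conjugate()


# Equal voltage magnitudes (1.0 per-unit); bus 1 leads bus 2 by 30 degrees.
delta = math.radians(30.0)
s = line_flow(cmath.rect(1.0, delta), cmath.rect(1.0, 0.0), reactance=0.5)

print(round(s.real, 6))                 # 1.0: real power P from the phasors
print(round(math.sin(delta) / 0.5, 6))  # 1.0: closed form |V1||V2| sin(delta) / X
```

Measuring voltage magnitudes alone would miss this flow entirely, since both ends read 1.0. The example is a single steady-state line, whereas a real grid couples thousands of such equations.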

The principal motivation for moving from the current grid (already equipped with cyber sensing and control) to the smart grid is to make this balancing problem easier by giving the system more places to measure and compute and switch and tweak.

Lights Out in 2003

Interconnection of power across continents and layering cyber sensing and control on top of it can add much stability to these systems, as more opportunities for generation, transmission, and consumption can make it easier to keep the whole system balanced.

But unfortunately, cyber failures can translate to physical failures, and the interconnection can cause physical failures to cascade.

A dramatic example of such failure occurred in the northeastern US and southeastern Canada in August 2003 (Figure 3-3). On August 14, a hot day with much power demand due to air conditioning, a power transmission line in Ohio touched a tree and automatically shut off. Ordinarily, grid operators, using standard cyber measurement and control, would have taken appropriate action and kept the system balanced and the lights on. However, for almost two hours that day, the alarm that should have alerted the local control room operators about this line failure itself failed. The humans in the loop, trusted to take correct action based on the information their systems gave them, were subverted because this information did not match reality. Cascading failure followed—along with a flurry of communication as operators throughout the region tried to assess, diagnose, and fix the problem. The end result was an international blackout that, according to the US Department of Energy, affected over 50 million people and cost in excess of $4 billion [10]. According to a later study by epidemiologists [5], the blackout also led to over 100 deaths—all from a tree and a failed alarm.

Figure 3-3. The 2003 incident shut down power in the eastern regions of the US and Canada, with the exception of the seaboard. (Source: Air Force Weather Agency.)

Root Causes

During and after the blackout, much speculation occurred as to its causes. As postmortem analysis unraveled the cascade and identified the failed alarms in the Ohio control room, speculation then focused on why these computers failed to work. Had they been inadvertently infected with run-of-the-mill malware? Or had there been a malicious, targeted cyber attack?

GE Energy, the developer of the software, analyzed the million lines of C and C++ code and eventually discovered a race condition (recall “Bug Background”) that, under exactly the wrong set of conditions, would cause the alarms to fail. Poulsen [15] quotes GE’s Mike Unum:

There was a couple of processes that were in contention for a common data structure, and through a software coding error in one of the application processes, they were both able to get write access to a data structure at the same time…and that corruption led to the alarm event application getting into an infinite loop and spinning.

As noted earlier, concurrent access to shared data structures is a common source of race conditions. As also noted, exhaustive testing may not be sufficiently exhaustive to generate exactly the right wrong scenario. Poulsen observes that “the bug had a window of opportunity measured in milliseconds” and further quotes Unum:

We had in excess of three million online operational hours in which nothing had ever exercised that bug. I’m not sure that more testing would have revealed it.

Today

In the tales of radiation therapy recounted earlier, the sad history was followed by a sad coda of more cyber bugs causing more of the same physical consequences.

With this aspect of the smart grid, such a sad coda does not exist (yet). As enticing as it might be for security analysts to identify in the grid what one of my students termed a “Byzantine butterfly” (a tiny but pathological cyber action causing a widespread negative outcome), the closest analysts have come is a Byzantine horde—that is to say, exactly the wrong thing happening in exactly the wrong nine different places at the same time [16].

Past and Future

What happened when the future smart grid was here before?

Even in apparently well-engineered and well-tested software, a race condition was nonetheless hiding, and when the right (that is, wrong) circumstances triggered it, it led to cascading failure. As this future arrives again, we should learn from this past: continue to test, but use synchronization more aggressively to prevent race conditions in the first place, and realize that just because sensing and control is cyber-enhanced does not mean it is sufficient. Indeed, in its analysis of the 2003 blackout, the IEEE Power Engineering Society recommended improving “understanding of the system” and “situational awareness” [1].
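The sketch below is a minimal illustration of the advice to use synchronization more aggressively. Threads in a single Python process stand in for the separate processes Unum describes, and the alarm table and its names are hypothetical. Every multi-step update, and every read, happens while holding one lock, so two writers can never be inside the shared structure at the same time:

```python
import threading


class AlarmTable:
    """Hypothetical shared table of raised alarms, updated by several writers."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._counts = {}  # alarm name -> times raised
        self._total = 0    # invariant: equals the sum of _counts

    def raise_alarm(self, name: str) -> None:
        # Both updates form one read-modify-write unit. Without the lock, two
        # writers could read the same old count and one increment would be lost,
        # or a reader could observe _counts and _total out of step.
        with self._lock:
            self._counts[name] = self._counts.get(name, 0) + 1
            self._total += 1

    def snapshot(self):
        # Readers take the same lock, so they never see a half-finished update.
        with self._lock:
            return dict(self._counts), self._total


def writer(table: AlarmTable, events: int) -> None:
    for i in range(events):
        table.raise_alarm(f"line-{i % 10}")


table = AlarmTable()
threads = [threading.Thread(target=writer, args=(table, 10_000)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

counts, total = table.snapshot()
print(total, sum(counts.values()))  # 40000 40000
```

The design choice that matters is making the locked region cover the whole read-modify-write, including the readers. Locking only part of the update would leave a smaller, but still real, window for the race.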

The coming “smarter grid” will greatly expand the number of computational devices, and hence the attack surface. That expansion, combined with the tendency of computing devices to use common software components with common vulnerabilities, may also make it easier for Byzantine butterflies to turn into Byzantine hordes.

Smart Vehicles

Another IoT application domain receiving much attention (e.g., [21]) is the autonomous vehicle. Some of this attention may be due to the universality of driving a car in many Western societies—self-driving cars may fundamentally transform and maybe even subvert our cultural identity! Another possible cause is the potential positive impact, given the number of fatalities due to traffic accidents, the amount of time Americans waste while commuting in cars, the number of driven miles squandered on seeking parking, and the amount of real estate wasted on parking spaces.

But for whatever reason, this is an IoT area where it’s not hard to hear the Cassandras. In their view, surrendering to a computer the basic human right of controlling one’s own vehicle is a recipe for disaster: a computing machine can never duplicate the perception and judgment of a human, so we will have accidents and carnage.

However, this future too has been here before.

The Dawn of Fly-by-Wire

Let’s go back again to the 1980s and think about airplanes.

In the then-current way of doing things, humans in the cockpit used their muscles to move levers and devices that connected via wires and cables to the ailerons and flaps that controlled the flight of the airplane and ensured the passengers safely reached their destination. However, an emerging idea called fly-by-wire threatened this comfortable paradigm. With fly-by-wire, rather than pulling a lever that directly controls the flap, the human pilot would enter some kind of electronic input into a computer, which in turn would actuate some servos that made the flap move.

Placing the computer between the human and the vehicle’s moving parts introduces opportunities for the computer to do more than just relay commands. Instead, the computer can actually filter them and participate in the decisions, using its knowledge of the other devices and of the plane’s current operating parameters.

To traditionalists, this intrusion sounds dangerous: the computer might not let the pilot do what he or she needs to do to rescue the plane. But by another way of thinking, it could improve safety. For example, if the pilot requests a flap to move at a speed that would be too dangerous, the computer can slow down the transition. Waldrop [20] describes this idea, known as “envelope protection”:

Suppose that you are flying along, says Airbus’ Guenzel, and you suddenly find yourself staring at a Cessna that has wandered into your airspace. So you swerve. Now, in a standard airliner, you would probably hold back from maneuvering as hard as you could for fear of tumbling out of control, or worse. “You would have to sneak up on it [2.5 G],” he says, “And when you got there you wouldn’t be able to tell, because very few commercial pilots have ever flown 2.5 G.” But in the A320, he says, you wouldn’t have to hesitate: you could just slam the controller all the way to the side and instantly get out of there as fast as the plane will take you. In short, goes the argument, envelope protection doesn’t constrain the pilot. It liberates the pilot from uncertainty—and thus enhances safety. As [747 pilot Kenneth] Waldrip puts it, “it’s reassuring to know that I can’t pull back so hard that the wings fall off.”
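The text describes two behaviors: clamping a command to a safe range, and slowing a requested transition. Both can be sketched in a few lines. The following values are illustrative assumptions, not Airbus's actual control laws:

- an upper load-factor limit of 2.5 G, taken from Guenzel's example;
- a lower limit of -1.0 G;
- at most 5 degrees of flap travel per control cycle.

The function names are also hypothetical:

```python
def clamp_load_factor(requested_g: float, max_g: float = 2.5, min_g: float = -1.0) -> float:
    """Limit a commanded load factor to a protected envelope (illustrative limits)."""
    return max(min_g, min(requested_g, max_g))


def rate_limit(current: float, target: float, max_step: float) -> float:
    """Move toward target by at most max_step per control cycle."""
    step = max(-max_step, min(target - current, max_step))
    return current + step


# The pilot slams the controller: the request exceeds the envelope.
print(clamp_load_factor(4.0))  # 2.5

# A flap command from 0 to 20 degrees, limited to 5 degrees per cycle.
positions = [0.0]
while positions[-1] != 20.0:
    positions.append(rate_limit(positions[-1], 20.0, 5.0))
print(positions)  # [0.0, 5.0, 10.0, 15.0, 20.0]
```

In both cases the pilot's intent still gets through. The computer only changes how far, or how fast, it is carried out.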

In 2017, when cars whose computers assist the driver are already on the road, one hears the same arguments, on both sides.

Fear of the A320

The insertion of computerized interference (or assistance) between the human pilot and the vehicle came to a head when, in the late 1980s, Airbus (a European consortium) introduced the A320, a commercial passenger jet that pushed the envelope by being entirely fly-by-wire.

This development led to what sounded like extreme claims on both sides of the spectrum. Airbus claimed that the A320 “would be the safest passenger aircraft ever” (see [7]), in part due to the computer preventing the pilots from putting the aircraft into dangerous operational states. Naysayers worried about computer failures and more insidious errors, such as preventing the human pilots from doing what they knew to be the right thing. A professor of computational mathematics at Liverpool University even took steps to try to stop the A320 in the UK on safety grounds.

(Another factor stoking controversy may have been that the European-backed Airbus was challenging a market previously dominated by American-based Boeing.)

The naysayers seemed to be vindicated in June 1988 when an A320 on a demonstration flight crashed at Mulhouse–Habsheim airport in France, killing three passengers. Although the computer risk community speculated that the problem lay somehow with fly-by-wire, the subsequent inquiry cleared the aircraft and blamed the pilots. Indeed, analysis suggests the software itself is well engineered and robust.

What Happened Next

In 1990, another A320 crashed in India and killed 92, engendering more speculation that was again squelched by attributing the crash to pilot error (ironically, reports indicated that the pilot was attempting to land the plane manually). Then in 1992, an A320 crashed in France and killed 87. This crash was also blamed on pilot error (“cowboy-like behaviour”), but pilots objected [22]:

“Each time (one crashes) the crew is blamed whereas the responsibility is really shared in the hiatus between man and machine,” said Romain Kroes, secretary-general of the SPAC civil aviation pilots’ union.

In June 2009, an A330 (a later fly-by-wire jet from Airbus) crashed in the Atlantic Ocean, killing all 228 people aboard. Again, speculation of bad computer behavior was rife (e.g., [6]).

The final crash report told a more subtle story, though: the jet’s pitot tubes (which measure airspeed) had apparently failed due to ice crystals; confused, the fly-by-wire computer handed control back to the pilots, who then engaged in behavior that brought the plane down. (Episode 642 of NPR’s Planet Money even went so far as to speculate that if the pilots had done nothing at all, the computer would have taken over again and the crash would not have happened.) There is an echo here of the 2003 blackout—it’s hard both for computers and for grid operators to take correct action when they receive input that does not match reality.
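The sketch below shows, hypothetically, the kind of consistency check involved. It assumes three redundant airspeed readings that are voted by median and rejected when any of them strays from the median by more than a tolerance. The names, the tolerance, and the readings are invented for illustration; this is not the A330's actual logic:

```python
from statistics import median
from typing import List, Optional


def voted_airspeed(readings: List[float], tolerance: float) -> Optional[float]:
    """Median-vote redundant airspeed readings (hypothetical logic).

    Returns None, meaning "stop trusting automation and hand control back",
    when any reading strays more than `tolerance` from the median.
    """
    middle = median(readings)
    if any(abs(r - middle) > tolerance for r in readings):
        return None
    return middle


TOLERANCE = 10.0  # knots; an invented value

print(voted_airspeed([270.0, 272.0, 271.0], TOLERANCE))  # 271.0: sensors agree
print(voted_airspeed([270.0, 160.0, 150.0], TOLERANCE))  # None: disagreement, hand back
print(voted_airspeed([160.0, 155.0, 150.0], TOLERANCE))  # 155.0: all wrong alike, accepted
```

The last case is the unsettling one. When every sensor is wrong in the same way, the check passes and the computer confidently acts on input that does not match reality.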

Past and Future

What happened when the smart vehicle future was here before?

As with self-driving cars now, Cassandras predicted that inserting computers between pilots and vehicles would lead to disaster. The predicted widespread disasters did not happen; indeed, the software engineering in the Airbus jets is held up as an example of what to do.

However, the incidents that did occur show that, even without software failure, layering IT into a human-physical system changes how humans work with these systems. The 2009 A330 crash foreshadowed what many identify as the real fundamental problem with autonomously controlled vehicles: not the machine’s autonomy but the transfer between machine and humans.

The challenge of interface design (how human operators interact with equipment, ranging from things as simple as doors and showers to things as complicated as operating system security policies and airplanes) has received much attention over the years. Norman’s The Design of Everyday Things [14] is a great window into this world; Chapter 18 in my security textbook [17] applies this framework to computer security issues. As long-time readers of Peter Neumann’s Risks Digest know, bad interface design has often been considered a factor in accidents in old-fashioned nonautonomous vehicles. For example, the death of John Denver while piloting a small aircraft has been attributed to the bizarre placement and orientation of the control that selected which of the plane’s two fuel tanks to use [18]:

The builder of the aircraft, however, elected to place the valve back behind the pilot’s left shoulder…. The only way to switch tanks was to let go of the controls, twist your head to the left to look behind you, reach over your left shoulder with your right hand, find the valve, and turn it…. It was difficult to do this without bracing yourself with your right foot—by pressing the right rudder pedal all the way to the floor….

He also rotated it in such a way that turning the valve to the right turned on the left fuel tank. This ensured that a pilot unfamiliar with the aircraft, upon hearing the engine begin missing and spotting in his mirror that the left fuel tank was empty, would attempt to rotate the fuel valve to the right, away from the full tank, guaranteeing his destruction.

Making vehicles smart brings a new dimension to this human/machine boundary—not just how to do the controlling, but who is doing the controlling. Chapter 10 will revisit this topic.

Today

As Chapter 1 mentioned, summer 2016 brought news of perhaps the first fatality due to a self-driving car: the May 7 death of Joshua Brown in his Tesla in Williston, Florida. Reports sketch a complicated situation—apparently, Brown was speeding and watching a DVD [9]:

[The truck driver] said the Harry Potter movie “was still playing when he died and snapped a telephone pole a quarter mile down the road”.

What appeared to be driver carelessness was exacerbated by the Tesla’s computer finding itself in a confusing physical scenario [4]:

Tesla says, “the high, white side of the box truck … combined with a radar signature that would have looked very similar to an overhead sign, caused automatic braking not to fire.” This is in line with a tweet from Tesla CEO Elon Musk yesterday, who suggested that the system believed the truck was an overhead sign that the car could pass beneath without incident.

Subsequent reports suggest Tesla has worked on algorithm updates to prevent this kind of scenario from recurring.

Indeed, I am tempted to put on my professor hat and observe that the real world is often messier than simple models. It would be easier to blame either the self-driving car (watch out, a dangerous future!) or the foolish human (the future is the best of all possible worlds!), but, according to initial reports, both bore some responsibility.

Some initial comments from Tesla also reflect the messy nature of reality [9]:

Elon Musk, the CEO of Tesla, tweeted his condolences regarding the “tragic loss”, but the company’s statement deflected blame for the crash. His 537-word statement noted that this was Tesla’s first known autopilot death in roughly 130m miles driven by customers.

“Among all vehicles in the US, there is a fatality every 94 million miles,” the statement said.
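Tesla's comparison can be restated as rates per 100 million miles, using only the two figures quoted above. The helper name is hypothetical:

```python
def deaths_per_100m_miles(deaths: int, miles: float) -> float:
    """Convert a count over some mileage into deaths per 100 million miles."""
    return deaths / miles * 100_000_000


autopilot = deaths_per_100m_miles(1, 130_000_000)  # "roughly 130m miles"
all_us = deaths_per_100m_miles(1, 94_000_000)      # "a fatality every 94 million miles"
print(f"{autopilot:.2f} vs {all_us:.2f}")  # 0.77 vs 1.06
```

The Autopilot figure rests on a single event. A second death over the same mileage would double it to about 1.54, above the national rate.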

Self-driving cars may reduce the total number of traffic fatalities, but they may also change who gets killed—which is good overall, unless one happens to be one of the ones who gets killed. As Joshua Brown might have rephrased John Donne, “Any man’s death diminishes me—especially mine.”

Not Repeating Past Mistakes

What do these past experiences tell us about the IoT?

Sloppy software engineering enhances the chance of software failures, and integration with the physical environment means these failures have physical consequences. However, even well-engineered software can still hide bugs. Even without failures, the cyber-physical connection adds a new surface to the system, and the solution to problems in cyber-physical control might sometimes be to add more cyber.

Have these lessons been taken to heart? In 2014, Toyota agreed to pay a $1.2 billion fine for misleading consumers about sudden-acceleration safety issues in its cars [19]. Technical analysis of this phenomenon identified numerous sloppy coding practices: race conditions, failure to detect bad combinations, memory corruption, and the like [13]. With Toyota vehicles, and all the other smart IoT devices being rushed to market, might we have more Therac-25s lurking?

Chapter 4 will consider software engineering factors that lead to such problems, Chapter 7 will consider the economic factors, and Chapter 8 will consider public policy factors.

Works Cited

- G. Anderson and others, “Causes of the 2003 major grid blackouts in North America and Europe, and recommended means to improve system dynamic performance,” IEEE Transactions on Power Systems, October 31, 2005.
- W. Bogdanich, “At V.A. hospital, a rogue cancer unit,” The New York Times, June 20, 2009.
- W. Bogdanich and K. Rebelo, “A pinpoint beam strays invisibly, harming instead of healing,” The New York Times, December 28, 2010.
- J. Golson, “Tesla and Mobileye disagree on lack of emergency braking in deadly Autopilot crash,” The Verge, July 1, 2016.
- K. Grens, “Spike in deaths blamed on 2003 New York blackout,” Reuters Health News, January 27, 2012.
- J. T. Iverson, “Could a computer glitch have brought down Air France 447?,” Time, June 5, 2009.
- N. Leveson, “Airbus fly-by-wire controversy,” The Risks Digest, February 23, 1988.
- N. Leveson and C. Turner, “An investigation of the Therac-25 accidents,” IEEE Computer, July 1993.
- S. Levin and N. Woolf, “Tesla driver killed while using Autopilot was watching Harry Potter, witness says,” The Guardian, July 1, 2016.
- B. Liscouski and others, “Final Report on the August 14, 2003 Blackout in the United States and Canada,” U.S.-Canada Power System Outage Task Force, April 2004.
- M. McCullough and J. Goldstein, “Unplugged computer cited in Phila. VA medical errors,” Philadelphia Inquirer, July 19, 2009.
- P. Mundkur, “Critical embedded software bugs responsible in Toyota unintended acceleration case,” The Risks Digest, October 26, 2013.
- D. Norman, The Design of Everyday Things. Basic Books, 2002.
- K. Poulsen, “Tracking the blackout bug,” SecurityFocus, April 7, 2004.
- R. Smith, “U.S. risks national blackout from small-scale attack,” The Wall Street Journal, March 12, 2014.
- S. Smith and J. Marchesini, The Craft of System Security. Addison-Wesley, 2008.
- B. Tognazzini, “When interfaces kill: John Denver,” Ask Tog, June 1999.
- E. Tucker and T. Krisher, “Toyota to pay $1.2 billion fine for misleading consumers on sudden acceleration,” The Dallas Morning News, March 19, 2014.
- M. Waldrop, “Flying the electric skies,” Science, June 30, 1989.
- M. Wrong, “Latest crash heightens controversy over Airbus A320,” Reuters, January 21, 1992.

1 Chapter 10 will revisit the idea of looking at IT security and usability troubles in terms of semantic mismatches.

2 “Complex” in the sense of numbers of the form $a + bi$, where $i = \sqrt{-1}$.