Chapter 3. Continuous Integration

Continuous Integration is central to Continuous Delivery. Many organizations assume that they are “doing CI” if they run a CI Server (see below), but good CI is a well-defined set of approaches and good practices, not a tool. We integrate continuously in order to find code conflicts as soon as possible. That is, by doing Continuous Integration, we explicitly expect to hit small snags with merges or component interactions on a regular basis. However, because we are integrating continuously (many times every day), the size and complexity of these conflicts or incompatibilities are small, and each problem is usually easy to correct. We use a CI server to coordinate steps for a build process by triggering other tools or scripts that undertake the actual compilation and linking activities. By separating the build tasks into scripts, we are able to reuse the same build steps on the CI server as on our developer workstations, giving us greater consistency for our builds.

In this chapter, we first look at some commonly used CI servers for Windows and .NET and their strengths and weaknesses. We then cover some options for driving .NET builds both from the command line and from CI servers.

CI Servers for Windows and .NET

Although CI is a practice or mindset, we can use a CI server to help us do some heavy lifting. Several CI servers have dedicated Windows and .NET support, and each has different strengths. Whichever CI server you choose, ensure that you do not run builds on the CI server itself, but use build agents (“remote agents” or “build slaves”; see Figure 3-1). Build agents can scale and be configured much more easily than the CI server; leave the CI server to coordinate builds done remotely on the agents.

Note

Remote build agents require a physical or virtual machine, which can be expensive. A possible route to smaller and cheaper build agents is Nano Server, a lightweight installation option for Windows Server announced in April 2015.

Figure 3-1. Use remote build agents for CI

AppVeyor

AppVeyor is a cloud-hosted CI service dedicated to building and testing code on the Windows/.NET platform (see Figure 3-2). The service has built-in support for version control repositories in online services such as GitHub, Bitbucket, and Visual Studio Online, as well as generic version control systems such as Git, Mercurial, and Subversion. Once you have authenticated with your chosen version control service, AppVeyor automatically displays all the repositories available.

The focus on .NET applications allows AppVeyor to make some useful assumptions about what to build and run within your source code: solution files (.sln) and project files (.csproj) are auto-detected, and there is first-class support for NuGet for build dependency management.

Figure 3-2. A successful build in AppVeyor

If all or most of your software is based on .NET and you use cloud-based services for version control (GitHub, Bitbucket, etc.) and Azure for hosting, then AppVeyor could work well for you.

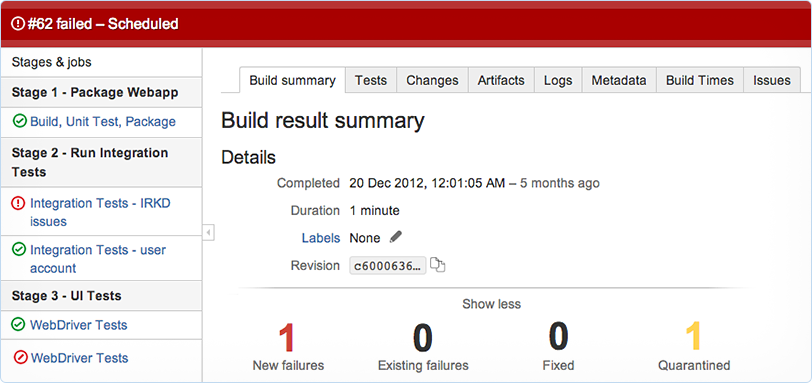

Bamboo

Atlassian Bamboo (Figure 3-3) is a cross-platform CI and release management tool with first-class support for Windows and .NET. Short build times are essential for rapid feedback after a commit to version control, and Bamboo supports remote build agents for speeding up complex builds through parallelization.

Figure 3-3. Bamboo builds

Along with drag-and-drop organization of build plans, Bamboo has strong integration with Atlassian JIRA to show the status of builds against JIRA tickets, a useful feature for teams using JIRA for story tracking.

Bamboo has strong support for working with multiple branches, both short-lived and longer-term, so it may be especially suitable if you need to gain control over heavily branched version control repositories as you move toward trunk-based development.

BuildMaster

BuildMaster from Inedo can provide CI services, along with many other aspects of software deployment automation (Figure 3-4). BuildMaster is written in .NET and has first-class support for both .NET and non-.NET software running on Windows. Its key differentiators are ease of use, team visibility of build and deployment status, and incremental adoption of Continuous Delivery.

Figure 3-4. Deployments in BuildMaster

BuildMaster’s focus on incremental progress toward Continuous Delivery is particularly useful because moving an existing codebase to Continuous Delivery in one go is unlikely to succeed. In our experience, organizations should start Continuous Delivery with just a single application or service, adding more applications as teething problems are solved, rather than attempting a “big bang” approach.

GoCD

GoCD is arguably the most advanced tool for Continuous Delivery currently available. Almost alone among Continuous Delivery tools, it has first-class support for fully configurable deployment pipelines, and we look in more depth at this capability later in the book (see Chapter 4).

GoCD can also act as a CI server, and has a developing plug-in ecosystem with support for .NET technologies like NuGet. The UI is particularly clean and well designed. Nonlinear build schemes are easy to set up and maintain in GoCD, as seen in Figure 3-5.

Figure 3-5. Nonlinear build flows in GoCD

GoCD has strong support for parallelization of builds using build agents. This can lead to a huge reduction in build times if a build has multiple independent components (say, several .sln solution files, each generating separate class libraries or services).
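To make the effect of parallelization concrete, here is a minimal Python sketch. It does not use GoCD at all: the solution names and durations are hypothetical, and each "build" is simulated with a short sleep, so it only illustrates why independent builds finish sooner when spread across several agents.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical independent solutions with simulated build durations (seconds).
SOLUTIONS = {"Orders.sln": 0.3, "Billing.sln": 0.3, "Catalogue.sln": 0.3}


def build(solution, duration):
    """Stand-in for a real build: sleep instead of invoking a build tool."""
    time.sleep(duration)
    return f"{solution}: ok"


def build_all(solutions, agents):
    """Build every solution using `agents` workers; return (results, seconds)."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=agents) as pool:
        results = list(pool.map(build, solutions.keys(), solutions.values()))
    return results, time.perf_counter() - started


if __name__ == "__main__":
    _, serial = build_all(SOLUTIONS, agents=1)
    _, parallel = build_all(SOLUTIONS, agents=3)
    print(f"one agent: {serial:.2f}s, three agents: {parallel:.2f}s")
```

With one agent the total time is roughly the sum of the individual builds; with one agent per solution it approaches the duration of the slowest build. The gain disappears if the solutions depend on each other's output.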

Parallel builds, a clean UI, and support for nonlinear build flows make GoCD an effective choice for CI for more complex situations.

Jenkins

Jenkins is a well-established CI server, likely the most popular one for Linux/BSD systems. One of the most compelling features of Jenkins is the plug-in system and the many hundreds of plug-ins that are available for build and deployment automation.

A very useful feature of Jenkins is the “weather report” (Figure 3-6), which indicates the trend of builds for a Jenkins job: sunshine indicates that all is well, whereas rain indicates that the build job is regularly failing.
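The idea behind a trend indicator of this kind can be sketched in a few lines of Python. The window size and thresholds below are illustrative assumptions chosen for this example, not the values Jenkins itself uses.

```python
from typing import Sequence


def weather(results: Sequence[bool], window: int = 5) -> str:
    """Summarize recent build results (True = passed) as a weather icon name.

    The window of 5 builds and the 80%/40% thresholds are assumptions
    made for illustration only.
    """
    recent = list(results)[-window:]
    if not recent:
        return "unknown"
    pass_rate = sum(recent) / len(recent)
    if pass_rate >= 0.8:
        return "sunny"
    if pass_rate >= 0.4:
        return "cloudy"
    return "rain"
```

Because only the most recent builds are considered, a job that failed for a long time but has passed consistently of late reports sunshine again, which is what makes the indicator a trend rather than a lifetime average.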

Figure 3-6. Jenkins build jobs indicate long-term health

A downside of using Jenkins as a CI server for .NET builds is that the support for .NET ecosystem technologies such as NuGet or Azure is largely limited to the use of hand-crafted command-line scripts or early-stage plug-ins. Your mileage may vary!

TeamCity

JetBrains provides the commercial product TeamCity, likely the most popular CI server in use with .NET projects on Windows. Compared to other CI tools, the highly polished UI is one of its best features.

TeamCity uses a hierarchical organizational structure—projects contain subprojects, which in turn contain multiple builds. Each build consists of an optional version control source, trigger rules, dependencies, artifact rules, and multiple build steps.

A build can run a single build step, which triggers a script, or multiple build steps can be specified to run in sequence to compose the required steps. TeamCity includes preconfigured build steps for many common actions, such as compiling a Visual Studio solution and generating NuGet packages.
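The hierarchy described above can be pictured as a small data model. The following Python sketch is purely conceptual: the class and field names are our own, chosen to mirror the prose, and do not correspond to TeamCity's configuration format or API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Build:
    """One build: steps run in sequence, plus optional supporting settings."""
    name: str
    steps: List[str]
    vcs_source: Optional[str] = None  # the version control source is optional
    triggers: List[str] = field(default_factory=list)
    dependencies: List[str] = field(default_factory=list)
    artifact_rules: List[str] = field(default_factory=list)


@dataclass
class Project:
    """A project holds builds and, recursively, subprojects."""
    name: str
    builds: List[Build] = field(default_factory=list)
    subprojects: List["Project"] = field(default_factory=list)

    def all_builds(self) -> List[Build]:
        """Collect the builds of this project and of every subproject."""
        found = list(self.builds)
        for sub in self.subprojects:
            found.extend(sub.all_builds())
        return found
```

A hypothetical "Shop" project with "Orders" and "Billing" subprojects would then expose all of its builds through a single `all_builds()` call, which is roughly how a hierarchical UI can roll build status up to the parent project.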

A running build displays a live log of its progress, and a full build history is kept for a configurable amount of time.

Note

TeamCity includes support for triggering builds from branches and from pull requests as they would appear once merged into the HEAD of trunk. This feature allows a pull request to be tested before it is finally merged.

TeamCity also exposes a REST API that allows for scripting, and a library of over 100 plug-ins is available.

TFS Build / VSO

Team Foundation Server Build (often known as TFS) is Microsoft’s CI and deployment solution and has undergone several iterations, the most recent being TFS 2015 (self-hosted) or Visual Studio Online (VSO). The versions of TFS prior to TFS 2015 are “notoriously unfriendly to use” [Hammer] and have very limited support for non-developers using version control and CI because in practice they require the use of Visual Studio, a tool rarely installed on the workstations of DBAs or SysAdmins. The pre-2015 versions of TFS Build also used an awkward XML-based (XAML) build definition scheme, which was difficult for many to work with.

However, with TFS Build 2015 and Visual Studio Online, Microsoft has made a significant improvement in many areas, with many parts rewritten from the ground up. Here is the advice from the Microsoft Visual Studio team on using TFS 2015/VSO for CI:

If you are new to Team Foundation Server (TFS) and Visual Studio Online, you should use this new system [TFS 2015]. Most customers with experience using TFS and XAML builds will also get better outcomes by using the new system.

The new builds are web- and script-based, and highly customizable. They leave behind many of the problems and limitations of the XAML builds.1

Visual Studio team

TFS Build 2015 supports a wide range of build targets and technologies, including many normally associated with the Linux/Mac platform, reflecting the heterogeneous nature of many technology stacks these days. The CI features have been revamped, with the live build status view (shown in Figure 3-7) being particularly good.

Figure 3-7. Live build status view in TFS 2015

Crucially for Continuous Delivery, TFS Build 2015 uses standard build scripts to run CI builds, meaning that developers can run the same builds as the CI server, reducing the chances of running into the problem of “it builds fine on my machine but not on the CI server”. TFS Build 2015/VSO appears to have very capable CI features.

Build Automation

Build Automation in .NET usually includes four distinct steps:

- Build: Compiling the source code

- Test: Executing unit, integration, and acceptance tests

- Package: Collating the compiled code and artifacts

- Deploy: Getting the package onto the server and installed

If any of the steps fails, subsequent steps should not be executed; instead, error logs should be available to the team so that investigations can begin.
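The fail-fast behavior described above can be sketched as a small driver script. This is a minimal Python illustration under stated assumptions: the step commands are harmless stand-ins (the test step is made to fail on purpose), whereas a real script would call the compiler, test runner, packaging tool, and deployment tool.

```python
import subprocess
import sys

# Hypothetical steps: (name, command). The commands are stand-ins so that the
# sketch runs anywhere; the "test" step deliberately exits with an error.
STEPS = [
    ("build", [sys.executable, "-c", "print('compiling')"]),
    ("test", [sys.executable, "-c",
              "import sys; print('1 test failed'); sys.exit(1)"]),
    ("package", [sys.executable, "-c", "print('packaging')"]),
    ("deploy", [sys.executable, "-c", "print('deploying')"]),
]


def run_pipeline(steps):
    """Run steps in order and stop at the first failure.

    Returns (completed step names, failed step name or None, combined log).
    """
    completed = []
    log_lines = []
    for name, command in steps:
        result = subprocess.run(command, capture_output=True, text=True)
        log_lines.append(f"[{name}] exit code {result.returncode}")
        for stream in (result.stdout, result.stderr):
            if stream.strip():
                log_lines.append(stream.strip())
        if result.returncode != 0:
            # Later steps are skipped; the log explains what went wrong.
            return completed, name, "\n".join(log_lines)
        completed.append(name)
    return completed, None, "\n".join(log_lines)


if __name__ == "__main__":
    done, failed, log = run_pipeline(STEPS)
    print(log)
    print("completed:", done, "failed:", failed)
```

Here only the build step completes; the package and deploy steps never run, and the captured log contains the output of the failing test step for the team to investigate.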

Build Automation Tools

The CI servers described earlier in this chapter coordinate the steps of a build process by triggering other tools or scripts. For the step of building and compiling source files, there are various options in the .NET space. Descriptions of a select few follow.

MSBuild

MSBuild is the default build toolset for .NET, and uses an XML schema describing the files and steps needed for the compilation. It is the process with which Visual Studio itself compiles the solutions, and MSBuild is excellent for this task. Visual Studio is dependent on MSBuild but MSBuild is not dependent on Visual Studio, so the build tools can be installed as a distinct package on build agents, thereby avoiding extra licensing costs for Visual Studio.

The solution (.sln) and project (.csproj) files make up almost the entirety of the scripts for MSBuild. These are generally auto-generated and administered through Visual Studio. MSBuild also provides hooks for packaging, testing, and deployment.

Tip

Builds with MSBuild can be sped up significantly using the /maxcpucount (or /m) setting. If you have four available CPU cores, then call MSBuild like this: msbuild.exe MySolution.sln /maxcpucount:4, and MSBuild will automatically use up to four separate build processes to build the solution.
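Rather than hard-coding the core count, a wrapper script can derive it from the machine it runs on. The following Python sketch only assembles the command line; the function name is our own, and actually running the command assumes a build agent with msbuild.exe on the PATH.

```python
import os
from typing import List, Optional


def msbuild_command(solution: str, max_cpu_count: Optional[int] = None) -> List[str]:
    """Build the MSBuild argument list for a solution.

    If no count is given, fall back to the number of CPUs reported by the
    operating system (or 1 if that cannot be determined).
    """
    if max_cpu_count is None:
        max_cpu_count = os.cpu_count() or 1
    if max_cpu_count < 1:
        raise ValueError("max_cpu_count must be at least 1")
    return ["msbuild.exe", solution, f"/maxcpucount:{max_cpu_count}"]
```

On a build agent, the returned list could be handed to `subprocess.run(..., check=True)` so that a failed compilation stops the script.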

PSake

PSake is a PowerShell-based .NET build tool that takes an imperative “do this” approach that is contrary to the declarative “this is what I want” approach of NAnt. Several emerging .NET Continuous Delivery tools use PSake as their build tool.

Ruby/Rake

Rake saw great popularity as a build automation tool of choice from late 2009. At the time, it was a great improvement over MSBuild and NAnt, the previously popular tools. Because NAnt is XML-based, it is extremely difficult to write NAnt builds as scripts (e.g., with control statements such as loops and ifs). Rake made this possible, as it is a task-based DSL written in Ruby.

The downside of Rake is that Ruby is not at home in Windows. Ruby must be installed on the machine and so a version must be chosen. There’s no easy way to manage multiple Ruby versions in Windows so the scripts tend to be frozen in time and locked to the version that has been installed.

NAnt

NAnt is an XML-driven build tool that used to be popular but is unwieldy to work with. If NAnt is still in use, replace it with another tool.

Batch Script

If the CI server you are using does not support PowerShell and other tools such as Ruby cannot be installed, then batch scripting can be used for very small tasks. It is not recommended and can quickly result in unmanageable and unreadable files.

Integrating CI with Version Control and Ticket Tracking

Most CI servers provide hooks that allow for integration with version control and ticketing systems. With these features we can enable the CI server to trigger a build on the commit of a changeset, to link a particular changeset with a ticket, and to list artifacts generated from builds from within the ticket.

This allows the ticket tracker to store a direct link to code changes, which can be used to answer questions about the actual changes made when working on a ticket.
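A common convention behind this kind of linking is to mention the ticket key in the commit message. The following Python sketch shows the idea; the key format (uppercase project code, hyphen, number, as in SHOP-42) and the sample changesets are assumptions for illustration, and real CI servers and trackers have their own matching rules.

```python
import re
from collections import defaultdict

# Assumed ticket key format: uppercase project code, hyphen, number.
TICKET_PATTERN = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def tickets_in_message(message):
    """Return the distinct ticket keys mentioned in a commit message."""
    return sorted(set(TICKET_PATTERN.findall(message)))


def link_changesets(changesets):
    """Map each ticket key to the changeset IDs whose messages mention it.

    `changesets` is an iterable of (changeset_id, commit_message) pairs.
    """
    links = defaultdict(list)
    for changeset_id, message in changesets:
        for ticket in tickets_in_message(message):
            links[ticket].append(changeset_id)
    return dict(links)
```

Commits that mention no ticket simply produce no link, which is one reason teams often enforce the convention with a commit hook.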

Patterns for CI Across Multiple Teams

Effective CI should naturally drive us to divide the coding required for User Stories into developer- or team-sized chunks so that there is less need to branch code. Contract tests between components (with consumer-driven contracts) help to detect integration problems early, especially where work from more than one team needs to be integrated.
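As a rough illustration of a consumer-driven contract, the consuming team states which fields it relies on, and the providing team checks its responses against that statement in its own CI build. The Python sketch below is a simplified model: the contract, field names, and checking function are hypothetical, and dedicated contract-testing tools offer far richer matching.

```python
from typing import Any, Dict, List

# Hypothetical contract published by the consumer: required field -> type.
ORDER_CONTRACT = {"order_id": str, "total_pence": int, "currency": str}


def contract_violations(response: Dict[str, Any],
                        contract: Dict[str, type]) -> List[str]:
    """List the ways a provider response breaks the consumer's contract.

    Extra fields in the response are allowed; only missing fields and
    wrong types count as violations.
    """
    problems = []
    for field_name, expected in contract.items():
        if field_name not in response:
            problems.append(f"missing field: {field_name}")
        elif not isinstance(response[field_name], expected):
            actual = type(response[field_name]).__name__
            problems.append(
                f"{field_name}: expected {expected.__name__}, got {actual}")
    return problems
```

Run as part of the provider's build, a non-empty result fails the build before the incompatible change ever reaches the consuming team.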

Clear ownership of code is crucial for effective Continuous Delivery. Generally speaking, avoid the model of “any person can change anything,” as this model works only for highly disciplined engineering-driven organizations. Instead, let each team be responsible for a set of components or services, acting as gatekeepers of quality (see “Organizational Changes”).

Architecture Changes for Better CI

We can make CI faster and more repeatable for .NET code by adjusting the structure of our code in several ways:

- Use smaller, decoupled components

- Use exactly one .sln file per component or service

- Ensure that a solution produces many assemblies/DLLs but only one component or service

- Ensure that each .csproj exists in only one .sln file, not shared between many

- Use NuGet to package internal libraries as described in “Use NuGet to Manage Internal Dependencies”

Note

The new project.json project file format for DNX (the upcoming cross-platform development and execution environment for .NET) looks like a more CI-friendly format compared to the traditional .csproj format. In particular, the Dependencies feature of project.json helps to define exactly which dependencies to use.

Cleanly separated libraries and components that express their dependencies via NuGet packaging tend to produce builds that are easier to debug due to better-defined dependencies compared to code where projects sit in many solutions.
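The guideline that each .csproj should belong to only one .sln file can be checked automatically. The following Python sketch scans the text of solution files for project entries and reports any project referenced by more than one solution. The function names and paths are hypothetical, and the regular expression is a simplification of the .sln format that only looks for entries ending in .csproj.

```python
import ntpath
import re
from collections import defaultdict

# Matches lines such as:
#   Project("{type-guid}") = "Name", "relative\path\Name.csproj", "{guid}"
PROJECT_LINE = re.compile(
    r'^Project\("\{[^}]+\}"\)\s*=\s*"[^"]*",\s*"([^"]+\.csproj)"',
    re.MULTILINE | re.IGNORECASE,
)


def projects_in_solution(sln_text):
    """Return the relative .csproj paths listed in a solution file's text."""
    return PROJECT_LINE.findall(sln_text)


def shared_projects(solutions):
    """Find projects referenced by more than one solution.

    `solutions` maps each .sln path to the text of that file. Project paths
    are resolved relative to the solution's folder and compared without
    regard to case, as Windows paths are. Returns a dict mapping each shared
    project path to the sorted list of solutions that reference it.
    """
    owners = defaultdict(set)
    for sln_path, sln_text in solutions.items():
        sln_dir = ntpath.dirname(sln_path)
        for relative in projects_in_solution(sln_text):
            full = ntpath.normpath(ntpath.join(sln_dir, relative)).lower()
            owners[full].add(sln_path)
    return {path: sorted(slns) for path, slns in owners.items() if len(slns) > 1}
```

A check like this could run as an early build step, failing the build when a shared project appears so that the library can be moved behind a NuGet package instead.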

Summary

Continuous Integration is the first vital step to achieving Continuous Delivery. We have covered the various options for CI servers, version control systems, build automation, and package management in the Windows and .NET world.

When choosing any tools, it is important to find those that facilitate the desired working practices you and your team are after, rather than simply selecting a tool based on its advertised feature set. For effective Continuous Delivery we need to choose Continuous Integration tooling that supports the continuous flow of changes from development to automated build and test of deployment packages.