Chapter 2. Sidecar Proxies

The sidecar proxy is a pattern for integrating the data plane of the service mesh with your application. Alongside every application, a lightweight and performant network proxy is deployed that is responsible for handling functionality such as load balancing, retries, circuit breaking, authorization, observability, and other service mesh features. The primary design goal is to externalize service mesh functionality while keeping network latency to an absolute minimum. Let’s look at a hypothetical problem that can be solved by the sidecar pattern.

Problem

Qui’s team were early adopters of the service mesh pattern. Their platform comprises a central system that provides a service catalog and configuration for the individual applications, and a library embedded into the applications. While this approach has provided the functionality that the team requires, with little performance impact on the applications, it has brought certain challenges:

- Maintaining a common version of the library across multiple services
- Differing implementations of libraries across polyglot environments
- Incorrect implementation of the library by some application teams

The first problem that Qui’s team faces is how to ensure that all the applications in the system are using a recent version of the data plane library. The number of applications in the system is vast, and many of them are not actively developed. If the data plane library needs to be updated to fix a security vulnerability or to manage a change in the control plane API, then every application in the system must have its dependencies updated and must be rebuilt, tested, and deployed. This is starting to cause her real problems. Recently, the entire system experienced an outage because one application was not updated to the latest library version: a deprecated feature was removed from the control plane API, and this stopped the application from communicating with the service mesh.

Another problem that the team faces is the organization’s need to let developers work in multiple languages. The teams creating front-end client code work with NodeJS, and the bulk of the backend services are built in Java. However, the data science team would like to start working with Python, and there is a general desire across the company to be flexible on language choice. This is a problem for Qui’s team, as they must now produce and maintain a separate client library for each language to provide the data plane capabilities.

The final problem has recently caused the biggest impact. The authentication and authorization feature of the client library was implemented incorrectly in the payments service, meaning that the service was effectively open to everything on the network. In addition to taking payments, the payments service can also process refunds. The intention of using a service mesh was to limit access between services, so that if the system is compromised, an attacker cannot reach the endpoints of every service in the application. This misconfiguration placed the organization at risk of large financial loss had an attacker managed to exploit it.

Let’s look at a pattern that solves all of Qui’s problems.

Solution

The sidecar proxy pattern is a common implementation of the service mesh data plane and is designed to move logic related to network operations previously used in application code libraries into a separate binary which acts as a proxy for all downstream and upstream requests.

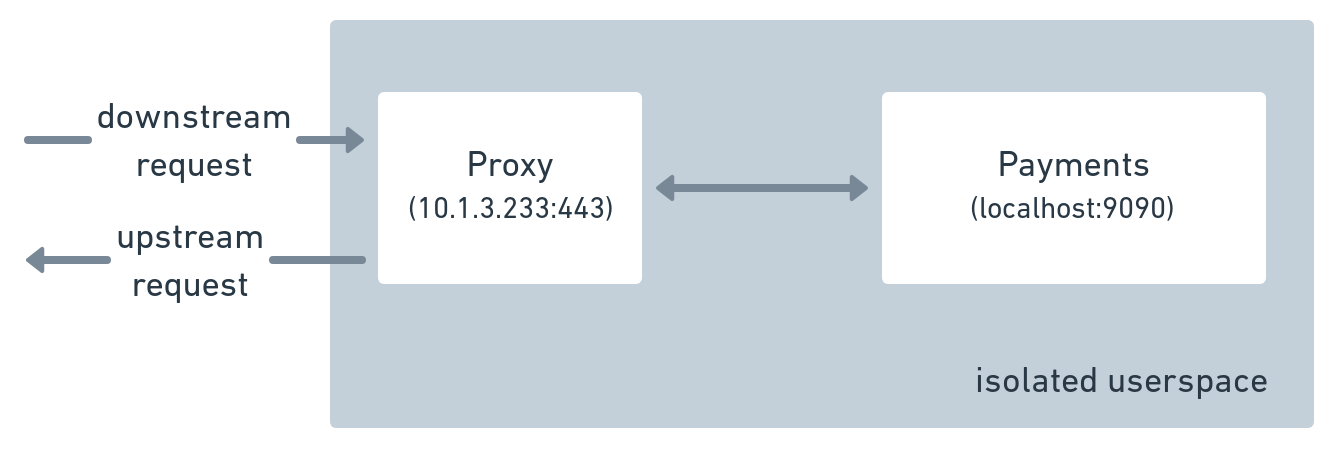

Figure 2-1 shows a simple overview of a service along with its sidecar proxy. The sidecar proxy is responsible for proxying all network traffic in and out of the application.

Figure 2-1. Directionality of service requests: upstream versus downstream.

Downstream / Upstream Traffic

People are often confused by the concept of upstream and downstream traffic--with good reason! Picture a real-life stream of water on a mountain. If you travel with the flow of water, you are going downstream down the mountain. If you travel against the flow, you are going upstream up the mountain.

If you apply the same thinking to network traffic, you’d assume that Service A in Figure 2-1 is the downstream service.

Sadly, this is not the case with network traffic. The terms upstream and downstream were most likely adopted from the HTTP specification, RFC 2616. It states:

Upstream and downstream describe the flow of a message: all messages flow from upstream to downstream.

With HTTP servers (certainly in 1999), the majority of traffic was contained in the response. If the server is the source of the stream, then the bulk of the traffic flows away from the server, downstream toward the client.

The confusion arises when you treat the origin of a request as the source of the stream. This is not the case: in networking terms, the source of the stream is the service that sends the response.

You can still use the stream analogy, just remember that the stream is not carrying the request to the service but carrying the response from a service to you. Anything you make a request to is upstream and anything that receives a response is downstream.
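One way to keep the terminology straight is to label each hop in a chain of calls. The following is a minimal Python sketch of that rule; the function name `label_hops` and the service names are illustrative only and do not come from any service mesh API.

```python
def label_hops(call_chain):
    """Label each hop in a chain of calls.

    call_chain lists services in the order the request travels,
    for example ["client", "API", "Payments"].
    For every hop, the service that receives the request is upstream,
    and the caller (which receives the response) is downstream.
    """
    hops = []
    for caller, callee in zip(call_chain, call_chain[1:]):
        hops.append({"downstream": caller, "upstream": callee})
    return hops


if __name__ == "__main__":
    for hop in label_hops(["client", "API", "Payments"]):
        print(f'{hop["upstream"]} is upstream of {hop["downstream"]}')
```

Note that the same service can be both: in this chain, API is upstream of the client and downstream of Payments.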

Technical Implementation

Implementing the sidecar pattern requires you to deploy an accompanying proxy for each of your applications, which is then used as the data plane for the service mesh. Services and their associated sidecars are always deployed in pairs. The sidecar is exclusive to its paired service: it only routes downstream requests to its allocated service, and it only accepts traffic destined for upstream services from its dedicated service.

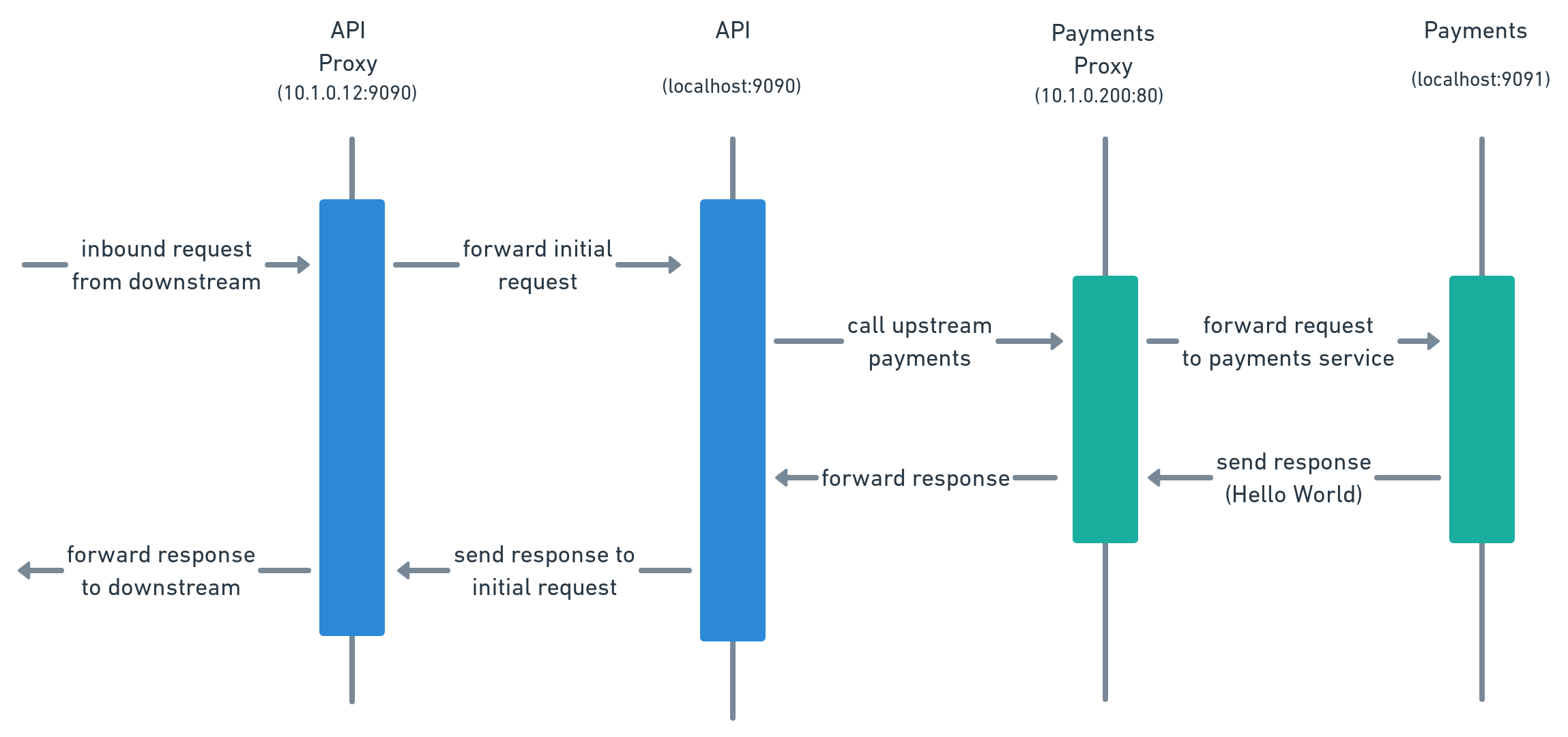

The proxy handles all ingress and egress traffic for the service, which is to say that the service has no network interaction outside its local environment (pod or userspace) without going through the sidecar proxy. The sequence diagram in Figure 2-2 shows the network traffic as a request is handled by the API service. The public entry point for the request is the sidecar proxy, and the sidecar routes the request to the local application.

In order to perform its work, the API must call the Payments service. Again, this request is transmitted through the sidecar proxy. The actual location of the Payments service is unknown to the API because it delegates that responsibility to its sidecar proxy. The API proxy connects to the Payments proxy with the request from the API service, which is then forwarded to the Payments service, and the response flows back through the chain of proxies to the API.

Figure 2-2. A request flow with sidecar proxy present.
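The request flow described above can be sketched as a small in-memory simulation. This is a toy model under stated assumptions, not how any real proxy is implemented: the `Sidecar` class, the `registry` dictionary standing in for the service catalog, and the handler functions are all hypothetical, and no real networking takes place.

```python
class Sidecar:
    """Toy stand-in for a sidecar proxy; no real networking is performed."""

    def __init__(self, service_name, handler, registry, trace):
        self.service_name = service_name
        self.handler = handler      # the local application, modeled as a function
        self.registry = registry    # service name -> Sidecar (stands in for the catalog)
        self.trace = trace          # shared list recording every hop
        registry[service_name] = self

    def inbound(self, request):
        """Accept a request from outside and pass it to the local application."""
        self.trace.append(f"{self.service_name}-proxy -> {self.service_name}")
        return self.handler(request, self.outbound)

    def outbound(self, upstream_name, request):
        """Forward a request from the local application to an upstream proxy."""
        self.trace.append(f"{self.service_name} -> {self.service_name}-proxy")
        self.trace.append(f"{self.service_name}-proxy -> {upstream_name}-proxy")
        return self.registry[upstream_name].inbound(request)


def payments_app(request, call_upstream):
    return {"name": "Payments", "body": "Hello World"}


def api_app(request, call_upstream):
    # The API only names the service it needs; its sidecar resolves the location.
    payment = call_upstream("Payments", request)
    return {"name": "API", "upstream_calls": [payment]}


def run_demo():
    registry, trace = {}, []
    Sidecar("Payments", payments_app, registry, trace)
    api_proxy = Sidecar("API", api_app, registry, trace)
    trace.append("client -> API-proxy")
    response = api_proxy.inbound({"uri": "/"})
    return response, trace


if __name__ == "__main__":
    response, trace = run_demo()
    print("\n".join(trace))
    print(response)
```

The application functions never hold a reference to another service, only to their own sidecar’s `outbound` method, which mirrors the way the API delegates service location to its proxy.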

Typically sidecar proxies run in isolated userspaces as the proxy often implements mTLS-based security for connections. The isolated userspace ensures that no applications can contact the application service directly and bypass any security that has been implemented by the proxy.

Figure 2-3 shows a simplified version of the request sequence shown in Figure 2-2. Even if the API and Payments services are running on the same machine, communication should only be allowed via the proxies.

Figure 2-3. Request flow from one service to another service with sidecar proxies on the same node.

Reference Implementation

Let’s see this in action. If you look in the folder sidecar-proxy in the examples repository, you will see a Meshery configuration file sidecar_http_test.yaml. This example application runs a simple two-tier application consisting of two services: API and Payments. The application is configured for the service mesh and is using sidecar proxies for its data plane.

    deployment:
      sidecar_proxy:
        protocol: http

Let’s deploy the application. Run the following command in your terminal.

mesheryctl perf deploy --file ./sidecar_http_test.yaml

Once the deployment has completed Meshery will output the following message with a local endpoint that can be used to contact the API service.

## Deploying application

Application deployed, you can access the application using the URL:

http://localhost:8200

You can manually test the service by curling the URL http://localhost:8200.

Run the following command in your terminal.

curl http://localhost:8200/

You will see in the response that the API service called the upstream service Payments. The traffic flow for this example application is exactly that shown in Figure 2-2.

    {
      "name": "API",
      "uri": "/",
      "type": "HTTP",
      "ip_addresses": [
        "10.42.0.20"
      ],
      "start_time": "2020-09-27T19:34:14.002749",
      "end_time": "2020-09-27T19:34:16.082502",
      "duration": "2.079753s",
      "body": "Hello World",
      "upstream_calls": [
        {
          "name": "PAYMENTS V1",
          "uri": "http://localhost:9091",
          "type": "HTTP",
          "ip_addresses": [
            "10.42.0.21"
          ],
          "start_time": "2020-09-27T19:34:16.031841",
          "end_time": "2020-09-27T19:34:16.081988",
          "duration": "50.147ms",
          "headers": {
            "Content-Length": "258",
            "Content-Type": "text/plain; charset=utf-8",
            "Date": "Sun, 27 Sep 2020 19:34:16 GMT"
          },
          "body": "Hello World",
          "code": 200
        }
      ],
      "code": 200
    }
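If you want to inspect a response like this programmatically rather than by eye, you can walk the nested `upstream_calls` entries. The sketch below is one possible approach in Python; it embeds a trimmed copy of the response above, and the helper name `summarize_calls` is illustrative only.

```python
import json

# Trimmed copy of the response shown above.
RESPONSE = """
{
  "name": "API",
  "duration": "2.079753s",
  "code": 200,
  "upstream_calls": [
    {"name": "PAYMENTS V1", "uri": "http://localhost:9091",
     "duration": "50.147ms", "code": 200}
  ]
}
"""


def summarize_calls(node, depth=0):
    """Return one (depth, name, code, duration) tuple per service in the call tree."""
    rows = [(depth, node["name"], node["code"], node["duration"])]
    for upstream in node.get("upstream_calls", []):
        rows.extend(summarize_calls(upstream, depth + 1))
    return rows


if __name__ == "__main__":
    for depth, name, code, duration in summarize_calls(json.loads(RESPONSE)):
        print(f'{"  " * depth}{name}: HTTP {code} in {duration}')
```

Because the function recurses into `upstream_calls`, it would also handle a deeper chain of services, should the example application be extended.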

Now that you have run a manual test of the example application, let’s run some performance tests against it. This spike test will load the application with 10 threads that represent concurrent user requests.

    performance_test:
      duration: 3m
      threads: 10
      success_conditions:
        - requests_per_second_p50[name="api"]:
            value: "> 180"
        - cpu_seconds_p50[name="api"]:
            value: "< 2"
        - cpu_seconds_p50[name="api_sidecar"]:
            value: "< 1"
        - memory_megabytes_max[name="payments"]:
            value: "< 100MB"
        - memory_megabytes_max[name="api_sidecar"]:
            value: "< 50MB"
        - memory_megabytes_min[name="payments"]:
            value: "< 100MB"
        - memory_megabytes_min[name="api_sidecar"]:
            value: "< 50MB"
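To make the meaning of the success conditions concrete, here is a minimal Python sketch of how a value such as `"> 180"` or `"< 50MB"` could be compared with a measurement. This is an assumption about the semantics for illustration only; it is not the test runner’s actual implementation, and the `check` function and the sample measurements are hypothetical.

```python
import operator
import re

# Comparison operators that may appear at the start of a condition value.
OPERATORS = {"<=": operator.le, ">=": operator.ge, "<": operator.lt, ">": operator.gt}

# An operator, a number, and an optional "MB" suffix, for example "< 50MB".
CONDITION = re.compile(r"^\s*(<=|>=|<|>)\s*([0-9]+(?:\.[0-9]+)?)\s*(?:MB)?\s*$")


def check(condition, measured):
    """Return True when the measured value satisfies the condition string."""
    match = CONDITION.match(condition)
    if match is None:
        raise ValueError(f"unsupported condition: {condition!r}")
    symbol, threshold = match.groups()
    return OPERATORS[symbol](measured, float(threshold))


if __name__ == "__main__":
    # Hypothetical measurements, keyed by the metric names used in the test file.
    measurements = {
        'requests_per_second_p50[name="api"]': ("> 180", 189.0),
        'memory_megabytes_max[name="api_sidecar"]': ("< 50MB", 30.3),
    }
    for metric, (condition, measured) in measurements.items():
        verdict = "PASS" if check(condition, measured) else "FAIL"
        print(f"{metric} {measured:.2f} {verdict}")
```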

You can run this test using the following command:

mesheryctl perf run --file sidecar_proxy_test.yaml

The test will take 3 minutes to complete. When it finishes, you should see the results output in a tabular format similar to the results below.

    ## Executing performance tests

    Summary:
      Total:        180.0521 secs
      Slowest:      0.093 secs
      Fastest:      0.0518 secs
      Average:      0.0528 secs
      Requests/sec: 189.2397

    Results:
      requests_per_second_p50[name="api"]               189.00  PASS
      cpu_seconds_p50[name="api"]                         1.71  PASS
      cpu_seconds_p50[name="api_sidecar"]                 0.04  PASS
      memory_megabytes_max[name="api"]                   76.40  PASS
      memory_megabytes_max[name="api_sidecar"]           30.30  PASS
      memory_megabytes_max[name="payments"]              69.10  PASS
      memory_megabytes_max[name="payments_sidecar"]      29.60  PASS
      memory_megabytes_min[name="api"]                   23.30  PASS
      memory_megabytes_min[name="api_sidecar"]           26.40  PASS
      memory_megabytes_min[name="payments"]              23.90  PASS
      memory_megabytes_min[name="payments_sidecar"]      26.50  PASS

The performance test measures the CPU and memory consumption of the application and of the sidecar. From this simple test, you can see that there is an overhead when running a sidecar. However, if you compare each sidecar’s maximum memory (under load) with its minimum memory (at rest), you will see that the difference is only about 3 to 4 MB.
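The difference can be checked with a few lines of Python. The figures below are copied from the sidecar rows of the results; treating the minimum as "at rest" and the maximum as "under load" is an assumption made for this comparison, and the function name is illustrative.

```python
# Sidecar memory figures in MB, copied from the results table.
RESULTS = {
    "api_sidecar": {"max": 30.30, "min": 26.40},
    "payments_sidecar": {"max": 29.60, "min": 26.50},
}


def memory_growth(results):
    """Return the growth in MB between minimum and maximum memory for each proxy."""
    return {name: round(m["max"] - m["min"], 2) for name, m in results.items()}


if __name__ == "__main__":
    for name, growth in memory_growth(RESULTS).items():
        print(f"{name}: +{growth} MB under load")
```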

You should not, however, use this data to determine the suitability of a sidecar proxy in your system. This example is not designed to measure resource and performance impact but to show the functionality of a sidecar proxy within a service mesh. You will learn more about the performance and resource consumption caveats of this pattern later in this chapter.

Discussion

Let’s look at some related patterns.

Caveats and Considerations

Even though the sidecar proxy is the most popular and the most capable of the available service mesh deployment models, it still comes with its share of shortcomings. Most notably, it increases resource consumption and can also have an impact on performance.

Resource Consumption

One of the considerations regarding the use of the sidecar pattern is that it will increase the memory and CPU consumption of your infrastructure. Running proxies incurs overhead in several ways:

- Running a separate application for your proxy has a baseline memory footprint.
- Observing L7 requests requires requests to be buffered in memory (typically in a rolling window).
- The service mesh features that the proxy performs have a computational overhead (the more functions you enable, the higher the overhead you might expect).
- Opening connections and proxying data (even transparently) requires CPU and memory.

Ultimately, only you can determine how much overhead is incurred by the sidecar proxy pattern and what it means for your application’s infrastructure. The factors you need to consider include the type of service mesh features (network functions) that you are employing, the efficiency of the proxy used, the amount of workload handled, and the scale of your applications.

Performance Considerations

When measuring the impact that a sidecar proxy has on resources you must also consider the work it is doing which your application or other components in your system would traditionally perform, and also the benefits you are getting from the features a service mesh is providing.

When making these determinations you should always:

- Measure under normal operating and max operating load
- Avoid synthetic tests when determining resources and performance
- Ensure that you are comparing feature parity

Measure under normal operating and max operating load

When determining the resources a proxy consumes on your system, you should always measure under both normal load and maximum operating load. Measuring at maximum load helps with your capacity planning, and measuring at normal operating conditions shows you the true impact of the proxies on your system.

You should not assume that resource consumption at rest will scale linearly. For example, a proxy will always have a baseline memory consumption that does not change as throughput increases. It is also not safe to assume that response time will scale linearly. A number of factors affect throughput, such as the number of connections in the proxy’s connection pool, which may cause requests to queue once the pool is exhausted. The safe approach is to test and measure, and then use this data to make an informed decision.
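To illustrate why response time does not scale linearly, consider a deliberately simplified model of a connection pool. The Python sketch below assumes that every request holds one connection for a fixed time and that load is constant; real proxies are more complex, and the function names and the numbers in the example are hypothetical.

```python
def pool_capacity(pool_size, service_time_s):
    """Maximum requests per second the pool can sustain when each request
    holds one connection for service_time_s seconds."""
    return pool_size / service_time_s


def added_wait(arrival_rate, pool_size, service_time_s, elapsed_s):
    """Approximate queuing delay in seconds for a request that arrives after
    elapsed_s seconds of constant load (simple fluid approximation)."""
    capacity = pool_capacity(pool_size, service_time_s)
    # Below capacity nothing queues; above it the backlog grows every second.
    backlog = max(0.0, (arrival_rate - capacity) * elapsed_s)
    return backlog / capacity


if __name__ == "__main__":
    # Hypothetical pool: 10 connections, 50 ms per request.
    for rate in (100, 180, 220):
        wait = added_wait(rate, pool_size=10, service_time_s=0.05, elapsed_s=10)
        print(f"{rate} req/s -> about {wait:.2f} s of queuing after 10 s")
```

In this model, latency is flat until the arrival rate exceeds the pool’s capacity and then climbs steadily, which is why a measurement taken at low load tells you little about behavior at maximum load.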

Avoid synthetic tests when determining resources and performance

Synthetic tests may not give you a true picture of the impact the proxy will have on your system.

For example, consider a workload containing two services and their associated proxies. When comparing performance between a system that uses a sidecar proxy and one that does not, you may see an increase in resource consumption of 100% and an increase in request execution time of 100%. Although these are legitimate results and the percentage change looks high, this may be because the synthetic workload does not reproduce production-like conditions.

Looking at these results, you may decide to rule out the use of the proxy because the impact is too great. However, suppose the synthetic workload does not reflect production, and in production the services themselves consume 10 times the resources and execution time that they do under the synthetic workload. If the proxy’s overhead stays roughly the same, the increase is then closer to 10% than 100%. Where possible, you should always measure impact based on your production workload. If this is not possible, ensure that your synthetic workload exhibits the behavior of your production workload.
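The arithmetic behind this example can be written out in a few lines of Python. The units are arbitrary and the figures are the illustrative ones from the text, with the simplifying assumption that the proxy’s cost stays fixed while the service’s own cost grows.

```python
def relative_overhead(service_cost, proxy_cost):
    """Proxy overhead as a percentage of what the service itself consumes."""
    return 100.0 * proxy_cost / service_cost


if __name__ == "__main__":
    # Synthetic workload: the service does little work, so the proxy doubles the cost.
    print(f"synthetic:  {relative_overhead(service_cost=1, proxy_cost=1):.0f}%")
    # Production workload: the service does 10x the work; the proxy cost is unchanged.
    print(f"production: {relative_overhead(service_cost=10, proxy_cost=1):.0f}%")
```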

Ensure that you are comparing feature parity

When making decisions on the suitability of the pattern, you should not measure resources and performance alone. If you are adding features to your system, there will be an impact regardless of whether the feature resides in the application code or in the proxy.

Conclusions and Further Reading

The sidecar proxy gives a great balance of security, maintainability, and performance. For these reasons, it is the most popular pattern for providing data plane functionality in the service mesh. The proxy pattern is not, however, ideal in every situation, and we recommend that you read chapters 8 and 9 to learn about the other possible patterns for the service mesh data plane.