In this chapter, we’ll lay the groundwork for the material to come in this book. We’ll start by talking about mobile devices, which are the platforms on which mobile apps run, and then about different types of mobile apps. Next, we’ll look at what mobile users expect from their devices and the apps running on them. This will bring us to the question of how these realities of mobile devices and apps, along with users’ expectations, create challenges for testers, which will introduce a core set of topics for the subsequent chapters. We will also introduce the topics of tester skills for mobile testing and equipment requirements, both of which we’ll return to later in the book. Finally, we’ll briefly look at software development life cycle models and how ongoing changes in the way software is built are influencing mobile app development.

This book is not about software testing in general, but about mobile app testing in particular. So, while this chapter does not delve into the details of how mobile app testing differs from software testing in general, each of the subsequent chapters will explore those differences in detail.

CHAPTER SECTIONS

Chapter 1, Introduction to Mobile Testing, contains the following six sections:

CHAPTER TERMS

The terms to remember for Chapter 1 are as follows:

• hybrid application;

• Internet of Things;

• mobile application testing;

• native mobile application;

• wearables testing.

1 WHAT IS A MOBILE APPLICATION?

The learning objective for Chapter 1, Section 1, is recall of syllabus content only.

First, let’s look at what a mobile device is. There are two types of mobile device: general purpose mobile devices and purpose-built devices, which can be either mass market or proprietary. Purpose-built devices are built for a specific purpose or set of purposes. A smart watch is an example. Another example is the e-reader, though e-readers are evolving from purpose-built toward general purpose. Consider the Kindle: the newer Kindles are much closer to a fully-fledged tablet than the original Kindles were.

Proprietary purpose-built devices include the package tracking and signature devices used by various delivery services around the world. When you receive a package, you sign on the device itself. Once you’ve signed, you can go to the delivery company’s website, which will show that the package was delivered. Behind the scenes, the mobile device communicated with a server, and that information in turn propagated to the company’s website. A pretty complex data flow happens in the process of doing something that appears to be fairly mundane: acknowledging receipt of a package.

Keep in mind that if you are testing a purpose-built mobile device or an application for such a device, everything we’re discussing will be applicable, plus whatever is specific and unique about your purpose-built device and its applications.

General purpose mobile devices are those that can run various apps, downloadable from app stores such as those for Android and Apple devices; can be extended with external peripherals, such as Bluetooth headsets and keyboards; can be used for a wide (and ever-growing) variety of tasks; and have a wide (and ever-expanding) set of sensors that allow them to interact with the physical world in ways that set mobile devices apart from the typical PC. Examples include smartphones, tablets, and netbooks.

In this book, we will focus mostly on general purpose mobile devices and the applications that run on them. We won’t talk much about “dumb” phones, since those generally support only calls and texts, with no ability to install additional apps. So, it’s unlikely that you’re building apps for a dumb phone.

There are two basic types of mobile applications. One type is a native mobile app. The other type is a mobile-optimized website. (It’s a little more complicated than that, but let’s keep it simple for the moment.) Native mobile apps run on your mobile device directly, while mobile-optimized websites run on your mobile device’s browser. Native mobile apps are downloaded from an app store and installed on your device. Mobile-optimized websites—including websites designed using responsive techniques—are simply websites that look and behave nicely on your device’s browser and hardware.

Some organizations have both types of mobile apps. For example, United Airlines and Delta Airlines have apps that you can download and mobile-optimized websites that you can reach at their appropriate URLs, either united.com or delta.com. If your organization goes down this route, it increases the challenge from a testing point of view.

Let’s consider how this has unfolded over time. Suppose you are involved with testing Delta’s online presence. About a decade ago, the website at delta.com was the online presence. Whether you used a PC, a smartphone, or an internet appliance (if you remember what that was), you’d go to delta.com and see the same thing. Of course, on a smartphone, you’d see it on a smaller screen, and it’d be a lot more difficult to read and navigate. Then mobile-optimized websites came along, and companies started to build apps. So now, if you are testing Delta’s online presence, you have to deal with all three options: the normal website, the mobile-optimized website, and the native mobile app. There are a number of things you’d need to consider across those three different platforms. We’ll talk about these topics in this book, so you can recognize and deal with those related, overlapping, but nonetheless different situations.

1.1 Test your knowledge

Let’s try one or more sample exam questions related to the material we’ve just covered. The answers are found in Appendix C.

Question 1 Learning objective: recall of syllabus content only (K1)

Which of the following statements is true?

A. All mobile apps are general purpose.

B. All mobile apps are portable across mobile devices.

C. Browsers are used by all mobile apps.

D. The number of mobile apps available grows by the day.

Question 2 Learning objective: term understanding (K1)

What is a native mobile application?

A. A mobile application that requires communication with the web server but also utilizes plug-ins to access device functionality.

B. A mobile application that is designed for use by a variety of devices, with the majority of the code residing on the web server.

C. A mobile application that is designed for a specific device family and is coded to access specific functionality of the device.

D. The actual physical device that is running a mobile application.

2 EXPECTATIONS FROM MOBILE USERS

The learning objective for Chapter 1, Section 2, is as follows:

MOB-1.2.1 (K2) Explain the expectations for a mobile application user and how this affects test prioritization.

On a typical morning or afternoon, when I’m working from my home office, you can find me in a gym, working out and listening to a podcast on my mobile phone. I might be checking social media updates and the news while I’m doing this. I’m an aging gym rat, so my workouts don’t require a lot of focus; they’re mostly about maintaining a certain level of muscle tone and general fitness.

Often, I’m surrounded by younger folks who are still on the upward path from a health and fitness perspective, putting in serious gym time, dripping sweat on treadmills or lifting a couple hundred pounds of iron. But, when I look up from my phone, guess what? Most of the people around me are in the same position, head bent forward, looking intently at a small screen, Bluetooth headphones in their ears. Imagine how completely bizarre this scene would appear to a gym rat from the 1970s.1

It’s not just at gyms. It’s everywhere. Worldwide, there is a new public health risk to pedestrians: being struck, and in some cases killed, by cars because they (and sometimes the drivers) were so engrossed in the little world of their little screens that they failed to avoid an obvious hazard.

So, public health and gym culture aside, what does all this mean for you as a tester?

Well, you should assume—if you’re lucky—that your users will interact with your app daily or hourly or maybe even continuously! But, before they do, you need to test it. Is it completely obvious how your app works? Does it work fast? Does it always work?

The further away your app’s behavior is from those expectations—reasonable or otherwise—the bigger the problem. There are millions of mobile apps out there, often with hundreds in each category. And in each category, for any one thing you’re trying to do, you’re likely to be able to find different options.

So, suppose a user downloads your mobile app, and, within seconds, the user’s not happy. Your app’s not very reliable. Or it’s too hard to figure out. Or it’s really slow. Or it’s really difficult to install or configure for initial use. What does the user do? That’s right: uninstall your app, download your competitor’s app, and, shazam, your erstwhile user is now a former user. Oh, yeah, and a dissatisfied one, too. Here comes the one-star review on the app store.

Now, some of you reading might have a captive audience, a cadre of users who can’t bail on you. For example, your company creates a mobile app that other employees use to do some specific task. In this case, they can’t just abandon it. But if these users are dissatisfied, two bad things happen. First, the company bears the cost of the inefficiency, the lost time, and the mistakes. Second, employee satisfaction suffers, because people compare your pathetic in-house app to all the other cool apps they have on their phones. Eventually, these bad things come home to roost with the development team that created the hated app and inflicted it on the other employees.

I have a client that operates a chain of home improvement stores. They have both a brick-and-mortar presence and an online presence. Doing business purely online doesn’t make sense, as sometimes you need a rake or a shovel or a sack of concrete right now.

Now, if you’ve been in a large home improvement store, you probably know that it’s not always obvious where to find particular things. In addition, sometimes items sell out.

So, my client issued iPhones to all its associates, and installed a custom-built app on them. If a customer approached an associate and asked, “Where are your shovels?” the associate could say, “Shovels are in this particular aisle, this particular location.”

In fact, the associate could even add, “By the way, we’re sold out of square-point shovels, but we happen to have some available at our other store at such-and-such location.” That was because the app was able to communicate with the back-end inventory systems. The app knew that the current store didn’t have something in stock, and automatically found the nearest store that had it. Further, if the customer didn’t want to drive over and get the square-point shovel, the associate could use the app to request that the missing shovel be sent to their store for pickup later.

That’s some fairly sophisticated capability. If it’s slow, if it’s hard to figure out, if it’s unreliable, then the associate will be frustrated while they’re trying to solve this dilemma of the missing square-point. If it’s bad enough, the customer might just walk away, muttering something like, “Nice whiz-bang technology, too bad it doesn’t work.” Now you’re back in former customer territory again.

The scope of the mobile app challenge is only going to grow. This is a very, very rapidly evolving market. At the time I wrote this book, there were around six million mobile apps across the Android, Apple, Windows, Amazon, and Blackberry platforms. That’s a huge number, and a lot of competition. Furthermore, the number seems to be equal to, or perhaps even greater than, the number of applications available for Windows, Mac, and Linux PCs.

This embarrassment of riches feeds users’ unreasonably high expectations of quality and their unreasonably high expectations of how fast they’ll get new features. People want all the new bells and whistles, all the new functionality. They want apps to be completely reliable. They want apps to be very fast. They want apps to be trivially easy to install, configure, and use.

With mobile apps as with anything else, as testers, we should focus our attention on the user. Try to think about testing from the users’ point of view.

Let’s consider an airline mobile app. Who’s the target user? Typically, the frequent flyer. From the user’s perspective, if you only travel every now and then, why go to the trouble of downloading and installing an app when you could just go to the mobile-optimized website? And the infrequent travelers who do use the app are not the airline’s main customers.

So, what does our target user, the frequent flyer, want to do? Well, as a frequent flyer, I often use such apps to check details on my flights. For example, is there a meal? What gate does it leave from?

When I use these apps, I’m concerned with functionality, and also usability. For example, at one point the Delta app had a page called “my reservations” that would show various kinds of information about a reservation, but not whether the flight had a meal. You could find the meal details on the appropriate flight status page, but to get there, I had to back out of “my reservations,” remember (or find) the flight number, enter the flight number, and click to check the status. Then, buried in an obscure place on that page was an option to see the amenities, represented as icons rather than in words, as if to make it even less clear.

Okay, so the functionality was there, but the usability was pretty poor. The information was a good seven or eight clicks away from the “my reservations” page. Besides, there’s just no good reason not to include the answer to an obvious question: are you going to feed me?

Another important feature for a frequent flyer is to be able to check weather and maps for connections and destination cities. For example, if I’ve got a connection, I’ll want to know if there is bad weather in that area that might cause inbound delays. If so, maybe I want to hedge my bets and get on a later connecting flight. If I’m arriving somewhere, I might want to quickly call up a map and see where the airport is in relation to my hotel.

So, there’s an interoperability element. The app won’t have that ability embedded within itself; it calls other apps on the device. Sometimes that works, but I’ve had plenty of times when it didn’t—at least not the first time.

There’s also a portability element. I want to be able to solve my travel problems regardless of what kind of device I’m using at the time. So, whether I’m using my phone, or my tablet, or my PC, I want similar functionality and a similar way to access it.

Unfortunately, organizations seem to have a lot of trouble satisfying users’ desire for consistency. Obviously, some user interface differences are necessary. But just as driving a car and driving a truck feel similar enough that there’s no problem moving from one to the other, the same should apply to apps, regardless of the platform. When you test different platforms (and you certainly should), make sure that your test oracles include your app on other platforms.

The problem is exacerbated by siloing in larger organizations. There can be three different groups of people developing and testing the mobile-optimized website, the native app, and the full-size website.

Going back to the frequent flyer example, I need to use these apps or mobile-optimized websites under a wide variety of circumstances. I might have a Wi-Fi connection, fast or slow, or only a 4G or 3G data connection. In fact, the more urgent travel problems are likely to arise in situations with challenging connectivity, such as when you’re running through an airport.

Speed and reliability can be big concerns too. If I’m in the middle of trying to solve a screwed-up travel situation, the last thing I want is to watch a little spinning icon, or to have to start over when the app crashes.

In addition to the users’ perspective, the airline also is a stakeholder, and that perspective has to be considered when testing. For example, with international customers there could be some localization issues related to supporting different languages.

The airline wants to make it easy for a potential customer to go from looking for a particular flight on a particular day to actually purchasing a seat on the flight. If this natural use case—browse, find, purchase—is too hard, a frequent flyer can move from one airline’s app to another.

Ultimately, the airline wants its app to help gain market share. It does that by solving the frequent flyers’ typical problems, reliably, quickly, and easily.

Most of you probably aren’t testing airline apps. However, whatever type of app you are testing, you have to do the same kind of user and stakeholder analysis I just walked you through. It’s critical to consider the different, relevant quality characteristics. We’ll cover this in more detail later.

So, you’ve now met your user—and she (or he) is you, right, or at least a lot like you? You probably interact with apps on your mobile device daily, hourly, in some cases continuously. You probably expect those apps to be self-explanatory, fast, and reliable. When you get a new app, you expect it to be easy to install and easy to learn, or you abandon it. So, when you’re testing, remember to think like your user. Of course, you’ll need to test in a lot of different situations, which is something we’ll explore a lot more in this book.

1.2 Test your knowledge

Let’s try one or more sample exam questions related to the material we’ve just covered. The answers are found in Appendix C.

Question 3 Learning objective: MOB-1.2.1 (K2) Explain the expectations for a mobile application user and how this affects test prioritization

Which of the following is a typical scenario involving a mobile app that does not meet user expectations for ease-of-use?

A. Users abandon the app and find another with better usability.

B. Users continue to use the app, as their options are limited.

C. Users become frustrated by the app’s slow performance.

D. Testers should focus on usability for the next release.

3 CHALLENGES FOR TESTERS

The learning objectives for Chapter 1, Section 3, are as follows:

MOB-1.3.1 (K2) Explain the challenges testers encounter in mobile application testing and how the environments and skills must change to address those challenges.

MOB-1.3.2 (K2) Summarize the different types of mobile applications.

In this section, we’ll take a broad look at some topics we’ll cover in depth later, and revisit the different types of mobile apps to add more clarity.

The number of uses for mobile apps just keeps on growing. While writing this book, for example, I checked and found that there are actually Fitbit-type devices and mobile apps for dogs—six of them. They allow you to monitor your dog’s exercise levels and so forth. If you’ve got an overweight dog, I suppose that makes sense.

However, some mobile applications are much more mission critical or safety critical. One of our clients, in the health-care management business, manages hospitals, emergency rooms, urgent care facilities, doctors’ offices, and pharmacies. This is obviously mission critical and safety critical. They have mobile apps that are used by health-care practitioners. Imagine if the app gets somebody’s blood type wrong, misses a drug allergy or interaction, or something similar. A person could die.

Further, the number of people who are using mobile apps is huge. It’s in excess of a billion, and it’s growing.2 Just think, a little over a decade ago, the one-laptop-per-child idea was seen as edgy and revolutionary. Now it seems almost quaint, with smartphones everywhere.

I’ve heard reports from relief workers that, in some refugee camps, you will find people trading their food rations so they can pay their mobile phone bills. It is more essential to them to have a working phone than to eat, and apparently there are good reasons for that. The phone is their connection to the outside world, and thus one of their few ways of leveraging their way out of those camps. It’s a remarkable concept on a number of levels.

So, from private jets to refugee camps to everywhere in between, mobile devices and their apps are now completely ubiquitous, and their use promises only to explode further. How? Well, the Internet of Things seems on track to gradually expand to the point where every single object has an IP address: refrigerator, dryer, stop light, vending machine, billboard, implantable medical device, and even you. Such immersion in a sea of continuous processing, possibly with your place in it mediated by some augmented reality beyond what Google Glass offered, or even virtual reality, has some challenging social implications.

Just from a testing point of view, it’s mind-boggling. How will we deal with all of this? This book doesn’t have all the answers, but I hope to give you some ideas of how to chip away at the problems.

Software releases

One of the problems is the firehose of software releases. Release after release after release. Marketing and sales people say, “We gotta get this new feature out, and we gotta get that new feature out, jeez, our competitors just got those features out, we gotta get those new features out.”

There’s a fine line between speed-to-market and junk-to-market, and users will be fickle when confronted with junk. It’s certainly not unusual for an insufficiently tested update to occur, causing users to howl as their favorite features break. Maybe they become former users. Maybe your app gets a lot of one-star reviews on the app store.

You can’t just test the app. You also must test how the app gets onto the device: the installation and update processes. When those processes go wrong, they can cause more trouble than a few bugs in a new feature.

So, part of the solution, which I’ll cover in more detail in Chapter 4, is the range of tools out there to help you cover more features and configurations in less time. Further, a lot of these are open-source tools, which is good, because free is always in budget, though you have to consider your own time working with the tool.

Further, a tool is not a magic wand. You need to have the skills to use the tool. You also need to have the time to use the tool, and the time to do sufficient testing with the tool.

Covering functional and non-functional quality characteristics

Users typically use software because it exhibits certain behaviors that they want or need. The ISTQB® has adopted the industry-standard approach of broadly classifying these characteristics as functional and non-functional. Functional characteristics have to do with what the software does, while non-functional characteristics have to do with how the software does what it does.

In testing, we need to address both functional and non-functional characteristics. For example, we have to test functionality, to see whether the software solves the right problem and gives the right answers. We also need non-functional tests whenever it matters whether the software solves problems quickly, reliably, and securely, on all supported hardware platforms, in a way that users find easy to learn, easy to use, and pleasing to use. This need to address both functional and non-functional aspects of the software presents another set of challenges. Let’s illustrate this challenge with an example.

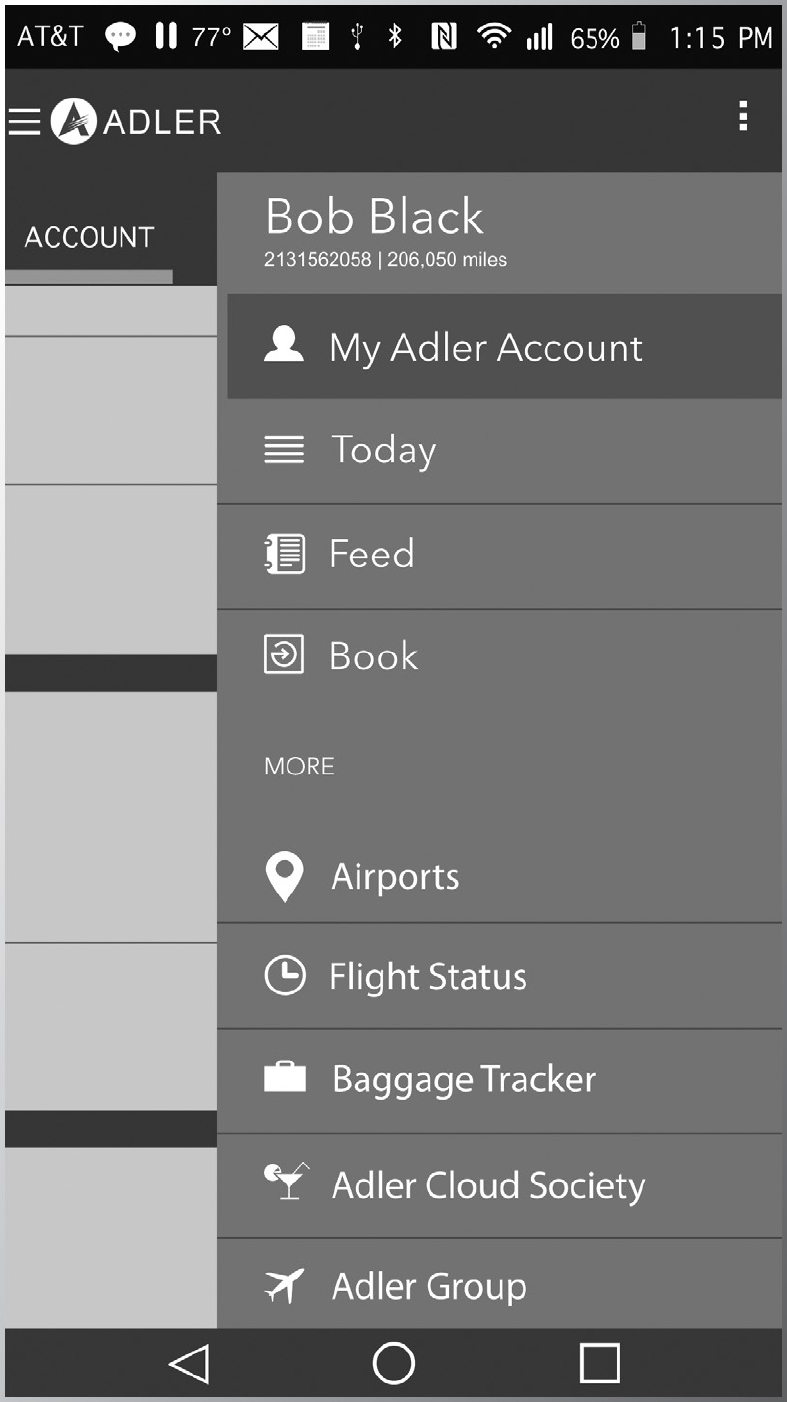

Figure 1.1 shows an example of the Adler Airlines app on a Windows device.3 We’ll see a contrasting example of the Adler app on an Android device a little later, which will add yet another dimension of test coverage. If you look at this app, there are some features that require connectivity, such as checking flight status. Some features do not, such as looking at existing trips that have already been downloaded to the phone.

Figure 1.1 The Adler app welcome screen

For the features that don’t require connectivity, you can test in a test lab, a cubicle, or wherever. However, for features that do require connectivity, consider this: if you’re sitting in a test lab with stable and strong Wi-Fi and cellular signals, is that a good simulation of the users’ experience? In addition, you must consider different devices, different amounts of memory, different amounts of storage, different screen sizes, and so forth.

In addition to portability and connectivity, is security an issue for this app? Performance? Reliability? Usability? Yes, probably all of the above.

If you look closely at the screen, you can see there are quite a few features here. For example, under the “traveling with us” selection, there are a bunch of specific features, such as being able to find Adler’s airline clubs. So, with each new release, there’s a lot of functionality that needs regression testing.

There’s often more than one

Keep in mind, the user is just trying to solve a problem. The native apps (for however many platforms), the mobile-optimized website, the website as seen on a PC browser: for the user, these are just tools, a means to an end. The user wants to grab whatever device happens to be handy and able to connect at that moment, and use it to solve their problem quickly and easily. Further, a user might start a task on one device and later continue it on another.

For example, if the user books a flight using the native app, then goes to the airline’s website on their PC, the user expects to immediately see that reservation. This is true whether both access methods query the same database or each has a different back-end database. In other words, data synchronization and migration are critical across all of the intended target devices. You’ll need to consider portability across those devices. Whichever device users grab, they’ll expect the user interface to be consistent enough that the cross-platform user experience is, at the very least, not disconcerting and frustrating.

It’s not that the users don’t like you, but they are unlikely to be sympathetic to the significant increase in testing complexity that’s created by the fact that you’ve got three very distinct ways of getting at the same functionality. In fact, there might be two or three distinct native apps (one for each major phone operating system) that need testing, plus you have to test the websites on multiple browsers. Most users don’t know about that and probably wouldn’t care. They want it all to work, and they want new features all the time, and, if you do break something, they want bug fixes right away—because that’s the expectation that’s been created by all the options out there.

As I mentioned before, in the case of enterprise applications, you might have less of a problem. For example, my client in the home improvement store business standardized on the iPhone, in a particular configuration. However, the trend is towards enterprises allowing what’s called BYOD—bring your own device—so you’d be back in the same scenario in terms of testing supported devices.

So, it’s almost certain that you’re facing a real challenge with testing supported configurations. We’ll address ways to handle these challenges in great detail later in this book. For the moment, though, let me point out that the type of app you’re dealing with matters quite a bit, especially in terms of test strategy and risk.

If your company is still in the mobile device dark ages, you might only have to test a single website, designed for PC browsers. That might work functionally, and it makes your life a lot easier, but it probably looks horrible on a small screen and makes the user unhappy. Maybe you have a mobile-optimized website. As I mentioned before, this can be a separate site or it can be the same site based on responsive web design technology.

In either case, these can be classic thin-client implementations. There’s no code running on the mobile device other than the browser. That makes testing easier, because portability comes down to one or two browsers on each supported mobile device. However, you can’t take advantage of any of the capabilities of the mobile device other than those the browser can access, such as location.

So, your development team might decide to go with a thicker-client approach. This involves creating browser plug-ins that can access device features. This is obviously easier from a development point of view than building a native app, but, for testing, you start to get into the portability challenges of a native app. Further, you might not get the ability to work disconnected that a native app would provide.

Moving further along the road toward a native mobile app are the hybrid mobile apps. These have some elements of a web-based app but also some native elements that provide access to device features. Depending on the native elements and the features accessed, you could have almost all the portability challenges of a native app. You’ll need to test every device feature used by the native code. However, developers can build a fully functional mobile app more quickly, since they enjoy some of the benefits of web-based development.

Once you get into having one or more native mobile apps, you’ve really entered the supported configurations testing morass. There are a huge number of possibilities. It’s likely that you’ll have to resort to some mix of actual hardware—yours and other people’s—and simulators. We’ll discuss this further in Chapter 4.
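To get a feel for how quickly supported configurations multiply, here is a minimal Python sketch. The dimensions and values are hypothetical placeholders, not a recommended device list; in practice you’d take them from your own usage data and supported-device policy. Taking one value from each dimension (the Cartesian product) gives the full set of combinations you would face without any reduction strategy.

```python
from itertools import product

# Hypothetical configuration dimensions, for illustration only.
dimensions = {
    "device": ["phone-small", "phone-large", "tablet"],
    "os_version": ["os-n", "os-n-1", "os-n-2"],
    "connectivity": ["wifi-fast", "wifi-slow", "4g", "3g", "offline"],
    "free_storage": ["low", "normal"],
}


def all_configurations(dims):
    """Return every combination of one value per dimension (the Cartesian product)."""
    names = list(dims)
    return [dict(zip(names, values)) for values in product(*(dims[n] for n in names))]


configs = all_configurations(dimensions)
print(len(configs))  # 3 * 3 * 5 * 2 = 90
```

Adding just one more two-valued dimension, such as portrait versus landscape orientation, would double the count to 180, which is why choosing a manageable subset of configurations matters so much.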

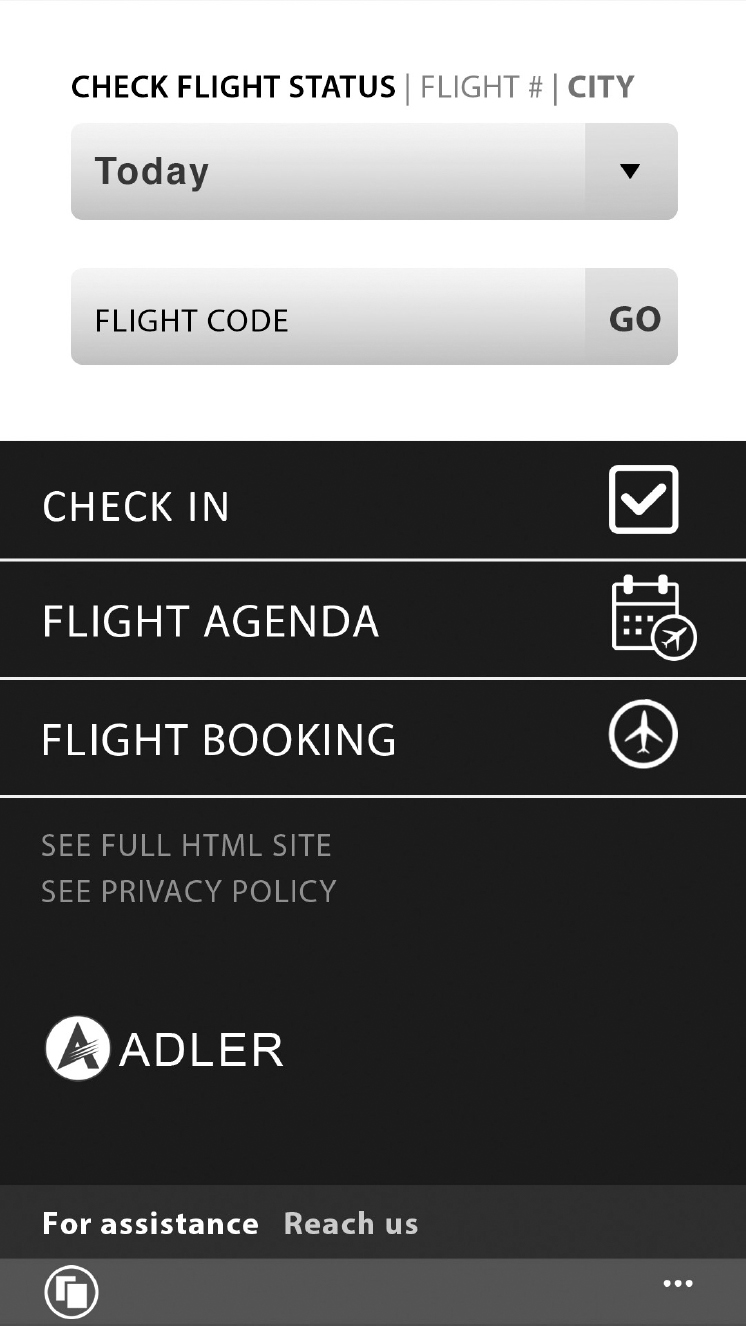

Let’s look more closely at the Adler mobile app and the Adler mobile website. In Figure 1.2, you see the Adler mobile app running on an Android phone. In Figure 1.3, you see the mobile-optimized Adler website.

The features are more or less the same, which is what the user would want. The user interface is different, but moving between them isn’t too challenging. However, the code is different. So, there are now two opportunities for a particular bug in a particular feature.

In addition, the distribution of work between the client side and the server side differs between the two. The native app can do more on the client side, while the mobile-optimized website must rely more on the server side. For example, some things that the mobile-optimized website appears to do on my phone are actually done on the server, whereas the native app can do them on the phone itself. I can use the native app without a connection. Certain features, like checking flight status, don’t work offline, but many features do. However, I can’t use the mobile-optimized website without a connection. Even if I have the webpage loaded in my browser, if I try to do anything on it while disconnected, I’ll get an error message.
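The offline behavior just described can serve as a simple test oracle. The following Python sketch assumes that each feature can be tagged by whether it needs the server, and encodes the expected result for each app type when the device has no connection. The feature names and the `needs_server` flag are hypothetical illustrations based on the Adler example, not part of any real app.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    needs_server: bool  # hypothetical flag: True if the feature cannot work offline


# Hypothetical feature list, loosely based on the Adler example.
FEATURES = [
    Feature("check flight status", needs_server=True),
    Feature("view downloaded trips", needs_server=False),
]


def expected_offline_result(app_type: str, feature: Feature) -> str:
    """Expected outcome of using a feature while the device has no connection."""
    if app_type == "mobile_website":
        # Thin client: every action goes to the server, so every action fails offline.
        return "error"
    if app_type == "native":
        # The native app can handle client-side features on its own.
        return "error" if feature.needs_server else "works"
    raise ValueError(f"unknown app type: {app_type}")


for app in ("native", "mobile_website"):
    for f in FEATURES:
        print(app, f.name, expected_offline_result(app, f))
```

Writing the expectation down this way makes it obvious that the same feature needs different offline test cases, with different expected results, for each kind of app.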

Figure 1.2 The Adler mobile app

So I have basically the same features, albeit with a somewhat different user interface and very different behavior if there’s no connection. The user can use either to achieve their travel goal, but for us as testers they are distinct, different things. Each must be tested, and tested in ways that address the risks to system quality posed by each.

Figure 1.3 The Adler mobile website

1.3 Test your knowledge

Let’s try one or more sample exam questions related to the material we’ve just covered. The answers are found in Appendix C.

Question 4 Learning objective: MOB-1.3.1 (K2) Explain the challenges testers encounter in mobile application testing and how the environments and skills must change to address those challenges

Which of the following statements is true?

A. The set of mobile apps is limited by the cost of integrated development environments.

B. The set of devices supported by any given mobile app can be limited by the cost of test environments.

C. There is usually enough time for testing at the end of each release cycle.

D. Testing can be focused on a single device/browser combination, due to similarities in behavior.

A mobile app runs entirely on a server, with no specific code written for or running on the mobile devices that access it. What kind of mobile app might it be?

A. mobile website;

B. native;

C. hybrid;

D. no such mobile apps exist.

4 NECESSARY SKILLS

The learning objective for Chapter 1, Section 4, is recall of syllabus content only.

While we’ll address the topic of skills in much more detail in Chapter 3, I bet you’re starting to realize now that mobile testing is testing like any other testing, only more so. In other words, you’ll need all the usual tester skills, plus some additional ones.

For example, the ISTQB® Foundation syllabus 2018 covers topics such as:

• requirements analysis;

• test design techniques;

• test environment and data creation;

• running tests;

• reporting results.

You’ll need all of the skills covered in that syllabus. Also, since a lot of mobile software is developed following Lean, Agile, DevOps, or similar life cycles, you should have the Agile testing skills discussed in the ISTQB® Agile Tester Foundation syllabus 2014.4

In addition to these device-independent skills, you need to think about the functional capabilities that are present in a mobile device but not in a standard PC, client–server, or mainframe environment.

For example, boundary value analysis is a useful test design technique, whether you’re testing a mobile app or a mainframe app. However, if you’re familiar with the old 3270 mainframe terminals, you can pick one of those up and twiddle it around at all sorts of different angles. It will still look the same and do the same things. Of course, in twiddling it around, you might well drop it on your foot and bust your toe, because a 3270 terminal weighs about 75 pounds. Fortunately, you don’t have to put an accelerometer in something that’s designed to sit on a desk and display EBCDIC characters in green letters.5

For those of you born a lot further away from the center of the last century than I was, consider the humble PC, desktop or laptop. Certainly, we’re seeing some migration of mobile types of functionality into the PC, such as cellular network capability. However, most PCs still don’t care whether you’re touching their screens or tilting them one way or the other. Mobile devices do care, and they behave differently when you do.

These are significant functional differences that have testing implications. In addition, there are many, many quality characteristics above and beyond functionality, such as security, usability, performance, portability, compatibility, reliability, and so forth, that you must consider. You might be used to addressing these as part of testing PC apps or PC browser-based apps, but, if not, non-functional testing is something you’ll need to study. I’ll get you started on the important functional and non-functional mobile testing skills in Chapter 3, but there’s lots to learn.

1.4 Test your knowledge

Let’s try one or more sample exam questions related to the material we’ve just covered. The answers are found in Appendix C.

Question 6 Learning objective: recall of syllabus content only (K1)

A mobile tester can apply test techniques described in the Foundation and Test Analysis syllabi.

A. This statement is true, and no other techniques are required beyond those.

B. This statement is false, as mobile testing requires completely different techniques.

C. Since there are no best practices in mobile testing, only exploratory testing is used.

D. This statement is true, but additional techniques are needed for mobile testing.

5 EQUIPMENT REQUIREMENTS FOR MOBILE TESTING

The learning objective for Chapter 1, Section 5, is as follows:

MOB-1.5.1 (K2) Explain how equivalence partitioning can be used to select devices for testing.

As you might have guessed from Section 3, equipment requirements pose major challenges for mobile application testing. As challenging as PC application compatibility testing can be, it’s much more so with respect to mobile apps in many circumstances. While we cover this in more detail later, I’ll introduce the challenge in this introductory chapter.

Whether you’re creating a native mobile app, a hybrid app, or a mobile-optimized web app, the number of distinct configurations that your app could be run on is huge. Consider the factors: device firmware; operating system; operating system version; browser and browser version; potentially interoperating applications installed on the mobile device, such as a map application; and so on.

In these situations, you encounter what’s called a combinatorial explosion. For example, suppose there are seven factors, and each factor has five possible options that you can choose. How many distinct configuration possibilities are there? Five to the seventh power, or 78,125 distinct configurations.
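To make the arithmetic concrete, here is a minimal Python sketch, assuming the idealized example above in which every factor is independent of the others. The number of configurations is the product of the option counts, so each additional five-option factor multiplies the total by five.

```python
import math


def count_configurations(options_per_factor):
    """Return the number of distinct configurations: the product of the option counts."""
    return math.prod(options_per_factor)


# Seven factors, five options each, as in the example above.
print(count_configurations([5] * 7))  # 78125

# Adding just one more five-option factor multiplies the total by five.
print(count_configurations([5] * 8))  # 390625
```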

Obviously, complete coverage of all possible configurations is impractical. In Chapter 4, we’ll examine in depth how to deal with these device configuration issues. However, for the moment, consider equivalence partitioning.

Instead of saying, “Yep, I’m gonna test all 78,125 possible configurations,” you can say, “I’m gonna make sure that each of the five options for each of the seven factors is present on at least one device in my test environment.”

By doing so, you could get by with as few as five devices, depending on the factors; five is the minimum, because each device can represent only one of a factor’s five options at a time. The risk is that, if any combinatorial interactions occur, you won’t catch them. For example, your app might work fine with all supported operating systems and with all supported mapping apps, but not with one particular operating system version together with one particular mapping app version. Equivalence partitioning can’t help you find this kind of bug unless, through luck, that exact combination happens to be present in your test lab.
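Covering every option of every factor at least once, as described above, is sometimes called each-choice coverage. The following Python sketch is a minimal illustration under the idealized assumption that every device can be set to any option of every factor; factors and options are represented as hypothetical integer indices. Configuration i simply uses option i of every factor, so five configurations cover all 35 options, yet a specific two-factor combination never appears.

```python
def each_choice_configs(options_per_factor):
    """Return configurations in which every option of every factor appears at least once.

    Assumes (hypothetically) that each device can be set to any option of every
    factor independently. Configuration i uses option i of every factor, wrapping
    around (i % count) for factors with fewer options than the largest one.
    """
    rows = max(options_per_factor)
    return [tuple(i % count for count in options_per_factor) for i in range(rows)]


configs = each_choice_configs([5] * 7)  # seven factors, five options each
print(len(configs))  # 5

# Every option of every factor is represented...
print(all({row[f] for row in configs} == set(range(5)) for f in range(7)))  # True

# ...but option 3 of factor 0 (say, an OS version) never meets
# option 2 of factor 1 (say, a mapping app version).
print(any(row[0] == 3 and row[1] == 2 for row in configs))  # False
```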

Now, there are techniques that can help you find pairwise bugs like this. In the ISTQB® Advanced Test Analyst syllabus 2018,6 there’s some discussion about pairwise techniques, which build upon equivalence partitioning. These techniques allow you to find certain combinatorial problems without committing to testing thousands of combinations.
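As a rough illustration of how pairwise techniques extend equivalence partitioning, here is a simple greedy heuristic in Python. It is only a sketch, not necessarily the method described in the syllabus or used by any particular tool, and it makes the same idealized assumption that options can be freely combined. It keeps adding configurations until every pair of options, across every pair of factors, appears at least once. For the seven-factor, five-option example, it can never need fewer than 25 configurations, since any two factors have 25 option pairs and each configuration covers only one of them, and it needs far fewer than 78,125.

```python
from itertools import combinations


def pair_key(f, v, g, w):
    """Normalize a pair of (factor, option) choices so the lower factor index comes first."""
    return (f, v, g, w) if f < g else (g, w, f, v)


def pairwise_configs(options_per_factor):
    """Greedy sketch: add configurations until every pair of options,
    across every pair of factors, appears in at least one configuration."""
    n = len(options_per_factor)
    uncovered = {
        (f, v, g, w)
        for f, g in combinations(range(n), 2)
        for v in range(options_per_factor[f])
        for w in range(options_per_factor[g])
    }
    configs = []
    while uncovered:
        # Seed each configuration with a still-uncovered pair, so every
        # configuration covers at least one new pair and the loop terminates.
        f, v, g, w = min(uncovered)
        row = {f: v, g: w}
        for h in range(n):
            if h in row:
                continue
            # Pick the option for factor h that covers the most uncovered pairs
            # together with the options already chosen for this configuration.
            row[h] = max(
                range(options_per_factor[h]),
                key=lambda x: sum(pair_key(h, x, k, row[k]) in uncovered for k in row),
            )
        config = tuple(row[h] for h in range(n))
        for a, b in combinations(range(n), 2):
            uncovered.discard((a, config[a], b, config[b]))
        configs.append(config)
    return configs


configs = pairwise_configs([5] * 7)
print(len(configs))  # at least 25, far fewer than 78,125
```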

In any case, when doing equivalence partitioning, it’s important that you carefully consider all the relevant options for each factor. For example, is just one Android device sufficient to represent that operating system? Not always, for reasons we’ll discuss in later chapters.

It’s also important to consider factors above and beyond operating systems, browsers, interoperating applications, and other software. For example, is the device at rest, or is it moving at walking speed, at highway speed, or even at bullet-train speed, if you’re supporting Europe and Japan? Lighting can affect screen brightness and your ability to read the screen, and lighting tends to be different inside versus outside. If you’re outside, is it day or night, sunny or overcast?

So, what are the factors to consider? Here are some of them:

• the device manufacturer;

• the operating system and versions;

• the supported peripherals;

• whether the app is running on a phone or a tablet;

• which browser’s being used;

• whether it’s accessing the front or back camera, which might have different resolutions.

There are six factors here, but possibly hundreds of combinations. Rather than trying to test all possible combinations, you should ensure that you have one of each option for each factor present in the test environment.
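In practice, you select from real devices whose attributes come bundled together, so you can’t always reach the idealized minimum. One way to approach this, sketched below in Python, is a greedy heuristic: repeatedly pick the device that represents the most still-missing options until every required option of every factor is covered. The device names, factors, and options are hypothetical, and the greedy choice is not guaranteed to find the smallest possible set.

```python
def select_devices(inventory, required):
    """Greedy sketch of equivalence-partition-based device selection.

    inventory: device name -> {factor: option} for each device actually available.
    required:  factor -> set of options (partitions) that must be represented.
    Returns (chosen device names, set of (factor, option) pairs no device covers).
    """
    needed = {(f, o) for f, opts in required.items() for o in opts}

    def gain(name):
        # Number of still-missing (factor, option) pairs this device would represent.
        return sum((f, o) in needed for f, o in inventory[name].items())

    chosen = []
    while needed and inventory:
        best = max(inventory, key=gain)
        if gain(best) == 0:
            break  # the remaining options are not available on any device
        chosen.append(best)
        needed -= set(inventory[best].items())
    return chosen, needed


# Hypothetical device lab and hypothetical partitions to cover.
inventory = {
    "phone-A": {"os": "Android 13", "form": "phone", "browser": "Chrome"},
    "phone-B": {"os": "iOS 17", "form": "phone", "browser": "Safari"},
    "tablet-C": {"os": "Android 12", "form": "tablet", "browser": "Firefox"},
    "tablet-D": {"os": "iOS 16", "form": "tablet", "browser": "Safari"},
    "phone-E": {"os": "Android 12", "form": "phone", "browser": "Chrome"},
}
required = {
    "os": {"Android 12", "Android 13", "iOS 16", "iOS 17"},
    "form": {"phone", "tablet"},
    "browser": {"Chrome", "Safari", "Firefox"},
}

chosen, missing = select_devices(inventory, required)
print(chosen)   # ['phone-A', 'tablet-C', 'phone-B', 'tablet-D']
print(missing)  # set()
```

With this hypothetical inventory, the sketch picks four of the five devices, which is the minimum here because four operating system versions are required and each device runs only one. Any (factor, option) pairs left in missing show gaps that no available device can fill, which is useful input when deciding what equipment to acquire.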

1.5 Test your knowledge

Let’s try one or more sample exam questions related to the material we’ve just covered. The answers are found in Appendix C.

Question 7 Learning objective: MOB-1.5.1 (K2) Explain how equivalence partitioning can be used to select devices for testing

How is equivalence partitioning useful in mobile testing?

A. Mobile testers can only apply it to the selection of representative devices for testing, reducing test environment costs.

B. Mobile testers can apply it to identify extreme values for configuration options, application settings, and hardware capacity.

C. Mobile testers don’t use equivalence partitioning in their testing work, but rather rely on mobile-specific techniques.

D. Mobile testers can apply it to reduce the number of tests by recognizing situations where the application behaves the same.

6 LIFE CYCLE MODELS

The learning objective for Chapter 1, Section 6, is as follows:

MOB-1.6.1 (K2) Describe how some software development life cycle models are more appropriate for mobile applications.

Let’s start at the beginning. From the start of medium- to large-scale software development in the 1950s, development teams followed a development model similar to those used in civil, electrical, and mechanical engineering. This model is sequential: requirements identification, then design, then unit development and testing, then unit integration and testing, then system testing, and then, if relevant, acceptance testing. Each phase completes before the next phase starts.

In 1970, Winston Royce gave a presentation in which he portrayed this sequential life cycle as a waterfall graphic. The image, and the name waterfall, stuck, and Royce’s name became attached to the model. Because of this, poor Royce took the blame for years for the weaknesses of the waterfall model. Ironically, while the picture stuck, what Royce said didn’t, because what he said was, “Don’t actually do it like this, because you need iteration between the phases.” When I studied software engineering at UCLA in the 1980s, my professor was careful to explain the iteration and overlap in the waterfall.

We also discussed Barry Boehm’s spiral model, which uses a series of prototypes to identify and resolve key technical challenges early in development.7 In the early 1990s, in an attempt to put more structure around the iteration and overlap of the waterfall model and to incorporate Boehm’s ideas about prototyping and stepwise refinement, practitioners like Philippe Kruchten, James Martin, and Steve McConnell developed and promoted what we now call the traditional iterative models, such as the Rational Unified Process (RUP) and Rapid Application Development (RAD).8 In these models, you break the set of features that you want to create into groups, and each group of features is built and tested iteratively. In addition, the iterations overlap; while developers are building one iteration, you’re testing the previous one.

In the late 1990s, the various Agile techniques emerged from the traditional iterative models, and then, in the 2000s, Lean arrived, usually in the form of Kanban. These approaches share the idea of breaking development work into very small iterations or even single features, building and testing those iterations or features, and then delivering them.

Agile, Kanban, and Spiral are all capable of supporting rapid release of software. You release something, then build on that, then build on that, and so forth, over and over again. Done right, these models allow you to attain some degree of speed to market without totally compromising testing. However, a lot of app developers teeter on the jagged edge of this balance. Sometimes, in the rush to get something out, they short-circuit the testing.

Regardless of life cycle, but especially with life cycles that emphasize speed, such as Agile, Lean, and Spiral, it’s really important that you, as a tester, help people understand the risks they are taking. In addition, you can and should use techniques like risk-based testing to make sure that the most critical areas are tested sufficiently and the less critical areas at least get some attention.
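To illustrate the idea of risk-based testing mentioned above, here is a minimal Python sketch. It assumes one common convention, rating likelihood and impact on a 1-to-5 scale and multiplying them into a risk score; the risk items, the thresholds, and the tier names are hypothetical. Items are sorted so the highest risks come first, and each score is mapped to a depth of testing.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    name: str
    likelihood: int  # assumed scale: 1 (very unlikely) to 5 (very likely)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # One common convention: risk score = likelihood x impact (1 to 25).
        return self.likelihood * self.impact


def depth_for(score: int) -> str:
    """Map a risk score to a hypothetical depth-of-testing tier."""
    if score >= 15:
        return "extensive"
    if score >= 8:
        return "broad"
    return "cursory"


# Hypothetical risk items for an airline app like the Adler example.
risks = [
    RiskItem("boarding pass fails to load offline", likelihood=3, impact=3),
    RiskItem("booking is charged twice", likelihood=3, impact=5),
    RiskItem("icon misaligned on tablets", likelihood=4, impact=1),
]

# Highest risks first, so they get tested first and most thoroughly.
for item in sorted(risks, key=lambda r: r.score, reverse=True):
    print(f"{item.name}: score {item.score}, {depth_for(item.score)} testing")
```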

The type of app matters. There might be less risk associated with a mass-market entertainment application like a video streaming app and more risk associated with a medical facility management app, such as the one I mentioned earlier in this chapter. With safety-critical software, regulations such as the Food and Drug Administration (FDA) regulations for medical systems come into play, which means a certain amount of documentation must be gathered to prove what you’ve tested. These types of factors might lead your organization to use a traditional iterative life cycle or even the classic waterfall.

One bad habit that has become common in software engineering is what I’d call the spaghetti development model. This is based on the old line that the best way to check if spaghetti is done is to throw it against the wall to see if it sticks. In mobile development, people rationalize this by saying, “Hey, if we have a bad release, we can just do an Over The Air (OTA) update, pushing a fix to the people using the app.”

Frankly, this is using customers as testers. Yes, it is possible to push fixes out fast in some cases. However, consider a famous quote from Ray Donovan, a businessman turned cabinet secretary who was accused of massive fraud dating from his business days. After he was acquitted, he asked, “Where do I go to get my reputation back?”9 That’s something to keep in mind. In the long run, I think such organizations will get a reputation for wasting their users’ time and using them as a free test resource. Think: one-star reviews in the app store.

People have various degrees of tolerance for vendors wasting their time. I will admit to being completely intolerant of it. However, I think it’s a mistake to assume that most people are at the other end of the spectrum, and will tolerate your organization doing regular OTA hose-downs with garbage software without posting a nasty review.

Consider Yelp, the social app for restaurant listings and other similar public venues. I like Yelp. I use it a fair amount. It isn’t perfect and it sometimes has weird glitches related to location. However, for the most part it does what I need it to do.

I travel a lot, and I have to find a place to eat dinner. I’m not picky; I’m very open to eating many different kinds of food. However, I’m not open to the possibility of getting food-borne illness. That’s not only inconvenient when traveling, it’s exceptionally unpleasant and has a real impact on my ability to do my job and get paid. By using Yelp and its reviews, I can find food I’ll enjoy that probably won’t make me sick. Now, while I hate food poisoning, Yelp is not really a high-risk app. The quality is definitely in the good-enough category, not the bet-your-life category.

Contrast that with one of my clients that maintains a patient logging application. Doctors and patients use this app when they are testing a drug for safety and effectiveness. During the development of the drug, trial patients log their results and side effects. This information is used by the FDA to decide whether the drug can be released to the public. As such, this app is FDA regulated.

Obviously, it should get, and I’m sure does get, much more scrutiny than Yelp. That doesn’t mean you can’t use Agile methodologies. In fact, my client did use Agile for some period of time. They eventually went back to waterfall because it fit their release schedule better. However, either approach worked, as long as they gathered the necessary regulatory documentation.

1.6 Test your knowledge

Let’s try one or more sample exam questions related to the material we’ve just covered. The answers are found in Appendix C.

Question 8 Learning objective: MOB-1.6.1 (K2) Describe how some software development life cycle models are more appropriate for mobile applications

Which of the following statements is true?

A. Agile life cycles are used for mobile app development because of the reduced need for regression testing associated with Agile life cycles.

B. Sequential life cycles are used for mobile app development because of the need for complete documentation of the tests.

C. Spiral models, with their rapid prototyping cycles, create too many technical risks for such life cycles to be used for mobile testing.

D. Mobile apps are often developed in a stepwise fashion, with each release adding a few new features as those features are built and tested.

In this chapter, we’ve introduced many of the topics we’ll explore in this book. You saw the different types of mobile devices and mobile apps. Next, you saw what mobile users expect from their devices and the apps running on them. These mobile device and app realities, along with the expectations of the users, create challenges for testers, which we’ve surveyed here. You also briefly saw how mobile testing realities influence tester skills and equipment requirements. We closed by looking at how ongoing changes in the way software is built are influencing mobile app development. In the following chapters, we’ll explore many of these topics in more detail and look more closely at how mobile app testing differs from testing other kinds of software.

1 If you’re having a hard time visualizing the gym scene without mobile devices, watch Pumping Iron (1977), the Arnold Schwarzenegger movie that introduced the soon-to-be movie star, and gym culture in general, to a wider audience.

2 As you can see at the following link, multiple apps have more than one billion users each, so the total number of mobile app users must be well over one billion: www.statista.com/topics/1002/mobile-app-usage/

3 Don’t bother searching online for flights from Adler Airlines. The example airline apps are entirely fictitious.

4 You can find a detailed explanation of the Agile Tester Foundation syllabus in Rex Black et al. (2017) Agile Testing Foundations. Swindon: BCS.

5 You might be thinking, “Wait, whoa, EBCDIC, 3270, mainframe? What is that stuff he’s talking about?” Well, trust me, it was a thing, back in the day.

6 See https://certifications.bcs.org/category/18218

7 Barry W. Boehm, “A Spiral Model of Software Development and Enhancement.” Available at: http://csse.usc.edu/TECHRPTS/1988/usccse88-500/usccse88-500.pdf

8 Steve McConnell’s 1996 book Rapid Development (Redmond, WA: Microsoft Press) is a reference for RAD. Philippe Kruchten’s 2003 book The Rational Unified Process: An Introduction (Boston, MA: Addison-Wesley Professional) is a reference for RUP.

9 You can read The New York Times account of the outcome of Donovan’s trial, and his comment about what the trial had done to his reputation, here: www.nytimes.com/1987/05/26/nyregion/donovan-cleared-of-fraud-charges-by-jury-in-bronx.html?pagewanted=all