In the Feature extraction section of Chapter 2, Data Preparation for Spark ML, we reviewed a few methods for feature extraction as well as their implementation on Apache Spark. All the techniques discussed there can be applied to our datasets here, especially those that use time series to create new features.

As mentioned earlier, for this project, we have a categorical target variable of student attrition and a lot of data on demographics, behavior, performance, and interventions. The demographic data is almost ready to use but still needs to be merged; the following table gives a partial list of the features:

| Feature Name | Description |
|---|---|
| | These are the average ACT scores |
| | This is the age |
| | This is the student's county unemployment rate |
| | This is a first-generation student indicator using the "Y/N" options |
| | This is the high school GPA |
| | This is an indicator of the type of high school |
| | This is the student's reported race/ethnicity |
| | This is the distance of the student's home from campus |
| | This is the student's gender |
| | This is the number of Starbucks located in the student's county |

Many log files about students' web behavior are also available for this project. For these, we will use techniques similar to those discussed in the Feature preparation section of Chapter 4, Fraud Detection on Spark.

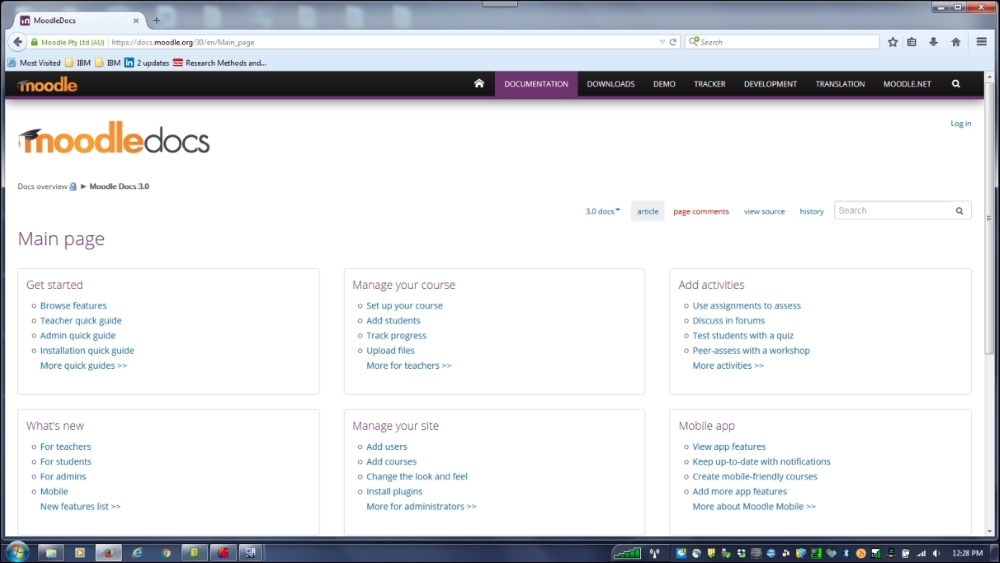

For this project, our feature preparation focuses on extracting more features from the Moodle learning management system, as this is the main and unique data source for learning analytics and covers many rich characteristics of the students' learning. The data often includes students' clicks, timing, and total hours spent on each learning activity, along with statistics on access to reading materials, the syllabus, assignments, submission timing, and so on.

All the methods and procedures discussed here for Moodle can also be applied to other learning management systems, such as Sakai and Blackboard. However, for data about student behavior, especially those behavior characteristics that need to be measured with data points from Moodle, a lot of work is needed to get them organized, make them meaningful, and then merge them into the main dataset; all this will be covered in this chapter.

In the previous chapters, we used SparkSQL, MLlib, and R for feature extraction. For this project, we can use all of them, with SparkSQL and MLlib being the most effective tools.

For your convenience, a complete guide to MLlib feature extraction can be found at http://spark.apache.org/docs/latest/mllib-feature-extraction.html.

For this project, extracting useful features from web log files adds a lot of value. However, the main challenge lies in organizing the existing datasets and then developing new features from them. Some of the data exported from Moodle is easily organized, such as that on student performance and classroom participation. Even with performance features, however, introducing the time dimension into our feature development turns changes in performance over time into useful features in their own right. Following this logic, many new features can be developed.
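To make the idea of a time-based performance feature concrete, here is a minimal sketch in plain Scala that turns a student's weekly scores into a single trend feature, the least-squares slope of score against week. The object name `PerformanceTrend` and the (week, score) layout are assumptions made for illustration and are not taken from the Moodle export.

```scala
object PerformanceTrend {
  // Least-squares slope of score against week number.
  // Input: (week, score) pairs for one student (hypothetical layout).
  // Returns None when the slope is undefined (fewer than two points,
  // or all points share the same week).
  def slope(points: Seq[(Double, Double)]): Option[Double] = {
    val n = points.size
    if (n < 2) None
    else {
      val meanX = points.map(_._1).sum / n
      val meanY = points.map(_._2).sum / n
      val sxx = points.map { case (x, _) => (x - meanX) * (x - meanX) }.sum
      if (sxx == 0.0) None
      else {
        val sxy = points.map { case (x, y) => (x - meanX) * (y - meanY) }.sum
        Some(sxy / sxx)
      }
    }
  }
}
```

A negative slope would flag a student whose scores are falling week over week, which is a different signal from a low average score.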

Besides the preceding features, there are also some important time-related items from which categorical features can be created. Examples include the specific time when students submitted their homework, such as the middle of the night or the afternoon, and whether they submitted days or only hours before the due time. The time intervals between periods of participation are also significant and can form new features.
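The time-related features described above could be derived along the following lines. This is only a sketch in plain Scala using `java.time`; the object name `SubmissionTiming`, the bucket labels, and the cut-off hours are all illustrative assumptions rather than values from the project.

```scala
import java.time.{Duration, LocalDateTime}

object SubmissionTiming {
  // Time-of-day bucket for a submission. Cut-off hours are assumptions.
  def timeOfDay(submitted: LocalDateTime): String = {
    val h = submitted.getHour
    if (h < 6) "night"
    else if (h < 12) "morning"
    else if (h < 18) "afternoon"
    else "evening"
  }

  // Bucket by how far ahead of the due time the work was submitted.
  def leadTime(submitted: LocalDateTime, due: LocalDateTime): String = {
    if (submitted.isAfter(due)) "late"
    else if (Duration.between(submitted, due).toHours < 24) "last_day"
    else "early"
  }

  // Whole hours between consecutive participation events, in time order.
  def gapsInHours(events: Seq[LocalDateTime]): Seq[Long] = {
    val sorted = events.sortWith(_.isBefore(_))
    sorted.zip(sorted.drop(1)).map { case (a, b) => Duration.between(a, b).toHours }
  }
}
```

The gap sequence can then be summarized per student, for example by its maximum or mean, to obtain a numeric feature.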

Some social network analysis tools are also available to extract new features from the learning data that measure student interaction with teachers as well as with classmates.
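As a rough illustration of such interaction features, the following plain Scala sketch counts, for each person, the number of distinct people they exchanged forum replies with, and how many replies each student addressed to an instructor. It does not use any particular social network analysis tool; the `Reply` record and the object name `InteractionFeatures` are hypothetical.

```scala
object InteractionFeatures {
  // One row per forum reply: who wrote it and whom it answers (hypothetical layout).
  final case class Reply(from: String, to: String)

  // Number of distinct people each person exchanged replies with
  // (undirected degree; self-replies are ignored).
  def degree(replies: Seq[Reply]): Map[String, Int] = {
    val pairs = replies
      .filter(r => r.from != r.to)
      .flatMap(r => Seq(r.from -> r.to, r.to -> r.from))
    pairs.groupBy(_._1).map { case (person, ps) => person -> ps.map(_._2).distinct.size }
  }

  // Number of replies each student wrote to any instructor.
  def repliesToInstructors(replies: Seq[Reply], instructors: Set[String]): Map[String, Int] = {
    replies
      .filter(r => instructors.contains(r.to) && !instructors.contains(r.from))
      .groupBy(_.from)
      .map { case (student, rs) => student -> rs.size }
  }
}
```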

With all the preceding points taken into consideration, for this use case, we can develop more than 200 features that are of potentially high value to our modeling.

Having hundreds of features in hand will enable us to obtain good prediction models. However, feature selection is definitely needed, as discussed in Chapter 3, A Holistic View on Spark, partly to keep the models easy to explain and partly to avoid overfitting.

For feature selection, we will adopt the strategy that we tested in Chapter 3, A Holistic View on Spark, which completes feature selection in three steps. First, we will perform principal components analysis (PCA). Second, we will use our subject knowledge to aid the grouping of features. Finally, we will apply machine learning feature selection to filter out redundant or irrelevant features.

If you are using MLlib for PCA, visit http://spark.apache.org/docs/latest/mllib-dimensionality-reduction.html#principal-component-analysis-pca. This link has a few code examples that users may adopt and modify to run PCA on Spark. For more information on MLlib, visit https://spark.apache.org/docs/1.2.1/mllib-dimensionality-reduction.html.

To use R, there are at least five functions to perform PCA, which are as follows:

- prcomp() (stats)

- princomp() (stats)

- PCA() (FactoMineR)

- dudi.pca() (ade4)

- acp() (amap)

The prcomp and princomp functions from the base stats package are commonly used, and good functions are available to summarize and plot their results. Therefore, we will use these two functions.

As is always the case, if some subject knowledge can be used, feature reduction results can be improved greatly.

For our example, some concepts used by previous student attrition research are good to start with. They include the following:

- Academic performance

- Financial status

- Emotional encouragement from personal social networks

- Emotional encouragement in school

- Personal adjustment

- Study patterns

As an exercise, we will group all the developed features into six groups according to which of the preceding six concepts they measure. Then, we will perform PCA six times, once for each data category. For example, for academic performance, we need to perform PCA on 53 features or variables to identify factors or dimensions that can fully represent the information we have about academic performance.
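To show what PCA on one feature group computes, here is a small plain Scala sketch that finds the leading principal component of a group's covariance matrix by power iteration and reports the share of variance it captures. This is a stand-in for illustration only, not the MLlib or R routine used in the project; the object name `GroupPca` and its helpers are hypothetical, and a real run on 53 features would rely on the library functions mentioned in the text.

```scala
object GroupPca {
  type Matrix = Array[Array[Double]]

  // Sample covariance of the columns; each row is one student (needs >= 2 rows).
  def covariance(rows: Matrix): Matrix = {
    val n = rows.length
    val d = rows(0).length
    val means = Array.tabulate(d)(j => rows.map(_(j)).sum / n)
    Array.tabulate(d, d) { (i, j) =>
      rows.map(r => (r(i) - means(i)) * (r(j) - means(j))).sum / (n - 1)
    }
  }

  private def multiply(m: Matrix, v: Array[Double]): Array[Double] =
    m.map(row => row.zip(v).map { case (a, b) => a * b }.sum)

  // Leading eigenvector and eigenvalue of a symmetric matrix by power iteration.
  // Caveat: the all-ones start vector fails if it is orthogonal to that eigenvector.
  def leadingComponent(cov: Matrix, iterations: Int = 200): (Array[Double], Double) = {
    val d = cov.length
    var v = Array.fill(d)(1.0 / math.sqrt(d.toDouble))
    for (_ <- 0 until iterations) {
      val w = multiply(cov, v)
      val norm = math.sqrt(w.map(x => x * x).sum)
      if (norm > 0) v = w.map(_ / norm)
    }
    // Rayleigh quotient v' C v (v has unit length)
    val eigenvalue = multiply(cov, v).zip(v).map { case (a, b) => a * b }.sum
    (v, eigenvalue)
  }

  // Share of the group's total variance captured by the leading component.
  def explainedShare(cov: Matrix): Double = {
    val (_, lambda) = leadingComponent(cov)
    val trace = cov.indices.map(i => cov(i)(i)).sum
    lambda / trace
  }
}
```

When the leading component's share is well below 1, further components are needed, which is how a group can end up with two to four factors instead of one.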

At the end of this PCA exercise, we obtained two to four features for each category, as summarized in the following table:

| Category | Number of Factors | Factor Names |
|---|---|---|
| Academic Performance | 4 | AF1, AF2, AF3, AF4 |
| Financial Status | 2 | F1, F2 |
| Emotional Encouragement 1 | 2 | EE1_1, EE1_2 |
| Emotional Encouragement 2 | 2 | EE2_1, EE2_2 |
| Personal Adjustment | 3 | PA1, PA2, PA3 |
| Study Patterns | 3 | SP1, SP2, SP3 |
| Total | 16 | |

In MLlib, we can use the ChiSqSelector algorithm as follows:

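```scala
import org.apache.spark.mllib.feature.ChiSqSelector

// Create ChiSqSelector that will select top 25 of 240 features
val selector = new ChiSqSelector(25)
// Create ChiSqSelector model (selecting features);
// TrainingData is expected to be an RDD[LabeledPoint]
val transformer = selector.fit(TrainingData)
```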
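ChiSqSelector ranks features by a chi-squared test of independence between each categorical feature and the class label. The following plain Scala sketch computes that Pearson statistic for a single feature so the ranking criterion is easier to see. It is not MLlib's implementation, and the object name `ChiSquared` and the (feature value, label) pair layout are assumptions for illustration.

```scala
object ChiSquared {
  // Pearson chi-squared statistic for one categorical feature against the label.
  // Input: one (featureValue, label) pair per student (hypothetical layout).
  def statistic(pairs: Seq[(String, String)]): Double = {
    val n = pairs.size.toDouble
    val featureTotals = pairs.groupBy(_._1).map { case (k, v) => k -> v.size.toDouble }
    val labelTotals = pairs.groupBy(_._2).map { case (k, v) => k -> v.size.toDouble }
    val observed = pairs.groupBy(identity).map { case (k, v) => k -> v.size.toDouble }
    val cells = for {
      f <- featureTotals.keys.toSeq
      l <- labelTotals.keys.toSeq
    } yield {
      // Expected count under independence of feature and label
      val expected = featureTotals(f) * labelTotals(l) / n
      val obs = observed.getOrElse((f, l), 0.0)
      (obs - expected) * (obs - expected) / expected
    }
    cells.sum
  }
}
```

A feature whose values are unrelated to attrition scores near zero, while a feature strongly associated with attrition scores high and would be kept by a top-k selector.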

In R, we can use some R packages to make the computation easy. Among the available packages, caret is one of the most commonly used.