2

Agent-based Modeling of Human Organizations

2.1. Introduction

This section has a narrow spine but a wide embrace. In addressing the relationship between agents and organizations, it takes in an extensive but highly fragmented set of ideas and studies embedded in organization theories. Its purpose is to try to devise a common ground model from which organization theories of various sorts can be logically derived.

Many theories have been developed to explain how organizations are structured and run and how the stakeholders involved behave. Each one takes a definite point of view without offering any means of understanding how the theories may be correlated with each other, or whether some kind of mapping between some of them can be established. On the contrary, founders of new approaches seem in most cases to ignore previous works. Agent ontology can function as a background model from which the main organizational theories can be derived.

2.2. Concept of agenthood in the technical world

2.2.1. Some words about agents explained

In the technical field, the concept of agenthood is widely used. A general definition of what an agent is has been produced by J. Ferber (1999) (p. 9). Its adaptation is as follows:

All the terms in italics describe the key features of an agent (action, communication, objectives, autonomy and availability of resources). Virtual entities are software components and computing modules. They are not accessible to human senses as such but, as their number grows exponentially in our living ecosystem, they are bound to become more and more the faceless partners of human beings.

According to their main missions, specific names have been given to agents, namely communicating agents (computer environment without perception of other agents), situated agents (perception of the environment, deliberation on what should be done and action in this environment), reactive agents (drive-based reflexes) and cognitive agents (capable of anticipating events and preparing for them).

It is important to contrast object, actor and agent. In the field of computer science, object and actor are conceived as structured entities bound to execute computing mechanisms. An object is depicted by three characteristics:

- – the class/instance relationship representing the class as a structural and behavioral meta-model and the instance as a concrete model of the context attributes under consideration;

- – inheritance enabling one class to be derived from another and benefiting from the former in terms of attributes and procedures;

- – message discrimination to trigger polymorphic procedures (methods in data-processing vernacular) as a function of incoming message contents.

The delineation between objects and communication agents is not always straightforward. This is the fate of all classifications. If a communication agent can be considered as an upgraded sort of object, conversely an object can be viewed as a degenerate communication agent whose language of expression is limited to the key words corresponding to its methods.

An agent has services (skills) and objectives embedded in its structure, whereas an object has encapsulated methods (procedures) triggered by incoming messages.
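To make the contrast concrete, here is a minimal Python sketch. The class names `Thermostat` and `HeatingAgent`, and the modeling of skills as a dictionary of callables, are hypothetical illustrations, not taken from Ferber. The object simply dispatches an incoming message to the method it names; the agent holds an objective and decides for itself which skill, if any, to use.

```python
from typing import Callable, Dict, Optional


class Thermostat:
    """An object: each incoming message triggers the encapsulated method of the same name."""

    def __init__(self, setpoint: float) -> None:
        self.setpoint = setpoint

    def raise_setpoint(self, delta: float) -> float:
        self.setpoint += delta
        return self.setpoint

    def receive(self, message: str, *args: float) -> float:
        # Message discrimination: the keyword in the message selects the method.
        return getattr(self, message)(*args)


class HeatingAgent:
    """An agent: it holds an objective and skills, and decides itself what to do."""

    def __init__(self, target: float) -> None:
        self.target = target  # objective embedded in the agent's structure
        self.skills: Dict[str, Callable[[float], float]] = {
            "heat": lambda t: t + 1.0,
            "cool": lambda t: t - 1.0,
        }

    def choose_skill(self, perceived: float) -> Optional[str]:
        # The choice depends on the gap between perception and objective,
        # not on a message naming the procedure to run.
        if perceived < self.target:
            return "heat"
        if perceived > self.target:
            return "cool"
        return None  # objective met: the agent may decide not to act

    def act(self, perceived: float) -> float:
        skill = self.choose_skill(perceived)
        return perceived if skill is None else self.skills[skill](perceived)
```

For instance, `Thermostat(20.0).receive("raise_setpoint", 1.5)` returns 21.5 because the message names the method, whereas `HeatingAgent(20.0).act(18.0)` returns 19.0 because the agent itself judged that heating serves its objective.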

Actors in computer science perform data processing in parallel, communicate by buffered asynchronous messaging and generally ask the recipient of a message to forward the processed output to another actor.
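The actor behavior described above can be sketched with Python's `asyncio`: each actor owns a buffered mailbox (an `asyncio.Queue`), runs concurrently with the other actors, and sends its result to the actor designated in the message rather than back to the sender. The `Actor` class, the `(payload, reply_to)` message format, the `None` stop message and the doubling computation are assumptions made for illustration.

```python
import asyncio
from typing import Callable, List, Optional


class Actor:
    """Minimal actor: a buffered mailbox and a behavior applied to each message."""

    def __init__(self, name: str, behavior: Callable[[int], int]) -> None:
        self.name = name
        self.behavior = behavior
        self.mailbox: asyncio.Queue = asyncio.Queue()  # unbounded buffer of pending messages
        self.received: List[int] = []

    def send(self, payload: Optional[int], reply_to: Optional["Actor"] = None) -> None:
        # Asynchronous send: the caller enqueues the message and returns immediately.
        self.mailbox.put_nowait((payload, reply_to))

    async def run(self) -> None:
        while True:
            payload, reply_to = await self.mailbox.get()
            if payload is None:  # stop message, a convention of this sketch
                break
            self.received.append(payload)
            result = self.behavior(payload)
            if reply_to is not None:
                reply_to.send(result)  # forward the output to another actor


async def main() -> List[int]:
    printer = Actor("printer", lambda x: x)
    doubler = Actor("doubler", lambda x: 2 * x)
    doubler_task = asyncio.create_task(doubler.run())
    printer_task = asyncio.create_task(printer.run())
    for value in (1, 2, 3):
        doubler.send(value, reply_to=printer)  # results go to printer, not back to main
    doubler.send(None)
    await doubler_task  # all forwarded results are now queued in printer's mailbox
    printer.send(None)
    await printer_task
    return printer.received
```

Running `asyncio.run(main())` returns `[2, 4, 6]`: the doubler never replies to `main`, it forwards each processed value to the printer actor named in the message.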

Another concept associated with agents is the multiagent system (MAS) (Ferber 1999). It has become a paradigm for addressing complex problems. There is no unified, generally accepted definition, but rather several communities of practice. The approach followed by the system designer, namely functional design or object design, is chosen by answering the following two questions:

- – What should be considered as an agent to address the issues raised by the problem to tackle?

A system is analyzed with a functional approach when centered on the functions the system has to fulfil or with an object approach when centered on the individual or the product to deliver.

- – How are the tasks to perform allocated to each agent in the whole system from a methodological point of view?

There is no miracle recipe to achieve a good design. In addition, it is possible to analyze the same system from different angles and to deliver different designs. The approach for coming to terms with a problem is influenced by the historical development of the field involved. Many people are inclined to think of MAS as a natural extension of design by objects.

It is worth noting that the MAS paradigm has spread to many technical areas where centralized control used to be common practice. For several reasons, especially the computing power of on-board systems and the reliability of available telecommunication services, coordination between distributed systems takes place directly between the units of the system without any central controlling device. This situation already prevails in railway networks.

2.2.2. Some implementations of the agenthood paradigm

The concept of agenthood has been applied in various technical fields from the 1990s onwards. Two examples will be described here, namely telecommunication networks and manufacturing scheduling.

2.2.2.1. Telecommunications networks

The world of telecommunication networks is extensively modeled on the basis of this concept. A telecommunication network is a mesh of nodes fulfilling a variety of tasks. Each node is an agent. It can be defined as a computational entity:

- – acting on behalf of other entities in an autonomous fashion (proxy agent);

- – performing its actions with some level of proactivity and/or reactiveness;

- – exhibiting some level of the key attributes of learning, cooperation and mobility.

Several agent technologies are operated mainly in the telecommunications realm. They fall into two main categories, i.e. distributed agent technology and mobile agent technology.

Distributed agent technology refers to a multi-agent system described as a network of actants with the following advantages:

- – solving problems that may be too large for a centralized agent;

- – providing enhanced speed and reliability;

- – tolerating uncertain data and knowledge.

The agents of such a system exhibit the following salient features:

- – communicating between themselves;

- – coordinating their activities;

- – negotiating their conflicts.
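Communication, coordination and negotiation between distributed agents are often illustrated with a contract-net style of task allocation: a manager announces a task, contractors reply with bids, and the task is awarded to the best bid. The sketch below is a simplified, single-round illustration; the names `Contractor` and `announce`, and the cost and capacity model, are hypothetical and not taken from the source.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Contractor:
    name: str
    unit_cost: float  # hypothetical cost per unit of work
    capacity: float  # hypothetical remaining capacity
    awarded: List[str] = field(default_factory=list)

    def bid(self, task: str, workload: float) -> Optional[float]:
        # Communication: reply to the announcement with a price, or decline.
        if workload > self.capacity:
            return None
        return self.unit_cost * workload

    def accept(self, task: str, workload: float) -> None:
        # Coordination: the awarded work is deducted from the remaining capacity.
        self.capacity -= workload
        self.awarded.append(task)


def announce(task: str, workload: float, contractors: List[Contractor]) -> Optional[str]:
    """Manager side: collect bids and award the task to the cheapest one."""
    bids: Dict[str, float] = {}
    for c in contractors:
        price = c.bid(task, workload)
        if price is not None:
            bids[c.name] = price
    if not bids:
        return None  # no contractor can take the task
    winner = min(bids, key=bids.get)  # negotiation settled by the lowest price
    next(c for c in contractors if c.name == winner).accept(task, workload)
    return winner
```

With `nodes = [Contractor("n1", 2.0, 10.0), Contractor("n2", 1.5, 4.0)]`, a first task of workload 3 goes to `n2` (4.5 against 6.0); a second one goes to `n1`, since `n2` no longer has the capacity to bid.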

“Actants” are non-human entities such as configurations of equipment, mediators and software programs and are distinguished from actors that are human beings. But actors and “actants” are entangled in ways that provoke complexity dynamics in many circumstances.

Mobile agent technology functions by encapsulating the interaction capabilities of agents into their descriptive attributes. A mobile agent is a software entity existing in a distributed software environment. The primary task of this environment is to provide the means which allow mobile agents to execute. A mobile agent is a program that chooses to migrate from machine to machine in a heterogeneous network.

The description of a mobile agent must contain all of the following models:

An agent model (autonomy, learning, cooperation).

A life-cycle model: this model defines the dynamics of operations in terms of different execution states and of the events triggering the movement from one state to another (start state, running state and death state).

A computational model: this model, being closely related to the life-cycle model, describes how the execution of specified instructions occurs when the agent is in a running state (computational capabilities). Implementers of an agent gain access to other models of this agent through the computational model, the structure of which affects all other models.

A security model: mobile agent security can be split into two broad areas, i.e. protection of hosts from malicious agents and protection of agents from hosts (leakage, tampering, resource stealing and vandalism).

A communication model: communication is used when accessing services outside of the mobile agent during cooperation and coordination. A protocol is an implementation of a communication model.

A navigation model: this model concerns itself with all aspects of agent mobility from the discovery and resolution of destination hosts to the manner in which a mobile agent is transported (transportation schemes).
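The life-cycle and navigation models can be illustrated together with a small state machine. The Python sketch below keeps the three states named in the text (start, running, death); the event names, the `MobileAgent` class and the representation of hosts as plain strings are hypothetical, and the security and communication models are left out.

```python
from enum import Enum, auto
from typing import List


class State(Enum):
    START = auto()
    RUNNING = auto()
    DEATH = auto()


# Life-cycle model: allowed (state, event) pairs and the resulting state.
TRANSITIONS = {
    (State.START, "launch"): State.RUNNING,
    (State.RUNNING, "migrate"): State.RUNNING,  # execution resumes on the new host
    (State.RUNNING, "terminate"): State.DEATH,
}


class MobileAgent:
    def __init__(self, home: str) -> None:
        self.state = State.START
        self.host = home
        self.itinerary: List[str] = [home]

    def handle(self, event: str, destination: str = "") -> None:
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"event {event!r} not allowed in state {self.state.name}")
        if event == "migrate":
            # Navigation model, greatly simplified: move to a named destination host.
            self.host = destination
            self.itinerary.append(destination)
        self.state = TRANSITIONS[key]
```

An agent launched on `"hostA"`, migrated to `"hostB"` and then terminated ends in `State.DEATH` with the itinerary `["hostA", "hostB"]`; any further event raises an error because no transition leaves the death state.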

2.2.2.2. Manufacturing scheduling

Scheduling shop floor activities is a key issue in the manufacturing industry with respect to making the best economical use of manufacturing equipment and bringing costs under control, as well as delivering committed customer orders at due dates.

Consider the product structure from the manufacturing point of view as portrayed in Figure 2.1.

Figure 2.1. Product structures from a manufacturing point of view

$P_i$ are parts machined in dedicated shops and $A_i$ are assembled products. In terms of scheduling, the relevant combined attribute of the products and equipment units involved in machining and assembling, whatever their layout (job shop, batch or continuous line production), is lead time. A Gantt chart can thus be derived by backward scheduling from the due date on which the “root” product $A_4$, or a batch thereof, has to be delivered to its client. The situation is pictured in Figure 2.2.
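Backward scheduling can be sketched in a few lines. Since Figure 2.1 is not reproduced here, the product tree (A4 assembled from A2 and A3, A2 from P1 and P2, A3 from P3) and the lead times in days are hypothetical. Each item is scheduled to finish exactly when the assembly of its parent starts, and the result is rendered as a text Gantt chart.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical product tree and lead times in days (not the data of Figure 2.1).
COMPONENTS: Dict[str, List[str]] = {
    "A4": ["A2", "A3"],
    "A2": ["P1", "P2"],
    "A3": ["P3"],
    "P1": [], "P2": [], "P3": [],
}
LEAD_TIME: Dict[str, int] = {"A4": 2, "A2": 3, "A3": 1, "P1": 4, "P2": 2, "P3": 5}


def backward_schedule(item: str, due: int,
                      plan: Optional[Dict[str, Tuple[int, int]]] = None) -> Dict[str, Tuple[int, int]]:
    """Map each item to (start, finish), working backwards from the due date.

    Assumes a tree: each component feeds exactly one parent.
    """
    if plan is None:
        plan = {}
    start = due - LEAD_TIME[item]
    plan[item] = (start, due)
    for child in COMPONENTS[item]:
        # A component must be finished when the assembly of its parent starts.
        backward_schedule(child, start, plan)
    return plan


def gantt(plan: Dict[str, Tuple[int, int]]) -> List[str]:
    """Render the plan as text bars, one per item, ordered by start day."""
    origin = min(start for start, _ in plan.values())
    rows = sorted(plan.items(), key=lambda kv: kv[1][0])
    return [f"{item:>3} " + " " * (s - origin) + "#" * (f - s) for item, (s, f) in rows]
```

With a due date of day 20, `backward_schedule("A4", 20)` schedules A4 on days 18 to 20 and places P1 on days 11 to 15, so machining of P1 must start on day 11 at the latest.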

Within a framework of decentralized decision-making, intended to motivate collaborators to solve the problems they have to deal with and to deploy what is called “job enrichment”, the choice of three agents appears to be the most pragmatic and efficient solution: $J_1$ in charge of the delivery of $A_2$, $J_2$ in charge of the delivery of $A_3$, and $J_3$ in charge of the delivery of $A_4$ to the final client. This third agent is in some way the front office of all the hidden backward activities and is responsible for fulfilling the commitments made to clients.

Figure 2.2. Gantt chart for scheduling machining and assembly activities for delivering products at a due date

The three agents $J_i$ have to collaborate to establish a schedule over a time horizon commensurate with the lead times. When manufacturing problems of any sort arise that can impact the fulfilment of the schedule, the agents $J_i$ have to collaborate to devise a coherent, sensible solution (outsourcing, hiring extra workforce, etc.), often without letting top management know the details of the problems, only the courses of action taken.

2.3. Concept of agenthood in the social world

2.3.1. Cursory perspective of agenthood in the social world

When considering how the concept of agenthood, if it exists, is used in the social world, we come to the concept of agency in law. It aims at defining the relationship existing when one person or party (the principal) engages another (the agent) to act for him/her, i.e. to do his/her work, to sell his/her goods, to manage his/her business on his/her behalf.

Early precedents for agency can be traced back to Roman law when slaves (though not true agents) were considered to be extensions of their masters and could make commitments and agreements on their behalf. In formal terms, a mandate is given to a proxy.

The concept of agenthood appeared in the field of economics in the 20th century. In 1937, R. H. Coase (1937) published a seminal article in which he developed a new approach to the theory of the firm, emphasizing the importance of the relations within the firm. His line of thought was later developed by economists such as W. Baumol, R. Marris and O. E. Williamson.

The theory of the firm covers many aspects of what a firm is, how it operates and how it is governed. One branch of the theory of the firm, called agency theory, investigates the relationship between a principal and its agents within an economic context. This distinction results from the separation between business ownership (principal) and operations management (agents). One of the core issues is to understand how a balanced structure between the principal’s desires and its agents’ commitments, and a balanced contract between both parties, can be achieved. The challenges at stake when decision-making takes place are asymmetric information, risk aversion, ex-ante adverse selection and ex-post moral hazard.
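A deliberately stripped-down numerical illustration of ex-post moral hazard may help; it is a textbook-style assumption, not a model from the source. The agent chooses the effort level that maximizes its own payoff (pay minus private effort cost), while the principal, who cannot observe effort, only chooses the contract. Effort levels, costs, outputs and contract terms are hypothetical, and risk and uncertainty are ignored.

```python
from typing import Callable, Dict, Tuple

# Hypothetical effort levels: effort -> (private cost to the agent, expected output)
EFFORT: Dict[str, Tuple[float, float]] = {"low": (0.0, 100.0), "high": (10.0, 150.0)}


def fixed_wage(output: float) -> float:
    return 40.0  # pay does not depend on results


def output_share(output: float) -> float:
    return 0.3 * output  # pay is 30 % of output


def agent_choice(pay: Callable[[float], float]) -> str:
    """The agent picks the effort that maximizes its pay minus its private cost."""
    return max(EFFORT, key=lambda e: pay(EFFORT[e][1]) - EFFORT[e][0])


def principal_profit(pay: Callable[[float], float]) -> float:
    """The principal cannot observe effort; it anticipates the agent's best response."""
    effort = agent_choice(pay)
    output = EFFORT[effort][1]
    return output - pay(output)
```

Under `fixed_wage` the agent chooses low effort and the principal earns 60; under `output_share` the agent's interest is better aligned with the principal's, it chooses high effort and the principal earns 105.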

The concept of “social network” emerged in the 1930s in the Anglophone world for analyzing relationships in industrial organizations and local communities. The English anthropologist John Barnes of the Manchester School introduced the term “network” explicitly when studying a parish community on a Norwegian island (Barnes 1954). This approach was later theorized by Harrison White, who developed “Social Network Analysis” as a method of structural analysis of social relationships.

Social network theory strives to explain an issue that social philosophy has raised since the time of Plato, namely the issue of social order: how to make intelligible the reasons why autonomous individuals cooperate to create enduring, functioning societies. In the 19th century, A. Comte hoped to found a new field of “social physics” with individuals substituted for atoms. The French sociologist E. Durkheim (1951) argued that human societies are composed of interacting individuals and as such are akin to biological systems. Within this line of thought, social order is ascribed not to the intentions of individuals but to the structure of the social context in which they are embedded.

In the 1940s and 1950s, matrix algebra and graph theory were used to formalize fundamental socio-psychological concepts, such as groups and social circles, in network terms, making it possible to identify emergent groups in network data (Luce 1949). During that period, network analysis was also used by sociologists to analyze the changing social fabric of cities as urbanization spread.

In the 1960s, anthropologists carried out analyses that viewed social structures as networks of roles instead of individuals (Brown 1952). In the 1990s, network analysis spread to a great number of fields, including physics and biology. It also made its way into management consulting (Cross 2004), where it is often applied to exploit the knowledge and skills distributed across an organization’s members.

A book by S. Wasserman and K. Faust (1994) presents a comprehensive discussion of social network methodology. The quantitative features of this methodology rely on graph theory and the properties of matrices. A graph can be either directed or undirected. A directed graph is an ordered pair G = (V, A), where V is a set whose elements are called nodes, points or vertices, and A is a set of ordered pairs of elements of V, called directed edges or arcs (each with a tail and a head). V can represent objects or subjects, and A the linkages between the elements of V. A special case of directed graph is the rooted directed graph, in which one node has been distinguished as the root. When a graph is undirected, its edges are unordered pairs of nodes. The structure of any graph can be encoded in an adjacency matrix, so that its properties can be studied with the matrix formalism.
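As an illustration of how a directed graph G = (V, A) translates into the matrix formalism, the Python sketch below builds the adjacency matrix of a small hypothetical graph (four people and invented "advises" arcs) and reads two simple properties from it: out-degrees are row sums and in-degrees are column sums. Only the standard library is used.

```python
from typing import List, Tuple

V = ["Ann", "Bob", "Carl", "Dora"]  # hypothetical nodes
A = [("Ann", "Bob"), ("Ann", "Carl"), ("Bob", "Carl"), ("Dora", "Ann")]  # arcs (tail, head)


def adjacency_matrix(nodes: List[str], arcs: List[Tuple[str, str]]) -> List[List[int]]:
    index = {v: i for i, v in enumerate(nodes)}
    m = [[0] * len(nodes) for _ in nodes]
    for tail, head in arcs:
        m[index[tail]][index[head]] = 1  # row = tail, column = head
    return m


M = adjacency_matrix(V, A)
out_degree = [sum(row) for row in M]  # arcs leaving each node
in_degree = [sum(col) for col in zip(*M)]  # arcs entering each node
is_symmetric = all(M[i][j] == M[j][i] for i in range(len(V)) for j in range(len(V)))
```

Here `out_degree` is `[2, 1, 0, 1]` and `in_degree` is `[1, 1, 2, 0]`. The matrix is not symmetric, as expected for a directed graph; the adjacency matrix of an undirected graph is always symmetric.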

“Social network” agents: within this framework, an agent is no longer an individual but a collection of individuals associated by the linkages between them. The linkages can be deterministic or stochastic. This has two consequences. The first is well acknowledged: the behavior of a social network agent differs from individual behaviors (the whole is not the sum of its parts). The second is that when some linkages between individuals are altered, the behavior of the composite agent changes. Networks are categorized by their number of modes (generally one or two) and by how connection variables are measured.

One-mode networks involve measurements of variables on just a single set of actors. The variety of actors covers people, subgroups, organizations, communities and nation states. Their relations extend over a wide spectrum of characteristics:

- – individual evaluation (friendship, liking, respect, etc.);

- – financial transactions and exchange of material resources;

- – transfer of immaterial resources;

- – kinship (marriage, descent).

Two-mode networks refer to measurements of variables on two sets, either two sets of actors or a set of actors and a set of events or activities. When both sets consist of actors, their profiles are similar to those found in one-mode networks. As for relations, some can connect actors inside each set, but at least one relation must be defined between the two sets of actors.

Connection networks are two-mode networks that combine a set of actors and a set of events or activities to which the actors in the first set attend or belong. The requirement is that the actors must be connected to one or more events or activities. These characteristics give connection networks great flexibility to represent the structures and operational courses of action of organizations or communities.

Connection networks include three types of built-in linkages: first, they show how the actors and the events or activities are directly related to each other; second, the events or activities create indirect relations between actors; and third, the actors create relations between the events or activities.

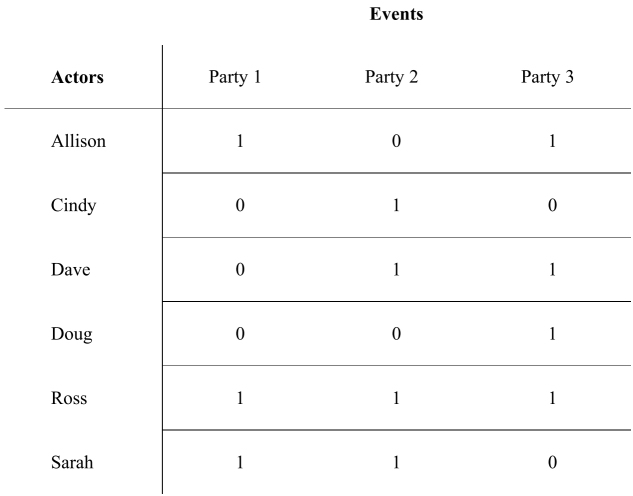

Let us take a simple example to clarify the ideas. Consider a set of children (Allison, Cindy, Dave, Doug, Ross and Sarah) and a set of events (birthday party 1, birthday party 2 and birthday party 3). The attendance of the children at the parties can be represented by a matrix whose rows are children and whose columns are parties, as shown in Figure 2.3.

Figure 2.3. Connection network matrix for the example of six children and three birthday parties

$a_{ij} = 1$ if actor $i$ is affiliated with event $j$; otherwise $a_{ij} = 0$.
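The three built-in linkages of a connection network can be read directly from such a matrix. Because the actual attendance values appear only in Figure 2.3, the sketch below uses a hypothetical attendance matrix for the six children and three parties. The matrix itself gives the actor-event ties; the product of the matrix with its transpose counts the parties each pair of children attended together; the product of the transpose with the matrix counts the children each pair of parties shared.

```python
from typing import List

Matrix = List[List[int]]

children = ["Allison", "Cindy", "Dave", "Doug", "Ross", "Sarah"]
parties = ["party 1", "party 2", "party 3"]

# Hypothetical attendance (1 if child i attended party j); not the data of Figure 2.3.
A: Matrix = [
    [1, 0, 1],  # Allison
    [0, 1, 1],  # Cindy
    [0, 1, 0],  # Dave
    [1, 0, 0],  # Doug
    [1, 1, 1],  # Ross
    [0, 1, 1],  # Sarah
]


def transpose(x: Matrix) -> Matrix:
    return [list(col) for col in zip(*x)]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


actor_by_actor = matmul(A, transpose(A))  # parties shared by two children (indirect actor ties)
event_by_event = matmul(transpose(A), A)  # children shared by two parties (indirect event ties)
```

With these invented values, Allison and Ross attended two parties together (`actor_by_actor[0][4] == 2`) and parties 2 and 3 had three guests in common (`event_by_event[1][2] == 3`). The diagonal entries give how many parties each child attended and how many children attended each party.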

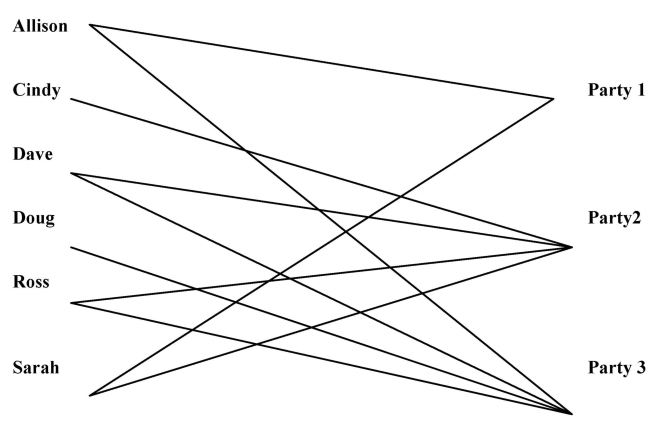

A connection network can also be formalized by a bipartite graph, that is, a graph whose nodes can be split into two subsets such that every edge joins a pair of nodes belonging to different subsets. Figure 2.4 translates the matrix of Figure 2.3 into a bipartite graph. Bipartite graphs can be generalized to n-partite graphs that visualize long-range correlations between organizations’ stakeholders. Graphs are a very flexible means of visualizing real-world situations.
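The translation from matrix to bipartite graph can be sketched as follows, using a hypothetical attendance matrix for the six children (not the data of Figure 2.3). The code builds the edge list and checks the defining property of a bipartite graph by trying to two-color the nodes with a breadth-first search, a standard technique; the function and variable names are illustrative.

```python
from collections import deque
from typing import Dict, List, Tuple

children = ["Allison", "Cindy", "Dave", "Doug", "Ross", "Sarah"]
parties = ["party 1", "party 2", "party 3"]
A = [[1, 0, 1], [0, 1, 1], [0, 1, 0], [1, 0, 0], [1, 1, 1], [0, 1, 1]]  # hypothetical

# One edge per nonzero matrix entry: child i is linked to party j.
edges: List[Tuple[str, str]] = [
    (children[i], parties[j])
    for i in range(len(children)) for j in range(len(parties)) if A[i][j]
]


def is_bipartite(edge_list: List[Tuple[str, str]]) -> bool:
    """Try to 2-color the graph; fail if an edge joins two nodes of the same color."""
    neighbors: Dict[str, List[str]] = {}
    for u, v in edge_list:
        neighbors.setdefault(u, []).append(v)
        neighbors.setdefault(v, []).append(u)
    color: Dict[str, int] = {}
    for start in neighbors:
        if start in color:
            continue
        color[start] = 0
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nxt in neighbors[node]:
                if nxt not in color:
                    color[nxt] = 1 - color[node]
                    queue.append(nxt)
                elif color[nxt] == color[node]:
                    return False
    return True
```

The 11 child-party edges form a bipartite graph; adding a direct child-to-child edge such as `("Allison", "Ross")` creates a triangle with party 1 and breaks the property.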

Figure 2.4. Bipartite graph of the connection network matrix for the example of six children and three birthday parties (Figure 2.3)

2.3.2. Organization as a collection of agents

Definitions of what an organization is or is not often rely on metaphors. Let us review these metaphors:

- – an organization is a machine made of interacting parts engineered to transform inputs into outputs called deliverables (products/services);

- – an organization is an organism achieving a goal and experiencing a life cycle (birth, growth, adaptation to environmental conditions, death) and fulfilling organic functions;

- – an organization is a network representing a social structure directed towards some goal and created by communication between groups and/or individuals. The social structure mirrors how driving powers are distributed, influences exerted and finally decisions made to attain the set purpose.

All these representations of organizations are an objective symptom of the many facets of this concept, which is approached by apparently separate models. In fact, the question raised is: does an organization consist of relations of ideas or of matters of fact? The first two metaphors can be better understood as non-contingent a priori knowledge and the last one as contingent a posteriori knowledge. In other words, the issue is whether the identity of the construct called organization is contingent or not.

E. Morin’s cast of thought (Morin 1977; Morin 1991) leans toward the contingent identity of the organization construct. We take his view to describe an organization: it is a mesh of relations between agents, human as well as virtual, which produces a cluster or system of actors sharing objectives and endowed with attributes and procedures for deploying courses of action, not apprehended at the level of single agents.

An organization is viewed as a society of agents interacting to achieve a purpose that is beyond their individual capabilities. The significant advantages of this vision are due to the potential abilities of agents which draw on:

- – communication among themselves;

- – coordination of their targeted activities;

- – negotiation when they find themselves in conflict;

- – mobility by transferring their processing capabilities to other agents;

- – knowledge capitalization by learning;

- – reaction to stimuli and some degree of autonomy by being proactive.

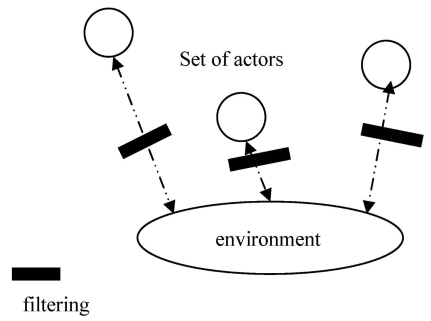

This form of system model gives more flexibility in describing the behavior of organizations. In particular, adaptive behavior can easily be made explicit. By adaptive, it is meant that systems are able to modify their behavior in response to internal or external signals. Proactivity and autonomy are two essential properties that manifest themselves in a number of different ways. For instance, some agents perform an information filtering role; some of them filter autonomously, presenting the target agent only with information they consider to be of interest to it. Similarly, the same type of agent can also be proactive, in that it actively searches for information that it judges would be of interest to its users.
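The two behaviors of the information-filtering agent can be sketched as follows. The keyword-overlap relevance score, the threshold and the `FilteringAgent` name are assumptions made for illustration: `filter_items` is the autonomous side (the agent decides on its own what to pass on), and `search` is the proactive side (the agent scans sources without being asked and ranks what it finds).

```python
from typing import Iterable, List, Set


class FilteringAgent:
    def __init__(self, interests: Set[str], threshold: int = 1) -> None:
        self.interests = {w.lower() for w in interests}  # assumed profile of the target agent
        self.threshold = threshold

    def relevance(self, item: str) -> int:
        # Hypothetical relevance score: number of interest keywords found in the item.
        return len(self.interests & set(item.lower().split()))

    def filter_items(self, incoming: Iterable[str]) -> List[str]:
        """Autonomous filtering: only items judged relevant are passed on."""
        return [item for item in incoming if self.relevance(item) >= self.threshold]

    def search(self, sources: Iterable[Iterable[str]]) -> List[str]:
        """Proactive behavior: scan sources unprompted and put the most relevant items first."""
        found = [item for source in sources for item in self.filter_items(source)]
        return sorted(found, key=self.relevance, reverse=True)
```

An agent interested in `{"supplier", "delay"}` drops "canteen menu" from its input and, when searching, ranks "supplier delay on part P1" (two matching keywords) ahead of "new supplier contract" (one).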

An organization is characterized on one hand by its architecture in terms of formalized interplay between its agents (centralized or decentralized) and on the other hand by its functional capabilities, the roles of its actors and the relations between the two sets of their describable attributes (functional capabilities and actors’ roles).

2.4. BDI agents as models of organization agents

2.4.1. Description of BDI agents

Organization agents are social agents acting in a specific context. Interaction between social agents (short for social network agents) is central to understanding how organizations are structured and operated. A multiagent system in the social world must focus on how the interactions between agents are made effective, efficient and conducive to reaching set objectives. In the late 1980s and the 1990s, a great deal of attention was devoted to the study of agents capable of rational behavior. Rationality has been investigated in many fields. Economists have developed a strong interest in this concept and have built it into a normative theory of choice based on the maximization of what is called a utility function, utility designating here the satisfaction or usefulness a consumer derives from a good or service. Hereafter, rationality is understood as the complete exploitation of information, sound reasoning and common sense logic. A particular type of rational agent, the Belief-Desire-Intention (BDI) agent, has been worked out by Rao and Georgeff (1995), and its implementation studied from both an ideal theoretical perspective and a more practical one.

BDI agents are cooperative agents characterized by “mentalistic” features and, as such, they may incorporate many attitudes of human behavior. Their representative architecture is illustrated in Figure 2.5. It contains four key entities, namely beliefs, desires, intentions and plans, and an engine, “the interpreter”, which ensures coherence between the functional capabilities and roles fulfilled by the four key entities. In our opinion, these key structures are well suited to modeling the way social agents behave in a business environment and can be used as a modeling concept for organizations.

Figure 2.5. BDI agent architecture

Beliefs correspond to data-laden signals the agent receives from its environment. These signals are deciphered and may deliver incomplete or incorrect information, either because of the deliberate intention of signal sources or because of the lack of competencies at the reception side. Desires refer to the tasks allocated to the agent’s mission. All agents in an organization have an assignment that transforms their missions into clearly defined goals. Intentions represent desires the agent has committed to achieve and which have been chosen from among a set of possible desires, even if all these possible desires are compatible. The agent will typically keep striving to fulfill its commitment until it realizes that its intention is achieved or is no longer achievable. Plans are a set of courses of action to be implemented for achieving the agent’s intentions. They may be qualified as procedural knowledge as they are often reified by lists of instructions to follow.

The interpreter’s assignment is to detect updated beliefs captured from the surrounding world, to assess possible desires on the basis of the newly elaborated beliefs, and to select from the set of current desires those that are to act as intentions. Finally, the interpreter chooses to deploy a plan in agreement with the agent’s committed intentions. Consistency has to be maintained between beliefs, desires, intentions and plans. Some degree of intelligence and competence is required to fulfil this functional capability and should be considered as embedded in the interpreter.
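The interpreter cycle just described can be summarized as a loop: revise beliefs, generate options (desires), commit to some of them as intentions, then select plans. The Python sketch below follows that order; the data representations (beliefs as a dictionary, desires as named goals with an achievability test and a priority, plans as lists of steps) and the example goals are simplifying assumptions, not the formal BDI logic of Rao and Georgeff.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Desire:
    goal: str
    achievable: Callable[[Dict[str, int]], bool]  # test against current beliefs
    priority: int


@dataclass
class BDIAgent:
    beliefs: Dict[str, int]
    desires: List[Desire]
    plans: Dict[str, List[str]]  # goal -> list of instructions (procedural knowledge)
    intentions: List[str] = field(default_factory=list)

    def step(self, percept: Dict[str, int], max_intentions: int = 1) -> List[str]:
        # 1. Belief revision: new signals from the environment overwrite old ones.
        self.beliefs.update(percept)
        # 2. Option generation: keep the desires that current beliefs make achievable.
        options = [d for d in self.desires if d.achievable(self.beliefs)]
        # 3. Deliberation: commit to the highest-priority options as intentions.
        options.sort(key=lambda d: d.priority, reverse=True)
        self.intentions = [d.goal for d in options[:max_intentions]]
        # 4. Means-end reasoning: return the plan steps for the chosen intentions.
        return [s for goal in self.intentions for s in self.plans.get(goal, [])]
```

For an agent that desires to "deliver order" (priority 2, achievable only with at least 10 units in stock) and to "replenish stock" (priority 1, always achievable), a percept of zero stock leads to the plan `["order from supplier"]`, whereas a percept of 12 units switches the intention to delivery and the plan to `["pick", "pack", "ship"]`.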

The embedded intelligence and competence capabilities of the interpreter can be mainly expressed in terms of relationships between intentions and commitments. A commitment has two parts, i.e. the commitment condition and the termination condition, which states when an active commitment is terminated (Rao and Georgeff 1995). Different types of commitments can be defined. A blindly committed agent denies any changes to its beliefs and desires which could conflict with its ongoing commitments. A single-minded agent accepts changes in beliefs and drops its commitments accordingly. An open-minded agent allows changes in both its beliefs and desires, which may force its ongoing commitments to be dropped. The commitment strategy has to be tailored to the role(s) given to the agent in the application context.
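The three commitment strategies can be contrasted through their termination conditions. The function below is a simplified reading of the text, assuming that every strategy drops an intention once it is believed achieved; the enumeration and parameter names are illustrative.

```python
from enum import Enum


class Strategy(Enum):
    BLIND = "blind"
    SINGLE_MINDED = "single-minded"
    OPEN_MINDED = "open-minded"


def drop_intention(strategy: Strategy, believed_achieved: bool,
                   believed_impossible: bool, still_desired: bool) -> bool:
    """Termination condition of an active commitment under each strategy."""
    if believed_achieved:
        return True  # every strategy stops once the goal is believed reached
    if strategy is Strategy.BLIND:
        return False  # ignores conflicting changes in beliefs and desires
    if strategy is Strategy.SINGLE_MINDED:
        return believed_impossible  # reacts to belief changes only
    return believed_impossible or not still_desired  # open-minded: beliefs and desires
```

If the agent stops desiring the goal while still believing it achievable, only the open-minded agent drops the intention; if it comes to believe the goal impossible, both the single-minded and the open-minded agents drop it, while the blindly committed agent persists.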

Let us elaborate on this mechanism by taking a general example of how the roles of the four entities (Beliefs, Desires, Intentions and Plans) articulate in the whole structure and how they are conducted by the interpreter.

An input message from another agent is captured by the Beliefs data structure and analyzed by the interpreter. It announces a change in the scheduled deliveries from this agent. This change is liable to impact the receiving agent’s plan of activities and, as a consequence, its own deliveries of products or services to its clients. Several options are open to analysis in terms of desires and intentions. All actors in any context are keen to honor their commitments (intentions), but a new choice has to be made when it appears that not all desires can be met with the resources available from suppliers. A priority list of desires has to be re-established on the basis of strategic and/or tactical arguments (loyalty, criticality, etc. of clients) and converted into intentions so that the interpreter can devise a new plan. The “rolling schedule” technique used in the manufacturing industry relies on this practice.
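The re-prioritization step of this example can be sketched as follows: when a supplier’s revised delivery reduces the available quantity, the desires (client orders) are re-ranked and converted into intentions until the resource is exhausted, and the rest is rolled over to the next planning period. The client names, quantities, scales and the ranking rule (criticality first, then loyalty) are hypothetical, and the greedy allocation is only one possible choice.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Order:
    client: str
    quantity: int
    criticality: int  # hypothetical scale, higher = more critical
    loyalty: int  # hypothetical scale, higher = more loyal client


def replan(orders: List[Order], available: int) -> Tuple[List[str], List[str]]:
    """Return (intentions, postponed) after a change in the supplied quantity."""
    ranked = sorted(orders, key=lambda o: (o.criticality, o.loyalty), reverse=True)
    intentions: List[str] = []
    postponed: List[str] = []
    for order in ranked:
        if order.quantity <= available:
            available -= order.quantity
            intentions.append(order.client)  # desire converted into an intention
        else:
            postponed.append(order.client)  # rolled over to the next planning period
    return intentions, postponed
```

With orders from C1 (40 units), C2 (30 units, most critical) and C3 (50 units), and only 80 units now expected from the supplier, `replan` commits to C2 and C3 and postpones C1.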

Explaining the structural components of BDI agents in the next sections will show that BDI agents comply with the characteristics of an agent in the technical world that were given by J. Ferber.

2.4.2. Comments on the structural components of BDI agents

2.4.2.1. Definition of belief

The verb “believe” is defined in the Oxford dictionary. We are aware that the word “belief” has been interpreted in different ways in the realm of philosophy and that its translation into other Indo-European languages (German, French, Italian, Spanish, among others) appears difficult (Cassin 2004). It is outside the scope of this book to discuss this issue. We will interpret it within the framework of what is called “the philosophy of mind” in the English language context. According to Hume’s book (1978 I sec 7), a matter of fact is “easily cleared and ascertained” and is closely correlated with reality: “if this be absurd in fact and reality, it must be absurd in idea”. These matters of fact are objects of belief: “it is certain that we must have an idea of every matter of fact which we believe… When we are convinced of any matter of fact, we do nothing but conceive it” (Hume 1978, I, III sec 8). In his book “Enquiries Concerning Human Understanding and Concerning the Principles of Morals”, Hume (1975) confirms that matters of fact and relations of ideas should be clearly distinguished: all the objects of human reason or enquiry may naturally be divided into two kinds, namely, relations of ideas or matters of fact.

Some authors distinguish between dispositional beliefs and occurrent beliefs in an attempt to mirror the storage structures of our memory. A dispositional belief is held in the mind but not currently considered. An occurrent belief is a belief currently being considered by the mind.

2.4.2.2. Attitudes and beliefs

An attitude is a state of mind predisposing one to behave in a positive or negative way towards an object or a subject. The information-integration approach is one of the most credible models of the nature of attitudes and attitude change, as stated by Anderson (1971), Fishbein (1975) and Wyer (1974). According to this approach, all pieces of information have the potential to affect one’s attitude. Two parameters have to be considered to understand the degree of influence information has on attitudes, i.e. the “how” and “how much” parameters. The how parameter evaluates the extent to which a piece of information received supports one’s belief. The how much parameter measures the weight assigned to different pieces of information in impacting one’s attitude through a change in one’s belief.

Attitudes are dependent on a complex factor involving beliefs and evaluation. It is important to distinguish between two types of belief, i.e. belief in an object and belief about an object. When one believes in an object, one predicts a high probability of the object attributes existing. Belief about is the predicted probability that particular relationships exist between one object and others. Beliefs are embodied by the hundreds of thousands of statements we make about self and the world.

Attitudes change when beliefs are altered by newly acquired knowledge. The attitude towards an object or a subject is quantitatively assessed as the sum, over all beliefs about that object or subject, of the strength of each belief multiplied by its circumstantial evaluation. M. Rokeach has developed an extensive explanation of human behavior based on beliefs, attitudes and values (Rokeach 1969, 1973). According to him, each person has a highly organized system of beliefs, attitudes and values, which guides behavior. From M. Rokeach’s point of view, values are specific types of beliefs that act as life guidance. He concludes that people are guided by a need for consistency between their beliefs, attitudes and values. When a piece of information brings about changes in attitude towards an object or a situation, inconsistency develops and creates mistrust.
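Written out, this weighted-sum measure corresponds to the expectancy-value formulation usually associated with Fishbein: with $b_i$ the strength of belief $i$ about the object and $e_i$ the evaluation of the corresponding attribute, the attitude is $A = \sum_i b_i e_i$. The Python sketch below computes it for hypothetical beliefs about a supplier, then shows how a single revised belief changes the attitude. The attributes, scales and numbers are invented for the example.

```python
from typing import Dict, Tuple

# attribute -> (belief strength b_i in [0, 1], evaluation e_i in [-3, 3]); hypothetical values
beliefs: Dict[str, Tuple[float, int]] = {
    "delivers on time": (0.9, 3),
    "offers low prices": (0.5, 2),
    "changes orders unilaterally": (0.2, -2),
}


def attitude(b: Dict[str, Tuple[float, int]]) -> float:
    """Expectancy-value attitude: sum of belief strength times attribute evaluation."""
    return sum(strength * evaluation for strength, evaluation in b.values())


before = attitude(beliefs)
# New information (say, a late delivery) weakens the belief that the supplier is punctual.
revised = {**beliefs, "delivers on time": (0.4, 3)}
after = attitude(revised)
```

The attitude drops from 3.3 to 1.8 although the evaluations themselves are unchanged: only the strength of one belief was revised.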

Another facet of belief and trust is linked to certainty and probability. Probability is commonly contrasted with certainty. Some of our beliefs are entertained with certainty, while there are others of which we are not sure. Furthermore, our beliefs change over time as our acquaintance with people and things evolves.

2.4.2.3. Beliefs and biases

Biases are nonconscious drivers, cognitive quirks that influence how people perceive the world. They appear to be shared by most of humanity, perhaps hardwired in the brain as part of our genetic heritage. They exert their influence outside conscious awareness. We do not take action without our biases kicking in. They can be helpful by enabling people to make quick, efficient judgments and decisions with minimal cognitive effort. But they can also blind a person to new information or inhibit someone from considering valuable data when taking an important decision.

Biases often refer to beliefs that appear as the grounds on which decisions and courses of action are taken. Below is a list of biases commonly found in social life:

- – in-group bias: perceiving people who are similar to you more positively (ethnicity, religion, etc.);

- – out-group bias: perceiving people who are different from you more negatively;

- – belief bias: deciding whether an argument is strong or weak on the basis of whether you agree with its implications;

- – confirmation bias: seeking and finding evidence that confirms your beliefs and ignoring evidence that does not;

- – availability bias: making a decision based on the data that comes to mind more quickly rather than on more objective evidence;

- – anchoring bias: relying heavily on the first perception or piece of information offered (the anchor) when considering a decision;

- – halo effect: letting someone’s positive qualities in one specific area influence the overall perception of that person;

- – base rate fallacy: when judging how probable an event is, ignoring the base rate (overall rate of occurrence);

- – planning fallacy: underestimating how long a task will take to complete, how much it will cost and the risks incurred, while overestimating its benefits;

- – representativeness bias: believing that something that is more representative of a category is necessarily more prevalent;

- – hot hand fallacy: believing that someone who was successful in the past has a greater chance of achieving further success.
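The base rate fallacy is easiest to see with numbers. The sketch below applies Bayes’ rule to a hypothetical screening situation (a 1% base rate, a signal that detects 90% of true cases and fires falsely in 5% of the other cases); all the figures are assumptions chosen for illustration.

```python
def posterior(base_rate: float, hit_rate: float, false_alarm_rate: float) -> float:
    """P(condition | positive signal), computed with Bayes' rule."""
    # Overall probability of observing a positive signal
    p_signal = hit_rate * base_rate + false_alarm_rate * (1 - base_rate)
    return hit_rate * base_rate / p_signal


# Hypothetical figures: 1% base rate, 90% hit rate, 5% false alarms
p = posterior(base_rate=0.01, hit_rate=0.90, false_alarm_rate=0.05)
print(f"{p:.3f}")  # 0.154
```

Someone who ignores the base rate tends to judge a positive signal as about 90% reliable, whereas the probability that it points to a true case is only about 15%, because true cases are rare to begin with.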

2.4.2.4. Degrees of belief

Belief, probability and uncertainty

An important facet of belief is linked to trust, truth and certainty. Uncertainty is commonly treated with probability methods. Some of our beliefs are entertained with certainty, while there are others of which we are not sure. John Maynard Keynes (Keynes 1921) draws a distinction between uncertainty and risk: risk is uncertainty structured by objective probabilities. Here, “objective” means based on empirical experience gained from past records or from purposely designed experimental tests.

The concept of probability is related to ideas originally centered on the notion of credibility or reasonable belief falling short of certainty. Two distinct uses of this concept are made, i.e. modeling of physical or social processes and drawing inference from, or making decisions on the basis of, inconclusive data that characterizes uncertainty.

When modeling physical or social processes, the purpose is to predict the relative frequency with which the possible outcomes will occur. In developing a probability model for some phenomenon, an implicit assumption is made about how the natural, social and human world is configured and how it behaves. Such assumptions are contingent propositions that should be exposed to empirical tests.

Probability is also used as a tool for decision-making, by drawing inferences when only a limited volume of data is available. When combined with an assessment of utilities, it is also used for choosing a course of action in an uncertain context. Probability modeling and inference are often complementary: inference methods are often required for choosing among competing probability models. Thus, decision makers are faced with situations represented by sets of probability distributions, and give more weight to some assessments than to others. These techniques are used by insurance and reinsurance companies when they draw up contracts for which statistical series are too short. Probability is a tool, akin to logic, for reasoning from data and for adjusting one’s beliefs in order to take action.

Uncertainty can be rigged, inflated or fabricated. This is not unusual in the political and economic realms: think of the debates on climate change, pesticides, acid rain, medicines and so on. Conversely, discarding or neglecting public data because it is only partially certain means rejecting an often large volume of data, to the great detriment of the relevance of decisions. The data deluge that pours over us through today’s uncontrolled communication channels is a challenge not only for citizens but also for businesses, which must distinguish relevant from fake information items.
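The combination of probabilities with an assessment of utilities, including the case where several competing assessments are weighted, can be sketched as follows. The two weather scenarios, the weight given to each assessment and the utility figures are hypothetical; mixing the distributions with weights is one simple way, assumed here, of giving more weight to some assessments than to others.

```python
Dist = dict[str, float]


def mix(assessments: list[tuple[Dist, float]]) -> Dist:
    """Combine competing probability assessments, each with a weight (weights sum to 1)."""
    mixed: Dist = {}
    for dist, weight in assessments:
        for state, p in dist.items():
            mixed[state] = mixed.get(state, 0.0) + weight * p
    return mixed


def expected_utility(utilities: Dist, probs: Dist) -> float:
    """Probability-weighted average of the utilities of one course of action."""
    return sum(probs[state] * u for state, u in utilities.items())


# Two competing assessments of next winter; the first one is trusted more
probs = mix([({"mild": 0.6, "harsh": 0.4}, 0.7),
             ({"mild": 0.3, "harsh": 0.7}, 0.3)])

# Hypothetical utilities of each course of action in each state of nature
actions = {
    "stock_up": {"mild": -20.0, "harsh": 100.0},
    "wait": {"mild": 30.0, "harsh": -50.0},
}
best = max(actions, key=lambda a: expected_utility(actions[a], probs))
print(probs, best)
```

With these figures the mixed distribution gives 0.51 to a mild winter and 0.49 to a harsh one, and stocking up has the higher expected utility.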

Measures of degrees of belief

Degrees of belief about the future are imbued with uncertainty. The usual way to come to practical terms with uncertainty is to use the concept of probability.

Two approaches to probability are generally considered, namely the frequency approach and the Bayesian approach. These two approaches are explained on the basis of the following statement: “the probability that the stock exchange index will crash tomorrow is 80%”.

Interest in games of chance stimulated work on probability and influenced the character of the emerging theory. Probability situations were analyzed as sets of possible outcomes of a gaming operation. The relative frequency of occurrence of an event was postulated to be a number called the “probability” of this event, and it was expected that the relative frequency of occurrence of the event in a large number of trials would lie quite close to that number. But the existence in the real world of such an ideal limiting frequency cannot be proved: this approach to probability is just a model of what we think reality to be.

The statement “the probability that the stock exchange index will crash tomorrow is 80%” cannot express a relative frequency (even if financial market records are part of the evidence for the statement), because tomorrow comes but once. The statement implicitly expresses the credibility of the idea that the future will resemble the past: given the evidence of past records, it is rational to have a certain degree of confidence in the hypothesis (index crash). This approach has often been called subjective, because its early proponents spoke of probability as being relative in part to our ignorance and in part to our knowledge (Laplace 1795). It is now acknowledged that the term is misleading, for there is in fact an “objective” relationship between the hypothesis (index crash) and the evidence borne by past records, a probability relationship similar to the deductive relations of logic (Keynes 1921). One is faced with reasonable degrees of belief relative to evidence.

Keynes’ label of an “objective theory” was criticized by F.P. Ramsey (1926). This skepticism led Ramsey, de Finetti (1937) and Savage (1954) to develop what Savage called a theory of personal probability. Within this framework, a statement of probability is the speaker’s own assessment of the extent to which (s)he is confident of a proposition. It is remarkable that a seemingly subjective idea like this is arguably constrained by exactly the same mathematical rules as those governing the frequency conception of probability.
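The frequency idea, a relative frequency that settles near a fixed number as trials accumulate, can be illustrated with a simulated fair die. The die, the seed and the trial counts are arbitrary choices for this sketch; note that the simulation builds in the limiting value (1/6) from the start, which is precisely the idealization the text warns about.

```python
import random


def relative_frequency(n_trials: int, seed: int = 42) -> float:
    """Share of simulated fair-die rolls that show a six."""
    rng = random.Random(seed)  # seeded for reproducibility
    sixes = sum(1 for _ in range(n_trials) if rng.randint(1, 6) == 6)
    return sixes / n_trials


for n in (10, 1_000, 100_000):
    # The gap to 1/6 tends to shrink as n grows, though not monotonically
    print(n, round(relative_frequency(n), 4), round(1 / 6, 4))
```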

Personal degrees of belief can arguably satisfy the probability axioms. These ideas were first proposed by Ramsey (1926). He considered a probability space as a representation of psychological states of belief. P(A) stands for a person’s degree of confidence in A; it is to be evaluated behaviorally by determining the least favorable rate at which this individual would take a bet on A. If the least favorable odds are, e.g. 3:1, the probability is P(A) = ¾.
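Ramsey’s betting interpretation amounts to a simple conversion between odds and probabilities. The sketch below assumes, as in the example above, that odds of a:b are odds in favor of A, so that P(A) = a/(a + b); the function names are illustrative.

```python
from fractions import Fraction


def probability_from_odds(in_favor: int, against: int) -> Fraction:
    """Odds of in_favor:against on A correspond to P(A) = in_favor / (in_favor + against)."""
    return Fraction(in_favor, in_favor + against)


def odds_from_probability(p: Fraction) -> tuple[int, int]:
    """Inverse conversion, as a reduced pair (in_favor, against); requires 0 < p < 1."""
    ratio = p / (1 - p)
    return ratio.numerator, ratio.denominator


print(probability_from_odds(3, 1))            # 3/4
print(odds_from_probability(Fraction(3, 4)))  # (3, 1)
```

Exact fractions are used so that the round trip between odds and probability loses nothing to floating-point rounding.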

Conditional probability is denoted by P(A/B). In a frequency interpretation, this is the relative frequency with which A occurs among trials in which B occurs. Conditional probabilities may also be explained in terms of conditional bets: in a personal belief interpretation, P(A/B) may be understood as the rate at which a person would make a conditional bet on A, all bets being cancelled unless condition B is fulfilled. Conditional probabilities are the building blocks of Bayes’ theorem, which is of serious interest from a belief point of view.

Suppose that the set {Ai} is an exhaustive set of mutually exclusive hypotheses of interest and that B is knowledge bearing on the hypotheses. Assume that a person, on the basis of prior knowledge, has a distribution of belief over the Ai, represented by P(Ai) for each i; call this the prior distribution. Assume also that for each Ai, P(B/Ai) is defined; this is called the likelihood of getting B if Ai is true. Bayes’ theorem then combines the prior and the likelihoods into the posterior distribution P(Ai/B), the revised degree of belief in each Ai once B is known. In this view, P(A/B) is interpreted as a logical relationship between A and B.

The goal of the Bayesian method is to make inferences regarding unknowns (a generic term referring to any value not known to the investigator), given the available information. This information can be partitioned into information obtained from the current data and other information, obtained independently of or prior to the current data, which reflects the investigator’s current knowledge. The degree of certainty attached to the expected future states of nature is encoded as probability estimates conditional on the available information. Within this framework of thought, the repeated running of a trial-and-error process is supposed to allow people to gain new knowledge and eventually change their beliefs. In the initial step, a distribution of a priori subjective probabilities over the possible future states of nature and their properties is chosen on the basis of innate and acquired knowledge, to build a representation of the likely outcome of future action. This procedure draws on Bayes’ theorem. When the actual outcome occurs, its agreement and/or discrepancy with the expected effect is analyzed and memorized, producing incremental knowledge from experience.
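Written in the notation of this section, Bayes’ theorem gives the revised (posterior) degree of belief in each hypothesis Ai once the knowledge B is available:

$$
P(A_i/B) \;=\; \frac{P(B/A_i)\,P(A_i)}{\sum_{j} P(B/A_j)\,P(A_j)}
$$

The denominator is P(B), obtained by summing over the exhaustive, mutually exclusive hypotheses; it guarantees that the posterior probabilities add up to 1.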
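The trial-and-error cycle just described (prior beliefs, an observed outcome, revised beliefs) can be sketched as repeated application of Bayes’ theorem. The hypotheses (three candidate success rates for a repeated action), the uniform prior and the sequence of outcomes are all hypothetical.

```python
def bayes_update(prior: dict[float, float], success: bool) -> dict[float, float]:
    """Revise a distribution over candidate success rates after one observed outcome."""
    # Likelihood of the outcome under each hypothesis, times its prior weight
    unnormalized = {h: p * (h if success else 1 - h) for h, p in prior.items()}
    total = sum(unnormalized.values())  # P(outcome), the normalizing constant
    return {h: w / total for h, w in unnormalized.items()}


# Initial (a priori) beliefs: each candidate success rate is equally credible
belief = {0.2: 1 / 3, 0.5: 1 / 3, 0.8: 1 / 3}

# Each factual outcome is compared with expectations and memorized as revised belief
for outcome in [True, True, False, True, True, True]:
    belief = bayes_update(belief, outcome)

print({h: round(p, 3) for h, p in belief.items()})
```

After five successes and one failure, most of the belief has moved to the 0.8 hypothesis; each posterior serves as the prior for the next trial.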

There is a clear connection between logical probability, rationality, belief and revision of belief.

2.4.2.5. Belief, trust and truth

Truth is an attribute of beliefs (opinions, doctrines, statements, etc.). It refers to the quality of those propositions that accord with reality, or with what is perceived as reality. The contrast is with falsity, faithlessness and fakery.

Many explanations have been devised to elaborate on the correspondence between what is true and what makes it true. The correspondence theory asserts that a belief is true provided that a fact corresponding to it exists. But what does it mean for a belief to correspond to a fact? And how can one verify that a fact exists in the context of virtual reality? A third element, trust, seems well suited to intervene within this framework to assess the credibility of information sources. The state of believing involves some degree of confidence towards a propositional object of belief.

Other theories have been proposed to explain how a belief is accepted as true. The coherence theory developed by Bradley and Blanshard asserts that a belief is verified when it is part of an entire system of beliefs that is consistent and harmonious. A statement S is considered logically true if and only if S is a substitution-instance of a valid principle of logic. The pragmatic theory put forward by two American philosophers, C.S. Peirce and W. James, asserts that a belief is true if it works, i.e. if accepting it brings success.

In a book about the impact of blockchain technology on business operations (buying and selling goods and services and their associated money transactions), Don and Alex Tapscott (2016) argue that a trust protocol has to be established according to four principles of integrity:

- – Honesty has become not only an ethical issue but also an economic one. Trusting relations between all the stakeholders of business and public organizations have to be established and made sustainable.

- – Consideration means that all parties involved respect the interests, desires or feelings of their partners.

- – Accountability means making clear commitments and abiding by them.

- – Transparency means that information pertinent to employees, customers and shareholders must be made available to avoid instilling distrust.

This protocol shows how social actors have become aware of the importance of societal relationships in a faceless virtual world.

Blockchain is a distributed ledger technology. Blockchain transactions are secured by strong cryptography that is considered practically unbreakable with today’s computers.

We resort to K. Lewin’s field theory (Lewin 1951) to analyze how emotions, feelings, beliefs, truth and trust are dynamically articulated when agents interact and perform their activities. The fundamental construct introduced by K. Lewin is that of the “field”. K. Lewin acquired a scientific background in Germany before emigrating to the United States, which may explain why he introduced the concept of a “field” to characterize the spatial–temporal properties of a human ecosystem. This concept is widely used in physics to describe the physical properties of phenomena in a limited region of space.

All behavior in terms of actions, thinking, wishing, striving, valuing, achieving, etc. is conceived of as a change in some state of a “field” in a given time unit. Expressed in the realm of individual psychology, the field is the life space of the individual (Lebensraum in German). The life space is equipped with beliefs and facts that interact to produce mental states resulting in attitudes at any given time. K. Lewin’s assertion that the only determinants of attitudes at a given time are the properties of the field at the same time has caused much controversy. But it sounds reasonable to accept that all the past is incorporated into the present state of the field under consideration. In other words, only the contemporaneous system can have effects at any time. As a matter of fact, the present field has a certain time depth: it includes the “psychological” past, the “psychological” present and the “psychological” future, which constitute the time dimension of the life space existing at a given time.

This idea of a time dimension is also found in the concept developed by Markov to describe stochastic processes as chains of events. State changes in a system over time follow some probability law, and the transition from a given state at time t to another state depends only on the properties of the state at time t: all the features of previous states are considered to be already included in the attributes of the present state.
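The tamper-evidence that a ledger gains from chaining records by hashes can be sketched with Python’s standard `hashlib`. This is a single-machine illustration only: distribution across nodes, consensus and digital signatures, which real blockchains also rely on, are deliberately left out, and the block fields are hypothetical.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """SHA-256 digest of a block's canonical JSON encoding."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode("utf-8")).hexdigest()


def append_block(chain: list[dict], data: str) -> None:
    """Each new block stores the hash of the previous one, chaining them together."""
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "data": data, "prev_hash": prev_hash})


def is_valid(chain: list[dict]) -> bool:
    """An edit to a past block breaks the hash link stored in its successor."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain)))


ledger: list[dict] = []
for entry in ["A pays B 10", "B pays C 4", "C pays A 1"]:
    append_block(ledger, entry)

valid_before = is_valid(ledger)
ledger[1]["data"] = "B pays C 400"  # tampering with a recorded transaction
valid_after = is_valid(ledger)
print(valid_before, valid_after)  # True False
```

Tampering with the most recent block is not caught by this check alone; in a real blockchain, replication of the ledger across many nodes and a consensus mechanism close that gap.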
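The Markov property can be made concrete with a two-state chain. The states (a “trusting” and a “wary” attitude of an agent) and the transition probabilities are hypothetical; what matters is that the distribution at the next time step is computed from the current distribution alone.

```python
Transition = dict[str, dict[str, float]]


def step(dist: dict[str, float], transition: Transition) -> dict[str, float]:
    """Distribution at t+1, computed only from the distribution at t (Markov property)."""
    return {
        nxt: sum(dist[cur] * transition[cur][nxt] for cur in transition)
        for nxt in transition
    }


transition: Transition = {
    "trusting": {"trusting": 0.9, "wary": 0.1},
    "wary": {"trusting": 0.3, "wary": 0.7},
}
dist = {"trusting": 1.0, "wary": 0.0}
for t in range(1, 4):
    dist = step(dist, transition)
    print(t, {s: round(p, 3) for s, p in dist.items()})
```

Whatever the starting distribution, this particular chain drifts towards 75% trusting and 25% wary, the stationary distribution that solves 0.1 × π(trusting) = 0.3 × π(wary).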

All attitudes depend on the cognitive structure of the life space, which includes, for each agent of a cluster, the other stakeholders of the cluster. When exposed to the behavior suggestions of other cluster agents or to their critical judgment of his/her own behavior, every agent either develops a conditioned reflex based on the innate and/or acquired knowledge embedded in his/her brain’s neural connections, or branches out into emotional expressions according to whether the received information is appraised as a reward or a threat. This last case occurs if (s)he feels (s)he cannot secure the right pieces of knowledge to produce an appropriate reaction. T.D. Wilson, D.T. Gilbert and D.B. Centerbar (2003) wrote “helplessness theory has demonstrated that if people feel that they cannot control or predict their environments, they are at risk for severe motivational and cognitive deficits, such as depression”.

If one organization agent trusts the other organization agents, his/her motivation to embark on a learning process to improve his/her acquired knowledge is strengthened. Learning engages imagination and demands concentration, attention, effort and trust in other agents’ good will. Conscious awareness is fully involved.

2.4.2.6. Beliefs and logic

Logic is the study of consistent sets of beliefs. A set of beliefs is consistent if the beliefs are compatible with each other, i.e. if none of them contradicts the others, so that they can all be true together. Beliefs are expressed by sentences; sentences stating beliefs are called declarative.
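For small sets of propositional beliefs, consistency can be checked mechanically by searching for a truth assignment that makes all of them true at once. The brute-force search below is an assumption chosen for clarity (its cost grows exponentially with the number of atomic propositions), and the beliefs about rain and a wet street are a hypothetical example.

```python
from itertools import product
from typing import Callable

Belief = Callable[[dict[str, bool]], bool]


def is_consistent(beliefs: list[Belief], atoms: list[str]) -> bool:
    """True if at least one truth assignment makes every belief true at once."""
    for values in product([True, False], repeat=len(atoms)):
        world = dict(zip(atoms, values))
        if all(belief(world) for belief in beliefs):
            return True
    return False


atoms = ["rain", "wet"]
beliefs: list[Belief] = [
    lambda w: (not w["rain"]) or w["wet"],  # "If it rains, the street is wet."
    lambda w: w["rain"],                    # "It is raining."
]
consistent_before = is_consistent(beliefs, atoms)

beliefs.append(lambda w: not w["wet"])      # "The street is not wet."
consistent_after = is_consistent(beliefs, atoms)
print(consistent_before, consistent_after)  # True False
```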

Many sentences do not naturally state beliefs. One sentence may have different meanings or interpretations depending on the context. Beliefs are, in some way or another, the outcome of “rational” reasoning. By rational, it is meant that rules of logic are called on for justifying the conclusions reached. But which rules?

Classical logic can be understood as a set of prescriptive rules defining the way reasoning has to be conducted to yield coherent conclusions. Within this framework, truth is unique: it is implicitly assumed that a universe exists where propositions are either true or false. The “principle of the excluded middle” (tertium non datur) is called on. This logic is well suited to data processing by computer systems: data coded as binary digits are memorized and processed by electronic devices that can be maintained in only two states (0 or 1). Classical logic does not mirror the way we reason in our daily life. It is acknowledged that our brain does not operate as a Turing machine (Wilson 2003). If our brain is viewed as a black box converting input into output, the transformation process can be represented by algorithms, but the underlying physiological mechanism cannot be reduced to algorithmic procedures in the way a computer system crunches numbers.

Other systems of logic, descriptive by nature, have been worked out to try to take into account the ways and means we use to make decisions in our daily activities. Modal logic is a system we practice, generally implicitly. Modality is the manner in which a proposition or statement describes or applies to its subject matter. Derivatively, modality refers to characteristics of entities or states of affairs described by modal propositions.

Modal logic (Blackburn 2001) is a branch of logic that studies and attempts to systematize those logical relations between propositions which hold by virtue of containing modal terms such as “necessarily”, “possibly” and “contingently”, or must, may and can. These terms cover three modalities: necessity, actuality and possibility. In short, modal logic is the study of necessity (it is necessary that…) and possibility (it is possible that…). This is done with the help of the two operators □ and ◊, meaning “necessarily” and “possibly” respectively, which are instrumental in dealing with different conceptions of necessity and possibility:

- – logical necessity, i.e. true by virtue of logic alone (e.g. if P and Q, then P);

- – contextual necessity, i.e. true by virtue of the nature and structure of reality (business context, social context, etc.);

- – physical necessity, i.e. true by virtue of the laws of nature (water boils at 100°C under standard pressure).

Modal logic is not the name of a single logical system; there are a number of different logical systems making use of the operators □ and ◊, each with its own set of rules.

Modal operators □ and ◊ are introduced to express the modes with which propositions are true or false. They allow logical opposites to be clearly elicited. The operators □ and ◊ are regarded as quantifiers over entities called possible worlds. □ A is then interpreted as saying that A is true in all possible worlds, while ◊ A is interpreted as saying that A is true in at least one possible world.

The two operators are, in fact, connected. To say that something must be the case is to say that it is not possible for it not to be the case. That is, □A means the same as ¬◊¬A. Similarly, to say that it is possible for something to be the case is to say that it is not necessarily the case that it is false. That is, ◊A means the same as ¬□¬A. For good measure, we can express the fact that it is impossible for A to be true as ¬◊A (it is not possible that A) or as □¬A (A is necessarily false). In general, the truth value of ◊A cannot be inferred from the truth value of A alone: modal operators are situation-dependent. Following the 17th-century philosopher and logician Leibniz, logicians often call the possible options facing a decision-maker possible worlds or universes. A fresh approach to the semantic theory of possible worlds was introduced from the late 1950s onwards by Kripke (1963a and 1963b).

For ◊A to be true, A must in fact be true in at least one of the possible universes associated with a decision-maker’s situation. For □A to be true, A must be true in all the possible universes associated with that situation. The modal status of a proposition is understood in terms of the worlds in which it is true and the worlds in which it is false. Necessary propositions are true in all possible worlds. Contingent propositions are those that are true in some possible worlds and false in others. Impossible propositions are true in no possible world.

Two logical operators which are central in decision-making, negation and the conditional operator → (if … then …), require special attention when applied within the framework of possible worlds. Let us assume that a decision-maker is in a situation M and that M is a set of mutually exclusive possible worlds, each element of the set being a world in itself. Possible worlds are not static but dynamically time-dependent: today’s world is not tomorrow’s world. This means that each possible world evolves in time according to rules. These dynamics can be represented by a tree diagram whose nodes are the different worlds and whose branches reflect the relations between them. Each branch is a sequence of possible worlds. A tree diagram reads top-down, so that from a given node, access is given only to the nodes below it on its own branches, not to any other node in the tree.

It is posited that A → B is true in the world m if and only if, in all the worlds n accessible from m in which A is true, B is also true. ¬A is true in the world m if and only if A is false in all the worlds n accessible from m.
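The possible-worlds reading of □ and ◊, and the resulting classification into necessary, contingent and impossible propositions, can be sketched as follows. This is a minimal sketch assuming a finite set of worlds, all of them associated with the decision-maker’s situation, each world being a truth assignment; the world contents are hypothetical.

```python
World = dict[str, bool]


def necessarily(prop, worlds: list[World]) -> bool:
    """□prop: prop is true in every associated world."""
    return all(prop(w) for w in worlds)


def possibly(prop, worlds: list[World]) -> bool:
    """◊prop: prop is true in at least one associated world."""
    return any(prop(w) for w in worlds)


def modal_status(prop, worlds: list[World]) -> str:
    if necessarily(prop, worlds):
        return "necessary"
    if possibly(prop, worlds):
        return "contingent"  # true in some worlds, false in others
    return "impossible"      # true in no world


# Hypothetical situation with three possible worlds
worlds = [
    {"demand_high": True, "supplier_late": False},
    {"demand_high": True, "supplier_late": True},
    {"demand_high": False, "supplier_late": True},
]


def demand_high(w: World) -> bool:
    return w["demand_high"]


def either(w: World) -> bool:
    return w["demand_high"] or w["supplier_late"]


def contradiction(w: World) -> bool:
    return w["demand_high"] and not w["demand_high"]


for name, prop in [("demand_high", demand_high), ("either", either),
                   ("contradiction", contradiction)]:
    # Duality check: □A has the same truth value as ¬◊¬A
    assert necessarily(prop, worlds) == (not possibly(lambda w: not prop(w), worlds))
    print(name, modal_status(prop, worlds))
```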
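The tree-shaped accessibility relation and the two operators can be sketched as follows. The tree, the worlds m0 to m3 and the truth values of A and B are hypothetical. The sketch assumes a reflexive reading (a world counts as accessible from itself) and the usual reading of the conditional, in which A → B holds at m when B is true in every accessible world where A is true.

```python
children = {"m0": ["m1", "m2"], "m1": ["m3"], "m2": [], "m3": []}
true_at = {"m0": set(), "m1": {"A", "B"}, "m2": set(), "m3": {"A", "B"}}


def accessible(m: str) -> list[str]:
    """m itself plus every world below it on its branches (assumed reflexive reading)."""
    found, stack = [], [m]
    while stack:
        node = stack.pop()
        found.append(node)
        stack.extend(children[node])
    return found


def implies(a: str, b: str, m: str) -> bool:
    """A → B at m: in every world accessible from m where A is true, B is true."""
    return all(b in true_at[n] for n in accessible(m) if a in true_at[n])


def negation(a: str, m: str) -> bool:
    """¬A at m: A is false in every world accessible from m."""
    return all(a not in true_at[n] for n in accessible(m))


print(implies("A", "B", "m0"))  # True, although A is false at m0 itself
print(negation("A", "m0"))      # False: A becomes true at m1
print(negation("A", "m2"))      # True: no world below m2 makes A true
```

Because access only runs down the tree, ¬A at a node is a statement about that node’s whole future, not just about the present world.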

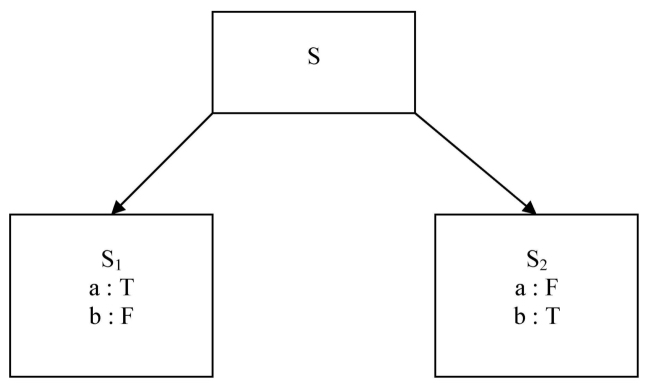

Let us give examples of inference employing modal operators. Consider a situation S with two associated worlds S1 and S2 and two sentences a and b that can be true (T) or false (F) as shown in Figure 2.6.

Figure 2.6. A situation S and its two possible worlds

Consider the inference from ◊a and ◊b to ◊(a & b). It is invalid: a is T at S1; hence, ◊a is true in S. Similarly, b is T at S2; hence, ◊b is true in S. But a & b is true in no associated world; hence, ◊(a & b) is not true in S.

By contrast, the inference from □a and □b to □(a & b) is valid. If the premises are true at S, then a and b are true in all the worlds that are associated with S. Then, a & b is true in all those worlds, and □(a & b) is true in S.
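The two inferences can also be checked mechanically. The sketch below encodes the situation S of Figure 2.6 (a true only in S1, b true only in S2, as stated in the text) and then searches all two-world situations for counterexamples. This is an illustration rather than a proof: it only covers situations with two worlds and assumes that every world of S is associated with S.

```python
from itertools import product

World = dict[str, bool]


def box(prop, worlds: list[World]) -> bool:
    return all(prop(w) for w in worlds)  # □: true in every associated world


def diamond(prop, worlds: list[World]) -> bool:
    return any(prop(w) for w in worlds)  # ◊: true in at least one associated world


def a(w: World) -> bool:
    return w["a"]


def b(w: World) -> bool:
    return w["b"]


def a_and_b(w: World) -> bool:
    return w["a"] and w["b"]


# Situation S of Figure 2.6: a is T only in S1, b is T only in S2
S = [{"a": True, "b": False}, {"a": False, "b": True}]
print(diamond(a, S), diamond(b, S), diamond(a_and_b, S))  # True True False


def counterexamples(op) -> list[list[World]]:
    """Two-world situations where op(a) and op(b) hold but op(a & b) does not."""
    found = []
    for v in product([True, False], repeat=4):
        worlds = [{"a": v[0], "b": v[1]}, {"a": v[2], "b": v[3]}]
        if op(a, worlds) and op(b, worlds) and not op(a_and_b, worlds):
            found.append(worlds)
    return found


print(len(counterexamples(diamond)) > 0)  # True: the ◊ inference is invalid
print(len(counterexamples(box)))          # 0: no counterexample for the □ inference
```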

Each software user exposed to two environments is likely to develop a situation of his/her own, in terms of rules of meaning and action and of sets of “rational” propositions pertaining to his/her situational world at a certain time. Software designers are generally not aware of this immanent state of affairs. But even if they were, they could hardly cope with the wide variety of possible situational worlds in which users of software systems are embedded.

2.5. Patterns of agent coordination

Coordination is central to a multi-agent system because, without it, any benefits of interaction vanish and the society of agents degenerates into a collection of individuals with chaotic behavior. Coordination has been studied in diverse disciplines, from the social sciences to biology. Biological systems appear to be coordinated even though cells or “agents” act independently in a seemingly non-collaborative way. Coordination is essentially a process in which agents engage to ensure that a community of individual agents with diverse capabilities acts coherently to achieve a goal. Different patterns of coordination can be found.

2.5.1. Organizational coordination

The easiest way of ensuring coherent behavior and resolving conflicts consists of providing the group with an agent having a wider perspective of the system, thereby exploiting an organizational structure through hierarchy. This technique yields a classic master/slave or client/server architecture for task and resource allocation. A master agent gathers information from the agents of the group, creates plans, assigns tasks to individual agents and controls how tasks are performed.

This pattern is also referred to as a blackboard architecture because agents are supposed to read their tasks from a “blackboard” and post the states of these tasks to it. The blackboard architecture is a shared-memory model.
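The master/worker pattern with a shared blackboard can be sketched as follows. The class and function names, the task names and the round-robin assignment policy are hypothetical; a real master agent would build plans from the information gathered from the group rather than assign tasks in turn.

```python
from dataclasses import dataclass, field


@dataclass
class Blackboard:
    """Shared memory: task id -> {"assignee": agent name, "state": task state}."""
    entries: dict[str, dict[str, str]] = field(default_factory=dict)

    def post_task(self, task_id: str, assignee: str) -> None:
        self.entries[task_id] = {"assignee": assignee, "state": "assigned"}

    def tasks_for(self, agent: str) -> list[str]:
        return [t for t, e in self.entries.items()
                if e["assignee"] == agent and e["state"] == "assigned"]

    def post_state(self, task_id: str, state: str) -> None:
        self.entries[task_id]["state"] = state


def master_assign(board: Blackboard, tasks: list[str], agents: list[str]) -> None:
    """Master agent: assigns tasks (round-robin, a hypothetical policy)."""
    for i, task in enumerate(tasks):
        board.post_task(task, agents[i % len(agents)])


def worker_run(board: Blackboard, agent: str) -> None:
    """Worker agent: reads its tasks from the board and posts their new state."""
    for task in board.tasks_for(agent):
        board.post_state(task, "done")


board = Blackboard()
master_assign(board, ["cut", "drill", "paint", "pack"], ["agent1", "agent2"])
worker_run(board, "agent1")
# The master controls progress simply by reading the board
print({t: e["state"] for t, e in board.entries.items()})
```

Agents never message each other directly; all coordination passes through the shared board, which is what makes it a shared-memory model.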

2.5.2. Contracting for coordination

In this approach, a decentralized market structure is assumed, and agents can take two roles, manager and contractor. If an agent cannot solve an assigned problem using local resources or expertise, it decomposes the problem into sub-problems and tries to find other willing agents with the necessary resources/expertise to solve these sub-problems.

The sub-problems are assigned through a contracting mechanism consisting of a contract announcement, the submission of bids by bidding agents, their evaluation and the awarding of a contract to the appropriate bidder. There is no possibility of bargaining.
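The announcement, bidding, evaluation and award steps correspond to what is usually called the Contract Net protocol; a minimal single-round sketch follows. The contractor names, their capabilities and costs, and the rule that the lowest bid wins are assumptions; as in the text, there is no bargaining round.

```python
from typing import Callable, Optional

Bidder = Callable[[str], Optional[float]]  # returns a cost bid, or None to abstain


def award(task: str, contractors: dict[str, Bidder]) -> Optional[str]:
    # 1. Announcement: the manager broadcasts the task to all contractors
    bids = {name: bid(task) for name, bid in contractors.items()}
    # 2. Bidding: only contractors with the resources/expertise submit a bid
    bids = {name: cost for name, cost in bids.items() if cost is not None}
    # 3-4. Evaluation and award: the lowest cost wins; no bargaining takes place
    return min(bids, key=bids.get) if bids else None


contractors: dict[str, Bidder] = {
    "welder": lambda task: 40.0 if task == "weld" else None,
    "robot_cell": lambda task: 25.0 if task in {"weld", "paint"} else None,
    "painter": lambda task: 10.0 if task == "paint" else None,
}

# A manager that cannot build a frame on its own decomposes the problem
sub_problems = ["weld", "paint", "inspect"]
awards = {sp: award(sp, contractors) for sp in sub_problems}
print(awards)
```

A sub-problem that attracts no bid (here "inspect") comes back unassigned, and the manager has to decompose it further or solve it another way.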

2.5.3. Coordination by multi-agent planning

2.5.3.1. General considerations

Coordinating multiple agents is viewed as a planning problem. In this context, all actions and the interactions of agents are determined beforehand, leaving nothing to chance. There are two types of multi-agent planning, namely centralized and decentralized.

In centralized planning, the separate agents develop their individual plans and then send them to a central supervisor, which analyzes them and detects potential conflicts (Georgeff 1983). The idea behind this approach is that the central supervisor can:

- a) identify synchronization discrepancies between the plans of the stakeholders;

- b) suggest changes and insert them in a realistic common schedule after approval by the stakeholders.
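The supervisor’s two tasks can be sketched as follows. The plan format (agent, resource, start and end periods), the sample plans and the rule “a later request waits until the resource is free” are hypothetical; the approval step by the stakeholders is not modeled.

```python
from itertools import combinations

Plan = dict  # {"agent": str, "resource": str, "start": int, "end": int}


def find_conflicts(plans: list[Plan]) -> list[tuple[str, str, str]]:
    """(a) Detect pairs of plans using the same resource at overlapping times."""
    conflicts = []
    for p, q in combinations(plans, 2):
        overlap = p["start"] < q["end"] and q["start"] < p["end"]
        if p["resource"] == q["resource"] and overlap:
            conflicts.append((p["agent"], q["agent"], p["resource"]))
    return conflicts


def suggest_schedule(plans: list[Plan]) -> list[Plan]:
    """(b) Propose a common schedule: later requests wait until the resource is free."""
    busy_until: dict[str, int] = {}
    adjusted = []
    for plan in sorted(plans, key=lambda pl: pl["start"]):
        start = max(plan["start"], busy_until.get(plan["resource"], plan["start"]))
        end = start + (plan["end"] - plan["start"])
        busy_until[plan["resource"]] = end
        adjusted.append({**plan, "start": start, "end": end})
    return adjusted


plans = [
    {"agent": "A", "resource": "press", "start": 0, "end": 4},
    {"agent": "B", "resource": "press", "start": 2, "end": 5},
    {"agent": "C", "resource": "oven", "start": 1, "end": 3},
]
print(find_conflicts(plans))     # [('A', 'B', 'press')]
proposal = suggest_schedule(plans)
print(find_conflicts(proposal))  # []
```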

The distributed planning technique forgoes the presence of a central supervisor. Instead, it is based on disseminating each agent’s plans to all the agents involved (Georgeff 1984, Corkill 1979). Agents exchange information with one another until all conflicts are removed, in order to produce individual plans coherent with the others. This means that each stakeholder shares information with its partners about its resource capacities.

2.5.3.2. E-enabled coordination along a supply chain

To illustrate the role of Information Technology, and especially of telecommunications services, in coordination planning, the example of e-enabled demand-driven supply chain management systems will be described (Briffaut 2015). By e-enabled, it is meant that all transactions along the goods flow from suppliers to clients are handled electronically, without paper. In particular, this context implies that customers place orders via a website. These cybercustomers expect to be provided instantly with data about product availability and delivery lead times.

The role of information sharing is acknowledged to be a key success factor in Supply Chain Management (SCM) for securing efficient coordination between all the activities of the stakeholders along the goods pipeline. In the case of an e-enabled SCM, multi-agent systems (MAS) are coordinated through a common information system engineered to share the relevant data between the stakeholders involved. The MAS approach is a relevant substitute for optimization tools and analytical resolution techniques, whose efficiency is usually limited to local problems without adequate visibility over the behavior of the entire chain of stakeholders. Optimizing the operations of a whole is different from optimizing the operations of its parts. A global point of view is required to bring the synchronization of inter-related activities under full control.

In traditional contexts, a front office and a back office can be identified in terms of interaction with customers. The front office carries out face-to-face dealings with customers while using a proprietary information system. Relationships with the other agents of the supply chain take place by means of messages exchanged between their information systems. Coordination between the front office and the back office is generally asynchronous and does not meet real-time requirements.

When e-commerce is implemented via a website, the delineation between the back office and the front office of the previous configuration is blurred and no longer needs to be taken into consideration: the two offices merge into one entity because of the response time constraint. When queries are entered by cybercustomers via a website portal, the collaborative information system must be able to produce real-time answers (availability, delivery date). The information systems of the various stakeholders along the supply chain therefore have to be interfaced in such a way that coordination between them takes place synchronously. The solution is to implement a kind of workflow between the “public” parts of the stakeholders’ information systems. In other words, some parts of each stakeholder’s information system contribute to producing a relevant answer to cybercustomers’ queries. Figure 2.7 shows the changes induced by a portal in terms of information exchange between the supply chain stakeholders.

Figure 2.7. Coordination between proprietary information systems through a collaborative system

2.5.3.3. Scenario setting for placing an order via a website

Each time a customer enters an order via the website, the supply chain collaborative information system shared by all stakeholders proceeds with an automatic check of projected inventories and uncommitted resource capacities per time period. If the item ordered is already available, the customer is advised accordingly and can immediately confirm the order. If the item is not available in inventory, the system checks whether the quantity and the delivery date requested can be met. In other words, the system checks whether manufacturing and supply capacities are available to meet the demand on the due date. Otherwise, it checks what the best possible date could be and/or what split quantities could be produced by using a simulation engine. Then, the customer is advised of the alternatives and can choose an option suitable to him/her. Once the option is confirmed, the system automatically creates a reservation of capacity and materials on the order promising system and forwards the order parameters to the back-office systems to be included in the production plan of the stakeholders involved. Order acknowledgments and confirmation are generated and sent by email.
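The order-promising scenario can be sketched as a single function for one item. The function name, the per-period capacity list and the sample figures are hypothetical, and a simple cumulative scan of uncommitted capacity stands in for the simulation engine mentioned above.

```python
def promise_order(qty: int, due: int, on_hand: int, free_capacity: list[int]) -> dict:
    """Answer an online order; free_capacity[t] = uncommitted output possible in period t."""
    # 1. The item is already in inventory: the customer can confirm at once
    if on_hand >= qty:
        return {"status": "available", "qty": qty}
    # 2. Find the earliest period by which stock plus uncommitted capacity covers qty
    cumulative, earliest = on_hand, None
    for t, capacity in enumerate(free_capacity):
        cumulative += capacity
        if cumulative >= qty:
            earliest = t
            break
    if earliest is not None and earliest <= due:
        return {"status": "confirmed", "qty": qty, "date": due}
    # 3. The due date cannot be met: offer the earliest full date and/or a split quantity
    on_time_qty = min(qty, on_hand + sum(free_capacity[: due + 1]))
    return {"status": "alternatives", "earliest_full_date": earliest,
            "qty_by_due_date": on_time_qty}


free = [10, 10, 30, 40]  # hypothetical uncommitted capacity per period
print(promise_order(15, due=1, on_hand=20, free_capacity=free))
print(promise_order(40, due=2, on_hand=20, free_capacity=free))
print(promise_order(80, due=1, on_hand=20, free_capacity=free))
```

The reservation of capacity and materials and the hand-off to the back-office systems, which follow the customer’s confirmation, are left out of this sketch.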

2.5.3.4. Mapping the order scenario onto the structures of a BDI agent

The role of the Beliefs structure is to record order entries, send answers and process transaction data to turn them into memorized statistics. These statistics are used as input data to update the APS (Advanced Planning System). The APS is implemented as a control tool over a short time horizon and is used as a non-repudiable commitment made by the manufacturing shops. The Plan structure establishes and memorizes the APS pertaining to the supply chain as a whole: it updates the supply chain APS on a regular basis from data provided by the Beliefs structure. As the BDI agent acts as the front office of the supply chain with respect to the buyer side, it seems reasonable to assign it a centralized coordination role. Within this perspective, it draws up partial plans for the stakeholders on their behalf and, when conflicts arise, it is able to resolve imbalances between distributed resources. In other words, the Plan structure is assigned to implement the APS concept. It ensures that the data each agent requires to derive its partial APS are made available in due time to all agents involved along the goods flow. It has two major features:

- – concurrent planning of all partners’ processes;

- – incremental planning capabilities.

APS is intended to secure a global optimization of all flows through the supply chain not only by increasing ROI (Return on Investment) and ROA (Return on Assets) but also by fulfilling customers’ satisfaction and retaining their loyalty.

The Desires structure is in charge of supporting the use of the ATP and CTP parameters. ATP stands for Available-To-Promise and CTP for Capable-To-Promise. For each time period, ATP makes it possible to answer a client request in terms of availability (quantity and delivery date). Either the request can be fulfilled from a scheduled inventory derived from the enforced APS, or some form of simulation has to be carried out through the CTP parameter before an answer is sent to the client. The CTP parameter takes into account the lead times required to mobilize potentially available resources and allows a real-time answer to customer requests when necessary. When activated, it results in the production of a new APS. The APS technique is generally expected to produce rolling manufacturing plans that match the demands of the buyer side.
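ATP and CTP can be made concrete with a short sketch. It rests on assumptions of our own:

- Time is divided into buckets.
- `mps` lists the planned receipts of the current plan, and `orders` lists committed customer orders.
- ATP uses the common cumulative look-ahead rule: a quantity promised for period t must not jeopardize any commitment in t or later.
- CTP is approximated by adding uncommitted capacity to the planned receipts. That capacity is usable only once a hypothetical `lead_time` has elapsed.

```python
from itertools import accumulate


def cumulative_atp(on_hand, mps, orders):
    """Available-To-Promise per period with look-ahead.

    on_hand: uncommitted inventory at the start of the horizon
    mps: planned receipts per period from the current plan
    orders: committed customer orders per period
    """
    # Projected net position at the end of each period.
    net = [on_hand + s - d for s, d in zip(accumulate(mps), accumulate(orders))]
    # What can be promised for period t is capped by the tightest period from t onward.
    atp, floor = [], float("inf")
    for value in reversed(net):
        floor = min(floor, value)
        atp.append(max(0, floor))
    return atp[::-1]


def capable_to_promise(on_hand, mps, orders, free_capacity, lead_time):
    """CTP approximated as ATP over a plan augmented with uncommitted capacity
    that can only be mobilized once `lead_time` periods have elapsed."""
    extra = [c if t >= lead_time else 0 for t, c in enumerate(free_capacity)]
    boosted = [s + e for s, e in zip(mps, extra)]
    return cumulative_atp(on_hand, boosted, orders)


atp = cumulative_atp(10, [0, 50, 0, 50], [5, 20, 30, 10])
ctp = capable_to_promise(10, [0, 50, 0, 50], [5, 20, 30, 10], [20, 20, 20, 20], 2)
print(atp)  # [5, 5, 5, 45]
print(ctp)  # [5, 25, 25, 85]
```

With these figures, a request for 20 units in period 2 cannot be met from ATP (5 units). It can be met from CTP (25 units), which would call for a new plan, as described above.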

Fulfilling the committed APS schedule is the task of the Intentions structure. The PAB (Projected Available Balance) parameter represents the number of completed items on hand at the end of each time period. It is managed by this structure because it takes into account everything recorded as committed (APS and customer orders). It can be viewed as a means of providing some margin when some resources are temporarily unavailable.
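PAB can be computed period by period as a running balance. The sketch below is a simplified assumption on our part. It uses planned receipts minus committed orders only, whereas textbook variants often also subtract forecast demand. The `margin` threshold is likewise a hypothetical parameter used to flag periods where the buffer becomes thin.

```python
def projected_available_balance(on_hand, mps, orders):
    """End-of-period balance: previous balance + planned receipts - committed orders."""
    balance, pab = on_hand, []
    for receipt, committed in zip(mps, orders):
        balance += receipt - committed
        pab.append(balance)
    return pab


def periods_below_margin(pab, margin):
    """Periods whose projected balance falls under the desired margin."""
    return [t for t, value in enumerate(pab) if value < margin]


pab = projected_available_balance(10, [0, 50, 0, 50], [5, 20, 30, 10])
print(pab)                            # [5, 35, 5, 45]
print(periods_below_margin(pab, 10))  # [0, 2]
```

A negative value in the result would signal that existing commitments cannot be covered by the plan in that period.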

Let us use an example to explain how the roles of the four structures (Beliefs, Desires, Intentions and Plans) are connected and how they are performed by the interpreter. An input message coming from a customer is captured by the Beliefs data structure and analyzed by the interpreter. The message concerns a change in the delivery schedule induced by a new supply order entry. If the requested change falls within the time fence linked to the supply lead time, it is rejected. Otherwise, the agent has to act. It analyzes the resource capacities available for the time period, whether committed or uncommitted. It also analyzes the projected on-hand inventory and the uncommitted planned manufacturing output. If one of the possible supply sources can meet the required specification, the agent selects appropriate actions or procedures (Plans) to execute from the set of functions available to it and commits itself to them (Intentions). This simple scenario can be conceptualized as a repeat loop, as shown below:

BDI-Interpreter

Initialize-state [ ];

repeat

- a) Options := read the event queue (Beliefs) and go to option ATP (Desires);

- b) Selected option ATP = if the ATP parameter proves relevant (order fulfilled without altering the existing MPS, the Master Production Schedule) then update its value and go to Intentions to update PAB; otherwise go to selected option CTP;

- c) Selected option CTP = if CTP proves relevant (possible adjustment of the current MPS while abiding by the commitments in force) then go to Intentions to update PAB and the MPS; otherwise reject the request;

- d) Execute [ ];

end repeat
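The interpreter loop above can be turned into a small runnable sketch. Every design choice here is an illustrative assumption rather than the author's design, including the class and method names:

- Beliefs are represented as an event queue of (period, quantity) requests.
- Intentions are represented as the list of committed orders.
- ATP follows a cumulative look-ahead rule.
- The CTP option is a simulation. It moves uncommitted capacity into the plan, latest period first and never before the lead time, and accepts the request only if the revised plan can promise it.

```python
from collections import deque
from dataclasses import dataclass, field
from itertools import accumulate


def atp_with_lookahead(on_hand, receipts, orders):
    """Quantity promisable per period without breaking any later commitment."""
    net = [on_hand + s - d for s, d in zip(accumulate(receipts), accumulate(orders))]
    atp, floor = [], float("inf")
    for value in reversed(net):
        floor = min(floor, value)
        atp.append(max(0, floor))
    return atp[::-1]


@dataclass
class BDIAgent:
    on_hand: int
    receipts: list        # planned output per period (the MPS)
    orders: list          # committed customer orders per period (Intentions)
    free_capacity: list   # uncommitted capacity per period
    lead_time: int        # periods needed before extra capacity can be used
    time_fence: int       # requests for earlier periods are rejected
    events: deque = field(default_factory=deque)  # incoming requests (Beliefs)

    def commit(self, period, qty):
        """Intentions: record the commitment (PAB follows from receipts - orders)."""
        self.orders[period] += qty

    def option_atp(self, period, qty):
        """Desires, option ATP: can the current plan already promise qty?"""
        atp = atp_with_lookahead(self.on_hand, self.receipts, self.orders)
        return atp[period] >= qty

    def option_ctp(self, period, qty):
        """Desires, option CTP: simulate a plan adjustment using free capacity."""
        trial, spare = list(self.receipts), list(self.free_capacity)
        shortfall = qty - atp_with_lookahead(self.on_hand, trial, self.orders)[period]
        # Fill the shortfall from the due period backwards, down to the lead time.
        for t in range(period, self.lead_time - 1, -1):
            if shortfall <= 0:
                break
            use = min(spare[t], shortfall)
            trial[t] += use
            spare[t] -= use
            shortfall -= use
        if atp_with_lookahead(self.on_hand, trial, self.orders)[period] < qty:
            return False  # commitments in force cannot be kept
        self.receipts, self.free_capacity = trial, spare  # revised MPS
        return True

    def step(self):
        period, qty = self.events.popleft()  # a) read the event queue
        if period < self.time_fence:
            return "rejected: inside time fence"
        if self.option_atp(period, qty):     # b) selected option ATP
            self.commit(period, qty)
            return "accepted from ATP"
        if self.option_ctp(period, qty):     # c) selected option CTP
            self.commit(period, qty)
            return "accepted from CTP"
        return "rejected"

    def run(self):
        results = []
        while self.events:                   # repeat ... end repeat
            results.append(self.step())      # d) execute
        return results
```

As a usage example, take an agent with 10 units on hand, receipts `[0, 50, 0, 50]`, orders `[5, 20, 30, 10]`, 20 units of free capacity per period, a lead time of 2 and a time fence of 1. It processes the requests `(0, 5)`, `(3, 40)`, `(2, 20)` and `(1, 100)` as follows: the first is rejected inside the fence, the second is accepted from ATP, the third from CTP with a revised plan, and the last is rejected.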

2.6. Negotiation patterns

Negotiations are the very fabric of our social life. A negotiation is a decision-making discussion aimed at full or partial agreement, or at a compromise, when the discussants have incompatible mind-sets. When differences of opinion arise between the parties, several strategies can help resolve the issue by determining what the fair or just outcome should be. A first strategy is for the parties to agree beforehand on a set of procedural rules for settling disputes should conflicts arise. This situation can be formalized by a negotiation protocol. A second strategy is to seek the advice of a referee, which gives an unbiased person the power to intervene in the conflict. In this case, however, the power to decide on the issue remains in the hands of the discussants, with or without the referee taking part. A third strategy is to transfer full responsibility for deciding the pending issue to a third party. The risk is then that "asymmetric ignorance" between the parties involved leads to a lack of consensus when it comes to implementing the decision.

Coordination is predicated on the implicit idea that the agents involved share a common interest in achieving an objective. Negotiations do not necessarily take place between opponents or competitors, although the term often carries that connotation.

There are probably many definitions of negotiation. In our opinion, a basic definition has been given by Bussmann and Müller (1992):

“…negotiation is the communication process of a group of agents in order to reach a mutually accepted agreement on some matter”.

The purpose of any negotiation process is to reach a consensus for the "balanced" benefit of the parties. "Balanced" does not mean "optimal" for the parties involved, but rather what they may consider the least unfavorable solution. This process may be very complex and involve the exchange of information, the relaxation of initial constraints, mutual concessions, lies or threats. Not surprisingly, the literature on negotiation is huge and varied.
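The ideas of mutual concessions and a "balanced" rather than optimal outcome can be illustrated with a toy protocol. It is a scheme of our own, not one taken from the literature cited here. A seller and a buyer each concede a fixed hypothetical `step` per round, never beyond their private reservation prices. When the offers cross, the parties split the difference (an assumed rule). If neither side can concede further, the negotiation fails.

```python
def negotiate(seller_start, seller_floor, buyer_start, buyer_ceiling, step, max_rounds=50):
    """Both parties concede `step` per round, never past their reservation values.

    Returns the agreed price, or None if no agreement is reached."""
    ask, bid = seller_start, buyer_start
    for _ in range(max_rounds):
        if bid >= ask:                         # offers have crossed: agreement
            return (ask + bid) / 2             # split the difference (assumed rule)
        new_ask = max(seller_floor, ask - step)
        new_bid = min(buyer_ceiling, bid + step)
        if new_ask == ask and new_bid == bid:  # neither side can concede further
            return None
        ask, bid = new_ask, new_bid
    return None


print(negotiate(100, 70, 50, 80, 10))  # 75.0
print(negotiate(100, 90, 50, 80, 10))  # None
```

In the second call, the seller's floor (90) lies above the buyer's ceiling (80), so concessions stop before the offers meet.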

Negotiation can be competitive or cooperative, depending on the behavior of individual agents. Competitive negotiation takes place in situations where independent agents with their own goals attempt to reach a joint choice among well-defined alternatives. They are not a priori prepared to share information and cooperate. Cooperative negotiation takes place where agents share the same vision of their goals and are prepared to work together to achieve efficient collaboration.

2.7. Theories behind organization theory

Despite the long-standing French claim to cultural distinctiveness (exception culturelle), many management tools currently used in France and introduced after the Second World War are based on imported concepts and practices. The costing system, called "comptabilité analytique" in French, is taken from the German costing system; the first textbooks on costing were published in Germany in the 19th century (Ballewski 1887). At the same time, organizational concepts under the label "organization theory" were imported from the USA. Most contributors to this discipline in the USA had a background in sociology. This situation can be ascribed to the characteristics of the American cultural context. A telling insight can be found in the book The Growth of American Thought by Merle Curti (1964). Two chapters ("The Advance of Science and Technology" and "Business and the Life of the Mind") are of special interest for understanding why sociologists became involved in studying the working conditions of the labor force in large corporations. The application of science to the practical arts tended to give engineers and mechanics the means to overemphasize material benefits at the expense of the moral values so deeply ingrained in the Christian heritage of the Pilgrim Fathers.

The many aspects of what is called organization theory in English- and French-speaking contexts defy easy classification, and no system of categories is perfectly appropriate for organizing this material. This is why the baseline theories explained in the following subsections are presented as a means of deriving schemes that bring out the very nature of the many schools of thought in this field as applied to the business environment.

It is important to realize that over the past decades, information and communication technologies have had a disruptive impact on how corporations and communities of all sorts have been redesigned to keep up with their environments. The main transformations observed are the following:

- 1) The enterprise is transformed from a closed system into an open system, a network of self-governing micro-enterprises with free-flowing communication among them and mutually creative connections with outside contributors. Several popular terms are associated with this idea of openness (networked enterprise, open innovation, co-makership, etc.).

- 2) Employees are transformed from executors of top-down directions to self-motivated contributors, in many cases choosing or electing the leaders and members of their teams.

- 3) Purchasers of business offerings are transformed from customers to lifetime users of products and services designed to solve their problems and increase their satisfaction.

2.7.1. Structural and functional theories

This category includes a broad group of loosely associated approaches. Although the meanings of the terms structuralism and functionalism are not clear-cut and vary considerably, they designate the belief that social structures are real and function in ways that can be observed objectively (Giddens 1979).

It is relevant at this stage to elaborate on the term “function”. When considering the function of a thing, a distinction has to be made between

- a) what the thing does in the normal course of events (its activity);

- b) what the thing brings about in the normal course of events (the result of its activity).

Of course, it is understood that the activity of a thing and the outcome thereof are strongly correlated with the structure of the entity under consideration. When a function is ascribed to an agent, it is usually implied that a certain purpose is served.

The concept of mathematical function does not oppose the previous view but complements it by stressing the relation between two terms in an ordered pair. This pair of constituents can be for instance activity and result.
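In set-theoretic terms, a function pairs each activity with exactly one result. The notation is ours and is offered only as an illustration: A denotes a set of activities and R a set of results.

```latex
f \subseteq A \times R \quad\text{such that}\quad \forall a \in A,\ \exists!\, r \in R : (a, r) \in f, \qquad \text{written } f(a) = r .
```

Each ordered pair (a, f(a)) is then exactly the couple formed by an activity and its result, as described above.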

A functional explanation is a way of explaining why a certain phenomenon occurs or why something acts in a certain way by showing that it is a component of a structure within which it contributes to a particular kind of outcome.

Systems theory is deeply rooted in the structural–functional tradition, which can be traced back to Plato and Aristotle. Modern structuralism generally recognizes E. Durkheim (1951), who emphasized the concept of social structure, and F. de Saussure, founder of structural linguistics, as key figures.

The structural and technical architecture of cybernetics has explicitly or implicitly permeated organization theory. A structure is thus described in terms of controlled and controlling entities, with the underlying assumption that it has to deliver a targeted output within a contingent ecosystem. This cybernetic mind-set prevails from the design stage onward, a process called organizational design.
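A minimal feedback loop can illustrate the split between controlling and controlled entities. It is a toy sketch under our own assumptions: a single numeric output and a proportional correction with a hypothetical `gain` parameter. It is not a model taken from the organization literature.

```python
def controlled_entity(output, action):
    """The operating unit: its output changes by the corrective action received."""
    return output + action


def controlling_entity(target, observed, gain):
    """Management: compares the observed output with the target and issues a correction."""
    return gain * (target - observed)


def feedback_loop(target, output, gain=0.5, periods=10):
    """Run the control loop and return the output observed after each period."""
    history = []
    for _ in range(periods):
        action = controlling_entity(target, output, gain)
        output = controlled_entity(output, action)
        history.append(output)
    return history


print(feedback_loop(100, 60, 0.5, 4))  # [80.0, 90.0, 95.0, 97.5]
```

Each period halves the gap to the target, so the output converges on the targeted value. In this framing, organizational design amounts to choosing the entities and the correction rule before the loop runs.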

2.7.2. Cognitive and behavioral theories

This family of theories combines two different traditions that share many characteristics. They tend to espouse the same general ideas about knowledge as structural–functional theories do. However, whereas structural and functional theories focus on social and cultural structures, cognitive and behavioral theories focus on the individual.

Psychology is the primary source of cognitive and behavioral theories. Psychological behaviorism deals with the connection between stimuli and behavioral responses. The term cognition refers to thinking or the mind, so cognitivism tries to understand and explain how people think. Cognitivism (Greene 1984) goes one step further than behaviorism and emphasizes the information-processing phase between stimuli and responses. In a sense, cognitivism tries to open the black box that converts stimuli into responses in order to understand the mechanism involved.

These two groups of theories form a basis from which many other theories, reflecting the viewpoints of their proponents, can be derived. When the focus is put on the relations between the various entities of a structure, structural and functional theories shift to what are called interactionist theories. Some theories go beyond merely describing a contextual situation and also criticize it, e.g. on the grounds of conflicts of interest in society or the ways in which one group perpetuates its domination over another. These are called critical theories.

A behavioral view implies that beliefs are just dispositions to behave in certain ways. The problem is that our beliefs, including their propositional content expressed by a "that"-clause, typically explain why we do what we do. Explaining action through the propositional content of beliefs cannot be accommodated in the behavioral approach.

2.7.3. Organization theory and German culture

In the management syllabi of German educational institutions, organization theory (Organisationstheorie) is presented as "Grundlagen der Organisation, Aufbau-, Ablauf- und Prozess-" (Foundations of Organization: Structure, Flows and Processes). It often carries the additional subtitle "Unternehmensführung und Strategie" (Business Management and Strategy). Within this framework, seven issues have to be addressed to design a coherent organization. Coherent means that any organizational configuration has a purpose, an objective; otherwise, there is no point in devoting effort, i.e. resources, to designing and building an object without significance.

The issues to address are:

- – What is the purpose?

- – How: what functional capabilities are required?

- – What resources are required?

- – When does this structure have to be operated?

- – Where: what is the ecosystem of the location?

- – What is the relevance of the strategy?

- – How complex is the distribution of deliveries?

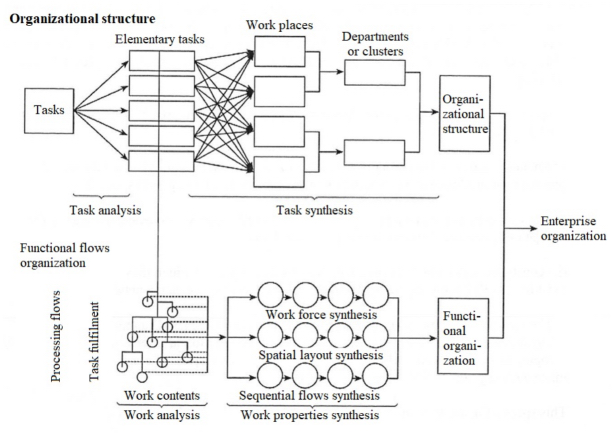

The synopsis in Figure 2.8 shows how the procedures for deriving the "Aufbauorganisation" (organizational structure) and "Ablauforganisation" (process organization) components are systematically deployed and combined to deliver an effective, fully fledged business organization.

Figure 2.8. Systematic approach to deriving the Aufbau- und Ablauforganisation components of a business organization (source: Knut Bleicher (1991), p. 49)