1

Complexity and Systems Thinking

1.1. Introduction: complexity as a problem

Perception of the world brings about the feeling that it is a giant conundrum with dense connections among what we view as its parts. As human beings with limited cognitive capabilities, we cannot cope with it in that form and are forced to break it down into separate areas that we can study one at a time.

Our knowledge is thus split into different disciplines, and over time these disciplines evolve as our understanding of the world changes. Because our education is organized according to this division into subject matters, it is easy to overlook that the divisions are man-made and somewhat arbitrary. Nature does not divide itself into physics, chemistry, biology, sociology, psychology and so on. These “silos” are so ingrained in our thinking processes that we often find it difficult to see the unity underlying them.

Given our limited cognitive capabilities, our knowledge has been organized by classifying it according to some rational principle. In the 19th Century, Auguste Comte (1880) proposed a classification following the historical order in which the sciences emerged and the increasing complexity of their concepts. He did not regard mathematics as a science but as a language which any science may use, and he did not include psychology as a science linking biology and the social sciences. With psychology inserted between biology and the social sciences, his classification of the experimental sciences yields the following sequence of increasing complexity: physics, chemistry, biology, psychology and social sciences.

Physics is the most basic science, being concerned with the most general concepts such as mass, motion, force, energy, radiation and atomic particles. Chemical reactions clearly entail the interplay of these concepts in a way that is intuitively more intricate than isolated physical processes. A biological phenomenon such as the growth of a plant or an embryo brings in yet a higher level of complexity. Psychology and the social sciences belong to the highest degree of complexity as felt by humans. In fact, we find it convenient to tackle the hurdles we are confronted with by establishing a hierarchy of separate sciences. Biology is of special interest to us as human beings because it studies the very fabric of our existence.

In physics, the scientific method inspired by the reductionist approach derived from Descartes’ rules has proved successful for gaining knowledge. Chemistry and biology can rely on physics to explain chemical and biological reactions; however, they are left with their own autonomous problems. K. Popper shares this point of view in his “intellectual autobiography” (Popper 1974):

“I conjecture that there is no biological process which cannot be regarded as correlated in detail with a physical process or cannot be progressively analysed in physiochemical terms. But no physiochemical theory can explain the emergence of a new problem… the problems of organisms are not physical: they are neither physical things, nor physical laws, nor physical facts. They are specific biological realities; they are ‘real’ in the sense that their existence may be the cause of biological effects”.

1.2. Complexity in perspective

1.2.1. Etymology and semantics

The noun “complexity” and the adjective “complex” are commonly used, orally and in writing, to describe situations, facts or events that cannot be described and explained with a straightforward line of thought.

It is always instructive to investigate how a word was formed and how its meaning has evolved over time, in order to better understand its current usage.

“Complex” is derived from the Latin complexus, made of interlocked elements; complectere means to fold and to intertwine. The word appeared in the 16th Century to describe what is composed of heterogeneous entities and was adopted in logic and mathematics (complex number) circa 1652. At the turn of the 20th Century, its meaning moved closer to “complicated”, and it came to be used in chemistry (organic complexes), economics (1918) and psychology (Oedipus complex, inferiority complex; Jung and Freud 1909/1910). “Complicated” is derived from the Latin complicare, to fold and roll up, and was used in this original meaning at the end of the 17th Century. Its current usage is analogous to that of “complex”: what is complex (a theory, concept, idea, event, fact or situation) is something difficult to understand. Complexity is thus related to human cognitive and computational capabilities, which are both limited.

A telling instance is the meaning given to the word “complex” in psychology: a related group of repressed ideas causing abnormal behavior or an abnormal mental state. It is implicitly assumed that the relations between these repressed ideas are intricate and difficult to explain to outside observers.

The Oxford dictionary describes “complex” by three attributes: consisting of parts, composite and complicated. These descriptors invite an exploration of the relationships between the concept of complexity and two well-established fields of knowledge, systems thinking and structuralism. This is done in the following sections.

1.2.2. Methods proposed for dealing with complexity from the Middle Ages to the 17th Century and their current legacy

Complexity is not a new issue in the quest for what humans can know about the world they live in.

Two thinkers, one from the Middle Ages and one from the early modern period, are considered here: William of Ockham and René Descartes, with their endeavors to come to terms with complexity. Their ideas are still perceptible today.

1.2.2.1. Ockham’s razor and its legacy

William of Ockham (circa 1285–1347), an English scholastic philosopher known as the “More Than Subtle Doctor”, entered the Franciscan order at an early age and studied at Oxford. Ockham’s razor (also called the principle of parsimony) is the name commonly given to the principle formulated in Latin as “entia non sunt multiplicanda praeter necessitatem” (entities should not be multiplied beyond what is necessary). This formulation, although often attributed to William of Ockham, has not been found in any of his known writings. It can be interpreted as an ontological principle to the effect that one should believe in the existence of the smallest possible number of general kinds of objects: there is no need to postulate inner objects in the mind, but only particular thoughts, or states of mind, whereby the intellect is able to conceive of objects in the world (Cottingham 2008). It can also be translated into a methodological rule to the effect that the explanation of any given fact should appeal to the smallest number of factors required to explain it. Opponents contended that this methodological principle amounts to a bias towards simplicity.

In his Sum of Logic (Summa Logicae), Ockham stated two logical rules now named De Morgan’s laws. As rules or theorems, the two laws belong to standard propositional logic:

1) [Not (p And q)] is equivalent to [(Not p) Or (Not q)].

2) [Not (p Or q)] is equivalent to [(Not p) And (Not q)].

Not, And and Or are logical connectives; p and q are propositions.

In other words, the negation of a conjunction implies, and is implied by, the disjunction of the negated conjuncts. And the negation of a disjunction implies, and is implied by, the conjunction of the negated disjuncts.
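Because p and q can each take only two truth values, both laws can be checked exhaustively over the four possible assignments. The following Python sketch (an illustration, not part of Ockham’s text) does exactly that and prints the truth table for the first law:

```python
from itertools import product


def de_morgan_holds() -> bool:
    """Check both De Morgan laws for every truth assignment of p and q."""
    for p, q in product([False, True], repeat=2):
        law1 = (not (p and q)) == ((not p) or (not q))
        law2 = (not (p or q)) == ((not p) and (not q))
        if not (law1 and law2):
            return False
    return True


# Truth table for law 1: p, q, Not (p And q), (Not p) Or (Not q)
for p, q in product([False, True], repeat=2):
    print(p, q, not (p and q), (not p) or (not q))

print("Both laws hold:", de_morgan_holds())
```

The last two columns of the printed table agree on every row, which is what “is equivalent to” means for propositions.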

It can be surmised that Ockham, who was involved in many disputations, felt the need to use the minimum number of classes of objects in order to articulate his arguments efficiently, in a form open to discussion, on the basis of predicate logic. Predicate logic makes it possible to clarify entangled ideas and arguments and to produce a “rational” chain of conclusions that a wide spectrum of informed people can follow.

Among the many later schools of thought that can be viewed as heirs to the principle of Ockham’s razor are ontological theory and Lévi-Strauss’ structuralism.

The word “ontology” was coined in the early 17th Century to avoid some of the ambiguities of “metaphysics”. Leibniz was the first philosopher to adopt the word. The terminology introduced in the 18th Century came to be widely adopted: ontology is the general theory of being as such and forms the general part of metaphysics. In the usage of 20th-Century analytical philosophy, ontology is the general theory of what there is (MacIntyre 1967).

Ontological questions revolve around:

– the existence of abstract entities (numbers);

– the existence of imagined entities such as golden mountains or square circles;

– the very nature of what we seek to know.

In the field of organization theory, ontology deals with the nature of human actors and their social interactions. In more abstract terms, ontology aims to establish the nature of the entities involved and their relationships. Ontology and knowledge go hand in hand because our conception of knowledge depends on our understanding of the nature of the knowable.

The ontological commitment of a theory is twofold:

– assumptions about what there is and what kinds of entities can be said to exist (numbers, classes, properties);

– when these commitments are paraphrased into a canonical form in predicate logic, they are the domains over which the bound variables of the theory range.

When it comes to complexity, the ontological description of an entity should refer to its structure (structural complexity) and its organization (organizational complexity). This is in line with the habit, in German culture, of describing a set of entities by two concepts: Aufbau (structure) and Ablauf (flows of interactions inside the structure). An entity can be a proxy that represents our perception of the world. Depending on the purpose, a part of the world can be perceived in different ways and modeled by different sets of ontological building blocks.

Lévi-Strauss was a Belgian-born French social anthropologist and a leading exponent of structuralism, a name applied to the analysis of cultural systems in terms of the structural relationships among their elements. His structuralism was an effort to classify the enormous amount of information about cultural systems and reduce it to ontological entities. To this end, he viewed cultures as systems of communication and interpreted them with models based on structural linguistics, information theory and cybernetics. Structuralism is a school of thought that first evolved in linguistics (de Saussure 1960) and did not spread widely outside the French-speaking intellectual ecosystem.

1.2.2.2. René Descartes

René Descartes (1596–1650), French philosopher and mathematician, was very influential in theorizing the reductionist approach to analyzing complex objects. This approach rests on the view that a whole can be fully understood in terms of its isolated parts, or an idea in terms of simple concepts.

This attitude is closely connected to a crucial issue that science faces: its ability to cope with complexity. Descartes’ second rule for “properly conducting one’s reason” prescribes dividing each problem under examination into as many separate parts as possible and as needed to solve it. This principle, central to scientific practice, assumes that the division will not dramatically distort the phenomenon under study. It assumes either that the components of the whole behave in the same way when examined in isolation as when they play their part in the whole, or that the principles governing the assembly of the components into the whole are themselves straightforward.
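The assumption that parts behave the same in isolation as in the whole can be tested on a toy model. In the hypothetical Python sketch below, a “whole” depends on two inputs; the reductionist prediction sums the effects of changing each input on its own while the other stays at its baseline. The functions and coefficients are invented for illustration only.

```python
def additive_whole(x: float, y: float) -> float:
    """Hypothetical whole whose parts contribute independently."""
    return 2 * x + 3 * y


def interacting_whole(x: float, y: float) -> float:
    """Hypothetical whole whose parts interact through the x*y term."""
    return 2 * x + 3 * y + 4 * x * y


def reductionist_prediction(f, base, changed):
    """Sum the effects of changing each input on its own, others at baseline."""
    baseline = f(*base)
    total = 0.0
    for i, value in enumerate(changed):
        args = list(base)
        args[i] = value
        total += f(*args) - baseline
    return total


def actual_effect(f, base, changed):
    """Effect of changing all inputs together."""
    return f(*changed) - f(*base)


for f in (additive_whole, interacting_whole):
    print(f.__name__,
          reductionist_prediction(f, (0, 0), (1, 1)),
          actual_effect(f, (0, 0), (1, 1)))
```

For the additive whole, the sum of isolated effects (5) matches the joint effect; for the interacting whole it still predicts 5 while the joint effect is 9. The gap is exactly the interaction term, which no study of the parts in isolation can reveal.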

The best-known application of this mindset is the decomposition of a human being into a body and a mind localized in the brain. It is striking that Descartes’ approach to understanding what a human being is and how (s)he is organized remains an issue discussed by philosophers today. The issue of mind–body interaction, in the light of contributions from the neurosciences, will be developed in another section.

The rationale of this approach is to reduce the complexity of an entity by reducing the number of variables to be analyzed concurrently. This methodology can clearly be helpful as a first step, but understanding how the isolated parts interact to produce the properties of the whole cannot be avoided, and this exercise can prove very tricky. This way of approaching complexity contrasts with holism, which consists of two complementary views. The first view is that an account of all the parts of a whole and of their interrelations is inadequate as an account of the whole. For example, an account of the parts of a watch and of their interactions would be incomplete as long as nothing is said about the role of the watch as a whole. The complementary view is that an interpretation of a part is impossible, or at least inadequate, without reference to the whole to which it belongs.

In the philosophy of science, holism is a name given to views like the Duhem–Quine thesis, according to which whole theories rather than single hypotheses are accepted or rejected. For instance, the single hypothesis that the earth is round is confirmed if a ship disappears from view over the horizon. However, this inference presupposes a whole theory, one which includes the assumption that light travels in straight lines. Combined with a theory that light rays are curved, the disappearance of the ship could just as well be taken to confirm that the earth is flat. The Duhem–Quine thesis implies that a failed prediction does not necessarily refute the hypothesis from which it is derived, since it may be preferable to keep the hypothesis and revise some background assumptions instead.

The term holism was coined by Jan Smuts (1870–1950), the South African statesman and philosopher, and used in the title of his book Holism and Evolution (Smuts 1926). In the social sciences, holism is the view that the proper objects of these sciences are systems and structures that cannot be reduced to individual social agents, in contrast with individualism.

As a mathematician, Descartes developed what is now called analytic geometry. Geometric figures (lines, circles, ellipses, hyperbolas, etc.) are defined by equations in “Cartesian” coordinates measured from intersecting straight axes, and their properties are derived from these equations. This is an implicit way of facilitating the analysis of complex properties of geometric forms by decomposing them along different spatial directions.
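Analytic geometry turns a geometric question into an algebraic one. As an illustration (a standard textbook computation, not an example taken from the source), the points where the line y = mx + c meets the circle x² + y² = r² follow from a quadratic equation in x, and whether the line misses, touches or cuts the circle is read off the sign of its discriminant. A minimal Python sketch:

```python
import math


def line_circle_intersections(m, c, r):
    """Points where the line y = m*x + c meets the circle x**2 + y**2 = r**2.

    Substituting y into the circle equation gives the quadratic
    (1 + m**2) x**2 + 2*m*c*x + (c**2 - r**2) = 0.
    """
    qa = 1 + m * m
    qb = 2 * m * c
    qc = c * c - r * r
    disc = qb * qb - 4 * qa * qc
    if disc < 0:
        return []                      # the line misses the circle
    root = math.sqrt(disc)
    # A set collapses the two roots into one when the line is tangent.
    xs = {(-qb + root) / (2 * qa), (-qb - root) / (2 * qa)}
    return sorted((x, m * x + c) for x in xs)


print(line_circle_intersections(0, 2, 1))   # line y = 2 misses the unit circle
print(line_circle_intersections(0, 1, 1))   # line y = 1 is tangent to it
print(line_circle_intersections(1, 0, 1))   # line y = x cuts it twice
```

The geometric configuration (no contact, tangency, two crossings) is decided entirely by the algebra, which is the point of Descartes’ coordinate method.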

1.3. Current system-based methods for dealing with complexity

1.3.1. Evolution of system-based methods in the 20th Century

All current methods used to deal with complexity evolved in the 20th Century within the framework of what is called systems theory.

The system concept is not a new idea. It was already defined in the encyclopedia by Diderot and d’Alembert, published in the 18th Century in Amsterdam, to describe different fields of knowledge. In astronomy, a system is taken to be a certain arrangement of the various parts that make up the universe. In Ptolemy’s system, the earth is the center of the world, a view supported by Aristotle and Hipparchus. In Copernicus’ system, the motionless sun is the center of the universe. In the art of warfare, a system is the layout of forces on a battlefield or the arrangement of defensive works, according to the concepts of a general or a military engineer respectively. John Law’s project, around 1720, to introduce paper money for market transactions was called Law’s system.

1.3.1.1. The systems movement from the 1940s to the 1970s

A revived interest in the concept of systems emerged in the 1940s in the wake of first-order cybernetics, whose seminal figure is Norbert Wiener (1894–1964). His well-known book Cybernetics: Or Control and Communication in the Animal and the Machine (Wiener 1948) was published in 1948 and is considered a landmark in the field of control mechanisms (servo-mechanisms). The word “animal” in the title reflects Wiener’s collaboration with the Mexican physiologist Arturo Rosenblueth (1900–1970) of the Harvard Medical School. Rosenblueth worked on transmission processes in nervous systems and favored teleological, non-mechanistic models of living organisms.

Cybernetics is the science that studies the abstract principles of control and regulation of complex organizational structures. It is concerned not so much with what systems consist of as with their functional capabilities and their articulations. Cybernetics is applied to designing and manufacturing purpose-focused systems made of components that cannot reorganize themselves. By design, these mechanistic systems can withstand, through feedback and/or feed-forward loops, a certain range of constraints from the environment (never forget that the surroundings in which a system is embedded are part of the system), as well as the failure of some of their components. This last situation is generally dealt with at the design stage to secure a “graceful” degradation of operations. First-order cybernetics clearly belongs to Descartes’ school of thought: courses of action are controlled by memorized instructions.
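A feedback loop of the kind described above can be sketched as a discrete-time toy model. The plant below is hypothetical (it leaks a fraction of its state at each step and suffers a constant disturbance), and the controller is a simple proportional one acting on the error between a setpoint and the measured state. This is a minimal sketch of negative feedback, not a model of any particular servo-mechanism.

```python
def regulate(setpoint, gain, disturbance, leak=0.1, steps=200, x0=0.0):
    """Discrete-time negative feedback loop around a hypothetical leaky plant.

    Each step, the controller measures the error (setpoint - state) and
    applies a corrective action proportional to it; the plant loses a
    fraction `leak` of its state and receives a constant disturbance.
    """
    x = x0
    for _ in range(steps):
        error = setpoint - x          # feedback signal
        action = gain * error         # proportional corrective action
        x = x + action - leak * x + disturbance
    return x


for gain in (0.0, 0.5, 1.5):
    print(f"gain {gain}: final state {regulate(20.0, gain, -1.0):.3f}")
```

With these values the state drifts to −10 without feedback, settles at 15 with gain 0.5 and at about 18.1 with gain 1.5. The remaining gap to the setpoint of 20 is the steady-state error typical of purely proportional control, and a gain for which |1 − gain − leak| ≥ 1 would make this loop unstable.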

Shortcomings were revealed when the corpus of cybernetic concepts was applied to non-technical fields, especially the social and management sciences. Second-order cybernetics was worked out under the impetus of Heinz von Förster (1978). Its core idea was to distinguish the object (the system) from the subject (the system designer and controller). This distinction focuses on the role of the subject, which decides on the rules and means with which a given set of interacting entities (the object) has to operate. The subject can itself be a complex system. It may consist of several parts: a human being or group, and technical proxies encapsulating the rules chosen by the humans. These rules change dynamically as constraints evolve and as set targets are or are not met. The presence of human cognitive capabilities in the control loop makes it possible to secure the sustainability of systems in evolving ecosystems.

Another important stakeholder, not to say the founding father, in the conception and dissemination of the system paradigm is the biologist Ludwig von Bertalanffy (1901–1972). It is relevant to describe his scientific contribution to systems thinking, a contribution that goes far beyond his well-known book General System Theory, published in 1968 and often referred to as GST (von Bertalanffy 1968). In 1954, together with the economist Kenneth Boulding, R.W. Gerard and the biomathematician A. Rapoport, he founded a think-tank (Society for General Systems Theory) whose objectives were to define a corpus of concepts and rules relevant to system design, analysis and control. Ludwig von Bertalanffy imagined GST as a tool for designing models in all domains where a “scientific” approach can be secured. In contrast to the mathematical approach of Norbert Wiener, he described models in a non-formal language, striving to translate relations between objects and phenomena into sets of interacting components, the environment being a full part of the system. These interacting components form an organizational structure with an inner dynamic assembling mechanism, as in living organisms. Unlike the feedback and feed-forward mechanisms of Norbert Wiener’s cybernetics, actions in Ludwig von Bertalanffy’s view are not only applied to objectively given things but can result in the self-(re)organization of system structures to reach and/or maintain a certain state, as happens in living organisms. In a world where data travels at the speed of light, the response time needed to adjust a human organization to an evolving environment is critical. The environment of any system is a source of uncertainty, because it is generally beyond the control of the system designer and operator.

1.3.1.2. The systems movement in the 1980s: complexity and chaos

In the 1980s, the systems movement became aware of two facts that had until then been overlooked: first, that complexity, cutting across all the scientific disciplines from physics to biology and economics, is a subject matter in itself; second, that researchers in a limited number of fields had independently pioneered the investigation of the chaotic behaviors of systems. The Santa Fe Institute (SFI) played, and still plays, a leading role in the interdisciplinary approach to complexity.

The SFI initiative offers a telling insight into the awareness, among scholars of different disciplines in the 1980s, that they shared the same issue, complexity, and that interdisciplinary discussions could help them tackle this common stumbling block and make progress in knowledge.

SFI was founded in Santa Fe (New Mexico) in 1984 by scientists (including several Nobel laureates), most of them from the Los Alamos Laboratory. It is a non-profit organization created as a visiting institution with no permanent positions. It consists of a small number of resident faculty, post-doctoral researchers and a large group of external faculty. Funding comes from private donors, grant-making foundations, government science agencies and companies affiliated with its business network. Its budget in 2014 was about 14 million US dollars. Its primary focus is theoretical research on wide-ranging models and theories of complexity. Educational programs are also run, from undergraduate level to professional development.

As viewed by SFI, “complexity science is the mathematical and computational study of evolving physical, biological, social, cultural and technological systems” (SFI website). Research themes and initiatives “emerge from the multidisciplinary collaboration of (their) research community” (SFI website).

SFI’s current fields of research, described on its website, show the wide spectrum of subject matters considered relevant today:

– complex intelligence: natural, artificial and collective (measuring and comparing unique and species-spanning forms of intelligence);

– complex time (can a theory of complex time explain aging across physical and biological systems?);

– invention and innovation (how does novelty – both advantageous and unsuccessful – define evolutionary processes in technological, biological and social systems?).

M. Mitchell Waldrop (1992) chronicles the events at SFI from its foundation to the early 1990s. Surveying all the interdisciplinary workshops run during this period is beyond the scope of this chapter. I will elaborate on the contributions of John H. Holland and W. Brian Arthur.

The lecture “The Global Economy as an Adaptive Process”, delivered by John H. Holland, Professor of Psychology and Professor of Computer Science and Engineering at the University of Michigan, at a workshop held on September 8, 1987, contains the following main points, which are of general application:

– The economy is the model “par excellence” of what are called “complex adaptive systems” (CAS), a term coined at the SFI. CAS share crucial properties and are found both in the natural world (brains, immune systems, cells, developing embryos, etc.) and in the human world (political parties, business organizations, etc.). Each of these systems is a network of agents acting in parallel and interacting with one another. This view implies that the environment of any agent is produced by other acting and reacting agents. Control in this type of system is highly distributed, insofar as no agent acts as a controlling supervisor.

CAS have to be contrasted with “complex physical systems” (CPS). CPS follow fixed physical laws, usually expressed by differential equations; Newton’s law of gravitation and Maxwell’s equations of electromagnetism are cases in point. In CPS, neither the laws nor the nature of the elements change over time; only the states of the elements change, according to the relevant laws. The variables of the differential equations describe the states of the elements. CPS will be investigated in section 1.3.1.3.

– Complex adaptive systems have a layered architecture: agents in lower layers deliver services to agents in higher layers. Furthermore, all agents make changes to cope with the environmental requirements they perceive through incoming signals.

– Complex adaptive systems have capabilities for anticipation and prediction encoded in their genes.

Irish-born W. Brian Arthur, who shifted from operations research to economics when he joined the SFI, has continued to work on complexity and economics. He produced an SFI working paper in 2013 (Arthur 2013) summarizing his ideas about complexity, economics and complexity economics, a term he first used in 1999.

Here are the main features of W. Brian Arthur’s positions:

– “Complexity is not a theory but a movement in the sciences that studies how the interacting elements in a system create overall patterns and how those overall patterns in turn cause the interacting elements to change or adapt … Complexity is about formation – the formation of structures – and how this formation affects the objects causing it” (p. 4). This means that most systems contain feedback loops of some sort, which intrinsically (“genetically”) entail nonlinear behaviors.

– An economic system cannot be in equilibrium: “Complexity economics sees the economy as in motion, perpetually ‘computing’ itself – perpetually constructing itself anew” (p. 1). Equilibrium, as studied by neoclassical theory, is an idealized special case of non-equilibrium that does not reflect the behavior of the real economy; this simplification is made to keep the mathematical equations tractable.

This point of view is in line with the theory of dissipative systems developed by Ilya Prigogine: an economic system, like a living organism, operates far from equilibrium.

Many distinguished scholars made significant contributions to SFI activities; describing all of them is beyond the scope of this chapter.

1.3.1.3. Ilya Prigogine: “the future is not included in the present”

Ilya Prigogine and his research team played a major role in the study of irreversible processes from the standpoint of thermodynamics. His reputation outside the scientific realm comes largely from his collaboration with Isabelle Stengers, with whom he co-authored seminal books on the epistemology of science (Prigogine 1979; Prigogine and Stengers 1979 and 1984).

He received the 1977 Nobel Prize in Chemistry for his contributions to non-equilibrium thermodynamics, particularly the theory of dissipative structures, which lie at the core of a wide range of phenomena in nature. He started his research at a time when non-equilibrium thermodynamics was not considered worthwhile, since all thermodynamic systems were supposed to reach equilibrium and stability sooner or later as time passes. Only equilibrium and near-equilibrium thermodynamic systems were the subject of academic research.

Ilya Prigogine’s pioneering work showed that non-equilibrium systems are widespread in nature: many of them are natural organic processes, that is, processes that evolve with time without any possibility of time reversal. This clearly holds true for biological processes: science cannot yet reverse aging!

According to Ilya Prigogine, systems are not complex in themselves; their behaviors are. He contrasted classical and quantum dynamical models with thermodynamic models. In classical dynamics (Newton’s laws) and quantum mechanics, equations of motion and wave functions conserve information as time elapses: initial conditions can be restored by changing the time variable t into −t. Thermodynamic models refer to a paradigm of processes that destroy and create information without allowing initial conditions to be restored. Time symmetry is broken: this is expressed by comparing time to an arrow which, unlike a boomerang, never comes back to its starting point.
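The contrast between reversible dynamics and dissipative processes can be illustrated numerically. The Python sketch below integrates a harmonic oscillator (a hypothetical example chosen for illustration) with the velocity Verlet scheme, runs it forward, reverses the velocity (the discrete counterpart of replacing t by −t) and runs it again for the same number of steps. A friction term is then added as a simple stand-in for dissipation; it is not a thermodynamic model.

```python
import math


def verlet_step(x, v, dt, k=1.0, gamma=0.0):
    """One velocity-Verlet step for x'' = -k*x - gamma*v.

    With gamma == 0 this is the standard, time-reversible velocity Verlet
    scheme. For gamma > 0 the friction force is evaluated with the half-step
    velocity, a simplification that is adequate for this illustration.
    """
    a = -k * x - gamma * v
    v_half = v + 0.5 * dt * a
    x_new = x + dt * v_half
    a_new = -k * x_new - gamma * v_half
    v_new = v_half + 0.5 * dt * a_new
    return x_new, v_new


def round_trip_error(gamma, x0=1.0, v0=0.0, dt=0.01, steps=1000):
    """Run forward, reverse the velocity (t -> -t), run again, and measure
    how far the final state is from the time-reversed initial state."""
    x, v = x0, v0
    for _ in range(steps):
        x, v = verlet_step(x, v, dt, gamma=gamma)
    v = -v  # time reversal: same position, opposite velocity
    for _ in range(steps):
        x, v = verlet_step(x, v, dt, gamma=gamma)
    # A perfect reversal ends at position x0 with velocity -v0.
    return math.hypot(x - x0, v + v0)


print("conservative (gamma = 0):  ", round_trip_error(0.0))
print("dissipative  (gamma = 0.5):", round_trip_error(0.5))
```

Without friction, the initial state is recovered up to rounding error, because the velocity Verlet scheme is exactly time-reversible. With friction, the oscillation keeps losing energy after the reversal as well, so the system ends near rest instead of returning to its starting point.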

A key component of any system is its environment. When this part of the system is not taken into account, the system is described as a “closed system”. This is in fact a simplifying abstraction (a falsification in Popper’s terms) that facilitates reasoning and computation. In reality, all thermodynamic systems are “open”, i.e. they exchange matter and energy with their environments. Prigogine called them “dissipative systems”. Why this qualifier? For physicists, irreversible processes, such as heat transfer from a hot source to a cold source, are associated with energy dissipation, a degradation of sorts: the energy available in the system is exhausted. Transferring heat from a cold source to a hot source requires energy (work) to be supplied.
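The asymmetry of heat transfer can be quantified with two standard thermodynamic formulas (textbook results, not taken from the source). When heat Q flows from a reservoir at temperature T_hot to one at T_cold, the entropy produced is Q/T_cold − Q/T_hot, which is positive whenever T_hot > T_cold. Moving Q back from the cold to the hot reservoir requires at least the work Q(T_hot/T_cold − 1), the Carnot limit for a heat pump. A short Python sketch with illustrative values:

```python
def entropy_produced(q: float, t_hot: float, t_cold: float) -> float:
    """Entropy (J/K) created when heat q (J) flows from t_hot to t_cold (K)."""
    return q / t_cold - q / t_hot


def min_work_to_pump_back(q: float, t_hot: float, t_cold: float) -> float:
    """Minimum work (J) to move heat q from t_cold back to t_hot (Carnot limit)."""
    return q * (t_hot / t_cold - 1.0)


q, t_hot, t_cold = 1000.0, 400.0, 300.0  # illustrative values
print(f"entropy produced: {entropy_produced(q, t_hot, t_cold):.4f} J/K")
print(f"minimum work to reverse: {min_work_to_pump_back(q, t_hot, t_cold):.1f} J")
```

For 1000 J flowing from 400 K to 300 K, about 0.83 J/K of entropy is produced, and at least about 333 J of work must be supplied to undo the transfer.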

A dissipative system is a thermodynamically open system operating out of, and often far from, thermodynamic equilibrium in an environment with which it exchanges matter and energy. A dissipative structure is a dissipative system that reaches a reproducible steady state, either naturally or under constraints. Such structures constitute a large class of systems found in nature.

All living organisms exchange matter and energy with their environment and are, as such, dissipative systems. This explains why biologists, L. von Bertalanffy among others, were the first to take an interest in this field of research, a long time ago. Biological processes are widely regarded as “complex”.

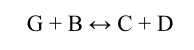

Basically, a chemical system is symbolized by a reaction in which a molecule of species A can combine with a molecule of species B to produce one molecule of species C and one molecule of species D. This process is represented by

$$\mathrm{A} + \mathrm{B} \rightarrow \mathrm{C} + \mathrm{D} \qquad [1.1]$$

A and B are the reactants, whereas C and D are the products. A and B combine at a certain rate and disappear; C and D are formed and appear as the reaction proceeds. In an isolated system, however, A and B do not disappear completely: after a certain lapse of time, the concentrations [A], [B], [C] and [D] reach values for which the ratio [C][D]/([A][B]) is fixed. How can this be reconciled with equation [1.1]? In fact, a reverse reaction also takes place:

$$\mathrm{C} + \mathrm{D} \rightarrow \mathrm{A} + \mathrm{B} \qquad [1.2]$$

When both reactions occur at the same rate, a balanced state, equilibrium, is reached. Equations [1.1] and [1.2] are then represented as a reversible reaction complying with what is called the “law of mass action”:

$$\mathrm{A} + \mathrm{B} \rightleftharpoons \mathrm{C} + \mathrm{D} \qquad [1.3]$$
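Writing k₁ and k₂ for the rate constants of reactions [1.1] and [1.2] (notation introduced here for illustration), the law of mass action makes each reaction rate proportional to the product of the concentrations of its reactants, and the balance of the two rates yields the fixed ratio mentioned above:

$$v_1 = k_1[\mathrm{A}][\mathrm{B}], \qquad v_2 = k_2[\mathrm{C}][\mathrm{D}], \qquad \frac{d[\mathrm{A}]}{dt} = \frac{d[\mathrm{B}]}{dt} = -v_1 + v_2$$

$$v_1 = v_2 \;\Longrightarrow\; \frac{[\mathrm{C}][\mathrm{D}]}{[\mathrm{A}][\mathrm{B}]} = \frac{k_1}{k_2} = K$$

K, the equilibrium constant, depends on the rate constants only, not on the initial amounts of A and B.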

When a set-up allows the outflow of C and D, the system becomes open. Conditions can then be created for the system to reach a state in which the concentrations [A], [B], [C] and [D] remain constant, namely d[A]/dt = d[B]/dt = d[C]/dt = d[D]/dt = 0. This state is called the stationary non-equilibrium state. Since C and D are removed, the reverse reaction [1.2] no longer balances reaction [1.1].
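The difference between the equilibrium of a closed system and the stationary non-equilibrium state of an open one can be seen in a small simulation. The Python sketch below integrates the mass-action rate equations for A + B ⇌ C + D with explicit Euler steps; k1 and k2 are the forward and reverse rate constants. The constant feed of A and B (needed to keep their concentrations steady) and the first-order drain of C and D are hypothetical parameters chosen for illustration.

```python
def simulate(k1, k2, feed=0.0, drain=0.0, dt=0.01, t_end=200.0):
    """Euler integration of A + B <=> C + D with optional feed and drain.

    feed:  constant inflow rate of A and of B (hypothetical parameter)
    drain: first-order outflow rate constant for C and D (hypothetical)
    feed = drain = 0 gives a closed system.
    Returns the final concentrations (a, b, c, d).
    """
    a, b, c, d = 1.0, 1.0, 0.0, 0.0
    for _ in range(round(t_end / dt)):
        r = k1 * a * b - k2 * c * d   # net forward rate (law of mass action)
        a, b, c, d = (
            a + dt * (feed - r),
            b + dt * (feed - r),
            c + dt * (r - drain * c),
            d + dt * (r - drain * d),
        )
    return a, b, c, d


k1, k2 = 1.0, 0.5
a, b, c, d = simulate(k1, k2)
print("closed: [C][D]/([A][B]) =", c * d / (a * b), " K =", k1 / k2)
a, b, c, d = simulate(k1, k2, feed=0.1, drain=0.5)
print("open:   [C][D]/([A][B]) =", c * d / (a * b))
```

In the closed case the ratio settles at K = k1/k2 = 2. In the open case all four concentrations also become constant ([C] = [D] = feed/drain = 0.2), but the ratio stays well below K (about 1/3 for these parameters), because a net flux keeps running through the reaction: the state is stationary without being an equilibrium.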

Reaction [1.3] may not correspond to an actual reaction but to the final outcome of a number of intermediate reactions. The law of mass action should be applied only to actual (elementary) reactions. When the situation is described by the two reactions [1.4] and [1.5],

G is described as a catalyst.

The situation described by reactions [1.4] and [1.5] is modeled by the system shown in Figure 1.1.

Figure 1.1. Dynamics of reactions with the intermediary formation of a catalyst

D is drained away, since the targeted product is C.

Inside a fluid system, two main phenomena take place: the diffusion of reagents and products, and chemical reactions. A variety of irregularities (inhomogeneous distributions of chemical agents and of physical parameters such as the diffusivities of chemicals, temperature and pressure) and random disturbances such as Brownian motion and statistical fluctuations occur in the system and may give rise to unstable equilibria, leading to new physico-chemical configurations in space and time. An unstable equilibrium is not a condition which occurs naturally; it usually requires some artificial intervention.

Three salient features characterize the complex behaviors of chemical systems according to Grégoire Nicolis and Ilya Prigogine (Nicolis and Prigogine 1989).

1.3.1.3.1. Non-linearity

Phenomena governed by nonlinear equations can be highly sensitive to initial conditions (SIC): a small change in the initial values of their variables can dramatically change their evolution over time.
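Sensitivity to initial conditions is easy to exhibit with the logistic map x → r·x·(1 − x), a standard one-line nonlinear model used here purely as an illustration (it is not a chemical model). The Python sketch starts two trajectories 10⁻¹⁰ apart and records the largest gap between them over 60 iterations.

```python
def logistic_trajectory(r, x0, n):
    """Iterate the logistic map x -> r*x*(1 - x) n times, keeping every value."""
    xs = [x0]
    for _ in range(n):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def max_divergence(r, x0=0.2, eps=1e-10, n=60):
    """Largest gap between two trajectories whose starting points differ by eps."""
    a = logistic_trajectory(r, x0, n)
    b = logistic_trajectory(r, x0 + eps, n)
    return max(abs(u - v) for u, v in zip(a, b))


for r in (2.5, 4.0):
    print(f"r = {r}: largest gap = {max_divergence(r):.3e}")
```

For r = 4 the initial difference of 10⁻¹⁰ grows to the size of the variable itself, whereas for r = 2.5 both trajectories settle on the same fixed point and the gap never exceeds a few times 10⁻¹⁰. The same nonlinear equation can thus behave either way depending on its parameters.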

Nonlinear effects, in which the presence of a reaction product exerts a feedback action on its own “cause”, are comparatively rare in the inorganic world, but molecular biology has discovered that they are virtually the rule where living systems are concerned. Autocatalysis (the presence of X accelerates its own synthesis), auto-inhibition (the presence of X blocks the catalysis needed to synthesize it) and cross-catalysis (two products belonging to two different reaction chains activate each other’s synthesis) provide the classical regulation mechanisms that guarantee the coherence of metabolic function. In addition, reaction rates are not always linear functions of the concentrations of the chemical agents involved.
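A minimal sketch of why autocatalysis is a nonlinear feedback, assuming a single hypothetical step A + X → 2X in a closed vessel with rate k[A][X]. Because the product X speeds up its own synthesis, the rate is a product of two concentrations, and [X] follows an S-shaped (logistic) curve: a slow start, a rapid acceleration, then saturation as A runs out.

```python
def autocatalysis(k=1.0, a0=1.0, x0=0.01, dt=1e-3, t_end=20.0):
    """Euler integration of the autocatalytic step A + X -> 2X.

    d[X]/dt = k [A][X] and d[A]/dt = -k [A][X]: the rate grows with the
    amount of product already present (positive feedback).
    """
    a, x, t = a0, x0, 0.0
    history = [(t, x)]
    while t < t_end:
        rate = k * a * x
        a -= rate * dt
        x += rate * dt
        t += dt
        history.append((t, x))
    return history


history = autocatalysis()
# Sample the S-shaped curve roughly every 2 time units.
for t, x in history[::2000]:
    print(f"t = {t:5.1f}   [X] = {x:.3f}")
```

With a linear rate law the growth of [X] would slow down from the very beginning; here it is fastest halfway, when enough X has accumulated but A is not yet depleted.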

Biological systems have a past. Their constitutive molecules are the result of evolution: they have been selected to take part in autocatalytic mechanisms that generate very specific forms of organization.

1.3.1.3.2. Self-organization

Ordinary systems such as chemical reactions, when held far from equilibrium, can exhibit self-organization phenomena under given boundary conditions. Three modes of self-organization of matter generate complex behaviors:

- – Bistability and hysteresis

When a control parameter λ is increased or decreased, the system follows different paths depending on the direction of change: its state depends on its past history. This is called hysteresis. Within a certain range of this parameter [λ1, λ2], the system can be in either of two stable states, depending on the initial conditions. When the upper or lower limit of this range is crossed, the system switches from one state to the other.
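Bistability and hysteresis can be reproduced with a one-variable toy model. The sketch assumes the generic equation dx/dt = λ + x − x³, a standard textbook normal form rather than a model of any specific chemical reaction. For |λ| below 2/(3√3) ≈ 0.385 it has two stable states, so sweeping λ slowly up and then down leaves the system on different branches over the same range of λ.

```python
def relax(x, lam, dt=0.01, steps=2000):
    """Let dx/dt = lam + x - x**3 settle, starting from state x at fixed lam."""
    for _ in range(steps):
        x += (lam + x - x**3) * dt
    return x


def sweep(lams, x0):
    """Change lam step by step, each step starting from the previous state."""
    states, x = [], x0
    for lam in lams:
        x = relax(x, lam)
        states.append(x)
    return states


lams_up = [i / 10 for i in range(-10, 11)]     # lam from -1.0 to 1.0
lams_down = lams_up[::-1]                      # lam from 1.0 back to -1.0
up = dict(zip(lams_up, sweep(lams_up, x0=-1.5)))
down = dict(zip(lams_down, sweep(lams_down, x0=1.5)))

for lam in (-0.3, 0.0, 0.3):
    # Same parameter value, different state: the system remembers its path.
    print(f"lam = {lam:+.1f}: upward sweep x = {up[lam]:+.3f}, downward sweep x = {down[lam]:+.3f}")
```

Outside the bistable range (for example at λ = 1.0) both sweeps end in the same state, which is where the switch from one branch to the other has already happened.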

- – Oscillations (periodic and non-periodic)

The Belousov–Zhabotinsky (BZ) reaction, discovered by Boris Belousov in 1951, serves as a classical example of non-equilibrium thermodynamics: it behaves as a nonlinear chemical oscillator. Its mechanism is thought to involve 18 different steps. It shows that reactions are not necessarily dominated by equilibrium thermodynamic behavior.

Oscillations can arise in a macroscopic medium if the system is held sufficiently far from equilibrium. The BZ reaction uses the Ce³⁺/Ce⁴⁺ couple as a catalyst and citric acid as a reductant. Ions other than cerium, such as iron and copper, and other reductants can also be used to produce oscillating reactions.
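The BZ mechanism itself involves many steps, but its qualitative point, that a system held far enough from equilibrium can settle into sustained oscillations, can be illustrated with the Brusselator, a two-variable model proposed by Prigogine and Lefever. This is a swapped-in textbook model, not the BZ chemistry. In dimensionless form, dx/dt = a − (b + 1)x + x²y and dy/dt = bx − x²y; the steady state (x, y) = (a, b/a) loses stability when b > 1 + a², and the concentrations then oscillate on a limit cycle.

```python
def brusselator(x, y, a, b):
    """Right-hand side of the dimensionless Brusselator equations."""
    dx = a - (b + 1.0) * x + x * x * y
    dy = b * x - x * x * y
    return dx, dy


def integrate(a, b, x=1.0, y=1.0, dt=0.01, t_end=100.0):
    """Fourth-order Runge-Kutta integration; returns the x trajectory."""
    xs = []
    for _ in range(int(t_end / dt)):
        k1 = brusselator(x, y, a, b)
        k2 = brusselator(x + dt / 2 * k1[0], y + dt / 2 * k1[1], a, b)
        k3 = brusselator(x + dt / 2 * k2[0], y + dt / 2 * k2[1], a, b)
        k4 = brusselator(x + dt * k3[0], y + dt * k3[1], a, b)
        x += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        xs.append(x)
    return xs


def late_amplitude(xs):
    """Peak-to-peak amplitude of x over the second half of the run."""
    tail = xs[len(xs) // 2:]
    return max(tail) - min(tail)


# With a = 1 the threshold is b = 1 + a**2 = 2.
print("b = 1.5 (below threshold):", round(late_amplitude(integrate(1.0, 1.5)), 4))
print("b = 3.0 (above threshold):", round(late_amplitude(integrate(1.0, 3.0)), 4))
```

Below the threshold the oscillations die out and x returns to its steady value a; above it they persist with a finite amplitude, whatever the starting point.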

- – Spatial patterns

When reactions take place in inhomogeneous phases, propagating regular patterns can be observed. When the reaction producing ferroin, a complex of phenanthroline and iron, is carried out in a Petri dish, colored spots form and grow into a series of expanding concentric rings or spirals, depending on temperature. A Petri dish is a shallow cylindrical glass dish, named after the German scientist Julius Petri, used by biologists to culture cells.

The last two modes, oscillations and spatial patterns, were investigated mathematically by Alan Turing in a seminal paper that is considered the foundation of modern morphogenesis (Turing 1952). He modeled the dynamics of morphogenesis by linear differential equations, first order in time, that couple the chemical reactions with the diffusion of the reacting substances. Different behavioral patterns, either stationary or oscillatory, show up depending on the parameter values in these differential equations.
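Turing's central observation can be checked with a short linear-stability computation. The sketch assumes a generic two-species reaction-diffusion system linearized around a homogeneous steady state, du/dt = J u + D ∇²u, with a hypothetical Jacobian J (chosen for illustration, not taken from Turing's paper) that is stable without diffusion. A perturbation of wavenumber k grows at a rate equal to the largest real part of the eigenvalues of J − k²D. If the inhibitor diffuses much faster than the activator, a band of wavenumbers becomes unstable, and a stationary pattern with a preferred wavelength can emerge.

```python
import cmath


def growth_rate(J, Du, Dv, k):
    """Largest real part of the eigenvalues of J - k^2 * diag(Du, Dv)."""
    a = J[0][0] - Du * k * k
    b = J[0][1]
    c = J[1][0]
    d = J[1][1] - Dv * k * k
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)       # eigenvalues: (tr +/- disc) / 2
    return max(((tr + disc) / 2).real, ((tr - disc) / 2).real)


# Hypothetical linearized kinetics: u activates itself (+1) and v (+3),
# v inhibits u (-1) and decays (-2).
J = [[1.0, -1.0],
     [3.0, -2.0]]        # trace -1 < 0 and det 1 > 0: stable without diffusion
ks = [i / 100 for i in range(0, 301)]

for Dv in (1.0, 20.0):   # inhibitor diffusivity; the activator has Du = 1
    rates = [growth_rate(J, 1.0, Dv, k) for k in ks]
    best = max(range(len(ks)), key=lambda i: rates[i])
    print(f"Dv = {Dv:4.1f}: max growth rate {rates[best]:+.3f} at k = {ks[best]:.2f}")
```

With equal diffusivities every mode decays; with a fast-diffusing inhibitor the homogeneous state (k = 0) is still stable, yet a finite band of wavenumbers grows, which is the diffusion-driven instability Turing described.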

1.3.1.3.3. Chaos

Deterministic chaos follows deterministic rules and is characterized by long-term unpredictability arising from an extreme sensitivity to initial conditions. Such behavior may be undesirable, particularly when monitoring processes that depend on temporal regulation.
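Extreme sensitivity to initial conditions under fully deterministic rules can be seen with the logistic map x(n+1) = r x(n) (1 − x(n)), a standard one-line example of deterministic chaos, swapped in here for illustration rather than taken from chemistry. With r = 3.9, two trajectories whose starting points differ by 10⁻¹⁰ soon become completely different.

```python
def logistic_orbit(x0, r=3.9, n=100):
    """Iterate the deterministic logistic map x -> r * x * (1 - x)."""
    orbit = [x0]
    for _ in range(n):
        x = orbit[-1]
        orbit.append(r * x * (1.0 - x))
    return orbit


a = logistic_orbit(0.2)
b = logistic_orbit(0.2 + 1e-10)      # almost identical initial condition
for n in range(0, 101, 10):
    gap = abs(a[n] - b[n])
    print(f"n = {n:3d}   x = {a[n]:.6f}   x' = {b[n]:.6f}   gap = {gap:.1e}")
```

Rerunning from the same initial value reproduces the trajectory exactly; it is the tiny difference in the starting point, amplified step after step, that destroys long-term predictability.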

On the other hand, a chaotic system can be viewed as a virtually unlimited reservoir of periodic oscillations, which may be accessed when appropriate feedback is applied to one or more of the controllable system parameters in order to stabilize one of them.
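The idea of stabilizing one of the unstable periodic behaviors embedded in chaos by small feedback on a parameter can be sketched on the logistic map x(n+1) = r x(n) (1 − x(n)), a standard example of deterministic chaos. This is a simplified one-dimensional variant of the approach introduced by Ott, Grebogi and Yorke (OGY), written for illustration. The target is the map's unstable fixed point x* = 1 − 1/r: whenever the chaotic orbit wanders into a small window around x*, r is nudged by a small δr computed from the linearized map so that the next iterate lands (to first order) on x*.

```python
def controlled_orbit(x0=0.3, r0=3.9, window=0.01, n=5000):
    """Logistic map x -> r x (1 - x) with small feedback nudges of r."""
    x_star = 1.0 - 1.0 / r0            # unstable fixed point when r = r0
    slope = 2.0 - r0                   # df/dx at x*; |slope| > 1, so x* repels
    sens = x_star * (1.0 - x_star)     # df/dr at x*
    x, orbit, kicks, max_dr = x0, [], 0, 0.0
    for _ in range(n):
        dr = 0.0
        if abs(x - x_star) < window:
            # Linearized map: x' - x* ~ slope*(x - x*) + sens*dr; choose dr to cancel it.
            dr = -slope * (x - x_star) / sens
            kicks += 1
            max_dr = max(max_dr, abs(dr))
        x = (r0 + dr) * x * (1.0 - x)
        orbit.append(x)
    return x_star, orbit, kicks, max_dr


x_star, orbit, kicks, max_dr = controlled_orbit()
print(f"target x* = {x_star:.6f}, last iterate = {orbit[-1]:.6f}")
print(f"control applied {kicks} times, largest |dr| = {max_dr:.3f}")

# Without feedback (window = 0) the same map keeps wandering chaotically.
_, free, free_kicks, _ = controlled_orbit(window=0.0)
print("uncontrolled last iterates:", [round(v, 3) for v in free[-3:]])
```

The controller waits for the chaotic orbit to come close to x* on its own, which is why only small parameter changes (here below 0.1) are ever needed.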

Non-equilibrium enables a system to avoid chaos and to transform part of the energy supplied by the environment into ordered behavior of a new type, the dissipative structure, characterized by symmetry breaking, multiple choices and correlations of macroscopic range. In the past, this type of structure was often ascribed only to biological systems. In fact, it appears to be more common in nature than was thought.

All of these features led Prigogine to dwell on our innate inability to forecast the future of our ecosystem, in spite of the fact that we know the elementary laws of nature. When more than two entities are involved, the equations modeling their interactions are often nonlinear and their computed solutions are highly sensitive to initial conditions, so that forecasts depend on the initial conditions chosen. “Rationality can no longer be identified with ‘certainty’, nor probability with ignorance, as has been the case in classical science … We begin therefore to be able to spell out the basic message of the second law (of thermodynamics). This message is that we are living in a world of unstable dynamical systems” (Prigogine 1987). In other words, “the future is not included in the present”. Uncertainty is the very fabric of our human condition.

1.3.2. The emergence of a new science of mind

Human beings are actors included in many systems, either on their own or interfaced with hardware and/or software components. Their behavior, whether rational or emotional, makes a specific contribution to the complexity of the whole system.

It is outside the scope of this section to tackle this issue on the basis of psychology and sociology. By the start of the 21st Century, biology and neuroscience had reached a point where they delivered solid results that help us understand human attitudes. Our purpose is to survey the main results established by research in biology and neuroscience, after elaborating on some milestones in how emotion has been considered in different disciplines. This focus on emotion is justified by contemporary views across the evolutionary psychology spectrum, which posit that both basic emotions and social emotions evolved to motivate social behaviors. Emotions are either intense feelings directed at someone or something, or mental states not directed at anything, such as anxiety, depression and annoyance. Current research suggests that emotion is an essential driver of human decision-making and planning processes. The word “emotion” dates back to the 16th Century, when it was adapted from the French verb “émouvoir”, which means “to stir up”.

1.3.2.1. Darwin and Keynes on emotions

Perspectives on emotions from evolutionary theory were initiated by Charles Darwin’s 1872 book The Expression of the Emotions in Man and Animals. Darwin argued that emotions serve a purpose for humans by aiding communication and survival. According to Darwin, emotions evolved via natural selection and therefore have cross-cultural counterparts. He also detailed the virtues of experiencing emotions and the parallel experiences that take place in animals.

John Maynard Keynes used the term “animal spirits” in his 1936 book The General Theory of Employment, Interest and Money (Keynes 1936) to describe the instincts and emotions that influence and guide human behavior. This behavior is assessed in terms of “consumer confidence” and “trust”, both of which are assumed to be produced by “animal spirits”. The concept of “animal spirits” is still a subject of interest, as shown by George Akerlof and Robert Shiller’s book Animal Spirits: How Human Psychology Drives the Economy, and Why It Matters for Global Capitalism (Akerlof and Shiller 2009).

1.3.2.2. Neurosciences on emotions

The central issue is the distinction between mind and body. Although many philosophers (Thomas Aquinas, Nicolas Malebranche, Benedict Spinoza, John Stuart Mill, Franz Brentano, among others) studied this subject, the Cartesian dualism introduced by René Descartes in the 17th Century (Meditationes de Prima Philosophia, 1641) continued to dominate the philosophy of mind for most of the 18th and 19th Centuries. Even nowadays, in some contexts such as data processing, mathematicians are inclined to model brain functions as software programs made of fixed sets of instructions, without imagining that disruptive physiological (re)actions can divert their linear execution.

Descartes proposed that whatever is physical is spatial and whatever is mental is non-spatial and unextended. Mind, for Descartes, is a “thinking thing”, and a thought is a mental state.

Advances in neuro-imaging have allowed investigations into the various parts of the brain and their interactions. The separation between mind on one side and brain and body on the other is strongly contested by the neuroscientist Antonio Damasio in his book Descartes’ Error (Damasio 2006). He thinks that the famous sentence “cogito, ergo sum”, published in Principia Philosophiae (1644), has led biologists up to the present time to model biological processes as time-based mechanisms disconnected from our consciousness. In Descartes’ terms, res cogitans (our mind) and res extensa (our functional organs) are two disconnected subsystems. Antonio Damasio is convinced that emotion, reason and brain are closely interrelated, which makes the analysis and understanding of human behaviors a conundrum.

In the introduction to his book, Antonio Damasio writes (page XXIII): “the strategies of human reason probably did not develop, in either evolution or any single individual, without the guiding force of the mechanisms of biological regulation, of which emotion and feeling are notable expressions. Moreover, even after reasoning strategies become established in the formative years, their effective deployment probably depends, to a considerable extent, on a continued ability to experience feelings”. This is one of the main tenets that Damasio develops in his book: the body and the mind are not independent, but are closely correlated through the brain. The brain and the body are indissolubly integrated to generate mental states.

Let us elaborate on two key words mentioned above, i.e. emotion and feeling. Antonio Damasio (p. 134) distinguishes between primary and secondary emotions. Primary emotions are innate, wired in at birth. Secondary emotions “occur once we begin experiencing feelings and forming systematic connections between categories of objects and situations, on the one hand, and primary emotions, on the other”. Secondary emotions begin with the conscious consideration of a person or situation, and they develop as mental images in a thought process. They cause changes in the body state, resulting in an “emotional body state”. Feeling is the experience of those changes. Some feelings have a major impact on cognitive processes. Feelings based on universal emotions are happiness, sadness, anger, fear and disgust.

The relationships between emotion and feeling are summarized by Antonio Damasio in this quotation: “emotion and feeling rely on two basic processes:

- 1) the view of a certain body state juxtaposed to the collection of triggering and evaluative images which cause the body state;

- 2) a particular style and level of efficiency of cognitive process which accompanies the events described in (1), but is operated in parallel” (p. 162).

Feelings may change beliefs and, as a result, may also change attitudes. On the basis of the theories mentioned above, events from emotions to attitudes follow this orderly sequence: emotions cause specific body states, which generate feelings through cognitive processes, and these feelings have an impact on beliefs and attitudes. Among the feelings based on universal emotions, mutual fear can be considered one of the major psychological factors affecting relationships in collaborative networked environments. Fostering trust appears to be a relevant countermeasure to mutual fear. A broad review of the biology of emotional states is given in Kandel’s panoramic autobiography (Kandel 2007).

At this point, it seems relevant to recall that the brain contains about a hundred billion nerve cells interconnected by some hundred trillion links, and that it is not an independent actor. It is part of an extended system reaching out to permeate, influence and be influenced by every entity of our body system. All our physical and intellectual activities are directly or indirectly controlled by the action of the nervous system, of which the brain is the central part. The brain receives a constant flow of information from our body and the outside world via sensory nerves and blood vessels, which feed it with real-time data.

When discussing the brain, we are faced with a self-referencing paradox: we think about our brain with our brain! We are caught in a conundrum that is difficult to escape, which highlights the partial nature of our current and future in-depth knowledge of brain processes.

Other relevant contributors to the study of emotion are worth mentioning, such as Joseph LeDoux (The Emotional Brain) (LeDoux 1996), Derek Denton (The Primordial Emotions: The Dawning of Consciousness) (Denton 2006) and Elaine Fox (Emotion Science: An Integration of Cognitive and Neuroscientific Approaches) (Fox 2008). The last two references show that the understanding of emotion, which is the very fabric of our human lives, still motivates scholarly research, with little hope of a full explanation in the future, considering the intricate structure of the brain and the influences of the basic physiology and stress system of the human body.

1.4. Systems thinking and structuralism

A piecemeal approach to problems within firms and in local and national governments is no longer sustainable when firms and nations compete or collaborate to compete. This is because technology, firms and organizations have been made complex by the number of interacting stakeholders and the variety of techniques involved, and because decisions incur more far-reaching consequences in terms of space and time.

Systems thinking has turned out to be a thinking tool for understanding issues in a wide variety of knowledge realms outside the technical world. It is therefore worth describing in detail systems thinking and structuralism, which go hand in hand to deliver a structured view of interacting entities.

1.4.1. Systems thinking

Bernard Paulré is an economist who has investigated complexity in economic and social contexts. We have chosen to elaborate on his description of systems thinking to show that this mindset has spread far beyond the technical sphere. Systems thinking, or “systemics”, has been defined by Bernard Paulré (Paulré 1989) as follows:

“It is the study of laws, operational modes and evolution principles of organized wholes, whatever their nature (social, biological, technical …)”. This study is first a matter of analyzing constituent elements of these complex wholes “from the scrutiny of at least two types of interaction: on one hand those linking the elements belonging to the organized whole (and considered as such falling under its full control) and on the other hand those that associate this very whole, as it is globally perceived, and its environment”.

At first sight, this definition looks complete and well articulated. However, one word draws attention: law.

- – A first question raised is: what is a law?

A law gives the quantified relation between a cause and an effect, according to the French scientist Claude Bernard. In a more general way, a law states a correlation between phenomena and is verified by quantitative or qualitative experiments. Direct or indirect validation is a critical step.

- – A second question raised is: are there laws common to all organized systems?

As a matter of fact, the system approach is implemented in two very different modeling frameworks to tackle complexity. Within the first framework, it is used to design a real or virtual object by combining “simple” functional systems into a “complex” system. This is a bottom-up approach (composition), whose purpose is to keep track easily of the different design steps for quality control and maintenance operations. Within the second framework, the purpose is to understand how an existing “complex” entity operates by “decomposing” it into simpler elements with a view to monitoring it; this is a top-down approach (decomposition). The first situation is widely found in the technical world, where the term “system integration” is nowadays in common use.

Another item of discussion is the very nature of the “system approach”. Is it a theory or a tool for coming to terms with complexity? A theory is supposed to rely on laws. von Bertalanffy’s new ideas about systems were published for the first time in 1949 under the title Zu einer allgemeinen Systemlehre. Lehre in German bears a meaning different from théorie in French or theory in English. Lehre has a double meaning, namely “practice” (Erfahrung, die man gemacht hat: the experience one has gained) and “doctrine” or body of knowledge (die Lehre des Aristoteles: the doctrine of Aristotle). The German term Lehre thus includes two contrasting views, namely actual know-how gained from experience and an abstract structure of concepts. These two views can be made compatible by considering that the abstract structure of concepts is built on know-how gained from experience. A telling example is cybernetics. The experience gained from mechanical automata developed in the 18th Century was applied in the 20th Century to devices called servo-mechanisms when electromechanical and, later on, electronic devices became available. The principles of servo-mechanisms were converted into a doctrine called “cybernetics”, the art of governing systems. This doctrine was applied in the social, economic and management sciences as a blueprint for designing controlling systems. The title of a book by Herbert Stachowiak (Stachowiak 1969) illustrates that state of mind: Denken und Erkennen im kybernetischen Modell (Thinking and understanding by cybernetics modeling).

In order to complete the scope of disciplines and people involved in the development of systems thinking after World War II, it is relevant to mention Kenneth Boulding’s hierarchy of system complexity (Boulding 1956). His purpose was to show that all organic and constructed things could be interpreted as layered constructions of building blocks to make complexity more easily interpretable in terms of explicability, transparency and provability. It is worth noting that this type of hierarchical architecture has become common in the field of information systems and telecommunication services.

Two adjacent levels in the hierarchy differ not merely in their degree of diversity or variability but in the appearance of wholly specific system properties. In addition, Kenneth Boulding asserts the cumulative properties of the hierarchy: each level incorporates the elements or components (sub-systems, sub-sub-systems, etc.) of all the lower levels, but a new type of emergent property appears at that level. Boulding identified nine levels of complexity. As you move up the hierarchy, complexity increases, in the sense that observers find it harder to predict what will happen. Boulding’s levels 1–5 together form what can be called the “rational–technical” level; this aggregate is also often referred to as the mechanistic level. Level 7 is roughly a personal level, level 8 includes the wider environment and level 9 includes the spiritual side of our lives.

Kenneth Boulding’s nine levels of complexity are described as follows:

- – Level 1: frameworks. The static structural description of the system is produced at this level, i.e. its building blocks; for example, the anatomy of the universe or the solar system.

- – Level 2: clockworks. The dynamic system with predetermined, necessary motions is presented at this level. Unlike a level 1 system, the state of a level 2 system changes over time.

- – Level 3: control systems. The control mechanism or cybernetic system describes how the system behavior is regulated to remain stable in line with externally defined targets and to avoid a runaway loss of control, as in a thermostat. The major difference from level 2 systems is the information flow between the sensors, regulator and actuator.

- – Level 4: open systems. These exchange matter and energy with their environment to maintain their own operations. This is the level at which life begins to differentiate from non-life: it may be called the level of the cell.

- – Level 5: blueprinted growth systems. This is the genetic-societal level typified by the plant. It is the empirical world of the botanist, where reproduction takes place not through a process of duplication but by producing seeds containing pre-programmed instructions for development. These systems differ from level 4 systems, which reproduce by duplication (asexual reproduction, such as cell division).

- – Level 6: internal image systems. The essential feature of level 6 systems is the availability of a detailed awareness of the environment captured by discriminating information sensors. All pieces of information gathered are then aggregated into a knowledge structure. The capabilities of the previous levels are limited to capturing data without transforming it into significant knowledge.

- – Level 7: symbol-processing systems. Systems at this level exhibit full awareness not only of the environment but also of themselves (self-consciousness). This property is linked to the capability of turning symbols and ideas into abstract informational concepts. Self-conscious human speakers belong to this level.

- – Level 8: multicephalous systems. This level is intended to describe systems with several “brains”, i.e. a group of individuals acting collectively. These types of systems possess shared systems of meaning – for example, systems of law or culture, or code of collaborative practice – that no individual human being seems to possess. All human organizations have the characteristics of this level.

- – Level 9: system of unknown complexity. This level is intended to reflect the possibility that some new level of complexity not yet imagined may emerge.
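The difference between a level 2 “clockwork” and a level 3 control system can be illustrated with a toy room-heating model. The sketch assumes a room that loses heat to the outside in proportion to the temperature difference, and a heater that either runs on a fixed timetable (level 2: predetermined motion, no information about the result) or is switched by a thermostat that measures the temperature and compares it with an externally set target (level 3: sensor, regulator, actuator). All numbers and names are hypothetical.

```python
def simulate_room(controller, hours=48.0, dt=0.1, outside=5.0, start=12.0):
    """Room temperature (degC) with heat loss to the outside and an on/off heater.

    controller(t, measured) returns True when the heater should run.
    """
    loss, heater_power = 0.2, 5.0      # assumed loss rate (1/h) and heating rate (degC/h)
    temp, history = start, []
    for i in range(round(hours / dt)):
        t = i * dt
        heating = controller(t, temp)              # actuator command
        temp += (-loss * (temp - outside) + heater_power * heating) * dt
        history.append(temp)
    return history


def clockwork(t, measured):
    """Level 2: a fixed timetable (12 h on, 12 h off); the measurement is ignored."""
    return (t % 24.0) < 12.0


def thermostat(t, measured, target=20.0):
    """Level 3: the sensor reading is compared with an externally set target."""
    return measured < target


for name, controller in (("clockwork", clockwork), ("thermostat", thermostat)):
    second_day = simulate_room(controller)[240:]   # samples from t = 24 h to 48 h
    print(f"{name:10s}: min {min(second_day):5.1f} degC, max {max(second_day):5.1f} degC")
```

Both heaters have the same power; what keeps the temperature near the target is the information flow from the sensor back to the actuator, which the timetable lacks.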

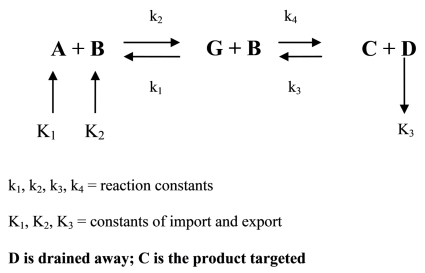

It is worth recalling here the interrelations between signs (symbols), data, information, knowledge and opinions in order to gain an unbiased understanding of the contributions of Kenneth Boulding’s hierarchy of complexity. These interrelations form a five-level hierarchy, as shown in Figure 1.2.

Figure 1.2. Relationship between signs, data, information, knowledge and opinions

The basic element that can be captured, transmitted, memorized and processed is a sign (for instance, an alphanumerical character). In order to convert concatenated signs into data, they have to be ordered according to a morphological lexicon and a syntax able to produce significances. When interpreted as a function of the context, these significances are turned into meanings, that is, pieces of information. Pieces of information are thus data structured on the basis of their factual relationships with the context.

When a specific piece of information is “interconnected” with other pieces of information, such relations create a kind of network that can be described as knowledge. In general, unconnected pieces of information do not give access to an understanding of a situation. Above this level, a subjective dimension comes into play, i.e. individuals’ opinions, which determine human behaviors. Opinions are based on beliefs.
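The lower levels of this hierarchy can be illustrated with a deliberately small example in which every name and value is hypothetical. A string of characters plays the role of signs, a fixed syntax turns it into typed data, a context (what is measured, where, in which unit) turns the data into a piece of information, and an explicit relation between two pieces of information stands for a tiny network of knowledge. Opinions are left out, since the text places them in a subjective dimension based on beliefs.

```python
from dataclasses import dataclass

signs = "2024-01-15;T;21.5"            # signs: a raw string of characters


def to_data(raw):
    """Lexicon and syntax 'date;code;value' turn signs into typed fields (data)."""
    date, code, value = raw.split(";")
    return {"date": date, "code": code, "value": float(value)}


@dataclass(frozen=True)
class Information:
    """Data interpreted in context: what was measured, where, in which unit."""
    date: str
    quantity: str
    place: str
    value: float
    unit: str


# Hypothetical context (code book) needed to interpret the data.
CONTEXT = {"T": ("temperature", "meeting room", "degC")}


def to_information(data):
    quantity, place, unit = CONTEXT[data["code"]]
    return Information(data["date"], quantity, place, data["value"], unit)


reading = to_information(to_data(signs))
threshold = Information("2024-01-15", "comfort threshold", "meeting room", 20.0, "degC")

# Knowledge: pieces of information linked by explicit relations (a tiny network).
knowledge = []
if reading.place == threshold.place and reading.unit == threshold.unit:
    relation = "exceeds" if reading.value > threshold.value else "does not exceed"
    knowledge.append((reading, relation, threshold))

for subject, relation, obj in knowledge:
    print(f"{subject.quantity} in the {subject.place} ({subject.value} {subject.unit}) "
          f"{relation} the {obj.quantity} ({obj.value} {obj.unit})")
```

Without the context table, the same data item (a code "T" and a number 21.5) carries no meaning; without the relation, the two pieces of information remain unconnected.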

1.4.2. Structuralism

Structuralism has been given a wide range of meanings in the course of the 20th Century. It has been called on by many fields of knowledge (linguistics, literary studies, sociology, psychology, anthropology) to deliver a rationalized understanding of their subject matter.

Structuralism is generally considered to derive its organizational principles from the early 20th-Century work of Ferdinand de Saussure, the founder of structural linguistics. He proposed a “scientific” model of language, understood as a closed system of elements and rules that account for the production and the social communication of meaning. Since language is the foremost instance of social sign systems, the structural account of language might serve as a model for understanding sign systems in general.

In general terms, there are three characteristics by which structures differ from mere aggregates:

- – the nature of an element depends, at least in part, on its place in the structure, i.e. its relations with other elements;

- – a structure is purpose-made for fulfilling an objective through function capabilities carried out by its elements;

- – the structure is not static, but allows for re-organization by changing the properties of and/or the relations between its elements to adjust to new requirements, internal or external to the structure.

A particular entity can be understood only in the context of the whole system in which it is embedded. Structuralism holds that particular elements have no absolute meaning or value: their added values are relative to the characteristics of other elements. Everything makes sense only in relation to something else. The properties of an element have to be studied from two points of view: its intrinsic processing capabilities, and the ways in which these capabilities are triggered by signals received from its surroundings. An element is supposed to fulfill some function and deliver an output to another element. This output can depend on the nature of the incoming signal. When an element receives an incoming interference signal (i.e. a signal outside the set of signals considered at design time), its output may change and trigger the emergence of a new configuration of the whole system. The system may then run out of control and undergo self-reorganization.

P. Haynes (Managing Complexity in the Public Services) (Haynes 2003) and P. Cilliers (Complexity and Postmodernism: Understanding Complex Systems) (Cilliers 1998) elaborate on complexity from the point of view of the social sciences, whose objects turn out to be hybrid systems involving cognitive, biological and economic phenomena.

1.4.3. Systems modeling

1.4.3.1. “Traditional” practice

Modeling is the answer humans have found to cope with understanding the ecosystems we live in. Galileo and Newton are considered the founding fathers of modern physics: their achievement was to construct models, i.e. representations of a part of the world described in a mathematical language that allows computations to be carried out.

Today, the modeling and system approaches go hand in hand for challenging the increasing complexity of the societal ecosystem in which we are embedded. We are exposed to a deluge of new faceless digital technologies. We are not yet fully aware of the impacts of what is called big data on our behaviors and private lives. For humans and businesses, the lake of data received from their environments is to be filtered and processed accordingly to help them survive.

A model is a constructed representation of a part of the world. Representations are derived from our sensory perceptions of our environments. However, not all parts of our environments are accessible through our five senses (sight, hearing, smell, touch and taste). Some parts of the world are hidden from our sensory perception, and their perceived aspects are fuzzy and intangible. Microphysics as well as social behaviors come into this category. When interacting with other people, we have no access to the intentions and ideas in their minds.

A model is necessarily an abstraction. It captures what we estimate to be the relevant characteristics of the real world from a particular perspective and ignores others. Therefore, the same object or entity can be represented by different models.

Models are intended to be manipulated and the results can be fed back to the real world through interpretation. They are supposed to help find solutions to real-world problems.

Models are worked out to meet the requirements of their purpose. A variety of models can be listed according to their purposes:

- – heuristic model: this model is intended to mimic the behaviors of a real-world process as perceived by outside observers. The actual courses of action at work may be very different from those imagined when working out the model: the real world is treated as a black box with controlled inputs and observed outputs.

- – functional model: this model is developed on the basis of the laws of nature, formal and behavioral logics encapsulated in a theory. It results in deriving equations, allowing the dynamical study of real-world processes.

- – cybernetics model: cybernetics is in fact a modeling approach to the delineation between human motivation and purpose on the one hand, and the construction and control of an artifact by human actors to fulfill tasks on their behalf on the other. There is a transfer of a kind of human responsibility for operational courses of action to an artifact agent whose instructions for action are remotely controlled by humans.
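The contrast between a heuristic and a functional model can be made concrete with a cooling object. In this hypothetical example the “real world” is a black box whose outputs happen to follow Newton's law of cooling. The heuristic model is a straight line fitted by least squares to a few observed input/output pairs; the functional model integrates the law itself, with the rate constant assumed known. Both agree where data were collected, but only the functional model extrapolates sensibly.

```python
import math

T_AMBIENT, T_START, K = 20.0, 90.0, 0.1     # hypothetical process parameters


def black_box(t):
    """The real-world process: observers only see the input t and the output T."""
    return T_AMBIENT + (T_START - T_AMBIENT) * math.exp(-K * t)


# Heuristic model: a straight line T = p + q*t fitted by least squares to t = 0..5.
ts = [0, 1, 2, 3, 4, 5]
obs = [black_box(t) for t in ts]
t_mean, y_mean = sum(ts) / len(ts), sum(obs) / len(obs)
q = (sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, obs))
     / sum((t - t_mean) ** 2 for t in ts))
p = y_mean - q * t_mean


def heuristic(t):
    return p + q * t


def functional(t, dt=0.01):
    """Step-by-step (Euler) integration of the law dT/dt = -K (T - T_AMBIENT)."""
    temp = T_START
    for _ in range(round(t / dt)):
        temp -= K * (temp - T_AMBIENT) * dt
    return temp


for t in (2, 5, 30, 60):
    print(f"t = {t:2d}: observed {black_box(t):7.2f}   "
          f"heuristic {heuristic(t):8.2f}   functional {functional(t):7.2f}")
```

Within the observed range the fitted line is accurate to about one degree, yet it predicts temperatures far below ambient later on, because it only mimics the outputs and knows nothing of the mechanism at work.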

1.4.3.2. Object-oriented modeling for information processing

Ubiquitous information technology has relied on software development for the last 50 years. One of the fundamental problems in software development is: how does one model the real world so that computation of some kind can be carried out? We think that all the efforts made to tackle the issues of software modeling can benefit other fields of knowledge, where explicit or implicit modeling is the cornerstone of what can become knowledge.

In the 1950s and 1960s, the focus of software system developers was on algorithms. The main concerns at that time were solving computation problems, designing efficient algorithms and controlling the complexity of computation. The models used were computation-oriented and the decomposition of complex systems was primarily based on control flow.

In the 1970s and 1980s, different types of software systems emerged to address the complexity of the data to be processed. These systems were centered on data items and data flows, with computation becoming less demanding. The models used were data-oriented and the decomposition of complex systems was primarily based on data flows.

What is called object-oriented modeling is a balanced view of the computation and data features of software systems. The decomposition of complex systems is based on the structure of objects and their relationships. This approach dates back to the late 1960s. It has a narrow spine but as a matter of fact offers a wide embrace. It can be used as a blueprint for a modeling mindset in different domains where the real world has to be analyzed and modeled to yield design patterns and frameworks.

We discuss here the fundamental concepts and the principles of object-oriented modeling (Booch 1994). The interpretation of the real world is translated into its representation by objects and classes (sets of objects). A class serves as a template for creating objects (instances of a class). The state of an object is characterized by its attributes and their values, and its behavior is defined by a set of methods (operations) that manipulate the state. Objects communicate with one another by passing messages. A message specifies the receiver’s method to be invoked, together with any arguments. Complexity is dealt with by decomposing a system into a set of highly cohesive (strong functional relatedness within a module) but loosely coupled (weak interdependency between modules) modules. With the mindset familiar in the realm of information technology (IT), the interactions between objects can be understood as client–server relationships, i.e. services are exchanged between objects alternately acting as emitters and receivers of services.
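A minimal Python sketch of these notions, with hypothetical class and method names: a class acts as the template, two objects are instances with their own attribute values (state), methods define behavior, and invoking a method on another object plays the role of sending it a message. The interaction follows the client–server pattern: the customer object requests a service that the account object provides.

```python
class Account:
    """Template (class) for account objects."""

    def __init__(self, owner, balance=0.0):
        # Attributes and their values make up the object's state.
        self.owner = owner
        self.balance = balance

    def deposit(self, amount):
        # A method: behavior that manipulates the state.
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balance += amount
        return self.balance


class Customer:
    def __init__(self, name, account):
        self.name = name
        self.account = account           # loose coupling: only a reference is kept

    def save(self, amount):
        # Sending the message 'deposit(amount)' to the receiver object:
        # the customer acts as client, the account as server.
        return self.account.deposit(amount)


alice_account = Account("Alice")         # two instances of the same class
bob_account = Account("Bob", 50.0)
alice = Customer("Alice", alice_account)
print(alice.save(30.0), bob_account.balance)
```

Each instance keeps its own state: the message sent to Alice's account leaves Bob's account untouched.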

In order to maintain a stable overall architectural structure while allowing the inner organization of an object to change, a succinct and precise description of the object’s functional capabilities, known as its “contractual” interface, should be made available to other objects. This technique, called “encapsulation”, separates the “public” description of the object’s inputs and outputs from the way the object’s functional capabilities are implemented.
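Encapsulation can be sketched as follows, again with hypothetical names. Two classes offer the same “contractual” interface (push, pop and size) while storing their elements in completely different ways. Client code written against the interface works unchanged with either implementation, so the inner organization can change without disturbing the overall architecture. Python enforces privacy only by convention (the leading underscore), so this illustrates the design principle rather than a hard guarantee.

```python
from abc import ABC, abstractmethod


class Stack(ABC):
    """Public, contractual interface: what other objects may rely on."""

    @abstractmethod
    def push(self, item): ...

    @abstractmethod
    def pop(self): ...

    @abstractmethod
    def size(self): ...


class ListStack(Stack):
    """Inner organization 1: a Python list."""

    def __init__(self):
        self._items = []                 # hidden state (by convention)

    def push(self, item):
        self._items.append(item)

    def pop(self):
        return self._items.pop()

    def size(self):
        return len(self._items)


class LinkedStack(Stack):
    """Inner organization 2: a chain of (item, next) pairs."""

    def __init__(self):
        self._head, self._count = None, 0

    def push(self, item):
        self._head = (item, self._head)
        self._count += 1

    def pop(self):
        if self._head is None:
            raise IndexError("pop from empty stack")
        item, self._head = self._head
        self._count -= 1
        return item

    def size(self):
        return self._count


def reverse(words, stack):
    """Client code: relies only on the public interface."""
    for w in words:
        stack.push(w)
    return [stack.pop() for _ in range(stack.size())]


print(reverse(["a", "b", "c"], ListStack()), reverse(["a", "b", "c"], LinkedStack()))
```

Replacing ListStack with LinkedStack requires no change in reverse, which is exactly the stability that a published interface is meant to provide.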

We will examine whether the concepts used for describing the real world in object-oriented programming can provide a reference framework for assessing models developed in different knowledge disciplines. The BDI agent used to model human organizations will be examined accordingly.

1.4.3.3. Artificial intelligence and system modeling

1.4.3.3.1. Current context

Artificial intelligence (AI) is expected to impact system modeling because it is more and more likely that “virtual” agents endowed with AI will interact with human agents in a wide spectrum of systems. These “virtual” agents can be characterized as autonomous agents that, on behalf of their designers, will take in information about their environments, and will be capable of making choices and decisions, sensing and responding to their environments and even modifying their objectives. That situation will have an influence on system modeling, design and operations. It will add complexity to stakeholders’ understanding of such “black-boxed” agents.

Let us first elaborate on what AI is. AI is enjoying a strong revival of interest because enhanced computing facilities have become available and large data lakes are expected to foster the practice of “deep learning”.

In the 1980s, a great deal of effort was devoted to this field of research, but the outcome was rather disappointing. As a result, less attention was paid to it. In 1995, Stuart Russell and Peter Norvig (Russell and Norvig 1995) described AI as “the designing and building of intelligent agents that receive percepts from the environment and take actions that affect the environment”. This definition is still fully valid. The most critical difference between AI and general-purpose software lies in the words “take actions”: AI enables software systems to respond on their own to signals from the world at large that programmers do not control and therefore cannot anticipate. The fastest-growing category of AI is machine learning, namely the ability of software to improve its own performance by analyzing its interactions with its environment.
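The agent definition quoted above can be illustrated by the percept-action cycle. The sketch below is a hypothetical thermostat written as a simple reflex agent: it maps each percept to an action through fixed rules and does not learn, so it shows the loop of perceiving and acting on an environment rather than intelligence as such. The target temperature and step size are assumptions.

```python
def thermostat_agent(percept: float, target: float = 20.0) -> str:
    """Agent function (hypothetical): map a percept (temperature) to an action."""
    if percept < target - 0.5:
        return "heat"
    if percept > target + 0.5:
        return "cool"
    return "idle"


def run(initial_temp: float, steps: int) -> list[tuple[float, str]]:
    """Simulate the percept-action loop; the action changes the environment."""
    temp = initial_temp
    history = []
    for _ in range(steps):
        action = thermostat_agent(temp)   # receive a percept, choose an action
        history.append((temp, action))
        if action == "heat":              # the action affects the environment
            temp += 1.0
        elif action == "cool":
            temp -= 1.0
    return history


for temp, action in run(17.0, 5):
    print(temp, action)
```

A learning agent would differ by revising `thermostat_agent` itself from experience instead of applying rules fixed by its designer.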

Applications of AI and machine learning could result in new and unexpected forms of interconnectedness between different domains of the economic realm based on the use of previously unrelated data sources. In particular, the unregulated relationships between financial markets and different sectors of the “real” economy could reveal themselves to be highly damaging for these sectors.

1.4.3.3.2. Brief historical overview of AI development

Development from the 1960s to the 1980s

Artificial intelligence (AI) research can be traced back to the 1960s. From its inception, the idea was to use predicate calculus. Accordingly, the first papers on AI dealt principally with automatic theorem-proving, a learning draughts-playing system and GPS (General Problem Solver) (Feigenbaum and Feldman 1963). In 1968, M. Minsky edited a book (Minsky 1968) in which research on semantic networks, natural language processing, the recognition of geometrical patterns and other topics was discussed in detail. Around the same time, a dedicated programming language, LISP, was developed, and its creators produced a book describing it (McCarthy et al. 1962).

LISP is a general-purpose language that enables symbol manipulation procedures to be expressed in a simple way. Other programming languages based on LISP, but with added and improved facilities, were developed in the 1970s; they include INTER-LISP, PLANNER, CONNIVER and POP-2.

Applications of AI were first called “expert systems” because they incorporated the expertise or competencies of one or more experts. Their architecture separated the knowledge of the experts from the specific traits of the case under study. The stored knowledge is applied to the case and, through deductive reasoning, advice can be delivered to support decisions based on the analysis of the case data. An important field of application was health care, where expert systems helped diagnose diseases.

The main components of an expert system are the knowledge base, the inference engine, the explanation subsystem and the knowledge acquisition subsystem.

- The knowledge base contains the domain-specific knowledge acquired from experts and the particular data captured about the case under study.

- The inference engine is in charge of dynamically applying the domain-specific knowledge to the case being processed.

- The explanation subsystem is the interface that allows users to ask how a conclusion has been reached or why a question is being asked. This facility gives users access to the various steps of the reasoning process.

- The knowledge acquisition subsystem enables experts to enter their knowledge, or generates general rules by automated induction from data on past cases.
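The separation between the knowledge base, the case data, the inference engine and the explanation subsystem can be sketched as follows. The rules are hypothetical toy rules, not medical knowledge; the engine is a simple forward-chaining loop chosen for brevity, the explanation subsystem is reduced to a trace of the rules that fired, and knowledge acquisition is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset[str]  # antecedent: all conditions must hold (AND)
    conclusion: str             # consequent


# Knowledge base: domain knowledge, kept separate from any particular case.
KNOWLEDGE_BASE = [
    Rule("R1", frozenset({"fever", "cough"}), "respiratory_infection"),
    Rule("R2", frozenset({"respiratory_infection", "chest_pain"}), "suspect_pneumonia"),
]


def infer(case_facts: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Inference engine: apply the rules to the case data until nothing new
    can be derived. Returns the facts and a trace of the rules that fired."""
    facts = set(case_facts)
    trace: list[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= facts and rule.conclusion not in facts:
                facts.add(rule.conclusion)
                trace.append(f"{rule.name}: {sorted(rule.conditions)} -> {rule.conclusion}")
                changed = True
    return facts, trace


# Case data: the particular facts captured about the case under study.
facts, explanation = infer({"fever", "cough", "chest_pain"}, KNOWLEDGE_BASE)
for step in explanation:  # explanation subsystem: how was the conclusion reached?
    print(step)
```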

A key issue is the representation of knowledge. Different forms have different domains of suitability. Rule-based representations are common in many expert systems, particularly small ones. Much knowledge is declarative rather than procedural: it can be cast in the mould of propositions linked together by logical relationships. Such logic-based rules are known as if-then rules (if antecedent condition, then consequent) and use the logical connectives AND, OR and NOT.
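A rule antecedent built with AND, OR and NOT can be represented as a nested expression and evaluated against the facts established for a case. In this sketch, the rule and its proposition names are hypothetical, and NOT is interpreted under a closed-world assumption: a proposition that is not among the known facts is treated as false.

```python
from typing import Union

# A condition is a proposition name or a tuple ("AND" | "OR" | "NOT", sub-conditions...).
Condition = Union[str, tuple]


def holds(cond: Condition, facts: set[str]) -> bool:
    """Evaluate an if-then rule antecedent against the known facts."""
    if isinstance(cond, str):
        return cond in facts           # closed world: unknown means false
    op, *args = cond
    if op == "AND":
        return all(holds(a, facts) for a in args)
    if op == "OR":
        return any(holds(a, facts) for a in args)
    if op == "NOT":
        (arg,) = args
        return not holds(arg, facts)
    raise ValueError(f"unknown connective: {op}")


# Hypothetical rule: IF (income_stable AND NOT in_default) OR has_guarantor THEN eligible
rule = (("OR", ("AND", "income_stable", ("NOT", "in_default")), "has_guarantor"), "eligible")

antecedent, consequent = rule
facts = {"income_stable"}
if holds(antecedent, facts):
    facts.add(consequent)              # fire the rule: assert the consequent
print(sorted(facts))  # ['eligible', 'income_stable']
```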

This structure may conveniently be combined with the representation of attributes and values of objects (A-V pairs) within the rules themselves. For large systems, an Object-Attribute-Value (O-A-V) representation appears to be a better strategy. Semantic networks portray the connections between objects and concepts and are very flexible. Formally, they consist of nodes connected by directed links describing their relationships. Objects are nodes, and they may be characterized by attribute and value nodes. Has-a, is-a and is-a-value-of are standard names of links associated with object, attribute and value nodes. The examples in Figure 1.3 show how flexible this concept is and how it makes it possible to represent inheritance hierarchies: if a class of objects has an attribute, any object that belongs to this class is characterized by the same attribute.

Figure 1.3. Examples of relations between objects, classes of objects and attributes

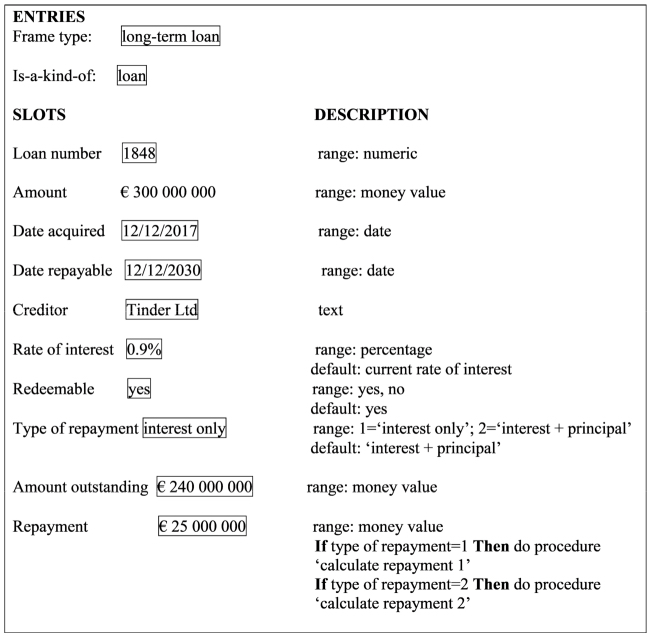
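A semantic network can be stored as a list of (node, link, node) triples using the has-a, is-a and is-a-value-of links named above; inheritance then amounts to following is-a links upward. The nodes below are hypothetical and do not reproduce Figure 1.3, and the sketch assumes that the is-a links contain no cycles.

```python
# Semantic network as (node, link, node) triples; node names are hypothetical.
TRIPLES = [
    ("canary", "is-a", "bird"),
    ("bird", "is-a", "animal"),
    ("bird", "has-a", "wings"),
    ("animal", "has-a", "skin"),
    ("canary", "has-a", "color"),
    ("yellow", "is-a-value-of", "color"),
]


def parents(node: str) -> list[str]:
    """Classes reached from a node through an is-a link."""
    return [o for s, link, o in TRIPLES if s == node and link == "is-a"]


def attributes(node: str) -> set[str]:
    """Attributes of a node, including those inherited along is-a links."""
    found = {o for s, link, o in TRIPLES if s == node and link == "has-a"}
    for parent in parents(node):
        found |= attributes(parent)   # a member of a class has the class's attributes
    return found


def values(attribute: str) -> list[str]:
    """Value nodes attached to an attribute node."""
    return [s for s, link, o in TRIPLES if o == attribute and link == "is-a-value-of"]


print(sorted(attributes("canary")))  # ['color', 'skin', 'wings']
print(values("color"))               # ['yellow']
```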

It is assumed that experts rationally represent their knowledge as various interconnected concepts (frames). Frames consist of slots in which entries are made for a particular instance of the frame type. The ability of frames to fill slots with default values, to reference procedures and to be linked to one another by pointers provides a powerful tool for describing knowledge. Frames also make it possible to switch to a different frame if an expected event does not materialize.

The objective of Minsky’s frame theory was to explain human thought patterns, and its scope is more far-reaching than AI alone. A language called FRL (Frame Representation Language) was developed as a simple extension of LISP.

To illustrate the concept of a frame, Figure 1.4 captures the main features of a frame for a long-term loan.

Figure 1.4. A frame for a long-term loan
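Since the content of Figure 1.4 is not reproduced here, the following sketch uses hypothetical slots (`annual_rate`, `term_years`, `principal`, `monthly_payment`) to show the mechanisms described above: slots filled with values, default values inherited through a pointer to a more general frame, and an attached “if-needed” procedure computed only when its slot is requested. The standard annuity formula used for the payment is an assumption for illustration.

```python
from typing import Any, Optional


class Frame:
    """A frame: named slots holding values or procedures, linked by a
    pointer to a more general parent frame that supplies defaults."""

    def __init__(self, name: str, parent: Optional["Frame"] = None, **slots: Any) -> None:
        self.name = name
        self.parent = parent
        self.slots = dict(slots)

    def get(self, slot: str, origin: Optional["Frame"] = None) -> Any:
        # `origin` is the frame originally queried, so inherited procedures
        # compute their result from that frame's own slot values.
        origin = origin if origin is not None else self
        if slot in self.slots:
            value = self.slots[slot]
            return value(origin) if callable(value) else value
        if self.parent is not None:
            return self.parent.get(slot, origin)   # follow the pointer for a default
        raise KeyError(slot)


def monthly_payment(frame: Frame) -> float:
    """If-needed procedure (assumed annuity formula)."""
    p = frame.get("principal")
    r = frame.get("annual_rate") / 12
    n = frame.get("term_years") * 12
    return p * r / (1 - (1 + r) ** -n)


# Hypothetical frames: generic loan -> long-term loan -> one particular loan.
loan = Frame("loan", annual_rate=0.05, monthly_payment=monthly_payment)
long_term_loan = Frame("long-term loan", parent=loan, term_years=20)
my_loan = Frame("loan #1", parent=long_term_loan, principal=200_000)

print(my_loan.get("annual_rate"))              # 0.05 (default inherited from "loan")
print(round(my_loan.get("monthly_payment"), 2))
```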

Different principles of inference are used depending on whether reasoning deals with certainty or involves some degree of uncertainty, as is often the case when complex thinking processes are associated with expertise. In the latter case, techniques of reasoning with certainty and fuzzy set theory interact. Fuzzy set theory interprets predicates as denoting fuzzy sets. A fuzzy set is a set of objects of which some are definitely in the set, others are definitely not, and others are only probably or possibly within it; the membership of each object is expressed by a grade between 0 and 1. The issue of modeling uncertain knowledge has also been addressed by the development of what are called neural networks.
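Graded membership can be sketched with a piecewise-linear membership function. The thresholds of the hypothetical fuzzy sets `tall` and `heavy` are assumptions; the min, max and complement operators are Zadeh’s standard fuzzy counterparts of AND, OR and NOT.

```python
def ramp(x: float, low: float, high: float) -> float:
    """Membership grade rising linearly from 0 at `low` to 1 at `high`."""
    if x <= low:
        return 0.0   # definitely not in the set
    if x >= high:
        return 1.0   # definitely in the set
    return (x - low) / (high - low)   # partially in the set


def tall(height_cm: float) -> float:
    return ramp(height_cm, 160.0, 190.0)   # assumed thresholds


def heavy(weight_kg: float) -> float:
    return ramp(weight_kg, 60.0, 100.0)    # assumed thresholds


def fuzzy_and(a: float, b: float) -> float:
    return min(a, b)


def fuzzy_or(a: float, b: float) -> float:
    return max(a, b)


def fuzzy_not(a: float) -> float:
    return 1.0 - a


for h in (150.0, 175.0, 195.0):
    print(h, tall(h))   # 150.0 0.0 / 175.0 0.5 / 195.0 1.0

# Degree to which a 181 cm, 90 kg person is "tall AND heavy".
print(round(fuzzy_and(tall(181.0), heavy(90.0)), 2))
```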

Inference control strategies ensure that the inference engine carries out reasoning in an exhaustive and efficient manner. Backward-chaining (or goal-driven) systems attempt to prove selected goals by establishing the facts needed to prove them; these facts may themselves become secondary goals. This approach contrasts with forward-chaining (or data-driven) systems, which attempt to derive conclusions from facts already established. Many major expert systems, MYCIN for example, use backward chaining.

Backward-chaining systems postulate a conclusion and then determine whether or not that conclusion is true. For instance, if the name of an illness is postulated, a rule that has that illness as its conclusion is sought. If such a rule is found, the system checks whether all of its preconditions are fulfilled, i.e. compatible with the symptoms. If not, another hypothesis is adopted and the procedure is repeated until the chosen hypothesis holds.
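The diagnostic procedure described above can be sketched as a recursive goal prover. The rules, illnesses and symptoms are hypothetical toy data; each hypothesis maps to one or more alternative lists of preconditions, a precondition that is not an observed symptom becomes a secondary goal, and the rule base is assumed to be acyclic.

```python
from typing import Optional

# Conclusion -> alternative precondition lists of the rules concluding it (hypothetical).
RULES: dict[str, list[list[str]]] = {
    "flu": [["fever", "muscle_aches"]],
    "common_cold": [["runny_nose", "no_fever"]],
    "no_fever": [["temperature_normal"]],
}


def prove(goal: str, facts: set[str]) -> bool:
    """A goal holds if it is an established fact, or if some rule concluding it
    has all of its preconditions provable as secondary goals."""
    if goal in facts:
        return True
    return any(
        all(prove(p, facts) for p in preconditions)
        for preconditions in RULES.get(goal, [])
    )


def diagnose(hypotheses: list[str], symptoms: set[str]) -> Optional[str]:
    """Postulate each hypothesis in turn until one can be confirmed."""
    for hypothesis in hypotheses:
        if prove(hypothesis, symptoms):
            return hypothesis
    return None   # no hypothesis holds


print(diagnose(["flu", "common_cold"], {"runny_nose", "temperature_normal"}))  # common_cold
```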

A survey of the state of the art in AI hardware and software as of the mid-1980s is compiled in a book edited by Pierre Vandeginste (Vandeginste 1987).

Development of neural networks

Neural networks (also called connectionist networks) attracted interest because of their supposed similarities with information processing in the human brain. Why “supposed”? Because we still have little understanding of how the brains of living animals actually work.

A good overview of the most influential neural network research can be obtained by combining the papers edited by Anderson and Rosenfeld (1988) with the historical remarks by Rumelhart and McClelland (1986), Hinton and Anderson (1981), Nilsson (1965), Duda and Hart (1973) and Hecht-Nielsen (1990).

A neural network can serve as the knowledge base of an expert system that performs classification tasks. The major advantage of this approach is that learning algorithms can rely on training examples to generate expert systems automatically. Any decision task is a candidate for a neural network expert system if training data is available. The availability of data lakes and computing power makes it possible to envision the day when short-lived expert systems for temporary tasks will be constructed as customized simulation models of human information processing for individual use.

Briefly, a neural network model consists of a set of computational units (called cells) and a set of one-way data connections joining the units. At certain times, a unit analyzes its input signals and delivers as its output a computed signed number called activation. The activation signal is passed along the connections to other units. Each connection carries a signed number called weight. The sign (+ or -) of this weight determines whether an activation traveling along the connection pushes the receiving cell to produce a similar or a different activation output signal. The aggregated level of all incoming signals received by a cell determines the output, or activation, that this cell delivers to other cells. An example is shown in Figure 1.5.

Figure 1.5. Activation (output) computed for a single cell

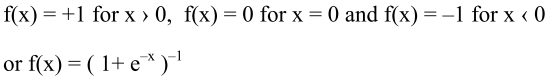

This type of cell is called semi-linear because its output depends on the linear weighted sum $S_i$ of all the incoming signals $a_j$ from the cells $u_j$ connected to the cell $u_i$, each signal being multiplied by the weight $w_{ij}$ of its connection:

$$S_i = \sum_j w_{ij}\, a_j, \qquad a_i = f(S_i)$$

The activation function $f$ is usually nonlinear, bounded and piecewise differentiable, such as the logistic (sigmoid) function:

$$f(x) = \frac{1}{1 + e^{-x}}$$
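The computation of a single semi-linear cell, $a_i = f(S_i)$ with $S_i = \sum_j w_{ij} a_j$, can be sketched as follows. The weights and inputs are arbitrary illustrative values; the logistic function is used by default, and the hyperbolic tangent is shown as an alternative whose output is a signed number.

```python
import math
from typing import Callable, Sequence


def logistic(x: float) -> float:
    """Bounded, differentiable activation function with values in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def activation(weights: Sequence[float], inputs: Sequence[float],
               f: Callable[[float], float] = logistic) -> float:
    """Semi-linear cell: weighted sum of incoming activations, then f."""
    s = sum(w * a for w, a in zip(weights, inputs))   # S_i = sum_j w_ij * a_j
    return f(s)


weights = [0.5, -1.0, 2.0]   # negative weight: inhibitory connection
inputs = [1.0, 1.0, 0.25]    # activations a_j of the connected cells

print(activation(weights, inputs))               # 0.5  (S_i = 0)
print(activation(weights, inputs, f=math.tanh))  # 0.0  (signed alternative)
print(activation(weights, [0.0, 1.0, 0.0]))      # inhibited cell: below 0.5
```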

From a computational point of view, neural networks offer two salient features, namely learning and representation.

Learning

Machine learning refers to computer models that significantly improve their performance on the basis of their input data. Two kinds of techniques are used: supervised and unsupervised learning.

In supervised learning, a “teacher” supplies additional data that assesses how well a program is performing during a training phase and delivers recommendations to improve the performance of the learning algorithm. Learning proceeds iteratively. The most common form of supervised learning tries to reproduce human behavior specified by a set of training examples, each consisting of input data and the corresponding correct output.
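A minimal instance of supervised learning is the classic perceptron rule, used here as an illustration rather than as the technique of any specific system cited above. The training examples (the logical AND function) play the role of the teacher’s correct outputs, and each error adjusts the connection weights; the learning rate and the epoch limit are assumptions.

```python
# Training examples: (inputs, correct output) pairs for logical AND.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(w: list[float], b: float, x: tuple[int, int]) -> int:
    """Threshold cell: fire (1) if the weighted sum plus bias is positive."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(examples, epochs: int = 20, lr: float = 1.0):
    """Iteratively adjust the weights whenever the output differs from the target."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in examples:
            err = target - predict(w, b, x)   # the teacher's correction signal
            if err != 0:
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
                errors += 1
        if errors == 0:                        # every training example reproduced
            break
    return w, b


w, b = train_perceptron(AND_EXAMPLES)
print(w, b)
print([predict(w, b, x) for x, _ in AND_EXAMPLES])  # [0, 0, 0, 1]
```

The perceptron rule is guaranteed to converge only when the training examples are linearly separable, as they are for AND.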

Unsupervised learning is a one-shot algorithm. No performance evaluation is carried out: without any knowledge of what constitutes a correct or an incorrect answer, these systems are used to construct groups of similar input patterns. This is known as clustering.
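A single pass over the data, in keeping with the one-shot character described above, can be illustrated by the leader clustering algorithm: each input pattern joins the first cluster whose leader lies within a distance threshold; otherwise it founds a new cluster. The points and the threshold are assumptions, no correct answer is ever consulted, and the result depends on the order in which patterns are presented.

```python
import math


def leader_clustering(points: list[tuple[float, float]], threshold: float):
    """One-pass clustering: returns the cluster leaders and a label per point."""
    leaders: list[tuple[float, float]] = []
    labels: list[int] = []
    for p in points:
        for k, leader in enumerate(leaders):
            if math.dist(p, leader) <= threshold:   # close enough: join cluster k
                labels.append(k)
                break
        else:                                       # no leader nearby: new cluster
            leaders.append(p)
            labels.append(len(leaders) - 1)
    return leaders, labels


points = [(0.0, 0.0), (0.5, 0.2), (5.0, 5.0), (5.3, 4.8), (0.1, 0.4)]
leaders, labels = leader_clustering(points, threshold=1.0)
print(leaders)  # [(0.0, 0.0), (5.0, 5.0)]
print(labels)   # [0, 0, 1, 1, 0]
```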

Practically speaking, machine learning in neural networks consists of adjusting connection weights through a learning algorithm which is the critical mechanism of the whole system.

Knowledge representation

The issue is to define how to record whatever has been learned. In connectionist models, knowledge representation includes the network description of cells (nodes), the connection weights between the cells, and the semantic interpretations attached to the cells and their activations.

Once the learning process has been completed, it appears to be impossible for outside observers to trace back the various steps of reasoning as a sequence of instructions, which is our “natural” way of analyzing our decision processes. This lack of interpretability or “auditability” of the output of machine learning based on neural network techniques may result in a lack of trust in the results produced. When important risk management issues arise, such as data privacy, adherence to relevant protocols and cyber security, decision-makers have to be assured that applications do what they are intended to do. This kind of uncertainty may turn out to be a serious hurdle to the mass dissemination of neural network applications in critical contexts.

1.4.3.3.3. Current prospects

There are three main ways in which businesses and organizations can, now or in the near future, use AI to support their activities. AI is expected to play a pivotal role in what is called the digital transformation of our society.

Assisted intelligence

Assisted intelligence helps actors perform their tasks more efficiently. These tasks are clearly defined, rule-based and repeatable. They are found in clerical environments (billing, finance, regulatory compliance, receiving and processing customer orders, etc.) as well as in field operations (services to industries, maintenance and repair, etc.).