Among the algorithms of hierarchical clustering, there are two main types: bottom-up- and top-down-based algorithms. Top-down algorithms work on the next principle: at the beginning, all objects are placed in one cluster, which is then divided into smaller and smaller clusters. Bottom-up algorithms are more common than top-down ones. They place each object in a separate cluster at the beginning of the work, and then merge clusters into larger ones until all the objects in the dataset are contained in the one cluster, building a system of nested partitions. The results of such algorithms are usually presented in tree form, called a dendrogram. A classic example of such a tree is the tree of life, which describes the classification of animals and plants.

The main problem of hierarchical methods is the difficulty of determining the stop condition in such a way as to isolate natural clusters and, at the same time, prevent their excessive splitting. Another problem with hierarchical clustering methods is choosing the point of separation or merging of clusters. This choice is critical because after splitting or merging clusters at each subsequent step, the method will operate only on newly formed clusters. Therefore, the wrong choice of a merge or split point at any step can lead to poor-quality clustering. Also, hierarchical methods cannot be applied to large datasets, because deciding whether to divide or merge clusters requires a large number of objects and clusters to be analyzed, which leads to a significant computational complexity of the method.

There are several metrics or linkage criteria for cluster union used in hierarchical clustering methods, listed as follows:

- Single linkage (nearest neighbor distance): In this method, the distance between the two clusters is determined by the distance between the two closest objects (nearest neighbors) in different clusters. The resulting clusters tend to chain together.

- Complete linkage (distance between the most distant neighbors): In this method, the distances between clusters are determined by the largest distance between any two objects in different clusters (that is, the most distant neighbors). This method usually works very well when objects come from separate groups. If the clusters are elongated or their natural type is chained, then this method is unsuitable.

- Unweighted pairwise mean linkage: In this method, the distance between two different clusters is calculated as an average distance between all pairs of objects in them. This method is useful when objects form different groups, but it works equally well in the case of elongated (chained-type) clusters.

- Weighted pairwise mean linkage: This method is identical to the unweighted pairwise mean method, except that the size of the corresponding clusters (the number of objects contained in them) is used as a weighting factor in the calculations. Therefore, this method should be used when we assume unequal cluster sizes.

- Weighted centroid linkage: In this method, the distance between two clusters is defined as the distance between their centers of mass.

- Weighted centroid linkage (median): This method is identical to the previous one, except that the calculations use weights for the distance measured between cluster sizes. Therefore, if there are significant differences in cluster sizes, this method is preferable to the previous one.

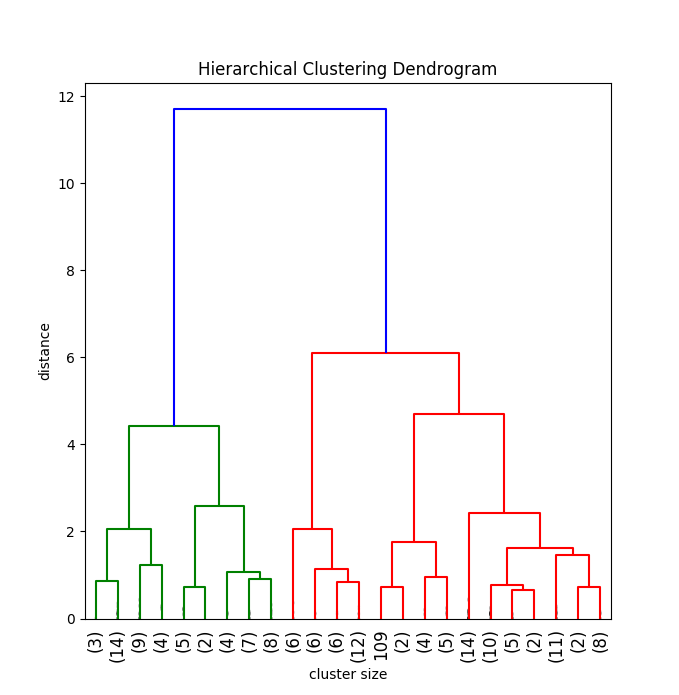

The following diagram displays a hierarchical clustering dendrogram:

The preceding diagram shows an example of a dendrogram for hierarchical clustering, and you can see how the number of clusters depends on the distance between objects. Larger distances lead to a smaller number of clusters.