The Shogun library implements multi-class logistic regression in the CMulticlassLogisticRegression class. This class has a single configurable parameter, z, which is the regularization coefficient. To select the best value for it, we use grid search with cross-validation. The following code snippets show this approach.

Assume we have the following train and test data:

Some<CDenseFeatures<DataType>> features;

Some<CMulticlassLabels> labels;

Some<CDenseFeatures<DataType>> test_features;

Some<CMulticlassLabels> test_labels;

As we decided to use a cross-validation process, let's define the required objects as follows:

// search for hyper-parameters

auto root = some<CModelSelectionParameters>();

// z - regularization parameter

CModelSelectionParameters* z = new CModelSelectionParameters("m_z");

root->append_child(z);

z->build_values(0.2, 1.0, R_LINEAR, 0.1);
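To make the search space concrete, the following minimal sketch enumerates the candidate values that a linear range from 0.2 to 1.0 with a step of 0.1 would contain. It is plain C++ and does not use Shogun; the `linear_grid` helper is a hypothetical name introduced only for illustration, and it assumes that both ends of the range are included.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of Shogun): enumerates the values
// min, min + step, ..., max of an inclusive linear range.
std::vector<double> linear_grid(double min, double max, double step) {
  assert(step > 0.0 && max >= min);
  // Round the number of steps so that floating-point error in
  // (max - min) / step does not drop the last value.
  const auto count =
      static_cast<std::size_t>(std::llround((max - min) / step)) + 1;
  std::vector<double> values(count);
  for (std::size_t i = 0; i < count; ++i) {
    // Multiply instead of accumulating to avoid error build-up.
    values[i] = min + static_cast<double>(i) * step;
  }
  return values;
}
```

Under these assumptions, `linear_grid(0.2, 1.0, 0.1)` yields nine candidates, so the grid search would evaluate nine settings of the regularization coefficient.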

First, we created a tree of configurable parameters from instances of the CModelSelectionParameters class. As we saw in Chapter 3, Measuring Performance and Selecting Models, the tree must always have a root node and a child node that carries the exact parameter name (here, m_z) and its value range. Every trainable model in the Shogun library has the print_model_params() method, which prints all model parameters available for automatic configuration with the CGridSearchModelSelection class, so it is useful for checking exact parameter names. Next, we set up the cross-validation objects, as shown in the following block:

index_t k = 3;

CStratifiedCrossValidationSplitting* splitting =

new CStratifiedCrossValidationSplitting(labels, k);

auto eval_criterium = some<CMulticlassAccuracy>();

auto log_reg = some<CMulticlassLogisticRegression>();

auto cross = some<CCrossValidation>(log_reg, features, labels, splitting, eval_criterium);

cross->set_num_runs(1);
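The idea behind a stratified split can be sketched in plain C++ as follows. This is an illustrative approximation and not Shogun's implementation: the `stratified_folds` helper is a hypothetical name, labels are assumed to be integer class IDs, and the assignment is deterministic, whereas practical implementations usually shuffle the samples first.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical helper (not part of Shogun): assigns every sample index to
// one of k folds so that each class is spread evenly across the folds.
std::vector<std::vector<std::size_t>> stratified_folds(
    const std::vector<int>& labels, std::size_t k) {
  assert(k > 0);
  // Group sample indices by class label.
  std::map<int, std::vector<std::size_t>> by_class;
  for (std::size_t i = 0; i < labels.size(); ++i) {
    by_class[labels[i]].push_back(i);
  }
  // Deal the indices of each class round-robin across the folds, so the
  // per-class counts of any two folds differ by at most one.
  std::vector<std::vector<std::size_t>> folds(k);
  std::size_t next = 0;
  for (const auto& entry : by_class) {
    for (std::size_t idx : entry.second) {
      folds[next].push_back(idx);
      next = (next + 1) % k;
    }
  }
  return folds;
}
```

Each fold then serves once as the validation subset while the remaining folds are used for training, which keeps the class proportions in every subset close to those of the full training set.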

We configured an instance of the CCrossValidation class, which is initialized with the model, the training features and labels, a splitting strategy, and an evaluation criterion. The splitting strategy is an instance of the CStratifiedCrossValidationSplitting class with k = 3 folds, and the evaluation criterion is an instance of the CMulticlassAccuracy class. The grid search itself is illustrated in the following code block:

auto model_selection = some<CGridSearchModelSelection>(cross, root);

CParameterCombination* best_params =

wrap(model_selection->select_model(false));

best_params->apply_to_machine(log_reg);

best_params->print_tree();
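Conceptually, a grid search with cross-validation evaluates every candidate value on every fold and keeps the value with the best mean score. The sketch below shows this loop in plain C++ under stated assumptions: `grid_search` and the `fold_score` callback are hypothetical names, the callback is assumed to train on all folds except the given one and return the validation score for it, and a higher score is assumed to be better (as with accuracy). It does not reproduce how Shogun implements the search internally.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical helper (not part of Shogun): returns the candidate with the
// highest mean score over all folds. fold_score(value, fold) is assumed to
// return the validation score of a model trained with `value` when `fold`
// is held out.
double grid_search(
    const std::vector<double>& candidates, std::size_t num_folds,
    const std::function<double(double, std::size_t)>& fold_score) {
  assert(!candidates.empty() && num_folds > 0);
  double best_value = candidates.front();
  double best_mean = 0.0;
  bool first = true;
  for (double value : candidates) {
    // Average the score over all folds for this candidate.
    double sum = 0.0;
    for (std::size_t fold = 0; fold < num_folds; ++fold) {
      sum += fold_score(value, fold);
    }
    const double mean = sum / static_cast<double>(num_folds);
    if (first || mean > best_mean) {
      best_mean = mean;
      best_value = value;
      first = false;
    }
  }
  return best_value;
}
```

The cost of this approach grows with the number of candidates multiplied by the number of folds, which is why a small grid and k = 3 keep the search inexpensive here.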

After we configured the cross-validation object, we used it along with the parameter tree to initialize an instance of the CGridSearchModelSelection class, and then we called its select_model() method to search for the best model parameters.

This method returned an instance of the CParameterCombination class, whose apply_to_machine() method we used to initialize the model with the best parameter values found. We then trained and evaluated the model, as illustrated in the following code block:

// train

log_reg->set_labels(labels);

log_reg->train(features);

// evaluate model on test data

auto new_labels = wrap(log_reg->apply_multiclass(test_features));

// estimate accuracy

auto accuracy = eval_criterium->evaluate(new_labels, test_labels);

// process results

auto feature_matrix = test_features->get_feature_matrix();

for (index_t i = 0; i < new_labels->get_num_labels(); ++i) {

auto label_idx_pred = new_labels->get_label(i);

auto vector = feature_matrix.get_column(i);

...

}

After we found the best parameters, we trained our model on the full training dataset and evaluated it on the test set. The CMulticlassLogisticRegression class has a method named apply_multiclass(), which we used to evaluate the model on the test data. This method returned an object of the CMulticlassLabels class, and we used its get_label() method to access the predicted label values.
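The accuracy metric used for the evaluation is the fraction of samples whose predicted label matches the true label. A minimal plain C++ sketch of that computation follows; `multiclass_accuracy` is a hypothetical helper that assumes integer class labels, and it is not the Shogun implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of Shogun): fraction of predictions that
// match the expected labels.
double multiclass_accuracy(const std::vector<int>& predicted,
                           const std::vector<int>& expected) {
  assert(predicted.size() == expected.size() && !predicted.empty());
  std::size_t correct = 0;
  for (std::size_t i = 0; i < predicted.size(); ++i) {
    if (predicted[i] == expected[i]) {
      ++correct;
    }
  }
  return static_cast<double>(correct) /
         static_cast<double>(predicted.size());
}
```

For example, three correct predictions out of four give an accuracy of 0.75.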

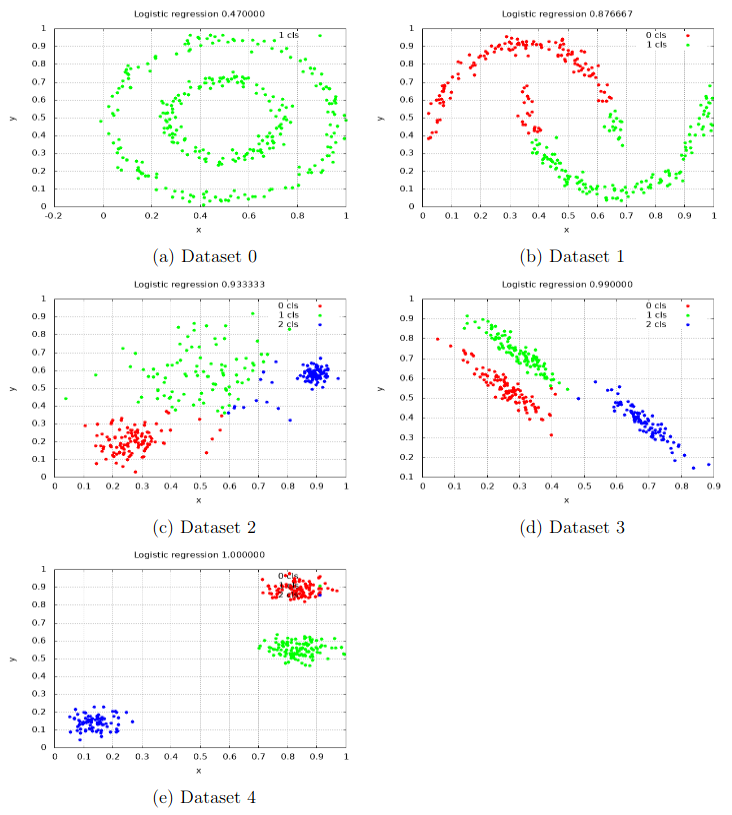

The following screenshot shows the results of applying the Shogun implementation of the logistic regression algorithm to our datasets:

Notice that there are classification errors in Dataset 0, Dataset 1, and Dataset 2, while the other datasets were classified almost correctly.