The following code sample shows how to use Dlib's SVM algorithm implementation for multi-class classification:

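```cpp
#include <dlib/svm_threaded.h>

using namespace dlib;

// Samples, Labels, SampleType, and DataType are assumed to be type aliases
// defined elsewhere, e.g. SampleType = matrix<DataType, 0, 1>,
// Samples = std::vector<SampleType>, Labels = std::vector<DataType>.
void SVMClassification(const Samples& samples,
                       const Labels& labels,
                       const Samples& test_samples,
                       const Labels& test_labels) {
  using OVOtrainer = one_vs_one_trainer<any_trainer<SampleType>>;
  using KernelType = radial_basis_kernel<SampleType>;

  // Binary nu-SVM trainer configured with an RBF kernel (gamma = 0.1)
  svm_nu_trainer<KernelType> svm_trainer;
  svm_trainer.set_kernel(KernelType(0.1));

  // One-vs-one wrapper that builds a multi-class classifier
  // from the binary SVM trainer
  OVOtrainer trainer;
  trainer.set_trainer(svm_trainer);

  one_vs_one_decision_function<OVOtrainer> df = trainer.train(samples, labels);

  // process results and estimate accuracy
  DataType accuracy = 0;
  for (size_t i = 0; i != test_samples.size(); i++) {
    auto vec = test_samples[i];
    auto class_idx = static_cast<size_t>(df(vec));
    if (static_cast<size_t>(test_labels[i]) == class_idx)
      ++accuracy;
    // ...
  }
  accuracy /= test_samples.size();
}
```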

This sample shows that the Dlib library also has a unified API for different algorithms; the main difference from the previous example is the binary classifier object. For SVM classification, we used an object of the svm_nu_trainer type, which we configured with a kernel object of the radial_basis_kernel type.
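To make the two configured pieces more concrete, the following is an illustrative sketch in plain C++17 without Dlib. It computes the radial basis kernel value, which Dlib documents for radial_basis_kernel as exp(-gamma * ||a - b||^2), and it shows one-vs-one majority voting, where each pairwise classifier casts one vote and the label with the most votes wins. The names `Vec`, `rbf_kernel`, `PairwiseClassifier`, and `predict_ovo` are hypothetical and are not part of Dlib. In the real sample, each pairwise classifier would be a trained nu-SVM decision function, not a hand-written rule.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <map>
#include <vector>

// Hypothetical stand-in for a Dlib column vector of features.
using Vec = std::vector<double>;

// RBF kernel: k(a, b) = exp(-gamma * ||a - b||^2).
// The value is 1 for identical vectors and decays toward 0 with distance;
// a larger gamma makes the decay faster.
double rbf_kernel(const Vec& a, const Vec& b, double gamma) {
  assert(a.size() == b.size());
  double dist2 = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i) {
    const double d = a[i] - b[i];
    dist2 += d * d;
  }
  return std::exp(-gamma * dist2);
}

// Hypothetical binary classifier for one pair of classes:
// prefers_first(x) returns true to vote for `first`, false for `second`.
struct PairwiseClassifier {
  int first;
  int second;
  std::function<bool(const Vec&)> prefers_first;
};

// One-vs-one prediction: each pairwise classifier casts one vote and the
// label with the most votes wins. Ties go to the smallest label, which is
// an arbitrary choice made only in this sketch.
int predict_ovo(const std::vector<PairwiseClassifier>& classifiers,
                const Vec& x) {
  std::map<int, int> votes;
  for (const auto& c : classifiers) {
    ++votes[c.prefers_first(x) ? c.first : c.second];
  }
  int best_label = -1;
  int best_votes = -1;
  for (const auto& entry : votes) {
    if (entry.second > best_votes) {
      best_label = entry.first;
      best_votes = entry.second;
    }
  }
  return best_label;
}
```

With N classes, this scheme needs N * (N - 1) / 2 pairwise classifiers, for example 3 for 3 classes. For a quick check, each pairwise rule can simply prefer the class whose hypothetical prototype point is more similar to the sample under `rbf_kernel` with gamma = 0.1, the same value used in the Dlib sample.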

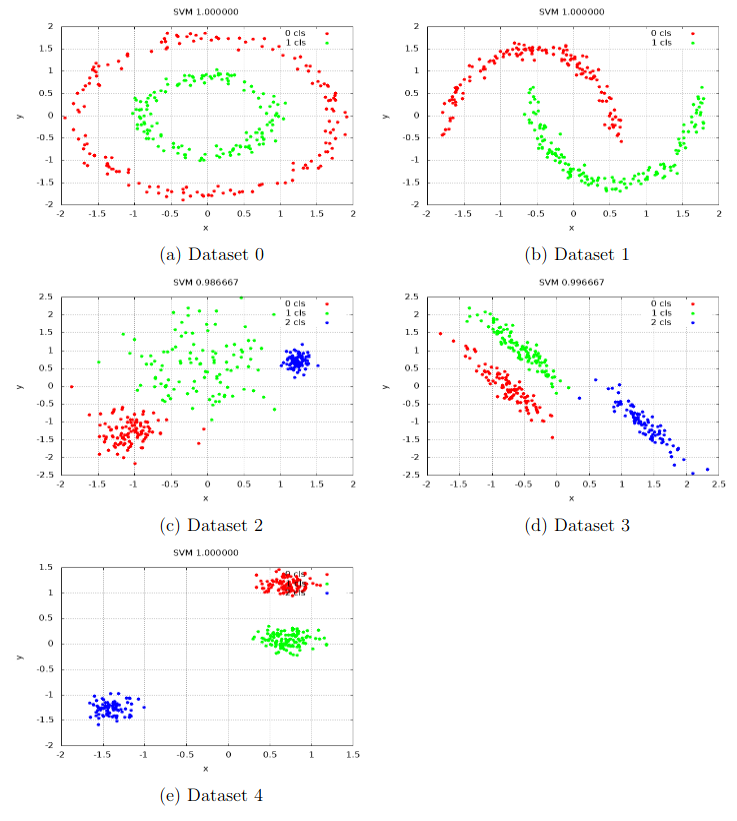

The following screenshot shows the results of applying the Dlib implementation of the KRR algorithm to our datasets:

You can see that the Dlib implementation of the SVM algorithm also classified all datasets correctly without additional configuration. Remember that the Shogun implementation of the same algorithm produced incorrect classifications in some cases.