10.2. Controlling concurrent access

Databases (and other transactional systems) attempt to ensure transaction isolation, meaning that, from the point of view of each concurrent transaction, it appears that no other transactions are in progress. Traditionally, this has been implemented with locking. A transaction may place a lock on a particular item of data in the database, temporarily preventing access to that item by other transactions. Some modern databases such as Oracle and PostgreSQL implement transaction isolation with multiversion concurrency control (MVCC) which is generally considered more scalable. We'll discuss isolation assuming a locking model; most of our observations are also applicable to multiversion concurrency, however.

How databases implement concurrency control is of the utmost importance in your Hibernate or Java Persistence application. Applications inherit the isolation guarantees provided by the database management system. For example, Hibernate never locks anything in memory. If you consider the many years of experience that database vendors have with implementing concurrency control, you'll see the advantage of this approach. On the other hand, some features in Hibernate and Java Persistence (either because you use them or by design) can improve the isolation guarantee beyond what is provided by the database.

We discuss concurrency control in several steps. We explore the lowest layer and investigate the transaction isolation guarantees provided by the database. Then, we look at Hibernate and Java Persistence features for pessimistic and optimistic concurrency control at the application level, and what other isolation guarantees Hibernate can provide.

10.2.1. Understanding database-level concurrency

Your job as a Hibernate application developer is to understand the capabilities of your database and how to change the database isolation behavior if your particular scenario (and your data integrity requirements) call for it. Let's take a step back. If we're talking about isolation, you may assume that two things are either isolated or not isolated; there is no grey area in the real world. When we talk about database transactions, however, complete isolation comes at a high price. Several isolation levels are available, which, naturally, weaken full isolation but increase performance and scalability of the system.

First, let's look at several phenomena that may occur when you weaken full transaction isolation. The ANSI SQL standard defines the standard transaction isolation levels in terms of which of these phenomena are permissible in a database management system:

A lost update occurs if two transactions both update a row and then the second transaction aborts, causing both changes to be lost. This occurs in systems that don't implement locking. The concurrent transactions aren't isolated. This is shown in figure 10.2.

Figure 10-2. Lost update: two transactions update the same data without locking.

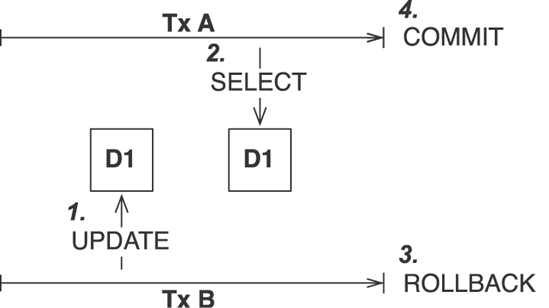

A dirty read occurs if one transaction reads changes made by another transaction that has not yet been committed. This is dangerous, because the changes made by the other transaction may later be rolled back, and invalid data may be written by the first transaction; see figure 10.3.

An unrepeatable read occurs if a transaction reads a row twice and reads different state each time. For example, another transaction may have written to the row and committed between the two reads, as shown in figure 10.4.

A special case of unrepeatable read is the second lost updates problem. Imagine that two concurrent transactions both read a row: One writes to it and commits, and then the second writes to it and commits. The changes made by the first writer are lost. This issue is especially relevant if you think about application conversations that need several database transactions to complete. We'll explore this case later in more detail.

Figure 10-3. Dirty read: transaction A reads uncommitted data.

Figure 10-4. Unrepeatable read: transaction A executes two nonrepeatable reads.

A phantom read is said to occur when a transaction executes a query twice, and the second result set includes rows that weren't visible in the first result set or rows that have been deleted. (It need not necessarily be exactly the same query.) This situation is caused by another transaction inserting or deleting rows between the execution of the two queries, as shown in figure 10.5.

Figure 10-5. Phantom read: transaction A reads new data in the second select.

Now that you understand all the bad things that can occur, we can define the transaction isolation levels and see what problems they prevent.

ANSI transaction isolation levels

The standard isolation levels are defined by the ANSI SQL standard, but they aren't peculiar to SQL databases. JTA defines exactly the same isolation levels, and you'll use these levels to declare your desired transaction isolation later. With increased levels of isolation comes higher cost and serious degradation of performance and scalability:

A system that permits dirty reads but not lost updates is said to operate in read uncommitted isolation. One transaction may not write to a row if another uncommitted transaction has already written to it. Any transaction may read any row, however. This isolation level may be implemented in the database-management system with exclusive write locks.

A system that permits unrepeatable reads but not dirty reads is said to implement read committed transaction isolation. This may be achieved by using shared read locks and exclusive write locks. Reading transactions don't block other transactions from accessing a row. However, an uncommitted writing transaction blocks all other transactions from accessing the row.

A system operating in repeatable read isolation mode permits neither unrepeatable reads nor dirty reads. Phantom reads may occur. Reading transactions block writing transactions (but not other reading transactions), and writing transactions block all other transactions.

Serializable provides the strictest transaction isolation. This isolation level emulates serial transaction execution, as if transactions were executed one after another, serially, rather than concurrently. Serializability may not be implemented using only row-level locks. There must instead be some other mechanism that prevents a newly inserted row from becoming visible to a transaction that has already executed a query that would return the row.
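The four levels and the phenomena each one still permits can be summarized in a small lookup. The following is a minimal sketch in plain Java; the `Phenomenon` and `IsolationLevel` enums are hypothetical names introduced for illustration and are not part of JDBC, JTA, or Hibernate.

```java
import java.util.EnumSet;
import java.util.Set;

// Phenomena described in this section (lost updates are not permitted at any level).
enum Phenomenon { DIRTY_READ, UNREPEATABLE_READ, PHANTOM_READ }

// Hypothetical enum: each level lists the phenomena it still permits.
enum IsolationLevel {
    READ_UNCOMMITTED(EnumSet.allOf(Phenomenon.class)),
    READ_COMMITTED(EnumSet.of(Phenomenon.UNREPEATABLE_READ, Phenomenon.PHANTOM_READ)),
    REPEATABLE_READ(EnumSet.of(Phenomenon.PHANTOM_READ)),
    SERIALIZABLE(EnumSet.noneOf(Phenomenon.class));

    private final Set<Phenomenon> permitted;

    IsolationLevel(Set<Phenomenon> permitted) {
        this.permitted = permitted;
    }

    boolean permits(Phenomenon phenomenon) {
        return permitted.contains(phenomenon);
    }
}

class IsolationLevelDemo {
    public static void main(String[] args) {
        // Print the full matrix of levels and phenomena.
        for (IsolationLevel level : IsolationLevel.values()) {
            for (Phenomenon phenomenon : Phenomenon.values()) {
                System.out.println(level + " permits " + phenomenon + ": "
                        + level.permits(phenomenon));
            }
        }
    }
}
```

Reading the matrix from top to bottom shows that each stricter level removes exactly one phenomenon from the permitted set.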

How exactly the locking system is implemented in a DBMS varies significantly; each vendor has a different strategy. You should study the documentation of your DBMS to find out more about the locking system, how locks are escalated (from row-level, to pages, to whole tables, for example), and what impact each isolation level has on the performance and scalability of your system.

It's nice to know how all these technical terms are defined, but how does that help you choose an isolation level for your application?

Choosing an isolation level

Developers (ourselves included) are often unsure what transaction isolation level to use in a production application. Too great a degree of isolation harms scalability of a highly concurrent application. Insufficient isolation may cause subtle, unreproducible bugs in an application that you'll never discover until the system is working under heavy load.

Note that we refer to optimistic locking (with versioning) in the following explanation, a concept explained later in this chapter. You may want to skip this section and come back when it's time to decide on an isolation level for your application. Picking the correct isolation level is, after all, highly dependent on your particular scenario. Read the following discussion as a set of recommendations, not as rules carved in stone.

Hibernate tries hard to be as transparent as possible regarding transactional semantics of the database. Nevertheless, caching and optimistic locking affect these semantics. What is a sensible database isolation level to choose in a Hibernate application?

First, eliminate the read uncommitted isolation level. It's extremely dangerous to use one transaction's uncommitted changes in a different transaction. The rollback or failure of one transaction will affect other concurrent transactions. Rollback of the first transaction could bring other transactions down with it, or perhaps even cause them to leave the database in an incorrect state. It's even possible that changes made by a transaction that ends up being rolled back could be committed anyway, because they could be read and then propagated by another transaction that is successful!

Secondly, most applications don't need serializable isolation (phantom reads aren't usually problematic), and this isolation level tends to scale poorly. Few existing applications use serializable isolation in production, but rather rely on pessimistic locks (see next sections) that effectively force a serialized execution of operations in certain situations.

This leaves you a choice between read committed and repeatable read. Let's first consider repeatable read. This isolation level eliminates the possibility that one transaction can overwrite changes made by another concurrent transaction (the second lost updates problem) if all data access is performed in a single atomic database transaction. A read lock held by a transaction prevents any write lock a concurrent transaction may wish to obtain. This is an important issue, but enabling repeatable read isn't the only way to resolve it.

Let's assume you're using versioned data, something that Hibernate can do for you automatically. The combination of the (mandatory) persistence context cache and versioning already gives you most of the nice features of repeatable read isolation. In particular, versioning prevents the second lost updates problem, and the persistence context cache also ensures that the state of the persistent instances loaded by one transaction is isolated from changes made by other transactions. So, read-committed isolation for all database transactions is acceptable if you use versioned data.

Repeatable read provides more reproducibility for query result sets (only for the duration of the database transaction); but because phantom reads are still possible, that doesn't appear to have much value. You can obtain a repeatable-read guarantee explicitly in Hibernate for a particular transaction and piece of data (with a pessimistic lock).

Setting the transaction isolation level allows you to choose a good default locking strategy for all your database transactions. How do you set the isolation level?

Every JDBC connection to a database is in the default isolation level of the DBMS—usually read committed or repeatable read. You can change this default in the DBMS configuration. You may also set the transaction isolation for JDBC connections on the application side, with a Hibernate configuration option:

hibernate.connection.isolation = 4

Hibernate sets this isolation level on every JDBC connection obtained from a connection pool before starting a transaction. The sensible values for this option are as follows (you may also find them as constants in java.sql.Connection):

1—Read uncommitted isolation

2—Read committed isolation

4—Repeatable read isolation

8—Serializable isolation
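The numeric values correspond to the `TRANSACTION_*` constants in `java.sql.Connection`. As a small sketch, the following helper translates a configured value back into a readable name; the class `IsolationConstants` and the method `describe` are hypothetical names used only for this illustration.

```java
import java.sql.Connection;

class IsolationConstants {
    // Maps the numeric configuration value to the name of the isolation level.
    static String describe(int level) {
        switch (level) {
            case Connection.TRANSACTION_READ_UNCOMMITTED:
                return "read uncommitted";
            case Connection.TRANSACTION_READ_COMMITTED:
                return "read committed";
            case Connection.TRANSACTION_REPEATABLE_READ:
                return "repeatable read";
            case Connection.TRANSACTION_SERIALIZABLE:
                return "serializable";
            default:
                // Anything else is not one of the four values listed above.
                throw new IllegalArgumentException("Unknown isolation level: " + level);
        }
    }

    public static void main(String[] args) {
        // The value used in the configuration example above.
        System.out.println(describe(4));
    }
}
```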

Note that Hibernate never changes the isolation level of connections obtained from an application server-provided database connection in a managed environment! You can change the default isolation using the configuration of your application server. (The same is true if you use a stand-alone JTA implementation.)

As you can see, setting the isolation level is a global option that affects all connections and transactions. From time to time, it's useful to specify a more restrictive lock for a particular transaction. Hibernate and Java Persistence rely on optimistic concurrency control, and both allow you to obtain additional locking guarantees with version checking and pessimistic locking.

10.2.2. Optimistic concurrency control

An optimistic approach always assumes that everything will be OK and that conflicting data modifications are rare. Optimistic concurrency control raises an error only at the end of a unit of work, when data is written. Multiuser applications usually default to optimistic concurrency control and database connections with a read-committed isolation level. Additional isolation guarantees are obtained only when appropriate; for example, when a repeatable read is required. This approach guarantees the best performance and scalability.

Understanding the optimistic strategy

To understand optimistic concurrency control, imagine that two transactions read a particular object from the database, and both modify it. Thanks to the read-committed isolation level of the database connection, neither transaction will run into any dirty reads. However, reads are still nonrepeatable, and updates may also be lost. This is a problem you'll face when you think about conversations, which are atomic transactions from the point of view of your users. Look at figure 10.6.

Figure 10-6. Conversation B overwrites changes made by conversation A.

Let's assume that two users select the same piece of data at the same time. The user in conversation A submits changes first, and the conversation ends with a successful commit of the second transaction. Some time later (maybe only a second), the user in conversation B submits changes. This second transaction also commits successfully. The changes made in conversation A have been lost, and (potentially worse) modifications of data committed in conversation B may have been based on stale information.

You have three choices for how to deal with lost updates in these second transactions in the conversations:

Last commit wins—Both transactions commit successfully, and the second commit overwrites the changes of the first. No error message is shown.

First commit wins—The transaction of conversation A is committed, and the user committing the transaction in conversation B gets an error message. The user must restart the conversation by retrieving fresh data and go through all steps of the conversation again with nonstale data.

Merge conflicting updates—The first modification is committed, and the transaction in conversation B aborts with an error message when it's committed. The user of the failed conversation B may however apply changes selectively, instead of going through all the work in the conversation again.

If you don't enable optimistic concurrency control, and by default it isn't enabled, your application runs with a last commit wins strategy. In practice, this issue of lost updates is frustrating for application users, because they may see all their work lost without an error message.
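To make the last commit wins outcome concrete, here is a minimal in-memory sketch. It involves no database and no Hibernate; the class `LastCommitWinsDemo` is hypothetical, and it assumes that each conversation writes back the complete row state it loaded earlier.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory stand-in for a single ITEM row; no database is involved.
class LastCommitWinsDemo {
    private final Map<String, String> row = new HashMap<>();

    LastCommitWinsDemo() {
        row.put("price", "9.99");
        row.put("description", "An Item");
    }

    // A conversation starts by loading a private copy of the row.
    Map<String, String> load() {
        return new HashMap<>(row);
    }

    // Without any version check, a commit overwrites whatever is stored.
    void commit(Map<String, String> state) {
        row.clear();
        row.putAll(state);
    }

    String current(String column) {
        return row.get(column);
    }

    public static void main(String[] args) {
        LastCommitWinsDemo db = new LastCommitWinsDemo();
        Map<String, String> a = db.load();   // conversation A reads
        Map<String, String> b = db.load();   // conversation B reads the same state

        a.put("price", "12.99");
        db.commit(a);                        // A commits first

        b.put("description", "A better item");
        db.commit(b);                        // B commits its stale copy, no error is raised

        System.out.println(db.current("price"));        // A's price change is gone
        System.out.println(db.current("description"));
    }
}
```

Conversation B never touched the price, yet its commit silently restores the old value, which is exactly the kind of lost work described above.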

Obviously, first commit wins is much more attractive. If the application user of conversation B commits, he gets an error message that reads, "Somebody already committed modifications to the data you're about to commit. You've been working with stale data. Please restart the conversation with fresh data." It's your responsibility to design and write the application to produce this error message and to direct the user to the beginning of the conversation. Hibernate and Java Persistence help you with automatic optimistic locking, so that you get an exception whenever a transaction tries to commit an object that has a conflicting updated state in the database.

Merge conflicting changes is a variation of first commit wins. Instead of displaying an error message that forces the user to go back all the way, you offer a dialog that allows the user to merge conflicting changes manually. This is the best strategy because no work is lost and application users are less frustrated by optimistic concurrency failures. However, providing a dialog to merge changes is much more time-consuming for you as a developer than showing an error message and forcing the user to repeat all the work. We'll leave it up to you whether you want to use this strategy.

Optimistic concurrency control can be implemented many ways. Hibernate works with automatic versioning.

Enabling versioning in Hibernate

Hibernate provides automatic versioning. Each entity instance has a version, which can be a number or a timestamp. Hibernate increments an object's version when it's modified, compares versions automatically, and throws an exception if a conflict is detected. Consequently, you add this version property to all your persistent entity classes to enable optimistic locking:

public class Item {

...

private int version;

...

}

You can also add a getter method; however, version numbers must not be modified by the application. The <version> property mapping in XML must be placed immediately after the identifier property mapping:

<class name="Item" table="ITEM">

<id .../>

<version name="version" access="field" column="OBJ_VERSION"/>

...

</class>

The version number is just a counter value—it doesn't have any useful semantic value. The additional column on the entity table is used by your Hibernate application. Keep in mind that all other applications that access the same database can (and probably should) also implement optimistic versioning and utilize the same version column. Sometimes a timestamp is preferred (or exists):

public class Item {

...

private Date lastUpdated;

...

}

<class name="Item" table="ITEM">

<id .../>

<timestamp name="lastUpdated"

access="field"

column="LAST_UPDATED"/>

...

</class>

In theory, a timestamp is slightly less safe, because two concurrent transactions may both load and update the same item in the same millisecond; in practice, this won't occur because a JVM usually doesn't have millisecond accuracy (you should check your JVM and operating system documentation for the guaranteed precision).

Furthermore, retrieving the current time from the JVM isn't necessarily safe in a clustered environment, where nodes may not be time synchronized. You can switch to retrieval of the current time from the database machine with the source="db" attribute on the <timestamp> mapping. Not all Hibernate SQL dialects support this (check the source of your configured dialect), and there is always the overhead of hitting the database for every increment.

We recommend that new projects rely on versioning with version numbers, not timestamps.

Optimistic locking with versioning is enabled as soon as you add a <version> or a <timestamp> property to a persistent class mapping. There is no other switch.

How does Hibernate use the version to detect a conflict?

Automatic management of versions

Every DML operation that involves the now versioned Item objects includes a version check. For example, assume that in a unit of work you load an Item from the database with version 1. You then modify one of its value-typed properties, such as the price of the Item. When the persistence context is flushed, Hibernate detects that modification and increments the version of the Item to 2. It then executes the SQL UPDATE to make this modification permanent in the database:

update ITEM set INITIAL_PRICE='12.99', OBJ_VERSION=2

where ITEM_ID=123 and OBJ_VERSION=1

If another concurrent unit of work updated and committed the same row, the OBJ_VERSION column no longer contains the value 1, and the row isn't updated. Hibernate checks the row count for this statement as returned by the JDBC driver—which in this case is the number of rows updated, zero—and throws a StaleObjectStateException. The state that was present when you loaded the Item is no longer present in the database at flush-time; hence, you're working with stale data and have to notify the application user. You can catch this exception and display an error message or a dialog that helps the user restart a conversation with the application.
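The check can be pictured with a small in-memory simulation. This is only a sketch of the idea, not Hibernate's implementation: `VersionedRow`, `StaleRowException`, and `OptimisticCheckDemo` are hypothetical names, and the `update` method merely mimics the effect of the versioned SQL UPDATE and its returned row count.

```java
// Hypothetical stand-in for one row of the ITEM table with an OBJ_VERSION column.
class VersionedRow {
    private String price;
    private int version;

    VersionedRow(String price, int version) {
        this.price = price;
        this.version = version;
    }

    // Mimics "update ... set price=?, version=expected+1 where version=expected".
    // Returns the number of rows updated: 1 on success, 0 if the version moved on.
    synchronized int update(String newPrice, int expectedVersion) {
        if (version != expectedVersion) {
            return 0;
        }
        price = newPrice;
        version = expectedVersion + 1;
        return 1;
    }

    synchronized int getVersion() {
        return version;
    }

    synchronized String getPrice() {
        return price;
    }
}

// Hypothetical exception; it plays the role of StaleObjectStateException here.
class StaleRowException extends RuntimeException {
    StaleRowException(String message) {
        super(message);
    }
}

class OptimisticCheckDemo {
    // Flushes a change made against the version that was loaded earlier.
    static void flush(VersionedRow row, String newPrice, int loadedVersion) {
        if (row.update(newPrice, loadedVersion) == 0) {
            throw new StaleRowException("Row was updated or deleted by another unit of work");
        }
    }

    public static void main(String[] args) {
        VersionedRow row = new VersionedRow("9.99", 1);
        flush(row, "12.99", 1);         // first unit of work succeeds, version becomes 2
        try {
            flush(row, "11.00", 1);     // second unit of work still holds version 1
        } catch (StaleRowException e) {
            System.out.println("Conflict detected: " + e.getMessage());
        }
    }
}
```

The second flush fails because it carries the version it loaded, not the version that is currently stored, so the first committed change survives.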

What modifications trigger the increment of an entity's version? Hibernate increments the version number (or the timestamp) whenever an entity instance is dirty. This includes all dirty value-typed properties of the entity, no matter if they're single-valued, components, or collections. Think about the relationship between User and BillingDetails, a one-to-many entity association: If a CreditCard is modified, the version of the related User isn't incremented. If you add or remove a CreditCard (or BankAccount) from the collection of billing details, the version of the User is incremented.

If you want to disable automatic increment for a particular value-typed property or collection, map it with the optimistic-lock="false" attribute. The inverse attribute makes no difference here. Even the version of an owner of an inverse collection is updated if an element is added or removed from the inverse collection.

As you can see, Hibernate makes it incredibly easy to manage versions for optimistic concurrency control. If you're working with a legacy database schema or existing Java classes, it may be impossible to introduce a version or timestamp property and column. Hibernate has an alternative strategy for you.

Versioning without version numbers or timestamps

If you don't have version or timestamp columns, Hibernate can still perform automatic versioning, but only for objects that are retrieved and modified in the same persistence context (that is, the same Session). If you need optimistic locking for conversations implemented with detached objects, you must use a version number or timestamp that is transported with the detached object.

This alternative implementation of versioning checks the current database state against the unmodified values of persistent properties at the time the object was retrieved (or the last time the persistence context was flushed). You may enable this functionality by setting the optimistic-lock attribute on the class mapping:

<class name="Item" table="ITEM" optimistic-lock="all">

<id .../>

...

</class>

The following SQL is now executed to flush a modification of an Item instance:

update ITEM set ITEM_PRICE='12.99' where ITEM_ID=123 and ITEM_PRICE='9.99' and ITEM_DESCRIPTION='An Item' and ... and SELLER_ID=45

Hibernate lists all columns and their last known nonstale values in the WHERE clause of the SQL statement. If any concurrent transaction has modified any of these values, or even deleted the row, this statement again returns with zero updated rows. Hibernate then throws a StaleObjectStateException.

Alternatively, Hibernate includes only the modified properties in the restriction (only ITEM_PRICE, in this example) if you set optimistic-lock="dirty". This means two units of work may modify the same object concurrently, and a conflict is detected only if they both modify the same value-typed property (or a foreign key value). In most cases, this isn't a good strategy for business entities. Imagine that two people modify an auction item concurrently: One changes the price, the other the description. Even if these modifications don't conflict at the lowest level (the database row), they may conflict from a business logic perspective. Is it OK to change the price of an item if the description changed completely? You also need to enable dynamic-update="true" on the class mapping of the entity if you want to use this strategy, because Hibernate can't generate the SQL for these dynamic UPDATE statements at startup.
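The difference between the two settings comes down to which columns end up in the WHERE clause. The following sketch builds a parameterized statement for both cases. It is an illustration under simplifying assumptions, not Hibernate's SQL generation: `OptimisticWhereClauseSketch` and `buildUpdate` are hypothetical names, the table and identifier column are hard-coded, and null values (which would need an "is null" comparison) are ignored.

```java
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

class OptimisticWhereClauseSketch {
    // Builds a parameterized UPDATE for the ITEM table.
    // allColumns: every mapped column; dirtyColumns: the columns that changed.
    static String buildUpdate(List<String> allColumns,
                              List<String> dirtyColumns,
                              boolean dirtyOnly) {
        StringJoiner set = new StringJoiner(", ");
        for (String column : dirtyColumns) {
            set.add(column + "=?");
        }
        StringJoiner where = new StringJoiner(" and ");
        where.add("ITEM_ID=?");
        // "all" compares every column with its old value, "dirty" only the changed ones.
        for (String column : dirtyOnly ? dirtyColumns : allColumns) {
            where.add(column + "=?");
        }
        return "update ITEM set " + set + " where " + where;
    }

    public static void main(String[] args) {
        List<String> all = Arrays.asList("ITEM_PRICE", "ITEM_DESCRIPTION", "SELLER_ID");
        List<String> dirty = Arrays.asList("ITEM_PRICE");
        System.out.println(buildUpdate(all, dirty, false)); // optimistic-lock="all"
        System.out.println(buildUpdate(all, dirty, true));  // optimistic-lock="dirty"
    }
}
```

Because the column list of the statement depends on what was modified, it can only be assembled at flush time, which is why the dirty strategy needs dynamic updates.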

We don't recommend versioning without a version or timestamp column in a new application; it's a little slower, it's more complex, and it doesn't work if you're using detached objects.

Optimistic concurrency control in a Java Persistence application is pretty much the same as in Hibernate.

Versioning with Java Persistence

The Java Persistence specification assumes that concurrent data access is handled optimistically, with versioning. To enable automatic versioning for a particular entity, you need to add a version property or field:

@Entity

public class Item {

...

@Version

@Column(name = "OBJ_VERSION")

private int version;

...

}

Again, you can expose a getter method but can't allow modification of a version value by the application. In Hibernate, a version property of an entity can be of any numeric type, including primitives, or a Date or Calendar type. The JPA specification considers only int, Integer, short, Short, long, Long, and java.sql.Timestamp as portable version types.

Because the JPA standard doesn't cover optimistic versioning without a version attribute, a Hibernate extension is needed to enable versioning by comparing the old and new state:

@Entity

@org.hibernate.annotations.Entity(

optimisticLock = org.hibernate.annotations.OptimisticLockType.ALL

)

public class Item {

...

}

You can also switch to OptimisticLockType.DIRTY if you only wish to compare modified properties during version checking. You then also need to set the dynamicUpdate attribute to true.

Java Persistence doesn't standardize which entity instance modifications should trigger an increment of the version. If you use Hibernate as a JPA provider, the defaults are the same—every value-typed property modification, including additions and removals of collection elements, triggers a version increment. At the time of writing, no Hibernate annotation for disabling of version increments on particular properties and collections is available, but a feature request for @OptimisticLock(excluded=true) exists. Your version of Hibernate Annotations probably includes this option.

Hibernate EntityManager, like any other Java Persistence provider, throws a javax.persistence.OptimisticLockException when a conflicting version is detected. This is the equivalent of the native StaleObjectStateException in Hibernate and should be treated accordingly.

We've now covered the basic isolation levels of a database connection, with the conclusion that you should almost always rely on read-committed guarantees from your database. Automatic versioning in Hibernate and Java Persistence prevents lost updates when two concurrent transactions try to commit modifications on the same piece of data. To deal with nonrepeatable reads, you need additional isolation guarantees.

10.2.3. Obtaining additional isolation guarantees

There are several ways to prevent nonrepeatable reads and upgrade to a higher isolation level.

We already discussed switching all database connections to a higher isolation level than read committed, but our conclusion was that this is a bad default when scalability of the application is a concern. You need better isolation guarantees only for a particular unit of work. Also remember that the persistence context cache provides repeatable reads for entity instances in persistent state. However, this isn't always sufficient.

For example, you may need repeatable read for scalar queries:

Session session = sessionFactory.openSession();

Transaction tx = session.beginTransaction();

Item i = (Item) session.get(Item.class, 123);

String description = (String)

session.createQuery("select i.description from Item i" +

" where i.id = :itemid")

.setParameter("itemid", i.getId() )

.uniqueResult();

tx.commit();

session.close();

This unit of work executes two reads. The first retrieves an entity instance by identifier. The second read is a scalar query, loading the description of the already loaded Item instance again. There is a small window in this unit of work in which a concurrently running transaction may commit an updated item description between the two reads. The second read then returns this committed data, and the variable description has a different value than the property i.getDescription().

This example is simplified, but it's enough to illustrate how a unit of work that mixes entity and scalar reads is vulnerable to nonrepeatable reads, if the database transaction isolation level is read committed.
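The effect can be reproduced without a database. In the following sketch, `ItemTable` and `PersistenceContextSketch` are hypothetical, heavily simplified stand-ins for the ITEM table and the persistence context cache; the point is only that an entity lookup is answered from the cache, whereas a scalar query always sees the latest committed state.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the ITEM table: identifier -> description.
class ItemTable {
    private final Map<Integer, String> descriptions = new HashMap<>();

    void commit(int id, String description) {
        descriptions.put(id, description);
    }

    String read(int id) {
        return descriptions.get(id);
    }
}

// Hypothetical, much simplified persistence context: caches loaded state by identifier.
class PersistenceContextSketch {
    private final ItemTable table;
    private final Map<Integer, String> loaded = new HashMap<>();

    PersistenceContextSketch(ItemTable table) {
        this.table = table;
    }

    // Entity lookup: hits the table only on the first access.
    String getDescription(int id) {
        if (!loaded.containsKey(id)) {
            loaded.put(id, table.read(id));
        }
        return loaded.get(id);
    }

    // Scalar query: always goes to the table and bypasses the cache.
    String queryDescription(int id) {
        return table.read(id);
    }
}

class NonrepeatableReadDemo {
    public static void main(String[] args) {
        ItemTable table = new ItemTable();
        table.commit(123, "An Item");

        PersistenceContextSketch context = new PersistenceContextSketch(table);
        String fromEntity = context.getDescription(123);   // first read

        table.commit(123, "A changed item");                // concurrent commit

        String fromQuery = context.queryDescription(123);   // second read
        System.out.println(fromEntity.equals(fromQuery));   // the two reads disagree
    }
}
```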

Instead of switching all database transactions into a higher and nonscalable isolation level, you obtain stronger isolation guarantees when necessary with the lock() method on the Hibernate Session:

Session session = sessionFactory.openSession();

Transaction tx = session.beginTransaction();

Item i = (Item) session.get(Item.class, 123);

session.lock(i, LockMode.UPGRADE);

String description = (String)

session.createQuery("select i.description from Item i" +

" where i.id = :itemid")

.setParameter("itemid", i.getId() )

.uniqueResult();

tx.commit();

session.close();

Using LockMode.UPGRADE results in a pessimistic lock held on the database for the row(s) that represent the Item instance. Now no concurrent transaction can obtain a lock on the same data—that is, no concurrent transaction can modify the data between your two reads. This can be shortened as follows:

Session session = sessionFactory.openSession();

Transaction tx = session.beginTransaction();

Item i = (Item) session.get(Item.class, 123, LockMode.UPGRADE);

...

A LockMode.UPGRADE results in an SQL SELECT ... FOR UPDATE or similar, depending on the database dialect. A variation, LockMode.UPGRADE_NOWAIT, adds a clause that allows an immediate failure of the query. Without this clause, the database usually waits when the lock can't be obtained (perhaps because a concurrent transaction already holds a lock). The duration of the wait is database-dependent, as is the actual SQL clause.

FAQ

Can I use long pessimistic locks? The duration of a pessimistic lock in Hibernate is a single database transaction. This means you can't use an exclusive lock to block concurrent access for longer than a single database transaction. We consider this a good thing, because the only solution would be an extremely expensive lock held in memory (or a so-called lock table in the database) for the duration of, for example, a whole conversation. These kinds of locks are sometimes called offline locks. This is almost always a performance bottleneck; every data access involves additional lock checks to a synchronized lock manager. Optimistic locking, however, is the perfect concurrency control strategy and performs well in long-running conversations. Depending on your conflict-resolution options (that is, if you had enough time to implement merge changes), your application users are as happy with it as with blocked concurrent access. They may also appreciate not being locked out of particular screens while others look at the same data.

Java Persistence defines LockModeType.READ for the same purpose, and the EntityManager also has a lock() method. The specification doesn't require that this lock mode is supported on nonversioned entities; however, Hibernate supports it on all entities, because it defaults to a pessimistic lock in the database.

The Hibernate lock modes

Hibernate supports the following additional LockModes:

LockMode.NONE—Don't go to the database unless the object isn't in any cache.

LockMode.READ—Bypass all caches, and perform a version check to verify that the object in memory is the same version that currently exists in the database.

LockMode.UPGRADE—Bypass all caches, do a version check (if applicable), and obtain a database-level pessimistic upgrade lock, if that is supported. Equivalent to LockModeType.READ in Java Persistence. This mode transparently falls back to LockMode.READ if the database SQL dialect doesn't support a SELECT ... FOR UPDATE option.

LockMode.UPGRADE_NOWAIT—The same as UPGRADE, but use a SELECT ... FOR UPDATE NOWAIT, if supported. This disables waiting for concurrent lock releases, thus throwing a locking exception immediately if the lock can't be obtained. This mode transparently falls back to LockMode.UPGRADE if the database SQL dialect doesn't support the NOWAIT option.

LockMode.FORCE—Force an increment of the object's version in the database, to indicate that it has been modified by the current transaction. Equivalent to LockModeType.WRITE in Java Persistence.

LockMode.WRITE—Obtained automatically when Hibernate has written to a row in the current transaction. (This is an internal mode; you may not specify it in your application.)

By default, load() and get() use LockMode.NONE. A LockMode.READ is most useful with session.lock() and a detached object. Here's an example:

Item item = ... ;

Bid bid = new Bid();

item.addBid(bid);

...

Transaction tx = session.beginTransaction();

session.lock(item, LockMode.READ);

tx.commit();

This code performs a version check on the detached Item instance to verify that the database row wasn't updated by another transaction since it was retrieved, before saving the new Bid by cascade (assuming the association from Item to Bid has cascading enabled).

(Note that EntityManager.lock() doesn't reattach the given entity instance—it only works on instances that are already in managed persistent state.)

Hibernate LockMode.FORCE and LockModeType.WRITE in Java Persistence have a different purpose. You use them to force a version update if by default no version would be incremented.

Forcing a version increment

If optimistic locking is enabled through versioning, Hibernate increments the version of a modified entity instance automatically. However, sometimes you want to increment the version of an entity instance manually, because Hibernate doesn't consider your changes to be a modification that should trigger a version increment.

Imagine that you modify the owner name of a CreditCard:

Session session = getSessionFactory().openSession();

Transaction tx = session.beginTransaction();

User u = (User) session.get(User.class, 123);

u.getDefaultBillingDetails().setOwner("John Doe");

tx.commit();

session.close();

When this Session is flushed, the version of the BillingDetails instance (let's assume it's a credit card) that was modified is incremented automatically by Hibernate. This may not be what you want; you may want to increment the version of the owner, too (the User instance).

Call lock() with LockMode.FORCE to increment the version of an entity instance:

Session session = getSessionFactory().openSession();

Transaction tx = session.beginTransaction();

User u = (User) session.get(User.class, 123);

session.lock(u, LockMode.FORCE);

u.getDefaultBillingDetails().setOwner("John Doe");

tx.commit();

session.close();

Any concurrent unit of work that works with the same User row now knows that this data was modified, even if only one of the values that you'd consider part of the whole aggregate was modified. This technique is useful in many situations where you modify an object and want the version of a root object of an aggregate to be incremented. Another example is a modification of a bid amount for an auction item (if these amounts aren't immutable): With an explicit version increment, you can indicate that the item has been modified, even if none of its value-typed properties or collections have changed. The equivalent call with Java Persistence is em.lock(o, LockModeType.WRITE).
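The effect on the aggregate root can be sketched with a toy unit of work. Everything in this example is hypothetical (`VersionedEntity`, `UnitOfWorkSketch`, and their methods); it only models which versions change, not when Hibernate actually issues the SQL.

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical versioned entity; stands in for User or CreditCard.
class VersionedEntity {
    final String name;
    int version = 1;

    VersionedEntity(String name) {
        this.name = name;
    }
}

// Hypothetical unit of work that increments versions when it is flushed.
class UnitOfWorkSketch {
    private final Set<VersionedEntity> toIncrement = new LinkedHashSet<>();

    // Called when a value-typed property of the entity was modified.
    void markDirty(VersionedEntity entity) {
        toIncrement.add(entity);
    }

    // Plays the role of lock(entity, LockMode.FORCE): increment even if not dirty.
    void forceIncrement(VersionedEntity entity) {
        toIncrement.add(entity);
    }

    void flush() {
        for (VersionedEntity entity : toIncrement) {
            entity.version++;
        }
        toIncrement.clear();
    }
}

class ForceIncrementDemo {
    public static void main(String[] args) {
        VersionedEntity user = new VersionedEntity("User");
        VersionedEntity card = new VersionedEntity("CreditCard");

        UnitOfWorkSketch work = new UnitOfWorkSketch();
        work.forceIncrement(user);   // root of the aggregate, not modified itself
        work.markDirty(card);        // the owner name of the card was changed
        work.flush();

        System.out.println(user.name + " version: " + user.version);
        System.out.println(card.name + " version: " + card.version);
    }
}
```

Without the forced increment only the card's version would change, and a concurrent unit of work holding the same User would have no way to notice the modification.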

You now have all the pieces to write more sophisticated units of work and create conversations. We need to mention one final aspect of transactions, however, because it becomes essential in more complex conversations with JPA. You must understand how autocommit works and what nontransactional data access means in practice.