“Let me once again explain the rules. Tera-Tom books Rule!”

Tera-Tom Coffing

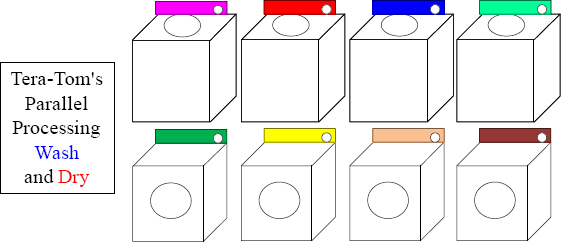

“After enlightenment, the laundry”

- Zen Proverb

“After parallel processing the laundry, enlightenment!”

- Netezza Zen Proverb

Two guys were having fun on a Saturday night when one said, “I’ve got to go and do my laundry.” The other said, “What!?” The first man explained that if he went to the laundromat the next morning, he would be lucky to get one machine and would be there all day. But if he went on Saturday night, he could get all the machines and finish all his washing and drying in two hours. Now that’s parallel processing mixed in with a little dry humor!

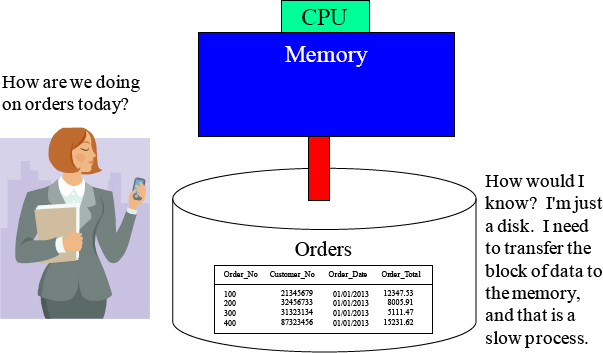

The Basics of a Single Computer

Data on disk does absolutely nothing. When data is requested, the computer moves the data one block at a time from disk into memory. Once the data is in memory, it is processed by the CPU at lightning speed. All computers work this way. The “Achilles Heel” of every computer is the slow process of moving data from disk to memory. That is all you need to know to be a computer expert!
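To make the block-at-a-time idea concrete, here is a tiny Python sketch. The "disk" is just an in-memory byte stream and the 4-byte block size is a hypothetical value chosen so the output is easy to read; real block sizes are far larger. Each call to `read` stands for one slow disk-to-memory transfer.

```python
import io

BLOCK_SIZE = 4  # hypothetical, tiny block size so the output is easy to read

def read_blocks(disk, block_size=BLOCK_SIZE):
    """Move data from 'disk' into memory one block at a time."""
    while True:
        block = disk.read(block_size)  # one (slow) disk-to-memory transfer
        if not block:
            break
        yield block

disk = io.BytesIO(b"ORDERS-ON-DISK")  # simulated disk contents (14 bytes)
blocks = list(read_blocks(disk))
print(len(blocks), blocks)  # 4 [b'ORDE', b'RS-O', b'N-DI', b'SK']
```

Fourteen bytes take four transfers. The CPU work on each block is trivial by comparison, which is exactly why the transfer is the Achilles heel.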

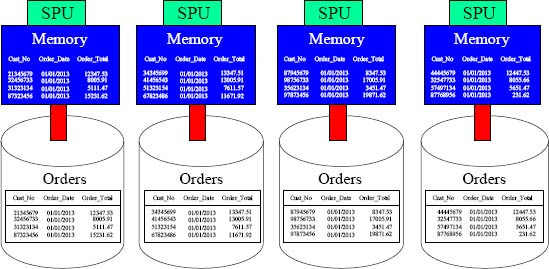

Netezza Processes Data in Parallel

“If the facts don’t fit the theory, change the facts.”

- Albert Einstein

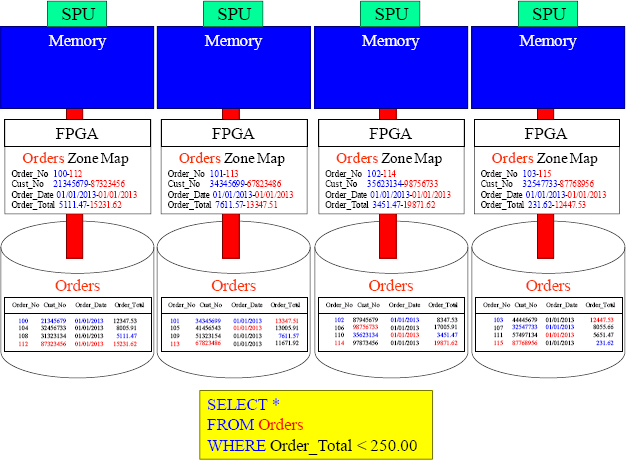

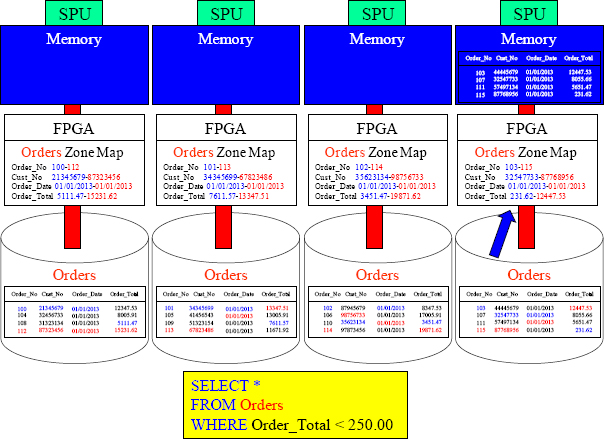

Netezza has always been a pioneer in parallel processing and is even credited with the invention of the Appliance. In the picture above, you see that we have 16 orders, with four orders placed on each disk. It appears to be four separate computers, but this is one system. Netezza systems work just like a basic computer in that they still need to move data from disk into memory, but Netezza divides and conquers. Each Snippet Processing Unit (SPU) holds a portion of the data for every table.

Netezza is Born to be Parallel

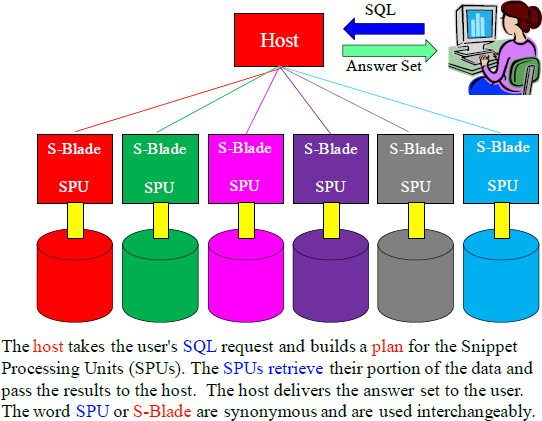

Each SPU holds a portion of every table and is responsible for reading its assigned data from, and writing it to, its own disk. Queries are submitted to the host, which plans, optimizes, and manages the execution of each query by sending the necessary snippets to each SPU. Each SPU performs its snippet or snippets independently of the others, following only the host’s plan. The partial results from each SPU are returned to the host, where they are combined and delivered back to the user.
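Here is a minimal Python sketch of that division of labor. It assumes four SPUs holding four made-up order totals each, and a hypothetical `snippet` function that counts and sums only the rows on its own SPU. A thread pool stands in for the independent SPUs; it models the fan-out and gather pattern, not Netezza's actual execution engine or its hardware parallelism.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical data: 16 order totals, four on each of four SPUs.
spu_slices = [
    [100, 250, 75, 400],
    [320, 90, 60, 510],
    [45, 700, 130, 210],
    [880, 15, 95, 305],
]

def snippet(rows):
    """Work the host sends to every SPU: count and sum only that SPU's rows."""
    return len(rows), sum(rows)

def host_execute(slices):
    """Fan the snippet out to every SPU, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=len(slices)) as pool:
        partials = list(pool.map(snippet, slices))  # each SPU works independently
    total_count = sum(count for count, _ in partials)
    total_sum = sum(subtotal for _, subtotal in partials)
    return total_count, total_sum

print(host_execute(spu_slices))  # (16, 4185)
```

No SPU ever sees another SPU's rows. Only the small partial results travel back to the host.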

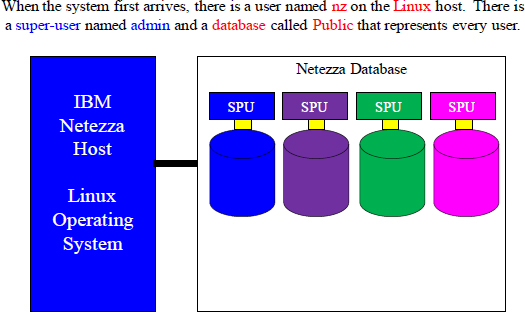

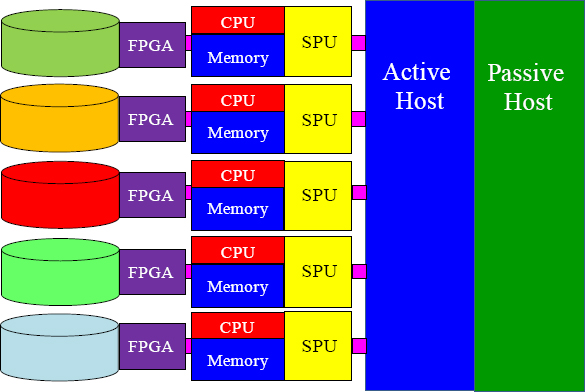

Starts with a Linux User, a Database User, and a Database

The host is a Linux server that runs the Netezza software and utilities. The host controls and coordinates the activity of the Netezza Appliance and performs query optimization. The host also controls table and database operations, gathers and returns query results, and monitors the Netezza system. Netezza systems have two hosts in a high-availability (HA) configuration. The host is connected to the Netezza Database, which consists of a series of parallel processors called Snippet Processing Units (SPUs), often referred to as S-Blades. The terms SPU and S-Blade are synonymous and are used interchangeably. This book will most often refer to them as SPUs.

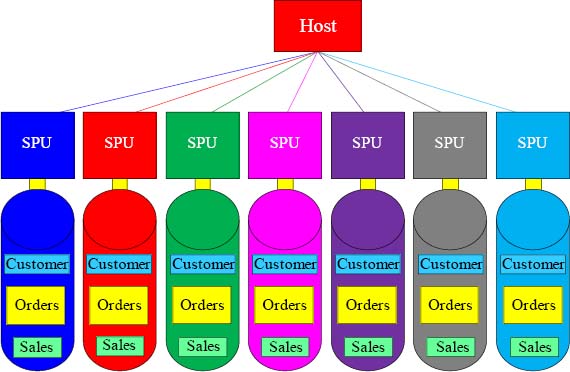

Each SPU holds a Portion of Every Table

Every SPU has the exact same tables, but each SPU holds different rows of those tables.

When a table is created on Netezza, each SPU receives that table. When data is loaded, the rows are hashed by a distribution key, so each SPU holds a certain portion of the rows. If the host orders a full table scan of a particular table, then all SPUs simultaneously read their portion of the data. This is the concept of parallel processing.
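A minimal Python sketch of hash distribution follows. It assumes a hypothetical four-SPU system and uses `zlib.crc32` purely as a deterministic stand-in for Netezza's own hash function, which is not described here. Python's built-in `hash()` is avoided because it is randomized between runs for strings.

```python
import zlib

NUM_SPUS = 4  # hypothetical system size

def spu_for(key, num_spus=NUM_SPUS):
    """Hash the distribution key value and map it to one SPU.

    zlib.crc32 is only a deterministic stand-in for Netezza's own hash function.
    """
    return zlib.crc32(str(key).encode("utf-8")) % num_spus

def distribute(rows, key_column, num_spus=NUM_SPUS):
    """Place every row on the SPU chosen by hashing its distribution key."""
    slices = [[] for _ in range(num_spus)]
    for row in rows:
        slices[spu_for(row[key_column], num_spus)].append(row)
    return slices

orders = [{"Order_Number": n, "Order_Total": 100 * n} for n in range(1, 17)]
for spu, rows in enumerate(distribute(orders, "Order_Number")):
    print(f"SPU {spu}:", [r["Order_Number"] for r in rows])
```

Because the hash is deterministic, the same key value always lands on the same SPU, and a full table scan simply asks every SPU to read its own slice.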

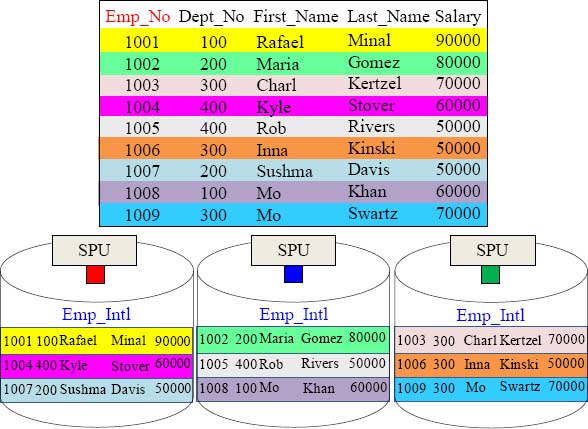

The Rows of a Table are Spread Across All SPUs

A Distribution Key will be hashed to distribute the rows among the SPUs. Each SPU will hold a portion of the rows. This is the concept behind parallel processing.

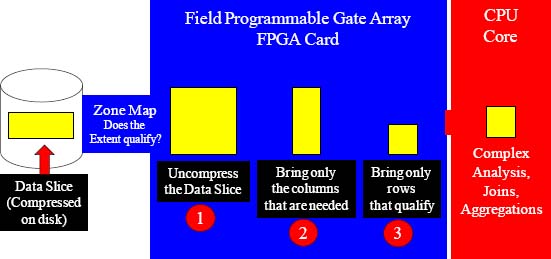

The brilliance behind Netezza lies in the Field Programmable Gate Array (FPGA), which does its work in several steps. First, understand that all data on disk is compressed (4X on average). The FPGA card sits just outside the disk. When a query is run on a table, each SPU holds a portion of that table’s data, so each SPU holds what is termed a slice of data. Each SPU has its own FPGA card and CPU. The first thing checked is the zone map, to see whether the data block could contain qualifying data. If it could, the block is transferred, still compressed, to the FPGA card, where it is uncompressed. This strategy stores data compressed and transfers data compressed, saving both disk space and transfer time. The second thing the FPGA card does is eliminate columns that are not needed, shrinking the block even further. Next, the FPGA card eliminates rows that are not needed. The FPGA card then sends the smaller block directly to the CPU for processing. This strategy delivers only the data that needs to be processed and lets the CPU focus on what it does best: complex analysis, joins, and aggregations.
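The order of those steps can be sketched in Python for a query of the form `SELECT Order_Number ... WHERE Order_Total < 300`. This is only an illustration under stated assumptions: `zlib` and JSON stand in for Netezza's real compression and storage format, the block contents are made up, and `fpga_filter` is a hypothetical name for logic that Netezza performs in hardware.

```python
import json
import zlib

# Hypothetical block: rows stored compressed on disk, plus the block's zone map.
rows = [
    {"Order_Number": 1, "Customer": "A", "Order_Total": 120},
    {"Order_Number": 2, "Customer": "B", "Order_Total": 450},
    {"Order_Number": 3, "Customer": "C", "Order_Total": 275},
]
compressed_block = zlib.compress(json.dumps(rows).encode("utf-8"))
zone_map = {"Order_Total": (120, 450)}  # (min, max) for this block

def fpga_filter(block, zone_map, column, upper_bound, select_columns):
    """Sketch of the steps for: SELECT select_columns ... WHERE column < upper_bound."""
    col_min, _ = zone_map[column]
    if col_min >= upper_bound:  # 1. zone map proves no row can qualify: skip the block
        return None
    decoded = json.loads(zlib.decompress(block))  # 2. uncompress the block
    keep = list(dict.fromkeys([*select_columns, column]))  # WHERE column still needed
    projected = [{c: r[c] for c in keep} for r in decoded]  # 3. drop unneeded columns
    return [r for r in projected if r[column] < upper_bound]  # 4. drop unneeded rows

print(fpga_filter(compressed_block, zone_map, "Order_Total", 300, ["Order_Number"]))
```

The `Customer` column and the 450 row never reach the CPU, and a query with a bound of 100 would not even uncompress the block, because its minimum is 120.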

Compress Engine II – Adaptive Stream Compression

• Automatic, system-wide data compression when data is stored on disk.

• Zero tuning and zero administration required.

• A table is compressed using a patent-pending algorithm that actually compresses at the column level, but the data is stored as an entire row.

• There are different compression strategies that are based on the data in that column.

• All data types are compressed.

• 4X compression is the average, but up to 32X is possible.

• When data is queried, it is first brought into the FPGA card, where it is uncompressed.

• Automatic compression saves enormous space on disk, and when blocks are transferred from disk into the FPGA card, there is less traffic.
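Netezza's actual algorithm is patent-pending and is not described here, so the Python sketch below swaps in a well-known, much simpler technique, run-length encoding (RLE), to show what "compress at the column level" and "choose a strategy based on the data in that column" can mean. The column values and the `compress_column` helper are hypothetical.

```python
from itertools import groupby

def rle_encode(values):
    """Run-length encode one column into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]

def rle_decode(pairs):
    """Expand (value, run_length) pairs back into the original column."""
    return [value for value, count in pairs for _ in range(count)]

def compress_column(values):
    """Pick a strategy from the data itself: RLE only pays off when values repeat."""
    encoded = rle_encode(values)
    if len(encoded) < len(values):
        return "rle", encoded
    return "raw", list(values)

order_dates = ["2024-01-01"] * 5 + ["2024-01-02"] * 3  # hypothetical column
print(compress_column(order_dates))  # ('rle', [('2024-01-01', 5), ('2024-01-02', 3)])
print(compress_column([7, 3, 9]))    # ('raw', [7, 3, 9])
```

A column of repeating dates shrinks to two pairs, while a column with no repeats is left alone. Real systems have many more strategies to choose from.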

The Achilles heel of any computer system is moving data from disk into memory. That is the slowest process, and the larger the data block, the slower the transfer. Netezza brilliantly compresses every table automatically as it writes blocks to disk. This saves disk space and also helps enormously when transferring data blocks from disk to memory. Because compression averages 4X, only about one quarter as much data has to move between disk and memory, so that movement is roughly 4X faster.
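The arithmetic is easy to check in Python. This sketch assumes a hypothetical 3 GB table and borrows the 128 KB page size from the Order_Date example later in this chapter; it counts only the pages that have to move and ignores everything else about the transfer.

```python
import math

PAGE_KB = 128  # page size used in the Order_Date extent example

def pages_to_read(raw_table_kb, compression_ratio):
    """Pages that must move from disk when the table is stored compressed."""
    return math.ceil(raw_table_kb / compression_ratio / PAGE_KB)

raw_kb = 3 * 1024 * 1024  # hypothetical 3 GB table, expressed in KB

print(pages_to_read(raw_kb, 1))   # 24576 (no compression)
print(pages_to_read(raw_kb, 4))   # 6144  (4X, the average)
print(pages_to_read(raw_kb, 32))  # 768   (32X, the best case)
```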

FPGA Card and Zone Maps – The Netezza Secret Weapon

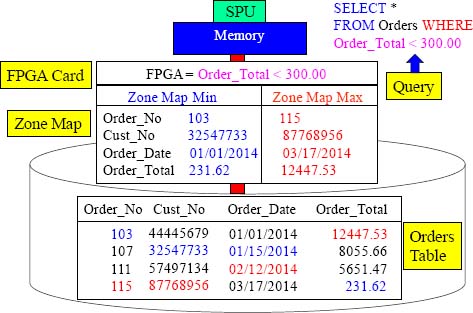

The first 200 columns of every table are automatically zone mapped. A Zone Map lists the minimum and maximum value of each column for each block. The Field Programmable Gate Array (FPGA card) analyzes the query. Before ordering a block into memory, it checks the Zone Map to see whether the block could possibly contain the requested data. If it cannot, reading that block is skipped. Where most databases use indexes to determine where data is, Netezza uses Zone Maps to determine where data is NOT! Our query above is looking for data WHERE Order_Total < 300. The Zone Map shows this block may contain qualifying rows, so it will be moved into memory for processing. Each SPU has its own separate Zone Map and FPGA card. I have colored the min and max values to illustrate how it all works. Because every zone-mapped column has its own min and max, Netezza can exclude blocks no matter which of those columns appears in the WHERE clause.
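Here is the "where data is NOT" logic as a small Python sketch. The three blocks and their min/max values are hypothetical, and only three simple comparison operators are handled. The key point is that a block is skipped only when its min/max range proves that no row can match.

```python
def may_contain(col_min, col_max, op, value):
    """Return False only when the zone map proves no row in the block can match."""
    if op == "<":
        return col_min < value
    if op == ">":
        return col_max > value
    if op == "=":
        return col_min <= value <= col_max
    raise ValueError(f"unsupported operator: {op}")

def blocks_to_read(zone_maps, column, op, value):
    """Return the ids of blocks that cannot be ruled out by their zone map."""
    return [
        block_id
        for block_id, columns in zone_maps.items()
        if may_contain(*columns[column], op, value)
    ]

# Hypothetical zone maps: block id -> {column: (min, max)}
zone_maps = {
    1: {"Order_Total": (300, 900)},
    2: {"Order_Total": (150, 500)},
    3: {"Order_Total": (600, 1000)},
}

print(blocks_to_read(zone_maps, "Order_Total", "<", 300))  # [2]
print(blocks_to_read(zone_maps, "Order_Total", "=", 700))  # [1, 3]
```

Block 2 is read for `Order_Total < 300` even though it may hold rows above 300. The zone map can only rule blocks out, never guarantee that every row in them qualifies.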

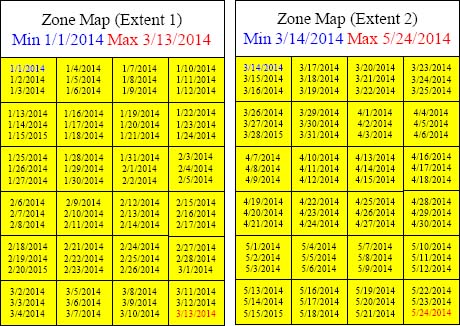

What Data Might Look Like on a SPU

Netezza allocates a 3 MB extent (on each SPU) when a table begins loading. When an extent is filled, another is allocated. I want you to imagine that we created a table that had only one column, Order_Date, and that data was first loaded on January 1st. Notice in the examples that as data is loaded, it keeps filling the 128 KB pages (24 pages × 128 KB = 3 MB), and the Order_Date values stay in order because each day’s load fills the next slot. Then, notice how the Zone Map holds the min and max Order_Date. The Zone Map is designed to tell Netezza whether this block (extent) needs to be read when the table is queried. If a query is looking for data in April, there is no reason to read extent 1, because April falls outside its min/max range.
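The extent arithmetic and the April example can be checked with a short Python sketch. The year 2024 and the exact date ranges of the two extents are hypothetical; the sketch simply assumes that day-by-day loading leaves each extent covering a contiguous range of dates.

```python
from datetime import date

PAGE_KB = 128
PAGES_PER_EXTENT = 24
EXTENT_KB = PAGE_KB * PAGES_PER_EXTENT  # 3072 KB, i.e. 3 MB

# Hypothetical zone map for a one-column (Order_Date) table loaded day by day,
# so each extent covers a contiguous, non-overlapping range of dates.
extent_zone_map = {
    1: (date(2024, 1, 1), date(2024, 3, 31)),
    2: (date(2024, 4, 1), date(2024, 6, 30)),
}

def extents_for_range(zone_map, start, end):
    """Extents whose [min, max] Order_Date range overlaps the queried [start, end]."""
    return [eid for eid, (lo, hi) in zone_map.items() if lo <= end and hi >= start]

print(EXTENT_KB)  # 3072
print(extents_for_range(extent_zone_map, date(2024, 4, 1), date(2024, 4, 30)))  # [2]
```

Because the dates were loaded in order, each extent's min/max range is narrow, which is what makes the zone map so effective here.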

Question – How Many Blocks Move Into Memory?

Looking at the SQL and the Zone Map, how many blocks will need to be moved into memory?

Answer – How Many Blocks Move Into Memory?

Only one block moves into memory. The FPGA card looks at the zone maps and sees that only the last SPU’s min/max range for Order_Total can contain qualifying rows. Only that SPU moves its block into memory.

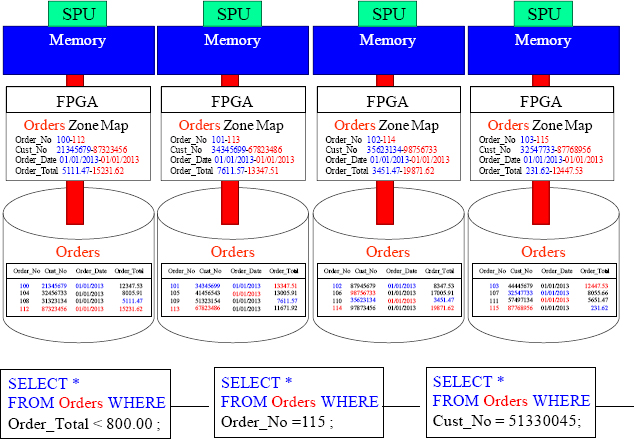

Quiz – Master that Query With the Zone Map

Looking at the SQL and the Zone Map, how many blocks will need to be moved into memory for each query?

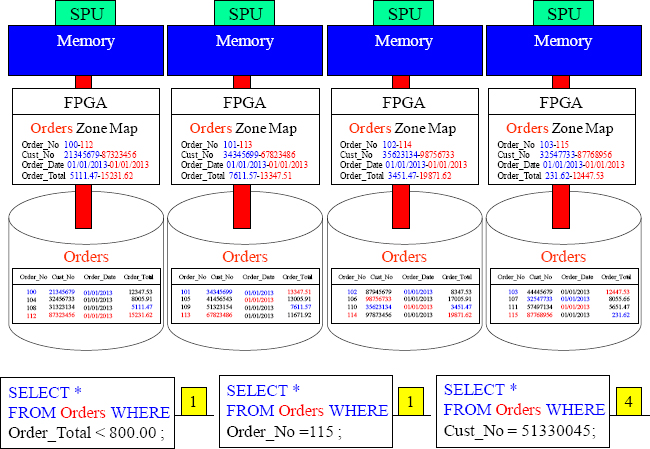

Answer to Quiz – Master that Query With the Zone Map

Above are your answers.

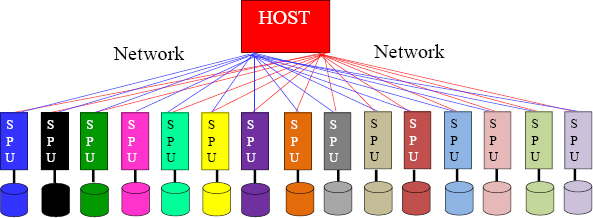

Netezza has Linear Scalability

“A journey of a thousand miles begins with a single step.”

- Lao Tzu

Netezza was born to be parallel. With each query, a single step is performed in parallel by each SPU. A Netezza system consists of a series of SPUs that work in parallel to store and process your data. This design allows you to start small and keep growing. If your Netezza system provides you with an excellent Return On Investment (ROI), then continue to invest by purchasing more SPUs. Most companies start small, but after seeing what Netezza can do, they grow their ROI from the single step of implementing a Netezza system to millions of dollars in profits. Double your SPUs and double your speed… forever.
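Here is the "double your SPUs" idea as idealized arithmetic in Python. The table size and per-SPU scan rate are hypothetical numbers, and the model assumes every SPU holds an equal share of the rows. It ignores data skew, host work, and network time, so it shows only the ideal linear case.

```python
def scan_seconds(total_rows, num_spus, rows_per_sec_per_spu):
    """Idealized full-table-scan time when every SPU scans an equal share at once.

    Ignores data skew, host work, and network time: this is the ideal linear case.
    """
    rows_per_spu = total_rows / num_spus
    return rows_per_spu / rows_per_sec_per_spu

ROWS = 1_000_000_000  # hypothetical table size
RATE = 1_000_000      # hypothetical rows scanned per second by one SPU

print(scan_seconds(ROWS, 100, RATE))  # 10.0
print(scan_seconds(ROWS, 200, RATE))  # 5.0
```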

Users log in to Netezza, and their queries are taken by the host. The host then parses the SQL and builds a plan for the Snippet Processing Units to follow. Each SPU has a portion of each table. The SPUs are inside the IBM Netezza Blade Servers, and some Netezza systems have grown to over 1,000 SPUs.

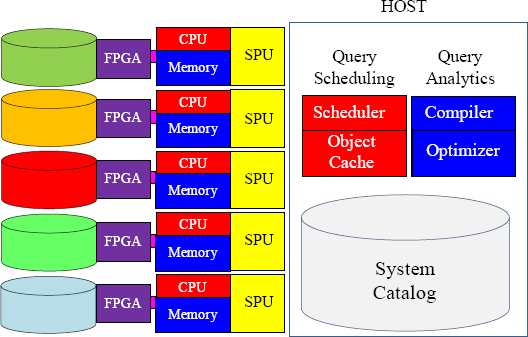

Users log in to Netezza, and their queries are taken by the host. The host contains the System Catalog (which knows about all Netezza objects). The host also has the Query Scheduler (which schedules queries based on resources and priority). The main purpose of the host is to come up with a plan for the SPUs to follow in order to execute the query. The host actually compiles snippets of executable code, and the Snippet Processing Units (SPUs) follow the plan and retrieve the data. The SPUs pass the data back to the host, which delivers it to the user.
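Scheduling "based on resources and priority" can be pictured with a toy Python priority queue. Everything here is hypothetical: the four priority levels, the `QueryScheduler` class, and the use of a fixed number of running slots as the only "resource". Netezza's real scheduler is considerably more sophisticated. The sketch only shows the core idea: higher priority goes first, arrival order breaks ties, and nothing starts without a free slot.

```python
import heapq
import itertools

PRIORITY = {"critical": 0, "high": 1, "normal": 2, "low": 3}  # hypothetical levels

class QueryScheduler:
    """Toy scheduler: highest priority first, FIFO within a priority, limited slots."""

    def __init__(self, max_running):
        self.max_running = max_running  # stands in for available resources
        self.running = set()
        self._queue = []
        self._arrival = itertools.count()  # tie-breaker keeps FIFO order

    def submit(self, query_id, priority="normal"):
        heapq.heappush(self._queue, (PRIORITY[priority], next(self._arrival), query_id))

    def dispatch(self):
        """Start as many waiting queries as free slots allow; return the ones started."""
        started = []
        while self._queue and len(self.running) < self.max_running:
            _, _, query_id = heapq.heappop(self._queue)
            self.running.add(query_id)
            started.append(query_id)
        return started

    def finish(self, query_id):
        self.running.discard(query_id)

s = QueryScheduler(max_running=2)
for qid, prio in [("q1", "low"), ("q2", "high"), ("q3", "normal"), ("q4", "high")]:
    s.submit(qid, prio)
print(s.dispatch())  # ['q2', 'q4']
s.finish("q2")
print(s.dispatch())  # ['q3']
```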

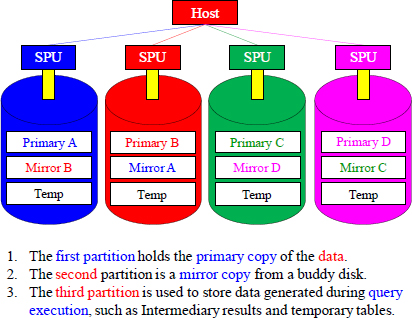

All of these partitions are protected. The primary partition is mirrored in pairs in a RAID 1 format. The Temp partition is striped across multiple disks (RAID 5) for increased performance. If a disk fails, transactions are not interrupted and an automatic failover to the mirror partition is initiated, but the system will not perform at maximum speed. After the failover, the mirrored data is automatically regenerated onto one of the spare disks.
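The RAID 1 failover and regeneration steps can be modeled with a toy Python class. This sketch covers only the mirrored primary partition, not the RAID 5 Temp partition. The `MirroredPartition` class, its methods, and the spare-disk names are all hypothetical and exist purely for illustration.

```python
class MirroredPartition:
    """Toy RAID 1 pair: writes go to both copies, reads fail over to the mirror."""

    def __init__(self, spare_disks):
        self.primary = {}
        self.mirror = {}
        self.primary_failed = False
        self.spares = list(spare_disks)

    def write(self, block_id, data):
        if not self.primary_failed:
            self.primary[block_id] = data
        self.mirror[block_id] = data

    def read(self, block_id):
        # Automatic failover: if the primary disk is gone, serve the mirror copy.
        source = self.mirror if self.primary_failed else self.primary
        return source[block_id]

    def fail_primary(self):
        self.primary_failed = True

    def regenerate(self):
        """Rebuild the lost copy onto a spare disk from the surviving mirror."""
        spare = self.spares.pop(0)
        self.primary = dict(self.mirror)
        self.primary_failed = False
        return spare

part = MirroredPartition(spare_disks=["spare-1"])
part.write(1, b"orders")
part.fail_primary()
print(part.read(1))       # b'orders' (served by the mirror)
print(part.regenerate())  # spare-1
```

Reads keep working the whole time, which is the "transactions are not interrupted" behavior. Until regeneration finishes, though, only one copy of the data is available.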

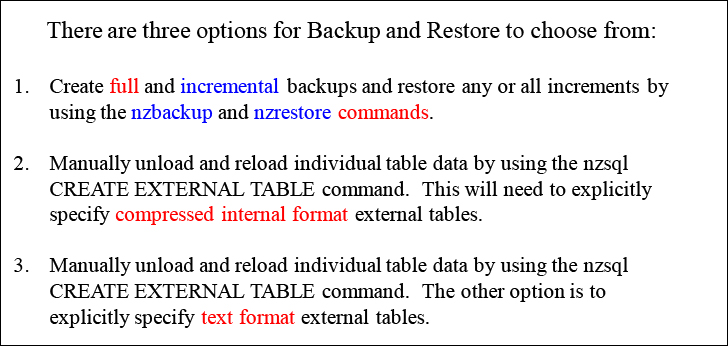

There Are Three Options for Backup and Restore

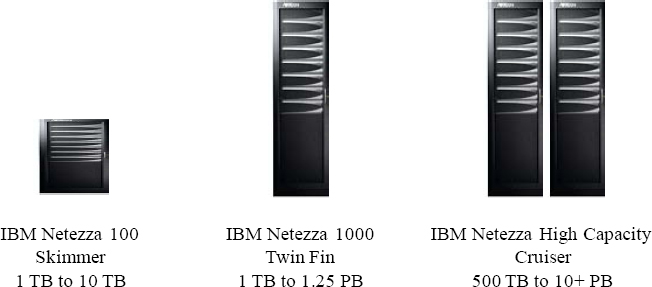

The IBM Netezza 100 is the development and test system and is not designed for production use. It has one S-Blade and up to 10 Terabytes of data capacity. The IBM Netezza 1000 is designed for production and can scale up to 1.25 Petabytes of user data. The new High Capacity Cruiser allows analytics on massive amounts of user data, up to 10 Petabytes. It is also designed for ultra-fast loading speeds.