Chapter 1

Introduction to Natural and Artificial Neural Networks

1.1 Why Learn about Neural Networks?

This chapter discusses the biological basis of artificial neural networks, which were derived from studies of the human brain. Researchers examined the brain for many years in an effort to uncover the secrets of human intelligence. Their progress was slow, for reasons noted in the next section, but their findings turned out to be useful in computer science. This chapter explains how ideas borrowed from biologists helped create artificial neural networks and how these networks continue to help reveal the secrets of the human brain.

Subsequent chapters will explain how to build and use neural networks.

Neural networks are easy to understand and to use in computer software. However, their development was based on a surprisingly complex and interesting model of the biological nervous system. We could say that neural networks are simplified models of some functions of the human brain (Figure 1.1).

1.2 From Brain Research to Artificial Neural Networks

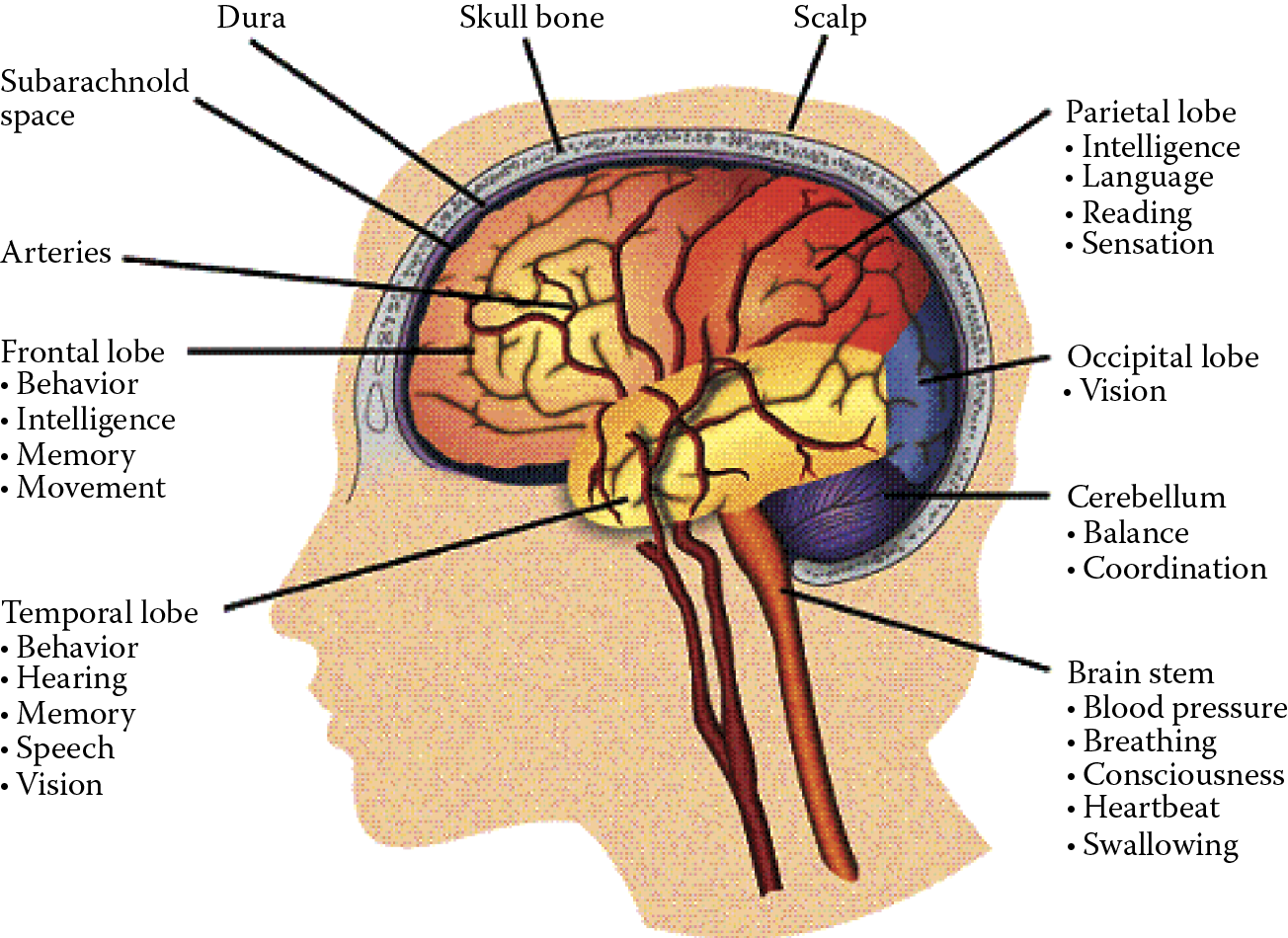

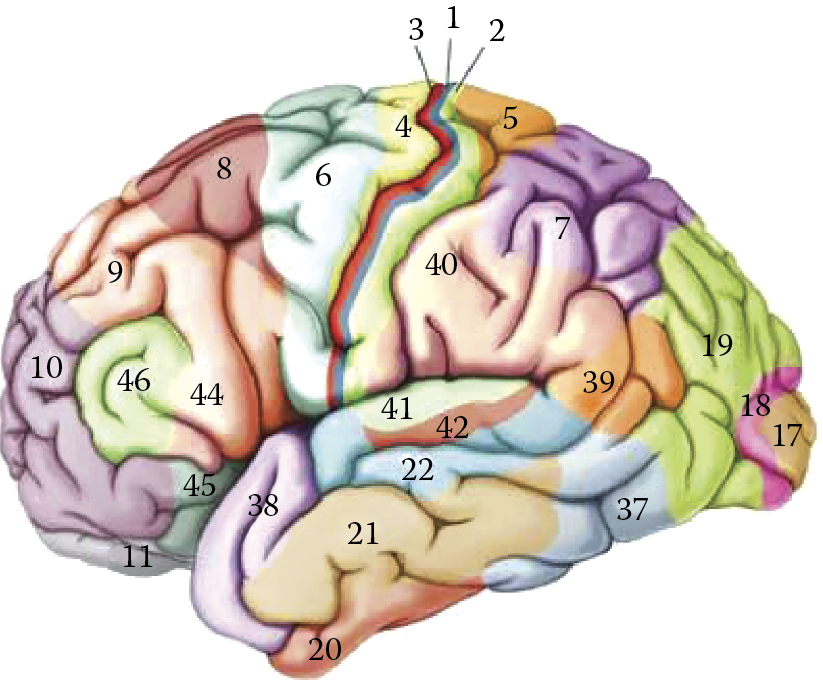

The intricacies of the brain have always fascinated scientists. Despite many years of intensive research, until recently we were unable to understand the mysteries of the brain. We are now seeing remarkable progress in this area and discuss it in Section 1.6. When the first artificial neural networks were developed, much less information about brain functioning was available. The only known facts about the brain’s workings concerned the locations of the structures responsible for vital motor, perceptual, and intellectual functions (Figure 1.2).

Localization of various functions within a brain. (Source: http://avm.ucsf.edu/patient_info/WhatIsAnAVM/images/image015.gif )

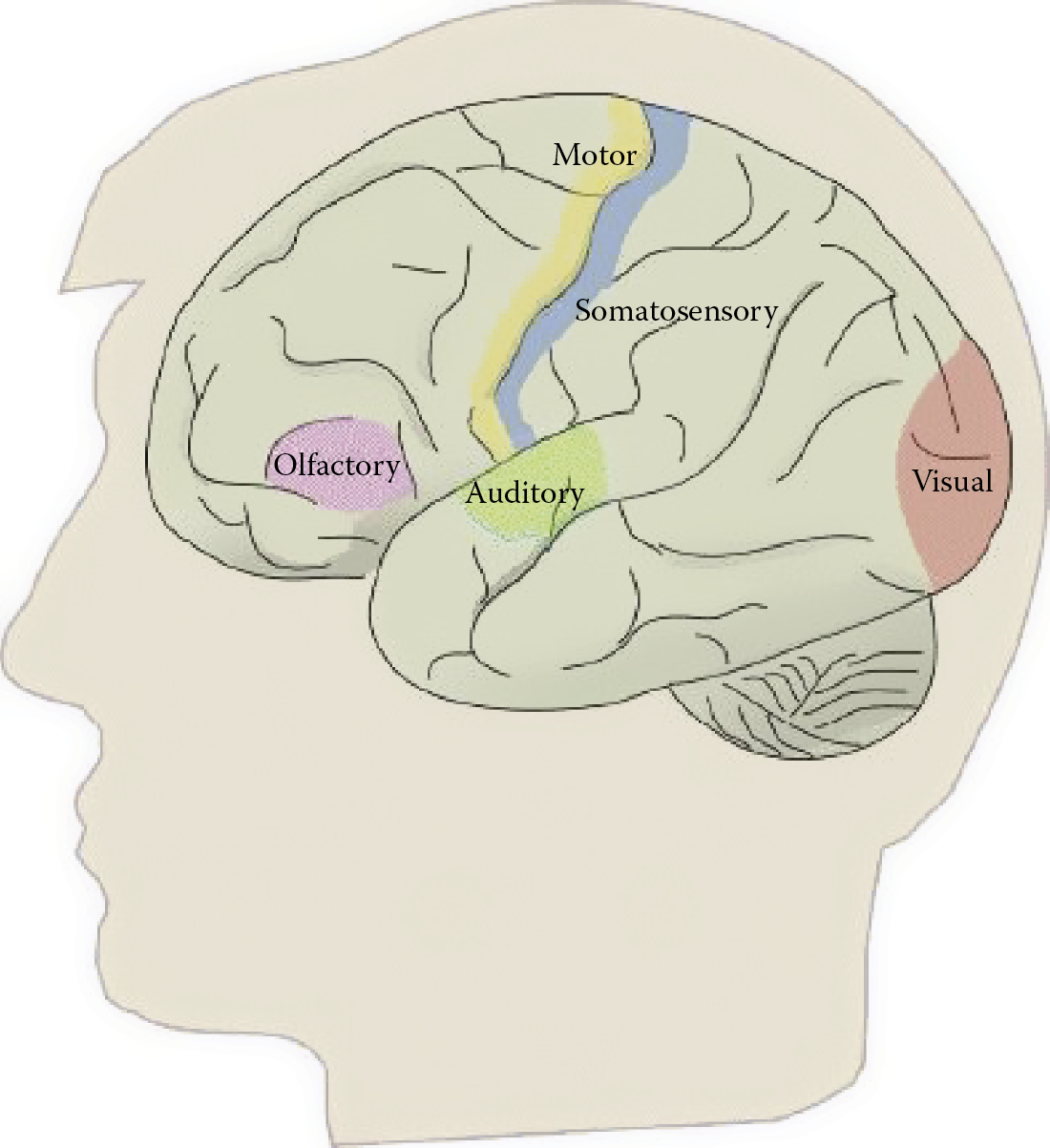

Our knowledge about specific tasks performed by each brain element was limited. Medical research focusing on certain diseases and injuries led to some understanding of how brain parts responsible for controlling movements and essential sensations (somatosensory functions) work (Figure 1.3).

Main localizations of brain functions. (Source: http://www.neurevolution.net/wp-content/uploads/primarycortex1_big.jpg )

We learned which movement defects (paralysis) or sensory defects were associated with injuries to certain brain components. Our knowledge of the nature and localization of more advanced psychological activities was based on this primitive information. Basically, we could only conclude that the individual cerebral hemispheres functioned as well-defined, specialized systems (Figure 1.4).

Generalized differences between main parts of the brain. (Source: http://www.ucmasnepal.com/uploaded/images/ucmas_brain.jpg )

One reason for the limited scientific knowledge of brain function was ethical. Regulations specified that experiments on human brains had to be restricted to observing and analyzing the relationships among functional, psychological, and morphological changes in patients with brain injuries. Intentionally inserting an electrode or a scalpel into the tissue of a healthy brain to collect data was out of the question. Of course, it was possible to carry out experiments on animals, but killing innocent animals in the name of science has always been somewhat controversial, even when dictated by a noble purpose. Furthermore, it was not possible to draw exact conclusions about human brains directly from animal tests. The differences between human and animal brains, in both anatomy and physiology, are more significant than the differences between their musculoskeletal and circulatory systems.

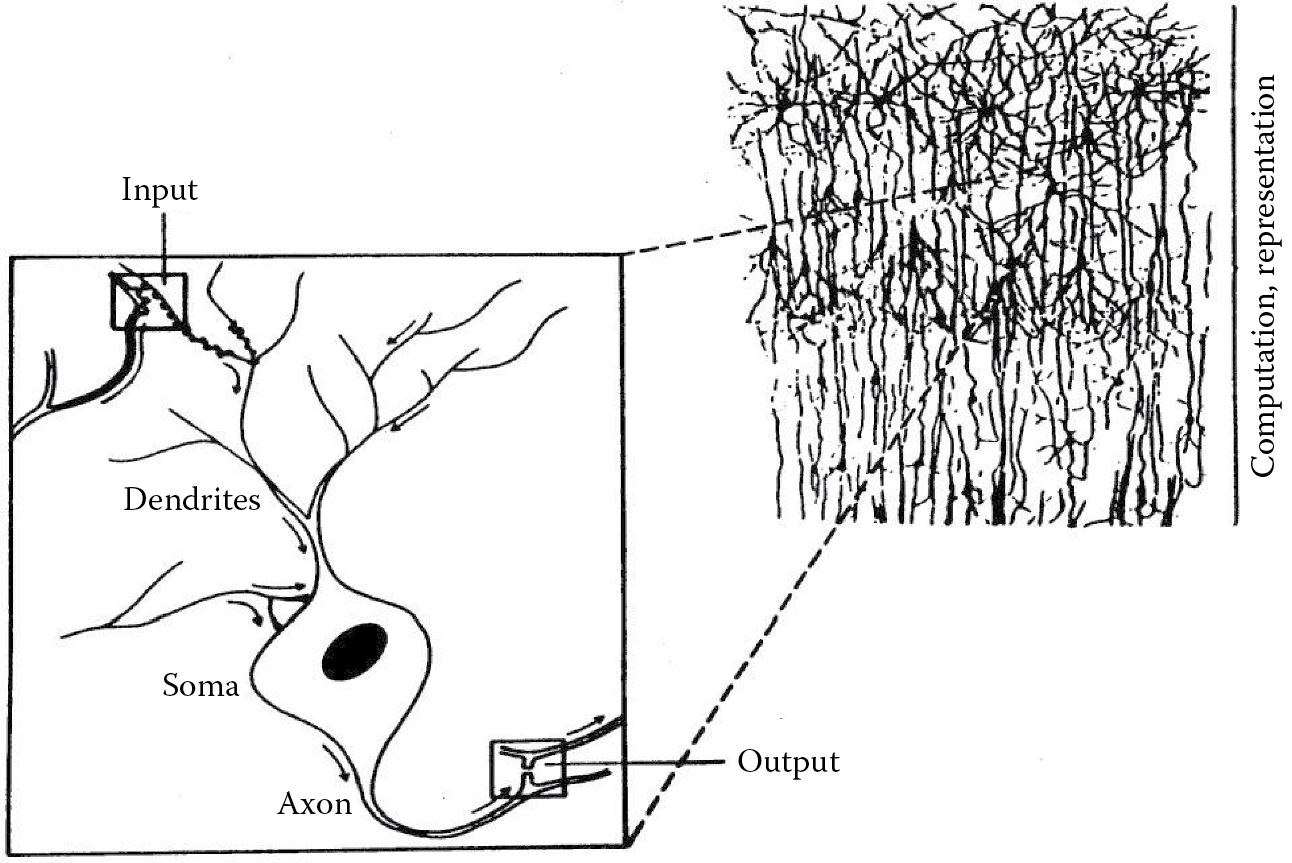

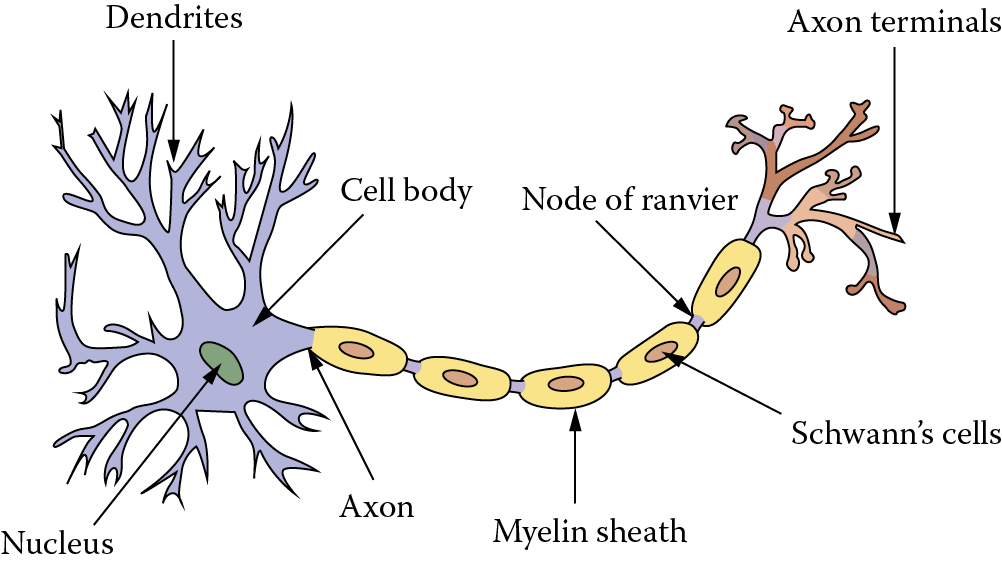

Let us now look at the methods adopted by the pioneering neural network creators to equip their constructions with the most desirable features and properties of the brain as shaped by nature. The brain consists of neurons that function as separate cells acting as natural processors. Spanish histologist Ramón y Cajal (1906 Nobel laureate; see Table P.1 in Preface) first described the human brain as a network of connected autonomous elements. He introduced the concept of neurons as the elements responsible for processing information, receiving and analyzing sensations, and generating and sending control signals to all parts of the human body. We will learn more about the structures of neurons in Chapter 2. The artificial equivalent of the neuron is the main component of a neural network structure. Figure 1.5 shows how an individual neuron was isolated from the continuous web of neurons that forms the cerebral cortex.

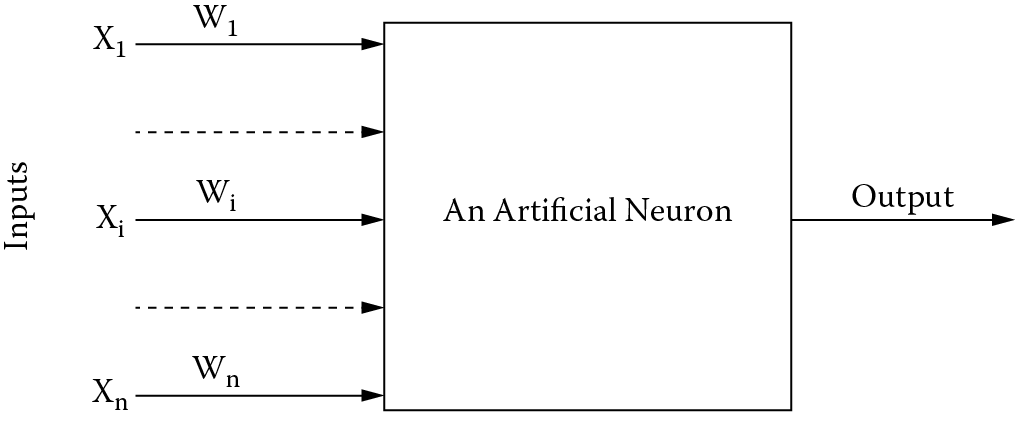

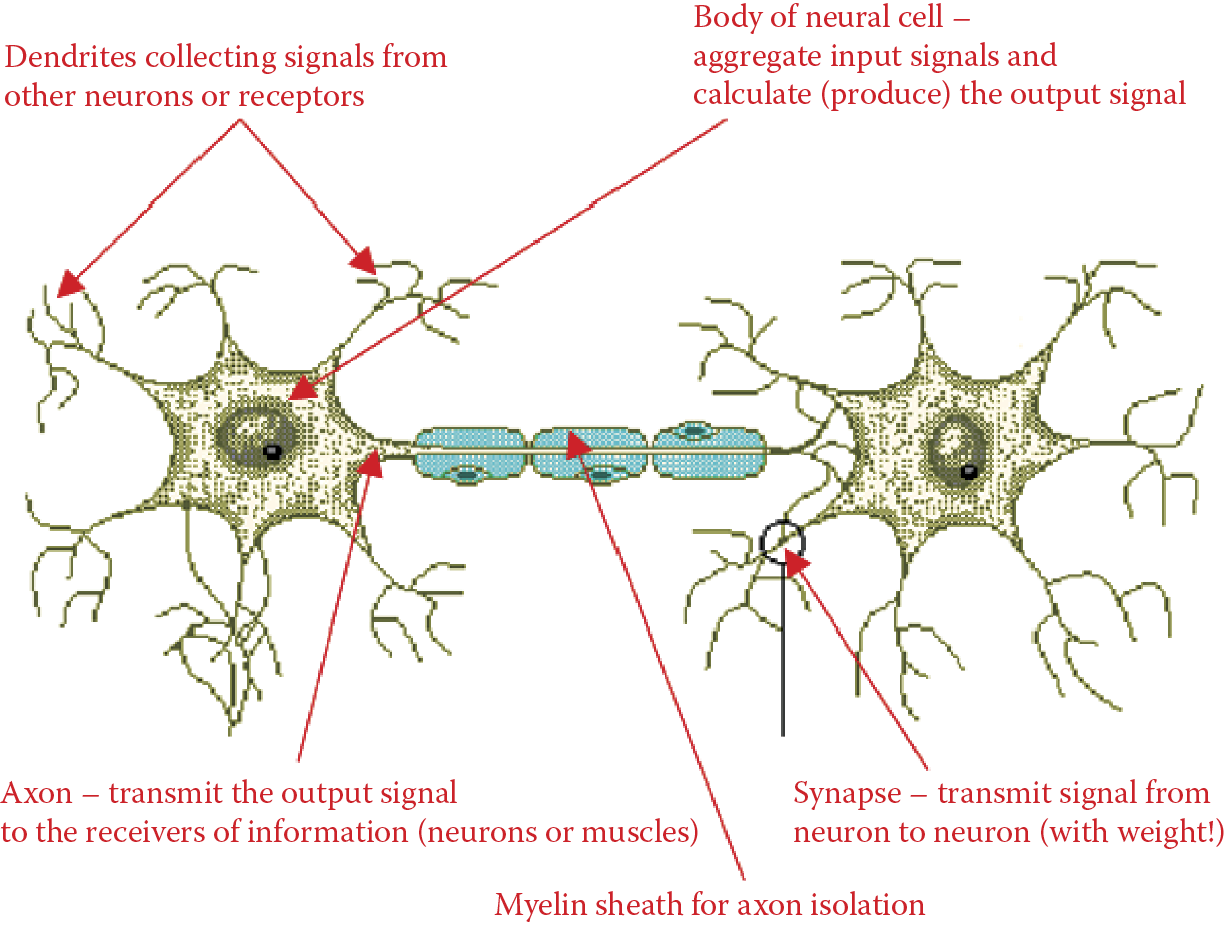

Thanks to experiments on animals such as squid of the genus Loligo, our knowledge of neurons was already quite extensive when the first neural networks were designed. Hodgkin and Huxley (1963 Nobel Prize) discovered the biochemical and bioelectrical changes that occur as nerve signals are transmitted and processed. The most important result was that the description of a real neuron could be simplified significantly by reducing the observed information processing rules to several simple relations, described in Chapter 2. The extremely simplified neuron (Figure 1.6) still allows us to create networks that have interesting and useful properties and at the same time are economical to build. The elements shown in Figure 1.6 will be discussed in detail in subsequent chapters.
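As a rough preview of the simplified neuron in Figure 1.6 (its actual elements are defined in Chapter 2), the sketch below assumes the common textbook form of an artificial neuron: input signals are multiplied by weights that stand in for synaptic strengths, summed, and compared with a threshold. The function name `neuron_output` and its parameter names are illustrative assumptions, not the book's notation.

```python
def neuron_output(inputs, weights, threshold=0.0):
    """Return 1 if the weighted sum of inputs exceeds the threshold, else 0.

    A deliberately simplified neuron (an illustrative assumption):
    - each input plays the role of a signal arriving at a synapse,
    - each weight plays the role of that synapse's strength,
    - the threshold decides whether the cell 'fires'.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Two excitatory synapses (positive weights) and one inhibitory synapse.
weights = [0.6, 0.4, -0.9]
print(neuron_output([1, 1, 0], weights, threshold=0.5))  # sum 1.0 -> 1
print(neuron_output([1, 1, 1], weights, threshold=0.5))  # sum about 0.1 -> 0
```

Activating the inhibitory input is enough to keep the neuron silent, which is the kind of simple behavior the reduced model still captures.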

A natural neuron has an extremely intricate and diverse construction (Figure 1.7). Its artificial equivalent, shown in Figure 1.6, has a substantially trimmed-down structure and greatly simplified functions. Despite these differences, artificial neural networks can reproduce a range of complex and interesting behaviors that will be described in detail in later chapters.

View of a biological neural cell. (Source: http://www.web-books.com/eLibrary/Medicine/Physiology/Nervous/neuron.jpg )

The neuron in Figure 1.7 is an artist’s rendering. Figure 1.8 shows a real neural cell dissected from a rat brain; it closely resembles a human neuron. Simple artificial neurons can be modeled easily and economically by an uncomplicated electronic circuit. It is also fairly easy to model them in the form of a computer algorithm that simulates their activities. The first neural networks were built as specialized electronic machines called perceptrons.

View of biological neurons in rat brain. (Source: http://flutuante.files.wordpress.com/2009/08/rat-neuron.png )

1.3 Construction of First Neural Networks

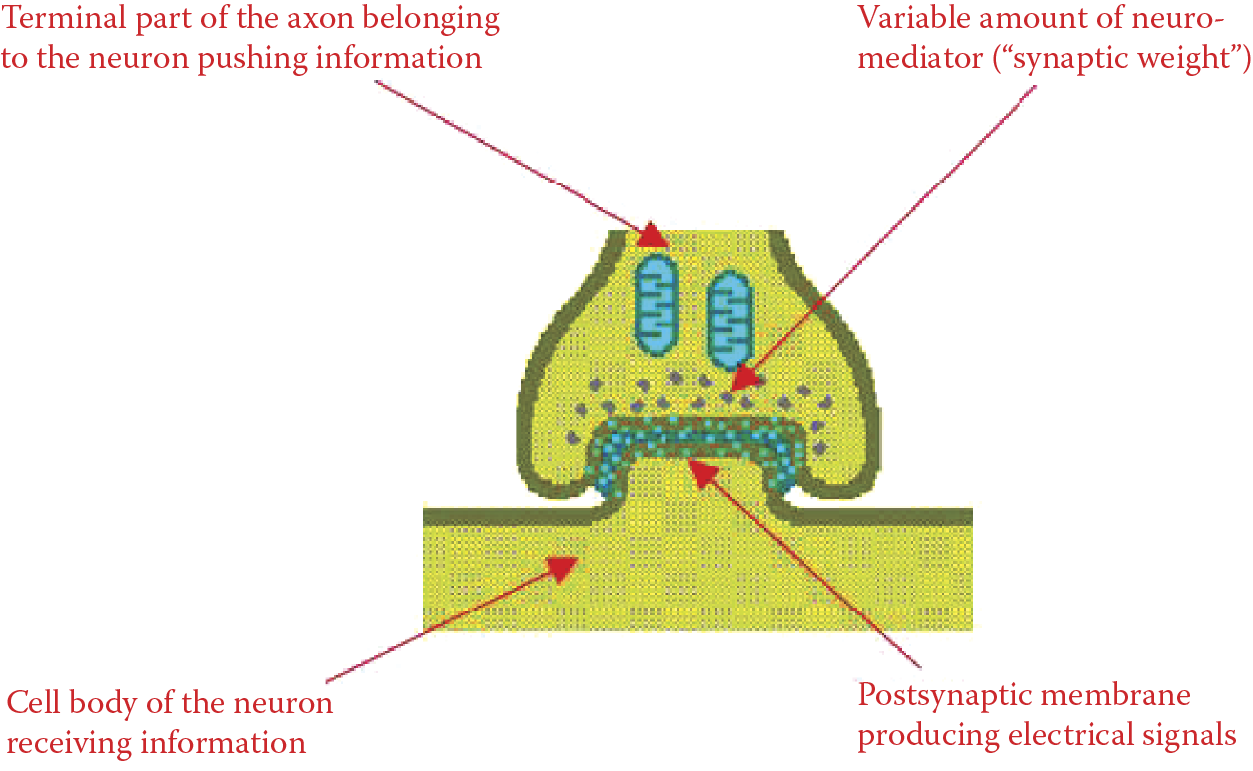

Let us see how biological information was used in the field of neurocybernetics to design economical and easy-to-use neural networks. The creators of the first neural networks understood how biological neurons act. Table P.1 in the Preface lists the Nobel Prizes awarded throughout the twentieth century for discoveries related directly or indirectly to neural cells and their functions. The most astonishing discovery by biologists concerned the process by which one neuron passes a signal to another (Figure 1.9).

Smallest functional part of a neural system: two connected and cooperating neurons. The most important part of the structure is the synapse connecting the two neurons.

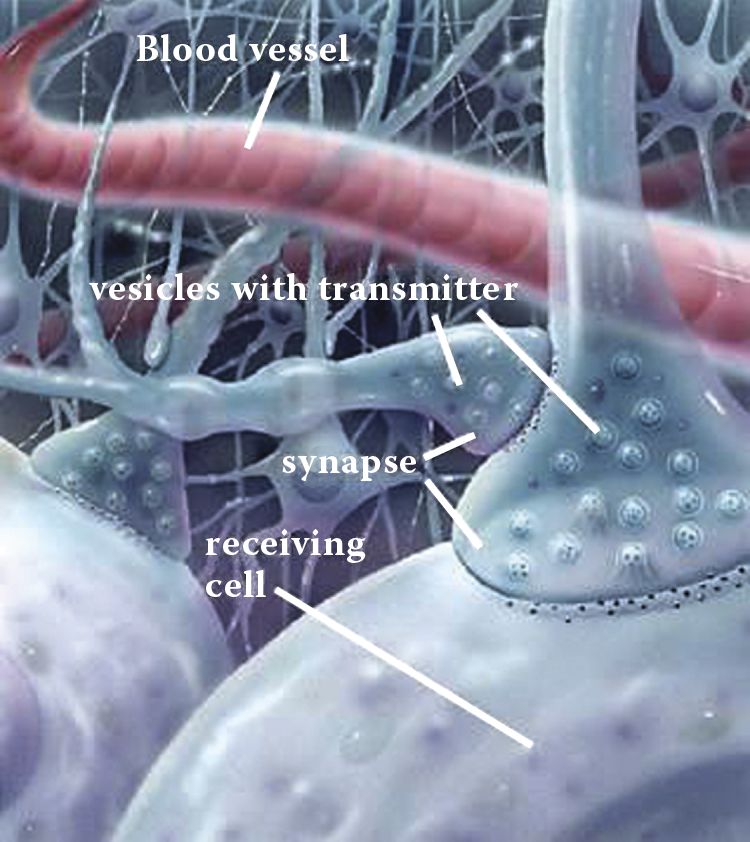

Researchers noted that the most important participants in information processing within the brain were the long and complicated extensions of the cell bodies (axons and dendrites) used for communication between neurons. Synapses are also significant participants: they mediate the passing of information between neurons. Synapses are so small that the resolving power of the optical microscopes typically used in biology was too low to find and describe them; they are barely visible in Figure 1.9. Their structural complexity could be understood only after the invention of the electron microscope (Figure 1.10).

View of synapse reconstructed on the basis of hundreds of electron microscope observations. (Source: http://www.lionden.com/graphics/AP/synapse.jpg )

John Eccles, an Australian neurophysiologist, proved that when a neural signal passes through a synapse, special chemical substances called neuromediators are involved. They are released at the end of the axon of the neuron that transmits the information and travel to the postsynaptic membrane of the receiving neuron (Figure 1.11).

In essence, teaching a neuron depends on the ability of the same signal, sent through the axon of a transmitting cell, to release a greater or smaller quantity of the neuromediator into the synapse that receives the signal. If the brain finds a signal important during the learning process, the quantity of neuromediator released is increased or decreased accordingly. We should remember that the mechanism shown in Figure 1.11 is an extremely simplified version of the complex biological processes that actually occur within a synapse. The best way to appreciate this complexity is to study how synapses transmit information from neuron to neuron and how they change as a brain learns and acquires new information.
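In artificial networks, the quantity of neuromediator a synapse releases is usually represented by a single number, the connection weight, and learning means increasing or decreasing that number. The book introduces its own learning methods in later chapters; the sketch below assumes the classic perceptron learning rule (weight change = learning rate × error × input) purely as a familiar illustration. The names `predict`, `train_step`, and `learning_rate` are hypothetical.

```python
def predict(inputs, weights, bias):
    """Threshold neuron: fire (1) if the weighted sum plus bias is positive."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def train_step(inputs, target, weights, bias, learning_rate=0.5):
    """One perceptron-rule update; returns the new (weights, bias)."""
    error = target - predict(inputs, weights, bias)  # -1, 0, or +1
    # error > 0 strengthens the active connections, error < 0 weakens them,
    # and a correct answer (error == 0) leaves every weight unchanged.
    new_weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
    new_bias = bias + learning_rate * error
    return new_weights, new_bias


# Teach a single neuron the logical AND function.
samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = [0.0, 0.0], 0.0
for epoch in range(20):
    for x, t in samples:
        weights, bias = train_step(x, t, weights, bias)

print([predict(x, weights, bias) for x, _ in samples])  # [0, 0, 0, 1]
```

After a few passes over the four examples the weights stop changing, because every answer is correct and the error term is zero. Only connections that carried a signal (nonzero input) are adjusted.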

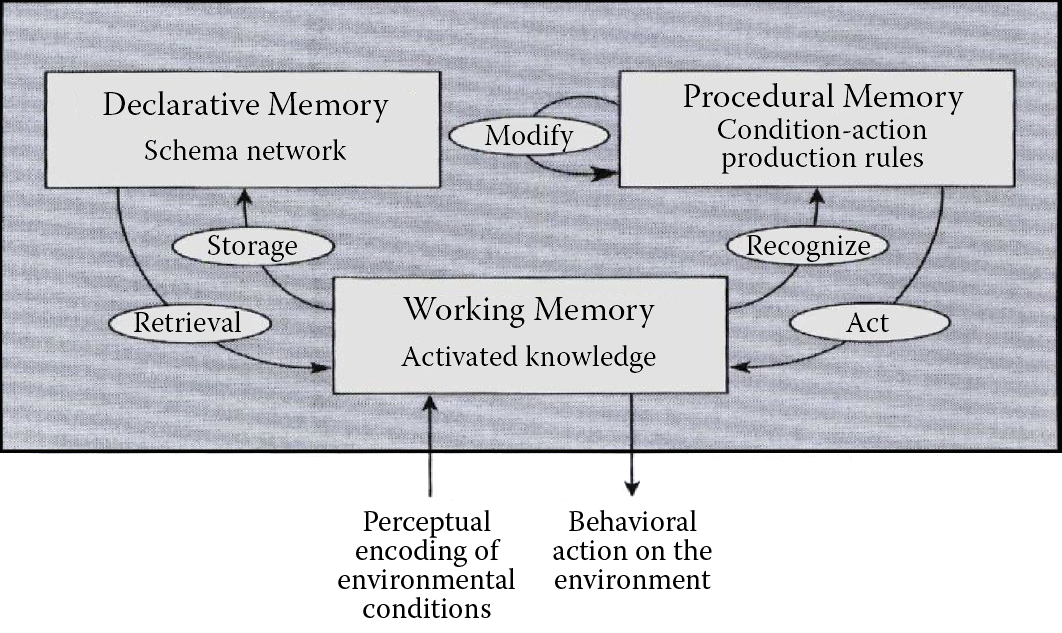

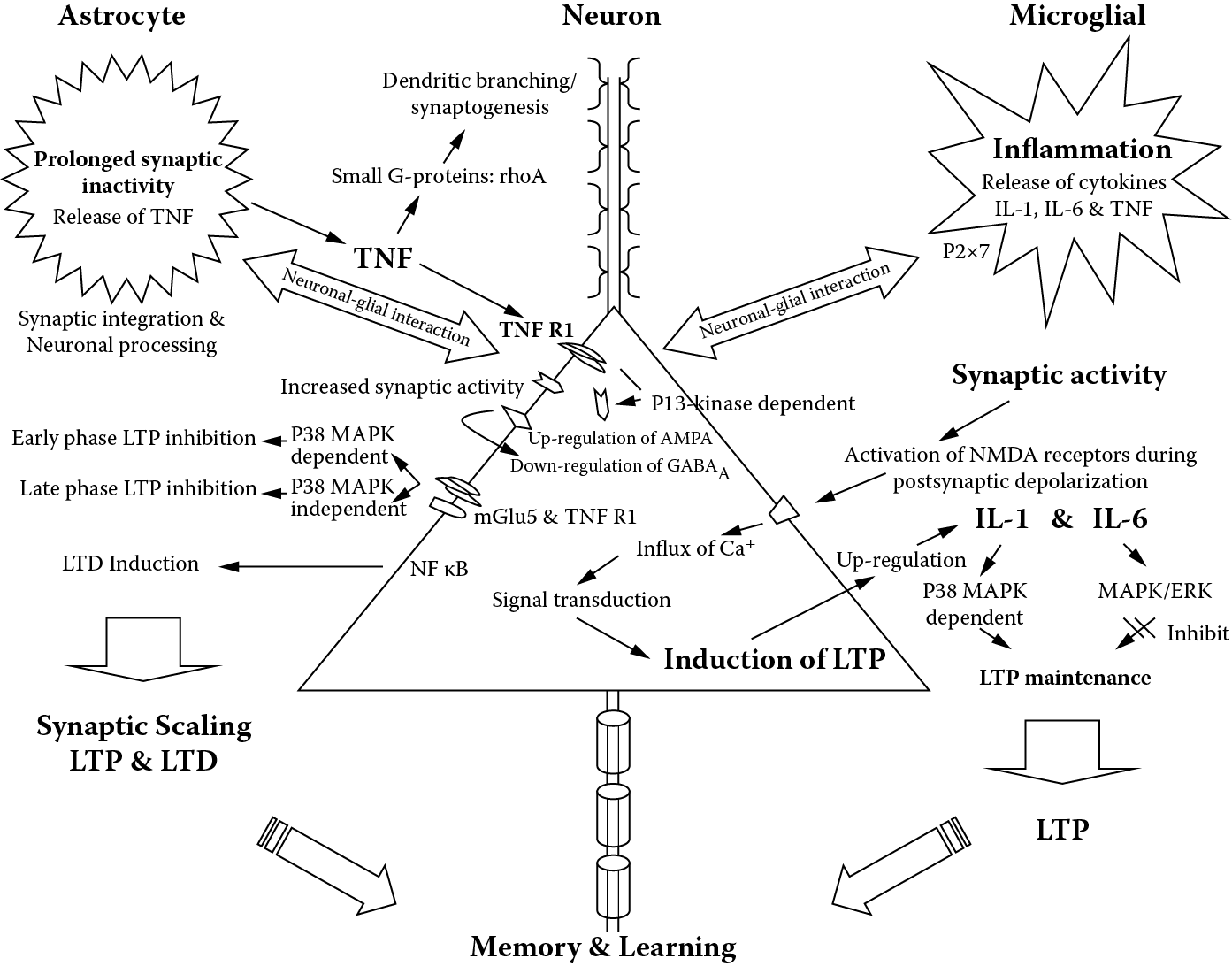

Neural network specialists readily used this information, and the systems they built possessed one vital attribute: the ability to learn. Of course, the complicated process of biological learning, which involves very complex biochemical processes (Figure 1.12), was greatly simplified to design an efficient tool for solving practical computer science problems. Psychologists classify the type of learning used in neural networks as procedural memory. Humans also have other types of memory. Memory and learning processes are illustrated in Figure 1.13.

Biochemical mechanisms of learning and memory. (Source: http://ars.els-cdn.com/content/image/1-s2.0-S0149763408001838-gr3.jpg )

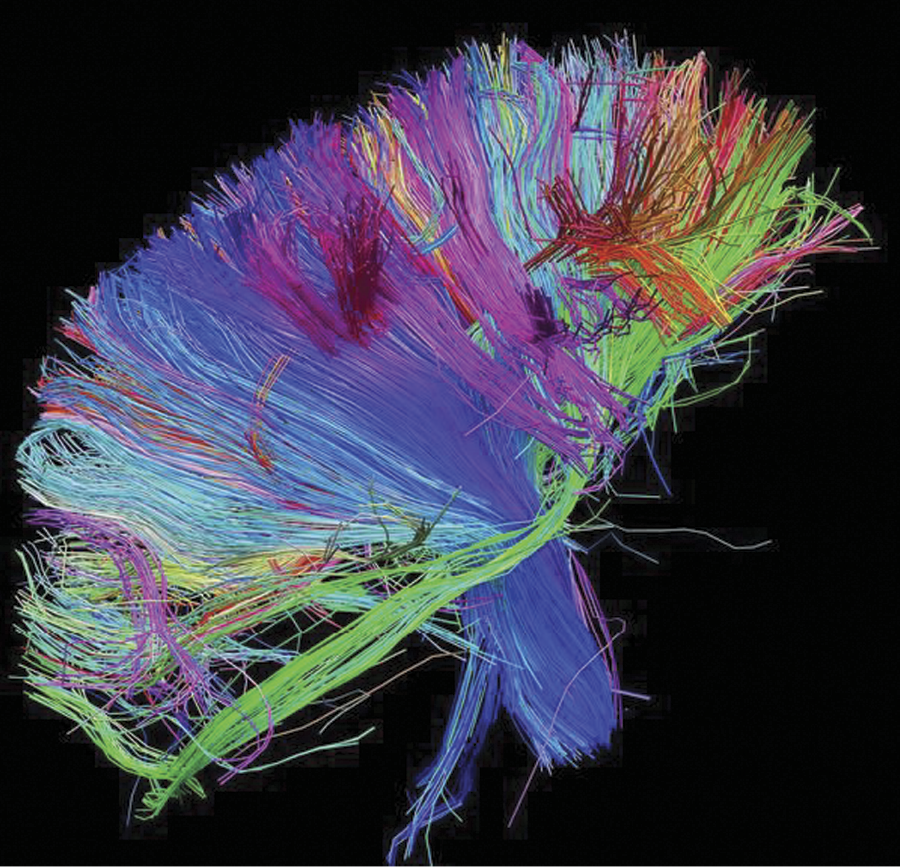

In the 1990s, the identification of the inner structures of the brain led to further development of neural networks. The hard work of many generations of histologists, who analyzed thousands of microscopic specimens and made hundreds of more or less successful attempts to reconstruct the three-dimensional structure of the connections between neural elements, produced useful results in the form of schematics such as Figure 1.14.

Three-dimensional internal structure of connections within a brain. (Source: http://www.trbimg.com/img-504f806f/turbine/la-he-brainresearch18-154.jpg-20120910/600 )

1.4 Layered Construction of Neural Network

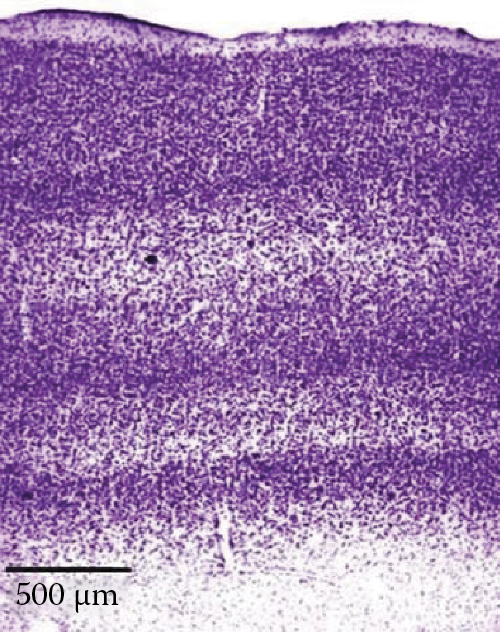

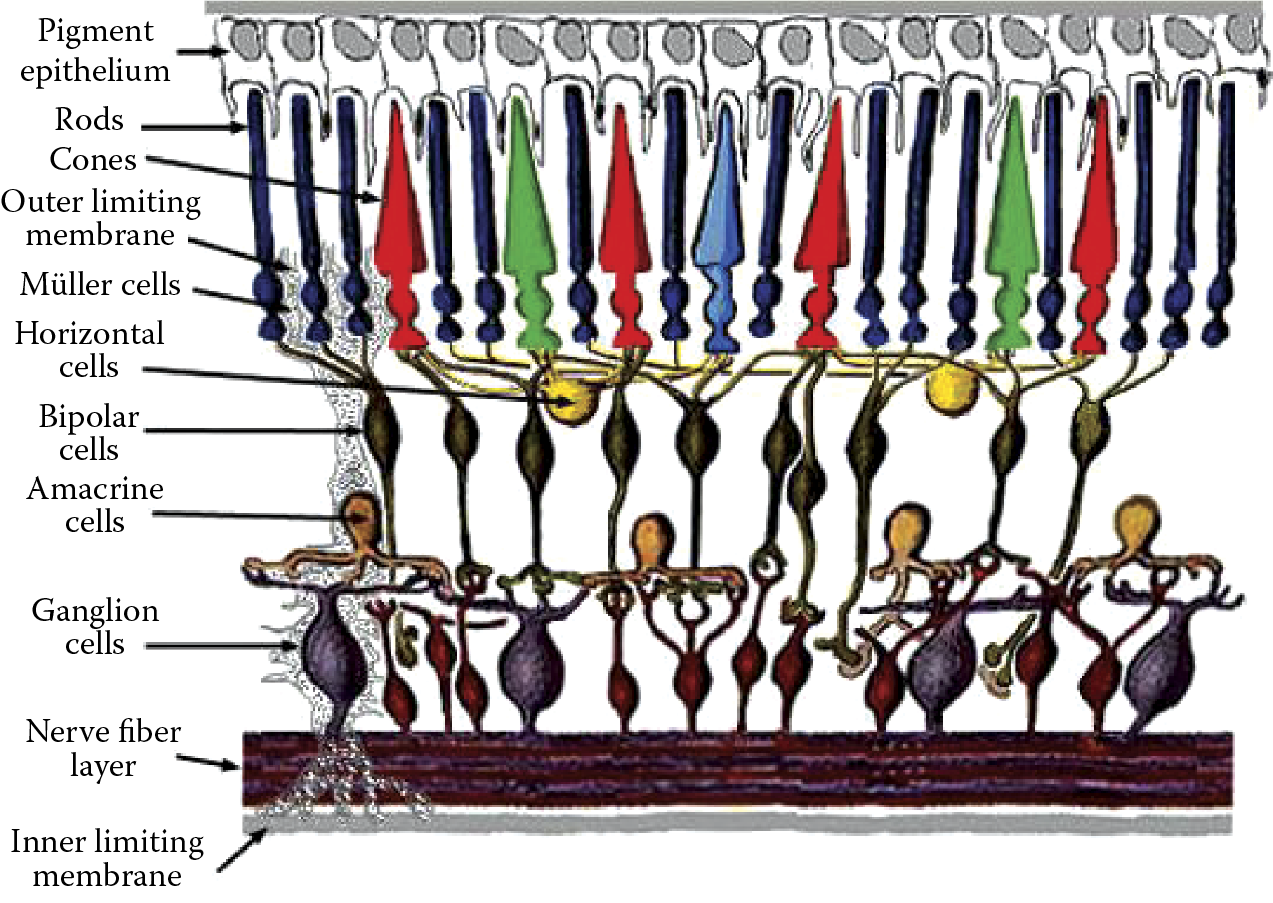

Neural network designers focused mainly on implementing working models that were practical, robust, and drastically simplified. The spatial layouts of neurons and their connections, known from neuroanatomical and cytological research, had to be reduced to an absolute minimum. The regular layer-like patterns formed by neurons in several brain areas, however, had to be followed. Figure 1.15 shows the layered structure of the cerebral cortex. Figure 1.16 illustrates the layered structure of the retina of the eye.

Layered structure of human brain cortex. (Source: http://hirnforschung.kyb.mpg.de/uploads/pics/EN_M2_clip_image002_01.jpg )

Layered structure of the retina of the eye. (Source: http://www.theness.com/images/blogimages/retina.jpeg )

Designing neural networks in layers is technically easy. At best, neural networks are biologically “crippled” models of actual tissues, yet they are functional enough to produce results that are fairly correct, at least in the context of neurophysiology.

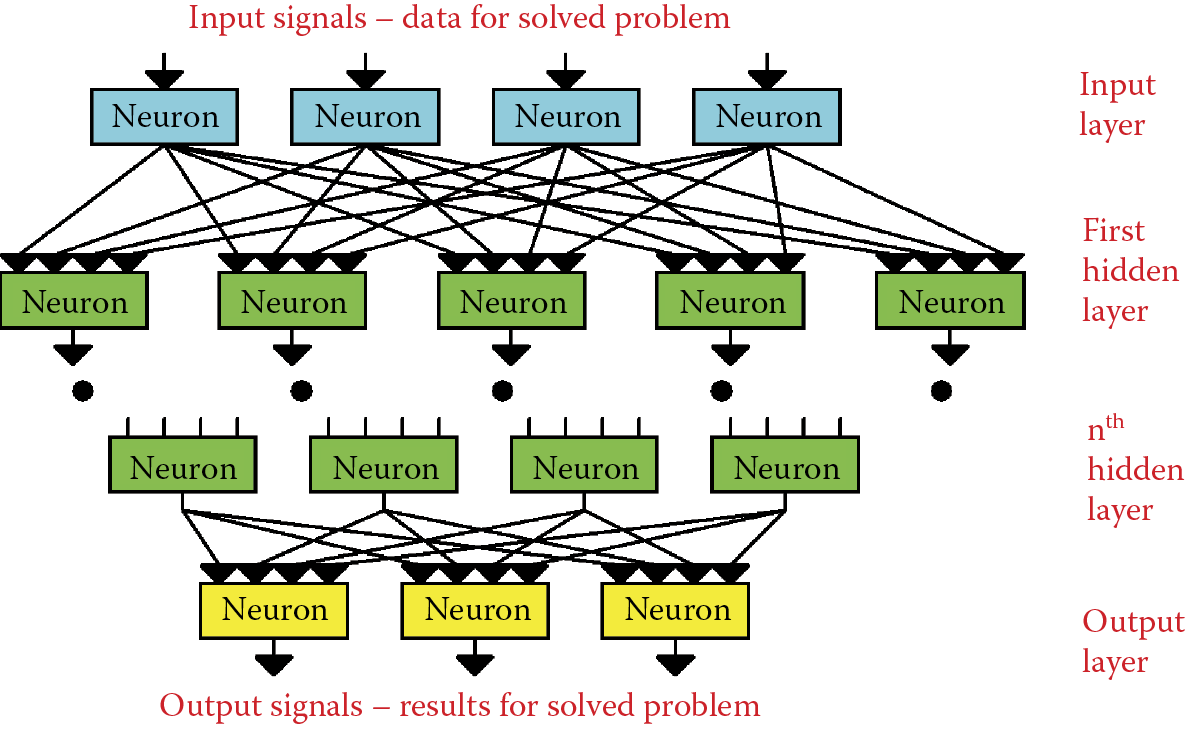

According to the popular movie Shrek, “Ogres are like onions. They have layers.” Like onions, neural networks also have layers. A typical neural network has the structure shown in Figure 1.17. The main problem for a designer is to set up and maintain the connections between the layers. The pattern of neural connections in a brain is very complicated, and its details differ from one brain area to another. The first topological brain map, created at the beginning of the twentieth century, divided the cortex into regions that share the same pattern of cells and connections (Figure 1.18). The original map marked similar regions with the same color; different colors indicated differences. The map shows what are called Brodmann’s areas. Brodmann divided the cortex into 52 regions. Today we treat brain structures more subtly, but Brodmann’s scheme illustrates the problem we face in deciding how neurons should be connected into a network: the answer varies according to the brain area involved.

Brodmann’s map of brain regions with different cell connections. (Source: http://thebrain.mcgill.ca/flash/capsules/images/outil_jaune05_img02.jpg )

If our sole task is to build an artificial neural network, it may be wise to adapt its connection structure to the single problem the network is meant to solve, because a well-chosen structure can greatly increase the speed of network learning. The difficulty in most cases is that we do not know in advance the best way to solve the problem. We cannot even guess which algorithms are suitable and which ones the network should employ after learning, so network elements cannot be selected a priori. Therefore, the decisions about connecting layers and single elements in networks are arbitrary. Usually each element of a layer is connected to every element of the next layer. Such a homogeneous, fully connected network reduces the effort required to define it. However, this design increases computing complexity: more memory or more complex chips may be needed to recreate all the connections between elements.

It is worth mentioning that without such simplification, defining a network would require thousands of parameters, and deploying such a structure would be a programmer’s worst nightmare. In contrast, using fully connected elements requires no special effort from the designer, and the practice has become almost routine and harmless because the learning process eliminates unnecessary connections.
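The fully connected, layered arrangement described above can be sketched in a few lines. This is a minimal sketch under stated assumptions: sigmoid activations, randomly initialized weights, and plain Python lists; the layer sizes and the helpers `make_network`, `forward`, and `count_connections` are hypothetical choices for illustration. It shows why full connectivity is easy to define (only the list of layer sizes is needed) but costly, since the number of connections grows with the product of adjacent layer sizes.

```python
import math
import random


def make_network(layer_sizes, seed=0):
    """Create a fully connected layered network (hypothetical helper).

    For each pair of adjacent layers, every neuron in the next layer gets
    one weight per neuron in the previous layer, plus a bias.
    """
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [rng.uniform(-1, 1) for _ in range(n_out)]
        layers.append((weights, biases))
    return layers


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def forward(layers, inputs):
    """Propagate a signal layer by layer; each layer sees only the previous layer's outputs."""
    signal = list(inputs)
    for weights, biases in layers:
        signal = [sigmoid(sum(w * x for w, x in zip(row, signal)) + b)
                  for row, b in zip(weights, biases)]
    return signal


def count_connections(layer_sizes):
    """Number of weights (connections) between adjacent, fully connected layers."""
    return sum(a * b for a, b in zip(layer_sizes[:-1], layer_sizes[1:]))


sizes = [4, 6, 3]  # input layer, hidden layer, output layer
net = make_network(sizes)
print(len(forward(net, [0.5, 0.1, 0.9, 0.0])))  # 3 outputs
print(count_connections(sizes))                  # 4*6 + 6*3 = 42
print(count_connections([100, 100, 100]))        # 20000
```

Three layers of only 100 elements each already require 20,000 connections to be stored and updated, which is the memory cost the text mentions.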

1.5 From Biological Brain to First Artificial Neural Network

In summary, the first artificial neural networks had strong foundations in the anatomy, physiology, and biochemistry of the human brain. The designers of neural networks did not attempt to make exact copies of brains; instead, they treated the brain as an inspiration. Therefore, the construction and principles of artificial neural networks applied in practice are not exact reflections of the activities of biological structures.

Basically, we can say that the foundations of modern neural networks consist of certain elements of biological knowledge that are well known and serve as sources of inspiration. Neural networks do not duplicate the precise patterns of brains. The structure designed by a neurocybernetic scientist is entirely a product of computer science, because neural network models are created and simulated on ordinary computers. A graphical metaphor of this process, assembled by students of Akademia Górniczo-Hutnicza in Krakow, shows the first author as an explorer of neural networks (Figure 1.19).

We must remember that throughout the evolution of neural networks, all biological knowledge must be considered thoroughly and simplified before it can serve as a basis for constructing artificial neurocybernetic systems. This fact fundamentally influences the properties of neural networks. Contrary to appearances, it also determines their properties and capabilities as tools that help us understand the biological processes of our own brains. We will address this issue in Section 1.7.

While preparing this book, we could not ignore the remarkable progress of brain research in the past decade. Accordingly, ideas about when and for what purpose neural networks should be applied have also evolved. In the following sections, we discuss current problems in human nervous system research and try to demonstrate the role neural networks may play in future research.

1.6 Current Brain Research Methods

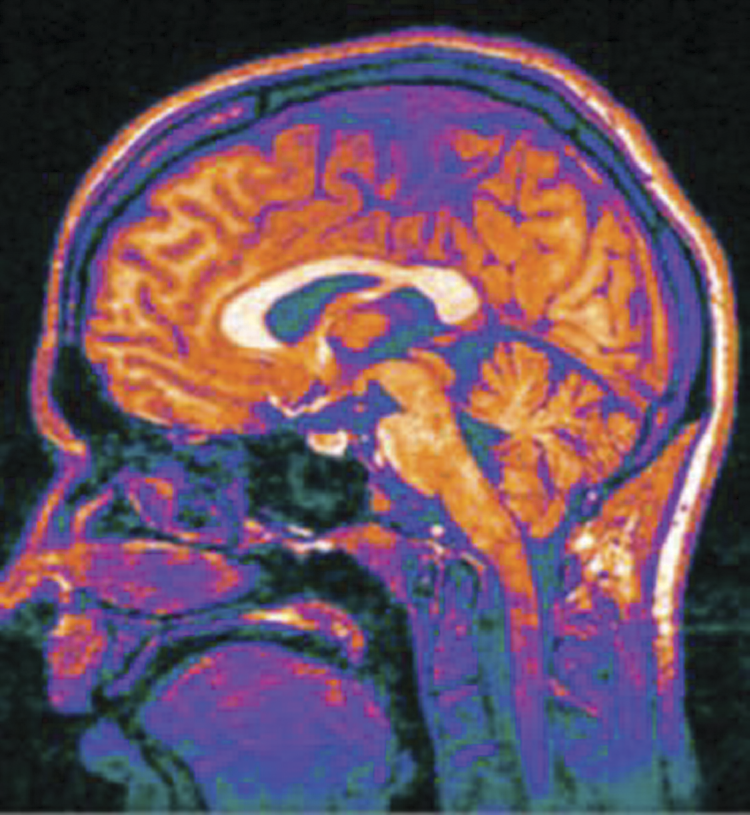

The variety of tools available to researchers in the twenty-first century would have been unimaginable to the pioneers who gave us the basic knowledge about the structure and function of the nervous system. Their work underpinned the creation of the first neural networks. The skull, or more precisely the cranium, was impenetrable to early observation techniques. The interior of the brain could be viewed only after the death of a patient, and postmortem examination yielded no insight into its activity. Anatomists tried to analyze the core information-processing structures and their connections by dissecting brains into pieces. With modern technology, the internal brain structures of living humans can be observed and analyzed with extraordinary precision and without harm, as shown in Figure 1.20. Two techniques that led to improved imaging of the human brain and its internal structures are computerized tomography (CT) and nuclear magnetic resonance (NMR).

Internal brain structures of living humans can be analyzed with astounding precision. (Source: http://www.ucl.ac.uk/news/news-articles/1012/s4_cropped.jpg )

In early March 2013, two terabytes of unique data hit the web: the first batch of images from a massively ambitious brain-mapping effort called the Human Connectome Project (Van Essen 2013). Thousands of images showed the brains of 68 healthy volunteers, with different regions glowing in bright jewel tones. These data, freely available for download via the project’s site, revealed the parts of the brain that act in concert to accomplish a task as simple as recognizing a face. The project leaders said their work was enabled by advances in brain-scanning hardware and image-processing software.

“It simply wouldn’t have been feasible five or six years ago to provide this amount and quality of data, and the ability to work with the data,” said David Van Essen, one of the project’s principal investigators and head of the anatomy and neurobiology department at the Washington University School of Medicine in St. Louis. Based on a growing understanding that the mechanisms of perception and cognition involve networks of neurons that sprawl across multiple regions of the brain, researchers have begun mapping those neural circuits. While the Human Connectome Project looks at connections among brain regions, a $100 million project announced in April 2013 and called the BRAIN Initiative will focus on the connectivity of small clusters of neurons.

As of this writing, only the 5-year Human Connectome Project has delivered data. The $40 million project funds two consortia; the larger, international group led by Van Essen and Kamil Ugurbil of the University of Minnesota will eventually scan the brains of 1200 twin adults and their siblings. The goal, says Van Essen, is “not just to infer what typical brain connectivity is like but also how it varies across participants, and how that relates to their different intellectual, cognitive, and emotional capabilities and attributes.” To provide multiple perspectives on each brain, the researchers employ a number of cutting-edge imaging methods. They start with magnetic resonance imaging (MRI) scans to provide basic structural images of the brain using both a 3-tesla machine and a next-generation 7-T scanner. Both provide extremely high-resolution images of the convoluted folds of the cerebral cortex.

The next step will be a series of functional MRI (fMRI) scans to detect blood flow throughout the brain and show brain activity in subjects at rest and engaged in seven different tasks (including language, working memory, and gambling exercises). The fMRI is “souped up”: Ugurbil pioneered a technique called multiband imaging that takes snapshots of eight slices of the brain at a time instead of just one. To complement the data on basic structure and blood flow within the brain, each participant will be scanned using a technique called diffusion MRI that tracks the movements of water molecules within brain fibers. Because water diffuses more rapidly along the lengths of the fibers that connect neurons than across them, this technique allows researchers to trace the connections between sections of the brain directly.

The Connectome team had Siemens customize its top-of-the-line MRI machine to let the team alter its magnetic field strength more rapidly and dramatically to produce clearer images. Each imaging modality has its limitations, so combining them gives neuroscientists their best view yet of what goes on inside a human brain. First, however, all that neuroimaging data must be purged of noise and artifacts and organized into a useful database.

Dan Marcus, director of the Neuro-informatics Research Group at the Washington University School of Medicine, developed the image-processing software that automatically cleans up the images and precisely aligns the scans so that a single “brain ordinate” refers to the same point on both diffusion MRI and fMRI scans. That processing is computationally intensive, says Marcus: “For each subject, that code takes about 24 hours to run on our supercomputer.”

Finally, the team adapted open-source image analysis tools to allow researchers to query the database in sophisticated ways. For example, a user can examine a brain simply through its diffusion images or overlay that data on a set of fMRI results. Some neuroscientists think that all this data will be of limited use. Karl Friston, scientific director of the Wellcome Trust Centre for Neuroimaging at University College London (2013), applauds the project’s ambition, but criticizes it for providing a resource “without asking what questions these data and models speak to.” Friston would prefer to see money spent on hypothesis-directed brain scans to investigate “how a particular connection changes with experimental intervention or disease.”

The Connectome team thinks the open-ended nature of the data set is an asset, not a weakness. They hope to provoke research questions they never anticipated and in fields other than their own. “You don’t have to be a neuroscientist to access the data,” says Marcus. “If you’re an engineer or a physicist and want to get into this, you can.”

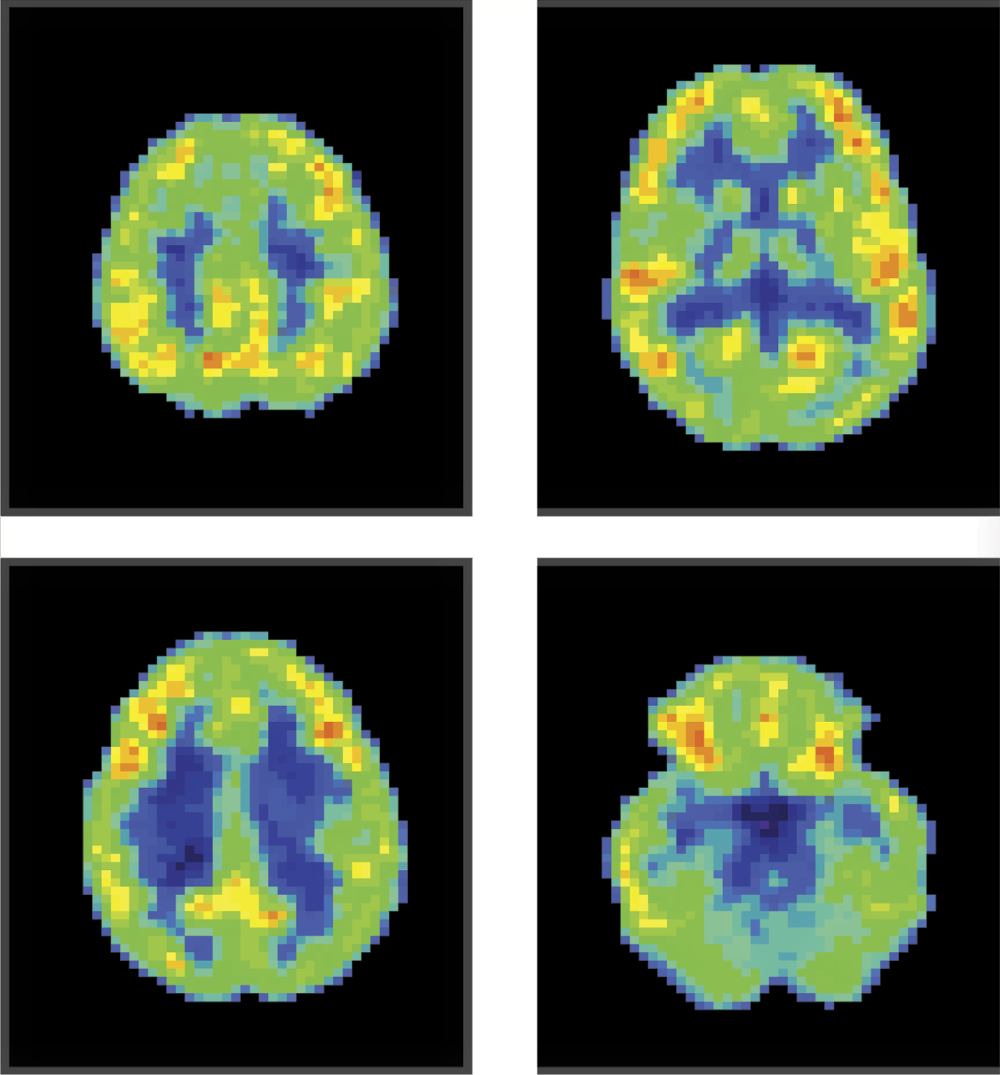

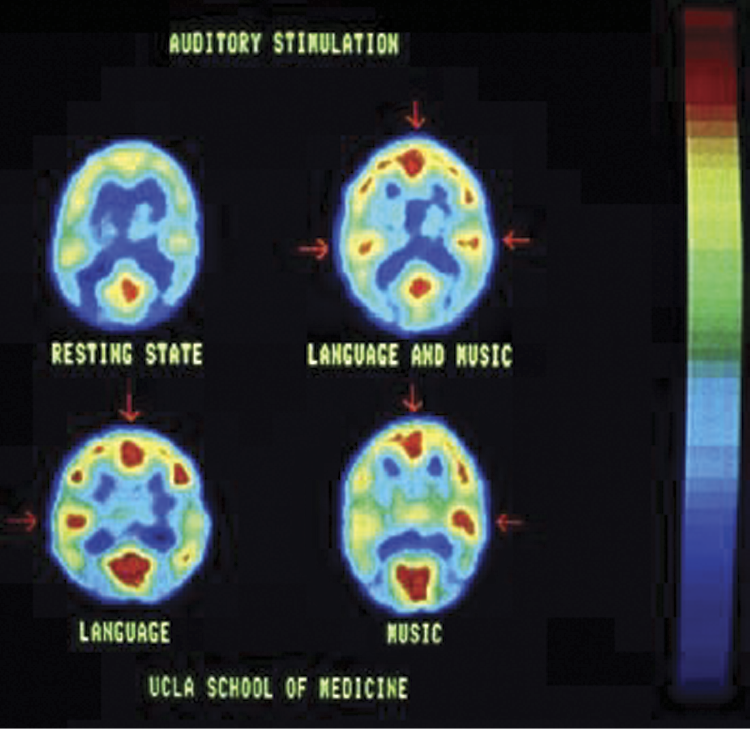

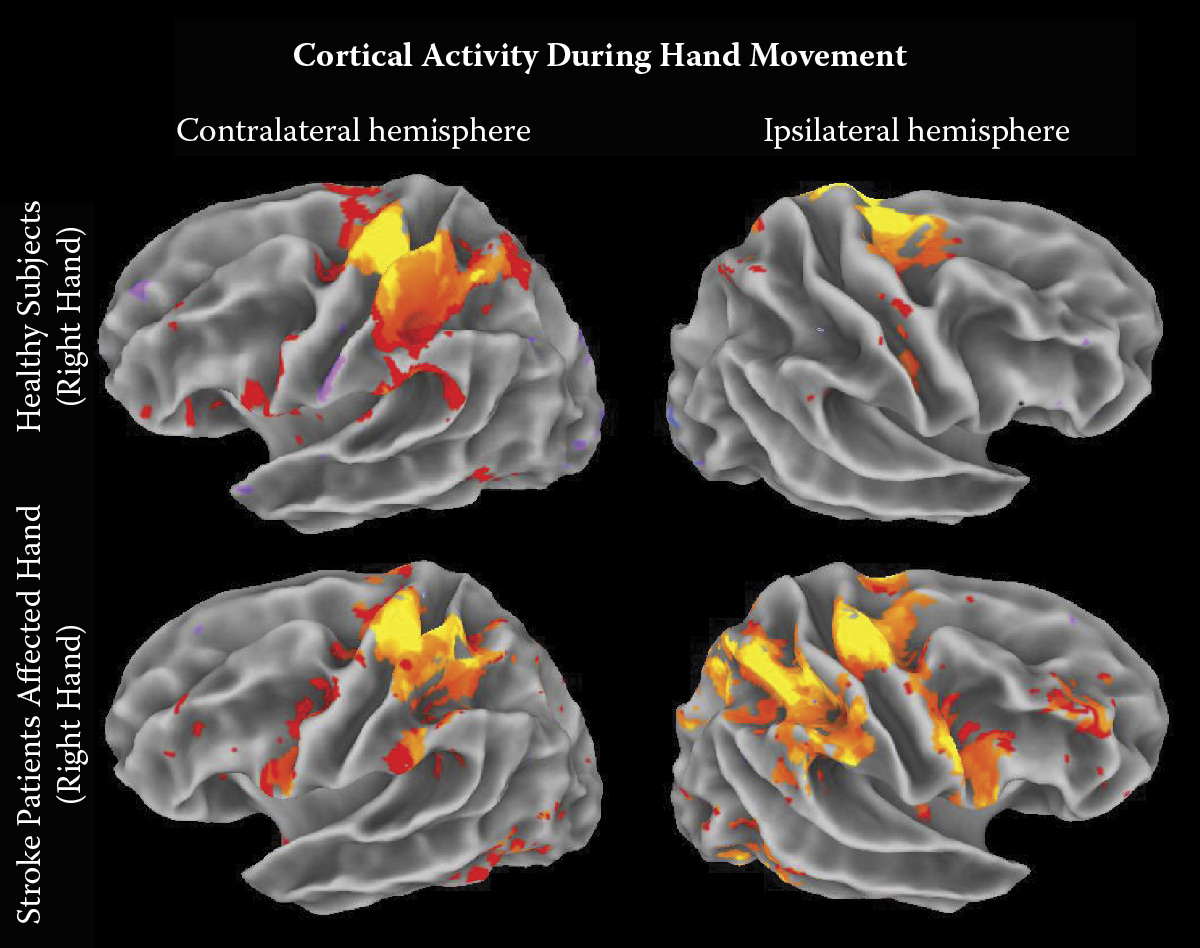

Diagnostic imaging allows us to detect and locate brain areas that exhibit high levels of activity at certain moments (see Figure 1.21). Linking such areas with the type of activity a person is performing at a specific moment allows us to infer that certain brain structures are specialized to provide specific responses. This has led to a better understanding of the functional aspects of neural structures.

PET image showing brain activity during performance of certain tasks by research subject. (Source: http://faculty.vassar.edu/abbaird/resources/brain_science/images/pet_image.jpg )

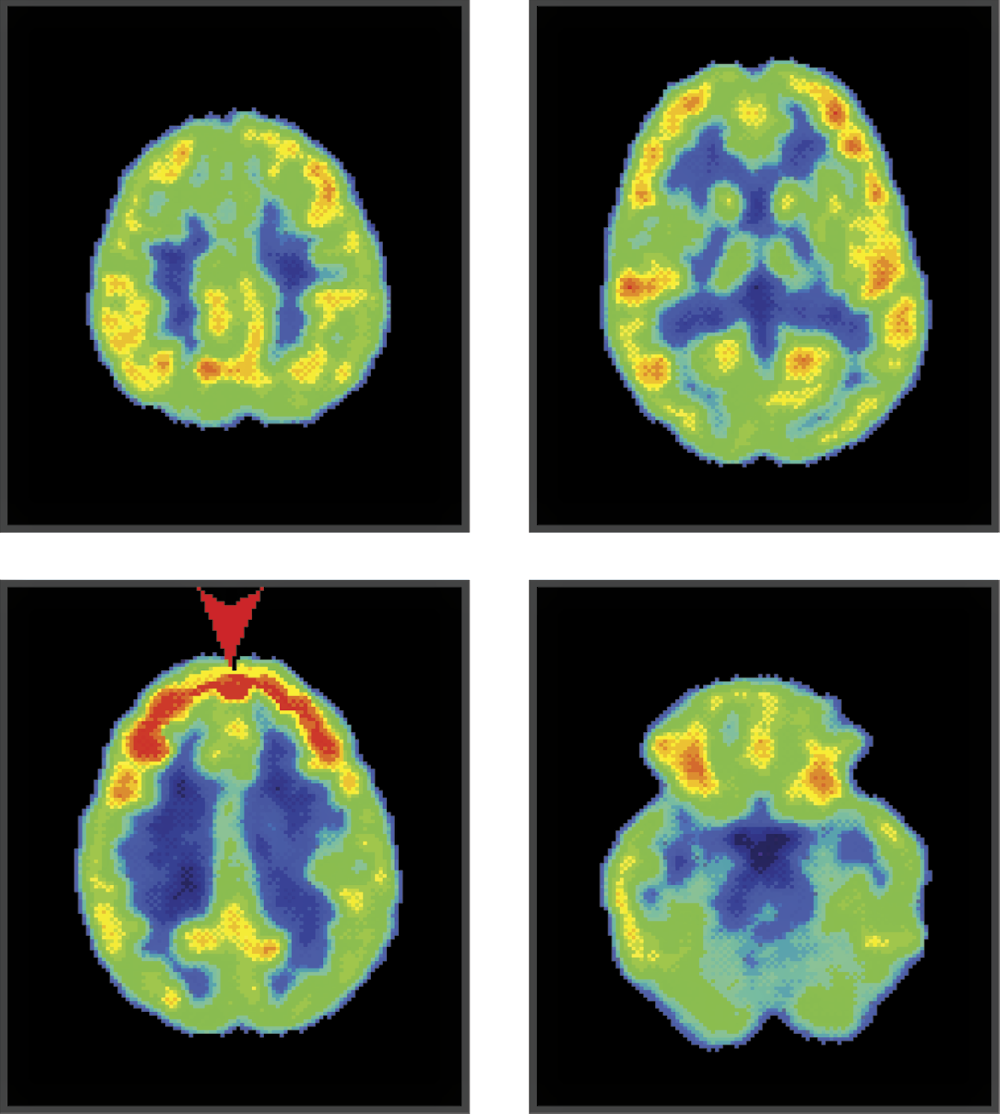

Figure 1.22 shows four cross-sections at different heights, obtained by positron emission tomography (PET), showing the interior of a human brain. Its owner is awake, is not focused on anything specific, and has no task to accomplish. We can see that most of his brain is inactive (shown as blue or green areas in the original scan; places where neurons work were shown as yellow and red areas). Why does the scan reveal any activity at all? Although our test subject is relaxed, he continues to think; he moves, even if his movements are not noticeable; he feels cold or warmth. His activities are scattered and weak. If he focuses on solving a difficult mathematical problem, the brain areas responsible for abstract thinking immediately increase their activity, resulting in the red areas shown in Figure 1.23.

PET image of a human brain focused heavily on a mathematical equation. Intense spots in the frontal lobe (shown in red on the original scan) are activated by this exercise.

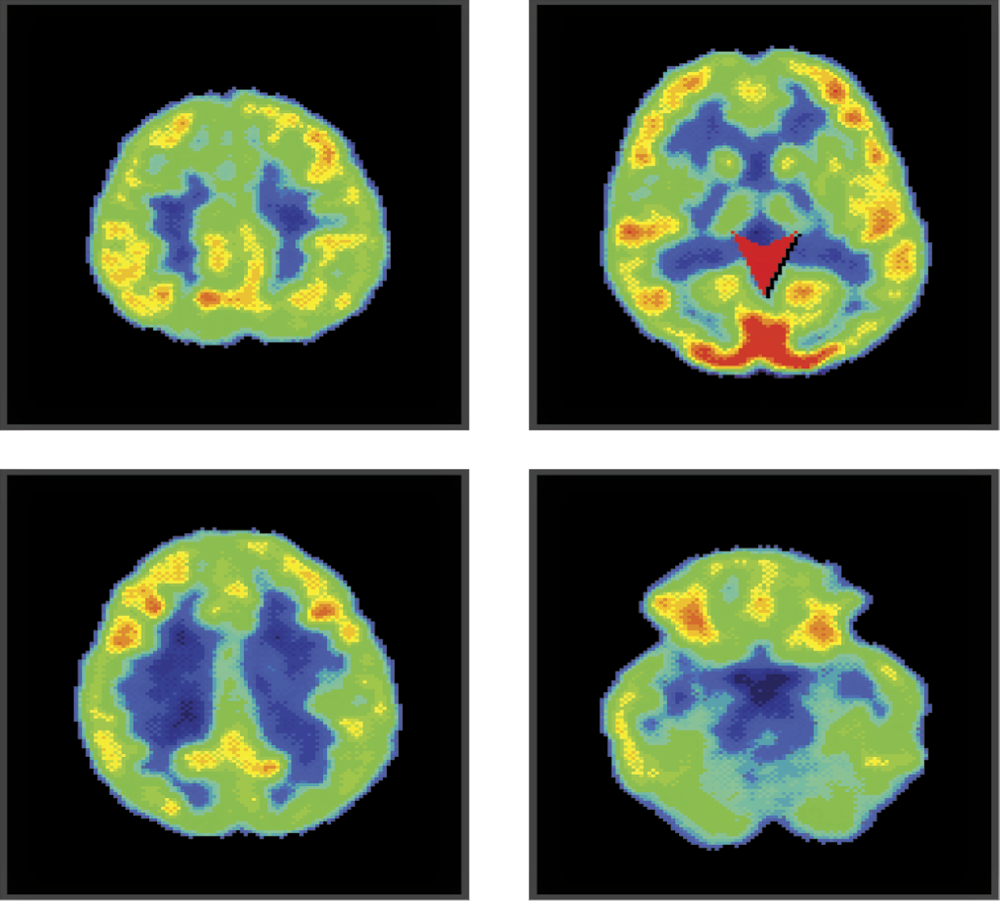

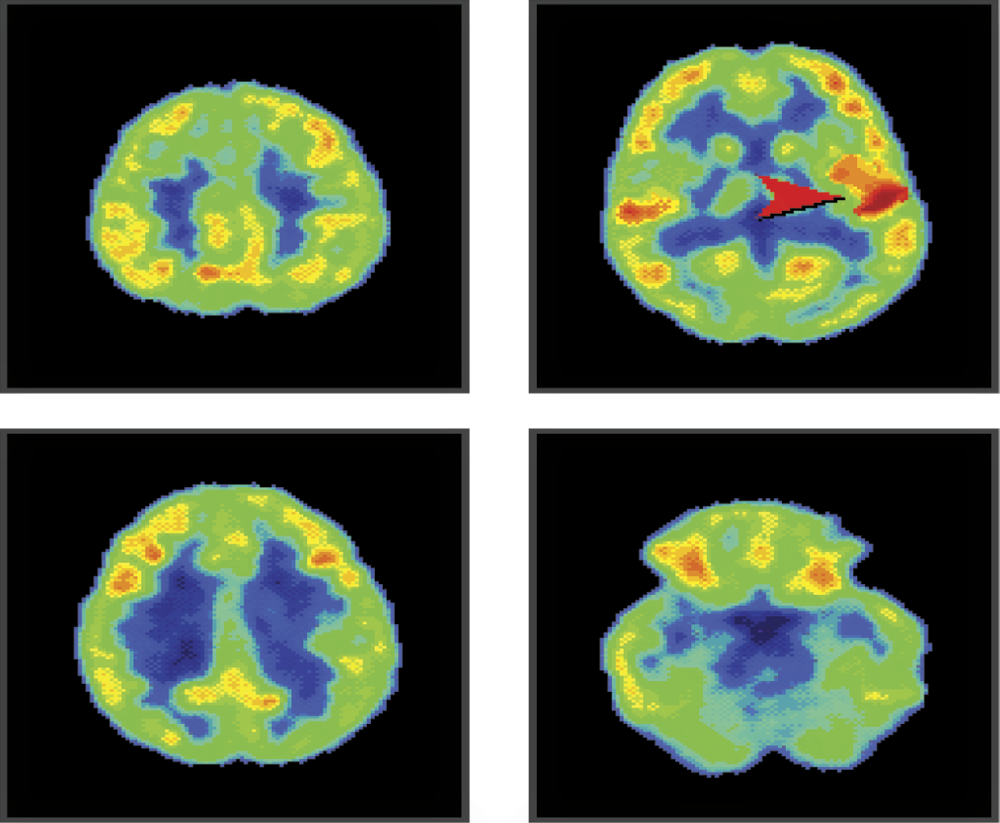

PET imaging can be used to study both minimal activity and exhausting effort. If a picture suddenly draws the attention of our research subject, millions of neurons in the rear part of his brain are activated to analyze the picture, as shown in Figure 1.24. During listening and speech comprehension, areas responsible for analyzing and remembering sounds are activated in the temporal lobes, as shown in Figure 1.25.

PET image of brain activity while a subject watches something interesting. We can see spots marking activity in rear lobes (arrow) responsible for acquisition and identification of visual signals.

Listening to a conversation activates mostly the temporal lobes because they are responsible for signal analysis. Note that speech comprehension areas are located on only one side of the brain; listening to music changes the color on both sides of the image.

The methods briefly described here allow us to record temporary brain states and explain how the brain is organized. In fact, combinations of various imaging techniques allow us to view internal structures during many types of activities and reveal correlations between certain brain areas and an individual’s corresponding actions (Figure 1.26).

Tracking changes connected with performing specific tasks. (Source: http://www.martinos.org/neurorecovery/images/fMRI_labeled.png )

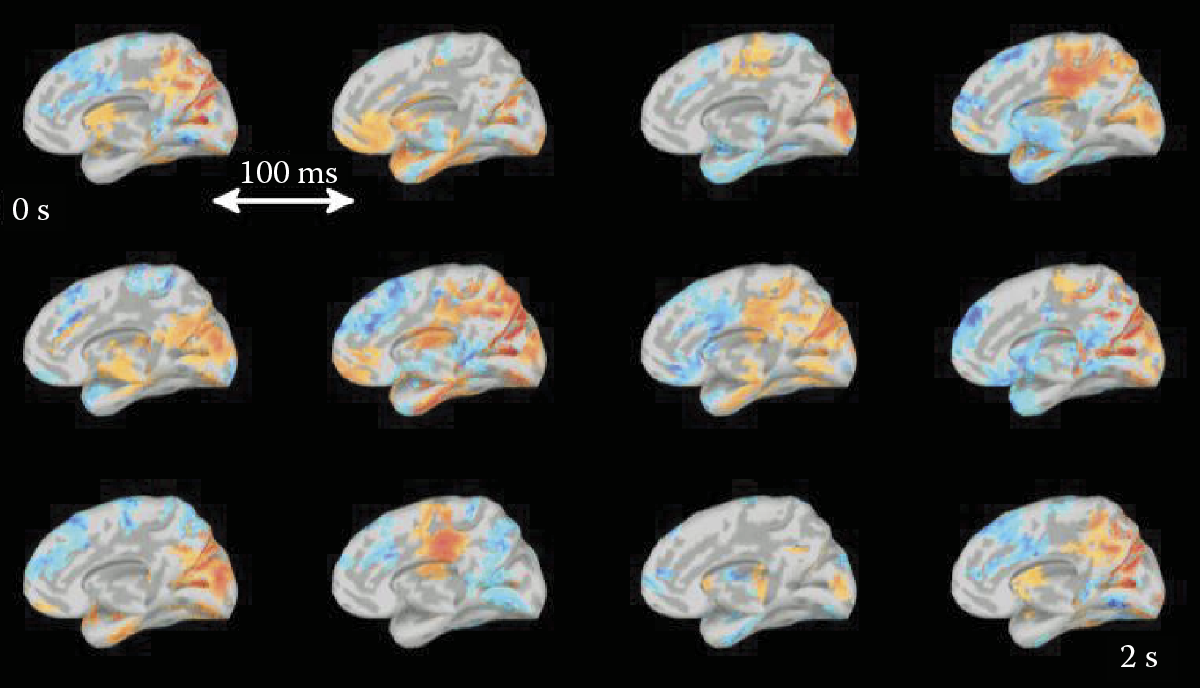

These changes can be recorded dynamically (creating something like a movie or computer animation), which provides an enormous advantage over static pictures. The process is similar to dividing the motions of a runner into phases, or to the serial snapshots used to produce animated movies. Figure 1.27 shows an animation of brain structures. Presenting such still images of anatomical features in sequence gives an illusion of movement that allows us to track which structures activate, and in what order, during a specific action.

Dynamic processes occurring within a brain. (Source: http://www.bic.mni.mcgill.ca/uploads/ResearchLabsNeuroSPEED/restingstatedemo.gif )

1.7 Using Neural Networks to Study the Human Mind

The examples presented above were obtained by modern methods of brain examination. The 1990s, also known as “the decade of the brain,” revealed so much information that the human brain might no longer seem mysterious. That impression is misleading. The structure of the brain has been explored thoroughly, and we have some understanding of its functionality. However, a problem common to studies of highly complex objects has appeared: reconstructing the whole from a vast amount of separate, distributed, and specific information.

One very efficient technique used in modern science is decomposition: dividing an object into parts. Do you have trouble describing a huge system? Divide it into several hundred pieces and investigate each piece! Are you at a loss to understand a complex process? Find several dozen simple subprocesses that make up the complex process and investigate each one separately. This method is very effective. If the separated subsystems or subprocesses still resist your scientific methods, you can always divide them into smaller and simpler parts. However, decomposition creates other difficulties: who will compile the results of all these research fragments? How, when, and in what form will the results be compiled?

In analyzing very complex systems, the synthesis of results is not easy, especially if the results were obtained by various scientific techniques. It is hard to compose a whole picture by integrating anatomical information derived from case descriptions with physiological data and with biochemical results generated by analytical devices. Computer modeling can serve as a common denominator for processing information obtained from various sources. It has been shown in practice that a computer model describing anatomy can be joined with computer records of physiological processes and computer descriptions of biochemical reactions. The current approach attempts to build our entire body of knowledge from separate investigations of different biological systems by various scientists.

With reference to neural systems and in particular to the human brain, various computer models may be used to combine results of many different research projects in an effort to achieve an integrated explanation of the functionality of these extraordinarily complex systems. Some of those models will be explained later in the text. In this preliminary chapter, we would like to state that the easiest approach is to attempt to describe our knowledge of the brain in relation to artificial neural networks. Obviously, the human brain is far more complex than the simplified neural networks.

However, it is common in science for simplified models to reveal rules that can be extrapolated to a larger scale. For example, if a chemist carries out a reaction in a small sample, we are entitled to presume that the same reaction takes place under similar conditions in a vast ocean or on a distant star, and the extrapolation is usually valid. Moreover, it is much easier to handle a small laboratory sample than an ocean or a star. In the search for scientific truth, simplification is often the key to success.

That is why, despite the simplicity of neural networks, researchers use them more and more frequently to model human brain processes in pursuit of better comprehension. To appreciate how far this tool simplifies reality, we will compare the complexities of artificial neural networks and of animal and human neural systems.

1.8 Simplification of Neural Networks: Comparison with Biological Networks

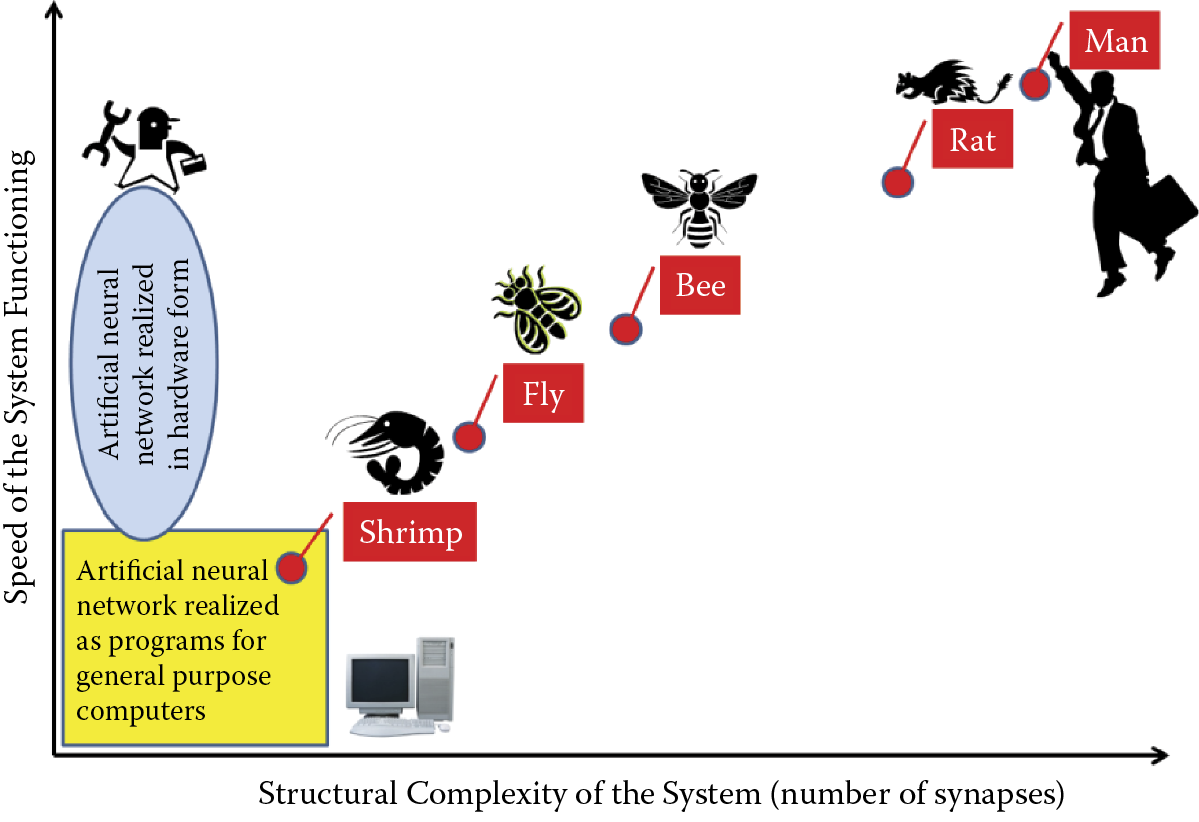

Artificial neural networks are very simplified in comparison with the neural systems of most living creatures, as Figure 1.28 shows. Typical neural networks (usually programs for general-purpose computers) appear at the bottom left, at the lowest levels of complexity and functioning speed. Both axes of this plot use a logarithmic scale to accommodate the huge distance between the smallest and largest values for various organisms.

Localization of artificial neural networks and selected real neural systems showing relation between structural complexity of a system and speed of functioning.

The structural complexity, measured by the number of synapses in a neuroinformatic system, can vary from 10² for a typical artificial neural network used for technological purposes up to 10¹² for the human brain. For artificial neural networks, this dimension is limited to around 10⁵ or 10⁶ by computer memory, whereas the corresponding values for the fairly simple brains of flies and bees are 10⁸ and 10⁹ synapses, respectively. In comparison with these neural systems, mammalian central nervous systems have huge numbers of synapses (10¹¹ for rats and 10¹² for humans).
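Because these counts span ten orders of magnitude, they are easiest to compare as powers of ten, which is what the logarithmic axes of Figure 1.28 do. The short sketch below only restates the synapse counts quoted in the paragraph above; the dictionary labels and the helper `orders_of_magnitude` are illustrative choices, not part of the original figure.

```python
import math

# Synapse counts quoted in the text; only the orders of magnitude matter.
synapses = {
    "artificial network (typical)": 1e2,
    "artificial network (upper limit)": 1e6,
    "fly": 1e8,
    "bee": 1e9,
    "rat": 1e11,
    "human": 1e12,
}


def orders_of_magnitude(smaller, larger):
    """Number of powers of ten separating two counts, rounded to an integer."""
    return round(math.log10(larger / smaller))


for name, count in synapses.items():
    # A logarithmic axis places each system at its exponent.
    print(f"{name:34s} 10^{orders_of_magnitude(1, count)}")

human = synapses["human"]
print(orders_of_magnitude(synapses["artificial network (upper limit)"], human))  # 6
print(orders_of_magnitude(synapses["artificial network (typical)"], human))      # 10
```

By this measure the human brain exceeds artificial networks by roughly six to ten orders of magnitude, depending on whether it is compared with the largest or the most typical networks.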

Note the almost linear relation between the structural complexity of biological neural systems and their speed of functioning (Figure 1.28). This general rule results from the massively parallel operation of biological neural systems. When a system consists of more elements (more neurons and more synapses) and all these elements work together simultaneously, the speed of data processing increases in proportion to the structural size of the system.

For artificial neural networks, the speed of system functioning depends on how the network is realized. When a neural network is realized as a program simulating neural activity (e.g., learning or problem solving) on a general-purpose computer, its speed is limited by the performance of the processor. Clearly, no program can speed processing beyond the hardware limitations. Therefore, artificial neural networks realized as programs on general-purpose computers are rather slow.

An artificial neural network can achieve high speed when it is implemented in hardware (see the ellipse in Figure 1.28). In the literature and on the Internet, readers can find many examples of neural networks implemented as specialized electronic circuits, for example on field-programmable gate arrays (FPGAs). Other solutions include optoelectronic systems, chips fabricated partly in analog technology, and neurochips that combine electronic silicon with biological parts (living neural cells or neural tissue treated as device components).

All such hardware implementations of artificial neural networks can operate very fast (Figure 1.28), but their structural complexity is always very limited and their flexibility of application is unsatisfactory for most users. Therefore, in practice, most users of artificial neural networks prefer software solutions and accept their limitations.

Figure 1.28 indicates that some biological neural systems fall within the range of artificial neural networks. For example, the brain of a shrimp is comparable to an artificial neural network and shows no superiority in complexity or in speed of information processing; the dot representing the shrimp's neural system therefore lies inside the box marking artificial neural network parameters. However, for most biological species, the complexity of the neural system and its speed of data processing far exceed the best values achieved by artificial neural networks. The complexity of the human brain is a billion times greater than that of artificial neural networks.

1.9 Main Advantages of Neural Networks

Neural networks are widely used to solve many practical problems. A Google search for neural network applications yields about 17,500,000 results. Wow! Of course, these results include many worthless items, but the serious articles, books, and presentations describing neural network applications certainly number in the millions. Why are so many researchers, practicing engineers, economists, doctors, and other computer users interested in neural networks? Their widespread use can be attributed mainly to the following advantages.

- Network learning capability allows a user to solve problems without having to find and review methods, build algorithms, or develop programs, even with no prior knowledge of the nature of the problem. The user needs only some examples of similar tasks with good solutions. A collection of successful solutions allows a neural network to learn from them and apply what it has learned to similar problems. This approach to problem solving is both easy and convenient.

- Pattern recognition has no easy algorithmic solution, but a neural network can readily learn to generate solutions in this area. Theoretical analysis of particular types of images (e.g., human faces) has not produced exact algorithms that guarantee proper differentiation of the faces of different persons and reliably recognize the face of a specific person regardless of position, similar appearance, and other factors. A neural network, however, can learn from different images of the same person in different positions and from images of different persons. These images serve as learning examples for the identification process.

- Another typical area where a learning neural network can outperform a specific algorithm is prediction. The world today is abuzz with forecasts of weather, currency exchange rates, stock prices, results of medical treatments, and other quantities. In all prediction problems, current status and historical data are equally important. For most nontrivial problems, it is nearly impossible to propose a theoretical model that can be used for algorithmic forecasting. Neural networks are effective for these problems too, with historical facts serving as learning examples. Many successful neural network forecasting models can be developed by using relevant history and considering only stationary processes.

- Another advantage is that neural networks can be implemented as specialized hardware systems. Until recently, neural network applications were based on software solutions, and users had to be involved in every step of problem solving (i.e., forming the neural network, training it, and exploiting it through computer simulation). The situation is slowly changing as hardware solutions for neural networks become available. Numerous electronic and optoelectronic systems are based on neural network structures. Hardware solutions offer a high degree of parallel processing, similar to the biological brain, in which many neurons work simultaneously to handle vision, hearing, and muscle control at the same time.

Neural networks are used mostly for pattern recognition. The first known neural network, the perceptron, was designed by Frank Rosenblatt (Rosenblatt, 1958) for automatic perception (recognition) of different characters. Kusakabe and colleagues (2011) discussed similar applications of neural networks to pattern recognition. Another interesting application is the automated recognition of human actions in surveillance videos (Shuiwang et al., 2013). Automatic classification of satellite images is yet another (Shingha et al., 2012). Optical character recognition is perhaps the oldest and most common application.
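The idea behind the perceptron, learning from labeled examples instead of following a hand-written algorithm, can be illustrated with a minimal Python sketch. It assumes a single threshold unit trained with the classic perceptron learning rule on a toy task (the logical AND of two binary inputs); the function names, learning rate, and epoch limit are illustrative choices, not the programs that accompany this book.

```python
from typing import List, Tuple

# One training example: (input features, desired output 0 or 1).
Example = Tuple[List[float], int]


def predict(weights: List[float], bias: float, x: List[float]) -> int:
    """Threshold unit: output 1 if the weighted sum of inputs plus bias is positive."""
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else 0


def train_perceptron(examples: List[Example], epochs: int = 20,
                     lr: float = 1.0) -> Tuple[List[float], float]:
    """Perceptron learning rule: adjust weights only when an example is misclassified."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in examples:
            delta = target - predict(weights, bias, x)  # -1, 0 or +1
            if delta != 0:
                errors += 1
                # Move the weights toward (or away from) the misclassified input.
                weights = [w + lr * delta * xi for w, xi in zip(weights, x)]
                bias += lr * delta
        if errors == 0:  # every example classified correctly: stop early
            break
    return weights, bias


# Toy task (an assumption for illustration): learn logical AND from four examples.
and_examples: List[Example] = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(and_examples)
for x, target in and_examples:
    print(x, "->", predict(w, b, x), "(expected", target, ")")
```

The rule is guaranteed to converge only when the two classes are linearly separable; on a task such as XOR, this single unit would keep making errors until the epoch limit is reached.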

Automatic noise recognition by means of neural networks is gaining popularity quickly (Haghmaram et al., 2012). The ability of neural networks to solve recognition and classification problems automatically is often used in diagnostic applications. A very good overview of neural network applications can be found in Gardel et al. (2012). In general, the objects recognized by neural networks vary hugely (Nonaka, Tanaka, and Morita, 2012; Deng, 2013); examples include mathematical functions and the identification of nonlinear dynamic systems. Many neural network applications are connected with computer vision and image processing.

A typical example of such research related to medical applications is described by Xie and Bovik (2013). Another example of medical image processing by means of neural networks can be found in Wolfer (2012). Neural networks are also used for many nonmedical types of analysis; for example, Latini et al. (2012) applied neural networks to satellite image processing for geoscience purposes.

As noted above, forecasting is another typical application of neural networks. Samet et al. (2012) used artificial neural networks to predict the reactive power of an electric arc furnace in order to improve compensator performance. Liu et al. (2012) used neural-based forecasting to predict wind power plant generation. Thousands of further examples exist, and many can be found with Internet search engines. The important point is that neural networks have proven to be useful tools in many areas of scientific and professional activity. For that reason, it is worth understanding their structures, operating characteristics, and practical uses.

1.10 Neural Networks as Replacements for Traditional Computers

As the previous section shows, neural networks have many uses and can solve various types of problems. Does that mean we should abandon our “classical” computers and use networks to solve all computational problems? Although networks are “fashionable” and useful, they have several serious limitations that will be discussed in the following chapters, along with network structures, learning methods, and applications to specific tasks. Certain tasks simply cannot be handled by neural networks.

The first category of tasks unsuitable for neural networks involves symbol manipulation. Neural networks find any information processing task involving symbols extremely difficult, so they should not be used for tasks based on symbol processing. In short, it makes no sense to build a text editor or an algebraic expression processor on a neural network.

The next class of problems unsuitable for neural networks involves calculations that require highly precise numerical results. A network always works qualitatively, so its results are always approximate. The precision of such approximations is satisfactory for many applications (e.g., signal processing, picture analysis, speech recognition, prognosis, quotation prediction, and robot control). However, neural networks are completely unsuitable for bank account services or precise engineering calculations.

Another situation in which a neural network will not produce effective results is a task that requires many stages of reasoning (e.g., deciding whether each statement in a sequence of logical statements is true or false). A network solves a specific type of problem and generates an immediate result. If, however, a problem requires multistep argumentation and documentation of the results, an artificial neural network is useless. Attempts to use networks for problems beyond their capabilities lead to frustrating failures.

1.11 Working with Neural Networks

Readers should not jump to conclusions about the limitations of neural networks based on the statements above. A neural network alone cannot perform symbolic calculations, but it can support systems that operate on symbols by handling functions that symbol-based systems cannot handle alone. Good examples include the networks designed by Teuvo Kohonen and the classic NETtalk system, Terrence Sejnowski's invention that converts orthographic text into a sequence of phonemic symbols used to control speech synthesizers.

While neural networks are not useful for the terminals that process customer transactions in banks, they are very effective for assessing debtor creditworthiness and establishing conditions for negotiating contracts. In short, neural networks are useful for many applications in many fields worldwide; their possibilities are simply not as universal as those of the classical computer.

Enthusiasts can easily list results produced by neural networks that turned out to be far superior to those of classical computers. Proponents of classical computing, on the other hand, can easily show cases in which neural networks produced incorrect results. Both groups are correct, in a way. In the following chapters, we shall try to present both sides of this argument by describing how neural networks are taught, various types of learning methods, simple programs, and ample illustrations, which we hope will enable readers to make their own judgments.

In this chapter, we explained the genesis and benefits of neural networks in an effort to foster reader interest in such devices. In the following chapters, we treat neural networks as tools for achieving practical goals (e.g., obtaining favorable stock market projections). We will also show how interesting the networks are, because their designs are based on the structure and functioning of the living brain.

We will also describe significant successes achieved by engineers, economists, geologists, and medical practitioners who use artificial neural networks to improve efficiency in their respective fields, and we will discuss the studies of the human brain that led to the invention of artificial neural networks as self-learning systems. In each chapter, the most important theoretical information is clearly marked with descriptive headings, so readers can choose to read or skip the important but less colorful mathematical techniques.

By accessing our programs on the Internet (http://home.agh.edu.pl/~tad//index.php?page=programy&lang=en), you will be both a witness to and an active participant in unique experiments that will provide even more understanding of neural networks and of the functioning of your own brain, a mysterious structure abounding in various capabilities that Shakespeare (1603) described as “a fragile house of soul.”

Do you know why it is worthwhile to study neural networks? If the answer is yes, we invite you to read the remaining chapters! This book is current and comprehensive. We did our best to include the most important and up-to-date information about neural networks. We did not, however, cover the small but onerous details in which every branch of computing technology abounds and which are usually expressed as mathematical formulae. We decided to take seriously the principle Stephen Hawking stated in A Brief History of Time (1988, 1996): he wrote that he had been told each equation included in the book would halve its sales.

It is important to us that this book appeal to a large audience, including readers who will find neural networks amazing and useful devices. Therefore, we are not going to include even a single equation in the text of this book. Even one reader discouraged by equations would be a heavy loss.

References

Callaghan, P.T., Eccles, C.D., and Xia, Y. 1988. NMR microscopy of dynamic displacements: k-space and q-space imaging. Journal of Physics E: Scientific Instruments, vol. 21, no. 8, pp. 820–822.

Friston, K. 2009. Causal Modeling and Brain Connectivity in Functional Magnetic Resonance Imaging. PLOS Biology, vol. 7, no. 2. DOI: 10.1371/journal.pbio.100003.

Hawking, S. 1988, 1996. A Brief History of Time. New York: Bantam.

Kusakabe, K., Kimura, Y., and Odaka, K. 2011. Character recognition using feature modification with neural networks learning feature displacement in two opposite directions. IEICE Transactions on Information and Systems, vol. J94-D, no. 6, pp. 989–997.

Latini, D., Del Frate, F., Palazzo, F. et al. 2012. Coastline extraction from SAR COSMO-SkyMed data using a new neural network algorithm. In Proceedings of IEEE International Geoscience and Remote Sensing Symposium, pp. 5975–5977.

Liu, Z., Gao, W., Wan, Y.H. et al. 2012. Wind power plant prediction by using neural networks. In Proceedings of IEEE Energy Conversion Congress and Exposition, pp. 3154–3160.

Rosenblatt, F. 1958. The Perceptron: A probabilistic model for information storage and organization in the brain. Psychological Review, American Psychological Association, vol. 65, no. 6, pp. 386–408.

Samet, H., Farhadi, M.R., and Mofrad, M.R.B. 2012. Employing artificial neural networks for prediction of electrical arc furnace reactive power to improve compensator performance. In Proceedings of IEEE International Energy Conference, pp. 249–253.

Shakespeare, W. 1603. Hamlet, Prince of Denmark. Act I, Scene 3. http://www.shakespeare-online.com/plays/hamlet_1_3.html

Van Essen, D.C., Smith, S.M., Barch, D.M., Behrens, T.E.J., Yacoub, E., and Ugurbil, K. 2013. The WU-Minn Human Connectome Project: An Overview. NeuroImage, vol. 80, pp. 62–79. http://www.humanconnectomeproject.org/ accessed on April 2, 2014.

Wolfer, J. 2012. Pulse-coupled neural networks and image morphology for mammogram preprocessing. In Proceedings of Third International Conference on Innovations in Bio-Inspired Computing and Applications. IEEE Computer Society, pp. 286–290.

Xie, F. and Bovik, A.C. 2013. Automatic segmentation of dermoscopy images using self-generating neural networks seeded by genetic algorithm. Pattern Recognition, vol. 46, pp. 1012–1019.