IBM z/OS system management functions

IBM System z has always had a reputation for delivering the highest levels of system availability. The operating system components have integrated failure detection to allow recovery actions to occur without operator intervention. But even with that capability integrated into the operating system, failures can still occur.

As part of the effort to deliver even better availability, System z has created a set of system management functions that not only detect failures, but avoid failures. When failures do occur, these new functions help you more easily diagnose the cause of the problem and advise solutions to help you either to avoid an outage or reduce your mean time to recovery. Failures will still occur, but the advancements in failure avoidance and early failure detection mean that more failures can be mitigated before being visible to users, and before they impact your business.

As explained in 1.2.4, “Analytics can help address these issues” on page 6, you cannot achieve high availability by looking at only one view of your system or by using only one tool. Therefore, System z has multiple functions and products that perform their processing at different layers of the software stack, using different metrics for their early detection and different methodologies to detect abnormal behavior.

In this chapter, we highlight the various component functions and products available on System z that are designed to help you achieve your availability goals. We also provide sample scenarios in which each of them can be used.

The following topics are discussed:

•Overview of System z system management functions

•IBM z/OS Management Facility

•IBM Health Checker for z/OS

•Runtime Diagnostics

•Predictive Failure Analysis

•IBM zAware

•Message analysis with the various functions and products

•Additional tips

•Sample scenarios

2.1 Overview of System z system management functions

There are three general categories of software-detected system failures: masked failures, hard failures, and soft failures, as explained here:

Masked failure A masked failure is a failure that is detected and corrected by the software.

Hard failure A hard failure occurs when the software fails completely, quickly, and cleanly. For example, a hard failure occurs when the operating system abnormally terminates an address space.

Soft failure Sometimes a software component behaves in an unexpected or unusual manner. This abnormal behavior can be combined with events that usually do not generate failures, but produce secondary effects that might eventually result in a system failure. These types of failures are known as soft failures. You might have also heard these types of failures referred to as “sick, but not dead” incidents, meaning that the system appears to be running, but is not totally available.

Soft failures are often manifested as stalled or hung processes, resource contention, storage growth, or repetitive errors.

Clients have told us that soft failures account for a small percentage of the problems when compared to masked failures and hard failures, but they cause the largest business impact.

Soft failures are difficult to diagnose because the failure often does not occur in the address space causing the problem. In fact, the failure can often occur in an address space that might not even be associated with the issue.

During a soft failure, the problem often escalates from a minor problem in one address space or component to the point that the program eventually stops working and might even cause other components to fail. This sympathy sickness has been observed when either hard failures or abnormal behavior generate a system failure that could not be easily isolated to a failing component or subcomponent.

Because soft failures are difficult to detect, are quite unique, can be triggered anywhere in either software or hardware, and occur infrequently, their isolation is quite difficult. Therefore, to achieve high availability and reduce the rate of system failures, system management functions have been enhanced over the years and new functions have been developed. Additional tools have been developed to help you quickly discover the source of problems when they do arise.

Some of the system management functions are embedded directly in z/OS. These are sometimes referred to as in-band processing. Some of these functions help you avoid failures. Others help you detect, diagnose, and recover from the failures.

With the introduction of IBM zAware, we now have a tool that is independent of the operating system. Being independent of the operating system is sometimes referred to as out-of-band processing. Having an independent systems management function allows diagnosis of failures to occur even when the operating system is nonfunctional. It also allows compute-intensive analysis to be offloaded from the operating system’s LPAR.

System message logs have always been a critical resource for diagnosing operating system, middleware, and application problems. However, the volume of messages sent to the z/OS logs makes it difficult for operators and system programmers to quickly identify the messages required to pinpoint the problem.

Systems management functions including Runtime Diagnostics, Predictive Failure Analysis (PFA), and IBM zAware all provide functions that use messages and message logs to aid in detecting and diagnosing a problem. The differences in how these functions and products use the messages is described in 2.7, “Message analysis with the various functions and products” on page 82.

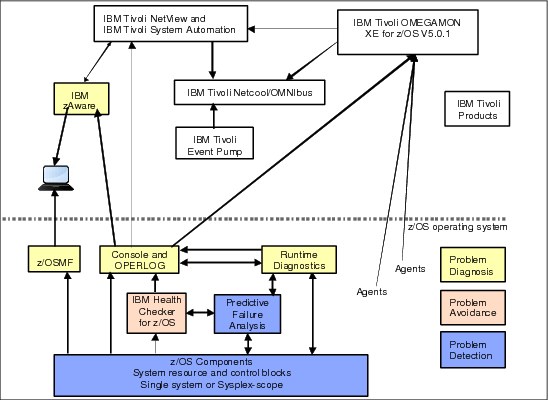

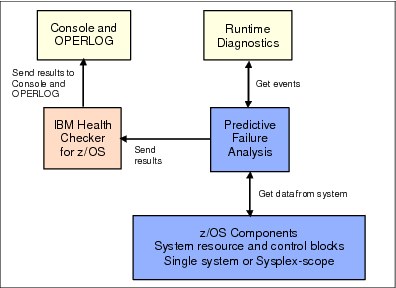

Figure 2-1 illustrates the system management functions included in z/OS. Each of the functions is described later in this book.

Figure 2-1 z/OS system management component relationship

Notice that each of the system management functions has its own specialty and some of them could be put into more than one specialty category:

•Problem detection

– z/OS operating system components have always had problem detection embedded in their logic.

As they detect abnormal situations such as software abends, their recovery code is invoked to handle the failure without operator intervention. Some z/OS components also provide soft failure detection, which usually results in an alert of some kind. See 2.1.1, “z/OS component functions” on page 27 for more information about the system management portion of z/OS operating system components.

– Predictive Failure Analysis (PFA) detects potential soft failures and alerts the operator before the failure escalates into a system problem.

PFA’s output can also be used to help diagnose the failure. See 2.5, “Predictive Failure Analysis” on page 42 for more information about PFA.

•Problem avoidance

– IBM Health Checker for z/OS is designed to identify configuration errors before they result in soft failures.

Health checks can be written by IBM component owners, ISVs, and by you. Health checks can set thresholds for system resources and detect migration actions that have not been completed so that your systems avoid these types of problems. See 2.3, “IBM Health Checker for z/OS” on page 30 for more information about IBM Health Checker for z/OS.

•Problem diagnosis

– z/OS OPERLOG and the z/OS console contain messages issued by z/OS components, middleware, and applications.

These messages are useful for diagnosing problems and are a data source for IBM and ISV problem determination and system automation.

– Runtime Diagnostics is a useful tool that can provide quick point-in-time diagnosis of problems.

This component is designed to be used for quick analysis of specific z/OS system issues to give the system operator an indication of where problem investigation should start. You can point Runtime Diagnostics to a system other than the system on which the command is being issued to detect problems on another system in your sysplex. See 2.4, “Runtime Diagnostics” on page 32 for more information about Runtime Diagnostics.

– z/OS Management Facility (z/OSMF) runs on the z/OS system and manages z/OS from z/OS itself.

z/OSMF is a Web 2.0-based application on z/OS, with direct access to z/OS data and information. It serves multiple roles in delivering simplified tasks to improve system programmer and operator productivity.

This book focuses on the role of z/OSMF as it relates to problem determination due to soft failures which is simplified with the z/OS incident log task. See 2.2, “IBM z/OS Management Facility” on page 28 for more information.

So, where does IBM zAware fit in the picture in Figure 2-1 on page 24? It is independent and resides outside of z/OS. It is designed to complement the functions in that figure by using powerful analytics algorithms to detect and help diagnose message anomalies without the need to access z/OS. This design provides the following benefits:

•It uses fewer system resources. This can sometimes be important when a system problem is occurring.

•It is operational even if all systems in your sysplex are having a problem.

•It allows you to easily see which system or systems are experiencing anomalous message behavior.

Figure 2-2 provides a simplified view of the various z/OS system management functions and products, identifies their locations in relation to z/OS, and gives a rudimentary view of their interfaces.

Figure 2-2 Systems management functions and products

Any component or product that uses OPERLOG or other system logs can participate in the systems management realm. For example, both IBM Health Checker for z/OS (including PFA output) and Runtime Diagnostics send their results to the z/OS system log.

Other examples include:

•The IBM Tivoli NetView CANZLOG function consolidates messages from the z/OS syslog and the NetView netlog. NetView can automate messages from either of these sources.

Additionally, as shown in Figure 2-2, NetView is able to use the IBM zAware API to retrieve information from the IBM zAware LPAR, allowing you to consolidate information from IBM zAware into the NetView operator interface.

•IBM Tivoli System Automation can be used to automate messages, and policies can be established to control corrective actions.

•IBM OMEGAMON® XE for z/OS 5.1.0 not only uses the messages, but also shows all health checks produced by IBM Health Checker for z/OS (including those owned by PFA).

•IBM zAware uses analytics to detect anomalous behavior using the OPERLOG.

•Performance issues and system problems can be detected by OMEGAMON.

•Netcool/OMNIbus provides centralized monitoring of health check alerts, performance, network activity, and so on.

•Tivoli Event Pump parses the messages, interprets the resource information within the messages, and converts the message to an event that can be read by other products.

•You can get alerts if PFA detects an abnormal condition and forward those events to other Tivoli event management products.

2.1.1 z/OS component functions

As shown in Figure 1-1 on page 3, detecting soft failures as close to the source as possible uses the minimum amount of system resources and ensures that the failure is responded to in the minimal time. Whenever possible, z/OS components try to detect and address soft failures themselves.

The following list provides a few examples of how z/OS components detect problems on a single system:

•The GRS component added enhanced contention analysis to identify enqueue blockers and waiters a number of releases ago. More recently, GRS implemented similar support for latches. Both enqueue contention detection and latch contention detection use the D GRS,ANALYZE command. The latch identity string is exploited by z/OS UNIX System Services, the Logger component, and IBM RACF® for their latches. In addition, Runtime Diagnostics takes advantage of the functions provided by GRS in its analysis of enqueue contention and GRS latch contention.

•The Missing Interrupt Handler (MIH) component intercepts incomplete I/O operations to prevent an application or system outage due to a device, control unit, or hardware (cabling) error. After the scope of the problem is understood, hardware and software recovery mechanisms are invoked and diagnostic data is captured.

•The system invokes the I/O Timing Facility to identify slow I/O response times by monitoring I/O requests that exceed I/O timing limits for devices.

•IBM has made improvements in channel recovery such that for frequently occurring path errors, the path is taken offline rather than having the hardware or operating system repeatedly try to recover the path.

•z/OS can detect contention in the Catalog Address Space, which identifies catalog tasks that appear to be stuck while waiting on an event. When this occurs, a symptom record is created and the task is terminated if the system deems it is safe to do so.

•The JES2 Monitor assists in determining why JES2 is not responding to requests. It monitors conditions that can seriously impact JES2 performance.

The following list provides a few examples of how z/OS components detect problems in a sysplex:

•XCF stalled member support detects whether a system that appears to be healthy is actually performing useful work. There might be critical functions that are non-operational that are making the system unusable and induce sympathy sickness elsewhere in the sysplex. When a problem is detected, action should be taken to restore the system to normal operation or remove it from the sysplex to avoid sympathy sickness.

•Sysplex Failure Management (SFM) detects and can automatically address soft failures that can cause sympathy sickness conditions when a system or sysplex application is unresponsive and might be holding resources needed by other systems in the sysplex.

•With critical member support, XCF terminates a critical member if it is “impaired” long enough. A critical member is a member of an XCF group that identifies itself as “critical” when joining the group. For example, GRS declares itself as a critical member when it joins its XCF group. If GRS cannot perform work for as long as the failure detection interval, it is marked as impaired. GRS indicates that when this occurs, the system on which it is running should be removed from the sysplex to avoid sympathy sickness.

•System Status Detection (SSD) uses z/OS BCPii to let XCF query the state of other systems through authorized interfaces through the Support Element and HMC network. If XCF detects that the system in question is in a state where it can no longer operate or recover, the system can be immediately partitioned out of the sysplex without having to wait for the failure detection interval to expire.

2.2 IBM z/OS Management Facility

IBM z/OS Management Facility (z/OSMF) simplifies, optimizes, and modernizes the z/OS system programmer and operator experience. z/OSMF delivers solutions using a task-oriented, web browser-based user interface to improve productivity by making the tasks easier to understand and use. z/OSMF makes the day-to-day operations and administration of your system easier for both new and experienced system programmers and operators.

2.2.1 z/OSMF tasks

The primary objective of z/OSMF is to make new staff productive as quickly as possible. This is accomplished by automating tasks and reducing the learning curve through a modern, simplified, and intuitive task-based, browser-based interface.

z/OSMF provides assistance in a number of areas:

•A configuration category with the Configuration Assistant

•The Links category that lets you add web links to the z/OSMF GUI

•A Performance category containing sysplex status, monitoring desktops, and workload management tasks

•The Problem Determination category that provides the incident log function

•The z/OSMF Administration category to let you administer z/OSMF itself

Because this book is focused on tools to help you avoid, detect, and diagnose soft failures, we only discuss the incident log task here.

Details about z/OSMF and its tasks can be found in other publications such as z/OSMF Configuration Guide, SA38-0652 and the IBM Redbooks document z/OS Management Facility, SG24-7851.

2.2.2 z/OSMF Incident Log

With its focus on simplification, z/OSMF is a natural addition to the set of z/OS functions focused on high availability and problem determination. Even with all the system management functions designed to avoid and detect failures, what if a problem still occurs? You need to diagnose and resolve the problem as quickly as possible to reduce your mean time to recovery and hopefully ensure that the problem does not occur again. The z/OSMF Incident Log task is designed to help you achieve higher availability by making problem determination diagnostic data management easier and quicker.

The reality is that when a problem occurs, it can be complicated and time-consuming to collect the right data and documentation to perform your problem analysis. And after you have the data for your problem analysis, it can be difficult to manage, particularly for less experienced staff.

The z/OSMF Incident Log task was created to alleviate these common troubleshooting challenges. It improves the first failure data capture for human-detected and system-detected problems that result in SVC dumps and creates diagnostic data snapshots for log data. It also provides a simple interface that allows you to perform these tasks:

•FTP this data to IBM or other software providers

•Display summary and detail information

•Drive incident management actions

•Manage the data related to the incident

For example, an incident might contain snapshots with 30 minutes of OPERLOG data (or SYSLOG data, if OPERLOG is not activated), one hour of LOGREC detail, and 24 hours of LOGREC summary. These materials are commonly required for z/OS-related problems reported to IBM service.

You can review all the incidents for your sysplex and drill down to see the diagnostic data associated with each incident. These details include the key system problem symptoms and the system symptom string.

If needed, you can then easily FTP the documents to IBM or an ISV or elsewhere for further debugging without having to remember where the data was archived. It also simplifies the steps involved to take another dump for a previously-recognized problem.

The z/OSMF Incident Log also integrates the IBM z/OS Problem Documentation Upload Utility (PDUU), which encrypts all of the files associated with an incident and transmits them to IBM using parallel sessions to send the material (such as a large system dump) more quickly.

z/OSMF Incident Log is accessed directly from the IBM z/OS Management Facility main panel and is located under “Problem Determination” as shown in Figure 2-3.

Figure 2-3 z/OSMF Incident Log

2.3 IBM Health Checker for z/OS

The IBM Health Checker for z/OS helps identify potential configuration problems before they impact availability or cause system outages. It checks the active z/OS and sysplex settings and definitions and compares them to IBM recommended best practice configuration values or customer-defined overrides. It generates output with detailed messages and reports to inform you of any potential problems and suggested actions to resolve them.

IBM Health Checker for z/OS is a preventative application that should be set up to run continuously on all systems to detect potential problems and to alert you if such a situation exists. It is also useful when new software releases or maintenance is installed on your system that might result in configuration issues.

It is not intended as a monitoring tool itself, but the output from health checks can be used by your monitoring tools. For example, NetView could raise an alert if a Health Checker exception above a certain level is issued. Also, some Health Check exception messages are contained in the “domain-specific” rules in IBM zAware.

IBM Health Checker for z/OS consists of the following parts:

•Framework

The framework is an interface that manages services like check registration, messaging, scheduling, command processing, logging, and reporting. It is an open architecture that supports check development by IBM products, independent software vendors (ISVs), and customers.

The framework is used by Predictive Failure Analysis as described in 2.5.3, “PFA and IBM Health Checker for z/OS integration” on page 45.

•Checks

Checks are programs or routines that evaluate component, element, or product-specific setting or definition, and look for potential problems on a running system. Checks are independent of the framework. The IBM checks are owned, delivered, and supported by the corresponding element of z/OS.

The following is an example of the types of things that the health checks provided by IBM look for:

•Check that specific system settings conform to best practices and recommendations.

•Check the configuration for single points of failure.

It is especially important that these checks run on a regular basis in case an unnoticed hardware change or failure has inadvertently introduced a single point of failure.

•Check the usage of finite system resources, such as common storage.

•Check that all the system’s availability-enhancing capabilities are being exploited.

•Check that sensitive system resources are protected.

You can, of course, also use vendor-provided or user-written checks to look for things such as:

•Ensuring compliance with installation-defined system and conventions. For example, you can ensure that user IDs are set up consistently based on the company’s requirements.

•Implement and confirm security compliance and audit. For example, ensure that installation-specific resources are protected properly.

•Check for configuration settings that changed since the last IPL to determine whether the change will be lost upon the next IPL. For example, compare the static content in Parmlib members used at IPL to the settings that are currently in effect.

•Detect changes, either those implemented dynamically or introduced by an IPL, that cause the system to diverge from the recognized best practice or your override. For example, you might have determined that the optimal setting for the WIDGET parameter in this system is 13. If a colleague changes that parameter to some other value without informing you or going through the normal change management process, a health check could inform you of the change.

Starting with z/OS V1R10, a new type of health check was introduced to exploit the IBM Health Checker for z/OS framework. Migration health checks help you determine the appropriateness of various migration actions:

•Before you migrate to the new z/OS release, you can use these new checks to assist with your migration planning.

•After you migrate, you can rerun these checks to verify that the migration actions were successfully performed.

These migration checks only report on the applicability of the specific migration actions on your current active system; they do not make any changes to the system.

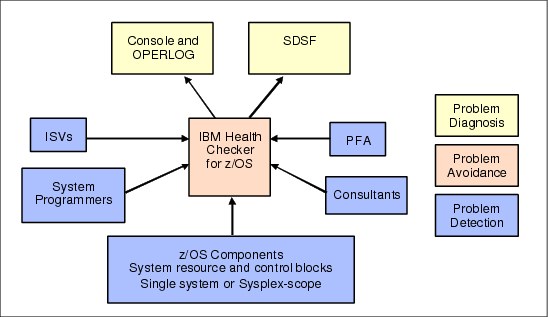

IBM provides a rich set of checks. But in addition, other vendors, customers, and consultants can write and add their own check routines to IBM Health Checker for z/OS as shown in Figure 2-4. By utilizing the ability of these various components and products to detect potential problems, IBM Health Checker for z/OS allows you to avoid future problems by alerting you of their existence.

Figure 2-4 IBM Health Checker for z/OS

Details of IBM Health Checker for z/OS are well documented and not repeated here. For more information, see the IBM Redbooks document Exploiting the IBM Health Checker for z/OS Infrastructure, REDP-4590 and IBM Health Checker for z/OS, SA22-7994.

2.3.1 Getting the most out of IBM Health Checker for z/OS

The following tips explain how to use IBM Health Checker for z/OS to optimize your availability:

•Always start IBM Health Checker for z/OS automatically at IPL.

•Do not ignore the results of the migration checks.

•Investigate exceptions and take appropriate action. For example, do not simply change a configuration value without knowing why it should be changed.

– That said, the first time you start the Health Checker, you might get a large number of exceptions. The goal should be to eventually remove all exceptions by fixing the condition or by tuning the check so that it more accurately reflects the normal behavior of your system.

If the check cannot be changed to avoid the exception and you are sure that skipping this check will not expose system problems, you can deactivate it.

In either case, your objective should be that no exceptions are detected during normal operation.

•After your systems run with no exceptions, you are in an ideal position to know that a health check exception indicates something is abnormal.

•Automate exceptions issued by IBM Health Checker for z/OS:

– The messages produced by each check are documented together with the check in IBM Health Checker for z/OS, SA22-7994. Because a given check might vary in significance from one enterprise to another (or even one system to another), you can use the WTOTYPE and SEVERITY parameters for each health check to control how exceptions are presented.

•In an IBM GDPS® environment, the recommendations provided by GDPS might not be the same as those provided by z/OS. If there is a clash, use the value provided by GDPS value.

2.4 Runtime Diagnostics

What if a problem occurs on your system? Do you fear getting that phone call saying that something is wrong and you have no idea where to start looking? Is there a “bridge call” in your future and do you need an initial check of the system before attending it?

Starting with z/OS 1.12, the Runtime Diagnostics function is available to reduce the time needed to analyze and diagnose a problem and to reduce the experience level needed by the system operator. It provides timely, comprehensive diagnostics at a critical time period and suggests next steps for you to take to resolve the problem quickly.

Runtime Diagnostics was created as a joint design effort between IBM development and service organizations.

•IBM service personnel provided the expertise in knowing what types of symptoms they most often saw in diagnostic data, and the types of data that are most helpful when investigating system failures.

•IBM development personnel provided the expertise in knowing what types of symptom data component experts need when diagnosing problems, and the knowledge for creating this type of service tool within z/OS.

The goal of Runtime Diagnostics is to diagnose a failure in a timely manner and mimic the types of investigation that an experienced system programmer would do to help isolate the problem as quickly as possible.

•It looks for specific evidence of soft failures at the current point in time, with a goal of doing so in 60 seconds or less.

•It also provides recommendations for the next steps that the system programmer should take in the analysis, such as what to investigate further. However, it does not take any corrective action itself, because many of the recommended actions can be disruptive. For example, Runtime Diagnostics might recommend that jobs need to be cancelled.

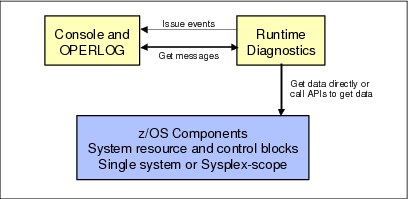

As shown in Figure 2-5, Runtime Diagnostics obtains its data from OPERLOG, system control blocks, and system APIs. It perform no processing until explicitly invoked, and has minimal dependencies on system services when performing its diagnosis.

The Runtime Diagnostics output is called an event. A Runtime Diagnostics event is simply a multiline WTO that describes the event and the recommended actions to take. If you want the output to be directed to a sequential data set, you can do so by editing the HZR procedure that is shipped in SYS1.PROCLIB and modifying the HZROUT DD statement to point at a data set instead of DD DUMMY.

Figure 2-5 Runtime Diagnostics input and output

There are three categories of Runtime Diagnostics symptoms, all of which are shown in Figure 2-6. Runtime Diagnostics identifies problems in the following areas:

•Specific components through OPERLOG messages that are related to the component

•Global resource contention issues with enqueues, GRS-managed latches, and z/OS UNIX file system latches

•Address space execution issues by identifying high CPU usage, local lock suspension issues, and TCB loops.

Figure 2-6 Runtime Diagnostics detection types

2.4.1 Invoking Runtime Diagnostics

Depending on your release, there are differences in how you invoke Runtime Diagnostics:

•In z/OS 1.12, Runtime Diagnostics was a started task that you started when needed using the start hzr,sub=mstr command. When it completed its analysis, the task would end.

•In z/OS 1.13, Runtime Diagnostics became a long-running started task that is started with the same command used in z/OS 1.12. However, now it does not end until you issue a p hzr command. In z/OS 1.13, the Runtime Diagnostics started task will remain started, but will not do anything until you request it to run its diagnostics using the modify command: f hzr,analyze.

The reason that it is a long-running started task in z/OS 1.13 is because it needs to be available for PFA to invoke if PFA thinks a metric might be abnormally low, and to avoid startup and shutdown resource thrashing. The integration between Runtime Diagnostics and PFA is described in 2.5.8, “PFA and Runtime Diagnostics integration” on page 63.

If you want to target a system other than the home system for critical message detection and enqueue contention checking, you can use the SYSNAME parameter. In z/OS 1.12, this parameter is specified on the start command. In z/OS 1.13, this parameter is specified on the modify command.

Even though Runtime Diagnostics was first made available in z/OS 1.12, the targeted system can be at z/OS 1.11 or later and the diagnostics are still performed.

There is also a DEBUG parameter that takes dumps when different types of events are found, or when they are not found and you believe they should be. This parameter is specified on the start command in z/OS 1.12 and on the modify command in z/OS 1.13. It should only be used when the IBM service organization requests this information.

Runtime Diagnostics is not an automated process and is not a system monitor. Its intent is to be a quick, on-demand, diagnostics tool that you use when you believe a soft failure is occurring.

If you choose to automate calls to Runtime Diagnostics, such as invoking it every hour, it is likely that events will be issued even though you are not experiencing soft failures. Some of the events also occur when the system is behaving normally, but they are not a real issue unless you have noticed that the system is having problems. These events are probably not indicative of a system problem at that time and you might waste time investigating these events when no system problem is occurring. This point is especially true for the address space execution and global resource contention categories.

For more information about starting and controlling Runtime Diagnostics, refer to z/OS Problem Management, G325-2564.

2.4.2 Component analysis

Runtime Diagnostics searches OPERLOG for any occurrences of an IBM-defined list of messages that are deemed to be critical to system availability. These messages were identified by z/OS component experts from both IBM service and development as the types that when found, truly indicate a potential system problem. However, due to the volume of messages in the OPERLOG, they are difficult to detect because their occurrence is rare and requires an experienced system programmer to know which ones are critical. If any of these messages are found, an event is created.

Runtime Diagnostics looks back for one hour in the OPERLOG (if that much data is available). For some critical component messages detected by Runtime Diagnostics, additional diagnostics are done. For example, some related messages are grouped into a single event. Runtime Diagnostics also recognizes when a critical situation has been relieved. In some cases, it only shows the last message if a critical message for the same resource name is repeated with some frequency.

Do not expect to always find the full text of the message in the event. Rather, a summarized version that includes the message identifier, up to five lines of message text, and the recommended actions are created in the event. A list of the messages that Runtime Diagnostics detects can be found in z/OS Problem Management, G325-2564.

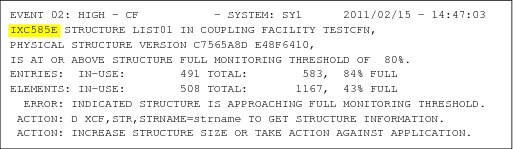

Figure 2-7 shows an example of the Runtime Diagnostics output for a critical message event.

Figure 2-7 Runtime Diagnostics critical message event

Critical message detection is performed for the system for which Runtime Diagnostics was invoked, even when SYSNAME is not the system on which the command was run. That is, you can target any system in your sysplex that is at z/OS 1.11 or above and the critical message detection is performed for it if OPERLOG is available.

To minimize the CPU cost of scanning potentially millions of messages in the OPERLOG, Runtime Diagnostics only searches for messages that were issued on the system for which it was invoked. That is, you cannot use Runtime Diagnostics to search the OPERLOG for all systems in the sysplex with one command. To have critical message detection performed for all systems in the sysplex, the command would have to be issued for each system separately.

|

Tips for critical message detection:

•OPERLOG must be active.

•The list of messages for which OPERLOG is searched is a hardcoded list defined by IBM and cannot be modified.

•OPERLOG is searched back one hour from the current time (if available).

•The Runtime Diagnostics event contains a summary of the message and the recommended actions.

•Some messages are grouped into one event that describes a single problem symptom.

•The detection is performed on the system specified in the SYSNAME parameter. If SYSNAME was not specified, the detection will be performed on the system that the command was issued on.

|

For a list of the messages for which Runtime Diagnostics creates events, refer to z/OS Problem Management, G325-2564.

2.4.3 Global resource contention

Runtime Diagnostics detects three types of global resource contention, as explained here.

Enqueue contention for system address spaces

This type of event is created when a system address space is waiting for an enqueue for more than five seconds.

Runtime Diagnostics has a hardcoded list of system address spaces for which enqueue contention could mean that a system failure is occurring. You cannot modify this list of address spaces.

The checking performed by Runtime Diagnostics to detect enqueue contention is equivalent to invoking the D GRS,AN,WAITER command. The event is created when a waiter is found that has been waiting for more than five seconds. No event is created for blockers that exist on this system because the blocker is listed in the event along with the waiter’s information.

Figure 2-8 shows a sample enqueue contention event. Notice that the event shows both the waiter and the blocker ASID, job name, and the system name, along with other diagnostic information.

Figure 2-8 Runtime Diagnostics enqueue contention event

Enqueue contention checking is performed for the system for which Runtime Diagnostics was invoked. That is, you can target any system in your sysplex that is at z/OS 1.11 or above.

|

Tips for enqueue contention detection:

•The address space must be in the list of system address spaces as defined by Runtime Diagnostics. This list cannot be modified.

•The address space must be waiting for an enqueue for at least five seconds.

•The detection is performed on the system specified in the SYSNAME parameter, or on the home system if SYSNAME was not specified.

|

GRS-managed latch contention

Contention checking for GRS-managed latches is available starting with z/OS 1.13. A GRS latch contention event is created when any address space has been waiting for a latch for more than five minutes.

Latch contention information is obtained directly from GRS by the use of GRS APIs. z/OS UNIX System Services file system latches are omitted from this category because they have their own category (this is described in “z/OS UNIX System Services file system latch contention” on page 38).

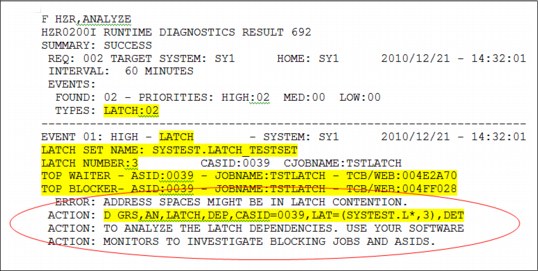

Figure 2-9 shows an example of a GRS latch contention event. The “top waiter” is the address space that has been waiting the longest for the latch set. The recommended action is circled.

Figure 2-9 Runtime Diagnostics GRS latch contention event

|

Tips for GRS latch contention detection:

•This function is available starting with z/OS 1.13.

•The event is created for any address space waiting for a latch for more than five minutes.

•Contention in z/OS UNIX System Services file system latches are handled separately.

•The top waiter is the address space that has been waiting for the latch set the longest.

|

z/OS UNIX System Services file system latch contention

Also new in z/OS 1.13 is z/OS UNIX System Services file system latch contention checking. An OMVS event is created when threads have been waiting for more than five minutes in z/OS UNIX System Services.

These types of latches are separated from the GRS-managed latch contention events because additional processing is performed to determine the specific source of the contention.

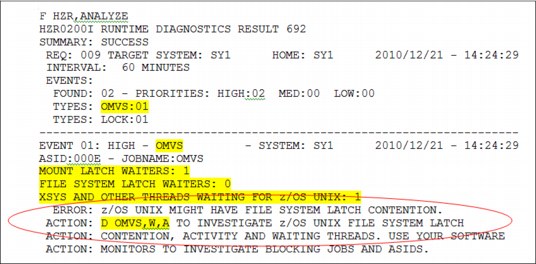

The normal action to take when this type of event occurs is to issue a D OMVS,W,A command to determine the ASID and the job names of the waiters to investigate (this is circled in the example event shown in Figure 2-10).

Figure 2-10 Runtime Diagnostics z/OS UNIX Latch contention event

|

Tips for z/OS UNIX System Services file system latch contention detection:

•This function is available starting with z/OS 1.13.

•The event is created when a thread in any address space is waiting for a UNIX System Services latch for more than five minutes.

•The normal action to take is to issue the D OMVS,W,A command.

|

2.4.4 Address space execution

Runtime Diagnostics provides three types of checking to determine whether execution within any address space is behaving abnormally such that it might cause a soft failure.

CPU analysis

The Runtime Diagnostics CPU analysis is a point-in-time inspection to determine whether any task is using more than 95 percent of the capacity of a single CPU. This level of CPU consumption might indicate that the address space is in a loop.

The analysis is performed by taking two snapshots one second apart to calculate the percentage of CPU used based on the capacity of a single CPU within the LPAR. It is possible for the usage reported to be greater than 100 percent if the address space has multiple TCBs and several of the TCBs are individually using a high percentage of the capacity of a single CPU.

When a high CPU event and a loop event occur for the same job, there is high probability that the task in the job is in a loop in which case the normal corrective action is to cancel the job.

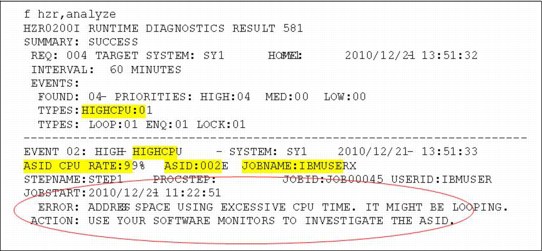

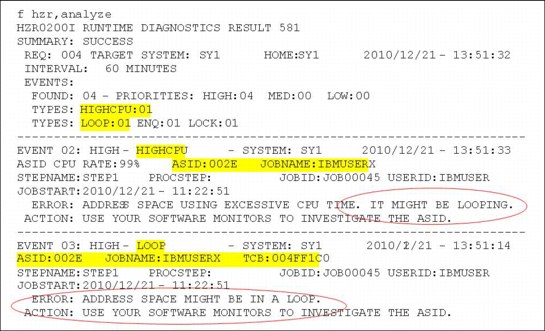

Figure 2-11 shows an example of a high CPU event created by Runtime Diagnostics.

Figure 2-11 Runtime Diagnostics High CPU event

|

Tips for CPU analysis:

•Two snapshots are taken one second apart.

•If any task is using more than 95 percent of a single CPU, a high CPU event is created.

•The event might show usage greater than 100 percent.

•A high CPU event combined with a loop event for the same job usually indicates the job is truly in a loop.

|

Local lock suspension

Local lock suspension analysis is a point-in-time check of local lock suspension for any address space.

Runtime Diagnostics calculates the amount of time an address space is suspended waiting for the local lock. If an address space is suspended more than 50 percent of the time waiting for a local lock, Runtime Diagnostics creates a lock event for that address space.

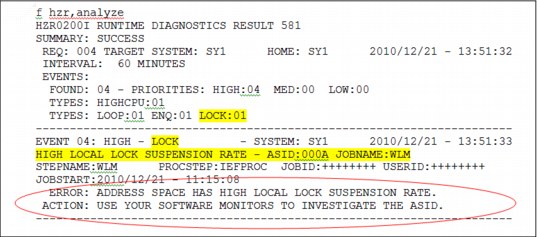

Figure 2-12 shows an example of a local lock suspension event.

Figure 2-12 Runtime Diagnostics local lock suspension event

|

Tip for local lock suspension analysis:

•If an address space is suspended for more than 50 percent of the time, a high local lock suspension rate event is created.

|

TCB loop detection

Runtime Diagnostics looks through all tasks in all address spaces to determine whether a task appears to be looping.

Runtime Diagnostics does this by examining various system information for indicators of consistent repetitive activity that typically appears when a task is in a loop.

When a high CPU event and a loop event occur for the same job, there is a high probability that the task in the job is in a loop, in which case the normal corrective action is to cancel the job.

Figure 2-13 shows an example of a high CPU event and a loop event for the same job.

Figure 2-13 Runtime Diagnostics High CPU event and loop e vent

|

Tips for TCB loop detection:

•The analysis is done for all tasks in all address spaces.

•A high CPU event combined with a loop event for the same job usually indicates that the job is truly in a loop.

|

2.5 Predictive Failure Analysis

Most experienced IT professionals have probably encountered many examples of soft failures. Consider the following example: a common transaction that rarely fails hits a glitch that causes it to fail more frequently. The recovery code in the underlying component is normally successful and applications continue to work even though the problem is occurring. The system programmer has not noticed or has not been notified that something is amiss.

By the time the system programmer is notified, there have been multiple failing transactions and the system has become heavily loaded. Recovery continues to occur, but it slows down the transaction manager to the point that completely unrelated transactions start experiencing time-outs.

Although this example is hypothetical, it shows that if a soft failure like the one described occurs, it needs to have some external symptoms before the system programmer can start to take corrective action.

2.5.1 Predictive Failure Analysis overview

The goal of predictive analysis and early detection is to notify the system programmer as soon as the system becomes aware that a problem is occurring. Predictive Failure Analysis (PFA) detects problems before they are visible externally by checking resources and metrics at different layers of the software stack that can indicate that resource exhaustion, damage to address spaces, or damage to the system could be occurring by comparing existing metric values to trends for the LPAR.

PFA is not intended to find problems that will bring the system down the instant they start. Rather, it can detect potential problems on a human-time scale.

Various of the metrics that PFA uses in its analysis are closer to the hardware, and others are closer to the application. It is easier for PFA to detect an error that is close to the hardware. The closer the metric is to the application, the more difficult it is to determine whether this is really a soft failure or simply an anomaly due to a workload change.

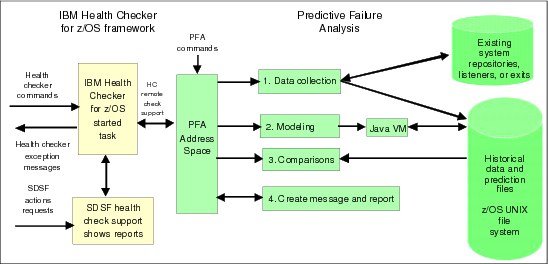

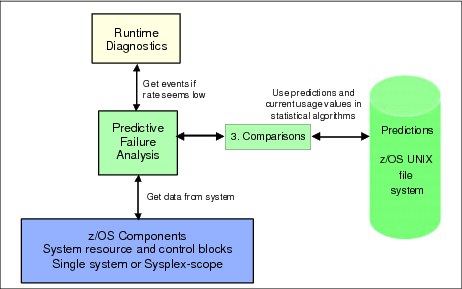

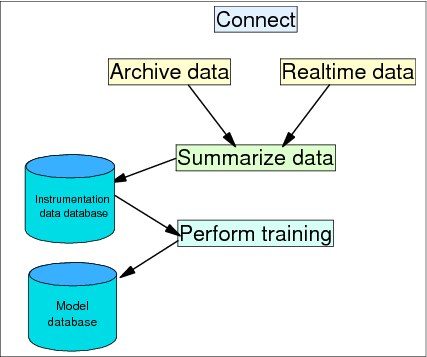

Figure 2-14 on page 43 shows how PFA fits into z/OS:

•PFA obtains its data from z/OS control blocks and published programming interfaces.

•It collaborates with Runtime Diagnostics when it thinks that some metric has an abnormally low value to determine whether this really represents a problem. This is a new capability that was delivered in z/OS 1.13. For more information about this capability, refer to “PFA and Runtime Diagnostics integration” on page 63.

•Finally, it sends the results to IBM Health Checker for z/OS, which issues the message as a WTO if necessary and makes the output viewable in SDSF.

Details about PFA processing and integration are provided in the following sections. All documentation related to PFA (including installation steps, management, and usage) is contained in z/OS Problem Management, G325-2564.

Figure 2-14 PFA input and output

2.5.2 Types of abnormal behavior detected

After the review of many soft failures on clients' systems, it was determined that there are four common reasons why a system has stopped functioning:

•Damaged address space or system

The indication of a damaged system is typically when there are recurring or recursive errors somewhere in the software stack.

•Serialization problems

Serialization problems are most often caused by priority inversion, classic deadlocks, and owner-gone scenarios.

•Physical or software resource exhaustion

Exhaustion of a shared and finite system resource.

•Indeterminate or unexpected states

PFA focuses on the damaged address space or system and the resource exhaustion categories.

PFA uses historical data together with machine learning and mathematical modeling to detect abnormal behavior and the potential causes of this abnormal behavior. It also predicts whether exhaustion of certain finite resources will occur if the current trend continues.

PFA’s objective is to convert soft failures to correctable incidents by alerting you as soon as it detects that a problem might be occurring or predicts that one will occur in the future.

PFA does not use hardcoded thresholds to determine abnormal behavior. Rather, it collects the data from the system and models it to determine what is normal for that system and what kinds of resource usage trends are occurring. It then uses advanced algorithms in conjunction with configurable values for sensitivity to determine whether an abnormal condition exists or whether the current trend indicates future exhaustion.

There are three types of abnormal behavior detection that PFA's algorithms incorporate:

Future prediction This processing performs trend analysis and models the behavior into the future to predict whether the current trend will exhaust a common resource.

Expected value This processing performs trend analysis and models the behavior to determine what value should be expected at the current time to determine whether an abnormal condition is occurring.

Expected rate This processing performs trend analysis and models the behavior to determine whether the current rate, when compared to rates for multiple time periods, indicates that an abnormal condition is occurring. The rate is often calculated by normalizing the value of the metric being analyzed by the CPU being used. By using a normalized rate and comparing against multiple time period predictions, normal workload changes do not appear as abnormal conditions.

For both the expected value and the expected rate types of predictions, PFA clusters the historical data so that trends within the data can be identified. It then determines which trend is currently active and uses the prediction for that trend in its comparisons.

|

Tip: PFA does not take the time of day or the day of the week into account when comparing current values to projections of what the current values should be. However, by using CPU consumption to normalize its rate metrics, it effectively takes into account whether the system is busy or quiet. In practice, this has been found to be effective and negates the need to take time or day into account when creating its model of system behavior.

|

2.5.3 PFA and IBM Health Checker for z/OS integration

It is important to understand the relationship between IBM Health Checker for z/OS and PFA so that you can fully benefit from IBM Health Checker for z/OS and PFA integration.

PFA exploits the remote check feature of the IBM Health Checker for z/OS framework to control the scheduling of the PFA checks and to present the results back to the operator. Therefore, the commands, SDSF health check interface, and reporting mechanism available through IBM Health Checker for z/OS are fully usable by the PFA checks.

Figure 2-15 on page 45 shows the flow of PFA processing to and from IBM Health Checker for z/OS. Each step is described separately in 2.5.4, “PFA processing” on page 46.

Information about the IBM Health Checker for z/OS can be found in the IBM Redpaper™ Exploiting the IBM Health Checker for z/OS Infrastructure, REDP-4590, and in IBM Health Checker for z/OS, SA22-7994.

Figure 2-15 PFA and IBM Health Checker for z/OS integration

If IBM Health Checker for z/OS is not active prior to PFA starting, PFA collects data and creates predictions as usual, but waits for IBM Health Checker for z/OS to start before performing comparisons or issuing results. This is because those functions are dependent on IBM Health Checker for z/OS.

If IBM Health Checker for z/OS is not available when it is started, PFA uses the default configuration values such as COLLECTINT and MODELINT. These values can be overridden by parameters you specify in IBM Health Checker for z/OS, but that depends on IBM Health Checker for z/OS being available. An example of the HZSPRMxx statements that you use to change the COLLECTINT are shown in Example 2-1.

Example 2-1 Sample HZSPRMxx statements to modify a PFA check

/* */

/*-------------------------------------------------------------------*/

/* Sample PFA override */

/*-------------------------------------------------------------------*/

/* */

ADD POLICY UPDATE CHECK(IBMPFA,PFA_COMMON_STORAGE_USAGE)

ACTIVE

PARMS=('COLLECTINT(10)')

DATE(20120827)

REASON('Update collection interval')

You can use the health check commands both to display and modify the PFA checks. Unlike other health checks, the PFA checks allow you to modify their parameters individually and accumulate the changes rather than requiring all parameters to be specified when modifying a check. This feature allows you to change one parameter without needing to remember the settings for all the others.

For example, to change only the STDDEV parameter of the JES spool usage check and set it to 6, issue the following command:

f hzsproc,update,check(ibmpfa,pfa_j*),parm(‘stddev(6)’)

|

Display all parameters and obtain status of PFA checks: IBM Health Checker for z/OS is unaware of the cumulative nature of the PFA checks’ parameters. Therefore, if you display a PFA check using the IBM Health Checker for z/OS modify command to display details, or you look at the parameters in the reports, only the parameters that were last modified are displayed.

To properly display all the parameters and to obtain the status of the PFA checks, use the PFA MODIFY command with the display details option such as shown:

f pfa,display,check(pfa_*),detail

Variations of the MODIFY PFA command and a description of the parameters can be found in z/OS Problem Management, G325-2564.

|

The results of PFA’s comparisons are sent to IBM Health Checker for z/OS, which writes the report. If an exception occurs, a WTO is issued by default. You can configure the type of message issued when an exception occurs by changing the check’s SEVERITY or WTOTYPE in IBM Health Checker for z/OS.

2.5.4 PFA processing

PFA contains check-specific code that collects the data for an individual check; creates a prediction; and compares the current values to an internal value created by PFA using the current predictions, domain knowledge, and customer-configurable parameters that adjust the sensitivity of the comparisons.

Depending on the result of the comparison, PFA uses the remote check support with IBM Health Checker for z/OS to issue an exception or informational message and to create a report in SDSF. Some of these functions are written in Java and therefore are eligible to run on a zAAP if the system is configured with one (see 2.5.9, “Achieving maximum benefit from PFA” on page 65 for more information).

Data collection

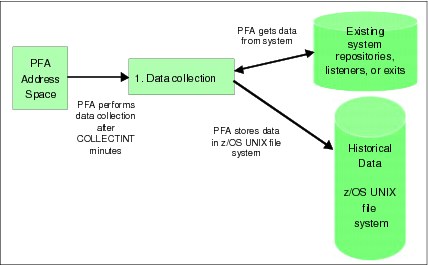

Data is collected for each check by interrogating system control blocks or using externally available interfaces such as APIs, ENF listeners, and dynamic exits. Data collection (shown in Figure 2-16 on page 47) occurs as follows:

1. Data collection occurs asynchronously on an interval that you can configure (although it is not anticipated that changing it will be necessary). For example, the default value for the data collection function for a check might be to collect data every fifteen minutes. This parameter is called COLLECTINT.

2. The data is stored in files in the z/OS UNIX file system. Data that is deemed by PFA to be too old for use in making new predictions is automatically deleted by PFA.

Figure 2-16 PFA data collection processing

Modeling

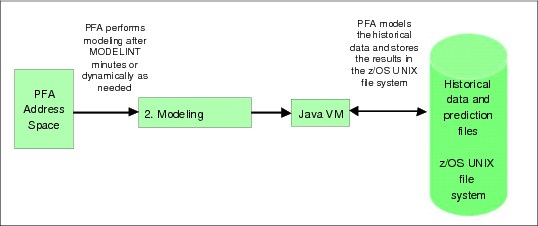

The data collected in the first step is modeled asynchronously to create predictions for the current trends.

Depending on the type of check, the result of the modeling might be a value that is predicted to occur in the future based on the current trends, or it might be a value that is expected at this point in time based on the current trends.

When the prediction is an expected value, PFA clusters the data into appropriate parts based on the historical values to determine workload differences within the data and creates predictions for each cluster. It then chooses which cluster’s prediction to use in the comparisons.

For expected rate comparisons over time, PFA creates predictions for multiple time ranges and then determines which time range predictions should be applied during the comparison. By performing this clustering and creating multiple predictions, PFA can better detect workload changes which otherwise will lead to unwarranted exceptions.

Modeling (shown in Figure 2-17 on page 48) occurs as follows:

•Modeling occurs asynchronously on an interval that you can control (although it is not anticipated that changing it will be necessary). For example, the default value for the modeling interval for all checks (starting in z/OS 1.12) is to run every twelve hours. This is controlled by the MODELINT parameter.

•When PFA starts, the first prediction is created after the check has determined that there is enough data to make a prediction, rather than necessarily waiting for the MODELINT number of minutes.

– For some checks, the first prediction is created seven hours after PFA starts as follows:

If PFA started at IPL, the first hour’s worth of data (when everything is in startup mode) is discarded so that it does not skew the prediction. If PFA started more than an hour after the IPL, data collection can begin immediately. This reduces the wait time for the first prediction from seven hours to six hours.

PFA then performs data collection for six hours (or the MODELINT number of minutes if it is less than six hours) and the first prediction is created. Subsequent predictions in a stable environment occur every twelve hours thereafter.

– For other checks, the first prediction is created thirteen hours after PFA starts.

The checks that first create a prediction thirteen hours after PFA starts are those that need a warm-up period to detect which jobs to track individually.

If PFA started at IPL, the first hour’s worth of data is discarded so that it does not skew the results of the warm-up. If PFA started more than an hour after IPL, data collection for the warm-up can begin immediately, thereby reducing the wait time for the first prediction from thirteen hours to twelve hours.

PFA then collects data for six hours for the warm-up, after which PFA determines the address spaces with the highest rates to track individually.

After the address spaces are properly categorized, PFA collects data for six hours (or the MODELINT number of minutes if it is less than six hours) and the first prediction is created. Subsequent predictions in a stable environment are made every twelve hours thereafter.

•Modeling runs when it is determined that it is time to update the model with new predictions based on the configured value. It can also run dynamically when PFA determines that the model might be too old to make accurate comparisons because the trend is changing.

•PFA requires at least four successful collections before modeling is attempted.

•The results of the modeling are stored in files in the z/OS UNIX file system. PFA automatically deletes files when they are no longer required.

Figure 2-17 PFA modeling processing

|

Important points about PFA modeling:

•Modeling is done in Java and is zAAP eligible. It is strongly advised to offload PFA’s Java processing to a zAAP.

•Even though the MODELINT value is configurable, you cannot force PFA to model at a specific time of day due to the fact that PFA models more frequently when your system is unstable to ensure that the model is current. When the system stabilizes, PFA resumes using the specified MODELINT value.

|

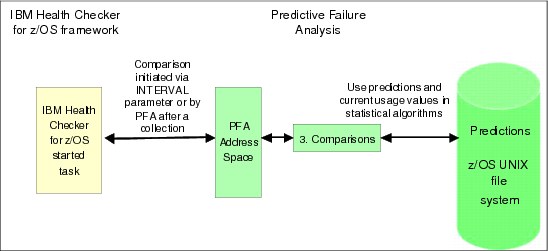

Comparisons

PFA performs the comparisons needed to determine whether an exception is to be issued. It compares what is currently occurring on the system to what was predicted by applying mathematical algorithms based on statistics and z/OS domain knowledge (information known about z/OS from experience) and by incorporating the user-defined parameters allowed for the check1. These algorithms determine whether the current values are abnormal, becoming abnormal, or everything is working fine.

If PFA determines the trend is abnormal or becoming abnormal, the comparison step might cause the check to create a new prediction before the next scheduled modeling interval.

Figure 2-18 PFA comparison processing

For some checks, comparisons are initiated by IBM Health Checker for z/OS when the number of minutes specified in the INTERVAL parameter for the check is reached. For other checks, the comparisons are performed at the end of every collection rather than using the INTERVAL parameter in IBM Health Checker for z/OS.

|

Important points about PFA comparisons:

•If the value of the INTERVAL parameter in IBM Health Checker for z/OS is set to ONETIME for a PFA check, it is a check that runs after every collection. Do not change this setting. If you do, the comparison uses the data collected at the last collection rather than retrieving new current usage data.

•Even though IBM Health Checker for z/OS allows you to run a health check on demand by using IBM Health Checker for z/OS interfaces, it is best to allow PFA to run the checks when PFA determines it is time for the comparisons to be performed.

When a check is run manually that has the INTERVAL parameter set to ONETIME, message HZS0400I is issued with message AIR023I embedded. You can display the current INTERVAL value by using the SDSF CK command and scrolling to the right to the column titled “INTERVAL.”

|

For each check, configuration parameters are available that affect the results of the check by changing the sensitivity of the comparisons. For further details about how to tune PFA comparison algorithms, see 2.5.9, “Achieving maximum benefit from PFA” on page 65.

Send results and alert the operator

For each comparison (regardless of whether the comparison resulted in an exception, or everything is working normally), PFA creates a report with the current data and predicted data. The report is only accessible by using the SDSF CK function and selecting the PFA check you are interested in.

1. Details specific to the exception are provided in the report to aid in your investigation. For example, if PFA issued the exception for comparisons done for specific address spaces, only those address spaces are listed on the report.

2. IBM Health Checker for z/OS issues message HZS000na with the PFA message (AIRHnnnE) embedded in it based on the SEVERITY and WTOTYPE settings of the check. This message goes to the system log and therefore is visible to your automation software.

3. Future WTOs for the same exception are suppressed until new data is available.

4. A directory is created with the data needed for IBM service to investigate the exception in cases where you believe the exception was unwarranted. Some of this information might be useful in your investigation as well.

The data is written to a subdirectory of the PFA check’s directory and is named EXC_timestamp, where the time stamp contains the date and time the exception occurred. A maximum of 30 directories per check are kept. When more are required, the oldest directory is deleted.

5. Modeling might occur more frequently until the data stabilizes.

Figure 2-19 PFA sends results to IBM Health Checker for z/OS

2.5.5 PFA reports

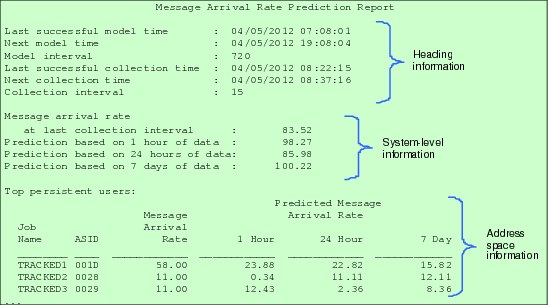

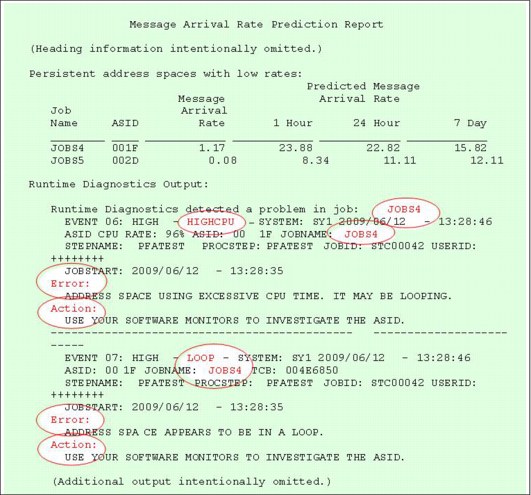

Figure 2-20 shows an example of a PFA report for the Message Arrival Rate check. When applicable, PFA reports have the following three parts:

•Heading information

This information includes the status and configuration of the collections and the models.

•System-level information

This information includes the current values and predictions for the total system.

•Address space information

This information includes details for address spaces that PFA is tracking, address spaces for which the exception was issued, or address spaces that might be the cause of the problem.

The report is accompanied by IBM Health Checker for z/OS information and the message that was issued.

Figure 2-20 PFA Message Arrival Rate Prediction Report

2.5.6 PFA checks

This section summarizes the PFA health checks and provides details about their execution. Other information about PFA checks such as example reports, file descriptions, best practices, and so on can be found in z/OS Problem Management, G325-2564.

With z/OS 1.13, there are six PFA health checks. One check detects resource exhaustion. The other five checks detect a damaged address space or system. Three of those checks can also detect a hung address space or system. A summary of the checks, what each detects, the type of analysis each uses, and the release each is available is shown in Table 2-1 on page 52.

Table 2-1 PFA checks

|

Resource or metric

|

Causes detected

|

Type of analysis

|

Release

|

|

Common storage usage

|

Detects resource exhaustion in common storage by looking for spikes, leaks, and creeps.

|

Future prediction

|

1.10

SPE

|

|

LOGREC record arrival rate

|

Detects a damaged address space or system.

|

Expected rate

|

1.10

SPE

|

|

Console message arrival rate

|

Detects a damaged or hung address space or system based on an abnormal rate in the WTOs and WTORs issued, normalized by the amount of CPU being used.

|

Expected rate

|

1.11

|

|

SMF record arrival rate

|

Detects a damaged or hung address space or system based on an abnormal rate of SMF record arrivals, normalized by the amount of CPU being used.

|

Expected rate

|

1.12

|

|

JES spool usage

|

Detects a damaged persistent address space based on an abnormal increase in the number of track groups used.

|

Expected value

|

1.13

|

|

Enqueue request rate

|

Detects a damaged or hung address space or system based on an abnormal rate of enqueue requests, normalized by the amount of CPU being used.

|

Expected rate

|

1.13

|

|

Apply the PTF for APAR OA40065: If you are familiar with PFA, you might have noticed that the check for frames and slots usage (PFA_FRAMES_AND_SLOTS_USAGE) was omitted from Table 2-1. This check has been permanently removed from PFA with APAR OA40065 because it caused unwarranted exceptions that could not be avoided with the available mechanisms.

It is advisable to apply the PTF for APAR OA40065 as soon as possible. To remove the check from PFA prior to applying the PTF, use the following IBM Health Checker for z/OS command:

f hzsproc,delete,check(ibmpfa,pfa_f*)

|

PFA is not an address space monitor. Rather, PFA attempts to detect when abnormal activity on the system could lead to a system outage and alert the operator before the system crashes or hangs.

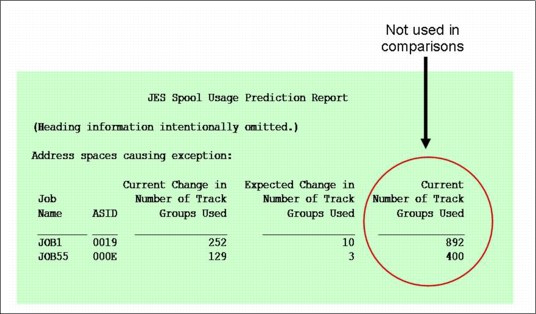

Notice in Table 2-1 that the only check looking for any type of resource exhaustion and using a future prediction is the one that checks for common storage exhaustion. Sometimes, clients are puzzled by the fact that the JES Spool Usage check does not detect exhaustion of track groups.

So, it is important to understand that the JES Spool Usage check is not looking at spool usage from the perspective of warning you when the spool starts to fill (JES2 already has messages that provide that information). Instead, it is looking for damaged persistent address spaces by detecting that an address space is using an abnormal number of track groups compared to what it normally uses. An address space that is increasing the number of track groups it uses at a rate significantly higher than it normally uses might be creating a large job log or might be in a loop, even if it is not exhausting the number of track groups available.

Common storage usage check

The PFA_COMMON_STORAGE_USAGE check is designed to detect future common storage exhaustion.

This check performs trend analysis to determine whether the current usage of common storage will exhaust common storage in the future. It does not detect when the common storage usage reaches a certain threshold, such as the percentage used compared to its available capacity.

For example, you might receive an exception for storage exhaustion even though only 53 percent of the available storage is currently being used. This is because PFA has determined that you will exhaust the storage in the future, based on your current trend. This early detection allows you to take action before your system starts behaving abnormally.

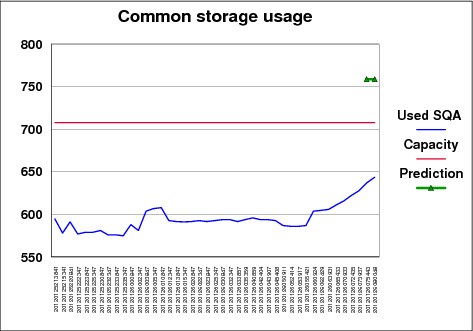

The example in Figure 2-21 shows an instance of how the current usage trend of SQA was fairly stable and was running at a fairly high percentage of used SQA compared to the capacity. However, the usage then started to increase such that if the new trend continued, SQA exhaustion would have occurred. The system overflows SQA into CSA eventually, but it was not yet doing so and the trend could have clearly indicated a problem on the system.

Figure 2-21 PFA Common Storage Usage Check example

In this example, the following sequence occurred:

1. The SQA capacity was 708 KB.

2. For about eight hours, the SQA usage hovered around 600 KB, which is about 85 percent of capacity.

3. Then, for about two hours, the trend started to gradually increase.

4. A new prediction was created after the last collection that detected, based on the current trend, that SQA usage would increase to 759 KB in the next six hours, which was well beyond the capacity.

5. Five minutes later, the current usage was continuing its upward trend and was then at 644 KB. At that point, the exception was issued.

Therefore, when usage was stable, even though it was rather high, the predictive trending indicated no problem would occur. However, when the usage started to steadily increase, the predictive trending indicated it would exceed the available capacity. The continued upward trend caused an alert to be issued to the operator.

PFA also applies z/OS domain knowledge in its comparison algorithms. That is, it knows at what usage level most systems tend to have soft failures for each storage location, and knows to issue exceptions for some locations earlier than others.

The common storage usage check also understands storage expansion from one location to another. If storage expands from SQA into CSA, it is included in the CSA usage, and predictions and comparisons for SQA are not performed. Similarly, when storage expands from ESQA into ECSA, it is included in the ECSA usage and predictions. Comparisons for ESQA are never performed (starting with APAR OA38279) because expansion into ECSA always occurs and such expansion does not cause system problems. This category is still tracked and modeled separately for your reference.

This check cannot detect exhaustion caused by fragmentation or rapid growth such as exhaustion that occurs within a collection interval or on a machine-time scale.

Also, this check does not monitor individual address spaces so it does not detect a storage leak in an individual address space unless that leak can result in storage exhaustion for the entire system.

When an exception occurs, it is not typically the address space that is using the most common storage that is the cause of the problem. Rather, it is the address space whose usage has increased the most recently.

Therefore, the exception report lists the top address spaces whose usage has increased the most in the last hour along with their current usage, and a prediction based on their trends (although comparisons are not performed using these values). If *SYSTEM* is listed, no attempt is made to determine the current usage due to performance considerations and UNAVAILABLE is displayed.

|

PFA_COMMON_STORAGE_USAGE check:

•This check requires that the following DIAGxx parmlib member options are set: VSM TRACK CSA(ON) SQA(ON)

•When an exception occurs, refer to the best practices defined for this check in z/OS Problem Management, G325-2564.

|

LOGREC arrival rate

The PFA_LOGREC_ARRIVAL_RATE check is designed to detect a potentially damaged address space or system based on the LOGREC arrival rate being too high. The rate produced by this check is the number of LOGREC record arrivals within the collection interval grouped by key. The key groupings are LOGREC records for key 0 by itself; keys 1 through 7 as a group; and keys 8 through 15 as a group.

This check models the expected number of LOGREC records by key for four time ranges: 1 hour, 24 hours, 7 days, and 30 days. This check does not use the CPU consumption to normalize the arrival rate. The reason for this is that it assumes that a completely healthy system will not create any LOGREC records, no matter how busy it is. So, any number of LOGREC records greater than zero is considered to be “bad”.

The check models the number of LOGREC records that are normally created by this system over each hour, day, week, and 30 days. This information is then used to detect changes in the record creation rate because this check assumes that an abnormal number of LOGREC records being created is highly likely to indicate a damaged address space.

Any one of the key groupings within any one of the time ranges can produce an exception. When a time range does not have enough data available for the entire time period, it is not included on the PFA report.

PFA is made aware every time a new LOGREC record is created, and it keeps a running count of the number of records created over the last x minutes. When a collection occurs, the current count is saved in the PFA files.

This check is somewhat unique when compared to the other “expected rate” checks in that it does not run after every collection. Instead, it runs whenever it is scheduled by the IBM Health Checker for z/OS, based on the INTERVAL value for the check. When the check is scheduled, it uses the current count of LOGREC records created over the last x minutes, where x is the collection interval value, not the value as it existed at the end of the last collection interval. This ensures that the check is always working with the latest information. For example:

•The time of the last collection was 8:00 a.m. (this data is collected for the time period 7:00 a.m. to 8:00 a.m. because COLLECTINT is 60).

•The time of the next comparison is 8:45 a.m. (every 15 minutes, because the INTERVAL is set to 15).

•The LOGREC record arrival rate used in the comparison is the arrival rate from 7:45 a.m. to 8:45 a.m.

This check does not look for individual LOGREC records, patterns of LOGREC records, or bursts of failures unless that burst is within a key grouping.

This check supports the ability to exclude address spaces that issue LOGREC records erratically by allowing you to define them in the PFA_LOGREC_ARRIVAL_RATE/config/EXCLUDED_JOBS file. This feature is especially useful when address spaces are not production-ready and create many LOGREC records on an irregular basis, or when address spaces that issue LOGREC records in a regular fashion are stopped and started frequently.

|

PFA_LOGREC_ARRIVAL_RATE check:

•The record must be a software LOGREC record with a usable SDWA to be counted as an arrival.

•z/OS LOGREC provides two options for the LOGREC recording medium: a System Logger log stream, or the LOGREC data set.

When a LOGREC record is produced, PFA is notified through an ENF listener. If you are writing a LOGREC record to the LOGREC data set and that data set fills up, PFA stops getting notified when a LOGREC is produced.

Therefore, for the best reliability, use the log stream method.

•If you change the COLLECTINT, perform the following steps to ensure that the values being modelled in the check remain consistent:

– First, stop PFA.

– Next, delete the files in the PFA_LOGREC_ARRIVAL_RATE/data directory.

– Finally, restart PFA.

•If an exception occurs, refer to the best practices in z/OS Problem Management, G325-2564.

|

Frames and slots usage

As noted in the label box Apply the PTF for APAR)A40065 on page 52, the PFA_FRAMES_AND_SLOTS_USAGE check has been permanently removed from PFA.

Message arrival rate

The PFA_MESSAGE_ARRIVAL_RATE check detects a potentially damaged address space or system based on the message arrival rate being higher or lower than expected. Messages included in the count of arrivals are single line and multi-line WTO and WTOR messages. Multi-line WTO messages are counted as one message. Branch Entry WTO messages are not counted. Messages are counted prior to being excluded or modified by other functions such as message flood automation.

The message arrival rate is a simple ratio calculated by dividing the number of arrivals in the collection interval by the CPU time used in that same collection interval.

This check does not track the message by individual message identifier, and therefore is not designed to detect abnormal message patterns or single critical messages.

The message arrival rate check performs its comparisons after every collection rather than being scheduled by the IBM Health Checker for z/OS INTERVAL parameter. By performing the check automatically upon successful completion of a collection, the check is able to compare the most recent arrivals with the predictions modeled at the last modeling interval. This design increases the validity of the check itself and the responsiveness of the check to the current activity of the system. Even though it can be run manually using IBM Health Checker for z/OS interfaces, there is no benefit because doing so only repeats the last comparison.

The message arrival rate check creates models and performs comparisons for time ranges based on 1 hour of data, 24 hours of data, and 7 days of data. If the amount of data is not available, the total system rate line for that time range is not printed on the report and UNAVAILABLE is printed for the individual address spaces for that time range.

|

PFA_MESSAGE_ARRIVAL_RATE check: So that address spaces that produce erratic numbers of messages do not skew the predictions, the following exclusions are done:

•The CONSOLE address space is excluded from this check’s processing. This behavior cannot be changed.

•Any address space that starts with “JES” is excluded from this check’s processing by default through the PFA_MESSAGE_ARRIVAL_RATE/config/EXCLUDED_JOBS file. This behavior can be changed, but is not suggested.

|

For guidance about what to do when an exception occurs for a rate that is too high, refer to 2.9.5, “PFA message arrival rate check exception issued for a high rate” on page 91.

If an unexpectedly low message arrival rate is detected, examine the report in SDSF for details about why the exception was issued. Use the Runtime Diagnostics output in the report to assist you in diagnosing and fixing the problem. Also, refer to 2.9.6, “PFA exception issued for a low rate” on page 92.

SMF arrival rate

The PFA_SMF_ARRIVAL_RATE check detects a potentially damaged address space or system based on the SMF record arrival rate being higher or lower than expected. The check’s metric is the rate derived from the count of the SMF arrivals per the amount of CPU used in the interval.

If SMF is not active, this check is not processed and a WTO message is issued.

The SMF arrival rate check is not designed to detect performance problems caused by insufficient resources, a faulty WLM policy, or spikes in work. However, it might help to determine whether a performance problem detected by a performance monitor is caused by a damaged system.

This check does not detect abnormal SMF patterns or individual types of SMF records.

If SMF is stopped and restarted across a collection interval, PFA detects this change and automatically deletes the previously collected data and re-enters the warm-up phase to detect which jobs to track.

|

PFA_SMF_ARRIVAL_RATE check: If a change to your SMF definitions would result in a significant change in the number of SMF records being produced, it is advisable to do the following:

1. Stop PFA.

2. Delete the files in the PFA_SMF_ARRIVAL_RATE/data directory.

3. Restart PFA.

This ensures that PFA does not use the previously collected data in the models so that the models accurately reflect the new configuration.

|

If an unexpectedly high SMF record arrival rate is detected, review the SMF records sent by the address spaces identified on the report and examine the system log to determine what caused the increase in SMF activity. Also, refer to 2.9.7, “PFA exception issued for a high SMF arrival or high ENQ request rate” on page 92.