Cutting Corners

Imagine a development team that seems to be moving at the speed of light. No matter how many items are brought into a sprint, they always appear to be finished by the end. But as the months pass, that speed drops off sharply. Production bugs continually interrupt work on the sprint backlog, often making the sprint goal unachievable, and stakeholders are surprised by the lack of progress given such a fast start and how hard the team is working.

During a sprint retrospective, the development team had a heart-to-heart. They admitted that their early success was due to some short-sighted architectural decisions that were supposed to be temporary, and that they had been intentionally ignoring their definition of done for the past few months. They wanted to maintain the pace they had set to please the customers and stakeholders who were counting on them, so the temporary decisions became permanent. As they built more on top of the platform, it got harder and harder to add new functionality, and the copy-and-paste method the team had been relying on stopped working. They had created a large amount of technical debt.

In the old-school waterfall mentality, success is defined as being on scope, on time, and within budget. When organizations adopt Scrum, these waterfall definitions of success often carry over, which is a problem because Scrum defines success differently. In Scrum, we take a product mentality in which success is defined by delighting our customers with high-quality, valuable products. We do this by creating done product increments that are potentially shippable every sprint. This gives the product owner the option to ship the product incrementally and get frequent customer feedback on what the development team has built.

In [xxx](#chapter.sprintplanning), we discuss the importance of having a sprint goal. By focusing on accomplishing this goal and not on trying to finish all the product backlog items (PBIs), we target a business-value-based outcome (an increment of product that could bring value to a stakeholder). Trying to finish all the PBIs in the sprint backlog is waterfall thinking. In Scrum, it doesn’t matter how many PBIs the development team finishes each sprint so long as they’ve met the sprint goal.

When a project starts, it’s impossible to know exactly what kind of architectural patterns and practices will be best for the end product. Architecture should be a constant concern of the development team, and something that they regularly discuss throughout each sprint. The best architectures emerge in the same way the product backlog does, and should be validated by the development team as the product is built and delivered to customers.

A key component of developing an architecture that’s fit for purpose—meaning it meets the organization’s and customer’s current needs (and their likely future ones)—is a team’s definition of done. As we discuss in detail in Chapter 13, Deconstructing the Done Product Increment, the Scrum team creates this definition, providing a clear understanding within the team of what it means for something to be considered done. It also creates transparency with the product owner so that, when the development team says they are done, the PO knows exactly what that means.

Be sure to read Chapter 13, Deconstructing the Done Product Increment, for more about defining done, but for now here’s a quick rundown. A development team’s definition of done should evolve over time, becoming stricter as the team matures. Evaluating and changing it should be part of every sprint retrospective. For example, a team’s initial definition of done might be something like this:

- Code has been reviewed by someone other than the person who wrote it.

- The PBI has been tested by someone other than the person who developed it.

- The PBI has been deployed to the development environment.

- The PBI has been tested by someone besides the code reviewer or the person who wrote it.

Many sprints later, this team’s definition of done might evolve into this:

- Automated tests have been written.

- The code passes the continuous integration build.

- The functionality is sitting in the staging environment ready for deployment.

The difference between these two definitions is substantial. A lot of architectural considerations, practices, and organizational constraints need to be resolved to get from the first definition to the second. This maturation shows that the team isn’t cutting corners but is instead trying to advance their capabilities to get to a releasable increment, which Scrum requires.

What if a development team has a definition of done that they continually revise but which they don’t actually abide by? This will cause decreased transparency across the entire Scrum team, undone work, low-quality work, and the degradation of the Scrum values. It’s a scary situation that we’ve seen happen, and it comes with dire consequences.

Cutting corners by ignoring the definition of done trades short-term gains for long-term losses. These long-term losses can scar an organization and may put your product in an unrecoverable state. Even worse, ignoring the definition of done can cause irreparable damage to your company’s reputation. A development team must create a solution that is fit for purpose today but that also reflects a holistic design able to accommodate the future of the product and the organization.

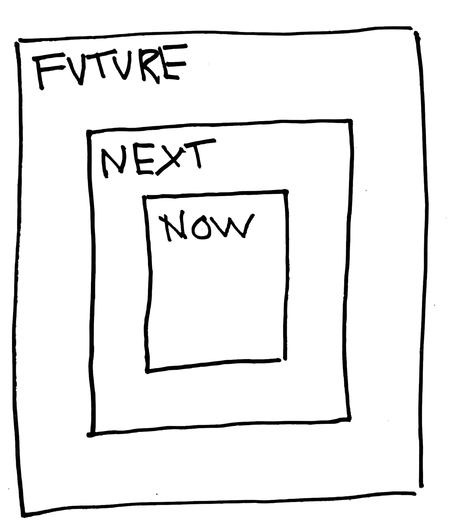

What can your team do to evaluate and evolve its definition of done? Examine your existing definition and think about the next things to add to it, and what the team hopes it will look like in the future. To get started, draw the diagram shown here on a whiteboard or flip chart. Then have the development team place sticky notes in each of the three sections:

- Now: Our definition of done as it stands today. What are we capable of right now? For instance, in our earlier example, this section would contain the following:

  - Code has been reviewed by someone other than the person who wrote it.

  - The PBI has been tested by someone other than the person who developed it.

  - The PBI has been deployed to the development environment.

  - The PBI has been tested by someone besides the code reviewer or the person who wrote it.

- Next: What do we plan to do next to advance our architectural practices and make our definition of done more strict? Again, pulling from the earlier example:

  - Automated tests have been written.

  - The code passes the continuous integration build.

  - The functionality is sitting in the staging environment, ready for deployment.

- Future: What things do we imagine being in our definition of done once we’re capable of them in the future? Here are some possibilities for our example scenario:

  - The PBI passes all automated tests (unit, integration, and functional).

  - The PBI has been reviewed by the entire Scrum team.

  - The PBI has been shipped into production.

Revisit this exercise frequently during sprint retrospectives to help evolve the team’s definition of done.
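Some teams also find it useful to keep the result of this exercise somewhere more durable than a whiteboard. The following is only a minimal sketch of that idea, not a prescribed Scrum tool: the `DoneBoard` class, its `adopt` and `unmet` methods, and the `up_next` field name are all hypothetical, and the criteria are an abbreviated subset of the example lists above.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class DoneBoard:
    """Hypothetical stand-in for the Now / Next / Future whiteboard."""

    now: List[str] = field(default_factory=list)
    up_next: List[str] = field(default_factory=list)
    future: List[str] = field(default_factory=list)

    def adopt(self, criterion: str) -> None:
        """Move a criterion from Next to Now once the team can meet it."""
        self.up_next.remove(criterion)  # ValueError if it was never in Next
        self.now.append(criterion)

    def unmet(self, satisfied: Set[str]) -> List[str]:
        """List the Now criteria that a PBI has not satisfied yet."""
        return [c for c in self.now if c not in satisfied]


# Abbreviated version of the example board (assumed, for illustration only).
board = DoneBoard(
    now=[
        "Code has been reviewed by someone other than the person who wrote it.",
        "The PBI has been deployed to the development environment.",
    ],
    up_next=["Automated tests have been written."],
    future=["The PBI has been shipped into production."],
)

# At a retrospective, the team agrees it can now meet a Next criterion.
board.adopt("Automated tests have been written.")

# A PBI that has only been code reviewed is not done under the new definition.
reviewed_only = {
    "Code has been reviewed by someone other than the person who wrote it."
}
print(board.unmet(reviewed_only))
```

The point of `unmet` is transparency: a PBI counts as done only when that list is empty, so a skipped criterion shows up by name and does not disappear quietly.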