3 Why function purity matters

- What makes a function pure or impure

- Why purity matters in concurrent scenarios

- How purity relates to testability

- Reducing the impure footprint of your code

The initial name for this chapter was “The irresistible appeal of purity.” But if it was so irresistible, we’d have more functional programmers, right? Functional programmers, you see, are suckers for pure functions: functions with no side effects. In this chapter, you’ll see what that means exactly and why pure functions have some very desirable properties.

Unfortunately, this fascination with pure functions is partly why FP as a discipline has become disconnected from the industry. As you’ll soon realize, there’s little purity in most real-world applications. And yet, purity is still relevant in the real world, as I hope to show in this chapter.

We’ll start by looking at what makes a function pure (or impure), and then you’ll see how purity affects a program’s testability and even correctness, especially in concurrent scenarios. I hope that by the end of the chapter, you’ll find purity if not irresistible at least definitely worth keeping in mind.

3.1 What is function purity?

In chapter 2, you saw that mathematical functions are completely abstract entities. Although some programming functions are close representations of mathematical functions, this is often not the case. You often want a function to print something to the screen, to process a file, or to interact with another system. In short, you often want a function to do something, to have a side effect. Mathematical functions do nothing of the sort; they only return a value.

There’s a second important difference: mathematical functions exist in a vacuum, so their results are determined strictly by their arguments. The programming constructs we use to represent functions, on the other hand, all have access to a context: an instance method has access to instance fields, a lambda has access to variables in the enclosing scope, and many functions have access to things that are completely outside the scope of the program, such as the system clock, a database, or a remote service, for instance.

That this context exists, that its limits aren’t always clearly demarcated, and that it can consist of things that change outside of the program’s control means that the behavior of functions in programming is substantially more complex to analyze than functions in mathematics. This leads to a distinction between pure and impure functions.

3.1.1 Purity and side effects

Pure functions closely resemble mathematical functions: they do nothing other than compute an output value based on their input values. Table 3.1 contrasts pure and impure functions.

Table 3.1 Requirements of pure functions

To clarify this definition, we must define exactly what a side effect is. A function is said to have side effects if it does any of the following:

-

Mutates global state—Global here means any state that’s visible outside of the function’s scope. For example, a private instance field is considered global because it’s visible from all methods within the class.

-

Mutates its input arguments—Arguments passed by the caller are effectively a state that a function shares with its caller. If a function mutates one of its arguments, that’s a side effect that’s visible to the caller.

-

Throws exceptions—You can reason about pure functions in isolation; however, if a function throws exceptions, then the outcome of calling it is context-dependent. Namely, it differs depending on whether the function is called in a

try-catch. -

Performs any I/O operation—This includes any interaction between the program and the external world, including reading from or writing to the console, the filesystem, or a database, and interacting with any process outside the application’s boundary.

In summary, pure functions have no side effects, and their output is solely determined by their inputs. Note that both conditions must hold:

-

A function that has no side effects can still be impure. Namely, a function that reads from global mutable state is likely to have an output that depends on factors other than its inputs.

-

A function whose output depends entirely on its inputs can also be impure. It could still have side effects such as updating global mutable state.

The deterministic nature of pure functions (they always return the same output for the same input) has some interesting consequences. Pure functions are easy to test and to reason about.1

Furthermore, the fact that outputs only depend on inputs means that the order of evaluation isn’t important. Whether you evaluate the result of a function now or later, the result does not change. This means that the parts of your program that consist entirely of pure functions can be optimized in a number of ways:

-

Parallelization—Different threads carry out tasks in parallel.

-

Memoization—Caches the result of a function so that it’s only computed once.

On the other hand, using these techniques with impure functions can lead to rather nasty bugs. For these reasons, FP advocates that pure functions be preferred as far as possible.

3.1.2 Strategies for managing side effects

OK, let’s aim to use pure functions whenever possible. But is it always possible? Is it ever possible? Well, if you look at the list of things considered as side effects, it’s a pretty mixed bag, so the strategies for managing side effects depend on the types of side effects in question.

Mutating input arguments is the easiest of the lot. This side effect can always be avoided, and I’ll demonstrate this next. It’s also possible to always avoid throwing exceptions. We’ll look at error handling without throwing exceptions in chapters 8 and 14.

Writing programs (even stateful programs) without state mutation is also possible. You can write any program without ever mutating state.2 This may be a surprising realization for an OO programmer and requires a real shift in thinking. In section 3.2, I’ll show you a simple example of how avoiding state mutation enables you to easily parallelize a function. In later chapters, you’ll learn various techniques to tackle more complex tasks without relying on state mutation.

Finally, I’ll discuss how to manage I/O in section 3.3. By learning these techniques, you’ll be able to isolate or avoid side effects and, thus, harness the benefits of pure functions.

3.1.3 Avoid mutating arguments

You can view a function signature as a contract: the function receives some inputs and returns some output. When a function mutates its arguments, this muddies the waters because the caller relies on this side effect to happen, even though this is not declared in the function signature. For this reason, I’d argue that mutating arguments is a bad idea in any programming paradigm. Nonetheless, I’ve repeatedly stumbled on implementations that do something along these lines:

decimal RecomputeTotal(Order order, List<OrderLine> linesToDelete) { var result = 0m; foreach (var line in order.OrderLines) if (line.Quantity == 0) linesToDelete.Add(line); else result += line.Product.Price * line.Quantity; return result; }

RecomputeTotal is meant to be called when the quantity of items in an order is modified. It recomputes the total value of the order and, as a side effect, adds the order lines whose quantities have changed to zero to the given linesToDelete list. This is represented in figure 3.1.

The reason why this is such a terrible idea is that the behavior of the method is now tightly coupled with that of the caller: the caller relies on the method to perform its side effect, and the callee relies on the caller to initialize the list. As such, each method must be aware of the implementation details of the other, making it impossible to reason about the code in isolation.

WARNING Another problem with methods that mutate their argument is that, if you were to change the type of the argument from a class to a struct, you’d get a radically different behavior because structs are copied when passed between functions.

You can easily avoid this kind of side effect by returning all the computed information to the caller instead. It’s important to recognize that the method is effectively computing two pieces of data: the new total for the order and the list of lines that can be deleted. You can make this explicit by returning a tuple. The refactored code would be as follows:

(decimal NewTotal, IEnumerable<OrderLine> LinesToDelete) RecomputeTotal(Order order) => (order.OrderLines.Sum(l => l.Product.Price * l.Quantity) , order.OrderLines.Where(l => l.Quantity == 0));

Figure 3.2 represents this refactored version and appears simplified. After all, now it’s just a normal function that takes some input and returns an output.

Following this principle, you can always structure your code in such a way that functions never mutate their input arguments. In fact, it would be ideal to enforce this by always using immutable objects—objects that once created cannot be changed. We’ll discuss this in detail in chapter 11.

3.2 Enabling parallelization by avoiding state mutation

In this section, I’ll show you a simple scenario that illustrates why pure functions can always be parallelized while impure functions can’t. Imagine you want to format a list of strings as a numbered list:

To do this, you could define a ListFormatter class, with the following usage:

var shoppingList = new List<string> { "coffee beans", "BANANAS", "Dates" }; new ListFormatter() .Format(shoppingList) .ForEach(WriteLine); // prints: 1. Coffee beans // 2. Bananas // 3. Dates

The following listing shows one possible implementation of ListFormatter.

Listing 3.1 A list formatter combining pure and impure functions

static class StringExt { public static string ToSentenceCase(this string s) ❶ => s == string.Empty ? string.Empty : char.ToUpperInvariant(s[0]) + s.ToLower()[1..]; } class ListFormatter { int counter; string PrependCounter(string s) => $"{++counter}. {s}"; ❷ public List<string> Format(List<string> list) => list .Select(StringExt.ToSentenceCase) ❸ .Select(PrependCounter) ❸ .ToList(); }

❷ An impure function (it mutates global state).

❸ Pure and impure functions can be applied similarly.

There are a few things to point out with respect to purity:

-

ToSentenceCaseis pure (its output is strictly determined by the input). Because its computation only depends on the input parameter, it can be made static without any problems.3 -

PrependCounterincrements the counter, so it’s impure. Because it depends on an instance member (the counter), you can’t make it static. -

In the

Formatmethod, you apply both functions to items in the list withSelect, irrespective of purity. This isn’t ideal, as you’ll soon learn. In fact, there should ideally be a rule thatSelectshould only be used with pure functions.

If the list you’re formatting is big enough, would it make sense to perform the string manipulations in parallel? Could the runtime decide to do this as an optimization? We’ll tackle these questions next.

3.2.1 Pure functions parallelize well

Given a big enough set of data to process, it’s usually advantageous to process it in parallel, especially when the processing is CPU-intensive and the pieces of data can be processed independently. Pure functions parallelize well and are generally immune to the issues that make concurrency difficult. (For a refresher on concurrency and parallelism, see the sidebar on the “Meaning and types of concurrency”).

I’ll illustrate this by trying to parallelize our list-formatting functions with ListFormatter. Compare these two expressions:

The first expression uses the Select method defined in System.Linq.Enumerable to apply the pure function ToSentenceCase to each element in the list. The second expression is similar, but it uses methods provided by Parallel LINQ (PLINQ).4 AsParallel turns the list into a ParallelQuery. As a result, Select resolves to the implementation defined in ParallelEnumerable, which applies ToSentenceCase to each item in the list, but now in parallel.

The list is split into chunks, and several threads are fired to process each chunk. The results are then harvested into a single list when ToList is called. Figure 3.3 shows this process.

As you would expect, the two expressions yield the same results, but one does so sequentially and the other in parallel. This is nice. With just one call to AsParallel, you get parallelization almost for free.

Why almost for free? Why do you have to explicitly instruct the program to parallelize the operation? Why can’t the runtime figure out that it’s a good idea to parallelize an operation just like it figures out when it’s a good time to run the garbage collector?

The answer is that the runtime doesn’t know enough about the function to make an informed decision on whether parallelization might change the program flow. Because of their properties, pure functions can always be applied in parallel, but the runtime doesn’t know whether the function being applied is pure.

3.2.2 Parallelizing impure functions

You’ve seen that you could successfully apply the pure ToSentenceCase in parallel. Let’s see what happens if you naively apply the impure PrependCounter function in parallel:

If you now create a list with a million items and format it with the naively parallelized formatter, you’ll find that the last item in the list will be prepended not with 1,000,000, but with a smaller number. If you’ve downloaded the code samples, you can try it for yourself by running

The output will end with something like

Because PrependCounter increments the counter variable, the parallel version will have multiple threads reading and updating the counter, as figure 3.4 shows. As is well known, ++ is not an atomic operation, and because there’s no locking in place, we’ll lose some of the updates and end up with an incorrect result.

This will sound familiar if you have some multithreading experience. Because multiple processes are reading and writing to the counter at the same time, some of the updates are lost. Of course, you could fix this by using a lock or the Interlocked class when incrementing the counter. But this would entail a performance hit, wiping out some of the gains made by parallelizing the computation. Furthermore, locking is an imperative construct that we’d rather avoid when coding functionally.

Let’s summarize. Unlike pure functions, whose application can be parallelized by default, impure functions don’t parallelize out of the box. And because parallel execution is nondeterministic, you may get some cases in which your result is correct and others in which it isn’t (not the sort of bug you’d like to face).

Being aware of whether your functions are pure or not can help you understand these issues. Furthermore, if you develop with purity in mind, it’s easier to parallelize the execution if you decide to do so.

3.2.3 Avoiding state mutation

One possible way to avoid the pitfalls of concurrent updates is to remove the problem at the source; don’t use shared states to begin with. How this can be done varies with each scenario, but I’ll show you a solution for the current scenario that enables us to format the list in parallel.

Let’s go back to the drawing board and see if there’s a sequential solution that doesn’t involve mutation. What if, instead of updating a running counter, you generate a list of all the counter values you need and then pair items from the given list with items from the list of counters? For the list of integers, you can use Range, a convenience method on Enumerable, as the following listing demonstrates.

Listing 3.2 Generating a range of integers

The operation of pairing two parallel lists is common in FP. It’s called Zip. Zip takes two lists to pair up and a function to apply to each pair. The following listing shows an example.

Listing 3.3 Combining elements from parallel lists with Zip

Enumerable.Zip( new[] {1, 2, 3}, new[] {"ichi", "ni", "san"}, (number, name) => $"In Japanese, {number} is: {name}") // => ["In Japanese, 1 is: ichi", // "In Japanese, 2 is: ni", // "In Japanese, 3 is: san"]

You can rewrite the list formatter using Range and Zip as the following listing shows.

Listing 3.4 List formatter refactored to use pure functions only

using static System.Linq.Enumerable; static class ListFormatter { public static List<string> Format(List<string> list) { var left = list.Select(StringExt.ToSentenceCase); var right = Range(1, list.Count); var zipped = Zip(left, right, (s, i) => $"{i}. {s}"); return zipped.ToList(); } }

Here you use the list with ToSentenceCase applied to it as the left side of Zip. The right side is constructed with Range. The third argument to Zip is the pairing function: what to do with each pair of items. Because Zip can be used as an extension method, you can write the Format method using a more fluent syntax:

public static List<string> Format(List<string> list) => list .Select(StringExt.ToSentenceCase) .Zip(Range(1, list.Count), (s, i) => $"{i}. {s}") .ToList();

After this refactoring, Format is pure and can safely be made static. But what about making it parallel? That’s a piece of cake because PLINQ offers an implementation of Zip that works with parallel queries. The following listing provides a parallel implementation of the list formatter.

Listing 3.5 A pure implementation that executes in parallel

using static System.Linq.ParallelEnumerable; ❶ static class ListFormatter { public static List<string> Format(List<string> list) => list.AsParallel() ❷ .Select(StringExt.ToSentenceCase) .Zip(Range(1, list.Count), (s, i) => $"{i}. {s}") .ToList(); }

❶ Uses Range exposed by ParallelEnumerable

❷ Turns the original data source into a parallel query

This is almost identical to the sequential version; there are only two differences. First, AsParallel is used to turn the given list into a ParallelQuery so that everything after that is done in parallel. Second, the change in using static has the effect that Range now refers to the implementation defined in ParallelEnumerable (this returns a ParallelQuery, which is what the parallel version of Zip expects). The rest is the same as the sequential version, and the parallel version of Format is still a pure function.

In this scenario, it was possible to enable parallel execution by removing state updates altogether, but this isn’t always the case, nor is it always this easy. But the ideas you’ve seen so far put you in a better position when tackling issues related to parallelism and, more generally, concurrency.

3.3 Purity and testability

In the previous section, you saw the relevance of purity in a concurrent scenario. Because the side effect had to do with state mutation, we could remove the mutation, and the resulting pure function could be run in parallel without problems.

Now we’ll look at functions that perform I/O and how purity is relevant to unit testing. Unit tests have to be repeatable (if a test passes, it should do so irrespective of when it’s run, on what machine, whether there’s a connection, and so on). This is closely related to our requirement that pure functions be deterministic.

I’m aiming to leverage your knowledge of unit testing to help you understand the relevance of purity and also to dispel the notion that purity is only of a theoretical interest. Your manager may not care whether you write pure functions, but they’re probably keen on good test coverage.

3.3.1 Isolating I/O effects

Unlike mutation, you can’t avoid side effects related to I/O. Whereas mutation is an implementation detail, I/O is usually a requirement. Here are a few examples that help to clarify why functions that perform I/O can never be pure:

-

A function that takes a URL and returns the resource at that URL yields a different result any time the remote resource changes, or it may throw an error if the connection is unavailable.

-

A function that takes a file path and contents to be written to a file can throw an error if the directory doesn’t exist or if the process hosting the program lacks write permissions.

-

A function that returns the current time from the system clock returns a different result at any instant.

As you can see, any dependency on the external world gets in the way of function purity because the state of the world affects the function’s return value. On the other hand, if your program is to do anything of use, there’s no escaping the fact that some I/O is required. Even a purely mathematical program that just performs a computation must perform some I/O to communicate its result. Some of your code will have to be impure.

How can you reap the benefits of purity while satisfying the requirement to perform I/O? You isolate the pure, computational parts of your programs from the I/O. In this way, you minimize the footprint of I/O and reap the benefits of purity for the pure part of the program. For example, consider the following code:

using static System.Console; WriteLine("Enter your name:"); var name = ReadLine(); WriteLine($"Hello {name}");

This trivial program mixes I/O with logic that could be captured in a pure function, as follows:

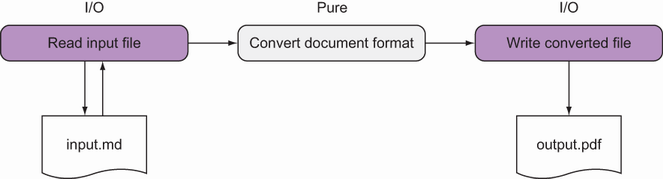

In some real-world programs, separating logic from I/O is relatively simple. For example, take a document format converter like Pandoc, which can be used to convert a file from, say, Markdown to PDF. When you execute Pandoc, it performs the steps shown in figure 3.5.

Figure 3.5 A program where I/O can easily be isolated. The core logic performing format conversion can be kept pure.

The computational part of the program, which performs the format conversion, can be made entirely of pure functions. The impure functions that perform I/O can call the pure functions that perform the translation, but the functions that perform the translation can’t call any function that performs I/O, or they will also become impure.

Most Line of Business (LOB) applications have a more complex structure in terms of I/O, so isolating the purely computational parts of the program from I/O is quite a challenge. Next, I’ll introduce a business scenario we’ll use throughout the book, and we’ll see how we can test some validation that performs I/O.

3.3.2 A business validation scenario

Imagine you’re writing some code for an online banking application. Your client is the Bank of Codeland (BOC); the BOC’s customers can use a web or mobile device to make money transfers. Before booking a transfer, the server has to validate the request, as figure 3.6 shows.

Let’s assume that the user’s request to make a transfer is represented by a MakeTransfer command. A command is a simple data transfer object (DTO) that the client sends the server, encapsulating details about the action it wants to be performed. The following listing shows our call to MakeTransfer.

Listing 3.6 A DTO representing a request to make a money transfer

public abstract record Command(DateTime Timestamp); public record MakeTransfer ( Guid DebitedAccountId, ❶ string Beneficiary, ❷ string Iban, ❷ string Bic, ❷ DateTime Date, ❸ decimal Amount, ❸ string Reference, ❸ DateTime Timestamp = default ) : Command(Timestamp) { internal static MakeTransfer Dummy ❹ => new(default, default, default , default, default, default, default); }

❶ Identifies the sender’s account

❷ Details about the beneficiary’s account

❹ We’ll use this for testing when you don’t need all the properties to be populated.

The properties of a MakeTransfer are populated by deserializing the client’s request, except for the Timestamp, which needs to be set by the server. An initial default value is therefore declared. When unit testing, we’ll have to populate the object manually, so having a Dummy instance allows you to only populate the properties relevant to the test, as you’ll see in a moment.

Validation in this scenario can be quite complex. For the purposes of this explanation, we’ll only look at the following validation:

-

The

Datefield, representing the date on which the transfer should be executed, should not be past. -

The BIC code, a standard identifier for the beneficiary’s bank, should be valid.

We’ll start with an OO design. (In chapter 9, I show a more thoroughly functional approach to this scenario.) Following the single-responsibility principle, we’ll write one class for each particular validation. Let’s draft a simple interface that all these validator classes will implement:

Now that we have our domain-specific abstractions in place, let’s start with a basic implementation. The next listing shows how this is done.

Listing 3.7 Implementing validation rules

using System.Text.RegularExpressions; public class BicFormatValidator : IValidator<MakeTransfer> { static readonly Regex regex = new Regex("^[A-Z]{6}[A-Z1-9]{5}$"); public bool IsValid(MakeTransfer transfer) => regex.IsMatch(transfer.Bic); } public class DateNotPastValidator : IValidator<MakeTransfer> { public bool IsValid(MakeTransfer transfer) => (DateTime.UtcNow.Date <= transfer.Date.Date); }

That was fairly easy. Is the logic in BicFormatValidator pure? Yes, because there are no side effects and the result of IsValid is deterministic. What about DateNotPastValidator? In this case, the result of IsValid depends on the current date, so clearly, the answer is no! What kind of side effect are we facing here? It’s I/O: DateTime.UtcNow queries the system clock, which is outside the context of the program.

Functions that perform I/O are difficult to test. For example, consider the following test:

[Test] public void WhenTransferDateIsFuture_ThenValidationPasses() { var sut = new DateNotPastValidator(); ❶ var transfer = MakeTransfer.Dummy with { Date = new DateTime(2021, 3, 12) ❷ }; var actual = sut.IsValid(transfer); Assert.AreEqual(true, actual); }

❶ sut stands for “structure under test.”

❷ This date used to be in the future!

This test creates a MakeTransfer command to make a transfer on 2021-03-12. (If you’re unfamiliar with the with expression syntax used in the example, I’ll discuss this in section 11.3.) It then asserts that the command should pass the date-not-past validation.

The test passes as I’m writing this, but it will fail by the time you’re reading it, unless you’re running it on a machine where the clock is set before 2021-03-12. Because the implementation relies on the system clock, the test is not repeatable.

Let’s take a step back and see why testing pure functions is fundamentally easier than testing impure ones. Then, in section 3.4, we’ll come back to this example and see how we can bring DateNotPastValidator under test.

3.3.3 Why testing impure functions is hard

When you write unit tests, what are you testing? A unit, of course, but what’s a unit exactly? Whatever unit you’re testing is a function or can be viewed as one.

Unit tests need to be isolated (no I/O) and repeatable (you always get the same result, given the same inputs). These properties are guaranteed when you use pure functions. When you’re testing a pure function, testing is easy: you just give it an input and verify that the output is as expected (as figure 3.7 illustrated).

Figure 3.7 Testing a pure function is easy: you simply provide inputs and verify that the outputs are as expected.

If you use the standard Arrange Act Assert (AAA) pattern in your unit tests and the unit you’re testing is a pure function, then the arrange step consists of defining the input values, the act step is the function invocation, and the assert step consists of checking that the output is as expected.5 If you do this for a representative set of input values, you can be confident that the function works as intended.

If, on the other hand, the unit you’re testing is an impure function, its behavior depends not only on its inputs but, possibly, also on the state of the program (any mutable state that’s not local to the function under test) and the state of the world (anything outside the context of your program). Furthermore, the function’s side effects can lead to a new state of the program and the world: for example,

-

The date validator depends on the state of the world, specifically the current time.

-

A

void-returning method that sends an email has no explicit output to assert against, but it results in a new state of the world. -

A method that sets a non-local variable results in a new state of the program.

As a result, you could view an impure function as a pure function that takes as input its arguments, along with the current state of the program and the world, and returns its outputs, along with a new state of the program and the world. Figure 3.8 shows this process.

Figure 3.8 Testing an impure function. You need to set up and assert against more than just the function inputs and output.

Another way to look at this is that an impure function has implicit inputs other than its arguments or implicit outputs other than its return value or both.

How does this affect testing? Well, in the case of an impure function, the arrange stage must not only provide the explicit inputs to the function under test, but must additionally set up a representation of the state of the program and the world. Similarly, the assert stage must not only check the result, but also that the expected changes have occurred in the state of the program and the world. This is summarized in table 3.2.

Tanle 3.2 Unit testing from a functional perspective

|

Sets up the (explicit and implicit) inputs to the function under test | |

|

Verifies the correctness of the (explicit and implicit) output |

Again, we should distinguish between different kinds of side effects with respect to testing:

-

Setting the state of the program and checking that it’s updated makes for brittle tests and breaks encapsulation.

-

The state of the world can be represented by using stubs that create an artificial world in which the test runs.

It’s hard work, but the technique is well understood. We’ll look at this next.

3.4 Testing code that performs I/O

In this section, you’ll see how we can bring code that depends on I/O operations under test. I’ll show you different approaches to dependency injection, contrasting the mainstream OO approach with a more functional approach.

To demonstrate this, let’s go back to the impure validation in DateNotPastValidator and see how we can refactor the code to make it testable. Here’s a reminder of the code:

public class DateNotPastValidator : IValidator<MakeTransfer> { public bool IsValid(MakeTransfer transfer) => (DateTime.UtcNow.Date <= transfer.Date.Date); }

The problem is that because DateTime.UtcNow accesses the system clock, it’s not possible to write tests that are guaranteed to behave consistently.6 Let’s see how we can address this.

3.4.1 Object-oriented dependency injection

The mainstream technique for testing code that depends on I/O operations is to abstract these operations in an interface and to use a deterministic implementation in the tests. If you’re already familiar with this approach, skip to section 3.4.2.

This interface-based approach to dependency injection is considered a best practice, but I’ve come to think of it as an anti-pattern. This is because of the amount of boilerplate it entails. It involves the following steps, which we’ll look at in greater detail in the following sections:

-

Define an interface that abstracts the I/O operations performed by the code you want to bring under test and put the impure implementation in a class that implements that interface.

-

In the class under test, require the interface in the constructor, store it in a field, and consume it as needed.

-

Create and inject a stubbed implementation for the purposes of unit testing.

-

Introduce some bootstrapping logic so that the impure implementation is provided at run time when the class under test is instantiated.

Abstracting I/O with an interface

Instead of calling DateTime.UtcNow directly, you abstract access to the system clock. That is, you define an interface and an implementation that performs the desired I/O like this:

public interface IDateTimeService { DateTime UtcNow { get; } ❶ } public class DefaultDateTimeService : IDateTimeService { public DateTime UtcNow => DateTime.UtcNow; ❷ }

❶ Encapsulates the impure behavior in an interface

❷ Provides a default implementation

You then refactor the date validator to consume this interface instead of accessing the system clock directly. The validator’s behavior now depends on the interface of which an instance should be injected (usually in the constructor). The following listing shows how to do this.

Listing 3.8 Refactoring a class to consume an interface

public class DateNotPastValidator : IValidator<MakeTransfer> { private readonly IDateTimeService dateService; public DateNotPastValidator (IDateTimeService dateService) ❶ { this.dateService = dateService; } public bool IsValid(MakeTransfer transfer) => dateService.UtcNow.Date <= transfer.Date.Date; ❷ }

❶ Injects the interface in the constructor

❷ Validation now depends on the interface.

Let’s look at the refactored IsValid method: is it a pure function? Well, the answer is, it depends! It depends, of course, on the implementation of IDateTimeService that’s injected:

-

When running normally, you’ll compose your objects so that you get the real impure implementation that checks the system clock.

-

When running unit tests, you’ll inject a fake pure implementation that does something predictable, such as always returning the same

DateTime, enabling you to write tests that are repeatable.

The following listing shows how you can write tests using this approach.

Listing 3.9 Testing by injecting a predictable implementation

public class DateNotPastValidatorTest { static DateTime presentDate = new DateTime(2021, 3, 12); private class FakeDateTimeService : IDateTimeService ❶ { public DateTime UtcNow => presentDate; } [Test] public void WhenTransferDateIsPast_ThenValidationFails() { var svc = new FakeDateTimeService(); var sut = new DateNotPastValidator(svc); ❷ var transfer = MakeTransfer.Dummy with { Date = presentDate.AddDays(-1) }; Assert.AreEqual(false, sut.IsValid(transfer)); } }

❶ Provides a pure, fake implementation

That is, we create a stub, a fake implementation that, unlike the real one, has a deterministic result.

We’re still not done because we need to provide DateNotPastValidator with the IDateTimeService it depends on at run time. This can be done in a variety of ways, both manually and with the help of a framework, depending on the complexity of your program and your technologies of choice.7 In an ASP.NET application, it may end up looking like this:

public void ConfigureServices(IServiceCollection services) { services.AddTransient<IDateTimeService, DefaultDateTimeService>(); services.AddTransient<DateNotPastValidator>(); }

This code registers the real, impure implementation DefaultDateTimeService, associating it with the IDateTimeService interface. As a result, when a DateNotPastValidator is required, ASP.NET sees that it needs an IDateTimeService in the constructor and provides it an instance of DefaultDateTimeService.

Pitfalls of the interface-based approach

Unit tests are so valuable that developers gladly put up with all this effort, even for something as simple as DateTime.UtcNow. One of the least desirable effects of using the interface-based approach systematically is the explosion in the number of interfaces because you must define an interface for every component that has an I/O element.

Most applications are developed with an interface for every service, even when only one concrete implementation is envisaged. These are called header interfaces, and they’re not what interfaces were initially designed for (a common contract with several different implementations), but they’re used across the board. You end up with more files, more indirection, more assemblies, and code that’s difficult to navigate.

3.4.2 Testability without so much boilerplate

I’ve discussed the pitfalls of the interface-based approach to dependency injection. In this subsection, I’ll show you some simpler alternatives. Namely, instead of consuming an interface, the code under test can consume a function or, sometimes, simply a value.

Pushing the pure boundary outwards

Can we get rid of the whole problem and make everything pure? No, we’re required to check the current date. This is an operation with a nondeterministic result. But sometimes, we can push the boundaries of pure code. For instance, what if you rewrote the date validator as in the following listing?

Listing 3.10 Injecting a specific value, not an interface, making IsValid pure

public record DateNotPastValidator(DateTime Today) : IValidator<MakeTransfer> { public bool IsValid(MakeTransfer transfer) => Today <= transfer.Date.Date; }

Instead of injecting an interface, exposing some method you can invoke, we inject a value. Now the implementation of IsValid is pure! You’ve effectively pushed the side effect of reading the current date outward to the code instantiating the validator. To set up the creation of this validator, you might use some code like this:

public void ConfigureServices(IServiceCollection services) { services.AddTransient<DateNotPastValidator> (_ => new DateNotPastValidator(DateTime.UtcNow.Date)); }

Without going into detail, this code defines a function to be called whenever a DateNotPastValidator is required, and within this function, the current date creates the new instance. Note that this requires DateNotPastValidator to be transient; we have a new instance created when one is needed to validate an incoming request. This is a reasonable behavior in this case.

Consuming a value rather than a method that performs I/O is an easy win, making more of your code pure and, thus, easily testable. This approach works well when your logic depends on, say, configurations that are stored in a file or environment-specific settings. But things are not always this easy, so let’s move on to a more general solution.

Injecting functions as dependencies

Imagine that when a MakeTransfer request is received, a list of several validators, each enforcing a different rule, is created. If one validation fails, the request fails, and the subsequent validators will not be called.

Furthermore, imagine that querying the system clock is expensive (it isn’t, but most I/O operations are). You don’t want to do that every time the validator is created, but only when it’s actually used. You can achieve this by injecting a function, rather than a value, which the validator calls as needed:

public record DateNotPastValidator(Func<DateTime> Clock) : IValidator<MakeTransfer> { public bool IsValid(MakeTransfer transfer) => Clock().Date <= transfer.Date.Date; }

I’ve called the injected function Clock, because what’s a clock if not a function you can call to get the current time? The implementation of IsValid now performs no side effects other than those performed by Clock, so it can easily be tested by injecting a “broken clock”:

readonly DateTime today = new(2021, 3, 12); [Test] public void WhenTransferDateIsToday_ThenValidatorPasses() { var sut = new DateNotPastValidator(() => today); var transfer = MakeTransfer.Dummy with { Date = today }; Assert.AreEqual(true, sut.IsValid(transfer)); }

On the other hand, when creating the validator, you’ll pass a function that actually queries the system clock, as follows:

public void ConfigureServices(IServiceCollection services) { services.AddSingleton<DateNotPastValidator> (_ => new DateNotPastValidator(() => DateTime.UtcNow.Date)); }

Notice that because the function that returns the current date is now called by the validator, it’s no longer required to have the validator be short-lived. You could use it as a singleton as I showed in the preceding snippet.

This solution ticks all the boxes: the validator can now be tested deterministically, no I/O will be performed unless required, and we don’t need to define any unnecessary interfaces or trivial classes. We’ll pursue this approach further in chapter 9.

Injecting a delegate for more clarity

If you go down the route of injecting a function, you could consider going the extra mile. You can define a delegate rather than simply using a Func:

public delegate DateTime Clock(); public record DateNotPastValidator(Clock Clock) : IValidator<MakeTransfer> { public bool IsValid(MakeTransfer transfer) => Clock().Date <= transfer.Date.Date; }

The code for testing remains identical; in the setup, you can potentially gain in clarity by just registering a Clock. Once that’s done, the framework knows to use that when the validator that requires a Clock is created:

public void ConfigureServices(IServiceCollection services) { services.AddTransient<Clock>(_ => () => DateTime.UtcNow); services.AddTransient<DateNotPastValidator>(); }

3.5 Purity and the evolution of computing

I hope that this chapter has made the concept of function purity less mysterious and has shown why extending the footprint of pure code is a worthwhile objective. This improves the maintainability, performance, and testability of your code.

The evolution of software and hardware also has important consequences for how we think about purity. Our systems are increasingly distributed, so the I/O part of our programs is increasingly important. With microservices architectures becoming mainstream, our programs consist less of doing computation and more of delegating computation to other services, which they communicate with via I/O.

This increase in I/O requirements means purity is harder to achieve. But it also means increased requirements for asynchronous I/O. As you’ve seen, purity helps you deal with concurrent scenarios, which include dealing with asynchronous messages.

Hardware evolution is also important: CPUs aren’t getting faster at the same pace as before, so hardware manufacturers are moving toward combining multiple processors and cores. Parallelization is becoming the main road to computing speed, so there’s a need to write programs that can be parallelized well. Indeed, the move toward multicore machines is one of the main reasons for the renewed interest we’re currently seeing in FP.

Exercises

Write a console app that calculates a user’s Body Mass Index (BMI):

-

Prompt the user for their height in meters and weight in kilograms.

-

Output a message: underweight (BMI < 18.5), overweight (BMI >= 25), or healthy.

-

Structure your code so that pure and impure parts are separate.

-

Unit test the overall workflow using the function-based approach to abstract away the reading from and writing to the console.

Because most of this chapter was devoted to seeing the concept of purity in practice, I encourage you to investigate, applying the techniques we discussed to some code you’re presently working on. You can learn something new while getting paid for it!

-

Find a place where you’re doing some non-trivial operation based on a list (search for

foreach). See if the operation can be parallelized; if not, see if you can extract a pure part of the operation and parallelize that part. -

Search for uses of

DateTime.NoworDateTime.UtcNowin your codebase. If that area isn’t under test, bring it under test using both the interface-based approach and the function-based approach described in this chapter. -

Look for other areas of your code where you’re relying on an impure dependency that has no transitive dependencies. The obvious candidates are static classes such as

ConfigurationManagerorEnvironmentthat cross the application boundary. Try to apply the function-based testing pattern.

Summary

-

Compared to mathematical functions, programming functions are more difficult to reason about because their output may depend on variables other than their input arguments.

-

Side effects include state mutation, throwing exceptions, and I/O.

-

Functions without side effects are called pure. These functions do nothing other than return a value that depends solely on their input arguments.

-

Pure functions can be more readily optimized and tested than impure ones, and they can be used more reliably in concurrent scenarios. You should prefer pure functions whenever possible.

-

Unlike other side effects, I/O can’t be avoided, but you can still isolate the parts of your application that perform I/O in order to reduce the footprint of impure code.

1 More theoretically inclined authors show how you can reason about pure functions algebraically to prove the correctness of your program; see, for example, Graham Hutton’s Programming in Haskell, 2nd ed. Cambridge, UK: Cambridge University Press, 2016.

2 I should point out that completely avoiding state mutation is not always easy and not always practical. But avoiding state mutation most of the time is, and this is something you should be aiming toward.

3 In many languages, you’d have functions like this as freestanding functions, but methods in C# need to be inside a class. It’s mostly a matter of taste where you put your static functions.

4 PLINQ is an implementation of LINQ that works in parallel.

5 AAA is a ubiquitous pattern for structuring the code in unit tests. According to this pattern, a test consists of three steps: arrange prepares any prerequisites, act performs the operation being tested, and assert runs assertions against the obtained result.

6 You could try writing a test that reads from the system clock when populating the input MakeTransfer. This may work in most cases, but there is a small window around midnight during which, when arranging the inputs for the test, the date is different than the date when IsValid is called. You’re not, in fact, guaranteed consistency after all. Furthermore, we need an approach that will work with any I/O operation, not just accessing the clock.

7 Manually composing all classes in a complex application can become quite a chore. To mitigate this, some frameworks allow you to declare what implementations to use for any interface that’s required. These are called IoC containers, where IoC stands for inversion of control.