Chapter 4. Kustomize

Deploying to a Kubernetes cluster is, in summary, applying some YAML files and checking the result.

The hard part is developing the initial version of the YAML files; after that, they usually undergo only small changes, such as updating the container image tag, the number of replicas, or a configuration value. One option is to make these changes directly in the YAML files. It works, but any error in the process (modifying the wrong line, deleting something by mistake, adding the wrong whitespace) might be catastrophic.

For this reason, some tools let you define base Kubernetes manifests (which change infrequently) and specific files (maybe one for each environment) for setting the parameters that change more frequently. One of these tools is Kustomize.

In this chapter, you’ll learn how to use Kustomize to manage Kubernetes resource files in a template-free way without using any DSL.

The first step is to create a Kustomize project and deploy it to a Kubernetes cluster (see Recipe 4.1).

After the first deployment, the application is automatically updated with a new container image, a new configuration value, or any other field, such as the replica number (see Recipes 4.2 and 4.3).

If you’ve got several running environments (e.g., staging and production), you need to manage them similarly, yet each has its particularities. Kustomize lets you define a set of custom values per environment (see Recipe 4.4).

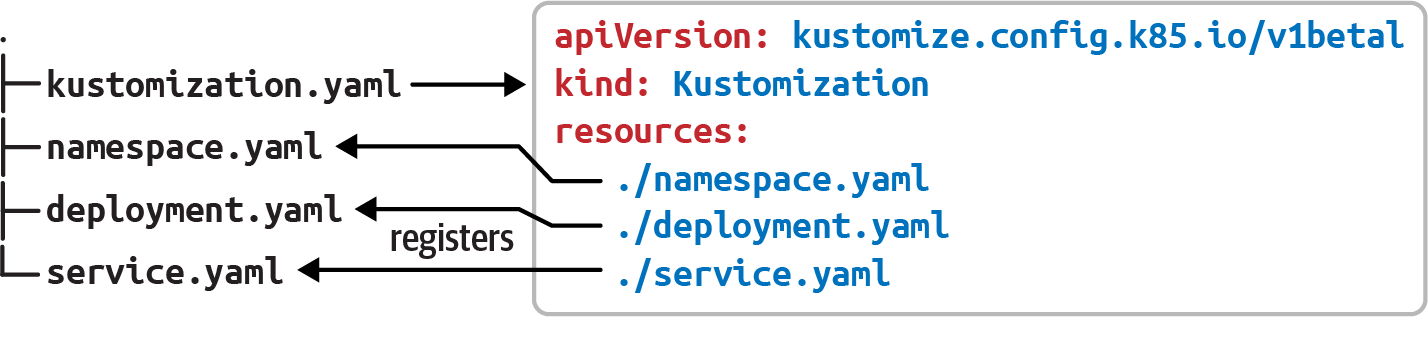

Application configuration values are properties usually mapped as a Kubernetes ConfigMap.

Any change (and its consequent update on the cluster) on a ConfigMap doesn’t trigger a rolling update of the application, which means that the application will run with the previous version until you manually restart it.

Kustomize provides some functions to automatically execute a rolling update when the ConfigMap of an application changes (see Recipe 4.5).

4.1 Using Kustomize to Deploy Kubernetes Resources

Solution

Use Kustomize to configure which resources to deploy.

Deploying an application to a Kubernetes cluster isn’t as trivial as just applying one YAML/JSON file containing a Kubernetes Deployment object.

Usually, other Kubernetes objects must be defined like Service, Ingress, ConfigMaps, etc., which makes things a bit more complicated in terms of managing and updating these resources (the more resources to maintain, the more chance to update the wrong one) as well as applying them to a cluster (should we run multiple kubectl commands?).

Kustomize is a CLI tool, integrated within kubectl, to manage, customize, and apply Kubernetes resources in a template-less way.

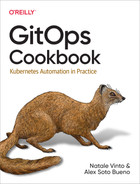

With Kustomize, you need to set a base directory with standard Kubernetes resource files (no placeholders are required) and create a kustomization.yaml file where resources and customizations are declared, as you can see in Figure 4-1.

Figure 4-1. Kustomize layout

Let’s deploy a simple web page with HTML, JavaScript, and CSS files.

First, open a terminal window and create a directory named pacman, then create three Kubernetes resource files to create a Namespace, a Deployment, and a Service with the following content.

The namespace at pacman/namespace.yaml:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: pacman

The deployment file at pacman/deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: pacman-kikd
      namespace: pacman
      labels:
        app.kubernetes.io/name: pacman-kikd
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: pacman-kikd
      template:
        metadata:
          labels:
            app.kubernetes.io/name: pacman-kikd
        spec:
          containers:
          - image: lordofthejars/pacman-kikd:1.0.0
            imagePullPolicy: Always
            name: pacman-kikd
            ports:
            - containerPort: 8080
              name: http
              protocol: TCP

The service file at pacman/service.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
      namespace: pacman
    spec:
      ports:
      - name: http
        port: 8080
        targetPort: 8080
      selector:
        app.kubernetes.io/name: pacman-kikd

Notice that these files are Kubernetes files that you could apply to a Kubernetes cluster without any problem as no special characters or placeholders are used.

The second step is to create the kustomization.yaml file in the pacman directory. It contains the list of resources that belong to the application and are applied when running Kustomize:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ./namespace.yaml
    - ./deployment.yaml
    - ./service.yaml

At this point, we can run the kustomization file against a running cluster in dry-run mode with the following command:

    kubectl apply --dry-run=client -o yaml -k ./

The --dry-run=client -o yaml options print the result of the kustomization run, without sending the result to the cluster.

The -k option sets kubectl to use the kustomization file.

The ./ argument is the directory with the parent kustomization.yaml file.

Note

We assume you’ve already started a Minikube cluster as shown in Recipe 2.3.

The output is the YAML file that would be sent to the server if the dry-run option were not used:

    apiVersion: v1
    items:
    - apiVersion: v1
      kind: Namespace
      metadata:
        name: pacman
    - apiVersion: v1
      kind: Service
      metadata:
        labels:
          app.kubernetes.io/name: pacman-kikd
        name: pacman-kikd
        namespace: pacman
      spec:
        ports:
        - name: http
          port: 8080
          targetPort: 8080
        selector:
          app.kubernetes.io/name: pacman-kikd
    - apiVersion: apps/v1
      kind: Deployment
      metadata:
        labels:
          app.kubernetes.io/name: pacman-kikd
        name: pacman-kikd
        namespace: pacman
      spec:
        replicas: 1
        selector:
          matchLabels:
            app.kubernetes.io/name: pacman-kikd
        template:
          metadata:
            labels:
              app.kubernetes.io/name: pacman-kikd
          spec:
            containers:
            - image: lordofthejars/pacman-kikd:1.0.0
              imagePullPolicy: Always
              name: pacman-kikd
              ports:
              - containerPort: 8080
                name: http
                protocol: TCP
    kind: List
    metadata: {}

Discussion

The resources section supports different inputs in addition to directly setting the YAML files.

For example, you can set a base directory with its own kustomization.yaml and Kubernetes resource files and refer to it from another kustomization.yaml file placed in another directory.

Given the following directory layout:

    .
    ├── base
    │   ├── kustomization.yaml
    │   └── deployment.yaml
    ├── kustomization.yaml
    └── configmap.yaml

And the Kustomization definitions in the base directory:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ./deployment.yaml

You’ll see that the root directory has a link to the base directory and a ConfigMap definition:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ./base
    - ./configmap.yaml

So, applying the root kustomization file will automatically apply the resources defined in the base kustomization file.

Also, resources can reference external assets from a URL following the HashiCorp URL format.

For example, we refer to a GitHub repository by setting the URL:

Repository with a root-level kustomization.yaml file

Repository with a root-level kustomization.yaml file on branch test
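A minimal sketch of a resources section matching these two descriptions. The repository github.com/example-org/example-app is hypothetical and used only for illustration; the ?ref= query parameter selects the branch:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
# Hypothetical repository with a root-level kustomization.yaml file
- github.com/example-org/example-app
# Same hypothetical repository, using the kustomization.yaml on branch test
- github.com/example-org/example-app?ref=test
```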

You’ve seen the application of a Kustomize file using kubectl, but Kustomize also comes with its own CLI tool offering a set of commands to interact with Kustomize resources.

The equivalent command to build Kustomize resources using kustomize instead of kubectl is:

    kustomize build

And the output is:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: pacman
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
      namespace: pacman
    spec:
      ports:
      - name: http
        port: 8080
        targetPort: 8080
      selector:
        app.kubernetes.io/name: pacman-kikd
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
      namespace: pacman
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: pacman-kikd
      template:
        metadata:
          labels:
            app.kubernetes.io/name: pacman-kikd
        spec:
          containers:
          - image: lordofthejars/pacman-kikd:1.0.0
            imagePullPolicy: Always
            name: pacman-kikd
            ports:
            - containerPort: 8080
              name: http
              protocol: TCP

If you want to apply this output generated by kustomize to the cluster, run the following command:

    kustomize build . | kubectl apply -f -

4.2 Updating the Container Image in Kustomize

Solution

Use the images section to update the container image.

One of the most important and most-used operations in software development is updating the application to a newer version either with a bug fix or with a new feature.

In Kubernetes, this means that you need to create a new container image, and name it accordingly using the tag section (<registry>/<username>/<project>:<tag>).

Given the following partial deployment file:

    spec:
      containers:
      - image: lordofthejars/pacman-kikd:1.0.0
        imagePullPolicy: Always
        name: pacman-kikd

We can update the version tag to 1.0.1 by using the images section in the kustomization.yaml file:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ./namespace.yaml
    - ./deployment.yaml
    - ./service.yaml
    images:
    - name: lordofthejars/pacman-kikd
      newTag: 1.0.1

Finally, use kubectl in dry-run or kustomize to validate that the output of the deployment file contains the new tag version.

In a terminal window, run the following command:

    kustomize build

The output of the preceding command is:

    ...
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
      namespace: pacman
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: pacman-kikd
      template:
        metadata:
          labels:
            app.kubernetes.io/name: pacman-kikd
        spec:
          containers:
          - image: lordofthejars/pacman-kikd:1.0.1
            imagePullPolicy: Always
            name: pacman-kikd
            ports:
            - containerPort: 8080
              name: http
              protocol: TCP

Note

Kustomize is not intrusive, which means that the original deployment.yaml file still contains the original tag (1.0.0).

Discussion

One way to update the newTag field is by editing the kustomization.yaml file, but you can also use the kustomize tool for this purpose.

Run the following command in the same directory as the kustomization.yaml file:

    kustomize edit set image lordofthejars/pacman-kikd:1.0.2

Check the content of the kustomization.yaml file to see that the newTag field has been updated:

    ...
    images:
    - name: lordofthejars/pacman-kikd
      newTag: 1.0.2

4.3 Updating Any Kubernetes Field in Kustomize

Solution

Use the patches section to specify a change using the JSON Patch specification.

In the previous recipe, you saw how to update the container image tag, but sometimes you might need to change other parameters, like the number of replicas, or add annotations, labels, limits, etc.

To cover these scenarios, Kustomize supports the use of JSON Patch to modify any Kubernetes resource defined as a Kustomize resource. To use it, you need to specify the JSON Patch expression to apply and which resource to apply the patch to.

For example, we can modify the number of replicas in the following partial deployment file from one to three:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: pacman-kikd
      namespace: pacman
      labels:
        app.kubernetes.io/name: pacman-kikd
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: pacman-kikd
      template:
        metadata:
          labels:
            app.kubernetes.io/name: pacman-kikd
        spec:
          containers:
          ...

First, let’s update the kustomization.yaml file to modify the number of replicas defined in the deployment file:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ./deployment.yaml
    patches:
    - target:
        version: v1
        group: apps
        kind: Deployment
        name: pacman-kikd
        namespace: pacman
      patch: |-
        - op: replace
          path: /spec/replicas
          value: 3

The patches section declares the patch resource.

The target section sets which Kubernetes object needs to be changed. These values match the deployment file created previously.

The patch field holds the patch expression.

The op: replace entry requests the modification of a value.

The path entry is the path to the field to modify.

The value entry is the new value.

Finally, use kubectl in dry-run or kustomize to validate that the output of the deployment file contains the new number of replicas.

In a terminal window, run the following command:

    kustomize build

The output of the preceding command is:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
      namespace: pacman
    spec:
      replicas: 3
      selector:
        matchLabels:
          app.kubernetes.io/name: pacman-kikd
    ...

Tip

The replicas value can also be updated using the replicas field in the kustomization.yaml file.

The equivalent Kustomize file using the replicas field is shown in the following snippet:
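A minimal sketch of such a file, assuming the same deployment.yaml resource and the Deployment name pacman-kikd used in the patch example:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- ./deployment.yaml
replicas:
# name must match the metadata.name of the resource to scale
- name: pacman-kikd
  count: 3
```

The replicas field trades the generality of a JSON Patch for brevity: it only changes the replica count of the named resource.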

Kustomize lets you add (or delete) values, in addition to modifying a value. Let’s see how to add a new label:

    ...
    patches:
    - target:
        version: v1
        group: apps
        kind: Deployment
        name: pacman-kikd
        namespace: pacman
      patch: |-
        - op: replace
          path: /spec/replicas
          value: 3
        - op: add
          path: /metadata/labels/testkey
          value: testvalue

The result of applying the file is:
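Assuming the deployment file shown earlier in this recipe, the relevant part of the generated Deployment should look roughly like the following sketch (unchanged fields are elided in a comment):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: pacman-kikd
    testkey: testvalue   # added by the "add" operation
  name: pacman-kikd
  namespace: pacman
spec:
  replicas: 3            # changed by the "replace" operation
  # ... selector and template are unchanged
```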

Discussion

Instead of embedding a JSON Patch expression, you can create a YAML file with a Patch expression and refer to it using the path field instead of patch.

Create an external patch file named external_patch.yaml containing the JSON Patch expression:

    - op: replace
      path: /spec/replicas
      value: 3
    - op: add
      path: /metadata/labels/testkey
      value: testvalue

And change the patch field to path pointing to the patch file:

    ...
    patches:
    - target:
        version: v1
        group: apps
        kind: Deployment
        name: pacman-kikd
        namespace: pacman
      path: external_patch.yaml

In addition to the JSON Patch expression, Kustomize also supports Strategic Merge Patch to modify Kubernetes resources. In summary, a Strategic Merge Patch (or SMP) is an incomplete YAML file that is merged against a complete YAML file.

Only a minimal deployment file with container name information is required to update a container image:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ./deployment.yaml
    patches:
    - target:
        labelSelector: "app.kubernetes.io/name=pacman-kikd"
      patch: |-
        apiVersion: apps/v1
        kind: Deployment
        metadata:
          name: pacman-kikd
        spec:
          template:
            spec:
              containers:
              - name: pacman-kikd
                image: lordofthejars/pacman-kikd:1.2.0

The target is selected using a label (labelSelector).

The patch field is smart enough to detect whether it holds an SMP or a JSON Patch.

The patch body is a minimal deployment file.

It sets only the field to change (image); the rest is left as is.

The generated output is the original deployment.yaml file but with the new container image:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
      namespace: pacman
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: pacman-kikd
      template:
        metadata:
          labels:
            app.kubernetes.io/name: pacman-kikd
        spec:
          containers:
          - image: lordofthejars/pacman-kikd:1.2.0
            imagePullPolicy: Always
    ...

Tip

path is supported as well.
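As a sketch of that variant, assume the minimal Deployment used as the SMP body is saved in a file next to kustomization.yaml. The file name smp_patch.yaml is hypothetical:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- ./deployment.yaml
patches:
- target:
    labelSelector: "app.kubernetes.io/name=pacman-kikd"
  # smp_patch.yaml is a hypothetical file holding the minimal Deployment
  # (apiVersion, kind, metadata.name, and the container name and image)
  path: smp_patch.yaml
```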

4.4 Deploying to Multiple Environments

Solution

Use the namespace field to set the target namespace.

In some circumstances, it’s good to have the application deployed in different namespaces; for example, one namespace can be used as a staging environment, and another one as the production namespace. In both cases, the base Kubernetes files are the same, with minimal changes like the namespace deployed, some configuration parameters, or container version, to mention a few. Figure 4-2 shows an example.

Figure 4-2. Kustomize layout

Kustomize lets you define multiple sets of changes, each with a different namespace, as overlays on a common base using the namespace field.

For this example, all base Kubernetes resources are put in the base directory and a new directory is created for customizations of each environment:

    .
    ├── base
    │   ├── deployment.yaml
    │   └── kustomization.yaml
    ├── production
    │   └── kustomization.yaml
    └── staging
        └── kustomization.yaml

The base kustomization file contains a reference to its resources:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ./deployment.yaml

There is a kustomization file with some parameters set for each environment directory. These reference the base directory, the namespace to inject into Kubernetes resources, and finally, the image to deploy, which in production is 1.1.0 but in staging is 1.2.0-beta.

For the staging environment, kustomization.yaml content is shown in the following listing:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ../base
    namespace: staging
    images:
    - name: lordofthejars/pacman-kikd
      newTag: 1.2.0-beta

The resources entry references the base directory.

The namespace field sets the namespace to staging.

The images section sets the container tag for the staging environment.

The kustomization file for production is similar to the staging one, but changes the namespace and the tag:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ../base
    namespace: prod
    images:
    - name: lordofthejars/pacman-kikd
      newTag: 1.1.0

Running kustomize produces different output depending on the directory where it is run; for example, running kustomize build in the staging directory produces:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
      namespace: staging
    spec:
      replicas: 1
    ...
      template:
        metadata:
          labels:
            app.kubernetes.io/name: pacman-kikd
        spec:
          containers:
          - image: lordofthejars/pacman-kikd:1.2.0-beta
    ...

But if you run it in the production directory, the output is adapted to the production configuration:
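As a sketch, assuming the same base deployment file, the production build should differ from the staging one only in the namespace and the image tag (elided fields are marked with comments):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: pacman-kikd
  name: pacman-kikd
  namespace: prod          # from the namespace field of the production overlay
spec:
  replicas: 1
  # ... selector elided
  template:
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
    spec:
      containers:
      - image: lordofthejars/pacman-kikd:1.1.0   # from the images section
        # ... remaining container fields elided
```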

Discussion

Kustomize can prepend or append a value to the names of all resources and references. This is useful when a different name in the resource is required depending on the environment, or to set the version deployed in the name:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ../base
    namespace: staging
    namePrefix: staging-
    nameSuffix: -v1-2-0
    images:
    - name: lordofthejars/pacman-kikd
      newTag: 1.2.0-beta

And the resulting output is as follows:
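A sketch of the expected result, assuming the base Deployment is named pacman-kikd as in the earlier recipes. The prefix and suffix wrap the resource name, while the labels stay untouched:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: pacman-kikd
  name: staging-pacman-kikd-v1-2-0   # namePrefix + original name + nameSuffix
  namespace: staging
spec:
  # ... remaining fields as in the staging output shown earlier
  replicas: 1
```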

4.5 Generating ConfigMaps in Kustomize

Solution

Use the configMapGenerator field to generate a Kubernetes ConfigMap resource on the fly.

Kustomize provides two ways of adding a ConfigMap as a Kustomize resource: either by declaring a ConfigMap as any other resource or declaring a ConfigMap from a ConfigMapGenerator.

While using ConfigMap as a resource offers no other advantage than populating Kubernetes resources as any other resource, ConfigMapGenerator automatically appends a hash to the ConfigMap metadata name and also modifies the deployment file with the new hash.

This minimal change has a deep impact on the application’s lifecycle, as we’ll see soon in the example.

Let’s consider an application running in Kubernetes and configured using a ConfigMap—for example, a database timeout connection parameter.

We decided to increase this number at some point, so the ConfigMap file is changed to this new value, and we deploy the application again.

Since the ConfigMap is the only changed file, no rolling update of the application is done.

A manual rolling update of the application needs to be triggered to propagate the change to the application.

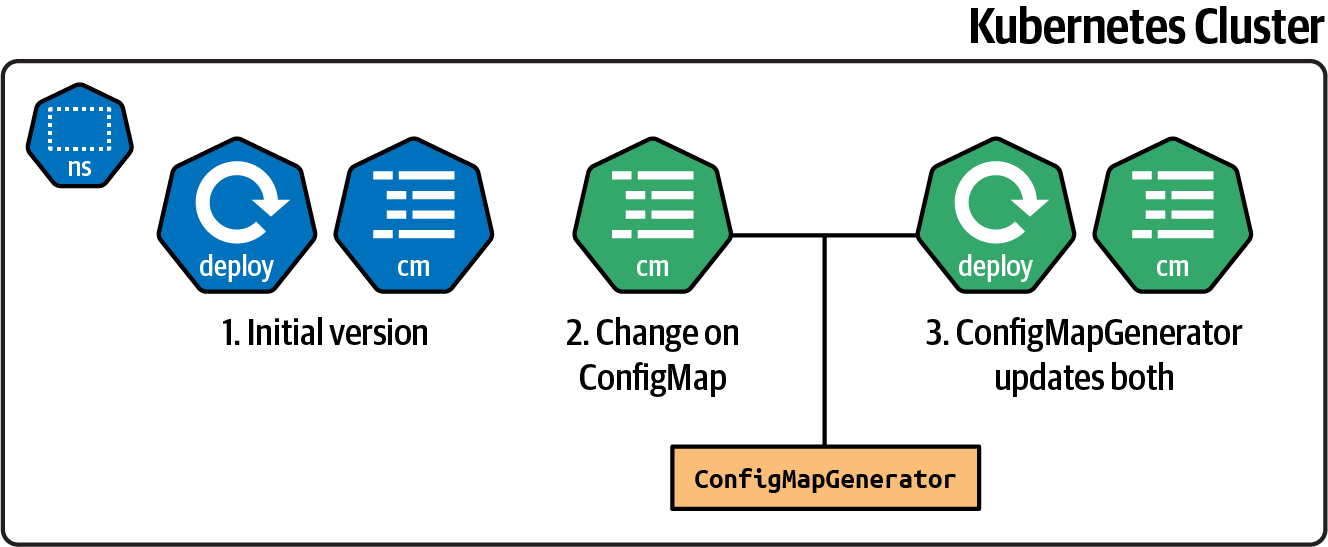

Figure 4-3 shows what is changed when a ConfigMap object is updated.

Figure 4-3. Change of a ConfigMap

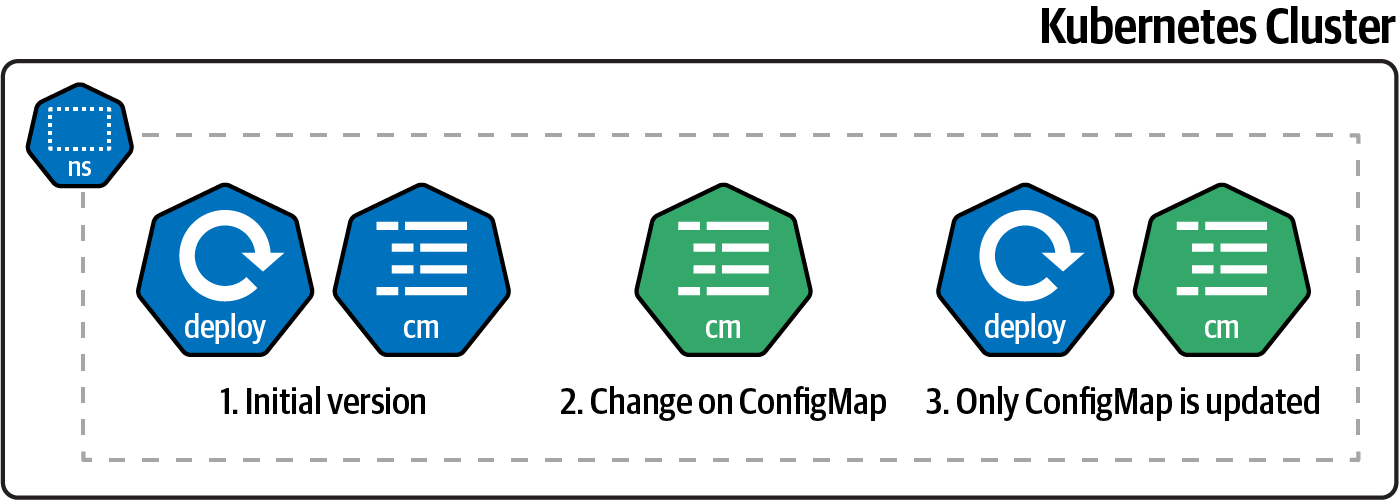

But, if ConfigMapGenerator manages the ConfigMap, any change on the configuration file also changes the deployment Kubernetes resource.

Since the deployment file has changed too, an automatic rolling update is triggered when the resources are applied, as shown in Figure 4-4.

Moreover, when using ConfigMapGenerator, multiple configuration datafiles can be combined into a single ConfigMap, making a perfect use case when every environment has different configuration files.

Figure 4-4. Change of a ConfigMap using ConfigMapGenerator

Let’s start with a simple example, adding the ConfigMapGenerator section in the kustomization.yaml file.

The deployment file is similar to the one used in previous sections of this chapter but includes the volumes section:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: pacman-kikd
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: pacman-kikd
      template:
        metadata:
          labels:
            app.kubernetes.io/name: pacman-kikd
        spec:
          containers:
          - image: lordofthejars/pacman-kikd:1.0.0
            imagePullPolicy: Always
            name: pacman-kikd
            volumeMounts:
            - name: config
              mountPath: /config
          volumes:
          - name: config
            configMap:
              name: pacman-configmap

The configuration properties are embedded within the kustomization.yaml file.

Notice that the ConfigMap object is created on the fly when the kustomization file is built:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ./deployment.yaml
    configMapGenerator:
    - name: pacman-configmap
      literals:
      - db-timeout=2000
      - db-username=Ada

The name field is the name of the ConfigMap set in the deployment file.

The literals section embeds configuration values in the file.

Each entry sets a key/value pair for the properties.

Finally, use kubectl in dry-run or kustomize to validate that the output contains the generated ConfigMap.

In a terminal window, run the following command:

    kustomize build

The output of the preceding command is a new ConfigMap with the configuration values set in kustomization.yaml.

Moreover, the name of the ConfigMap is updated by appending a hash in both the generated ConfigMap and deployment:

    apiVersion: v1
    data:
      db-timeout: "2000"
      db-username: Ada
    kind: ConfigMap
    metadata:
      name: pacman-configmap-96kb69b6t4
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: pacman-kikd
      template:
        metadata:
          labels:
            app.kubernetes.io/name: pacman-kikd
        spec:
          containers:
          - image: lordofthejars/pacman-kikd:1.0.0
            imagePullPolicy: Always
            name: pacman-kikd
            volumeMounts:
            - mountPath: /config
              name: config
          volumes:
          - configMap:
              name: pacman-configmap-96kb69b6t4
            name: config

The data section holds the ConfigMap with the properties.

The metadata name of the ConfigMap carries the name with the hash.

In the Deployment, the configMap name field is updated to the one with the hash, which triggers a rolling update.

Since the hash is calculated for any change in the configuration properties, a change on them provokes a change on the output triggering a rolling update of the application.

Open the kustomization.yaml file and update the db-timeout literal from 2000 to 1000 and run kustomize build again.

Notice the change in the ConfigMap name using a new hashed value:
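A sketch of the resulting ConfigMap. The suffix is written as a placeholder because the actual hash is computed by Kustomize from the ConfigMap content; the same new name also appears in the volumes section of the Deployment:

```yaml
apiVersion: v1
data:
  db-timeout: "1000"
  db-username: Ada
kind: ConfigMap
metadata:
  # <new-hash> is a placeholder; it differs from the previous 96kb69b6t4 suffix
  name: pacman-configmap-<new-hash>
```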

Discussion

ConfigMapGenerator also supports merging configuration properties from different sources.

Create a new kustomization.yaml file in the dev_literals directory, setting the previous directory (literals) as its base and overriding the db-username value:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ../literals
    configMapGenerator:
    - name: pacman-configmap
      behavior: merge
      literals:
      - db-username=Alexandra

Running the kustomize build command produces a ConfigMap containing a merge of both configuration properties:

    apiVersion: v1
    data:
      db-timeout: "1000"
      db-username: Alexandra
    kind: ConfigMap
    metadata:
      name: pacman-configmap-ttfdfdk5t8
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
    ...

In addition to setting configuration properties as literals, Kustomize supports defining them as .properties files.

Create a connection.properties file with two properties inside:

    db-url=prod:4321/db
    db-username=ada

The kustomization.yaml file uses the files field instead of literals:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
    - ./deployment.yaml
    configMapGenerator:
    - name: pacman-configmap
      files:
      - ./connection.properties

Running the kustomize build command produces a ConfigMap containing the name of the file as a key, and the value as the content of the file:

    apiVersion: v1
    data:
      connection.properties: |-
        db-url=prod:4321/db
        db-username=ada
    kind: ConfigMap
    metadata:
      name: pacman-configmap-g9dm2gtt77
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: pacman-kikd
      name: pacman-kikd
    ...

See Also

Kustomize offers a similar way to deal with Kubernetes Secrets. But as we’ll see in Chapter 8, the best way to deal with Kubernetes Secrets is using Sealed Secrets.
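For reference, a minimal sketch of that similar mechanism using the secretGenerator field. The Secret name and the literal value are hypothetical placeholders, and embedding real credentials in a kustomization.yaml file this way is not advisable:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- ./deployment.yaml
secretGenerator:
# pacman-secret is a hypothetical name, not taken from this chapter
- name: pacman-secret
  literals:
  # placeholder value for illustration only
  - db-password=changeme
```

As with configMapGenerator, the generated Secret name receives a content hash suffix.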

4.6 Final Thoughts

Kustomize is a simple, template-less tool that lets you define plain YAML files and override values using either a merge strategy or JSON Patch expressions. The project structure is flexible: you define the directory layout you feel most comfortable with, and the only requirement is the presence of a kustomization.yaml file.

But there is another well-known tool for managing Kubernetes resource files that, in our opinion, is a bit more complicated but more powerful, especially when the application or service to deploy has several dependencies such as databases, mail servers, or caches. This tool is Helm, and we’ll cover it in Chapter 5.