Basic Webcasting Concepts

Webcasting combines a number of different disciplines, such as audio and video engineering, web page authoring, and server administration. Like many other technical fields, it also has its own jargon. When you’re putting a webcast together, it’s helpful to understand how the different disciplines work together and what the crew is talking about.

This chapter provides an overview of the mechanics of streaming media, the technology on which webcasting is built, as well as a look at the tools, technologies, and jargon used during a webcast. Specifically, this chapter covers:

• Streaming Media: What It Is and How It Works

• The Importance of Bandwidth

• Webcasting System Components

• The Webcasting Process

• Webcasting Tools

Streaming Media: What It Is and How It Works

Webcasting is a specialized application of streaming media technology. Streaming media enables real-time delivery of media presentations across a network. Streaming media technology is similar to the technology used to deliver web pages, but with a key difference: web servers deliver files that must be downloaded, whereas streaming media servers stream them.

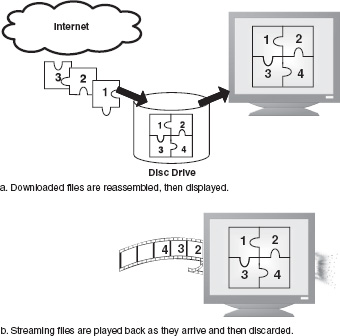

Downloaded files are displayed, or rendered, only after the entire file has been delivered and reassembled. Streamed files are played back as they are delivered (see Figure 2-1). For very small media files, the difference may not be noticeable. For larger files, however, streaming media offers a number of advantages:

Figure 2-1

Downloading is different than streaming.

• Real-time: Streaming media files begin playback after a few seconds, rather than after the entire file has been delivered.

• Control/Interactivity: Streaming media enables viewers to control and interact with the presentation.

• Security: Streaming media is played back and discarded instead of being stored on the user’s hard drive.

• Live broadcasting: Perhaps most importantly, streaming media enables live broadcasts, or webcasts.
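The real-time advantage can be made concrete with a little arithmetic. The Python sketch below compares how long a viewer waits before playback starts when a clip is downloaded versus streamed. It assumes a constant connection speed and a hypothetical 5-second player buffer; real players choose their own buffer sizes, and real connections fluctuate.

```python
def download_start_delay(file_size_bits: float, bandwidth_bps: float) -> float:
    """Seconds before a downloaded file can play: the whole file must arrive first."""
    return file_size_bits / bandwidth_bps


def streaming_start_delay(stream_bitrate_bps: float, bandwidth_bps: float,
                          buffer_seconds: float = 5.0) -> float:
    """Seconds needed to fill the player's buffer with `buffer_seconds` of media.

    The 5-second default buffer is a hypothetical value for illustration.
    """
    if bandwidth_bps < stream_bitrate_bps:
        raise ValueError("connection is too slow to play this stream in real time")
    return buffer_seconds * stream_bitrate_bps / bandwidth_bps


# A 10-minute clip encoded at 300 Kbps, viewed over a 500 Kbps broadband line.
clip_bits = 300_000 * 10 * 60
print(download_start_delay(clip_bits, 500_000))  # 360.0 seconds (6 minutes)
print(streaming_start_delay(300_000, 500_000))   # 3.0 seconds
```

The download delay grows with the length of the clip, while the streaming delay depends only on the buffer size, which is why streaming feels "instant" even for long presentations.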

Some short on-demand media files are delivered using a hybrid technology known as progressive download. Progressive download files are delivered by a web server, but playback begins before the entire file has been delivered.

Depending on the size of the file and the speed of the viewer’s connection, playback may begin anywhere from immediately to a few minutes after the file has been requested. While this is an improvement over pure download, progressive download is still limited in its applications. It is fine for short files such as movie trailers or advertisements, but for all other media delivery, streaming is a much better approach.
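A progressive download can start playing as soon as the data still to arrive is guaranteed to stay ahead of playback. Assuming a constant connection speed B and a file encoded at bit rate R that lasts D seconds, playback started at time t0 never stalls if the data received by the end, B(t0 + D), covers the whole file, R·D. That gives t0 = D(R − B)/B when R exceeds B, and zero otherwise. A minimal Python sketch, with hypothetical example numbers:

```python
def progressive_start_delay(duration_s: float, bitrate_bps: float,
                            bandwidth_bps: float) -> float:
    """Earliest start time (seconds) that lets a progressive download play without stalling.

    Assumes the connection delivers a constant `bandwidth_bps` and ignores
    protocol overhead and any minimum buffer the player may require.
    """
    if bandwidth_bps >= bitrate_bps:
        return 0.0  # data arrives at least as fast as it is played
    # Data received by the end, B * (t0 + D), must cover the whole file, R * D.
    return duration_s * (bitrate_bps - bandwidth_bps) / bandwidth_bps


# A 30-second ad encoded at 100 Kbps:
print(round(progressive_start_delay(30, 100_000, 35_000), 1))  # dial-up: 55.7 s
print(progressive_start_delay(30, 100_000, 500_000))           # broadband: 0.0 s
```

The wait scales with the duration of the file, which is why progressive download suits short trailers and ads but not long presentations.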

There is no such thing as a live download, progressive or otherwise. Webcasting requires streaming technology because it happens in real time. Webcasting also requires that you understand the concept of bandwidth.

The Importance of Bandwidth

Bandwidth refers to the data-carrying capacity of an Internet connection: the greater the capacity, the higher the bandwidth. Bandwidth is generally measured in bits per second (bps). The bandwidths of typical Internet connections are listed in Table 2-1.

Table 2-1

Typical Internet bandwidths.

| Connection Type | Bandwidth/Bit Rate |
|---|---|
| Dial-up (56K Modem) | 34-37 Kilobits per second (Kbps) |
| Broadband (Cable, xDSL) | 200-1000 Kbps |
| Frame Relay, Fractional T-1 | 128 Kbps-1.5 Megabits per second (Mbps) |
| T-1 | 1.5 Mbps |
| Ethernet | 10 Mbps |
| T-3 | 45 Mbps |

These measurements represent the maximum theoretical capacities. Actual capacities can be far less.
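Because actual capacities can fall well short of the figures in Table 2-1, one way to allow for this is to encode below the nominal connection speed. The Python sketch below assumes a hypothetical rule of using no more than 70 percent of the lower-bound bandwidth in each row; the right margin depends on your audience and network, so treat the number as a placeholder.

```python
# Lower-bound bandwidths taken from Table 2-1, in bits per second.
TABLE_2_1_BPS = {
    "Dial-up (56K modem)": 34_000,
    "Broadband (cable, xDSL)": 200_000,
    "T-1": 1_500_000,
}

# Hypothetical safety margin: use at most 70% of the nominal capacity.
HEADROOM_PERCENT = 70


def max_stream_bitrate(bandwidth_bps: int, headroom_percent: int = HEADROOM_PERCENT) -> int:
    """Highest encoding bit rate (bps) that stays within the given share of capacity."""
    # Integer arithmetic avoids floating-point rounding surprises.
    return bandwidth_bps * headroom_percent // 100


for connection, bps in TABLE_2_1_BPS.items():
    print(f"{connection}: encode at or below {max_stream_bitrate(bps) / 1000:.1f} Kbps")
```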

Bandwidth is important in a number of ways during a webcast:

• The bandwidth of individual audience members determines how the webcast should be encoded

• The bandwidth available on site determines how much data can be sent to the distribution servers

• The aggregate bandwidth used determines the bandwidth charge
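The first and third points come down to simple multiplication. The Python sketch below estimates the aggregate bandwidth the servers must deliver and the total data transferred, the two figures bandwidth charges are typically based on, and checks whether the on-site connection can carry one copy of each encoded stream to the distribution servers. The audience size, bit rates, and uplink speed are hypothetical, and since pricing models vary by provider, no rate is assumed.

```python
def aggregate_bandwidth_bps(viewers: int, stream_bitrate_bps: int) -> int:
    """Peak bandwidth the servers must deliver if every viewer watches at once."""
    return viewers * stream_bitrate_bps


def total_gigabytes(viewers: int, stream_bitrate_bps: int, duration_s: int) -> float:
    """Total data delivered in gigabytes (10**9 bytes), assuming everyone watches it all."""
    return viewers * stream_bitrate_bps * duration_s / 8 / 1e9


def fits_uplink(stream_bitrates_bps: list[int], uplink_bps: int) -> bool:
    """True if one copy of every encoded stream fits in the on-site connection."""
    return sum(stream_bitrates_bps) <= uplink_bps


# Hypothetical event: 1,000 viewers of a 300 Kbps stream for one hour.
print(aggregate_bandwidth_bps(1_000, 300_000) / 1e6, "Mbps")  # 300.0 Mbps
print(total_gigabytes(1_000, 300_000, 3_600), "GB")            # 135.0 GB
# Three hypothetical streams sent from the venue over a T-1.
print(fits_uplink([20_000, 100_000, 300_000], 1_500_000))      # True
```

Note that the on-site link carries only one copy of each stream; it is the servers that multiply the traffic by the size of the audience.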

Each of these affects the webcast and must be taken into consideration. Bandwidth is a recurring issue during a webcast, and it is discussed in more detail throughout the book as it pertains to each particular topic.

Webcasting System Components

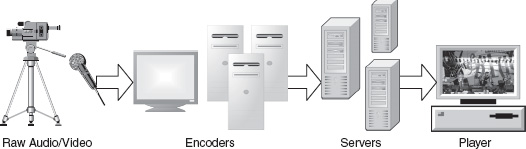

Webcasts vary in size and complexity, but at their core they are very simple. No matter how large or small, all webcasts are assembled using three basic building blocks: encoders, servers, and players (see Figure 2-2).

Figure 2-2

The basic flow of a webcast.

Encoders, Servers, and Players

Working from left to right in Figure 2-2, the first component of a webcast is the encoder. The encoder converts the raw audio and video signals into a format that can be streamed across a network. Encoders are necessary because the data rates of raw audio and video are far too high for network delivery. Encoders are covered in more detail in Chapter 9.
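To see why encoders are needed, compare the raw data rates of uncompressed audio and video with the connection speeds in Table 2-1. The Python sketch below assumes 640×480 video at 24 bits per pixel and 30 frames per second, plus CD-quality stereo audio (44.1 kHz, 16-bit); these are illustrative figures, not requirements.

```python
def raw_video_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed video data rate in bits per second."""
    return width * height * bits_per_pixel * fps


def raw_audio_bps(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Uncompressed (PCM) audio data rate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


video = raw_video_bps(640, 480, 24, 30)  # 221,184,000 bps, about 221 Mbps
audio = raw_audio_bps(44_100, 16, 2)     # 1,411,200 bps, about 1.4 Mbps
target = 300_000                         # a broadband stream, in bps

print(f"raw video: {video / 1e6:.0f} Mbps, raw audio: {audio / 1e6:.1f} Mbps")
print(f"compression needed for a 300 Kbps stream: about {(video + audio) / target:.0f}:1")
```

Even a T-3 line, at 45 Mbps, could not carry this raw video signal, and a 300 Kbps broadband stream needs roughly 740:1 compression.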

The next component is the server. Streaming media servers receive the incoming encoded streams from encoders and then distribute them to the audience. They may also distribute them to other servers for load balancing and redundancy. Load balancing is a technique in which several servers share the work of distributing a webcast, which improves performance and reliability. Servers are covered in more detail in Chapter 11, Webcast Distribution.
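One simple way a load-balancing scheme can assign viewers is "least connections": send each new viewer to the healthy server with the fewest current viewers. The Python sketch below illustrates only that idea; the server names are hypothetical, and real streaming platforms and distribution networks use their own, more sophisticated methods.

```python
from dataclasses import dataclass


@dataclass
class StreamServer:
    name: str              # hypothetical server name
    healthy: bool = True   # False if the server has failed a health check
    viewers: int = 0       # current number of connected players


def assign_viewer(servers: list[StreamServer]) -> StreamServer:
    """Send a new viewer to the healthy server with the fewest viewers."""
    candidates = [s for s in servers if s.healthy]
    if not candidates:
        raise RuntimeError("no healthy streaming servers available")
    chosen = min(candidates, key=lambda s: s.viewers)
    chosen.viewers += 1
    return chosen


servers = [StreamServer("edge-1"), StreamServer("edge-2"), StreamServer("edge-3", healthy=False)]
for _ in range(10):
    assign_viewer(servers)
print([(s.name, s.viewers) for s in servers])  # edge-3 is skipped because it is down
```

Skipping unhealthy servers is where load balancing and redundancy meet: if one server fails, new viewers are simply sent to the others.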

The final component in a webcast is the player. The player communicates with the streaming media server, receives the incoming encoded stream, and renders it. It also provides the viewer with control over the playback of the stream. Different streaming media players may offer different features, but they all provide the same basic functionality.

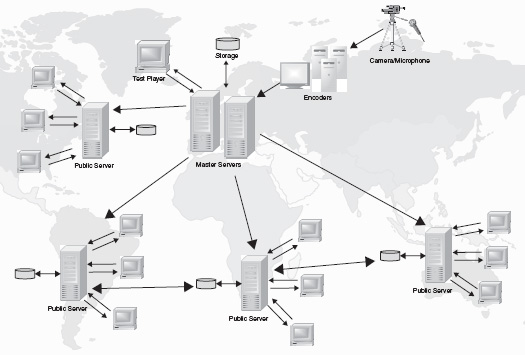

Large-scale webcast architectures are more complex, but they are composed of the same basic building blocks. Because redundancy is key to any webcast, multiple encoders and multiple servers are used, and of course the audience may include hundreds or thousands of players (see Figure 2-3).

Figure 2-3

Large-scale, redundant webcast infrastructures are built from the same three components.

Protocols, File Formats, and Codecs

It’s not enough to have an encoder, a server, and a player. To be useful, the three components must be able to communicate and interact with each other. To this end, standardized protocols, file formats, and codecs have been developed.

Protocols determine how components communicate. For example, when you type a website address into your browser, it begins with “http://”. This stands for Hypertext Transfer Protocol (HTTP), the protocol that web servers and browsers use to communicate. Streaming servers use a variety of protocols, the most common being RTSP (Real Time Streaming Protocol).
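RTSP is a text-based protocol deliberately modeled on HTTP, so its requests look much like web requests. The Python sketch below builds an RTSP DESCRIBE request, which asks a server to describe a stream (usually as an SDP session description), following the request format in RFC 2326. The server URL is hypothetical, and the code only builds the request; a real player would send it over TCP, by default to port 554, and then go on to set up and play the stream.

```python
def rtsp_describe(url: str, cseq: int) -> bytes:
    """Build an RTSP/1.0 DESCRIBE request (RFC 2326) for the given stream URL."""
    lines = [
        f"DESCRIBE {url} RTSP/1.0",   # request line: method, URL, protocol version
        f"CSeq: {cseq}",              # sequence number echoed back in the server's reply
        "Accept: application/sdp",    # ask for an SDP description of the stream
        "",                           # blank line ends the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


request = rtsp_describe("rtsp://media.example.com/live/keynote", cseq=1)
print(request.decode("ascii"))
```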

Once information has been exchanged via a standardized protocol, the applications need to know how the data is arranged. This is determined by the file format. A media file generally consists of a header, which contains information about the file, followed by the audio and video data. The file may also include additional tracks containing information about the layout of the presentation, special effects, or closed caption text.

Most streaming media platforms use proprietary file formats, which is why files created for one platform may not play back in another platform’s player. The notable exceptions are MPEG files, which use a standardized file format.
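MPEG-4 files, like the QuickTime files whose format they derive from, are organized as a sequence of "boxes" (also called atoms). Each box starts with a 4-byte big-endian size and a 4-character type code; a movie box ("moov") typically holds the information describing the presentation, and a media data box ("mdat") holds the audio and video samples. The Python sketch below walks the top-level boxes of such a file. It is a simplified illustration that does not descend into nested boxes, and the sample bytes are synthetic.

```python
import struct


def top_level_boxes(data: bytes):
    """Yield (type, size) for each top-level box in MP4/QuickTime-style data."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack(">I4s", data[offset:offset + 8])
        header_len = 8
        if size == 1:    # a 64-bit "largesize" follows the type code
            (size,) = struct.unpack(">Q", data[offset + 8:offset + 16])
            header_len = 16
        elif size == 0:  # the box extends to the end of the data
            size = len(data) - offset
        if size < header_len:
            raise ValueError(f"corrupt box header at offset {offset}")
        yield box_type.decode("latin-1"), size
        offset += size


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Build a box with a 32-bit size (used here only to create test data)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


sample = (make_box(b"ftyp", b"isom" + bytes(4) + b"isom")
          + make_box(b"moov", bytes(20))    # stand-in for presentation metadata
          + make_box(b"mdat", bytes(100)))  # stand-in for audio/video samples
print(list(top_level_boxes(sample)))  # [('ftyp', 20), ('moov', 28), ('mdat', 108)]
```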

Inside the Industry

MPEG-4: File Format of the Future?

The MPEG-4 standard defines a broad framework for creating interactive media presentations. A small subsection of the MPEG-4 standard pertains to streaming media, including a file format standard based on the QuickTime file format. Though the MPEG-4 audio and video codecs are the focus of much development, the file format is still not widely used. RealNetworks, Microsoft, and Macromedia still use their own proprietary file formats; unsurprisingly, only QuickTime supports the MPEG-4 file format. Some of this was previously due to the licensing issues surrounding MPEG-4, but for the most part these have been resolved. (For the most up-to-date licensing details visit www.m4if.org.) At this point the problem is companies’ reluctance to do anything that might endanger their market share. Because most streaming formats are already moving to the RTSP protocol, a standardized file format would arguably be of most use to the streaming media community, since the lack of one is a major hurdle to interoperability. Standardized codecs would also be useful, but until recently the standardized MPEG-4 codecs were simply not in the same league as the video codecs available from Microsoft and RealNetworks. This is rapidly changing. MPEG-4 is seeing rapid adoption in the cell phone industry, where standards are absolutely necessary. Whether MPEG-4 ever becomes the standard for streaming media on desktops remains to be seen.

Finally, once the data has been exchanged, the streaming media player must decode the information so that it can be rendered. This is done using a codec. A codec (a contraction of coder-decoder) is a set of rules that dictates how data is encoded and decoded. Codecs are necessary because raw audio and video files are far too large for network delivery.

Codecs are used by many applications. Most images on the Internet are encoded using either the JPEG or GIF codecs. Archiving applications such as WinZip and StuffIt use codecs. Streaming media platforms use audio and video codecs, plus additional codecs for any other data types being streamed.
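The coder-decoder idea can be shown with Python's built-in zlib module, which implements DEFLATE, the lossless compression method used in ZIP archives. This is only an analogy: the audio and video codecs used for streaming are lossy, meaning they discard information the eye and ear are unlikely to miss, so their decoded output is close to, but not identical to, the original.

```python
import zlib


def encode(data: bytes) -> bytes:
    """Coder: compress the data for storage or transmission."""
    return zlib.compress(data, 9)  # 9 = maximum compression


def decode(encoded: bytes) -> bytes:
    """Decoder: restore the original data."""
    return zlib.decompress(encoded)


original = b"quiet room tone " * 1_000  # repetitive data compresses very well
packed = encode(original)
assert decode(packed) == original       # lossless: the round trip is exact
print(f"{len(original)} bytes -> {len(packed)} bytes")
```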

ALERT

Unfortunately, codecs are for the most part proprietary to each streaming media format. Files encoded in one format generally cannot be played in another format’s player. This is partially due to the file format (as mentioned previously), and also due to the codec.

Streaming media companies are extremely protective of their codecs, because each new codec release generally represents a substantial leap in audio and video quality, and gives their platform a competitive edge.

The past few years, however, have seen less dramatic improvements. While some codecs are certainly better than others, the quality of all streaming media codecs is pretty good at this point. The decision as to which platform to use can be made without having to worry too much about which looks or sounds better.

Now that you know a little bit about the components of a webcasting system, it’s time to find out how they all fit together.

The Webcasting Process

Creating streaming media is usually a four-step process: production, encoding, authoring, and distribution. When creating streaming media archives, these steps can be followed sequentially. During a webcast, however, everything happens at the same time, which means you need enough equipment and crew to do four things at once. Therefore, to ensure a webcast runs smoothly, a crucial additional step must be added: planning.
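The difference between producing an archive and producing a webcast is the difference between running steps one after another and running them as a pipeline. The Python sketch below models a live event with threads and queues: while production is still generating chunks of signal, the encoding and distribution stages are already processing earlier ones. The stage names and the string "processing" are placeholders, and authoring is omitted for brevity.

```python
import queue
import threading

END = object()  # sentinel marking the end of the event


def stage(process, inbox: queue.Queue, outbox: queue.Queue) -> None:
    """One step of the webcast: take chunks from inbox, process them, pass them on."""
    while True:
        chunk = inbox.get()
        if chunk is END:
            outbox.put(END)  # tell the next stage the event is over
            return
        outbox.put(process(chunk))


def run_live(signal_chunks):
    captured, encoded, delivered = queue.Queue(), queue.Queue(), queue.Queue()
    workers = [
        threading.Thread(target=stage, args=(lambda c: f"encoded({c})", captured, encoded)),
        threading.Thread(target=stage, args=(lambda c: f"served({c})", encoded, delivered)),
    ]
    for w in workers:
        w.start()  # encoding and distribution run while production continues
    for chunk in signal_chunks:  # production: feed chunks in as they are captured
        captured.put(chunk)
    captured.put(END)
    results = []
    while (item := delivered.get()) is not END:
        results.append(item)
    for w in workers:
        w.join()
    return results


print(run_live(["chunk-1", "chunk-2", "chunk-3"]))
```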

Planning

Without proper planning, the chances of a successful webcast diminish drastically. Planning begins the moment a webcast is suggested, and continues up to the moment the webcast goes live.

Planning begins with the business issues discussed in Chapter 4. Webcasts are expensive to produce because of the increased equipment, crew, and bandwidth requirements. Therefore, before too much time and effort are invested, a good business case must be made for the proposed webcast.

Once a webcast has been justified financially, planning for each step of the webcasting process can begin. Each step has its own set of requirements as you’ll see in the subsequent sections. A detailed overview of the planning required for a webcast is covered in Chapters 4, 5, and 6.

Production

Production is the traditional side of a webcast, where cameras, microphones, and a host of other equipment are used to produce the audio and video signals. These may be combined with other elements such as PowerPoint slides, text, and animation to create the final presentation.

Production is particularly equipment-intensive; it also requires a lot of crew. In fact, production is usually the largest line item in a webcast budget, and it is the reason why webcasting can be an expensive proposition. Much of this depends on the scale of your webcast: webcasts of simple talking heads or audio-only events, for example, can be produced relatively cheaply. Webcast Production Best Practices are covered in Chapters 7 and 8.

Encoding

Encoding is where the raw audio and video feeds are converted into formats that can be delivered across a network. This is done in either software or hardware. The encoders must be connected to the Internet or to whatever network you’re webcasting on.

Encoding can be done either on site at the event or at the broadcast operations center (BOC), if you can send raw audio and video feeds back via satellite or a fiber connection. Smaller-scale webcasts are usually encoded on site, because this is significantly cheaper than a satellite link. Larger-scale webcasts may use both methods for redundancy. Webcast Encoding Best Practices are covered in Chapter 9.

Authoring

Authoring is how the audience is connected to the webcast. This can be as simple as a direct link sent via email, or a fancy presentation embedded in a webpage. It’s generally a good idea to keep the authoring as simple as possible during a webcast. Webcast Authoring Best Practices are covered in Chapter 10.

Distribution

The final step of the webcasting process is distribution, or delivery of the content. Small webcasts can easily be handled by a single streaming media server, but most webcasts use multiple servers for distribution. This provides redundancy in case a server has trouble during a webcast.

Streaming servers operate differently than web servers, but from an administrative standpoint they can be treated in roughly the same manner. As long as they’re up and running, the streaming server software takes care of the rest. Webcast Distribution is covered in Chapter 11.

Webcasting Tools

Each step of the webcasting process requires its own set of tools. As mentioned previously, the production phase is the most equipment-intensive.

Production Tools

The amount of production equipment you need is directly related to the scale of your broadcast. You’re going to need enough audio and video equipment to produce a high quality signal. Given the rapid advances in digital video and home recording technology, this need not be a crippling expense.

ALERT

The most important thing to remember is that you must have a backup for each crucial link in the signal chain. That means at a minimum you should have a spare camera, a spare microphone, and plenty of spare cables. If you’re bringing processing equipment, bring spares of those along as well.

If you’re producing a fairly large webcast, it’s probably a good idea to bring in a production partner. Not only will they have all the equipment that is needed to produce your event, but they’ll assume responsibility for their part of the production, which reduces the amount of responsibility on your shoulders. Outsourcing vs. Do-It-Yourself is covered in Chapter 4.

Encoding Tools

To encode your webcast, you’ll need enough machines to encode as many streams as you want to offer. There are encoding solutions that enable a single encoding machine to produce more than one live stream, but in general you’ll need one encoder per stream. It’s important to have spare encoders should any machines fail. At a minimum you’ll want one spare machine, more if you’re encoding multiple streams.

Author’s Tip

There are a number of webcasting specialists who can deliver sturdy encoding solutions in flight cases, typically referred to in the industry as road-encodes.

The encoding machines must be equipped to handle the webcast. For example, if it is a video webcast, each encoding machine must have a video capture card. Most modern multimedia computers are more than capable of encoding a live stream, but even so there’s no such thing as a computer that is too fast or has too much RAM. Get the best encoding machines you can afford.

Encoding is another part of the webcast that can be contracted out to a third party. If you’re not going to be webcasting on a regular basis, you might consider outsourcing the encoding requirements.

Authoring Tools

Authoring can be done in a simple text editor such as Notepad or with what-you-see-is-what-you-get (WYSIWYG) web page authoring tools. If you’re creating a fancy presentation, you may need specialized authoring tools. In general, however, fancy presentations aren’t a good idea during a webcast, particularly if you need to change the presentation in an emergency. If you can’t change your link quickly using a text editor, it’s probably too complicated.

Distribution Tools

Finally, to distribute your webcast you’ll need a robust streaming infrastructure. This consists of one or more servers running streaming media server applications. Streaming servers can run on dedicated machines or on the same machine as other server applications.

After the webcast is finished, you’ll want to gauge the success of your webcast by analyzing the streaming server log files. These provide you with a wealth of information including how many people viewed the webcast, how long they watched, and if there were any errors during the webcast.
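Each streaming platform writes its own log format with many fields, so the Python sketch below assumes a deliberately simplified, hypothetical format: one line per session giving the client address, the seconds watched, and a status code. It produces the kind of summary described above: unique viewers, average viewing time, and an error count.

```python
SAMPLE_LOG = """\
# client seconds_watched status  (hypothetical simplified format)
10.0.0.5 1820 200
10.0.0.9 45 200
10.0.0.5 600 200
10.0.0.12 0 404
"""


def summarize(log_text: str) -> dict:
    viewers = set()
    durations = []
    errors = 0
    for line in log_text.splitlines():
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and comments
        client, seconds, status = line.split()
        if status.startswith("2"):  # 2xx status codes: successful sessions
            viewers.add(client)
            durations.append(int(seconds))
        else:
            errors += 1
    return {
        "unique_viewers": len(viewers),
        "avg_session_minutes": sum(durations) / len(durations) / 60 if durations else 0.0,
        "errors": errors,
    }


print(summarize(SAMPLE_LOG))
```

Counting unique client addresses only approximates the number of viewers, since several people can share one address behind a proxy or firewall.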

Platform Considerations

One of the decisions you must make when planning a webcast is which technology you’re going to use for distribution. As of 2005, the majority of webcasts are done on the Windows Media and Real platforms, with QuickTime and Flash accounting for a smaller percentage.

Each platform has advantages and disadvantages that you’ll have to consider. Amongst the questions to ask are:

• What operating system is the audience on, and what players are they likely to have already installed?

• Are there specialized data types or features of one particular platform that you want to use?

• Are licensing fees an issue?

Most of the streaming media platforms provide enough free tools for you to try out their solutions, and most webcast distribution partners offer a variety of platform solutions. In general, the best approach is to offer a couple of platform options to reach the widest possible audience, if needed and if budgets permit.

Conclusion

Webcasting is a specialized application of streaming media technology. Webcasting systems are composed of three basic elements: encoders, servers, and players. Webcasting is highly dependent on bandwidth, which determines how much data can be sent in real time from the encoders to the servers, and from the servers to the audience. Finally, planning is essential to a successful webcast.

The next chapter covers the basics of digital audio and video. Understanding digital audio and video will help you understand the limitations of webcasting technology, and thereby help you produce higher quality webcasts.