The non-linear version of PCA in the Shogun library is implemented in the CKernelPCA class. It is used in the same way as the linear CPCA class. The main difference is that it has to be configured with an additional method, set_kernel(), which takes a pointer to a specific kernel object. In the following example, we initialize an instance of the CGaussianKernel class as the kernel object:

    void KernelPCAReduction(Some<CDenseFeatures<DataType>> features,
                            const int target_dim) {
      // Gaussian kernel computed over the data set itself; 0.5 is the kernel width
      auto gauss_kernel = some<CGaussianKernel>(features, features, 0.5);
      auto pca = some<CKernelPCA>();
      pca->set_kernel(gauss_kernel.get());
      pca->set_target_dim(target_dim);
      pca->fit(features);

      auto feature_matrix = features->get_feature_matrix();
      for (index_t i = 0; i < features->get_num_vectors(); ++i) {
        auto vector = feature_matrix.get_column(i);
        // Project a single sample into the reduced target_dim-dimensional space
        auto new_vector = pca->apply_to_feature_vector(vector);
      }
    }

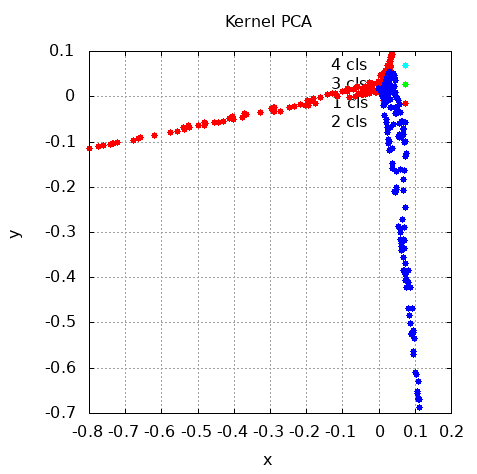

The following graph shows the result of applying Shogun kernel PCA implementation to our data:

We can see that this type of kernel separates some parts of the data, but compresses other parts too much.
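To give a feel for what the kernel object contributes, here is a minimal sketch in plain standard C++ of the first two steps that kernel PCA conceptually performs: building the Gaussian kernel matrix and centering it in feature space. The helper names `GaussianKernel`, `KernelMatrix`, and `CenterKernelMatrix` are hypothetical and are not part of the Shogun API. The kernel form exp(-||a - b||^2 / width) is an assumption; the exact scaling of the width parameter may differ between library versions. The eigendecomposition and projection steps are omitted.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Gaussian kernel value for two points of equal dimension.
// Assumed form: exp(-||a - b||^2 / width).
double GaussianKernel(const std::vector<double>& a,
                      const std::vector<double>& b, double width) {
  double sq_dist = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i) {
    const double d = a[i] - b[i];
    sq_dist += d * d;
  }
  return std::exp(-sq_dist / width);
}

// Builds the n x n kernel matrix K with K[i][j] = k(x_i, x_j).
Matrix KernelMatrix(const Matrix& points, double width) {
  const std::size_t n = points.size();
  Matrix k(n, std::vector<double>(n, 0.0));
  for (std::size_t i = 0; i < n; ++i) {
    for (std::size_t j = 0; j < n; ++j) {
      k[i][j] = GaussianKernel(points[i], points[j], width);
    }
  }
  return k;
}

// Centers the kernel matrix: each entry has its row mean and column mean
// subtracted and the overall mean added back, which corresponds to
// centering the data in the implicit feature space.
Matrix CenterKernelMatrix(const Matrix& k) {
  const std::size_t n = k.size();
  std::vector<double> row_mean(n, 0.0), col_mean(n, 0.0);
  double total = 0.0;
  for (std::size_t i = 0; i < n; ++i) {
    for (std::size_t j = 0; j < n; ++j) {
      row_mean[i] += k[i][j];
      col_mean[j] += k[i][j];
      total += k[i][j];
    }
  }
  const double dn = static_cast<double>(n);
  for (std::size_t i = 0; i < n; ++i) {
    row_mean[i] /= dn;
    col_mean[i] /= dn;
  }
  const double total_mean = total / (dn * dn);

  Matrix centered(n, std::vector<double>(n, 0.0));
  for (std::size_t i = 0; i < n; ++i) {
    for (std::size_t j = 0; j < n; ++j) {
      centered[i][j] = k[i][j] - row_mean[i] - col_mean[j] + total_mean;
    }
  }
  return centered;
}
```

With a small width, the kernel value drops off quickly with distance, so only close neighbors influence each other. This is one plausible reason why a fixed width such as 0.5 can separate some regions of the data while squeezing others together; trying several widths is a reasonable next step.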