It would be nice to describe the interests of the user with more extensive features—not in the format of they love movies X, Y, and Z, but in the format of they love romantic comedies. Besides the fact that it increases the generalizability of the model, it also solves the problem of having a large data dimension—after all, the interests are described not by the items vector, but by a significantly smaller preference vector.

Such approaches are also called spectral decomposition or high-frequency filtering (since we remove the noise and leave the useful signal). There are many different types of matrix decomposition in algebra, and one of the most commonly used is called Singular Value Decomposition (SVD).

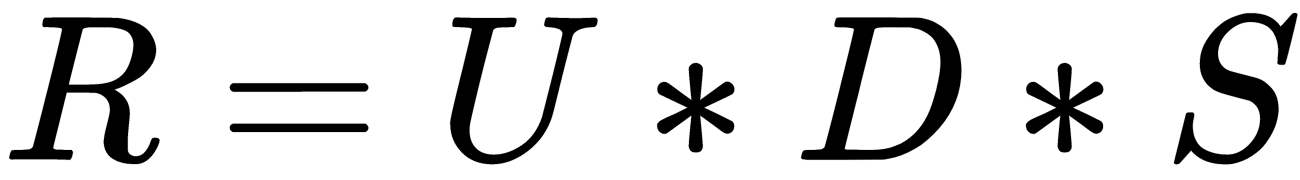

Initially, the SVD method was used to select pages that are similar in meaning but not in content. More recently, it has started being used in recommendations. The method is based on the decomposition of the original R rating matrix into a product of three matrices,  , where the sizes of the matrices are

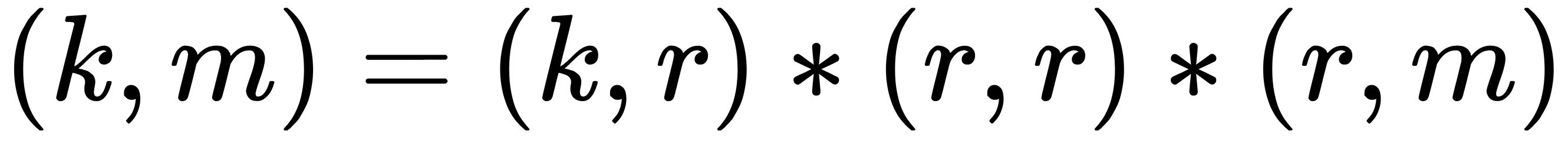

, where the sizes of the matrices are  and r is the rank of the decomposition, which is the parameter characterizing the degree of detail decomposition.

and r is the rank of the decomposition, which is the parameter characterizing the degree of detail decomposition.

Applying this decomposition to our matrix of preferences, we can get the following two matrices of factors (abbreviated descriptions):

- U: A compact description of user preferences.

- S: A compact description of the characteristics of the product.

It is important that with this approach, we do not know which particular characteristics correspond to the factors in the reduced descriptions; for us, they are encoded with some numbers. Therefore, SVD is an uninterpreted model. It is sufficient to multiply the matrix of factors to obtain an approximation of the matrix of preferences. By doing this, we get a rating for all customer-product pairs.

A typical family of such algorithms is called non-negative matrix factorization (NMF). As a rule, the calculation of such expansions is very computationally expensive. Therefore, in practice, they often resort to their approximate iterative variants. ALS is a popular iterative algorithm for decomposing a matrix of preferences into a product of two matrices: user factors (U) and product factors (I). It works on the principle of minimizing the root-mean-square error (RMSE) on the affixed ratings. Optimization takes place alternately—first by user factors, then by product factors. Also, to avoid retraining, the regularization coefficients are added to the RMSE.

If we supplement the matrix of preferences with a new dimension containing information about the user or product, then we can work not with the matrix of preferences, but with the tensor. Thus, we use more available information and possibly get a more accurate model.

In this section, we considered different approaches to solving recommender systems tasks. Now, we are going to discuss methods for the estimation of user preferences' similarity.