For the first sample, let's see how to implement a collaborative filtering recommender system based on matrix factorization with ALS and with a pure linear algebra library as a backend. In the following sample, we're using the Eigen library. The steps to implement a collaborative filtering recommender system are as follows:

- At first, we make base type definitions, as follows:

using DataType = float;

// using Eigen::ColMajor is Eigen restriction - todense method always returns

// matrices in ColMajor order

using Matrix =

Eigen::Matrix<DataType, Eigen::Dynamic, Eigen::Dynamic, Eigen::ColMajor>;

using SparseMatrix = Eigen::SparseMatrix<DataType, Eigen::ColMajor>;

using DiagonalMatrix =

Eigen::DiagonalMatrix<DataType, Eigen::Dynamic, Eigen::Dynamic>;

- These definitions allow us to write less source code for matrices' types and to quickly change floating-point precision. Then, we define and initialize the ratings (preferences) matrix, list of movie titles, and binary rating flags matrix, as follows:

SparseMatrix ratings_matrix; // user-item ratings

SparseMatrix p; // binary variables

std::vector<std::string> movie_titles;

We have a particular helper function, LoadMovies, which loads files to the map container, as shown in the following code snippet:

auto movies_file = root_path / "movies.csv";

auto movies = LoadMovies(movies_file);

auto ratings_file = root_path / "ratings.csv";

auto ratings = LoadRatings(ratings_file);

- After data is loaded, we initialize matrix objects with the right size, like this:

ratings_matrix.resize(static_cast<Eigen::Index>(ratings.size()),

static_cast<Eigen::Index>(movies.size()));

ratings_matrix.setZero();

p.resize(ratings_matrix.rows(), ratings_matrix.cols());

p.setZero();

movie_titles.resize(movies.size());

However, because we've loaded data to the map, we need to move the required rating values to the matrix object.

- So, we initialize the movie titles list, convert user IDs to our zero-based sequential order, and initialize the binary rating matrix (this is used in the algorithm to deal with implicit data), as follows:

Eigen::Index user_idx = 0;

for (auto& r : ratings) {

for (auto& m : r.second) {

auto mi = movies.find(m.first);

Eigen::Index movie_idx = std::distance(movies.begin(), mi);

movie_titles[static_cast<size_t>(movie_idx)] = mi->second;

ratings_matrix.insert(user_idx, movie_idx) =

static_cast<DataType>(m.second);

p.insert(user_idx, movie_idx) = 1.0;

}

++user_idx;

}

ratings_matrix.makeCompressed();

- After the rating matrix is initialized, we define and initialize our training variables, as follows:

auto m = ratings_matrix.rows();

auto n = ratings_matrix.cols();

Eigen::Index n_factors = 100;

auto y = InitializeMatrix(n, n_factors);

auto x = InitializeMatrix(m, n_factors);

In the preceding code snippet, the y matrix corresponds to user preferences, and the x matrix corresponds to the item parameters. Also, we defined the number of factors we were interested in after decomposition. These matrices are initialized with random values and normalized. Such an approach is used to speed up algorithm convergence, and can be seen in the following code snippet:

Matrix InitializeMatrix(Eigen::Index rows, Eigen::Index cols) {

Matrix mat = Matrix::Random(rows, cols).array().abs();

auto row_sums = mat.rowwise().sum();

mat.array().colwise() /= row_sums.array();

return mat;

}

- Then, we define and initialize the regularization matrix and identity matrices, which are constant during all learning cycles, as follows:

DataType reg_lambda = 0.1f;

SparseMatrix reg = (reg_lambda * Matrix::Identity(n_factors, n_factors)).sparseView();

// Define diagonal identity terms

SparseMatrix user_diag = -1 * Matrix::Identity(n, n).sparseView();

SparseMatrix item_diag = -1 * Matrix::Identity(m, m).sparseView();

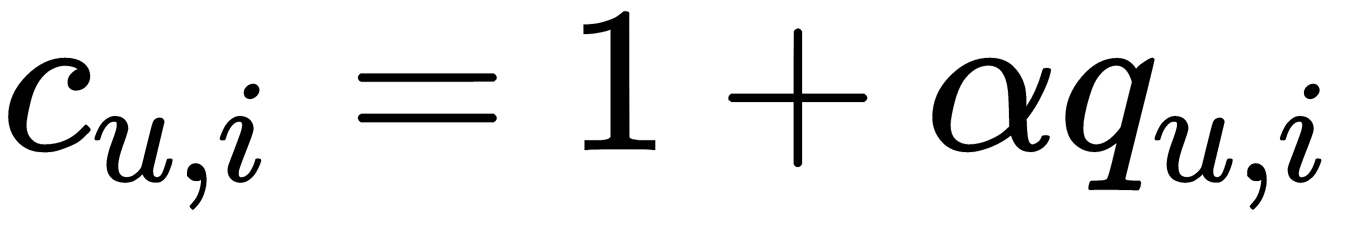

- Also, because we implement an algorithm version that can deal with implicit data, we need to convert our rating matrix to another view to decrease computational complexity. Our version of the algorithm needs user ratings in the form of

and as diagonal matrices for every user and item so that we can make two containers with corresponding matrix objects. The code for this can be seen in the following block:

and as diagonal matrices for every user and item so that we can make two containers with corresponding matrix objects. The code for this can be seen in the following block:

std::vector<DiagonalMatrix> user_weights(static_cast<size_t>(m));

std::vector<DiagonalMatrix> item_weights(static_cast<size_t>(n));

{

Matrix weights(ratings_matrix);

weights.array() *= alpha;

weights.array() += 1;

for (Eigen::Index i = 0; i < m; ++i) {

user_weights[static_cast<size_t>(i)] =

weights.row(i).asDiagonal();

}

for (Eigen::Index i = 0; i < n; ++i) {

item_weights[static_cast<size_t>(i)] =

weights.col(i).asDiagonal();

}

}

Now, we are ready to implement the main learning loop. As discussed, the ALS algorithm can be easily parallelized, so we use the OpenMP compiler extension to calculate user and item parameters in parallel.

Let's define the main learning cycle, which runs for a specified number of iterations, as follows:

size_t n_iterations = 5;

for (size_t k = 0; k < n_iterations; ++k) {

auto yt = y.transpose();

auto yty = yt * y;

...

// update item parameters

...

auto xt = x.transpose();

auto xtx = xt * x;

...

// update users preferences

...

auto w_mse = CalculateWeightedMse(x, y, p, ratings_matrix, alpha);

}

The following code shows how to update item parameters:

#pragma omp parallel

{

Matrix diff;

Matrix ytcuy;

Matrix a, b, update_y;

#pragma omp for private(diff, ytcuy, a, b, update_y)

for (size_t i = 0; i < static_cast<size_t>(m); ++i) {

diff = user_diag;

diff += user_weights[i];

ytcuy = yty + yt * diff * y;

auto p_val = p.row(static_cast<Eigen::Index>(i)).transpose();

a = ytcuy + reg;

b = yt * user_weights[i] * p_val;

update_y = a.colPivHouseholderQr().solve(b);

x.row(static_cast<Eigen::Index>(i)) = update_y.transpose();

}

}

The following code shows how to update users' preferences:

#pragma omp parallel

{

Matrix diff;

Matrix xtcux;

Matrix a, b, update_x;

#pragma omp for private(diff, xtcux, a, b, update_x)

for (size_t i = 0; i < static_cast<size_t>(n); ++i) {

diff = item_diag;

diff += item_weights[i];

xtcux = xtx + xt * diff * x;

auto p_val = p.col(static_cast<Eigen::Index>(i));

a = xtcux + reg;

b = xt * item_weights[i] * p_val;

update_x = a.colPivHouseholderQr().solve(b);

y.row(static_cast<Eigen::Index>(i)) = update_x.transpose();

}

}

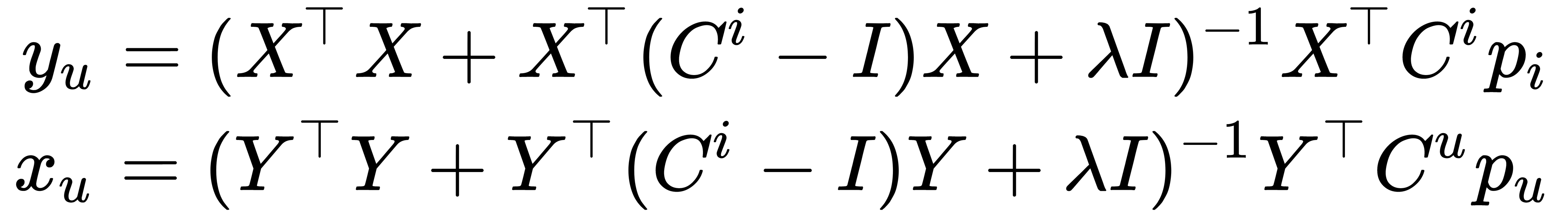

We have two parts of the loop body that are pretty much the same because at first, we updated item parameters with frizzed user options, and then we updated user preferences with frizzed item parameters. Notice that all matrix objects were moved outside of the internal loop body to reduce memory allocations and significantly improve program performance. Also, notice that we parallelized the user and item parameters' calculations separately because one of them should always be frizzed during the calculation of the other one. To calculate exact values for user preferences and item parameters, we use this formula:

X T X and Y T Y are precomputed at each step. Also, notice that these formulas are expressed in the form of the linear equation system, X = AB. We use the colPivHouseholderQr function from the Eigen library to solve it and get exact values for the user and item parameters. This linear equation system can be solved with other methods, too. The colPivHouseholderQr function was chosen because it shows a better ratio between computational speed and accuracy in the Eigen library implementation.

To estimate the progress of the learning process of our system, we can calculate the Mean Squared Error (MSE) between the original rating matrix and a predicted one. To calculate the predicted rating matrix, we define the next function, as follows:

Matrix RatingsPredictions(const Matrix& x, const Matrix& y) {

return x * y.transpose();

}

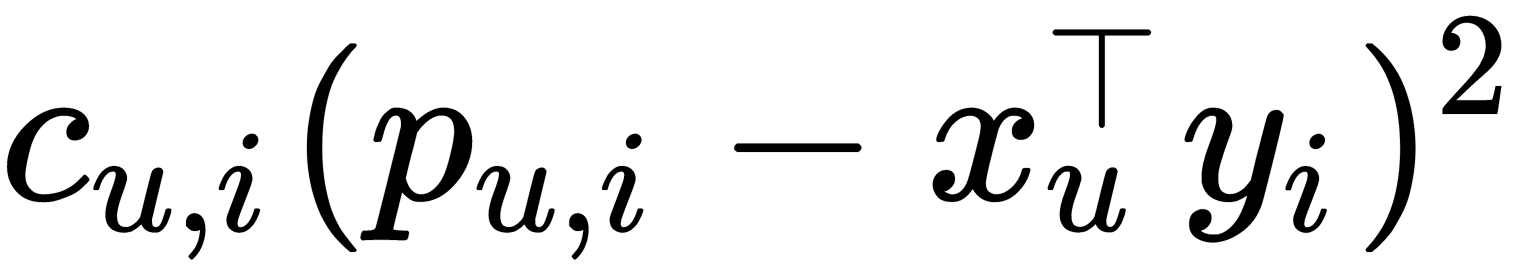

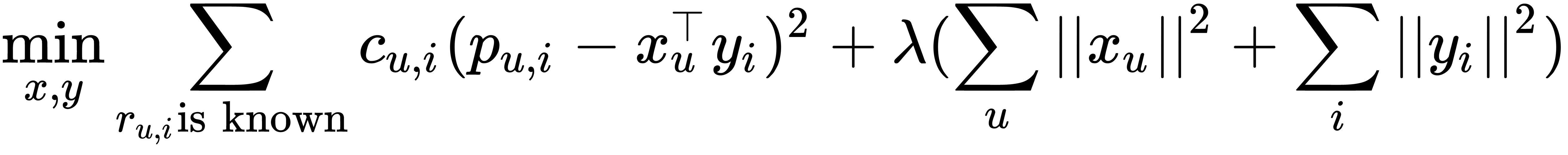

To calculate the MSE, we can use the expression  from our optimization function, like this:

from our optimization function, like this:

DataType CalculateWeightedMse(const Matrix& x,

const Matrix& y,

const SparseMatrix& p,

const SparseMatrix& ratings_matrix,

DataType alpha) {

Matrix c(ratings_matrix);

c.array() *= alpha;

c.array() += 1.0;

Matrix diff(p - RatingsPredictions(x, y));

diff = diff.array().pow(2.f);

Matrix weighted_diff = c.array() * diff.array();

return weighted_diff.array().mean();

}

Please note that we have to use weights and binary ratings to get a meaningful value for the error because a similar approach was used during the learning process. Direct error calculation gives the wrong result because the predicted matrix has non-zero predictions in the place where the original rating matrix has zeros. It is essential to understand that this algorithm doesn't learn the original scale of ratings (from 0 to 5), but instead it learns prediction values in the range from 0 to 1. It follows from the function we optimize, shown here:

We can use the previously defined movies list to show movie recommendations. The following function shows user preferences and system recommendations. To identify what a user likes, we show movie titles that the user has rated with a rating value of more than 3. We show movies that the system rates as equal to or higher than a 0.8 rating coefficient to identify which movie the system recommends to the user by running the following code:

void PrintRecommendations(const Matrix& ratings_matrix,

const Matrix& ratings_matrix_pred,

const std::vector<std::string>& movie_titles) {

auto n = ratings_matrix.cols();

std::vector<std::string> liked;

std::vector<std::string> recommended;

for (Eigen::Index u = 0; u < 5; ++u) {

for (Eigen::Index i = 0; i < n; ++i) {

DataType orig_value = ratings_matrix(u, i);

if (orig_value >= 3.f) {

liked.push_back(movie_titles[static_cast<size_t>(i)]);

}

DataType pred_value = ratings_matrix_pred(u, i);

if (pred_value >= 0.8f && orig_value < 1.f) {

recommended.push_back(movie_titles[

static_cast<size_t>(i)]);

}

}

std::cout << " User " << u << " liked :";

for (auto& l : liked) {

std::cout << l << "; ";

}

std::cout << " User " << u << " recommended :";

for (auto& r : recommended) {

std::cout << r << "; ";

}

std::cout << std::endl;

liked.clear();

recommended.clear();

}

}

This function can be used as follows:

PrintRecommendations(ratings_matrix, RatingsPredictions(x, y), movie_titles);