The Shogun library includes an implementation of the gradient boosting algorithm, but it is restricted to regression tasks. The algorithm is implemented in the CStochasticGBMachine class. The main parameters for configuring objects of this class are the base model (the weak learner that is combined into the ensemble) and the loss function. Other parameters include the number of iterations, the learning rate, and the fraction of training vectors chosen randomly at each iteration.

We will create an example using gradient boosting for cosine function approximation, assuming that we already have a training and testing dataset available (the exact implementation of a data generator can be found in the source code for this example). For this example, we will use an ensemble of decision trees. The implementation of the decision tree algorithm in the Shogun library can be found in the CCARTree class. A classification and regression tree (CART) is a binary decision tree that is constructed by splitting a node into two child nodes repeatedly, beginning with the root node that contains the whole dataset.
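To make the node-splitting step more concrete, the following is a minimal sketch of how a single regression split on one continuous feature could be chosen. It is not Shogun's CCARTree code: the `Split` struct and the `best_split` function are hypothetical names introduced for illustration, and the sketch assumes the split criterion is the sum of squared errors of the two child nodes.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <numeric>
#include <vector>

// Hypothetical helper type, not part of Shogun.
struct Split {
  double threshold;  // samples with x <= threshold go to the left child
  double sse;        // summed squared error of both children
};

// Finds the threshold that minimizes the squared error of the two children.
// If all x values are equal, no split is possible and sse stays at infinity.
Split best_split(const std::vector<double>& x, const std::vector<double>& y) {
  assert(x.size() == y.size() && x.size() >= 2);
  const std::size_t n = x.size();

  // Visit samples in order of increasing feature value.
  std::vector<std::size_t> order(n);
  std::iota(order.begin(), order.end(), std::size_t{0});
  std::sort(order.begin(), order.end(),
            [&](std::size_t a, std::size_t b) { return x[a] < x[b]; });

  double total_sum = 0.0, total_sq = 0.0;
  for (std::size_t i = 0; i < n; ++i) {
    total_sum += y[i];
    total_sq += y[i] * y[i];
  }

  Split best{x[order[0]], std::numeric_limits<double>::infinity()};
  double left_sum = 0.0, left_sq = 0.0;
  for (std::size_t k = 0; k + 1 < n; ++k) {
    const double yk = y[order[k]];
    left_sum += yk;
    left_sq += yk * yk;
    const double xl = x[order[k]], xr = x[order[k + 1]];
    if (xl == xr) continue;  // cannot split between identical values
    const double nl = static_cast<double>(k + 1);
    const double nr = static_cast<double>(n) - nl;
    const double right_sum = total_sum - left_sum;
    // Squared error of a node: sum(y^2) - (sum y)^2 / count.
    const double sse = (left_sq - left_sum * left_sum / nl) +
                       ((total_sq - left_sq) - right_sum * right_sum / nr);
    if (sse < best.sse) best = Split{0.5 * (xl + xr), sse};
  }
  return best;
}
```

A full tree would apply the same search recursively to each child node until a stopping rule, such as a maximum depth, is reached.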

The first step is the creation and configuration of a CCARTree type object. The constructor of this object takes the vector of the feature types (nominal or continuous) and the problem type. After the object is constructed, we can configure the tree depth, which is a crucial parameter for the algorithm's performance.

Then, we have to create a loss function object. For the current task, the object of a CSquaredLoss type is a suitable choice.
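The role of the squared loss in gradient boosting can be sketched in a few lines: each new tree is fitted to the negative gradient of the loss, which for the squared loss is the residual between the target and the current prediction. The functions below are hypothetical illustrations, not the CSquaredLoss interface, and the 0.5 scaling factor is a common convention assumed here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Squared loss for one sample: L(y, f) = 0.5 * (y - f)^2.
// The 0.5 factor is an assumed convention; it makes the negative
// gradient equal to the plain residual y - f.
double squared_loss(double y, double f) {
  const double r = y - f;
  return 0.5 * r * r;
}

// Negative gradient of the loss with respect to the predictions f.
// These residuals are the targets for the next tree in the ensemble.
std::vector<double> negative_gradient(const std::vector<double>& y,
                                      const std::vector<double>& f) {
  assert(y.size() == f.size());
  std::vector<double> residual(y.size());
  for (std::size_t i = 0; i < y.size(); ++i) {
    residual[i] = y[i] - f[i];
  }
  return residual;
}
```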

With the model and the loss function object, we can then instantiate an object of the CStochasticGBMachine class. To train the model, we call the set_labels method with an object of the CRegressionLabels type and then the train method with an object of the CDenseFeatures type. For evaluation, we can use the apply_regression method, as illustrated in the following code block:

    void GBMClassification(Some<CDenseFeatures<DataType>> features,
                           Some<CRegressionLabels> labels,
                           Some<CDenseFeatures<DataType>> test_features,
                           Some<CRegressionLabels> test_labels) {
      // mark the single feature as continuous (false means not nominal)
      SGVector<bool> feature_type(1);
      feature_type.set_const(false);

      // base model: a regression tree with a limited depth
      auto tree = some<CCARTree>(feature_type, PT_REGRESSION);
      tree->set_max_depth(3);

      auto loss = some<CSquaredLoss>();

      auto sgbm = some<CStochasticGBMachine>(tree,
                                             loss,
                                             /*iterations*/ 100,
                                             /*learning rate*/ 0.1,
                                             /*sub-set fraction*/ 1.0);
      sgbm->set_labels(labels);
      sgbm->train(features);

      // evaluate model on test data
      auto new_labels = wrap(sgbm->apply_regression(test_features));
      auto eval_criterium = some<CMeanSquaredError>();
      // despite its name, this value is a mean squared error: lower is better
      auto accuracy = eval_criterium->evaluate(new_labels, test_labels);
      ...
    }
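The value returned by the evaluation step is a mean squared error, so lower values indicate a better fit. As a point of reference, the metric can be written in plain C++ as follows. This is a standalone sketch with a hypothetical function name, not the CMeanSquaredError implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Mean of the squared differences between predictions and targets.
double mean_squared_error(const std::vector<double>& predicted,
                          const std::vector<double>& expected) {
  assert(predicted.size() == expected.size() && !predicted.empty());
  double sum = 0.0;
  for (std::size_t i = 0; i < predicted.size(); ++i) {
    const double diff = predicted[i] - expected[i];
    sum += diff * diff;
  }
  return sum / static_cast<double>(predicted.size());
}
```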

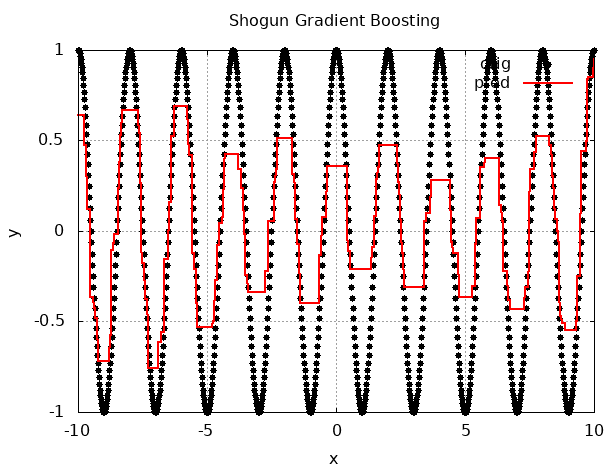

In the following diagram, we can see how gradient boosting approximates the cosine function in a case where we used a maximum CART tree depth equal to 2. Note that the generalization we achieved isn't particularly good:

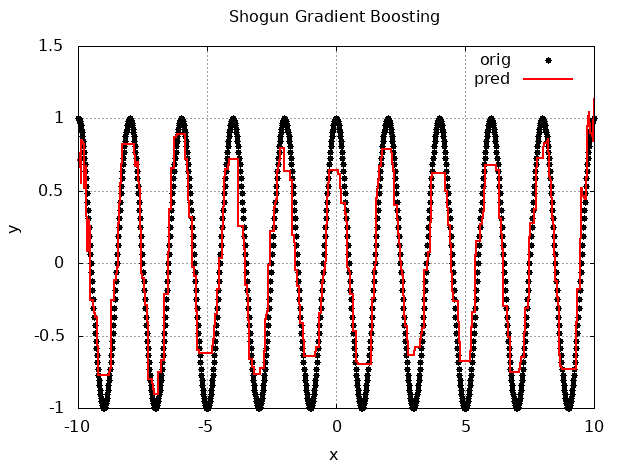

In the following diagram, we can see that the generalization improved significantly because we increased the CART tree depth to 3:

Other parameters that we can tune for this type of algorithm are the number of iterations, the learning rate, and the fraction of training samples used in each iteration.
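To show how these three parameters interact, here is a minimal, library-free sketch of stochastic gradient boosting with the squared loss. It does not reproduce the internals of CStochasticGBMachine: the names `Stump`, `Model`, `fit_stump`, and `fit_gbm` are hypothetical, the base learner is simplified to a depth-1 tree on a single feature, and the fixed random seed is an assumption made for reproducibility.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Depth-1 regression tree on one feature (hypothetical base learner).
struct Stump {
  double threshold, left, right;
  double predict(double x) const { return x <= threshold ? left : right; }
};

// Fits a stump to the pairs (x[i], r[i]) for the sample indices in idx.
Stump fit_stump(const std::vector<double>& x, const std::vector<double>& r,
                std::vector<std::size_t> idx) {
  std::sort(idx.begin(), idx.end(),
            [&](std::size_t a, std::size_t b) { return x[a] < x[b]; });
  double total = 0.0;
  for (std::size_t i : idx) total += r[i];
  const double n = static_cast<double>(idx.size());
  // Fallback when no split helps: predict the mean everywhere.
  Stump best{x[idx.back()], total / n, total / n};
  double best_gain = 0.0, left_sum = 0.0;
  for (std::size_t k = 0; k + 1 < idx.size(); ++k) {
    left_sum += r[idx[k]];
    const double xl = x[idx[k]], xr = x[idx[k + 1]];
    if (xl == xr) continue;  // cannot split between identical values
    const double nl = static_cast<double>(k + 1), nr = n - nl;
    const double right_sum = total - left_sum;
    // Reduction in squared error compared to not splitting at all.
    const double gain = left_sum * left_sum / nl +
                        right_sum * right_sum / nr - total * total / n;
    if (gain > best_gain) {
      best_gain = gain;
      best = Stump{0.5 * (xl + xr), left_sum / nl, right_sum / nr};
    }
  }
  return best;
}

struct Model {
  double init;           // constant initial prediction (mean of the targets)
  double learning_rate;  // shrinkage applied to every stump
  std::vector<Stump> stumps;
  double predict(double x) const {
    double f = init;
    for (const Stump& s : stumps) f += learning_rate * s.predict(x);
    return f;
  }
};

Model fit_gbm(const std::vector<double>& x, const std::vector<double>& y,
              int iterations, double learning_rate, double subset_fraction,
              unsigned seed = 42) {
  assert(x.size() == y.size() && !x.empty());
  assert(subset_fraction > 0.0 && subset_fraction <= 1.0);
  const std::size_t n = x.size();
  const double mean =
      std::accumulate(y.begin(), y.end(), 0.0) / static_cast<double>(n);
  Model model{mean, learning_rate, {}};
  std::vector<double> f(n, mean), residual(n);
  std::vector<std::size_t> idx(n);
  std::iota(idx.begin(), idx.end(), std::size_t{0});
  std::mt19937 rng(seed);
  const std::size_t m = std::max<std::size_t>(
      1, static_cast<std::size_t>(subset_fraction * static_cast<double>(n)));
  for (int it = 0; it < iterations; ++it) {
    // Negative gradient of the squared loss: the current residuals.
    for (std::size_t i = 0; i < n; ++i) residual[i] = y[i] - f[i];
    // Draw a random subset of the training samples for this iteration.
    std::shuffle(idx.begin(), idx.end(), rng);
    std::vector<std::size_t> subset(
        idx.begin(), idx.begin() + static_cast<std::ptrdiff_t>(m));
    const Stump s = fit_stump(x, residual, subset);
    // Take a shrunken step in the direction of the fitted residuals.
    for (std::size_t i = 0; i < n; ++i) f[i] += learning_rate * s.predict(x[i]);
    model.stumps.push_back(s);
  }
  return model;
}
```

In this sketch, a smaller learning rate usually needs more iterations to reach the same training error, while a subset fraction below 1.0 makes each stump see only part of the data, which adds randomness between iterations.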