The biological neuron consists of a body and processes that connect it to the outside world. The processes along which a neuron receives excitation are called dendrites. The process through which a neuron transmits excitation is called an axon. Each neuron has only one axon. Dendrites and axons have a rather complex branching structure. The junction of an axon and a dendrite is called a synapse. The main function of a neuron is to transfer excitation from its dendrites to its axon, but signals that arrive from different dendrites can affect the signal in the axon. A neuron gives off a signal if the total excitation exceeds a certain threshold value, which itself varies within certain limits. If the threshold is not exceeded, no signal is sent to the axon and the neuron does not respond to the excitation. The intensity of the signal that the neuron receives (and therefore the likelihood that it activates) strongly depends on synapse activity. A synapse is the contact through which this information is transmitted. Each synapse has a certain extent, and special chemicals carry the signal across it. This basic scheme has many simplifications and exceptions, but most artificial neural networks are modeled on these simple properties.

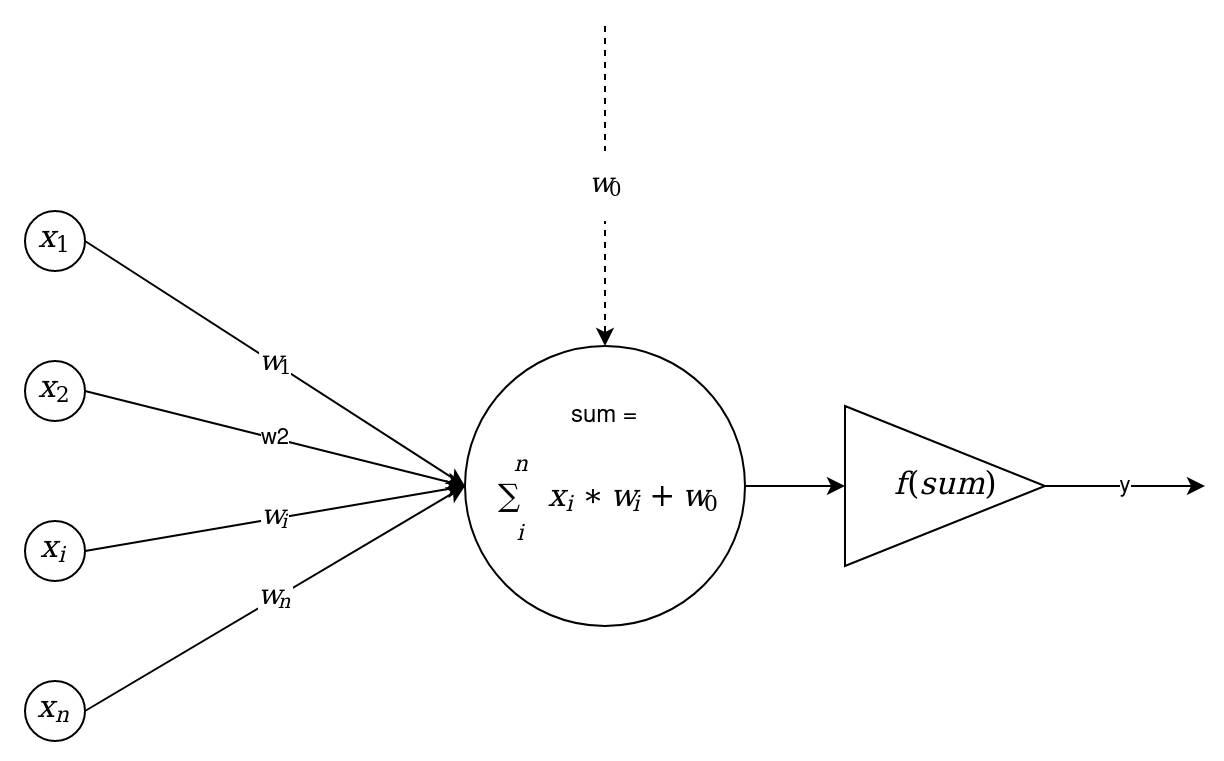
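The all-or-nothing firing rule described above can be captured in a few lines. This is a minimal sketch and deliberately crude, not a biological model. It assumes that each incoming excitation has already been scaled by the activity of its synapse. The function name `neuron_fires` and the threshold values are illustrative choices only.

```python
def neuron_fires(excitations, threshold):
    """Return True if the total excitation exceeds the firing threshold.

    `excitations` is assumed to hold the signals arriving at the dendrites,
    each already scaled by the activity of its synapse (a simplification).
    """
    total = sum(excitations)
    # All-or-nothing response: a signal reaches the axon only above threshold.
    return total > threshold


# Hypothetical values: two weak inputs plus one strong input.
print(neuron_fires([0.2, 0.1, 0.9], threshold=1.0))  # True (total is about 1.2)
print(neuron_fires([0.2, 0.1, 0.3], threshold=1.0))  # False (total is about 0.6)
```

The neuron only fires when the inputs together push the total past the threshold. None of the small inputs can trigger a response on its own.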

The artificial neuron receives a specific set of signals as input, each of which is the output of another neuron. Each input is multiplied by a corresponding weight, which is the equivalent of its synaptic strength. Then, all the products are summed, and the result of this summation is used to determine the neuron's activation level. The following diagram shows a model that demonstrates this idea:

Here, a set of input signals, denoted by $x_1, x_2, \ldots, x_n$, goes to an artificial neuron. These input signals correspond to the signals that arrive at the synapses of a biological neuron. Each signal is multiplied by the corresponding weight, $w_1, w_2, \ldots, w_n$, and passed to the summing block. Each weight corresponds to the strength of one biological synaptic connection. The summing block, which corresponds to the body of the biological neuron, algebraically combines the weighted inputs.
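Write the inputs as $x_1, x_2, \ldots, x_n$ and their weights as $w_1, w_2, \ldots, w_n$. The summing block then computes $\text{sum} = \sum_{i=1}^{n} w_i x_i$. The following minimal sketch shows that computation directly. It uses a hypothetical `weighted_sum` helper and made-up numbers.

```python
def weighted_sum(inputs, weights):
    """Summing block: multiply each input by its weight and add the products."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    return sum(x * w for x, w in zip(inputs, weights))


# Illustrative values only.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]
print(weighted_sum(inputs, weights))  # 0.5 - 2.0 + 0.75 = -0.75
```

A negative weight, such as the `-1.0` above, makes its input lower the sum rather than raise it.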

The bias, denoted here as $w_0$, plays the role of the threshold value, also known as the shift. It allows us to shift the origin of the activation function, which can speed up the neuron's learning. A bias is added to each neuron. It is learned like all the other weights, except that it is connected to a constant signal of +1 instead of to the output of a neuron in the previous layer. The resulting sum is processed by the activation function, $f$, which produces the neuron's output signal, $y$. The activation function can be seen as a way to normalize the summed input: it narrows the range of the sum so that the values of $f(\text{sum})$ belong to a specific interval. That is, if we have a large input number, passing it through the activation function gives us an output in the required range. There are many activation functions, and we'll go through them later in this chapter. To learn more about neural networks, we'll have a look at a few more of their components.
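Putting the pieces together, the neuron's output is $y = f\left(\sum_{i=1}^{n} w_i x_i + w_0 \cdot 1\right)$. Here $w_0$ is the bias weight attached to the constant +1 input. The sketch below assumes the logistic sigmoid, $f(s) = 1 / (1 + e^{-s})$, as the activation function. That is only one of many possible choices, and the name `neuron_output` is hypothetical.

```python
import math


def sigmoid(s):
    """Logistic sigmoid: maps a real number into the interval (0, 1).

    Written in two branches so that math.exp never receives a large positive
    argument, which would raise OverflowError for very negative s.
    """
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    z = math.exp(s)
    return z / (1.0 + z)


def neuron_output(inputs, weights, bias_weight, activation=sigmoid):
    """Compute y = f(sum(w_i * x_i) + w_0 * 1) for a single artificial neuron."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    total = sum(x * w for x, w in zip(inputs, weights))
    # The bias weight is connected to a constant +1 input, so it shifts the sum.
    total += bias_weight * 1.0
    return activation(total)


# The same inputs and weights with two different bias values.
print(neuron_output([1.0, 2.0], [0.5, 0.5], bias_weight=0.0))   # sigmoid(1.5)
print(neuron_output([1.0, 2.0], [0.5, 0.5], bias_weight=-1.5))  # 0.5
# A large weighted sum is still mapped into the sigmoid's output range.
print(neuron_output([10.0], [1.0], bias_weight=0.0))
```

With `bias_weight=-1.5`, the weighted sum of 1.5 is cancelled out and the output drops to 0.5. This shows how the bias moves the point at which the activation function switches between low and high outputs.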