With the loss function, neural network training is reduced to selecting the weight coefficients that minimize the error. The loss function should match the task: for example, categorical cross-entropy for classification or the squared difference for regression. Differentiability is also an essential property of the loss function if backpropagation is used to train the network, because that method relies on the gradient of the loss with respect to the weights. Let's look at some of the popular loss functions that are used in neural networks:

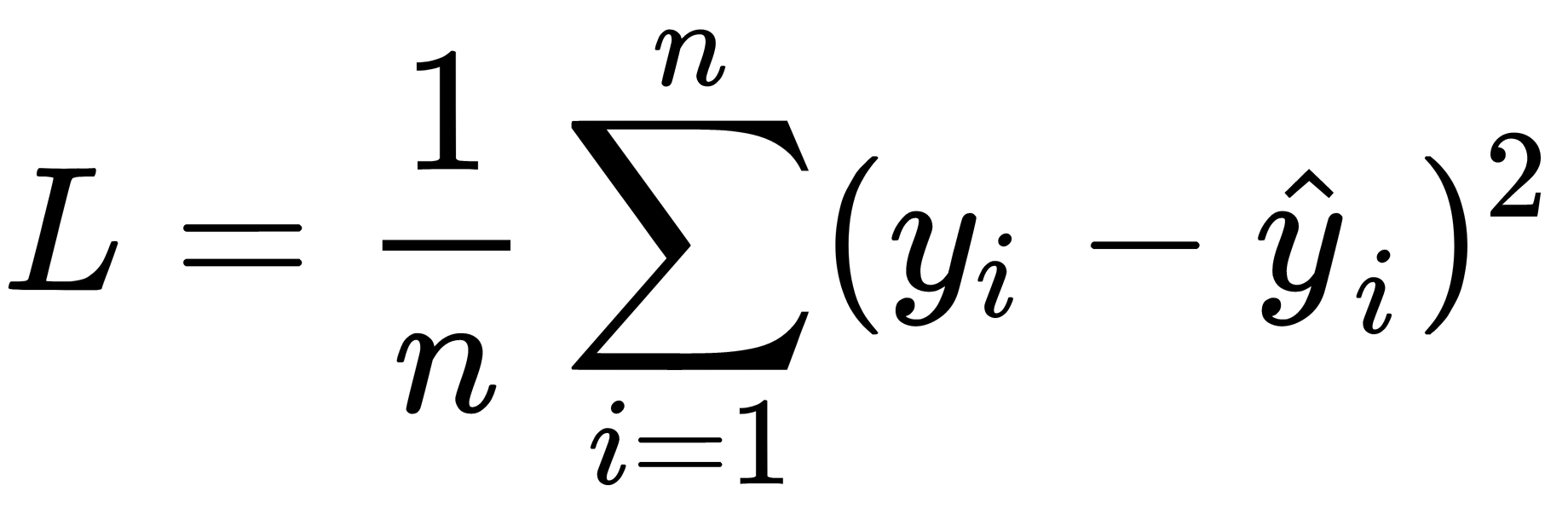

- The mean squared error (MSE) loss function is widely used for regression and classification tasks. Classifiers can predict continuous scores, which are intermediate results that are only converted into class labels (usually by a threshold) as the very last step of the classification process. MSE can be calculated using these continuous scores rather than the class labels. The advantage of this is that we avoid losing information due to dichotomization. The standard form of the MSE loss function is defined as follows:

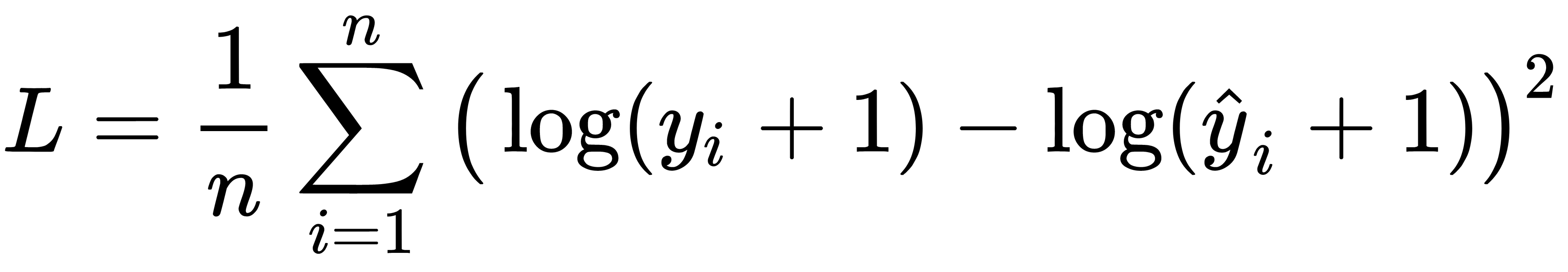
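As a minimal sketch of the point about continuous scores, the following pure-Python snippet computes MSE once on raw classifier scores and once on thresholded labels. The function name `mse`, the sample values, and the 0.5 threshold are illustrative assumptions, not part of the original text.

```python
def mse(predictions, targets):
    """Mean of the squared differences between predictions and targets."""
    n = len(targets)
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / n


# Hypothetical binary targets and continuous classifier scores.
targets = [1.0, 0.0, 1.0, 0.0]
scores = [0.9, 0.2, 0.6, 0.4]

# Dichotomize the scores with an assumed threshold of 0.5.
labels = [1.0 if s >= 0.5 else 0.0 for s in scores]

loss_on_scores = mse(scores, targets)  # still reflects the uncertain scores
loss_on_labels = mse(labels, targets)  # thresholding hides that uncertainty
```

Here every thresholded label matches its target, so the loss on labels is zero, while the loss on scores remains positive because two of the scores are close to the threshold.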

- The mean squared logarithmic error (MSLE) loss function is a variant of MSE and is defined as follows:

By taking the log of the predictions and target values, we measure the relative rather than the absolute difference between them. MSLE is often used when we do not want to heavily penalize large differences between the predicted and target values when both are big numbers. Also, MSLE penalizes underestimates more than overestimates.

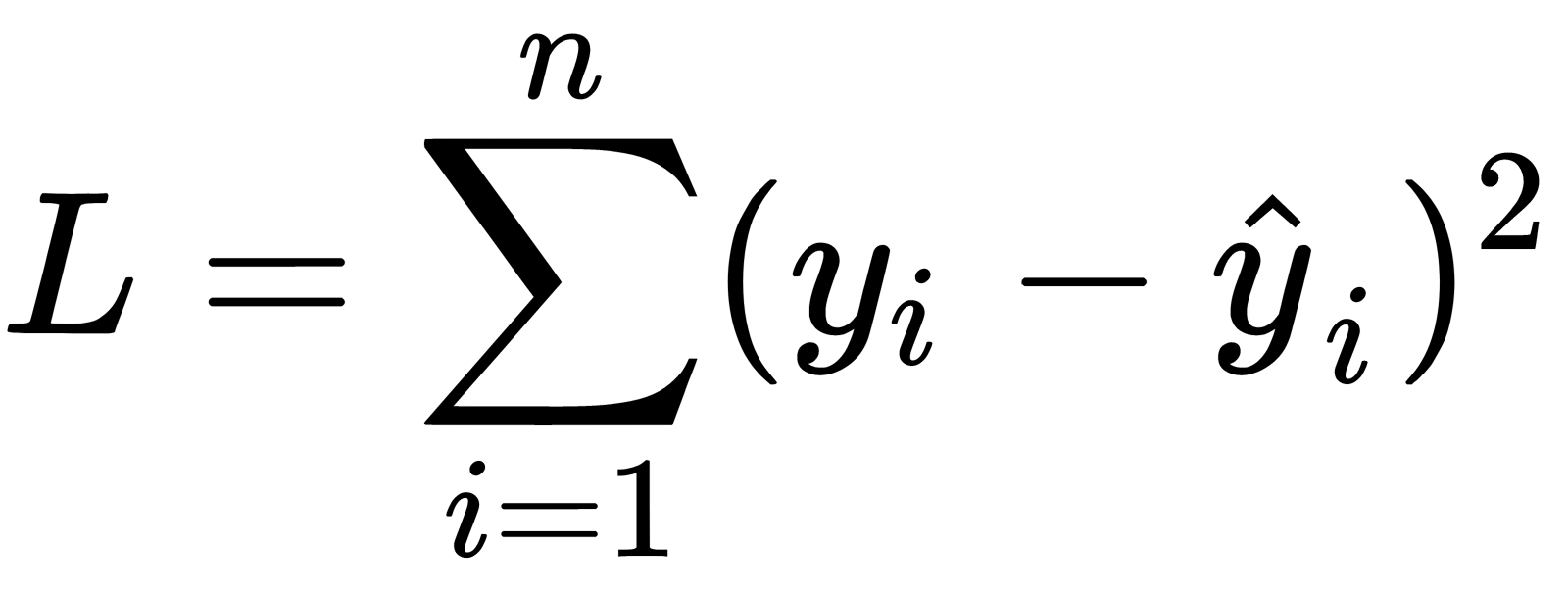
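The two properties described above can be checked with a short sketch. It assumes the common `log(1 + x)` form of MSLE (which keeps the logarithm defined at zero); the function name `msle` and the sample values are illustrative.

```python
import math


def msle(predictions, targets):
    """Mean squared difference of log(1 + x), an assumed common MSLE form."""
    n = len(targets)
    return sum(
        (math.log1p(p) - math.log1p(t)) ** 2
        for p, t in zip(predictions, targets)
    ) / n


# Same absolute error of 50 around a target of 100.
under = msle([50.0], [100.0])   # underestimate
over = msle([150.0], [100.0])   # overestimate

# Same absolute error of 100 at two different scales.
large_scale = msle([1000.0], [1100.0])
small_scale = msle([10.0], [110.0])
```

With these values, the underestimate receives a larger loss than the overestimate, and the same absolute error costs far less when both numbers are large.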

- The L2 loss function is the square of the L2 norm of the difference between the predicted value and the target value. It is defined as follows:

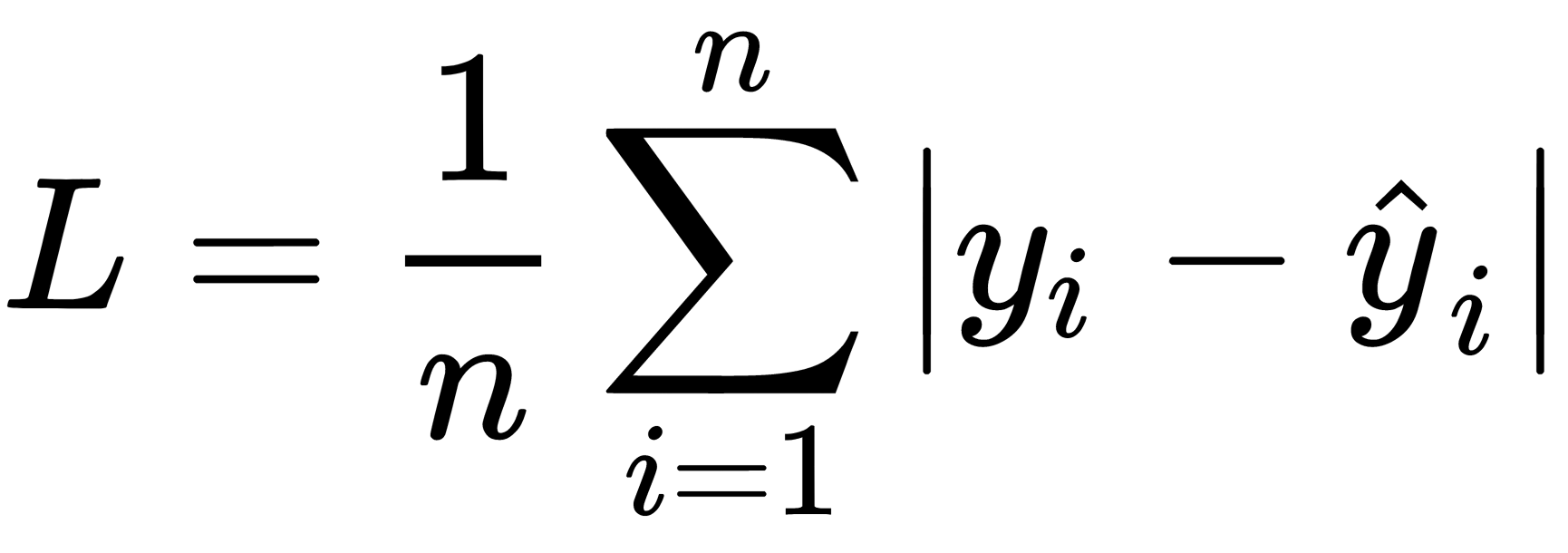
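A minimal sketch of the L2 loss, assuming plain Python lists as vectors; the function name `l2_loss` and the sample values are illustrative. Dividing the result by the number of elements gives the MSE of the same data.

```python
def l2_loss(predictions, targets):
    """Squared L2 norm of the difference vector (sum of squared errors)."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets))


predictions = [2.5, 0.0, 2.0, 8.0]
targets = [3.0, -0.5, 2.0, 7.0]

l2 = l2_loss(predictions, targets)
mse_from_l2 = l2 / len(targets)  # MSE is the L2 loss averaged over n
```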

- The mean absolute error (MAE) loss function is used to measure how close forecasts or predictions are to the eventual outcomes:

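MAE is not differentiable where the error is exactly zero, so gradient-based training has to fall back on a subgradient at that point, and fitting a linear model that minimizes absolute errors exactly is usually posed as a linear programming problem. MAE is more robust to outliers than MSE since it does not square the errors.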

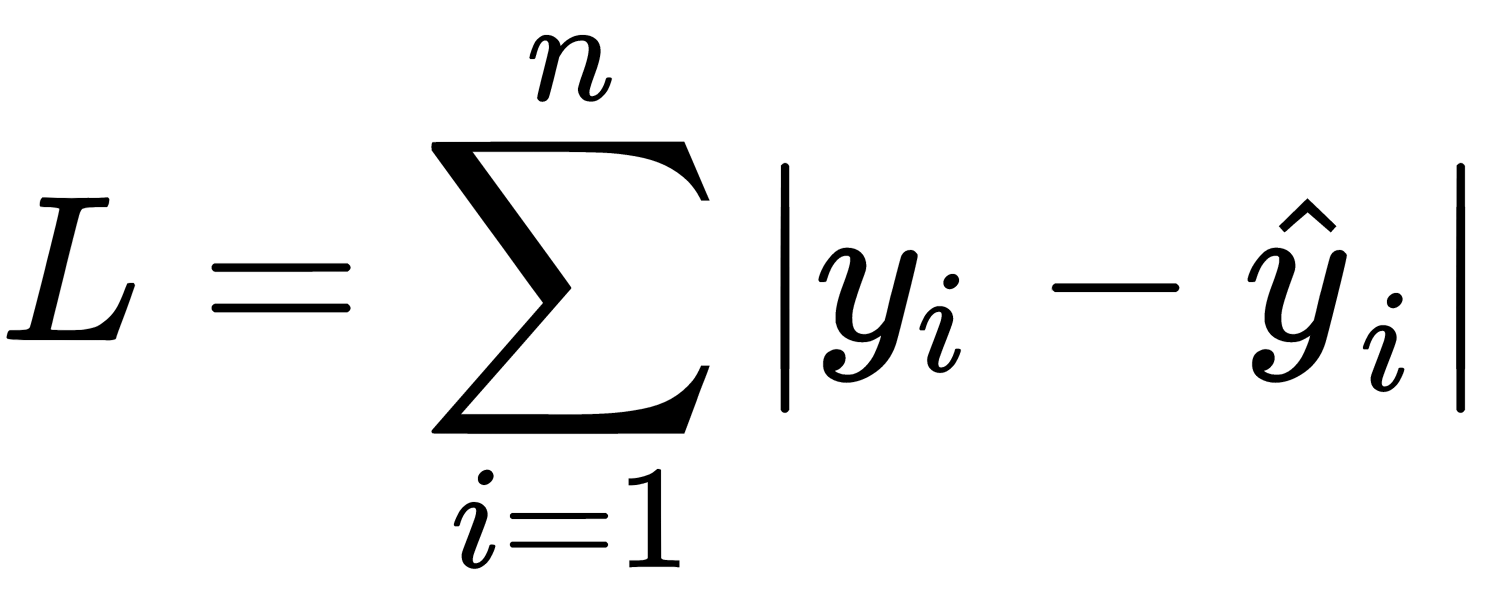
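The robustness claim can be illustrated with a small sketch that compares how much MAE and MSE grow when a single prediction becomes an outlier. The function names and the data are hypothetical.

```python
def mae(predictions, targets):
    """Mean of the absolute differences between predictions and targets."""
    n = len(targets)
    return sum(abs(p - t) for p, t in zip(predictions, targets)) / n


def mse(predictions, targets):
    """Mean of the squared differences between predictions and targets."""
    n = len(targets)
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / n


targets = [1.0, 2.0, 3.0, 4.0]
clean = [1.1, 1.9, 3.2, 3.8]
with_outlier = [1.1, 1.9, 3.2, 13.8]  # last prediction is far off

# How many times larger each loss becomes once the outlier is present.
mae_ratio = mae(with_outlier, targets) / mae(clean, targets)
mse_ratio = mse(with_outlier, targets) / mse(clean, targets)
```

In this example the outlier inflates MSE by a much larger factor than MAE, because its error is squared.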

- The L1 loss function is the sum of the absolute differences between the predicted value and the target value. Similar to the relationship between MSE and L2, L1 is mathematically similar to MAE except that it is not divided by n. It is defined as follows:

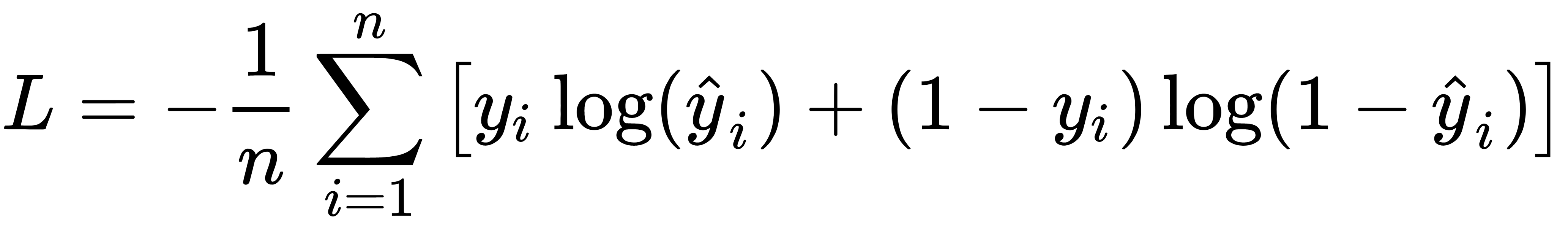
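A minimal sketch of the L1 loss and its relationship to MAE, again assuming plain Python lists; `l1_loss` and the sample values are illustrative names and data.

```python
def l1_loss(predictions, targets):
    """Sum of the absolute differences (no division by n)."""
    return sum(abs(p - t) for p, t in zip(predictions, targets))


predictions = [2.5, 0.0, 2.0, 8.0]
targets = [3.0, -0.5, 2.0, 7.0]

l1 = l1_loss(predictions, targets)
mae_from_l1 = l1 / len(targets)  # MAE is the L1 loss averaged over n
```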

- The cross-entropy loss function is commonly used for binary classification tasks where labels are assumed to take values of 0 or 1. It is defined as follows:

Cross-entropy measures the divergence between two probability distributions: a large value means that the two distributions differ significantly, while a small value means that they are similar to each other. Compared with the quadratic loss function, cross-entropy typically converges faster when the output layer uses a sigmoid or softmax activation, because its gradient does not vanish when the output saturates at a wrong value, so training is less likely to stall far from a good solution.

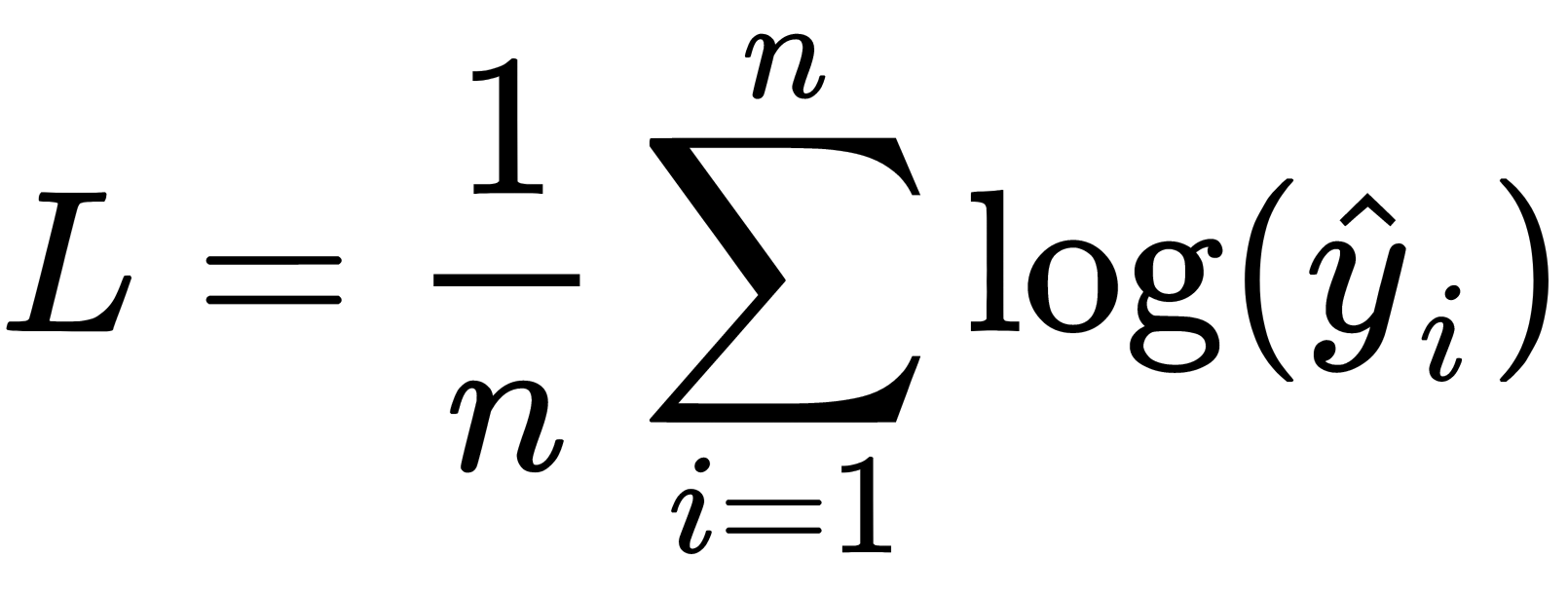
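For the binary case with labels 0 or 1, the loss can be sketched as follows. The function name `binary_cross_entropy` and the clipping constant `eps` (used only to avoid `log(0)`) are assumptions made for this illustration.

```python
import math


def binary_cross_entropy(probabilities, labels, eps=1e-12):
    """Mean binary cross-entropy for predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probabilities, labels):
        p = min(max(p, eps), 1.0 - eps)  # keep log() arguments positive
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


labels = [1, 0]
good = binary_cross_entropy([0.9, 0.1], labels)  # confident and correct
bad = binary_cross_entropy([0.1, 0.9], labels)   # confident and wrong
```

Confident wrong predictions are penalized much more heavily than confident correct ones, which is the behavior the divergence interpretation suggests.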

- The negative log-likelihood loss function is used in neural networks for classification tasks. It is used when the model outputs a probability for each class rather than the class label. It is defined as follows:

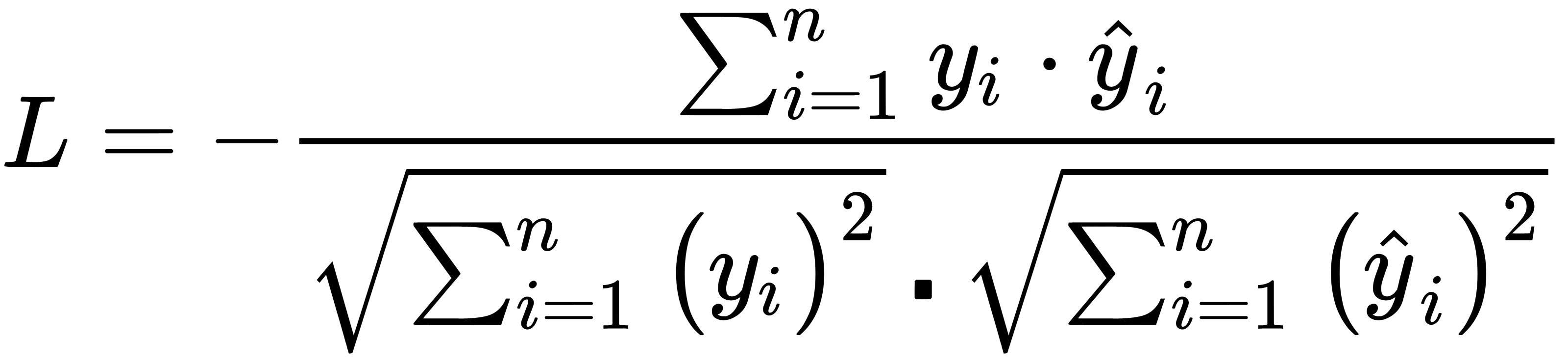
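A minimal sketch of the negative log-likelihood for a model that outputs one probability per class. It assumes the targets are given as class indices and that the loss is averaged over samples; the function name and the data are hypothetical.

```python
import math


def negative_log_likelihood(class_probabilities, target_indices):
    """Mean of -log(p) where p is the probability given to the true class."""
    total = 0.0
    for probs, target in zip(class_probabilities, target_indices):
        total += -math.log(probs[target])
    return total / len(target_indices)


# Two samples, three classes; each row sums to 1.
class_probabilities = [
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
]
target_indices = [0, 1]

nll = negative_log_likelihood(class_probabilities, target_indices)
```

The loss approaches zero as the probability assigned to the true class approaches 1.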

- The cosine proximity loss function computes the cosine proximity between the predicted value and the target value. It is defined as follows:

This function is based on the cosine similarity, which measures the similarity between two non-zero vectors as the cosine of the angle between them. Vectors are maximally similar if they point in the same direction (cosine of 1) and maximally dissimilar if they point in opposite directions (cosine of -1); orthogonal vectors have a cosine of 0.
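As a hedged sketch, cosine similarity can be computed from the dot product and the vector norms. Turning it into a loss by negating it, so that minimizing the loss maximizes the similarity, is a convention assumed here; the function names are illustrative.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cosine_proximity_loss(prediction, target):
    """Assumed convention: negated similarity, so lower is better."""
    return -cosine_similarity(prediction, target)


parallel = cosine_similarity([1.0, 0.0], [2.0, 0.0])
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 3.0])
opposite = cosine_similarity([1.0, 0.0], [-2.0, 0.0])
```

Because only the angle matters, scaling either vector by a positive factor leaves the result unchanged.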

- The hinge loss function is used for training classifiers. The hinge loss is also known as the max-margin objective and is used for maximum-margin classification. It uses the raw output of the classifier's decision function, not the predicted class label. It is defined as follows:
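As a minimal sketch, assuming the commonly used form `max(0, 1 - t * y)` with targets `t` in {-1, +1} and `y` the raw output of the decision function, the hinge loss can be computed as follows. The function name and the sample scores are hypothetical.

```python
def hinge_losses(scores, targets):
    """Per-sample hinge loss max(0, 1 - t * y) for targets in {-1, +1}."""
    return [max(0.0, 1.0 - t * y) for y, t in zip(scores, targets)]


# Raw decision-function outputs, not predicted class labels.
scores = [2.0, 0.5, -3.0, 0.3]
targets = [1, 1, -1, -1]

per_sample = hinge_losses(scores, targets)
mean_hinge = sum(per_sample) / len(per_sample)
```

Samples that are correctly classified with a margin of at least 1 contribute zero loss; the second sample is on the correct side but inside the margin, and the fourth is misclassified, so both are penalized.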

There are many other loss functions. Complex network architectures often use several loss functions to train different parts of a network. For example, the Mask R-CNN architecture, which is used for predicting object classes and boundaries on images, combines different loss functions: one for regression and another for classification. In the next section, we will discuss the neuron's activation functions.