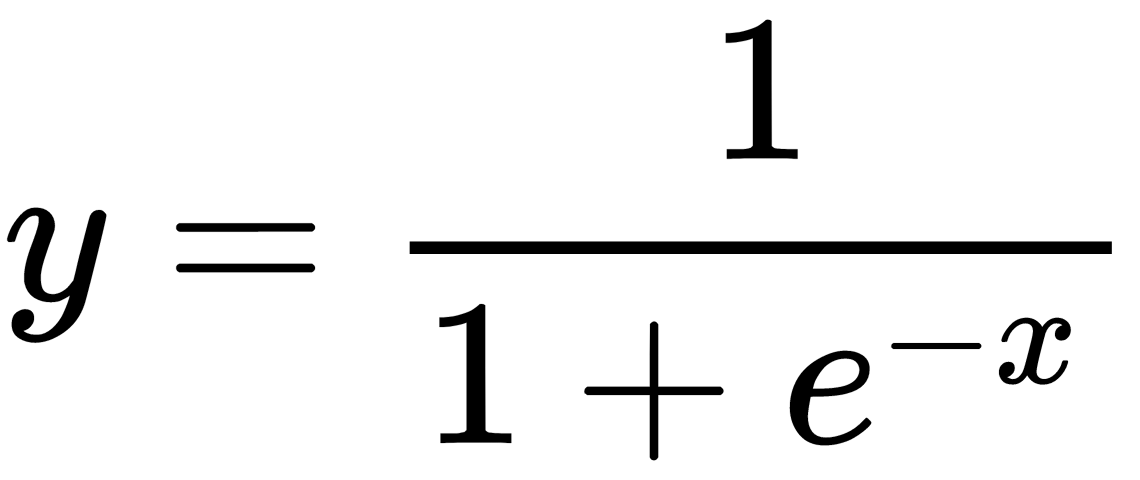

The sigmoid activation function, $y = \sigma(x) = \frac{1}{1 + e^{-x}}$, is a smooth function, similar to a step function:

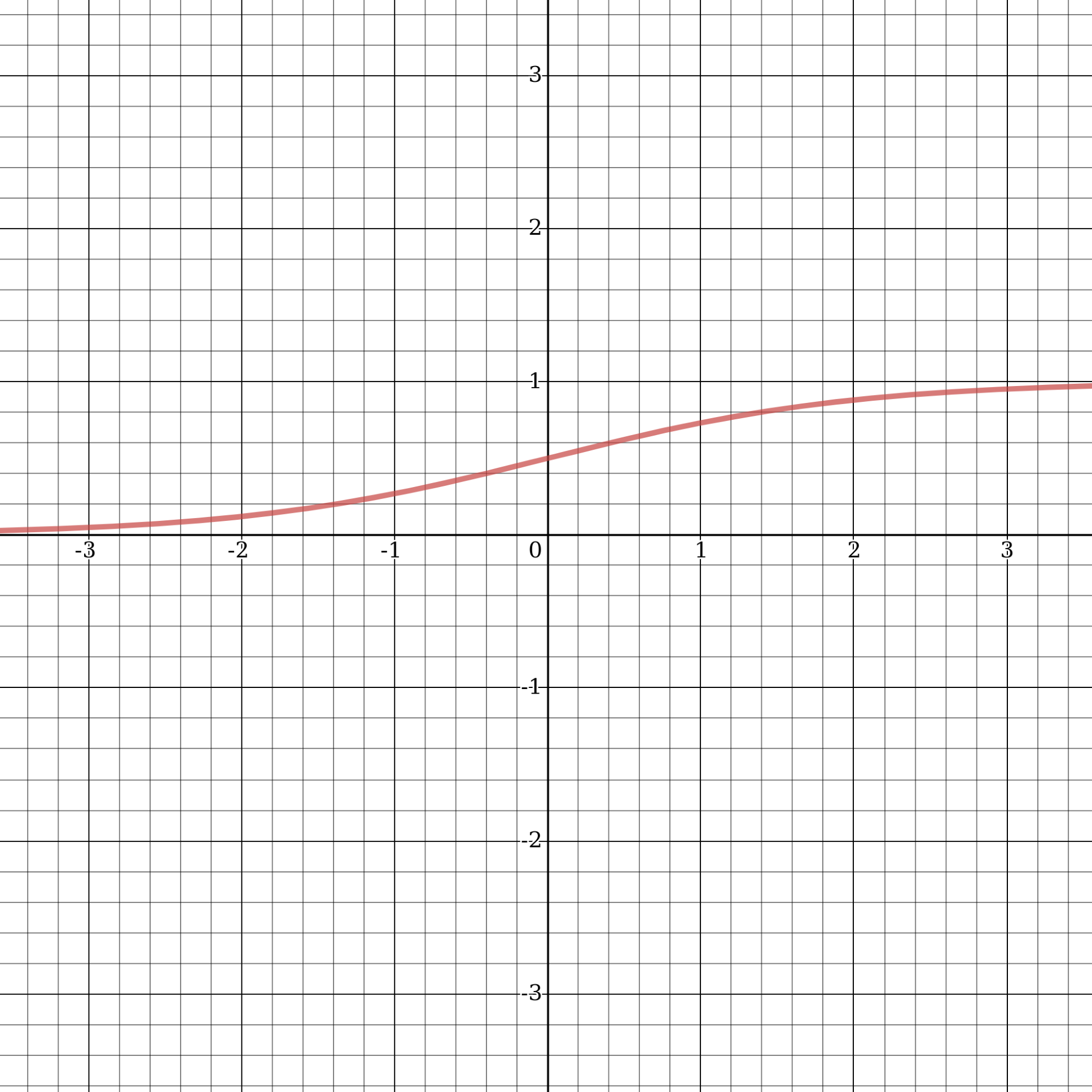
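The following is a minimal Python sketch that evaluates the sigmoid next to a binary step function on a few inputs. It shows how the sigmoid replaces the hard jump with a smooth transition. The names `sigmoid` and `step` are illustrative. The step convention (returning 1 at exactly x = 0) is an assumption.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-x)), arranged so math.exp never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x).
    z = math.exp(x)
    return z / (1.0 + z)

def step(x: float) -> float:
    """Binary step function (assumed convention: 1 for x >= 0, otherwise 0)."""
    return 1.0 if x >= 0 else 0.0

for x in (-6.0, -2.0, -0.5, 0.0, 0.5, 2.0, 6.0):
    print(f"x={x:5.1f}  step={step(x):.0f}  sigmoid={sigmoid(x):.4f}")
```

Far from zero the two functions nearly agree. Near zero the sigmoid passes smoothly through 0.5 instead of jumping.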

A sigmoid is a non-linear function, and a combination of sigmoids also produces a non-linear function. This allows us to stack layers of neurons: without a non-linearity, any stack of linear layers would collapse into a single linear transformation. Unlike a step function, the sigmoid is not binary; its activation can take any value in the range (0, 1). The sigmoid also has a smooth gradient. For values of $x$ between -2 and 2, the values of $y$ change very quickly. Because of this steep gradient, any small change in $x$ in this region produces a significant change in $y$. As a result, $y$ tends to be pushed toward one of the two edges of the curve.
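The steepness described above can be checked numerically. The sigmoid's derivative has the closed form σ'(x) = σ(x)(1 − σ(x)). It peaks at 0.25 at x = 0 and shrinks quickly outside roughly [-2, 2]. The sketch below tabulates the slope; `sigmoid_derivative` is an illustrative name.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_derivative(x: float) -> float:
    """Closed-form derivative: sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)

for x in (-6, -4, -2, -1, 0, 1, 2, 4, 6):
    print(f"x={x:3d}  sigmoid={sigmoid(x):.4f}  slope={sigmoid_derivative(x):.4f}")
```

A unit step in x around the center moves y far more than the same step near the edges. For example, the change from -1 to 1 is much larger than the change from 4 to 6.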

The sigmoid looks like a suitable function for classification tasks. It tends to push values toward one of the two sides of the curve (for example, toward the upper edge for $x > 2$ and toward the lower edge for $x < -2$). This behavior allows us to draw clear boundaries between predictions.
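For binary classification, one common way to turn a sigmoid output into a decision is to threshold it. The following is a minimal sketch, assuming a threshold of 0.5. The helper `classify` and its `threshold` parameter are hypothetical and only illustrate the idea.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def classify(score: float, threshold: float = 0.5) -> int:
    """Hypothetical helper: class 1 if sigmoid(score) >= threshold, else class 0."""
    return 1 if sigmoid(score) >= threshold else 0

scores = [-4.0, -2.5, -0.3, 0.2, 2.5, 4.0]
for s in scores:
    print(f"score={s:5.1f}  probability={sigmoid(s):.3f}  class={classify(s)}")
```

Scores far from zero land near 0 or 1 and are classified confidently. Only scores near zero produce probabilities close to the threshold.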

Another advantage of the sigmoid over a linear function is that the sigmoid has a fixed output range, (0, 1), while a linear function can take any value in $(-\infty, +\infty)$. The bounded range is advantageous because activations cannot grow without limit, so large inputs do not lead to numerical errors in the computations that follow.
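The sketch below contrasts the two output ranges on very large inputs. It assumes an identity-like `linear` function, which is a hypothetical stand-in. The sigmoid is written in a numerically stable form because Python's `math.exp` raises `OverflowError` for arguments above roughly 709. A naive `1 / (1 + math.exp(-x))` would therefore fail for x = -1e6.

```python
import math

def sigmoid(x: float) -> float:
    """Stable logistic sigmoid: math.exp only ever receives a non-positive argument."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def linear(x: float, slope: float = 1.0) -> float:
    """Hypothetical linear activation with an unbounded output."""
    return slope * x

for x in (-1e6, -50.0, 0.0, 50.0, 1e6):
    print(f"x={x:>10}  linear={linear(x):>10}  sigmoid={sigmoid(x):.6f}")
```

In floating point, extreme inputs round to exactly 0.0 or 1.0. The output still never leaves the unit interval, while the linear output grows with the input.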

Today, the sigmoid is one of the most widespread activation functions in neural networks. But it also has flaws that we have to take into account. As the sigmoid approaches its maximum or minimum, the output $y$ barely reflects changes in $x$. This means that the gradient in these regions is very small. During backpropagation, such small values are multiplied together layer by layer, which causes the gradient to vanish. The vanishing gradient problem is a situation where the gradient becomes too small or disappears altogether, so the neural network stops learning or learns very slowly.
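The vanishing effect can be illustrated with a deliberately simplified model. This sketch assumes a chain of single sigmoid units with all weights equal to 1, so the backpropagated gradient is just the product of the sigmoid derivatives. Even in the best case, where every unit sits at x = 0, each layer multiplies the gradient by at most 0.25. The helper `chained_gradient` is hypothetical.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_derivative(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)

def chained_gradient(pre_activations: list[float]) -> float:
    """Hypothetical helper: product of sigmoid derivatives along a chain (weights assumed to be 1)."""
    grad = 1.0
    for z in pre_activations:
        grad *= sigmoid_derivative(z)
    return grad

for depth in (1, 5, 10, 20):
    best_case = chained_gradient([0.0] * depth)  # every unit at the steepest point
    saturated = chained_gradient([5.0] * depth)  # every unit near the upper edge
    print(f"layers={depth:2d}  best case={best_case:.3e}  saturated={saturated:.3e}")
```

With saturated units, the gradient after only five layers is already many orders of magnitude smaller than in the best case. This is the slowdown in learning that the vanishing gradient problem describes.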