The network progresses from a small number of low-level filters in the early layers to a large number of filters in the later layers, each of which detects a specific high-level feature. Moving from layer to layer builds up a hierarchy of pattern recognition.

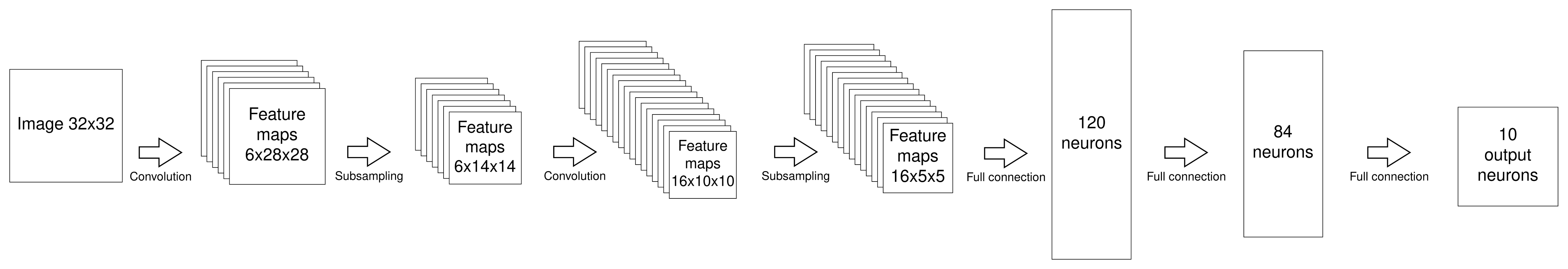

One of the first convolutional network architectures to be successfully applied to pattern recognition was LeNet-5, developed by Yann LeCun, Léon Bottou, Yoshua Bengio, and Patrick Haffner. It was used in the 1990s to recognize handwritten and machine-printed digits. The following diagram shows this architecture:

The network layers of this architecture are explained in the following table:

| Number | Layer | Feature maps (depth) | Size | Kernel size | Stride | Activation |
|---|---|---|---|---|---|---|
| Input | Image | 1 | 32 x 32 | - | - | - |
| 1 | Convolution | 6 | 28 x 28 | 5 x 5 | 1 | tanh |
| 2 | Average pool | 6 | 14 x 14 | 2 x 2 | 2 | tanh |
| 3 | Convolution | 16 | 10 x 10 | 5 x 5 | 1 | tanh |
| 4 | Average pool | 16 | 5 x 5 | 2 x 2 | 2 | tanh |
| 5 | Convolution | 120 | 1 x 1 | 5 x 5 | 1 | tanh |
| 6 | FC | 84 | - | - | - | tanh |
| Output | FC | 10 | - | - | - | softmax |
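To make the first two rows of the table concrete, here is a minimal NumPy sketch of layer 1 (six 5 x 5 convolution filters, stride 1, tanh) followed by layer 2 (2 x 2 average pooling, stride 2, tanh). The helper names `conv2d_valid` and `avg_pool`, the random input image, and the random, untrained filters are illustrative assumptions and not part of LeNet-5. The pooling is a plain average. This simplifies the original subsampling layers, which also had a trainable coefficient and bias.

```python
import numpy as np


def conv2d_valid(x, kernels, biases):
    """Valid (no padding), stride-1 convolution.

    x: (in_channels, H, W); kernels: (out_channels, in_channels, k, k);
    biases: (out_channels,). Returns (out_channels, H - k + 1, W - k + 1).
    Like most deep learning libraries, this computes cross-correlation (no kernel flip).
    """
    out_c, _, k, _ = kernels.shape
    _, h, w = x.shape
    out = np.empty((out_c, h - k + 1, w - k + 1))
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            patch = x[:, i:i + k, j:j + k]  # (in_channels, k, k)
            # Sum over input channels and the k x k window for every filter at once.
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2, 3], [0, 1, 2])) + biases
    return out


def avg_pool(x, size=2, stride=2):
    """Average pooling applied independently to each feature map (simplified, no trainable weights)."""
    c, h, w = x.shape
    oh = (h - size) // stride + 1
    ow = (w - size) // stride + 1
    out = np.empty((c, oh, ow))
    for i in range(oh):
        for j in range(ow):
            window = x[:, i * stride:i * stride + size, j * stride:j * stride + size]
            out[:, i, j] = window.mean(axis=(1, 2))
    return out


rng = np.random.default_rng(0)
image = rng.standard_normal((1, 32, 32))      # hypothetical 32 x 32 grayscale input
w1 = rng.standard_normal((6, 1, 5, 5)) * 0.1  # six random (untrained) 5 x 5 filters
b1 = np.zeros(6)

c1 = np.tanh(conv2d_valid(image, w1, b1))     # layer 1: 6 feature maps of 28 x 28
s2 = np.tanh(avg_pool(c1))                    # layer 2: 6 feature maps of 14 x 14
print(c1.shape, s2.shape)  # (6, 28, 28) (6, 14, 14)
```

The explicit loops trade speed for readability. A real implementation would use a vectorized or library convolution, but the resulting shapes would be the same.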

Notice how the depth and spatial size of the layers change toward the final layer. The depth grows from 1 to 6, 16, and finally 120 feature maps, while the spatial size shrinks from 32 x 32 to 28 x 28, 14 x 14, 10 x 10, 5 x 5, and finally 1 x 1. This means that toward the final layer, the network learns a larger number of distinct features, but each one is represented on a smaller spatial grid. This pattern is very common across convolutional network architectures.
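The trend described above can be checked with the usual output-size formula for an unpadded window operation, `(size - kernel) // stride + 1`. The following sketch assumes no padding, as in the table, and traces the convolution and pooling layers. The `LAYERS` list and the `output_size`/`trace_shapes` helpers are hypothetical names for illustration, and the layer values are copied from the table. The last column multiplies depth by height and width to show that the total number of values per layer ends up far smaller than after the first convolution, even though the depth keeps growing.

```python
# (layer number, kind, depth, kernel size, stride), copied from the LeNet-5 table above.
LAYERS = [
    ("1", "conv", 6, 5, 1),
    ("2", "pool", 6, 2, 2),
    ("3", "conv", 16, 5, 1),
    ("4", "pool", 16, 2, 2),
    ("5", "conv", 120, 5, 1),
]


def output_size(size, kernel, stride):
    """Spatial size after a window operation with no padding."""
    return (size - kernel) // stride + 1


def trace_shapes(input_size=32, input_depth=1):
    """Return (layer, depth, spatial size) for the input and every conv/pool layer."""
    shapes = [("Input", input_depth, input_size)]
    size = input_size
    for name, _kind, depth, kernel, stride in LAYERS:
        size = output_size(size, kernel, stride)
        shapes.append((name, depth, size))
    return shapes


for name, depth, size in trace_shapes():
    print(f"{name:>5}: depth={depth:>3}, size={size}x{size}, values={depth * size * size}")
```

Running it prints depths of 1, 6, 6, 16, 16, and 120 alongside sizes of 32, 28, 14, 10, 5, and 1, which match the table row by row.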