We can represent tensor objects in computer memory in different ways. The most obvious method is a simple linear (contiguous) array in random-access memory (RAM), and it is also the most computationally efficient data structure for modern CPUs. There are two standard ways to organize a tensor as a linear array in memory: row-major ordering and column-major ordering. In row-major ordering, the consecutive elements of a row are placed one after another, and each row starts right after the end of the previous one. In column-major ordering, we do the same, but with the elements of each column.

Data layout has a significant impact on computational performance because modern CPUs process sequential data much more efficiently than scattered data. The main reason is CPU caching: memory is loaded into the cache in fixed-size blocks (cache lines), so when we traverse an array in the same order in which it is stored, most accesses hit data that is already in the cache. A contiguous layout also makes it possible to use SIMD (single instruction, multiple data) vector instructions, which process several adjacent elements with one instruction and so act as a form of data-level parallelism.
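
To make the effect of traversal order concrete, here is a minimal C++ sketch, assuming a `rows x cols` matrix stored in row-major order in a `std::vector<double>`. The function names `sum_in_storage_order` and `sum_across_storage_order` are hypothetical, chosen for illustration. Both compute the same sum; they differ only in the order in which they touch memory.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sums a rows x cols matrix stored in row-major order, visiting elements in
// storage order: the inner loop walks along a row, so consecutive reads touch
// adjacent addresses (friendly to caches and SIMD).
double sum_in_storage_order(const std::vector<double>& data,
                            std::size_t rows, std::size_t cols) {
    assert(data.size() == rows * cols);
    double sum = 0.0;
    for (std::size_t i = 0; i < rows; ++i)
        for (std::size_t j = 0; j < cols; ++j)
            sum += data[i * cols + j];
    return sum;
}

// Sums the same row-major matrix column by column: consecutive reads are
// `cols` elements apart, so each access may land on a different cache line.
double sum_across_storage_order(const std::vector<double>& data,
                                std::size_t rows, std::size_t cols) {
    assert(data.size() == rows * cols);
    double sum = 0.0;
    for (std::size_t j = 0; j < cols; ++j)
        for (std::size_t i = 0; i < rows; ++i)
            sum += data[i * cols + j];
    return sum;
}
```

On large matrices, the first function is usually noticeably faster than the second, although the exact gap depends on the CPU, the matrix size, and the compiler's optimizations.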

Different libraries, even in the same programming language, can use different orderings. For example, Eigen uses column-major ordering by default, while PyTorch uses row-major ordering. Developers should therefore be aware of the internal tensor representation in the libraries they use and take it into account when loading data or implementing algorithms from scratch.
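
When data arrives in one ordering but a library expects the other, the buffer has to be reordered (some libraries can instead be configured to match; Eigen, for instance, accepts an `Eigen::RowMajor` storage option). The following is a minimal sketch of such a conversion, assuming a dense `rows x cols` matrix and zero-based indices; `row_major_to_column_major` is a hypothetical helper name, not part of any library.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Copies a rows x cols matrix from a row-major buffer into a new column-major
// buffer. Element (i, j) lives at i * cols + j in the source and at
// j * rows + i in the destination (0-based indices).
template <typename T>
std::vector<T> row_major_to_column_major(const std::vector<T>& src,
                                         std::size_t rows, std::size_t cols) {
    assert(src.size() == rows * cols);
    std::vector<T> dst(src.size());
    for (std::size_t i = 0; i < rows; ++i)
        for (std::size_t j = 0; j < cols; ++j)
            dst[j * rows + i] = src[i * cols + j];
    return dst;
}
```

For example, with `rows = 2` and `cols = 3`, the row-major buffer `{11, 12, 13, 21, 22, 23}` becomes `{11, 21, 12, 22, 13, 23}`.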

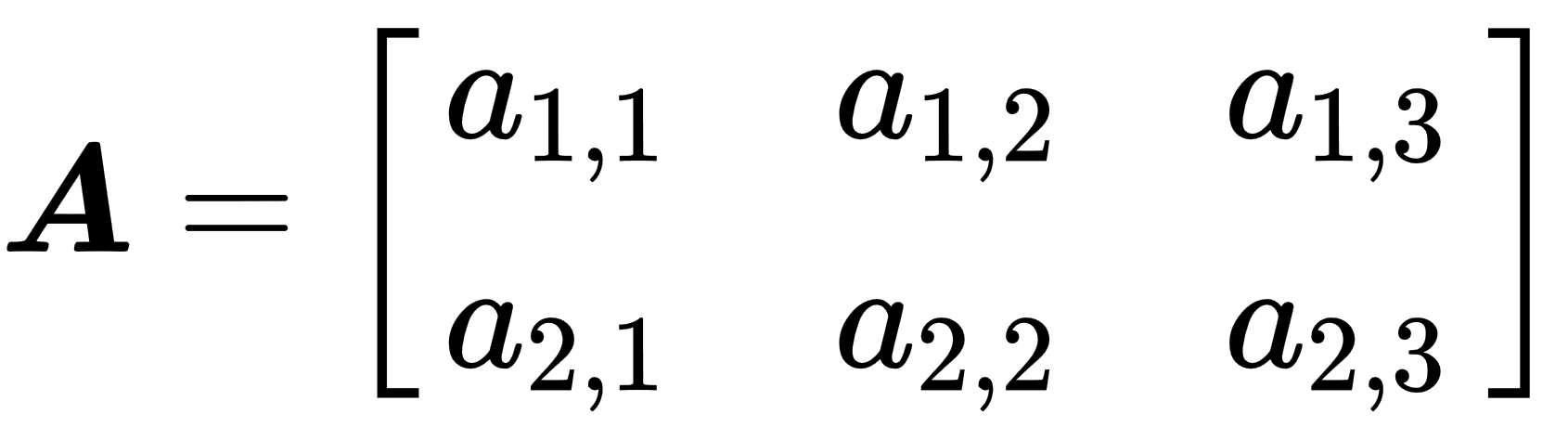

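Consider the following $2 \times 3$ matrix:

$$
\begin{bmatrix}
a_{11} & a_{12} & a_{13} \\
a_{21} & a_{22} & a_{23}
\end{bmatrix}
$$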

In the row-major data layout, the elements of the matrix are stored in memory in the following order:

| Memory offset | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| Element | $a_{11}$ | $a_{12}$ | $a_{13}$ | $a_{21}$ | $a_{22}$ | $a_{23}$ |
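
As a worked check of the table above, assuming 1-based element indices $i$ and $j$ and zero-based memory offsets, element $a_{ij}$ of an $m \times n$ matrix sits at the following offset in row-major order:

$$
\operatorname{offset}_{\text{row}}(a_{ij}) = (i - 1)\,n + (j - 1)
$$

For the matrix in the table ($n = 3$ columns), $a_{21}$ lands at $(2 - 1) \cdot 3 + 0 = 3$ and $a_{23}$ at $(2 - 1) \cdot 3 + 2 = 5$, matching the listed offsets.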

In the column-major data layout, the same elements are stored in the following order:

| Memory offset | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| Element | $a_{11}$ | $a_{21}$ | $a_{12}$ | $a_{22}$ | $a_{13}$ | $a_{23}$ |
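
Both layouts follow from a simple offset formula. The C++ sketch below reproduces the two tables, assuming zero-based row and column indices, so element `(i, j)` is at offset `i * cols + j` in row-major order and at `j * rows + i` in column-major order. The names `row_major_offset`, `column_major_offset`, and `layout` are hypothetical, chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Zero-based offset of element (i, j) of a rows x cols matrix in row-major order.
std::size_t row_major_offset(std::size_t i, std::size_t j,
                             std::size_t /*rows*/, std::size_t cols) {
    return i * cols + j;
}

// Zero-based offset of element (i, j) of a rows x cols matrix in column-major order.
std::size_t column_major_offset(std::size_t i, std::size_t j,
                                std::size_t rows, std::size_t /*cols*/) {
    return j * rows + i;
}

// Places the labels "a11", "a12", ... at the offsets computed by `offset`,
// producing the contents of a memory-layout table. Labels use 1-based indices.
template <typename OffsetFn>
std::vector<std::string> layout(std::size_t rows, std::size_t cols, OffsetFn offset) {
    assert(rows < 10 && cols < 10);  // keeps each label to two index digits
    std::vector<std::string> memory(rows * cols);
    for (std::size_t i = 0; i < rows; ++i)
        for (std::size_t j = 0; j < cols; ++j)
            memory[offset(i, j, rows, cols)] =
                "a" + std::to_string(i + 1) + std::to_string(j + 1);
    return memory;
}
```

For example, `layout(2, 3, column_major_offset)` yields `a11 a21 a12 a22 a13 a23`, the column-major order shown in the table above.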