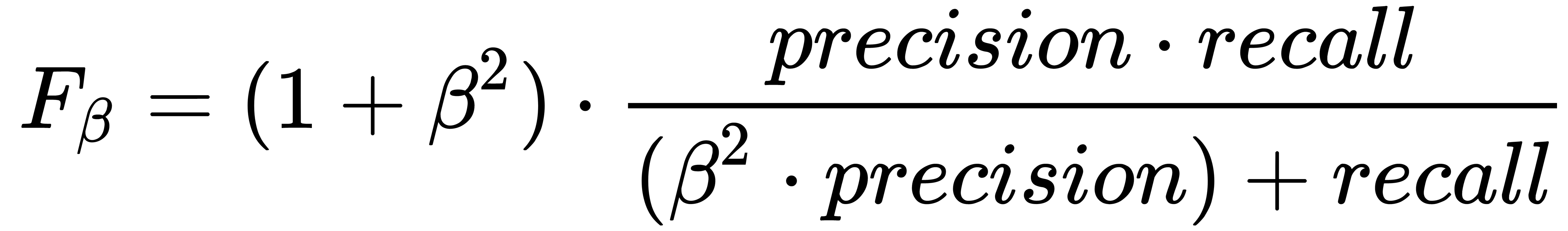

In many cases, it is useful to have a single metric that summarizes the classification's quality. For example, algorithms that search for the best hyperparameters, such as the GridSearch algorithm discussed later in this chapter, usually rely on one metric to compare the classification results obtained with different parameter values during the search process. One of the most popular metrics for this purpose is the F-measure (or the F-score), which can be given as follows:

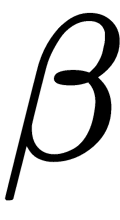

Here,  is the precision metric weight. Usually, the

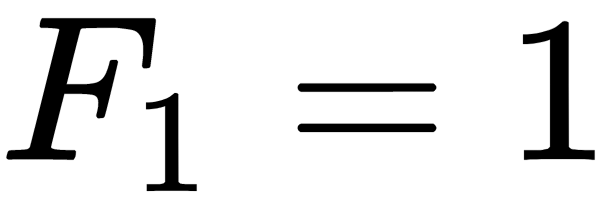

is the precision metric weight. Usually, the  value is equal to one. In such a case, we have the multiplier value equal to 2, which gives us

value is equal to one. In such a case, we have the multiplier value equal to 2, which gives us  if the precision = 1 and the recall = 1. In other cases, when the precision value or the recall value tends to be zero, the F-measure value will also decrease.

if the precision = 1 and the recall = 1. In other cases, when the precision value or the recall value tends to be zero, the F-measure value will also decrease.

There's a class called CF1Measure in the Shogun library that we can use to calculate this metric. The Shark-ML and Dlib libraries do not have classes or functions to calculate the F-measure.
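When a library offers no such function, the metric is simple enough to compute by hand. The following is a minimal sketch, assuming the common form of the F-measure, $F = (\beta^2 + 1) \cdot precision \cdot recall / (\beta^2 \cdot precision + recall)$. The names `FBeta` and `FBetaFromCounts` are hypothetical helpers introduced for illustration and do not belong to any of the libraries mentioned. Returning 0 when the denominator is zero is a convention chosen here, not something the formula itself prescribes.

```cpp
#include <cstddef>

// F-measure computed from precision and recall.
// beta is the weight applied to precision in the denominator (assumed formula).
// Returns 0 when both precision and recall are 0 to avoid division by zero.
double FBeta(double precision, double recall, double beta = 1.0) {
  const double b2 = beta * beta;
  const double denom = b2 * precision + recall;
  if (denom == 0.0) {
    return 0.0;
  }
  return (b2 + 1.0) * precision * recall / denom;
}

// Hypothetical convenience wrapper that derives precision and recall
// from true positive (tp), false positive (fp), and false negative (fn) counts.
double FBetaFromCounts(std::size_t tp, std::size_t fp, std::size_t fn,
                       double beta = 1.0) {
  const double precision =
      (tp + fp) > 0 ? static_cast<double>(tp) / static_cast<double>(tp + fp)
                    : 0.0;
  const double recall =
      (tp + fn) > 0 ? static_cast<double>(tp) / static_cast<double>(tp + fn)
                    : 0.0;
  return FBeta(precision, recall, beta);
}
```

With the default $\beta = 1$, calling `FBeta(1.0, 1.0)` yields 1, while `FBeta(0.5, 1.0)` yields 2/3, which illustrates how a drop in either precision or recall pulls the score down.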